by Paul Gilster | Dec 7, 2022 | Missions |

Some topics just take off on their own. Several days ago, I began working on a piece about Europa Clipper’s latest news, the installation of the reaction wheels that orient the craft for data return to Earth and science studies at target. But data return is one thing for spacecraft working at radio frequencies within the Solar System, and another for much more distant craft, perhaps in interstellar space, using laser methods.

So spacecraft orientation in the Solar System triggered my recent interest in the problem of laser pointing beyond the heliosphere, which is acute for long-haul spacecraft like Interstellar Probe, a concept we’ve recently examined. Unlike radio methods, laser communications involve an extremely tight, focused beam. Get far enough from the Sun and that beam will have to be exquisitely precise in its placement.

So let’s take a quick look at Europa Clipper’s methods for orienting itself in space, and Voyager’s as well, and then move on to how Interstellar Probe intends to get its signal back to Earth. NASA has just announced that engineers have installed four reaction wheels aboard Europa Clipper, to provide orientation for the transmission of data and the operation of its instruments as it studies the Jovian moon. The wheels are slow to have their effect, with 90 minutes being needed to rotate Europa Clipper 180 degrees, but they run usefully on electrical power from the spacecraft’s solar arrays rather than relying on fuel that would have to be carried for its thrusters.

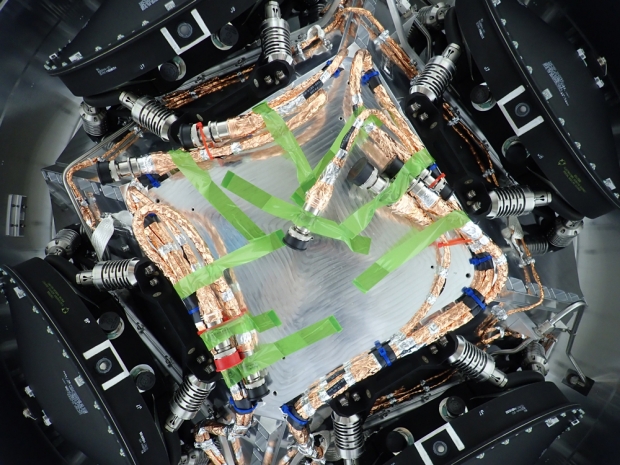

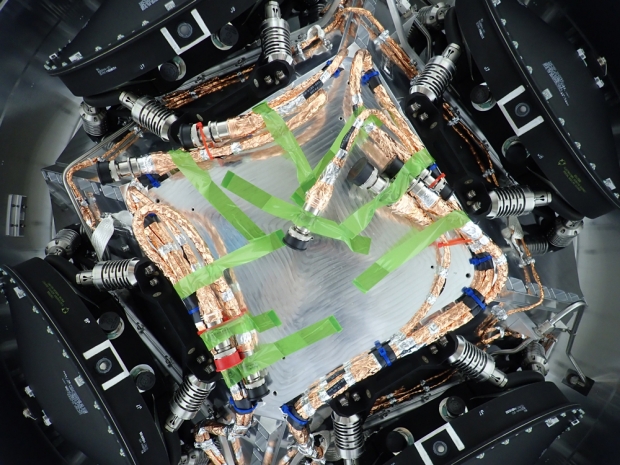

Image: All four of the reaction wheels installed onto NASA’s Europa Clipper are visible in this photo, which was shot from underneath the main body of the spacecraft while it is being assembled at the agency’s Jet Propulsion Laboratory in Southern California. The spacecraft is set to launch in October 2024 and will head toward Jupiter’s moon Europa, where it will collect science observations while flying by the icy moon dozens of times. During its journey through deep space and its flybys of Europa, the spacecraft’s reaction wheels rotate the orbiter so its antennas can communicate with Earth and so its science instruments, including cameras, can stay oriented. Two feet wide and made of steel, aluminum, and titanium, the wheels spin rapidly to create a force that causes the orbiter to rotate in the opposite direction. The wheels will run on electricity provided by the spacecraft’s vast solar arrays. NASA/JPL-Caltech.

Interstellar Pointing Accuracy

How do reaction wheels fit into missions much further out? In our recent look at Interstellar Probe, the NASA design study out of the Johns Hopkins University Applied Physics Laboratory (JHU/APL), I mentioned problems with pointing accuracy when it came to a hypothetical laser communications system aboard. The team working on Interstellar Probe (IP) chose not to go with a laser comms system, opting instead for X-band communications (or conceivably Ka-band), because as principal investigator Ralph McNutt told me, several problems arose when trying to point such a tight communications signal at Earth from the ultimate mission target: 1000 AU.

IP, remember, has 1000 AU as a design specification – the idea is to produce a craft that, upon reaching this distance, would still be able to transmit its findings back to Earth, but whether this distance can be achieved within the cited 50 year time frame is another matter. Wherever the distance of the craft is 50 years after launch, though, the design calls for it to be able to communicate with Earth. We can still talk to the Voyagers, but that brings up the issue of the best method to make the connection.

Both Voyagers are a long way from home, but nothing like 1000 AU, with Voyager 1 at 158 AU and Voyager 2 at 131 AU from the Sun. The craft are equipped with six sets of thrusters to control pitch, yaw and roll, allowing the orientation with Earth needed for radio communications (Voyager transmits at either 2.3 GHz or 8.4 GHz). But what about those reaction wheels we just looked at with Europa Clipper, which allow three-axis attitude control without using attitude control thrusters or other external sources of torque? Here we run into a technology with a history that is problematic for going beyond the Solar System or, indeed, extending a mission closer to home. Just how problematic we learned all too clearly with the Kepler mission.

For reaction wheels are all too prone to failure over time. The hugely successful exoplanet observatory found itself derailed in May of 2013, when the second of its reaction wheels failed (the first had given out the previous July). Operating something like a gyroscope, the reaction wheels were designed to spin up in one direction so as to move the spacecraft in the other, thus allowing data return from the rich star field Kepler was studying. Kepler had four reaction wheels and needed three to function properly. With only two wheels operational, the spacecraft quickly went into safe mode.

The problem, likely the result of something as mundane as issues with ball bearings, is hardly confined to a single mission, and although the Kepler team was able to mount a successful K2 extended mission, the larger question extends to any long-term mission relying on this technology. Reaction wheels were a problem on NASA’s Far Ultraviolet Spectroscopic Explorer in 2001 and complicated the Japanese Hayabusa mission in 2004 and 2005. The Dawn mission had two reaction wheel failures during the course of its operations. A NASA mission called Thermosphere, Ionosphere, Mesosphere Energetics and Dynamics (TIMED) suffered a reaction wheel failure in 2007.

So by the time Kepler was close to launch, the question of reaction wheels was much in the air. We should keep in mind that the reaction wheel failures occurred despite extensive precautions taken by the mission controllers, who sent the Kepler reaction wheels back to the manufacturer, Ithaco Space Systems in Ithaca, NY, removing them from the spacecraft in 2008 and replacing the ball bearings before the 2009 launch. It became clear with the reaction wheel failures Kepler sustained that the technology was vulnerable, although it did function up to the end of the spacecraft’s primary mission.

Based on experience, the technology shows a shelf-life on the order of a decade, which is why the Interstellar Probe team had to reject the reaction wheel concept for laser pointing. Remember that IP is envisioned as a fully operational spacecraft for 50 years, able to return data from well beyond the heliosphere at that time. As McNutt pointed out in an email, the usable laser beam size at the Earth, based on a 2003 NIAC study, was approximately Earth’s own diameter. Let me quote Dr. McNutt on this:

“With a downlink per week from 1000 au that lasted ~8 hours for that concept, one would have to point the beam ahead, so that the Earth would be “under it” when the laser train of light signals arrived. It also meant that we needed an onboard clock good to a few minutes after 50 years at worst and a good ephemeris on board to tell where to point in the first place. These start at least heading toward some of the performance of Gravity Probe B… but one needs these accuracies to hold for ~50 years.”
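To get a feel for the numbers behind that quote, here is a minimal back-of-the-envelope sketch. It assumes a beam spot exactly one Earth diameter wide at 1000 AU, a constant mean orbital speed for Earth, and simple small-angle geometry; the function names are my own illustrative choices, not anything from the NIAC study.

```python
import math

AU_KM = 149_597_870.7            # kilometers in one astronomical unit
C_KM_S = 299_792.458             # speed of light in km/s
EARTH_DIAMETER_KM = 12_742.0     # mean Earth diameter (assumed beam spot size)
EARTH_ORBIT_SPEED_KM_S = 29.78   # mean orbital speed, treated as constant (assumption)


def beam_angle_arcsec(spot_km, range_au):
    """Full angular width of a beam whose spot is spot_km wide at range_au."""
    angle_rad = spot_km / (range_au * AU_KM)  # small-angle approximation
    return math.degrees(angle_rad) * 3600.0


def light_time_days(range_au):
    """One-way light travel time over range_au, in days."""
    return range_au * AU_KM / C_KM_S / 86_400.0


def earth_travel_km(range_au):
    """Distance Earth moves along its orbit while the signal is in flight."""
    return EARTH_ORBIT_SPEED_KM_S * light_time_days(range_au) * 86_400.0


if __name__ == "__main__":
    r = 1000.0
    print(f"beam width: {beam_angle_arcsec(EARTH_DIAMETER_KM, r):.4f} arcsec")
    print(f"light time: {light_time_days(r):.2f} days")
    print(f"Earth moves {earth_travel_km(r) / EARTH_DIAMETER_KM:.0f} Earth diameters in flight")
```

Under these assumptions the beam is roughly 0.018 arcseconds wide, the light takes nearly six days to arrive, and Earth moves more than a thousand of its own diameters in the meantime, which is why the beam has to be pointed ahead.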

This gets complicated indeed. From a 2002 paper on optical and microwave communications for an interstellar explorer craft operating as far as 1000 AU (McNutt was a co-author here, working on a study that fed directly into the current Interstellar Probe design), note the possible errors that must be foreseen:

These include trajectory knowledge derived from an onboard clock and ephemerides to track the receiving station and downlink platform so that the spacecraft-to-earth line-of-sight orientation is known sufficiently accurately within the total spacecraft pointing error budget. In order to maintain the transmitter boresight accurately a high-precision star tracker is also needed, which must be aligned very accurately with respect to the laser antenna. Alignment errors between the transmitter and star tracker can be minimized by using the same optical system for the star tracker and laser transmitter and compensating any residual dynamic errors in real-time. This must be accomplished subject to various spacecraft perturbations, such as propellant bursts, or solar radiation induced moments. To also avoid significant beam loss when coupling into the receiver near Earth, the beam shape should be controlled, i.e., be a diffraction-limited single mode beam as well.

X-band radio communications, as considered by the Interstellar Probe team at JHU/APL, thus emerges as the better option considering that a mission coming out of the upcoming heliophysics decadal would be launching in the 2030s, with the recent analysis from Pontus Brandt et al. noting that “Although, optical laser communication offers high data rates, it imposes an unrealistic pointing requirement on the mission architectures under study.”

What to do? From the Brandt et al. paper (my additions are in brackets):

The conclusion following significant analysis was that the implementation with the largest practical monolithic HGA [High Gain Antenna] with the corresponding lower transmission frequency to deal with a larger pointing dead-band. This corresponds to a 5-m diameter HGA at X-band for Options 1 and 2 and a smaller, 2-m HGA at Ka-band for Option 3 [here the options refer to the mass of the spacecraft]. The corresponding guidance and control system is based upon thrusters and must provide the required HGA pointing as commensurate with spacecraft science needs.

I checked in with Ralph McNutt again while working on this post on the question of how IP would orient the spacecraft. He confirmed that attitude control thrusters would be the method, and went on to note that, at flight-tested status (TRL 9), control authority of ~0.25° with thrusters is possible; we also have much experience with the technology.
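The trade between dish size, frequency and pointing dead-band can be roughed out with a common rule of thumb for parabolic antennas: the half-power beamwidth in degrees is about 70 times wavelength over dish diameter. The sketch below is my own illustration, not the Brandt et al. analysis. The factor of 70, the 8.4 GHz X-band frequency (borrowed from the Voyager figure above) and the 32 GHz Ka-band frequency are all assumptions.

```python
C_M_S = 299_792_458.0   # speed of light in m/s
DEADBAND_DEG = 0.25     # thruster control authority quoted in the text


def hpbw_deg(freq_hz, dish_m, k=70.0):
    """Approximate half-power beamwidth in degrees (rule of thumb: k * lambda / D)."""
    wavelength_m = C_M_S / freq_hz
    return k * wavelength_m / dish_m


if __name__ == "__main__":
    # Frequencies are assumed typical deep-space values, not taken from the paper.
    for label, freq_hz, dish_m in [("X-band, 5 m HGA", 8.4e9, 5.0),
                                   ("Ka-band, 2 m HGA", 32.0e9, 2.0)]:
        width = hpbw_deg(freq_hz, dish_m)
        print(f"{label}: beamwidth ~{width:.2f} deg, "
              f"{width / DEADBAND_DEG:.1f}x the {DEADBAND_DEG} deg control authority")
```

With these inputs the 5-meter X-band dish comes out at about half a degree and the 2-meter Ka-band dish at about a third of a degree. Either is far wider than a laser beam, and the estimates are only as good as the rule of thumb behind them.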

Dr. McNutt passed our discussion along to JHU/APL’s Gabe Rogers, who has extensive experience on the matter not only with the Interstellar Probe concept but through flight experience with NASA’s Van Allen Probes. Dr. Rogers likened IP’s attitude control to Pioneer 10 and 11 more than Voyager, saying that IP would be primarily spin-stabilized rather than, like Voyager, 3-axis stabilized. The Pioneers carried six hydrazine thrusters, two of which maintained the spin rate, while two controlled forward thrust and two controlled attitude.

As to reaction wheels, they turn out to be both a lifetime and a power issue, ruling them out. Both scientists added that surviving launch vibration and acceleration is a factor, as are changes in moments of inertia as fuel is burned for guidance and control.

Says McNutt:

“One way of dealing with this (looks good on paper) is actively moving masses around to compensate for pointing issues – but then one has to worry about the lifetime of mechanisms. Galileo actually had motors to control the boom deployments of its two RTGs to control the moments of inertia of the spinning section (a different “issue”). Of course, Galileo is also the poster child of what can happen if deployment mechanisms fail on a $1B + spacecraft – in that case the HGA deployment. The LECP [Low-Energy Charged Particle] stepper motors on Voyager have gone through over 7 million steps – but that was not the “plan” or “design.””

What counts is the result. Will engineers fifty years after launch be able to download meaningful scientific data from a craft like Interstellar Probe? The question frames the entire discussion as we move toward interstellar space. Rogers adds:

“We can always mitigate risk, but we have to think very carefully about the best, most reliable way to recover the science data requested. Sometimes simpler is better. The key is to get the most bits down to the ground. I would rather have a 1000 bit per second data rate that would work 8 hours per day than a 3000 bps data rate that worked 2 hours per day. X-band is also less susceptible to rain in Spain falling mainly on the plains.”
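Rogers’ comparison is easy to check with simple arithmetic. The sketch below just multiplies data rate by contact time for the two cases he mentions; the function name is mine, and it ignores overhead such as acquisition time and coding.

```python
def bits_per_day(rate_bps, hours_per_day):
    """Total bits returned in a day at a steady rate over the given contact time."""
    return rate_bps * hours_per_day * 3600


if __name__ == "__main__":
    slow_but_long = bits_per_day(1000, 8)    # 1000 bps for 8 hours
    fast_but_short = bits_per_day(3000, 2)   # 3000 bps for 2 hours
    print(f"1000 bps x 8 h: {slow_but_long / 1e6:.1f} megabits per day")
    print(f"3000 bps x 2 h: {fast_but_short / 1e6:.1f} megabits per day")
```

The slower link returns 28.8 megabits a day against 21.6 for the faster one, so the lower rate with longer contact wins on total volume.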

Indeed, and with RF as opposed to laser, we have less concern about where the clouds are. So the current thinking about using X-band resolves issues beyond pointing accuracy. Bear in mind that we are talking about a spacecraft deliberately crafted to be operational for 50 years or more, a seemingly daunting challenge in what McNutt calls ‘longevity by design,’ but every indication is that longevity can be achieved, as the Voyagers remind us despite their not being built for the task.

And while I had never heard of the Oxford Electric Bell before this correspondence, I’ve learned in these discussions that it was set up in 1840 and has evidently run ever since its construction. So we’ve been producing long-lived technologies for some time. Now we incorporate them intentionally into our spacecraft to move beyond the heliosphere.

As to Europa Clipper’s reaction wheels, they fit the timeframe of the mission, considering we have a decade to work with, from 2024 launch to end of operations (presumed in 2034). But aware of the previous problems posed by reaction wheels, Europa Clipper’s engineers have installed four rather than three to provide a backup, and we can hope that knowledge hard-gained through missions like Kepler will afford an even longer lifetime for the steel, aluminum, and titanium wheels aboard Clipper.

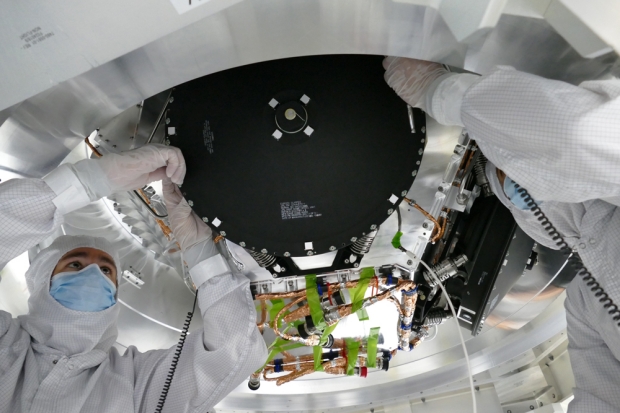

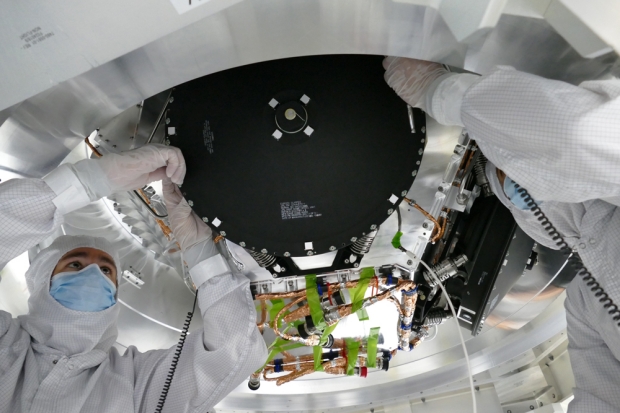

Image: Engineers install 2-foot-wide reaction wheels onto the main body of NASA’s Europa Clipper spacecraft at the agency’s Jet Propulsion Laboratory. The orbiter is in its assembly, test, and launch operations phase in preparation for a 2024 launch. Credits: NASA/JPL-Caltech.

Many thanks to Ralph McNutt and Gabe Rogers for their help with this article. The study on optical communications I referenced above is Boone et al., “Optical and microwave communications system conceptual design for a realistic interstellar explorer,” Proc. SPIE 4821, Free-Space Laser Communication and Laser Imaging II, (9 December 2002). Abstract. The Brandt paper on IP is “Interstellar Probe: Humanity’s exploration of the Galaxy Begins,” Acta Astronautica Volume 199 (October 2022), pages 364-373 (full text). For broader context, be aware as well of Rogers et al., “Dynamic Challenges of Long Flexible Booms on a Spinning Outer Heliospheric Spacecraft,” published in 2021 IEEE Aerospace Conference (full text).

by Paul Gilster | Nov 23, 2022 | Missions |

The Interstellar Probe concept being developed at Johns Hopkins Applied Physics Laboratory is not alone in the panoply of interstellar studies. We’ve examined the JHU/APL effort in a series of articles, the most recent being NASA Interstellar Probe: Overview and Prospects. But we should keep in mind that a number of white papers have been submitted to the European Space Agency in response to the effort known as Cosmic Vision and Voyage 2050. One of these, called STELLA, has been put forward to highlight a potential European contribution to the NASA probe beyond the heliosphere.

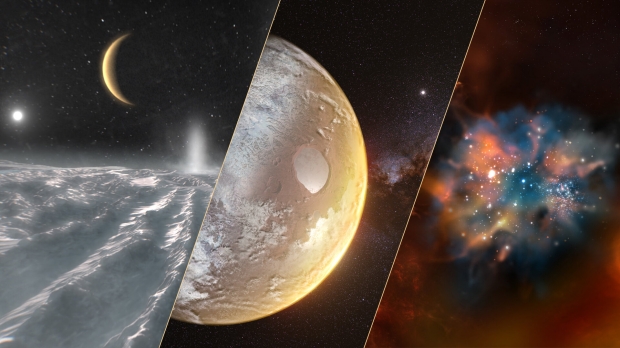

Image: A broad theme of overlapping waves of discovery informs ESA’s Cosmic Vision and Voyage 2050 report, here symbolized by icy moons of a gas giant, a temperate exoplanet and the interstellar medium itself, with all it can teach us about galactic evolution. Among the projects discussed in the report is NASA’s Interstellar Probe concept. Credit: ESA.

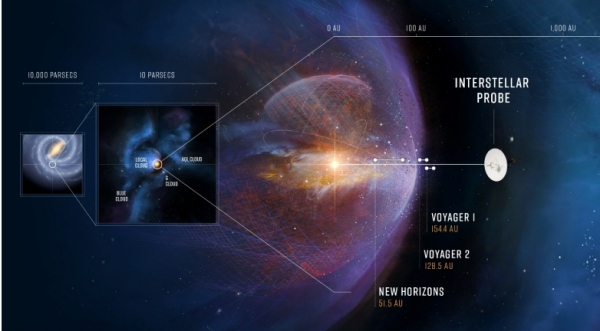

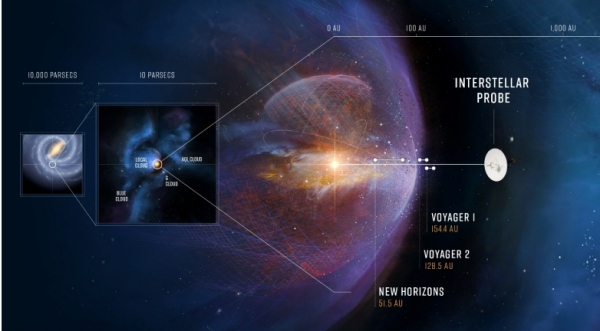

Remember that Interstellar Probe (which needs a catchier name) focuses on reaching the interstellar medium beyond the heliosphere and studying the interactions there between the ‘bubble’ that surrounds the Solar System and interstellar space beyond. The core concept is to launch a probe explicitly designed (in ways that the two Voyagers currently out there most certainly were not) to study this region. The goal will be to travel faster than the Voyagers with a complex science payload, reaching and returning data from as far away as 1000 AU in a working lifetime of 50 years.

But note that ‘as far away as 1000 AU’ and realize that it’s a highly optimistic stretch goal. A recent paper, McNutt et al., examined in the Centauri Dreams post linked above, explains the target by saying “To travel as far and as fast as possible with available technology…” and thus to reach the interstellar medium as fast as possible and travel as far into it as possible with scientific data return lasting 50 years. From another paper, Brandt et al. (citation below) comes this set of requirements:

- The study shall consider technology that could be ready for launch on 1 January 2030.

- The design life of the mission shall be no less than 50 years.

- The spacecraft shall be able to operate and communicate at 1000 AU.

- The spacecraft power shall be no less than 300 W at end of nominal mission.

This would be humanity’s first mission dedicated to reaching beyond the Solar System in its fundamental design, and it draws attention across the space community. How space agencies work together could form a major study in itself. For today, I’ll just mention a few bullet points: ESA’s Faint Object Camera (FOC) was aboard Hubble at launch, and the agency built the solar panels needed to power up the instrument. The recent successes of the James Webb Space Telescope remind us that it launched with NIRSpec, the Near-InfraRed Spectrograph, and the Mid-InfraRed Instrument (MIRI), both contributed by ESA. And let’s not forget that JWST wouldn’t be up there without the latest version of the superb Ariane 5 launcher, Ariane 5 ECA. Nor should we neglect the cooperative arrangements in terms of management and technical implementation that have long kept the NASA connection with ESA on a productive track.

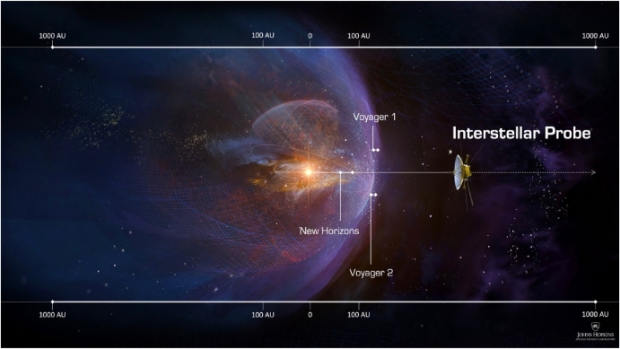

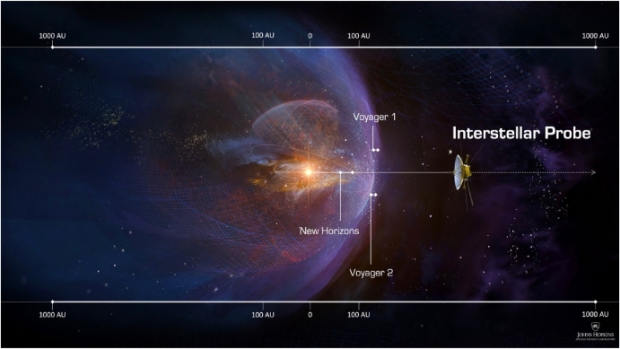

Image: This is Figure 1 from Brandt et al., a paper cited below out of JHU/APL that describes the Interstellar Probe mission from within. Caption: Fig. 1. Interstellar Probe on a fast trajectory to the Very Local Interstellar Medium would represent a snapshot to understand the current state of our habitable astrosphere in the VLISM, to ultimately be able to understand where our home came from and where it is going.

So it’s no surprise that a mission like Interstellar Probe would draw interest. Earlier ESA studies on a heliopause probe go back to 2007, and the study overview of that one can be found here. Outside potential NASA/ESA cooperation, I should also note that China is likewise studying a probe, intrigued by the prospect of reaching 100 AU by the 100th anniversary of the current government in 2049. So the idea of dedicated missions outside the Solar System is gaining serious traction.

But back to the Cosmic Vision and Voyage 2050 report, from which I extract this:

The great challenge for a mission to the interstellar medium is the requirement to reach 200 AU as fast as possible and ideally within 25-30 years. The necessary power source for this challenging mission requires ESA to cooperate with other agencies. An Interstellar Probe concept is under preparation to be proposed to the next US Solar and Space Physics Decadal Survey for consideration. If this concept is selected, a contribution from ESA bringing the European expertise in both remote and in situ observation is of significance for the international space plasma community, as exemplified by the successful joint ESA-NASA missions in solar and heliospheric physics: SOHO, Ulysses and Solar Orbiter.

I’m looking at the latest European white paper on the matter, whose title points to what could happen assuming the JHU interstellar probe concept is selected in the coming Heliophysics Decadal Survey (as we know, this is a big assumption, but we’ll see). The paper, “STELLA—Potential European contributions to a NASA-led interstellar probe,” appeared recently in Frontiers in Astronomy and Space Sciences (citation below), highlighting possible European contributions to the JHU/APL Interstellar Probe mission, and offering a quick overview of its technology, payload and objectives.

As mentioned, the only missions to have probed this region from within are the Voyagers, although the boundary has also been probed remotely in energetic neutral atoms by the Interstellar Boundary Explorer (IBEX) as well as the Cassini mission to Saturn. We’d like to go beyond the heliosphere with a dedicated mission not just because it’s a step toward much longer-range missions but also because the heliosphere itself is a matter of considerable controversy. Exactly what is its shape, and how does that shape vary with time? Sometimes it seems that our growing catalog of data has only served to raise more questions, as is often the case when pushing into territories previously unexplored. The white paper puts it this way:

The many and diverse in situ and remote-sensing observations obtained to date clearly emphasize the need for a new generation of more comprehensive measurements that are required to understand the global nature of our Sun’s interaction with the local galactic environment. Science requirements informed by the now available observations drive the measurement requirements of an ISP’s in situ and remote-sensing capabilities that would allow [us] to answer the open questions…

We need, in other words, to penetrate and move beyond the heliosphere to look back at it, producing the overview needed to study these interactions properly. But let’s pause on that term ‘interstellar probe.’ Exactly how do we characterize space beyond the heliosphere? Both our Voyager probes are now considered to be in interstellar space, but we should consider the more precise term Very Local Interstellar Medium (VLISM), and realize that where the Voyagers are is not truly interstellar, but a region highly influenced by the Sun and the heliosphere. The authors are clear that even VLISM doesn’t apply here, for to reach what they call the ‘pristine VLISM’ demands capabilities beyond even the interstellar probe concept being considered at JHU.

Jargon is tricky in any discipline, but in this case it helps to remember that we move outward in successive waves that are defined by our technological capabilities. If we can get to several hundred AU, we are still in a zone roiled by solar activity, but far enough out to draw meaningful conclusions about the heliosphere’s relationship to the solar wind and the effects of its termination out on the edge. In these terms, we should probably consider JHU/APL’s Interstellar Probe as a mission toward the true VLISM. Will it still be returning data when it gets there? A good question.

IP is also a mission with interesting science to perform along the way. A spacecraft on such a trajectory has the potential for flybys of outer system objects like dwarf planets (about 130 are known) and the myriad KBOs that populate the Kuiper Belt. Dust observations at increasing distances would help to define the circumsolar dust disk on which the evolution of the Solar System has depended, and relate this to what we see around other stars. We’ll also study extragalactic background light that should provide information about how stars and galaxies have evolved since the Big Bang.

Image: A visualization of Interstellar Probe leaving the Solar System. Credit: European Geosciences Union, Munich.

The white paper offers the range of outstanding science questions that come into play, so I’ll send you to it for more but ultimately to the latest two analytical descriptions out of JHU/APL, which are listed in the citations below. To develop instruments to meet these science goals would involve study by a NASA/ESA science definition team, and of course depends on whether the Interstellar Probe concept makes it through the Decadal selection. It’s interesting to see, though, that among the possible contributions this white paper suggests from ESA is one involving a core communications capability:

One of the key European industrial and programmatic contributions proposed in the STELLA proposal to ESA is an upgrade of the European deep space communication facility that would allow the precise range and range-rate measurements of the probe to address STELLA science goal Q5 [see below] but would also provide additional downlink of ISP data and thus increase the ISP science return. The facility would be a critical augmentation of the European Deep Space Antennas (DSA) not only for ISP but also for other planned missions, e.g., to the icy giants.

Q5, as referenced above, refers to testing General Relativity at various spatial scales all the way up to 350 AU, and the authors note that less than a decade after launch, such a probe would need a receiving station with the equivalent of four 35-meter dishes, an architecture that would be developed during the early phases of the mission. On the spacecraft itself, the authors see the potential for providing the high gain antenna and communications infrastructure in a fully redundant X-band system that represents mature technology today. I’m interested to see that they eschew optical strategies, saying these would “pose too stringent pointing requirements on the spacecraft.”

STELLA makes the case for Europe:

The architecture of the array should be studied during an early phase of the mission (0/A). European industries are among the world leaders in the field. mtex antenna technology (Germany) is the sole prime to develop a production-ready design and produce a prototype 18-m antenna for the US National Research Observatory (NRAO) Very Large Array (ngVLA) facility. Thales/Alenia (France/Italy), Schwartz Hautmont (Spain) are heavily involved in the development of the new 35-m DSA antenna.

As the intent of the authors is to suggest possible European vectors for collaboration in Interstellar Probe, their review of key technology drivers is broad rather than deep; they’re gauging the likelihood of meshing areas where ESA’s expertise can complement the NASA concept, some of them needing serious development from both sides of the Atlantic. Propulsion via chemical methods could work for IP, for example, given the options of using heavy lift vehicles like NASA SLS and the possibility, down the road, of a SpaceX Starship or Blue Origin vehicle to complement the launch catalog. The availability of such craft coupled with a passive gravity assist at Jupiter points to a doubling of Voyager’s escape velocity, reaching 7.2 AU per year (roughly 34 kilometers per second).
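The conversion from AU per year to kilometers per second is worth seeing once, as is what 7.2 AU per year means against the 50-year and 1000 AU figures. This is a simplified sketch of my own. It assumes the probe coasts at a constant 7.2 AU per year from the start and ignores the changing speed in the inner system.

```python
AU_KM = 149_597_870.7                  # kilometers in one astronomical unit
SECONDS_PER_YEAR = 365.25 * 86_400.0   # Julian year


def au_per_year_to_km_s(speed_au_yr):
    """Convert a speed in AU per year to kilometers per second."""
    return speed_au_yr * AU_KM / SECONDS_PER_YEAR


def distance_after_years(speed_au_yr, years):
    """Distance covered in AU at constant speed (simplifying assumption)."""
    return speed_au_yr * years


def years_to_reach(distance_au, speed_au_yr):
    """Years needed to cover distance_au at constant speed."""
    return distance_au / speed_au_yr


if __name__ == "__main__":
    v = 7.2
    print(f"{v} AU/yr = {au_per_year_to_km_s(v):.1f} km/s")
    print(f"distance after 50 years: {distance_after_years(v, 50):.0f} AU")
    print(f"years to 1000 AU: {years_to_reach(1000, v):.0f}")
```

At constant speed the probe would be around 360 AU out after 50 years and would need roughly 139 years to reach 1000 AU, which squares with calling 1000 AU a highly optimistic stretch goal.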

As to power, NASA is en route to bringing the necessary nuclear package online via the Next-Generation Radioisotope Thermoelectric Generator (NextGen RTG) under development at NASA Glenn. But improvements in communications at this range represent one area where European involvement could play a role, as does reliability of the sort that can ensure a viable mission lasting half a century or more. Thus:

Development and implementation of qualification procedures for missions with nominal lifetimes of 50 years and beyond. This would provide the community with knowledge of designing long-lived space equipment and be helpful for other programs such as Artemis.

This area strikes me as promising. We’ve already seen how spacecraft never designed for missions of such duration have managed to go beyond the heliosphere (the Voyagers), and developing the hardware with sufficient reliability seems well within our capabilities. Other areas ripe for further development are pointing accuracy and deep space communication architectures, thus the paper’s emphasis on ESA’s role in refining the use of integrated deep space transponders for Interstellar Probe.

Whether the JHU/APL Interstellar Probe design wins approval or not, the fact that we are considering these issues points to the tenacious vitality of space programs looking toward expansion into the outer Solar System and beyond, a heartening thought as we ponder successors to the Voyagers and New Horizons. The ice giants and the VLISM region will truly begin to reveal their secrets when missions like these fly. And how much more so if, along the way, a propulsion technology emerges that reduces travel times to years instead of decades? Are beamed sails the best bet for this, or something else?

The paper is Wimmer-Schweingruber et al., “STELLA—Potential European contributions to a NASA-led interstellar probe,” a whitepaper that was submitted to NASA’s 2023/2024 decadal survey based on a proposal submitted to the European Space Agency (ESA) in response to its 2021 call for medium-class mission proposals. Frontiers in Astronomy and Space Sciences, 17 November 2022 (full text).

For detailed information about Interstellar Probe, see McNutt et al., “Interstellar probe – Destination: Universe!” Acta Astronautica Vol. 196 (July 2022), 13-28 (full text) as well as Brandt et al., “Interstellar Probe: Humanity’s exploration of the Galaxy Begins,” Acta Astronautica Volume 199 (October 2022), pages 364-373 (full text).

by Paul Gilster | Jul 22, 2022 | Missions |

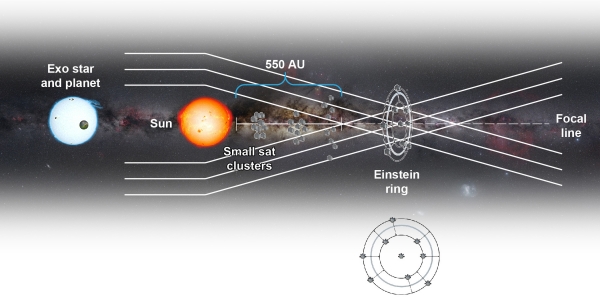

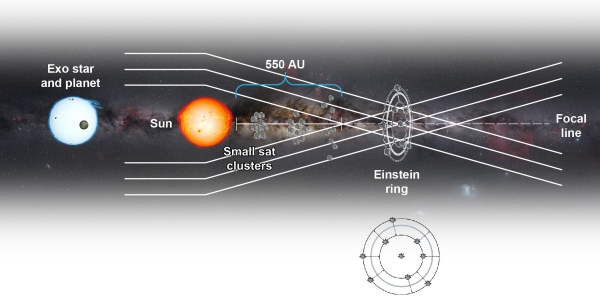

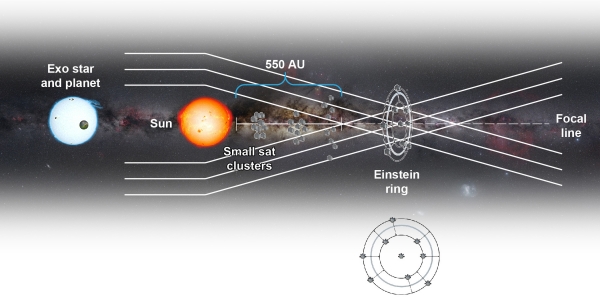

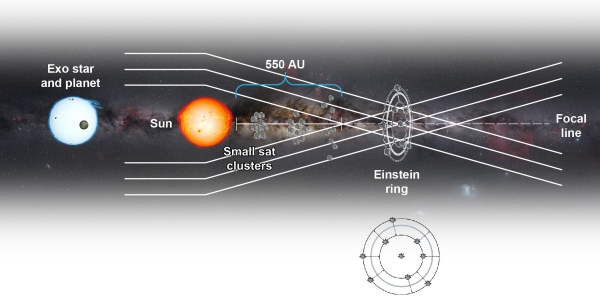

The last time we looked at the Jet Propulsion Laboratory’s ongoing efforts toward designing a mission to the Sun’s gravitational lens region beyond 550 AU, I focused on how such a mission would construct the image of a distant exoplanet. Gravitational lensing takes advantage of the Sun’s mass, which as Einstein told us distorts spacetime. A spacecraft placed on the other side of the Sun from the target exoplanetary system would take advantage of this, constructing a high resolution image of unprecedented detail. It’s hard to think of anything short of a true interstellar mission that could produce more data about a nearby exoplanet.

In that earlier post, I focused on one part of the JPL work, as the team under the direction of Slava Turyshev had produced a paper updating the modeling of the solar corona. The new numerical simulations led to a powerful result. Remember that the corona is an issue because the light we are studying is being bent around the Sun, and we are in danger of losing information if we can’t untangle the signal from coronal distortions. And it turned out that because the image we are trying to recover would be huge – almost 60 kilometers wide at 1200 AU from the Sun if the target were at Proxima Centauri distance – the individual pixels are as much as 60 meters apart.

Image: JPL’s Slava Turyshev, who is leading the team developing a solar gravitational lens mission concept that pushes current technology trends in striking new directions. Credit: JPL/S. Turyshev.

The distance between pixels turns out to help; it actually reduces the integration time needed to pull all the data together to produce the image. The integration time (the time it takes to gather all the data that will result in the final image) is in fact reduced when pixels are not adjacent at a rate proportional to the inverse square of the pixel spacing. I’ve more or less quoted the earlier paper there to make the point that according to the JPL work thus far, exoplanet imaging at high resolution using these methods is ‘manifestly feasible,’ another quotation from the earlier work.

We now have a new paper from the JPL team, looking further at this ongoing engineering study of a mission that would operate in the range of 550 to 900 AU, performing multipixel imaging of an exoplanet up to 100 light years away. The telescope is meter-class, the images producing a surface resolution measured in tens of kilometers. Again I will focus on a specific topic within the paper, the configuration of the architecture that would reach these distances. Those looking for the mission overview beyond this should consult the paper, the preprint of which is cited below.

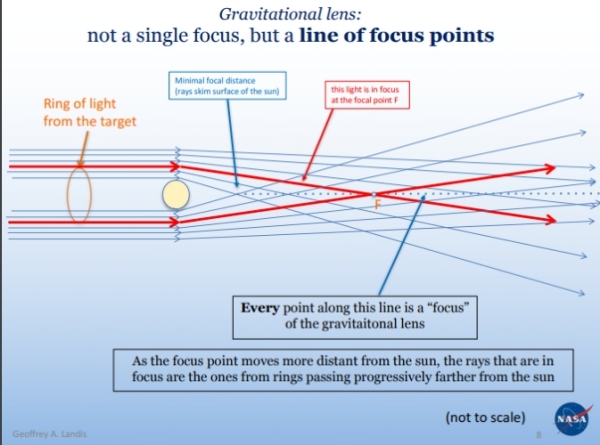

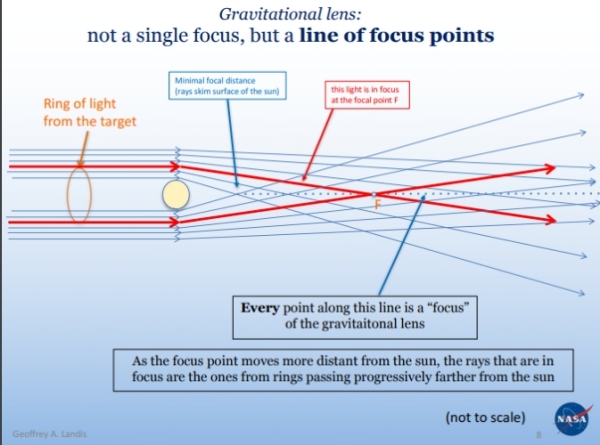

Bear in mind that the SGL (solar gravitational lens) region is, helpfully, not a focal ‘point’ but rather a cylinder, which means that a spacecraft stays within the focus as it moves further from the Sun. This movement also causes the signal to noise ratio to improve, and means we can hope to study effects like planetary rotation, seasonal variations and weather patterns over integration times that may amount to months or years.

Image: From Geoffrey Landis’ presentation at the 2021 IRG/TVIW symposium in Tucson, a slide showing the nature of the gravitational lens focus. Credit: Geoffrey Landis.

Considering that Voyager 1, our farthest spacecraft to date, is now at a ‘mere’ 156 AU, a journey that has taken 44 years, we have to find a way to move faster. The JPL team talks of reaching the focal region in less than 25 years, which implies a hyperbolic escape velocity of more than 25 AU per year. Chemical methods fail, giving us no more than 3 to 4 AU per year, while solar thermal and even nuclear thermal move us into a still unsatisfactory 10-12 AU per year in the best case scenario. The JPL team chooses solar sails in combination with a close perihelion pass of the Sun. The paper examines perihelion possibilities at 15 as well as 10 solar radii but notes that the design of the sailcraft and its material properties define what is going to be possible.
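To put those speeds on a common footing, here is a minimal sketch that converts AU per year into kilometers per second and works out the mean speed implied by the Voyager 1 figures above. The function names are my own, and the constant-speed arithmetic ignores the acceleration and deceleration a real trajectory would involve.

```python
AU_KM = 149_597_870.7                # kilometers per astronomical unit (IAU definition)
SECONDS_PER_YEAR = 365.25 * 86_400   # Julian year, an assumed convention


def au_per_year_to_km_s(speed_au_yr):
    """Convert a speed in AU per year to kilometers per second."""
    return speed_au_yr * AU_KM / SECONDS_PER_YEAR


def mean_speed_au_yr(distance_au, years):
    """Average speed needed to cover a distance in a given time."""
    return distance_au / years


def years_to_reach(distance_au, speed_au_yr):
    """Constant-speed travel time, ignoring the inbound and perihelion phases."""
    return distance_au / speed_au_yr


cases = [
    ("Voyager 1 (mean, 156 AU in 44 yr)", mean_speed_au_yr(156, 44)),
    ("chemical, upper figure", 4.0),
    ("solar/nuclear thermal, upper figure", 12.0),
    ("sail with close perihelion", 25.0),
]
for label, speed in cases:
    print(f"{label}: {speed:.1f} AU/yr = {au_per_year_to_km_s(speed):.1f} km/s")

print(f"550 AU at 25 AU/yr: {years_to_reach(550, 25):.0f} years")
```

One AU per year works out to roughly 4.74 km/s, so the 25 AU per year target is a little under 120 km/s, about seven times Voyager 1's mean speed.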

Remember that we have also been looking at the ongoing work at the Johns Hopkins Applied Physics Laboratory involving a mission called Interstellar Probe, which likewise is in need of high velocity to reach the distances needed to study the heliosphere from the outside (a putative goal of 1000 AU in 50 years has been suggested). The JHU/APL effort has just released a new paper of its own, and I’ll be referring to it in the near future, because thus far the researchers working under Ralph McNutt on the problem have not found a close perihelion pass, coupled with a propulsive burn but without a sail, to be sufficient for their purposes. But more on that later. Keep it in mind in relation to this, from the JPL paper:

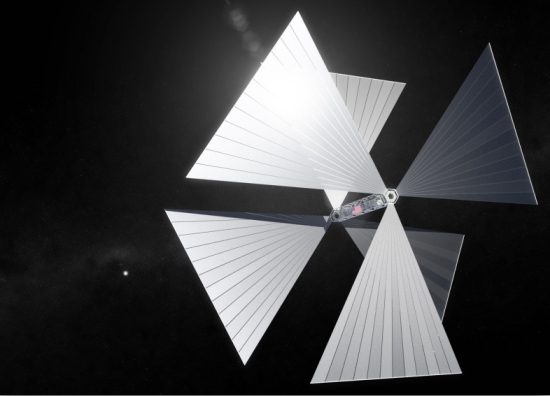

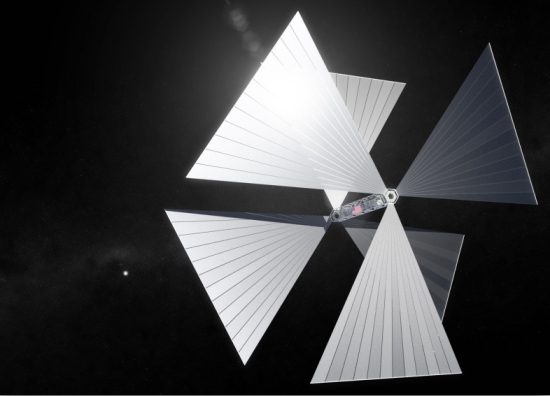

…the stresses on the sailcraft structure can be well understood. For the sailcraft, we considered among other known solar sail designs, one with articulated vanes (i.e., SunVane). While currently at a low technology readiness level (TRL), the SunVane does permit precision trajectory insertion during the autonomous passage through solar perigee. In addition, the technology permits trimming of the trajectory injection errors while still close to the Sun. This enables the precision placement of the SGL spacecraft on its path towards the image cylinder which is 1.3 km in diameter and some 600+ AU distant.

Is the SunVane concept the game-changer here? I looked at it 18 months ago (see JPL Work on a Gravitational Lensing Mission), where I used the image below to illustrate the concept. The sail is constructed of square panels aligned along a truss. In the Phase II study for NIAC that preceded the current papers, a sail based on SunVane design could achieve 25 AU per year – that would be arrival at 600 AU in 26 years in conjunction with a close solar pass – using a craft with total sail area of 45,000 square meters (that’s equivalent to a single square sail a little over 200 meters on a side).

Image: The SunVane concept. Credit: Darren D. Garber (Xplore, Inc).

With sail area distributed along the truss rather than confined to the sail’s center of gravity, this is a highly maneuverable design that continues to be of great interest. Maneuverability is a key factor as we look at injecting spacecraft into perihelion trajectory, where errors can be trimmed out while still in close proximity to the Sun.

But current thinking goes beyond flying a single spacecraft. What the JPL work has developed through the three NIAC phases and beyond is a mission built around a constellation of smaller spacecraft. The idea is chosen, the authors say, to enhance redundancy, enable the needed precision of navigation, remove the contamination of background light during SGL operations, and optimize the return of data. What intrigues me particularly is the use of in-flight assembly, with the major spacecraft modules placed on separate sailcraft. This will demand that the sailcraft fly in formation in order to effect the needed rendezvous for assembly.

Let’s home in on this concept, pausing briefly on the sail, for this mission will demand an attitude control system to manage the thrust vector and sail attitude once we have reached perihelion with our multiple craft, each making a perihelion pass followed by rendezvous with the other craft. I turn to the paper for more:

Position and velocity requirements for the incoming trajectory prior to perihelion are <1 km and ~1 cm/sec. Timing through perihelion passage is days to weeks with errors in entry-time compensated in the egress phase. As an example, if there is a large position and/or velocity error upon perihelion passage that translated to an angular offset of 100” from the nominal trajectory, there is time to correct this translational offset with the solar sail during the egress phase all the way out to the orbit of Jupiter. The sail’s lateral acceleration is capable of maneuvering the sailcraft back to the desired nominal state on the order of days depending on distance from the Sun. This maneuvering capability relaxes the perihelion targeting constraints and is well within current orbit determination knowledge threshold for the inner solar system which drive the ~1 km and ~1 cm/sec requirements.
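To get a feel for what a 100-arcsecond offset means, the sketch below converts it into a lateral displacement at a given distance using the small-angle approximation. This is my own illustration, not a calculation from the paper: it assumes the angle is measured from the Sun, and the 5.2 AU used for Jupiter's orbit is the usual mean value.

```python
import math

AU_KM = 149_597_870.7  # kilometers per astronomical unit


def lateral_offset_km(angle_arcsec, distance_au):
    """Small-angle lateral displacement for an angular offset seen from the Sun."""
    angle_rad = math.radians(angle_arcsec / 3600.0)
    return angle_rad * distance_au * AU_KM


# Hypothetical checkpoints along the egress phase.
for label, distance_au in [("Earth orbit", 1.0), ("Jupiter orbit", 5.2)]:
    offset = lateral_offset_km(100.0, distance_au)
    print(f"100 arcsec at {label} ({distance_au} AU): about {offset:,.0f} km")
```

Under these assumptions the offset grows linearly with distance, reaching several hundred thousand kilometers by Jupiter's orbit if nothing is done about it, which gives some sense of why the trimming is done early in the egress phase.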

Why the need to go modular and essentially put the craft together during the cruise phase? The paper points out that the 1-meter telescope that will be necessary cannot currently be produced in the mass and volume range needed to fit a CubeSat. The mission demands something on the order of a 100 kg spacecraft, which in turn would demand solar sails of extreme size as needed to reach the target velocity of 20 AU per year or higher. Such sails will be commonplace one day (I assume), but with the current state of the art, in-flight robotic assembly leverages our growing experience with miniaturization and small satellites and allows for a mission within a decade.

If in-flight assembly is used, because of the difficulties in producing very large sails, the spacecraft modules…are placed on separate sailcraft. After in-flight assembly, the optical telescope and if necessary, the thermal radiators are deployed. Analysis shows that if the vehicle carries a tiled RPS [radioisotope power system]…where the excess heat is used for maintaining spacecraft thermal balance, then there is no need for thermal radiators. The MCs [the assembled spacecraft] use electric propulsion (EP) to make all the necessary maneuvers for the cruise (~25 years) and science phase of the mission. The propulsion requirements for the science phase are a driver since the SGL spacecraft must follow a non inertial motion for the 10-year science mission phase.

According to the authors, numerous advantages accrue from using a modular approach with in-space assembly, including the ability to use rideshare services; i.e., we can launch modules as secondary payloads, with related economies in money and time. Moreover, such a use means that we can use conventional propulsion rather than sails as an option for carrying the cluster of sailcraft inbound toward perihelion in formation. In any case, at some point the sailcraft deploy their sails and establish the needed trajectory for the chosen solar perihelion point. After perihelion, the sails — whose propulsive qualities diminish with distance from the Sun — are ejected, perhaps nearing Earth orbit, as the sailcraft prepare for assembly.

Flying in formation, the sailcraft reduce their relative distance outbound and begin the in-space assembly phase while passing near Earth orbit. The mission demands that each of the 10-20 kg mass spacecraft be a fully functional nanosatellite that will use onboard thrusters for docking. Autonomous docking in space has already been demonstrated, essentially doing what the SGL mission will have to do, assembling larger craft from smaller ones. It’s worth noting, as the authors do, that NASA’s Space Technology Mission Directorate has already begun a project called On-Orbit Autonomous Assembly from Nanosatellites (OAAN) along with a CubeSat Proximity Operations Demonstration (CPOD) mission, so we see these ideas being refined.

What demands attention going forward is the needed development of proximity operation technologies, which range from sensor design to approach algorithms, all to be examined as study of the SGL mission continues. There was a time when I would have found this kind of self-assembly en route to deep space fanciful, but there was also a time when I would have said landing a rocket booster on its tail for re-use was fanciful, and it’s clear that self-assembly in the SGL context is plausible. The recent deployment of the James Webb Space Telescope reinforces the same point.

The JPL team has been working with simulation tools based on concurrent engineering methodology (CEM), modifying current software to explore how such ‘fractionated’ spacecraft can be assembled. Note this:

Two types of distributed functionality were explored. A fractionated spacecraft system that operates as an “organism” of free-flying units that distribute function (i.e., virtual vehicle) or a configuration that requires reassembly of the apportioned masses. Given that the science phase is the strong driver for power and propellant mass, the trade study also explored both a 7.5 year (to ~800 AU) and 12.5 year (to ~900 AU) science phase using a 20 AU/yr exit velocity as the baseline. The distributed functionality approach that produced the lowest functional mass unit is a cluster of free-flying nanosatellites…each propelled by a solar sail but then assembled to form a MC [mission capable] spacecraft.
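The two science-phase options can be checked against the 20 AU per year baseline with simple arithmetic. The sketch below is my own: it assumes constant speed through the science phase and treats the end distances of roughly 800 and 900 AU as round numbers. The implied starting distance is an inference from those figures, not a value the paper states here.

```python
EXIT_SPEED_AU_YR = 20.0  # baseline exit velocity used in the trade study


def science_phase_span(duration_yr, end_distance_au, speed_au_yr=EXIT_SPEED_AU_YR):
    """Return (distance covered, implied start distance) for a science phase."""
    covered = speed_au_yr * duration_yr
    return covered, end_distance_au - covered


for duration, end in [(7.5, 800.0), (12.5, 900.0)]:
    covered, start = science_phase_span(duration, end)
    print(f"{duration} yr phase: covers {covered:.0f} AU, "
          f"implying a start near {start:.0f} AU")
```

Both options point to a science phase that opens at about 650 AU, comfortably past the 550 AU at which the focal region begins.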

Image: Various approaches will emerge about the kind of spacecraft that might fly a mission to the gravitational focus of the Sun. In this image (not taken from the Turyshev et al. paper), swarms of small sailcraft capable of self-assembly into a larger spacecraft are depicted that could fly to a spot where our Sun’s gravity distorts and magnifies the light from a nearby star system, allowing us to capture a sharp image of an Earth-like exoplanet. Credit: NASA/The Aerospace Corporation.

The current paper goes deeply into the attributes of the kind of nanosatellite that can assemble the final design, and I’ll send you to it for further details. Each of the component craft has the capability of a 6U CubeSat/nanosat and each carries components of the final craft, from optical communications to primary telescope mirror. Current thinking is that the design is in the shape of a round disk about 1 meter in diameter and 10 cm thick, with a carbon fiber composite scaffolding. The idea is to assemble the final craft as a stack of these units, producing the final round cylinder.

What a fascinating, gutsy mission concept, and one with the possibility of returning extraordinary data on a nearby exoplanet. The modular approach can be used to enhance redundancy, the authors note, as well as allowing for reconfiguration to reduce the risk of mission failure. Self-assembly leverages current advances in miniaturization, composite materials, and computing as reflected in the proliferation of CubeSat and nanosat technologies. What this engineering study is pointing to is a mission to the solar gravity lens that seems feasible with near-term technologies.

The paper is Helvajian et al., “A mission architecture to reach and operate at the focal region of the solar gravitational lens,” now available as a preprint. The earlier report on the study’s progress is “Resolved imaging of exoplanets with the solar gravitational lens,” (preprint). The Phase II NIAC report on this work is Turyshev & Toth, “Direct Multipixel Imaging and Spectroscopy of an Exoplanet with a Solar Gravity Lens Mission,” Final Report NASA Innovative Advanced Concepts Phase II (2020). Full text.

by Paul Gilster | Apr 19, 2022 | Missions |

We’ve talked about the ongoing work at the Jet Propulsion Laboratory on the Sun’s gravitational focus at some length, most recently in JPL Work on a Gravitational Lensing Mission, where I looked at Slava Turyshev and team’s Phase II report to the NASA Innovative Advanced Concepts office. The team is now deep into the work on their Phase III NIAC study, with a new paper available in preprint form. Dr. Turyshev tells me it can be considered a summary as well as an extension of previous results, and today I want to look at the significance of one aspect of this extension.

There are numerous reasons for getting a spacecraft to the distance needed to exploit the Sun’s gravitational lens – where the mass of our star bends the light of objects behind it to produce a lens with extraordinary properties. The paper, titled “Resolved Imaging of Exoplanets with the Solar Gravitational Lens,” notes that at optical or near-optical wavelengths, the amplification of light is on the order of ~2 × 10¹¹, with equally impressive angular resolution. If we can reach this region beginning at 550 AU from the Sun, we can perform direct imaging of exoplanets.

We’re talking multi-pixel images, and not just of huge gas giants. Images of planets the size of Earth around nearby stars, in the habitable zone and potentially life-bearing.
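Where does the 550 AU figure come from? A minimal sketch, using the standard weak-field result that a ray passing the Sun at impact parameter b is deflected by 4GM/(c²b), so that rays grazing the solar limb cross the optical axis at b²c²/(4GM). The constants are standard published values; the function name is mine, and the calculation ignores the corona and every other real-world complication.

```python
GM_SUN = 1.32712440018e20   # solar gravitational parameter, m^3 s^-2
C = 299_792_458.0           # speed of light, m/s
R_SUN_M = 6.957e8           # nominal solar radius, m
AU_M = 1.495978707e11       # meters per astronomical unit


def focal_distance_au(impact_parameter_m):
    """Distance at which a ray with this impact parameter crosses the axis."""
    deflection_rad = 4.0 * GM_SUN / (C ** 2 * impact_parameter_m)
    return impact_parameter_m / deflection_rad / AU_M


for factor in (1.0, 1.1, 1.2):
    distance = focal_distance_au(factor * R_SUN_M)
    print(f"impact parameter {factor:.1f} R_sun -> focus near {distance:.0f} AU")
```

Rays skimming the solar limb come to a focus at roughly 548 AU, and rays passing farther from the Sun focus farther out, which is why the focus is a line stretching outward from about 550 AU rather than a single point.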

Other methods of observation give way to the power of the solar gravitational lens (SGL) when we consider that, according to Turyshev and co-author Viktor Toth’s calculations, to get a multi-pixel image of an Earth-class planet at 30 parsecs with a diffraction-limited telescope, we would need an aperture of 90 kilometers, hardly a practical proposition. Optical interferometers, too, are problematic, for even they require long baselines and apertures in the tens of meters, each equipped with its own coronagraph (or conceivably a starshade) to block stellar light. As the paper notes:

Even with these parameters, interferometers would require integration times of hundreds of thousands to millions of years to reach a reasonable signal-to-noise ratio (SNR) of ≳ 7 to overcome the noise from exo-zodiacal light. As a result, direct resolved imaging of terrestrial exoplanets relying on conventional astronomical techniques and instruments is not feasible.

Integration time is essentially the time it takes to gather all the data that will result in the final image. Obviously, we’re not going to send a mission to the gravitational lensing region if it takes a million years to gather up the needed data.
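The 90-kilometer aperture mentioned above can be roughly recovered from the Rayleigh criterion. The sketch below is my own back-of-the-envelope version, not the authors' calculation: it assumes a wavelength of 1 micron and asks only that the telescope resolve the disk of an Earth-sized planet as a single element.

```python
PARSEC_M = 3.0857e16         # meters per parsec
EARTH_DIAMETER_M = 1.2742e7  # mean diameter of Earth, m


def required_aperture_m(feature_size_m, distance_m, wavelength_m):
    """Aperture whose Rayleigh limit (1.22 * wavelength / D) matches the feature."""
    angular_size_rad = feature_size_m / distance_m
    return 1.22 * wavelength_m / angular_size_rad


aperture = required_aperture_m(
    feature_size_m=EARTH_DIAMETER_M,
    distance_m=30 * PARSEC_M,
    wavelength_m=1.0e-6,  # assumed observing wavelength
)
print(f"Aperture to resolve an Earth-sized disk at 30 pc: {aperture / 1000:.1f} km")
```

With these inputs the answer comes out a little under 90 km, and putting more than one resolution element across the disk would scale the aperture up in proportion.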

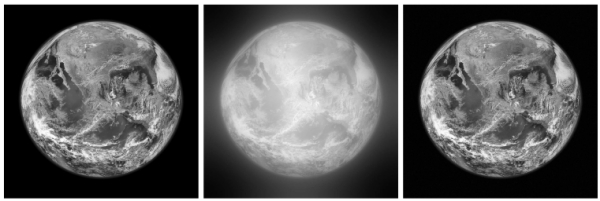

Image: Various approaches will emerge about the kind of spacecraft that might fly a mission to the gravitational focus of the Sun. In this image (not taken from the Turyshev et al. paper), swarms of small solar sail-powered spacecraft are depicted that could fly to a spot where our Sun’s gravity distorts and magnifies the light from a nearby star system, allowing us to capture a sharp image of an Earth-like exoplanet. Credit: NASA/The Aerospace Corporation.

But once we reach the needed distance, how do we collect an image? Turyshev’s team has been studying the imaging capabilities of the gravitational lens and analyzing its optical properties, allowing the scientists to model the deconvolution of an image acquired by a spacecraft at these distances from the Sun. Deconvolution means reducing noise and hence sharpening the image with enhanced contrast, as we do when removing atmospheric effects from images taken from the ground.
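For readers who have not met deconvolution before, here is a toy one-dimensional illustration in plain Python. It is emphatically not the team's method: the scene, the point spread function and the naive inverse filter are all invented for the example, and there is no noise. A known scene is blurred by circular convolution with a known PSF, then recovered by dividing in the Fourier domain.

```python
import cmath


def dft(values, inverse=False):
    """Naive O(n^2) discrete Fourier transform (forward or inverse)."""
    n = len(values)
    sign = 1 if inverse else -1
    out = []
    for k in range(n):
        total = sum(v * cmath.exp(sign * 2j * cmath.pi * k * t / n)
                    for t, v in enumerate(values))
        out.append(total / n if inverse else total)
    return out


def circular_convolve(signal, kernel):
    """Blur a signal with a kernel, wrapping around at the edges."""
    n = len(signal)
    return [sum(signal[j] * kernel[(i - j) % n] for j in range(n))
            for i in range(n)]


def inverse_filter(blurred, kernel):
    """Undo a known blur by dividing spectra (only safe when noise-free)."""
    spectrum = [b / h for b, h in zip(dft(blurred), dft(kernel))]
    return [value.real for value in dft(spectrum, inverse=True)]


scene = [0.0, 0.0, 1.0, 4.0, 1.0, 0.0, 0.0, 2.0]   # hypothetical "true" brightness
psf = [0.6, 0.2, 0.0, 0.0, 0.0, 0.0, 0.0, 0.2]     # hypothetical symmetric blur
blurred = circular_convolve(scene, psf)
recovered = inverse_filter(blurred, psf)

print("blurred:  ", [round(v, 3) for v in blurred])
print("recovered:", [round(v, 3) for v in recovered])
```

The recovery is exact here only because the blur is known and the data are clean. Dividing spectra amplifies any noise wherever the PSF's spectrum is small, which is why signal-to-noise ratio and integration time dominate the discussion that follows.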

All of this becomes problematic when we’re using the Sun’s gravitational lens, for we are observing exoplanet light in the form of an ‘Einstein ring’ around the Sun, where lensed light from the background object appears in the form of a circle. This runs into complications from the Sun’s corona, which produces significant noise in the signal. The paper examines the team’s work on solar coronagraphs to block coronal light while letting through light from the Einstein ring. An annular coronagraph aboard the spacecraft seems a workable solution. For more on this, see the paper.

An earlier study analyzed the solar corona’s role in reducing the signal-to-noise ratio, which extended the time needed to integrate the full image. In that work, the time needed to recover a complex multi-pixel image from a nearby exoplanet was well beyond the scope of a practical mission. But the new paper presents an updated model of the solar corona whose results have been validated in numerical simulations under various methods of deconvolution. What leaps out here is the issue of pixel spacing in the image plane. The results demonstrate that a mission for high resolution exoplanet imaging is, in the authors’ words, ‘manifestly feasible.’

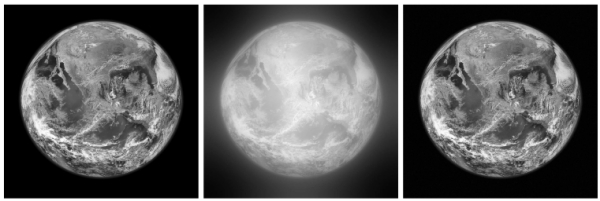

Pixel spacing is an issue because of the size of the image we are trying to recover. The image of an exoplanet the size of the Earth at 1.3 parsecs, which is essentially the distance of Proxima Centauri from the Earth, when projected onto an image plane at 1200 AU from the Sun, is almost 60 kilometers wide. We are trying to create a megapixel image, and must take account of the fact that individual image pixels are not adjacent. In this case, they are 60 meters apart. It turns out that this actually reduces the integration time of the data to produce the image we are looking for.

From the paper [italics mine]:

We estimated the impact of mission parameters on the resulting integration time. We found that, as expected, the integration time is proportional to the square of the total number of pixels that are being imaged. We also found, however, that the integration time is reduced when pixels are not adjacent, at a rate proportional to the inverse square of the pixel spacing.

Consequently, using a fictitious Earth-like planet at the Proxima Centauri system at z0 = 1.3 pc from the Earth, we found that a total cumulative integration time of less than 2 months is sufficient to obtain a high quality, megapixel scale deconvolved image of that planet. Furthermore, even for a planet at 30 pc from the Earth, good quality deconvolution at intermediate resolutions is possible using integration times that are comfortably consistent with a realistic space mission.
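The numbers in this passage hang together with simple arithmetic. The sketch below is mine, with invented function names: it derives the pixel spacing from the image width and the 1024 × 1024 grid, then expresses the quoted scaling (integration time proportional to the square of the pixel count and to the inverse square of the spacing) as a ratio against a reference case. It says nothing about absolute integration times, which depend on the full noise model in the paper.

```python
def pixel_spacing_m(image_width_m, pixels_per_side):
    """Spacing between adjacent image pixels across the projected image."""
    return image_width_m / pixels_per_side


def relative_integration_time(total_pixels, spacing_m, ref_total_pixels, ref_spacing_m):
    """Integration time relative to a reference, assuming t ~ N^2 / d^2."""
    return (total_pixels / ref_total_pixels) ** 2 * (ref_spacing_m / spacing_m) ** 2


spacing = pixel_spacing_m(image_width_m=60_000, pixels_per_side=1024)
print(f"Pixel spacing for a 60 km image at 1024 x 1024: {spacing:.1f} m")

# Same pixel count, but pixels twice as far apart as in the reference case.
wider = relative_integration_time(1024 ** 2, 60.0, 1024 ** 2, 30.0)
# Same spacing, but a 512 x 512 image instead of 1024 x 1024.
coarser = relative_integration_time(512 ** 2, 60.0, 1024 ** 2, 60.0)
print(f"Doubling the spacing: {wider:.2f}x the reference time")
print(f"Quartering the pixel count: {coarser:.4f}x the reference time")
```

A 60-kilometer image divided into 1024 pixels per side gives a spacing just under 60 meters, matching the figure in the text.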

Image: This is Figure 5 from the paper. In the caption, PSF refers to the Point Spread Function, which is essentially the response of the light-gathering instrument to the object studied. It measures how much the light has been distorted by the instrument. Here the SGL itself is considered as the source of the distortion. The full caption: Simulated monochromatic imaging of an exo-Earth at z0 = 1.3 pc from z = 1200 AU at N = 1024 × 1024 pixel resolution using the SGL. Left: the original image. Middle: the image convolved with the SGL PSF, with noise added at SNR_C = 187, consistent with a total integration time of ~47 days. Right: the result of deconvolution, yielding an image with SNR_R = 11.4. Credit: Turyshev et al.

The solar gravity lens presents itself not as a single focal point but a cylinder, meaning that we can stay within the focus as we move further from the Sun. The authors find that as the spacecraft moves ever further out, the signal to noise ratio improves. This heightening in resolution persists even with the shorter integration times, allowing us to study effects like planetary rotation. This is, of course, ongoing work, but these results cannot but be seen as encouraging for the concept of a mission to the gravity focus, giving us priceless information for future interstellar probes.

The paper is Turyshev & Toth, “Resolved imaging of exoplanets with the solar gravitational lens,” available for now only as a preprint. The Phase II NIAC report is Turyshev et al., “Direct Multipixel Imaging and Spectroscopy of an Exoplanet with a Solar Gravity Lens Mission,” Final Report NASA Innovative Advanced Concepts Phase II (2020). Full text.

by Paul Gilster | Apr 15, 2022 | Missions |

A recent paper in Acta Astronautica reminds me that the Mission Concept Report on the Interstellar Probe mission has been available on the team’s website since December. Titled Interstellar Probe: Humanity’s Journey to Interstellar Space, this is the result of lengthy research out of Johns Hopkins Applied Physics Laboratory under the aegis of Ralph McNutt, who has served as principal investigator. I bring the mission concept up now because the new paper draws directly on the report and is essentially an overview for the community of this team’s findings.

We’ve looked extensively at Interstellar Probe in these pages (see, for example, Interstellar Probe: Pushing Beyond Voyager and Assessing the Oberth Maneuver for Interstellar Probe, both from 2021). The work on this mission anticipates the Solar and Space Physics 2023-2032 Decadal Survey, and presents an analysis of what would be the first mission designed from the top down as an interstellar craft. In that sense, it could be seen as a successor to the Voyagers, but one expressly made to probe the local interstellar medium, rather than reporting back on instruments designed originally for planetary science.

The overview paper is McNutt et al., “Interstellar probe – Destination: Universe!,” a title that recalls (at least to me) A. E. van Vogt’s wonderful collection of short stories by the same name (1952), whose seminal story “Far Centaurus” so keenly captures the ‘wait’ dilemma; i.e., when do you launch when new technologies may pass the craft you’re sending now along the way? In the case of this mission, with a putative launch date at the end of the decade, the question forces us into a useful heuristic: Either we keep building and launching or we sink into stasis, which drives little technological innovation. But what is the pace of such progress?

I say build and fly if at all feasible. Whether this mission, whose charter is basically “[T]o travel as far and as fast as possible with available technology…” gets the green light will be determined by factors such as the response it generates within the heliophysics community, how it fares in the upcoming decadal report, and whether this four-year engineering and science trade study can be implemented in a tight time frame. All that goes to feasibility. It’s hard to argue against it in terms of heliophysics, for what better way to study the Sun than through its interactions with the interstellar medium? And going outside the heliosphere to do so makes it an interstellar mission as well, with all that implies for science return.

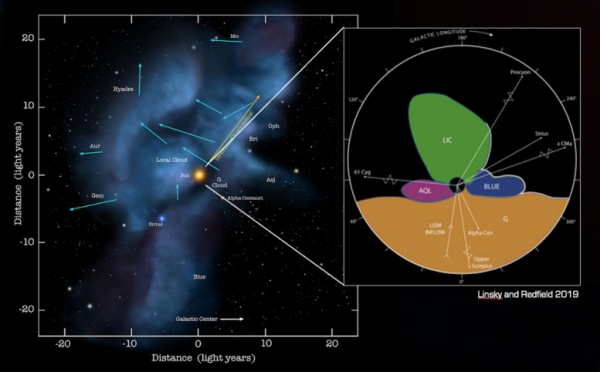

Image: This is Figure 2-1 from the Mission Concept Report. Caption: During the evolution of our solar system, its protective heliosphere has plowed through dramatically different interstellar environments that have shaped our home through incoming interstellar gas, dust, plasma, and galactic cosmic rays. Interstellar Probe on a fast trajectory to the very local interstellar medium (VLISM) would represent a snapshot to understand the current state of our habitable astrosphere in the VLISM, to ultimately be able to understand where our home came from and where it is going. Credit: Johns Hopkins Applied Physics Laboratory.

Crossing through the heliosphere to the “Very Local” interstellar medium (VLISM) is no easy goal, especially when the engineering requirements to meet the decadal survey specifications take us to a launch no later than January of 2030. Other basic requirements include the ability to take and return scientific data from 1000 AU (with all that implies about long-term function in instrumentation), with power levels no more than 600 W at the beginning of the mission and no more than half of that at its end, and a mission working lifetime of 50 years. Bear in mind that our Voyagers, after all these years, are currently at 155 and 129 AU respectively. A successor to Voyager will have to move much faster.

But have a look at the overview, which is available in full text. Dr. McNutt tells me that we can expect a companion paper from Pontus Brandt (likewise at APL) on the science aspects of the larger Mission Concept Report; this is likewise slated for publication in Acta Astronautica. According to McNutt, the APL contract from NASA’s Heliophysics Division completes on April 30 of this year, so the ball now lands in the court of the Solar and Space Physics Decadal Survey Team. And let me quote his email:

“Reality is never easy. I have to keep reminding people that the final push on a Solar Probe began with a conference in 1977, many studies at JPL through 2001, then studies at APL beginning in late 2001, the Decadal Survey of that era, etc. etc. with Parker Solar Probe launching in August 2018 and in the process now of revolutionizing our understanding of the Sun and its interaction with the interplanetary medium.”

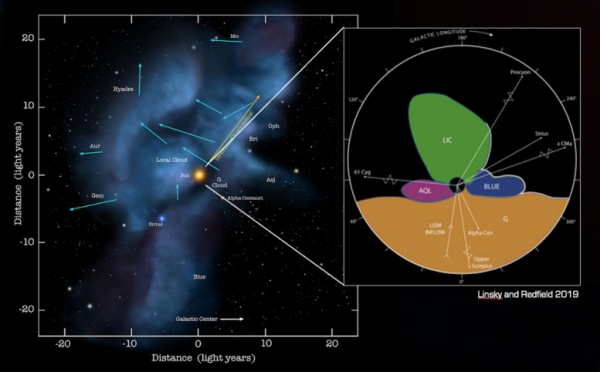

Image: This is Figure 2-8 from the Mission Concept Report. Caption: Recent studies suggest that the Sun is on the path to leave the LIC [Local Interstellar Cloud] and may be already in contact with four interstellar clouds with different properties (Linsky et al., 2019). (Left: Image credit to Adler Planetarium, Frisch, Redfield, Linsky.)

Our society has all too little patience with decades-long processes, much less multi-generational goals. But we do have to understand how long it takes missions to go through the entire sequence before launch. It should be obvious that a 2030 launch date sets up what the authors call a ‘technology horizon’ that forces realism with respect to the physics and material properties at play here. Note this, from the paper:

…the enforcement of the “technology horizon” had two effects: (1) limit thinking to what can “be done now” with maybe some “minor” extensions and (2) rule out low-TRL [technology readiness level] “technologies” which (1) we have no real idea how to develop, e.g., “fusion propulsion” or “gas-core fission”, or which we think we know how to develop but have no means of securing the requisite funds, e.g., NEP (while some might argue with this assertion, the track record to date does not argue otherwise).

Thus the dilemma of interstellar studies. Opportunities to fund and fly missions are sparse, political support always problematic, and deadlines shape what is possible. We have to be realistic about what we can do now, while also widening our thinking to include the kind of research that will one day pay off in long-term results. Developing and nourishing low-TRL concepts has to be a vital part of all this, which is why think tanks like NASA’s Innovative Advanced Concepts office are essential, and why likewise innovative ideas emerging from the commercial sector must be considered.

Both tracks are vital as we push beyond the Solar System. McNutt refers to a kind of ‘relay race’ that began with Pioneer 10 and has continued through Voyagers 1 and 2. A mission dedicated to flying beyond the heliopause picks up that baton with an infusion of new instrumentation and science results that take us “outward through the heliosphere, heliosheath, and near (but not too near) interstellar space over almost five solar cycles…” Studies like these assess the state of the art (over 100 mission approaches are quantified and evaluated), defining our limits as well as our ambitions.

The paper is McNutt et al., “Interstellar probe – Destination: Universe!” Acta Astronautica Vol. 196 (July 2022), 13-28 (full text).

by Paul Gilster | Mar 25, 2022 | Missions |

Epsilon Eridani has always intrigued me because in astronomical terms, it’s not all that far from the Sun. I can remember as a kid noting which stars were closest to us – the Centauri trio, Tau Ceti and Barnard’s Star – wondering which of these would be the first to be visited by a probe from Earth. Later, I thought we would have quick confirmation of planets around Epsilon Eridani, since it’s a scant (!) 10.5 light years out, but despite decades of radial velocity data, astronomers have only found one gas giant, and even that confirmation was slowed by noise-filled datasets.

Even so, Epsilon Eridani b is confirmed. Also known as Ægir (named for a figure in Old Norse mythology), it’s in a 3.5 AU orbit, circling the star every 7.4 years, with a mass somewhere between 0.6 and 1.5 times that of Jupiter. But there is more: We also get two asteroid belts in this system, as Gerald Jackson points out in his new paper on using antimatter for deceleration into nearby star systems, as well as another planet candidate.

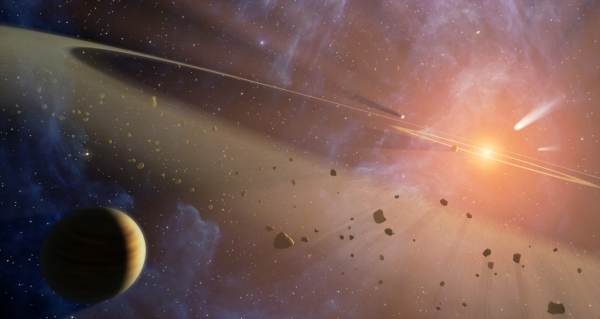

Image: This artist’s conception shows what is known about the planetary system at Epsilon Eridani. Observations from NASA’s Spitzer Space Telescope show that the system hosts two asteroid belts, in addition to previously identified candidate planets and an outer comet ring. Epsilon Eridani is located about 10 light-years away in the constellation Eridanus. It is visible in the night skies with the naked eye. The system’s inner asteroid belt appears as the yellowish ring around the star, while the outer asteroid belt is in the foreground. The outermost comet ring is too far out to be seen in this view, but comets originating from it are shown in the upper right corner. Credit: NASA/JPL-Caltech/T. Pyle (SSC).

This is a young system, estimated at less than one billion years. For both Epsilon Eridani and Proxima Centauri, deceleration is crucial for entering the planetary system and establishing orbit around a planet. The amount of antimatter available will determine our deceleration options. Assuming a separate method of reaching Proxima Centauri in 97 years (perhaps beamed propulsion getting the payload up to 0.05c), we need 120 grams of antiproton mass to brake into the system. A 250 year mission to Epsilon Eridani at this velocity would require the same 120 grams.

Thus we consider the twin poles of difficulty when it comes to antimatter, the first being how to produce enough of it (current production levels are measured in nanograms per year), the second how to store it. Jackson, who has long championed the feasibility of upping our antimatter production, thinks we need to reach 20 grams per year before we can start thinking seriously about flying one of these missions. But as both he and Bob Forward have pointed out, there are reasons why we produce so little now, and reasons for optimism about moving to a dedicated production scenario.

Past antiproton production was constrained by the need to produce antiproton beams for high energy physics experiments, requiring strict longitudinal and transverse beam characteristics. Their solution was to target a 120 GeV proton beam into a nickel target [41] followed by a complex lithium lens [42]. The world record for the production of antimatter is held by Fermilab. Antiproton production started in 1986 and ended in 2011, achieving an average production rate of approximately 2 ng/year [43]. The record instantaneous production rate was 3.6 ng/year [44]. In all, Fermilab produced and stored 17 ng of antiprotons, over 90% of the total planetary production.
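To see how far those rates are from what a mission needs, the sketch below asks how long it would take to accumulate the 120 grams discussed earlier at the historical rates and at the 20 grams per year Jackson has in mind. The function name is mine, and the calculation assumes lossless storage.

```python
NG_PER_GRAM = 1e9  # nanograms in one gram


def years_to_produce(mass_g, rate_ng_per_year):
    """Years needed to accumulate a mass at a steady production rate."""
    return mass_g * NG_PER_GRAM / rate_ng_per_year


MISSION_MASS_G = 120.0  # antiproton mass cited for braking into the target system

rates = [
    ("average historical rate (2 ng/yr)", 2.0),
    ("record rate (3.6 ng/yr)", 3.6),
    ("dedicated facility (20 g/yr)", 20.0 * NG_PER_GRAM),
]
for label, rate in rates:
    years = years_to_produce(MISSION_MASS_G, rate)
    print(f"{label}: {years:.3g} years")
```

At the historical average the answer is tens of billions of years, against six years for a dedicated 20 g/yr facility: a gap of ten orders of magnitude in production rate.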

Those are sobering numbers. Can we cast antimatter production in a different light? Jackson suggests using our accelerators in a novel way, colliding two proton beams in an asymmetric collider scenario, in which one beam is given more energy than the other. The result will be a coherent antiproton beam that, moving downstream in the collider, is subject to further manipulation. This colliding beam architecture makes for a less expensive accelerator infrastructure and sharply reduces the costs of operation.

The theoretical costs for producing 20 grams of antimatter per year are calculated under the assumption that the antimatter production facility is powered by a square solar array 7 km x 7 km in size that would be sufficient to supply all of the needed 7.6 GW of facility power. Using present-day costs for solar panels, the capital cost for this power plant comes in at $8 billion (i.e., the cost of 2 SLS rocket launches). $80 million per year covers operation and maintenance. Here’s Jackson on the cost:

…3.3% of the proton-proton collisions yields a useable antiproton, a number based on detailed particle physics calculations [45]. This means that all of the kinetic energy invested in 66 protons goes into each antiproton. As a result, the 20 g/yr facility would theoretically consume 7.6 GW of electrical power (assuming 100% conversion efficiencies). Operating 24/7 this power level corresponds to an energy usage of 67 billion kW-hrs per year. At a cost of $0.01 per kW-hr the annual operating cost of the facility would be $670 million. Note that a single Gerald R. Ford-class aircraft carrier costs $13 billion! The cost of the Apollo program adjusted for 2020 dollars was $194 billion.
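The energy and cost arithmetic here is easy to check. The sketch below starts from the 7.6 GW facility power cited for the solar array and the $0.01 per kW-hr price in the quote. The function names are hypothetical, and I assume an 8,760-hour year of continuous operation.

```python
HOURS_PER_YEAR = 24 * 365  # continuous 24/7 operation, ignoring leap days
KW_PER_GW = 1e6            # kilowatts in one gigawatt


def annual_energy_kwh(power_gw: float) -> float:
    """Energy used in one year of continuous operation, in kW-hrs."""
    return power_gw * KW_PER_GW * HOURS_PER_YEAR


def annual_cost_usd(power_gw: float, price_per_kwh: float) -> float:
    """Yearly electricity bill at a flat price per kW-hr."""
    return annual_energy_kwh(power_gw) * price_per_kwh


energy = annual_energy_kwh(7.6)
cost = annual_cost_usd(7.6, 0.01)

print(f"Energy: {energy / 1e9:.1f} billion kW-hrs per year")
print(f"Cost:   ${cost / 1e6:.0f} million per year")
```

Rounded to two significant figures, the results match the 67 billion kW-hrs and $670 million in the quoted passage.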

Science Along the Way

Launching missions that take decades, and in some cases centuries, to reach their destination calls for good science return wherever possible, and Jackson argues that an interstellar mission will determine a great deal about its target star just by aiming for it. Past missions like New Horizons could count on the positions of targets like Pluto and Arrokoth being programmed into the spacecraft computers, with the preliminary positioning information coming from Earth observation. Our interstellar craft will need more advanced tools. It will have to be capable of making its own astrometric observations and sending its calculations to the propulsion system for deceleration into the target system and orbital insertion, thus refining exoplanet parameters on the fly.

Remember that what we are considering is a hybrid mission, using one form of propulsion to attain interstellar cruise velocity, and antimatter as the method for deceleration. You might recall, for example, the starship ISV Venture Star in the film Avatar, which uses both antimatter engines and a photon sail. What Jackson has added to the mix is a deep dive into the possibilities of antimatter for turning what would have been a flyby mission into a long-lasting planet orbiter.

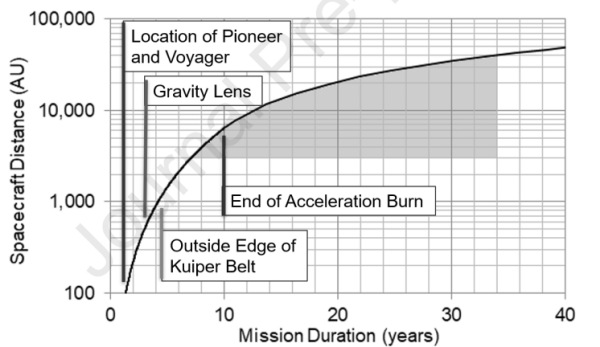

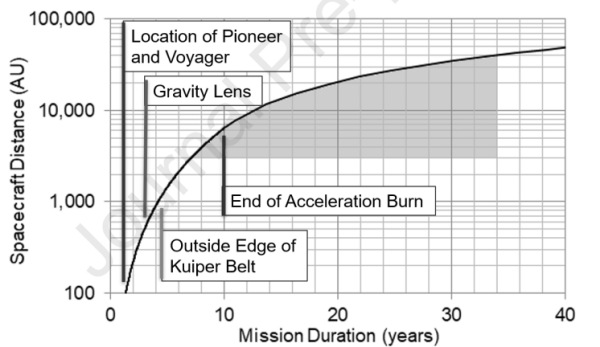

Let’s consider what happens along the line of flight as a spacecraft designed with these methods makes its way out of the Solar System. If we take a velocity of 0.02c, our spacecraft passes the outgoing Voyager and Pioneer spacecraft in two years, and within three more years it passes into the gravitational lensing regions of the Sun beginning at 550 AU. A mere five years has taken the vehicle through the Kuiper Belt and moved it out toward the inner Oort Cloud, where little is currently known about such things as the actual density distribution of Oort objects as a function of radius from the Sun. We can also expect to gain data on any comparable cometary clouds around Proxima Centauri or Epsilon Eridani as the spacecraft continues its journey.

By Jackson’s calculations, when we’re into the seventh year of such a mission, the craft is encountering Oort Cloud objects at a pretty good clip, with an estimated 450 Oort objects within 0.1 AU of its trajectory based on current assumptions. Moving at 1 AU every 5.6 hours, we can extrapolate an encounter rate of one object per month over a period of three decades as the craft transits this region. Jackson also notes that data on the interstellar medium, including the Local Interstellar Cloud, will be prolific, including particle spectra, galactic cosmic ray spectra, dust density distributions, and interstellar magnetic field strength and direction.
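The encounter-rate extrapolation can be reproduced from the numbers in the paragraph above. This is a rough sketch only: it assumes the 450 objects are spread evenly along the path, which is my simplification and not a claim from the paper, and the variable names are my own.

```python
# Figures taken from the text above.
OBJECTS_NEAR_TRAJECTORY = 450  # estimated Oort objects within 0.1 AU of the path
TRANSIT_YEARS = 30             # "three decades" spent crossing the region
HOURS_PER_AU = 5.6             # quoted travel time per astronomical unit

HOURS_PER_YEAR = 24 * 365.25   # average year length, including leap days

# Average number of close passes per month of flight.
encounters_per_month = OBJECTS_NEAR_TRAJECTORY / (TRANSIT_YEARS * 12)

# Path length covered during the transit, in AU.
distance_au = TRANSIT_YEARS * HOURS_PER_YEAR / HOURS_PER_AU

# Mean spacing between encounters, assuming the objects are evenly distributed.
au_between_encounters = distance_au / OBJECTS_NEAR_TRAJECTORY

print(f"Encounters per month: {encounters_per_month:.2f}")
print(f"Distance covered: {distance_au:,.0f} AU")
print(f"Mean spacing: {au_between_encounters:.0f} AU")
```

The rate works out to a little over one object per month, in line with the extrapolation, with successive encounters separated on average by roughly a hundred AU of travel.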

Image: This is Figure 7 from the paper. Caption: Potential early science return milestones for a spacecraft undergoing a 10-year acceleration burn with a cruise velocity of 0.02c. Credit: Gerald Jackson.

It’s interesting to compare science return over time with what we’ve achieved with the Voyager missions. Voyager 2 reached Jupiter about two years after launch in 1977, and passed Saturn in four. It would take twice that time to reach Uranus (8.4 years into the mission), while Neptune was reached after 12. Voyager 2 crossed the heliopause after 41.2 years of flight, and as we all know, both Voyagers are still returning data. For purposes of comparison, the Voyager 2 mission cost $865 million in 1973 dollars.

Thus, while funding missions demands early return on investment, there should be abundant opportunity for science in the decades of interstellar flight between the Sun and Proxima Centauri, with surprises along the way, just as the Voyagers occasionally throw us a curveball – consider the twists and wrinkles detected in the Sun’s magnetic field as lines of magnetic force criss-cross, and reconnect, producing a kind of ‘foam’ of magnetic bubbles, all this detected over a decade ago in Voyager data. The long-term return on investment is considerable, as it includes years of up-close exoplanet data, with orbital operations around, for example, Proxima Centauri b.

It will be interesting to see Jackson’s final NIAC report, which he tells me will be complete within a week or so. As to the future, a glimpse at one aspect of it is available in the current paper, which revisits what the original NIAC project description called “a powerful LIDAR system…to illuminate, identify and track flyby candidates” in the Oort Cloud. But as the paper notes, this now seems impractical:

One preliminary conclusion is that active interrogation methods for locating 10 km diameter objects, for example with the communication laser, are not feasible even with megawatts of available electrical power.

We’ll also find out in the NIAC report whether or not Jackson’s idea of using gram-scale chipcraft for closer examination of, say, objects in the Oort Cloud has stood up to scrutiny in the subsequent work. This hybrid mission concept using antimatter is rapidly evolving, and what lies ahead, he tells me in a recent email, is a series of papers expanding on antimatter production and storage, and further examining both the electrostatic trap and electrostatic nozzle. Because drastically increasing antimatter production and learning how to make the most of small amounts are both critical for our hopes to someday create antimatter propulsion, I’ll be tracking this report closely.