by Paul Gilster | Feb 23, 2011 | Communications and Navigation |

You would think that Internet pioneer Vint Cerf would be too busy with the upcoming transition from Internet Protocol version 4 to IPv6 — not to mention his other duties as Google’s Chief Internet Evangelist — to keep an eye on space communications. But the man behind the Net’s TCP/IP protocols never lets the human future off-planet get too far from his thoughts. These days the long hours he has already spent on developing a new methodology that lets us network not just Earth-based PCs but far-flung spacecraft have begun to pay off. 2011 should be a banner year for what many have already begun to call the InterPlanetary Internet.

At issue is a key problem with the way the Internet works. TCP/IP stands for Transmission Control Protocol/Internet Protocol, and it describes a method by which data are broken into small packets, each labeled for routing through the network. When the packets reach their destination, they are reassembled. We know how the Net that grew out of these protocols transformed communications, but TCP/IP works well only when data can be turned around quickly. Its key tools are ‘chatty,’ exchanging messages back and forth many times in order to function.

The classic case is File Transfer Protocol, or FTP, which takes eight round trips — that’s eight cycles of data connection — before the requested file can make its trip, and as anyone who has worked with FTP (especially in the early days of the Net) knows, FTP servers will time out after a certain period of inactivity. But if you’re trying to network spacecraft, you can’t work under these conditions. At interplanetary distances, speed of light delays add up, and latency that could make an Earth-based connection hiccough can quickly grow to hours and days.
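To put numbers on the chattiness problem, here is a rough Python sketch that computes only the propagation delay of an exchange needing eight round trips, as in the FTP example. The distances are illustrative round figures, not mission values, and the sketch ignores processing time and timeouts entirely.

```python
AU_M = 149_597_870_700.0   # astronomical unit in metres (IAU 2012 definition)
C_M_PER_S = 299_792_458.0  # speed of light in vacuum


def one_way_light_time_s(distance_au: float) -> float:
    """Seconds for a signal to cross distance_au astronomical units."""
    return distance_au * AU_M / C_M_PER_S


def handshake_delay_s(distance_au: float, round_trips: int = 8) -> float:
    """Propagation delay alone for an exchange that needs `round_trips` round trips."""
    return round_trips * 2 * one_way_light_time_s(distance_au)


# Illustrative round-figure distances from Earth, in AU (assumptions, not ephemerides).
for name, d_au in [("Moon", 0.00257), ("Mars, close", 0.5), ("Mars, far", 2.5), ("Jupiter", 5.0)]:
    minutes = handshake_delay_s(d_au) / 60
    print(f"{name:12s} {minutes:8.1f} minutes before the file itself moves")
```

Even at a close Mars approach the eight round trips consume about an hour of pure propagation time, and at 5 AU roughly eleven hours, far past the kind of inactivity timeout an FTP server applies.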

The new Bundle Protocol that the InterPlanetary Internet project under Cerf’s direction has come up with takes care of this problem by tolerating these long delays. It’s part of the larger concept of delay tolerant networking now being overseen by the Delay Tolerant Networking Research Group, which ultimately grew out of the Internet Society’s Interplanetary Internet group, with Cerf in the thick of things all along. In a recent interview in Network World, Cerf noted that the Bundle Protocol has gone beyond theory into actual space operations.

You’ll find the new method, for example, running aboard the International Space Station. And delay tolerant networking protocols have been uploaded to the EPOXI spacecraft, whose most recent claim to fame was its visit to Comet Hartley 2; this is the same vehicle, then called Deep Impact, that drove an impactor into comet Tempel 1 back in 2005. The new protocols have already been tested with a light delay of approximately 80 seconds, and much more is in the works. Here’s Cerf on what’s coming up:

…during 2011, our initiative is to “space qualify” the interplanetary protocols in order to standardize them and make them available to all the space-faring countries. If they chose to adopt them, then potentially every spacecraft launched from that time on will be interwoven from a communications point of view. But perhaps more important, when the spacecraft have finished their primary missions, if they are still functionally operable — they have power, computer, communications — they can become nodes in an interplanetary backbone. So what can happen over time, is that we can literally grow an interplanetary network that can support both man and robotic exploration.

Networking our space resources is a key element of a space-based infrastructure. Until now, most space exploration has relied on point-to-point radio links. When you wanted to communicate with a distant spacecraft like Voyager 2, you had to dedicate expensive radio dishes to that specific task. A system in which spacecraft can store and forward data for one another lets us make the most of our communications capacity, allowing multiple missions to forward their data to a central node on the interplanetary Net for subsequent transmission to Earth. While space testing of delay tolerant methods expands, the Bundle Protocol is also in use in various academic settings and in NASA laboratories. The building of an interplanetary network backbone has commenced.
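The store-and-forward idea can be illustrated with a toy simulation in Python. Everything here is hypothetical: the `Bundle` and `DtnNode` classes, the lander/relay/Earth topology and the contact schedule are invented for illustration, and the code does not implement the real Bundle Protocol's wire format or custody rules. It shows only the core behavior: a node holds data until a link to the next hop opens, so delivery succeeds even when no end-to-end path exists at any single moment.

```python
from collections import deque
from dataclasses import dataclass, field


@dataclass
class Bundle:
    """A self-contained unit of data plus its addressing (hypothetical, simplified)."""
    source: str
    destination: str
    payload: bytes


@dataclass
class DtnNode:
    """A toy store-and-forward node; not an implementation of the real Bundle Protocol."""
    name: str
    storage: deque = field(default_factory=deque)  # bundles waiting for a contact
    delivered: list = field(default_factory=list)  # bundles addressed to this node

    def receive(self, bundle: Bundle) -> None:
        if bundle.destination == self.name:
            self.delivered.append(bundle)
        else:
            self.storage.append(bundle)  # keep it until a link opens

    def forward(self, next_hop: "DtnNode", link_up: bool) -> int:
        """Hand every stored bundle to next_hop if the link is up; return how many moved."""
        if not link_up:
            return 0
        moved = 0
        while self.storage:
            next_hop.receive(self.storage.popleft())
            moved += 1
        return moved


def simulate(contacts):
    """contacts: one (lander->relay up, relay->Earth up) pair per time step."""
    lander, relay, earth = DtnNode("lander"), DtnNode("relay"), DtnNode("earth")
    for step, (lander_link, earth_link) in enumerate(contacts):
        lander.receive(Bundle("lander", "earth", f"frame {step}".encode()))
        lander.forward(relay, lander_link)
        relay.forward(earth, earth_link)
    return lander, relay, earth


# The two links are never up at the same time, yet frame 0 still reaches Earth.
lander, relay, earth = simulate([(True, False), (False, True)])
print([b.payload for b in earth.delivered], len(lander.storage), len(relay.storage))
```

A chatty end-to-end protocol would have given up here; the relay simply held frame 0 until its Earth contact opened, while frame 1 waits aboard the lander for the next pass.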

by Paul Gilster | Nov 29, 2010 | Communications and Navigation |

We’ve seen some remarkable feats of celestial navigation lately, not the least of which has been the flyby of comet Hartley 2 by the EPOXI mission. But as we continue our push out into the Solar System, we’re going to run into the natural limits of our navigation methods. The Deep Space Network can track a spacecraft from the ground and achieve the kind of phenomenal accuracy that can thread a Cassini probe through a gap in the rings of Saturn. But positional errors grow with distance, and can mount up to 4 kilometers per AU of distance from the Earth.
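If, as a simplifying assumption, the ground-tracking error grows linearly at the roughly 4 kilometers per AU quoted above, that rate is equivalent to a fixed angular uncertainty, and the distance penalty is easy to tabulate. The sketch below is only that linear model; real orbit-determination error budgets are far more complicated.

```python
AU_KM = 149_597_870.7
ARCSEC_PER_RAD = 206_264.806247

ERROR_KM_PER_AU = 4.0  # figure quoted in the text; treated here as a constant rate


def tracking_error_km(distance_au: float) -> float:
    """Linear model: positional error proportional to distance from Earth."""
    return ERROR_KM_PER_AU * distance_au


# The same rate expressed as an angle seen from Earth.
angle_rad = ERROR_KM_PER_AU / AU_KM
print(f"equivalent angular error: {angle_rad:.2e} rad, {angle_rad * ARCSEC_PER_RAD * 1000:.1f} mas")

for label, d_au in [("Saturn", 9.5), ("Neptune", 30.0), ("Sun's gravitational focus", 550.0)]:
    print(f"{label:26s} ~{tracking_error_km(d_au):,.0f} km")
```

Under this model the error corresponds to about 27 nanoradians (roughly 5.5 milliarcseconds) as seen from Earth, which grows to thousands of kilometers at the distances deep space missions are now contemplating.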

To go beyond the Solar System, we’ll need a method that works independently, without the need for ground station assistance. Pulsar navigation is one way around the problem. Imagine a spacecraft equipped with a radio telescope that can determine its position by analyzing the signals from distant pulsars. These super-dense remnants of stellar explosions emit a beam of electromagnetic radiation that is extremely regular, and as we’ve seen in these pages before, that offers a navigational opportunity, especially when we’re dealing with millisecond pulsars.

Scientists have been studying how to use pulsars for navigation since the objects were first discovered, and several proposals have surfaced that are based on measuring the time of arrival of pulses or the phase difference between pulses, all in reference to the Solar System barycenter, the center of mass for all orbiting objects in the system. But a new paper from Angelo Tartaglia (INFN, Torino) and colleagues takes a look at an operational approach for defining what they call an ‘autonomous relativistic positioning and navigation system’:

We assume that a user is equipped with a receiver that can count pulses from a set of sources whose periods and positions in the sky are known; then, reckoning the periodic electromagnetic signals coming from (at least) four sources and measuring the proper time intervals between successive arrivals of the signals allow to localize the user, within an accuracy controlled by the precision of the clock he is equipped with.

Moreover, the spacecraft determines its own position solely by reference to the signals it receives, which no longer have to flow from Earth:

This system can allow autopositioning with respect to an arbitrary event in spacetime and three directions in space, so that it could be used for space navigation and positioning in the Solar System and beyond. In practice the initial event of the self-positioning process is used as the origin of the reference, and the axes are oriented according to the positions of the distant sources; all subsequent positions will be given in that frame.

Hence the term ‘autonomous’ to describe the system. Marissa Cevallos did a terrific job on the pulsar navigation story in a recent online post, talking to the researchers involved and noting a key problem of conventional spacecraft navigation: we can use Doppler shift to calculate a spacecraft’s position, but we lack accuracy when it comes to generating a three-dimensional view of the vehicle’s trajectory. What pulsars could provide is the ability to place the spacecraft in that three-dimensional frame, as the Italian team demonstrated through computer simulations that tested the method with artificial signals.

This is celestial navigation of a kind that conjures up sailing ships deep in southern seas in the 18th century, using the stars to fix their position. We know, of course, that neither stars nor pulsars are fixed in the sky, but the regularity of pulsars is such a huge advantage that we can adjust for long-term movement. More problematic is the weakness of the pulsar signal, which could demand the use of a large radio telescope aboard the spacecraft. That will remain an issue for work outside the Solar System, but in the inner System, the Italian team wonders whether we could combine pulsar signals with those of local transmitters. From the paper:

For the use in the Solar system, one could for instance think to lay down regular pulse emitters on the surface of some celestial bodies: let us say the Earth, the Moon, Mars etc. The behaviour of the most relevant bodies is indeed pretty well known, so that we have at hands the time dependence of the direction cosines of the pulses: this is enough to apply the method and algorithm we have described and the final issue in this case would be the position within the Solar system. In principle the same can be done in the terrestrial environment: here the sources of pulses would be onboard satellites, just as it happens for GPS, but without the need of continuous intervention from the ground: again the key point is a very good knowledge of the motion of the sources in the reference frame one wants to use.

What’s fascinating about this work is that while it does not consider the numerous technological problems involved in building such a positioning system, it does define an autonomous method which fully moves the positioning frame from Earth to spacetime, in what the authors call a ‘truly relativistic viewpoint.’ The paper goes on:

The procedure is fully relativistic and allows position determination with respect to an arbitrary event in flat spacetime. Once a null frame has been defined, it turns out that the phases of the electromagnetic signals can be used to label an arbitrary event in spacetime. If the sources emit continuously and the phases can be determined with arbitrary precision at any event, it is straightforward to obtain the coordinates of the user and his worldline.
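The core of the phase-labeling idea can be sketched in Python under strong simplifying assumptions: flat spacetime, sources so distant that their pulses arrive as plane waves, no proper motion, exactly known periods, and a receiver that has counted every pulse (whole plus fractional cycles) since the origin event. Each source direction n then defines a null four-vector (1, n) in units where c = 1, and the phase of that source at event (t, r) is (t + n·r/c)/P, so four phases give four linear equations in t, x, y, z. The source directions below are hypothetical; the periods are borrowed from the four millisecond pulsars in the Coll and Tarantola paper discussed further on. This illustrates the principle only; it is not the authors’ algorithm.

```python
import math

C = 299_792_458.0  # speed of light, m/s


def unit(v):
    """Normalize a 3-vector."""
    n = math.sqrt(sum(x * x for x in v))
    return [x / n for x in v]


def phases_at(event, sources):
    """Cycles counted from each source at event (t, x, y, z), zero at the origin event.

    A plane wave arriving from direction n reaches position r earlier by n.r / c,
    so the receiver there has already counted (t + n.r / c) / P pulses.
    """
    t, *r = event
    return [(t + sum(ni * ri for ni, ri in zip(n, r)) / C) / period for n, period in sources]


def solve(a, b):
    """Gaussian elimination with partial pivoting (small dense systems only)."""
    size = len(b)
    m = [row[:] + [bi] for row, bi in zip(a, b)]
    for col in range(size):
        pivot = max(range(col, size), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, size):
            f = m[r][col] / m[col][col]
            for k in range(col, size + 1):
                m[r][k] -= f * m[col][k]
    x = [0.0] * size
    for r in reversed(range(size)):
        x[r] = (m[r][size] - sum(m[r][k] * x[k] for k in range(r + 1, size))) / m[r][r]
    return x


def locate(phases, sources):
    """Recover (t, x, y, z) from four phases: one row (1, n/c) per null direction."""
    a = [[1.0] + [ni / C for ni in n] for n, _ in sources]
    b = [phi * period for phi, (_, period) in zip(phases, sources)]
    return solve(a, b)


# Hypothetical, non-coplanar source directions; periods from the Coll-Tarantola list.
sources = [
    (unit([1.0, 0.0, 0.0]), 3.5e-3),
    (unit([0.0, 1.0, 0.0]), 4.8e-3),
    (unit([0.0, 0.0, 1.0]), 5.5e-3),
    (unit([-1.0, -1.0, -1.0]), 7.9e-3),
]
true_event = [100.0, 1.5e9, -2.0e8, 7.0e7]  # seconds and metres from the origin event
print(locate(phases_at(true_event, sources), sources))
```

With exact phases the sketch recovers the event to within floating-point rounding; in practice the precision with which the phases (that is, the receiver's clock readings) are measured sets the accuracy, as the Tartaglia quote notes. Four sources are the minimum because there are four unknowns.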

The spacecraft using these methods, then, is fully capable of navigating without help from the Earth. For nearby missions, emitters on inner system objects can supplement the observation of a single bright pulsar to produce the data necessary for the positional calculation, but deep space will demand multiple pulsars and the onboard capabilities of an X-ray telescope to acquire the needed signals. How we factor that into payload considerations is a matter for future engineering — right now the key task is to work out the feasibility of a pulsar navigation system that could one day guide us in interstellar flight.

The paper is Tartaglia et al., “A null frame for spacetime positioning by means of pulsating sources,” accepted for publication in Advances in Space Research (preprint). See also Ruggiero et al., “Pulsars as celestial beacons to detect the motion of the Earth” (preprint).

Related (and focused on the analysis of X-ray pulsar signals): Bernhardt et al., “Timing X-ray Pulsars with Application to Spacecraft Navigation,” to be published in the proceedings of High Time Resolution Astrophysics IV – The Era of Extremely Large Telescopes, held on May 5-7, 2010, Agios Nikolaos, Crete, Greece (preprint). Thanks to Mark Phelps for the pointer to this one.

by Paul Gilster | Apr 23, 2010 | Astrobiology and SETI, Communications and Navigation |

Are there better ways of studying the raw data from SETI? We may know soon, because Jill Tarter has announced that in a few months, the SETI Institute will begin to make this material available via the SETIQuest site. Those conversant with digital signal processing are highly welcome, but so are participants from the general public as the site gears up to offer options for all ages. Tarter speaks of a ‘global army’ of open-source code developers going to work on data collected by the Allen Telescope Array, along with students and citizen scientists anxious to play a role in the quest for extraterrestrial life.

SETI@home has been a wonderful success, but as Tarter notes in this CNN commentary, the software has been limited. You took what was given you and couldn’t affect the search techniques brought to bear on the data. I’m thinking that scattering the data to the winds could lead to some interesting research possibilities. We need the telescope hardware gathered at the Array to produce these data, but the SETI search goes well beyond a collection of dishes.

Ponder that the sensitivity of an instrument is only partly dependent on the collecting area. We can gather all the SETI data we want from our expanding resources at the Allen Telescope Array, but the second part of the equation is how we analyze what we gather. Claudio Maccone has for some years now championed the Karhunen-Loève Transform, developed in 1946, as a way of improving the sensitivity to an artificial signal by a factor of up to a thousand. Using the KL Transform could help SETI researchers find signals that are deliberately spread through a wide range of frequencies and undetectable with earlier methods.

Image: Dishes at the ATA. What new methods can we bring to bear on how the data they produce are analyzed? Credit: Dave Deboer.

In early searches, SETI researchers used the Fourier Transform as their detection algorithm, working under the assumption that a candidate extraterrestrial signal would be narrow-band. By 1965, it became clear that the new Fast Fourier Transform could speed up the analysis, and FFT became the detection algorithm of choice. It was in 1982 that French astronomer and SETI advocate François Biraud pointed out that here on Earth, we were rapidly moving from narrow-band to wide-band telecommunications. Spread spectrum methods are more efficient because the information, broken into pieces, is carried on numerous low-powered carrier waves that change frequency and are hard to intercept.
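A narrow-band search of this kind boils down to looking for one frequency bin that carries far more power than the rest. The Python sketch below injects a weak tone (amplitude and bin number are arbitrary choices) into Gaussian noise and finds it with a plain discrete Fourier transform; a real pipeline would use an FFT, which computes the same bins much faster.

```python
import math
import random


def dft_power(samples):
    """Power in DFT bins 0 .. N/2 (direct O(N^2) sum; an FFT yields the same values faster)."""
    n = len(samples)
    powers = []
    for k in range(n // 2 + 1):
        re = sum(x * math.cos(2 * math.pi * k * i / n) for i, x in enumerate(samples))
        im = -sum(x * math.sin(2 * math.pi * k * i / n) for i, x in enumerate(samples))
        powers.append(re * re + im * im)
    return powers


def narrowband_search(samples):
    """Index of the strongest non-DC bin: the candidate narrow-band signal."""
    powers = dft_power(samples)
    return max(range(1, len(powers)), key=powers.__getitem__)


rng = random.Random(42)
N, TONE_BIN, AMPLITUDE = 512, 40, 0.5  # arbitrary illustration values
samples = [AMPLITUDE * math.cos(2 * math.pi * TONE_BIN * i / N) + rng.gauss(0.0, 1.0)
           for i in range(N)]
print("strongest bin:", narrowband_search(samples))
```

The tone sits well below the noise sample by sample, yet stands out because its energy piles into a single bin while the noise spreads across all of them. A spread spectrum signal deliberately spreads its energy across many bins, which is exactly where this test loses its advantage.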

What Biraud noticed, and what Maccone has been arguing for years, is that our current SETI methods using FFT cannot detect a spread spectrum signal. Despite the burden the KLT’s calculations place even on our best computers, Maccone has devised methods to make it work with existing equipment and argues that it should be programmed into the Low Frequency Array and Square Kilometer Array telescopes now under construction. The KLT, in other words, can dig out weak signals buried in noise that have hitherto been undetectable.
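The mechanics of the KLT can be sketched in Python: estimate the signal’s autocorrelation, arrange it as a Toeplitz matrix, and examine that matrix’s eigenvalues. The eigenvectors form the KLT basis, and each eigenvalue is the energy captured by one basis function. Using the largest eigenvalue as a detection statistic is one common way to apply the KLT; everything else here is an assumption for illustration: the window length, the noise level, and a deliberately simple narrow-band test signal. This toy does not reproduce the sensitivity gains claimed for the KLT; it only shows how the statistic responds when a weak signal is present.

```python
import math
import random


def autocorrelation(x, lags):
    """Biased sample autocorrelation r[k] for k = 0 .. lags-1."""
    n = len(x)
    return [sum(x[i] * x[i + k] for i in range(n - k)) / n for k in range(lags)]


def toeplitz(r):
    """Symmetric Toeplitz matrix whose (i, j) entry is r[|i - j|]."""
    m = len(r)
    return [[r[abs(i - j)] for j in range(m)] for i in range(m)]


def dominant_eigenvalue(a, iterations=200, seed=0):
    """Largest eigenvalue of a symmetric positive semidefinite matrix by power iteration."""
    rng = random.Random(seed)
    v = [rng.random() + 0.1 for _ in a]  # random start avoids orthogonality to the top eigenvector
    for _ in range(iterations):
        w = [sum(aij * vj for aij, vj in zip(row, v)) for row in a]
        norm = math.sqrt(sum(wi * wi for wi in w))
        v = [wi / norm for wi in w]
    av = [sum(aij * vj for aij, vj in zip(row, v)) for row in a]
    return sum(vi * avi for vi, avi in zip(v, av))  # Rayleigh quotient


def klt_statistic(x, window=64):
    """Dominant KLT eigenvalue of the windowed autocorrelation matrix."""
    return dominant_eigenvalue(toeplitz(autocorrelation(x, window)))


rng = random.Random(7)
N, AMPLITUDE, FREQ = 4096, 0.5, 0.1  # samples, tone amplitude, cycles per sample
noise = [rng.gauss(0.0, 1.0) for _ in range(N)]
signal = [s + AMPLITUDE * math.cos(2 * math.pi * FREQ * i) for i, s in enumerate(noise)]

print(f"noise only:  {klt_statistic(noise):.2f}")
print(f"with signal: {klt_statistic(signal):.2f}")
```

For white noise of unit variance the eigenvalues cluster near 1, while a coherent component adds energy that concentrates in a few eigenvalues and lifts the largest one well above that floor.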

But wait, wouldn’t a signal directed at our planet most likely be narrow in bandwidth? Presumably so, but extraneous signals picked up by chance might not be. It makes sense to widen the radio search to include methods that could detect both kinds of signal, to make the search as broad as possible.

I bring all this up because it points to the need for an open-minded approach to how we process the abundant data that the Allen Telescope Array will be presenting to the world. By making these data available over the Web, the SETI Institute gives the field an enormous boost. We’re certainly not all digital signal analysts, but the more eyes we put on the raw data, the better our chance for developing new strategies. As Tarter notes:

This summer, when we openly publish our software detection code, you can take what you find useful for your own work, and then help us make it better for our SETI search. As I wished, I’d like to get all Earthlings spending a bit of their day looking at data from the Allen Telescope Array to see if they can find patterns that all of the signal detection algorithms may still be missing, and while they are doing that, get them thinking about their place in the cosmos.

And let me just throw in a mind-bending coda to the above story. KLT techniques have already proven useful for spacecraft communications (the Galileo mission employed KLT), but Maccone has shown how they can be used to extract a meaningful signal from a source moving at a substantial percentage of the speed of light. Can we communicate with relativistic spacecraft of the future when we send them on missions to the stars? The answer is in the math, and Maccone explains how it works in Deep Space Flight and Communications (Springer/Praxis, 2009), along with his discussion of using the Sun as a gravitational lens.

by Paul Gilster | Jan 11, 2010 | Communications and Navigation, Missions |

The Project Icarus weblog is up and running in the capable hands of Richard Obousy (Baylor University). The notion is to re-examine the classic Project Daedalus final report, the first detailed study of a starship, and consider where these technologies stand today. Icarus is a joint initiative between the Tau Zero Foundation and the British Interplanetary Society, the latter being the spark behind the original Daedalus study, and we’ll follow its fortunes closely in these pages. For today, I want to draw your attention to Pat Galea’s recent article on the Icarus blog on communications.

‘High latency, high bandwidth’ is an interesting way to consider interstellar signaling. Suppose, for example, that we do something that on the face of it seems absurd. We send a probe to a nearby star and, as one method of data return, we send another probe back carrying all the acquired data. Disregard the obvious propulsion problem for a moment — from a communications standpoint, the idea makes sense. ‘High latency, high bandwidth’ translates into huge amounts of data delivered over long periods of time. I remember Vint Cerf, the guru of TCP/IP, reminding a small group of researchers at JPL ‘Never underestimate the bandwidth of a pickup truck carrying a full load of DVDs,’ a reference commonly used in descriptions of the latency issue.
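The pickup-truck line is easy to check with arithmetic. The load and trip time below are hypothetical (10,000 single-layer DVDs at 4.7 GB each, a ten-hour drive); the point is how throughput and latency separate.

```python
def courier_throughput_bps(media_count: int, bytes_per_medium: float, trip_seconds: float) -> float:
    """Average bit rate of physically carrying storage media from A to B."""
    return media_count * bytes_per_medium * 8 / trip_seconds


TRIP_S = 10 * 3600  # hypothetical ten-hour drive
rate = courier_throughput_bps(10_000, 4.7e9, TRIP_S)
print(f"throughput ~{rate / 1e9:.1f} Gbit/s, latency {TRIP_S / 3600:.0f} hours")
```

That works out to roughly ten gigabits per second of throughput delivered with a ten-hour latency: high bandwidth, high latency, the same trade-off as the data-return probe.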

I was sitting in on that meeting, taking furious notes and anxious to learn more about how the basic Internet protocols would have to be juggled to cope with the demands of deep space communications. And the point was clear: If the wait time is not an issue, then low tech, high bandwidth makes a lot of sense. From the interstellar perspective, alas, we don’t have the propulsion technologies or the patience for this kind of communication. Radio is infinitely better, but we face the problem of beam spread over distance, even with higher and higher radio frequencies being employed. The Daedalus team had two approaches, summed up here by Pat:

a. Make the engine’s reaction chamber be a parabolic dish shape. When the boost phase has ended, use this dish as an enormous reflector to focus the radio transmissions back to Earth.

b. On Earth (or in near-Earth space), set up a huge array of parabolic dish receivers. (An array of receivers is almost as effective as a single receiver of the same size as the array.) This allows much more of the signal to be picked up than would be possible with just a single large dish. (The design was based on the proposed Project Cyclops, which was to be used to search for signals from extra-terrestrial intelligence.)
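Point (b) rests on collecting area adding up across an array. Reading ‘size’ as total collecting area, N identical dishes of diameter d gather as much signal as one dish of diameter d√N, ignoring the losses of combining their outputs. The dish count and diameter below are hypothetical round numbers, not the Cyclops design.

```python
import math


def collecting_area_m2(n_dishes: int, dish_diameter_m: float) -> float:
    """Total geometric area of n identical circular dishes."""
    return n_dishes * math.pi * (dish_diameter_m / 2) ** 2


def equivalent_diameter_m(n_dishes: int, dish_diameter_m: float) -> float:
    """Diameter of a single dish with the same total collecting area."""
    return dish_diameter_m * math.sqrt(n_dishes)


n, d = 1000, 100.0  # hypothetical array: 1000 dishes, 100 m each
print(f"{collecting_area_m2(n, d) / 1e6:.2f} km^2, like one {equivalent_diameter_m(n, d):,.0f} m dish")
```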

When we turn to laser communications, things get a good deal better. Extensive testing at JPL and other research centers has shown that much higher bandwidth can return data from deep space for the same amount of power as would be used in more conventional radio systems. Indeed, this is the approach JPL’s James Lesh uses in his study of communications from a Centauri probe. Lesh knows all about propulsion issues, but he’s straightforward in saying that if we surmounted those problems and did reach Centauri with a substantial payload, a laser communications system would be practicable.

Not that it would be easy. Galea again:

Over interstellar distances, despite the fact that lasers create a very tight beam, the beam spreading does cause a problem. The laser also has to be aimed very accurately, and this aim has to be maintained. The tiniest amount of jitter in the craft could cause the beam to miss the target completely. This would be a very tough engineering challenge, combining navigation (so that the craft knows exactly how it is oriented, and exactly where the target is) and control (so that it is actually able to point the laser accurately at the target).
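Diffraction sets the floor on beam spread: a transmitting aperture of diameter D at wavelength λ produces a beam whose half-angle to the first null is about 1.22 λ/D. The Python sketch compares a 12-meter Ka-band (32 GHz) dish, the figures Maccone uses in a later post, with a 3-meter optical telescope like the Lesh design, transmitting at an assumed wavelength of 1064 nm. It ignores pointing error, atmospheric effects and everything else a real link budget would include.

```python
C = 299_792_458.0                  # m/s
LIGHT_YEAR_M = 9.4607304725808e15  # metres
AU_M = 149_597_870_700.0           # metres


def beam_half_angle_rad(wavelength_m: float, aperture_m: float) -> float:
    """Diffraction-limited half-angle to the first null of a uniformly lit circular aperture."""
    return 1.22 * wavelength_m / aperture_m


distance = 4.37 * LIGHT_YEAR_M  # roughly the distance to Alpha Centauri
links = {
    "Ka band, 12 m dish": (C / 32e9, 12.0),
    "1064 nm laser, 3 m telescope": (1.064e-6, 3.0),  # assumed wavelength
}
for name, (wavelength, aperture) in links.items():
    theta = beam_half_angle_rad(wavelength, aperture)
    radius_au = theta * distance / AU_M
    print(f"{name:30s} half-angle {theta:.2e} rad, footprint radius ~{radius_au:,.2f} AU")
```

The radio beam arrives hundreds of AU wide; even the laser beam spreads to about a tenth of an AU in radius, and keeping it on target means holding pointing error well below its roughly half-microradian half-angle, which is the jitter problem Galea describes.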

Pat also gets into Claudio Maccone’s interesting notion of using the gravitational foci of both the Sun and the destination star, with a craft stationed at each focus, both craft lying on the line that joins the two stars. In such a scenario, power requirements are at an absolute minimum, but the trick is the engineering, which assumes a level of technology at the target star that we would not have in place with our early probes. Further into the future, though, a gravitational lensing approach could indeed be used to establish powerful communications links between distant colonies around other stars.

As the Icarus weblog gains momentum, it will be fascinating to watch background articles like these emerge and to keep up with team members as they report on the progress of the project. I recommend adding the Project Icarus blog to your RSS feed. Pat Galea includes a list of references at the end of his article, and I’ll add the Lesh paper, which is “Space Communications Technologies for Interstellar Missions,” Journal of the British Interplanetary Society 49 (1996), pp. 7-14.

by Paul Gilster | Nov 6, 2009 | Communications and Navigation |

If we can get the right kind of equipment to the Sun’s gravitational focus, remarkable astronomical observations should follow. We’ve looked at the possibilities of using this tremendous natural lens to get close-up images of nearby exoplanets and other targets, but in a paper delivered at the International Astronautical Congress in Daejeon, South Korea in October, Claudio Maccone took the lensing mission a step further. For in addition to imaging, we can also use the lens for communications.
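Why the lens sits so far out can be checked with general relativity’s light-bending formula: a ray passing the Sun at impact parameter b is deflected by about 4GM/(c²b), so it crosses the axis at roughly b²c²/(4GM). Rays grazing the solar limb (b equal to the solar radius) give the minimum focal distance, which comes out near the 550 AU figure used in these posts. The sketch treats the Sun as a point mass seen past a perfect limb and ignores effects such as the solar corona.

```python
GM_SUN = 1.32712440018e20   # solar gravitational parameter, m^3 s^-2
R_SUN = 6.957e8             # nominal solar radius, m
C = 299_792_458.0           # speed of light, m/s
AU_M = 149_597_870_700.0    # astronomical unit, m


def focal_distance_au(impact_parameter_m: float, gm: float = GM_SUN) -> float:
    """Distance at which a ray with the given impact parameter crosses the optical axis."""
    deflection_rad = 4 * gm / (C ** 2 * impact_parameter_m)
    return impact_parameter_m / deflection_rad / AU_M


print(f"grazing rays focus at ~{focal_distance_au(R_SUN):.0f} AU")
print(f"rays passing at 2 solar radii focus at ~{focal_distance_au(2 * R_SUN):.0f} AU")
```

Rays passing farther from the Sun focus farther out, which is why the focal region is a line beginning at roughly 550 AU rather than a single point.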

The communications problem is thorny, and when I talked to JPL’s James Lesh about it in terms of a Centauri probe, he told me that a laser-based design he had worked up would require a three-meter telescope slightly larger than Hubble to serve as the transmitting aperture. Laser communications in such a setup are workable, but getting a payload-starved probe to incorporate a system this large would only add to our propulsion frustrations. The gravitational lens, on the other hand, could serve up a far more practical solution.

Keeping Bit Error Rate Low

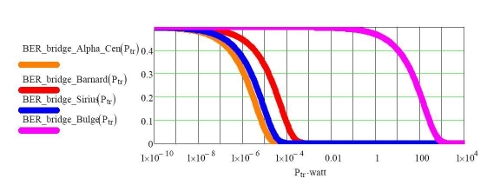

Maccone goes to work on Bit Error Rate, a crucial measure of signal quality, in assessing the possibilities. Bit error rate is the number of erroneous bits received divided by the total number of bits transmitted. Working out the numbers, Maccone posits a human probe in Centauri space trying to communicate with a typical NASA Deep Space Network antenna (70-meter dish), using a 12-meter antenna aboard the spacecraft (probably inflatable).

Using a link frequency in the Ka band (32 GHz), a bit rate of 32 kbps, and forty watts of transmitting power (and juggling the other parameters reasonably), the math is devastating: we get a 50 percent probability of errors. So much for data integrity as we operate within conventional systems.
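Both halves of that argument are easy to illustrate in Python. The first function is the bit error rate as defined above; the second gives the textbook BER of coherent BPSK in white Gaussian noise, 0.5·erfc(√(Eb/N0)). BPSK is an assumption made for illustration, not necessarily the modulation Maccone used, but it shows why a starved link tends to a BER of 0.5: as the energy per bit falls toward zero, the receiver is reduced to guessing each bit.

```python
import math


def bit_error_rate(sent, received):
    """Erroneous bits received divided by total bits transmitted."""
    if len(sent) != len(received):
        raise ValueError("sequences must have equal length")
    errors = sum(s != r for s, r in zip(sent, received))
    return errors / len(sent)


def bpsk_ber(eb_over_n0: float) -> float:
    """Theoretical BER for coherent BPSK in additive white Gaussian noise (linear Eb/N0)."""
    return 0.5 * math.erfc(math.sqrt(eb_over_n0))


print(bit_error_rate([1, 0, 1, 1, 0, 0, 1, 0], [1, 0, 1, 0, 0, 1, 1, 0]))
for db in (-20, -10, 0, 5, 10):
    print(f"Eb/N0 = {db:>3} dB -> BER = {bpsk_ber(10 ** (db / 10)):.3g}")
```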

But if we send Maccone’s FOCAL probe to the Sun’s gravitational lens at 550 AU, we tap the tremendous magnification of the lens, which brings us a huge new gain. Using the same forty watts of power, we get a completely acceptable bit error rate. In fact, Maccone’s figures show that the bit error rate does not become even remotely problematic until we reach a distance of nine light years, at which point the BER begins slowly to rise.

Image: Figure 4. The Bit Error Rate (BER) (upper, blue curve) tends immediately to the 50% value (BER = 0.5) even at moderate distances from the Sun (0 to 0.1 light years) for a 40 watt transmission from a DSN antenna that is a DIRECT transmission, i.e. without using the Sun’s Magnifying Lens. On the contrary (lower red curve) the BER keeps staying at zero value (perfect communications!) if the FOCAL space mission is made, so as the Sun’s magnifying action is made to work. Credit: Claudio Maccone.

Building a Radio Bridge

Now this is interesting stuff because it demonstrates that when we do achieve the ability to create a human presence around a nearby star, we will have ways to establish regular, reliable communications. A second FOCAL mission, one established at the gravitational lens of the target star, benefits us even more. We could, for instance, create a Sun-Alpha Centauri bridge. The bit error rate becomes less and less of a factor:

…the surprise is that… for the Sun-Alpha Cen direct radio bridge exploiting both the two gravitational lenses, this minimum transmitted power is incredibly… small! Actually it just equals less than 10⁻⁴ watts, i.e. one tenth of a milliwatt is enough to have perfect communication between the Sun and Alpha Cen through two 12-meter FOCAL spacecraft antennas.

This seems remarkable, but gravitational lenses make remarkable things possible. Recall that it was only months ago that the first tentative discovery of an extrasolar planet in the Andromeda galaxy (M31) was made, using gravitational lensing to make the observation.

Into the Galactic Bulge

Maccone goes on to work out the numbers for other interstellar scenarios, such as a similar bridge between the Sun and Barnard’s Star, the Sun and Sirius A, and the Sun and a Sun-like star in the galactic bulge. That third possibility takes us into blue sky territory, but it’s a fascinating exercise. If somehow we could use the gravitational lens of the star in the galactic bulge as well as our own, we would have a workable bridge at power levels higher than 1000 watts.

Image: Bit Error Rate (BER) for the double-gravitational-lens radio bridge between the Sun and Alpha Cen A (orangish curve), plus the same curve for the radio bridge between the Sun and Barnard’s star (reddish curve, just as Barnard’s star is a reddish star) and for the radio bridge between the Sun and Sirius A (blue curve, just as Sirius A is a big blue star). In addition, to the far right we now have the pink curve showing the BER for a radio bridge between the Sun and another Sun (identical in mass and size) located inside the Galactic Bulge at a distance of 26,000 light years. The radio bridge between these two Suns and their two gravitational lenses works perfectly (i.e. BER = 0) if the transmitted power is higher than about 1000 watts. Credit: Claudio Maccone.

I’m chuckling as I write this because Maccone concludes the paper by imagining a similar bridge between the Sun and a Sun-like star inside M31, using the gravitational lenses of both. We’re working here with a distance of 2.5 million light years, but a transmitted power of about 10⁷ watts would do the trick. This paper is a dazzling dip into the possibilities the gravitational lens allows us if we can find ways to reach and exploit it.

The paper is Maccone, “Interstellar Radio Links Enhanced by Exploiting the Sun as a Gravitational Lens,” presented at the recent IAC. I’ll pass along publication information as soon as the paper appears.

by Paul Gilster | Jun 1, 2009 | Communications and Navigation |

If we can use GPS satellites to find out where we are on Earth, why not turn to the same principle for navigation in space? The idea has a certain currency — I remember running into it in John Mauldin’s mammoth (and hard to find) Prospects for Interstellar Travel (AIAA/Univelt, 1992) some years back. But it was only a note in Mauldin’s ‘astrogation’ chapter, which also discussed ‘marker’ stars like Rigel (Beta Orionis) and Antares (Alpha Scorpii) and detailed the problems deep space navigators would face.

The European Space Agency’s Ariadna initiative studied pulsar navigation relying on millisecond pulsars, rotating neutron stars that spin faster than 40 revolutions per second. The pitch here is that pulsars that fit this description are old and thus quite regular in their rotation. Their pulses, in other words, can be used as exquisitely accurate timing mechanisms. You can have a look at ESA’s “Feasibility study for a spacecraft navigation system relying on pulsar timing information” here (download at bottom of page).

Pulsars have huge advantages. A deep space satellite network to fix position is a costly option; it doesn’t scale well as we expand deeper into the Solar System and beyond it. Autonomous navigation is clearly preferable, tying the navigation system to a natural reference frame like pulsars. The downside: pulsar signals are quite weak and thus put demands upon spacecraft constrained by mass and power consumption concerns. So there’s no easy solution to this.

But several readers (thanks especially to Frank Smith and Adam Crowl) have pointed out a recent paper by Bartolome Coll (Observatoire de Paris) and Albert Tarantola (Institut de Physique du Globe de Paris) that speculates on a system based on four millisecond pulsars: 0751+1807 (3.5 ms), 2322+2057 (4.8 ms), 0711-6830 (5.5 ms) and 1518+0205B (7.9 ms). The origin of the space-time coordinates the authors use is defined as January 1, 2001 at the focal point of the Cambridge radiotelescope where pulsars were discovered in 1967. Thus, the paper continues:

…any other space-time event, on Earth, on the Moon, anywhere in the Solar system or in the solar systems in this part of the Galaxy, has its own coordinates attributed. With present-day technology, this locates any event with an accuracy of the order of 4 ns, i.e., of the order of one meter. This is not an extremely precise coordinate system, but it is extremely stable and has a great domain of validity.

If these numbers are correct, they represent quite a jump over the ESA study cited above, which worked out the minimal hardware requirements for a pulsar navigation system and arrived at a positioning accuracy of no better than 1000 kilometers. ESA is working within near-term hardware constraints and discusses ways of enhancing accuracy, but the report does point out the huge and perhaps prohibitive weight demands these solutions will make upon designers.
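Two quick conversions help interpret the Coll and Tarantola figures. Timing precision maps to distance at the speed of light, so 4 ns corresponds to about 1.2 m, consistent with the paper’s ‘of the order of one meter.’ And because pulses repeat, a phase measurement alone fixes position along the line to each pulsar only modulo c × P, one pulse period’s worth of light travel, which is why a receiver that counts pulses, as in the Tartaglia scheme, must keep track of whole cycles as well as phase. The periods below are the four listed for the Coll and Tarantola system.

```python
C = 299_792_458.0  # speed of light, m/s

PULSAR_PERIODS_S = {  # periods as listed for the Coll and Tarantola system
    "0751+1807": 3.5e-3,
    "2322+2057": 4.8e-3,
    "0711-6830": 5.5e-3,
    "1518+0205B": 7.9e-3,
}


def timing_to_range_m(timing_error_s: float) -> float:
    """Distance light travels during the timing uncertainty."""
    return C * timing_error_s


def ambiguity_length_km(period_s: float) -> float:
    """Wavefront spacing: the pulse phase repeats every c * P along the line to the pulsar."""
    return C * period_s / 1000


print(f"4 ns timing -> {timing_to_range_m(4e-9):.2f} m")
for name, period in PULSAR_PERIODS_S.items():
    print(f"{name:11s} phase repeats every {ambiguity_length_km(period):,.0f} km")
```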

The paper is Coll and Tarantola, “Using pulsars to define space-time coordinates,” available online.