If you’re headed for another planet, celestial markers can keep your spacecraft properly oriented. Mariner 4 used Canopus, a bright star in the constellation Carina, as an attitude reference, its star tracker camera locking onto the star after its Sun sensor had locked onto the Sun. This was the first time a star had been used to provide second axis stabilization, its brightness (second brightest star in the sky) and its position well off the ecliptic making it an ideal referent.

The stars are, of course, a navigation tool par excellence. Mariners of the sea-faring kind have used celestial navigation for millennia, and I vividly remember a night training flight in upstate New York when my instructor switched off our instrument panel by pulling a fuse and told me to find my way home. I was forcefully reminded how far we’ve come from the days when the night sky truly was a celestial map for travelers. Fortunately, a few bright cities along the way made dead reckoning an easy way to get home that night. But I told myself I would learn to do better at stellar navigation. I can still hear my exasperated instructor as he pointed out one celestial marker: “For God’s sake, see that bright star? Park it over your left wingtip!”

Celestial navigation of various kinds can be done aboard a spacecraft, and the use of pulsars will help future deep space probes navigate autonomously. Until then, our methods rely heavily on ground-based installations. Delta-Differential One-Way Ranging (Delta-DOR or ∆DOR) can measure the angular location of a target spacecraft relative to a reference direction, the latter being determined by radio waves from a source like a quasar, whose angular position is well known. Only the downlink signal from the spacecraft is used in a precision technique that has been employed successfully on such missions as China’s Chang’e, ESA’s Rosetta and NASA’s Mars Reconnaissance Orbiter.

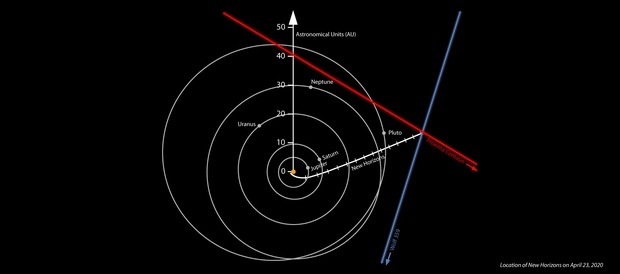
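For a rough feel of why Delta-DOR is so precise, here is a back-of-the-envelope sketch in Python. It assumes the simplest possible geometry, with the source direction perpendicular to the baseline between two ground stations. The function name and the sample numbers (an 8,000 km baseline, a 1 ns uncertainty in the measured delay) are illustrative assumptions of mine, not figures from any mission.

```python
SPEED_OF_LIGHT_M_S = 299_792_458.0  # exact, by definition of the metre


def angular_error_rad(delay_error_s: float, baseline_m: float) -> float:
    """Small-angle estimate: an error dt in the measured arrival-time
    difference between two stations maps to an angular error of about
    c * dt / B, where B is the baseline length."""
    if baseline_m <= 0:
        raise ValueError("baseline must be positive")
    return SPEED_OF_LIGHT_M_S * delay_error_s / baseline_m


# Hypothetical example values, chosen only for illustration.
err = angular_error_rad(delay_error_s=1e-9, baseline_m=8.0e6)
print(f"{err * 1e9:.1f} nanoradians")
```

With these assumed inputs the answer comes out at a few tens of nanoradians, which is why a long intercontinental baseline and a well-placed quasar make such a powerful combination.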

The Deep Space Network and Delta-DOR can perform marvels in terms of the directional location of a spacecraft. But we’ve also just had a first in terms of autonomous navigation through the work of the New Horizons team. Without using radio tracking from Earth, the spacecraft has determined its distance and direction by examining images of star fields and the observed parallax effects. Wonderfully, the two stars that the team chose for this calculation were Wolf 359 and Proxima Centauri, two nearby red dwarfs of considerable interest.

The images in question were captured by New Horizons’ Long Range Reconnaissance Imager (LORRI) and studied in relation to background stars. These two stars are almost 90 degrees apart in the sky, allowing team scientists to flag New Horizons’ location. The LORRI instrument offers limited angular resolution and is here being used well outside the parameters for which it was designed, but even so, this first demonstration of autonomous navigation didn’t do badly, finding a distance close to the actual distance of the spacecraft when the images were taken, and a direction on the sky accurate to a patch about the size of the full Moon as seen from Earth. This is the largest parallax baseline ever taken, extending for over four billion miles. Higher resolution imagers, as reported in this JHU/APL report, should be able to do much better.

Image: Location of NASA’s New Horizons spacecraft on April 23, 2020, derived from the spacecraft’s own images of the Proxima Centauri and Wolf 359 star fields. The positions of Proxima Centauri and Wolf 359 are strongly displaced compared to distant stars from where they are seen on Earth. The position of Proxima Centauri seen from New Horizons means the spacecraft must be somewhere on the red line, while the observed position of Wolf 359 means that the spacecraft must be somewhere on the blue line – putting New Horizons approximately where the two lines appear to “intersect” (in the real three dimensions involved, the lines don’t actually intersect, but do pass close to each other). The white line marks the accurate Deep Space Network-tracked trajectory of New Horizons since its launch in 2006. The lines on the New Horizons trajectory denote years since launch. The orbits of Jupiter, Saturn, Uranus, Neptune and Pluto are shown. Distances are from the center of the solar system in astronomical units, where 1 AU is the average distance between the Sun and Earth. Credit: NASA/Johns Hopkins APL/SwRI/Matthew Wallace.
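The caption's point about lines that "pass close to each other" without truly intersecting can be made concrete. The Python sketch below takes two lines in 3D, each defined by a point and a direction, and returns the midpoint of the shortest segment joining them along with the length of that segment. This is a generic geometric construction offered as an illustration, not the team's actual reduction, and the coordinates in the example are made up.

```python
import numpy as np


def closest_point_between_lines(p1, d1, p2, d2):
    """Return (midpoint, gap) for two 3D lines p1 + t1*d1 and p2 + t2*d2.

    midpoint is halfway along the shortest segment joining the lines,
    gap is the length of that segment (zero if the lines intersect).
    """
    p1, d1, p2, d2 = (np.asarray(v, dtype=float) for v in (p1, d1, p2, d2))
    d1 = d1 / np.linalg.norm(d1)  # work with unit direction vectors
    d2 = d2 / np.linalg.norm(d2)
    w = p1 - p2
    b = d1 @ d2                   # cosine of the angle between the lines
    denom = 1.0 - b * b
    if denom < 1e-12:
        raise ValueError("lines are (nearly) parallel; no unique fix")
    d = d1 @ w
    e = d2 @ w
    t1 = (b * e - d) / denom      # parameter of closest point on line 1
    t2 = (e - b * d) / denom      # parameter of closest point on line 2
    q1 = p1 + t1 * d1
    q2 = p2 + t2 * d2
    return (q1 + q2) / 2.0, float(np.linalg.norm(q1 - q2))


# Made-up example: the x-axis, and a line parallel to y passing one unit above it.
mid, gap = closest_point_between_lines([0, 0, 0], [1, 0, 0], [3, 0, 1], [0, 1, 0])
print(mid, gap)
```

Because the two target stars are almost 90 degrees apart on the sky, the two lines cross at a steep angle, which keeps the `denom` term well away from zero and the solution well conditioned.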

Brian May, known for his guitar skills with the band Queen as well as his knowledge of astrophysics, helped to produce the images below that show the comparison between these stars as seen from Earth and from New Horizons. A co-author of the paper on this work, May adds:

“It could be argued that in astro-stereoscopy — 3D images of astronomical objects – NASA’s New Horizons team already leads the field, having delivered astounding stereoscopic images of both Pluto and the remote Kuiper Belt object Arrokoth. But the latest New Horizons stereoscopic experiment breaks all records. These photographs of Proxima Centauri and Wolf 359 – stars that are well-known to amateur astronomers and science fiction aficionados alike — employ the largest distance between viewpoints ever achieved in 180 years of stereoscopy!”

Here are two animations showing the parallax involving each star, with Proxima Centauri being the first image. Note how the star ‘jumps’ against background stars as the view from Earth is replaced by the view from New Horizons.

Image: In 2020, the New Horizons science team obtained images of the star fields around the nearby stars Proxima Centauri (top) and Wolf 359 (bottom) simultaneously from New Horizons and Earth. More recent and sophisticated analyses of the exact positions of the two stars in these images allowed the team to deduce New Horizons’ three-dimensional position relative to nearby stars – accomplishing the first use of stars imaged directly from a spacecraft to provide its navigational fix, and the first demonstration of interstellar navigation by any spacecraft on an interstellar trajectory. Credit: JHU/APL.

This result from New Horizons marks the first time that optical stellar astrometry has been applied to the navigation of a spacecraft, but it’s clear that our hitherto Earth-based methods of navigation in space will have to give way to on-board methods as we venture still farther out of the Solar System. Thus far the use of X-ray pulsars has been demonstrated only in Earth orbit, but it will surely be among the techniques employed. These rudimentary observations are likewise proof-of-concept whose accuracy will need dramatic improvement.

The paper notes the next steps in using parallactic measurements for autonomous navigation:

Considerably better performance should be possible using the cameras presently deployed on other interplanetary spacecraft, or contemplated for future missions. Telescopes with apertures plausibly larger than LORRI’s, with diffraction-limited optics, delivering images to Nyquist-sampled detectors [detectors whose pixels are small enough to sample the telescope’s point-spread function without losing spatial information], mounted on platforms with matching fine-pointing control, should be able to provide astrometry with few milli-arcsecond accuracy. Extrapolating from LORRI, position vectors with accuracy of 0.01 au should be possible in the near future.
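A quick sanity check on those numbers: by the definition of the parsec, an angle of one arcsecond at a distance of one parsec spans one astronomical unit. So an astrometric error translates into a sideways position error of roughly (distance in parsecs) × (error in arcseconds) au. The Python sketch below applies that rule. The function name is mine, the star distances are approximate values I am supplying (about 1.30 pc for Proxima Centauri and 2.4 pc for Wolf 359), and the estimate ignores the detailed geometry of combining two stars.

```python
def transverse_error_au(star_distance_pc: float, astrometric_error_mas: float) -> float:
    """Small-angle estimate of the position error, in au, perpendicular
    to the line of sight to a star, given the star's distance in parsecs
    and the astrometric error in milli-arcseconds."""
    if star_distance_pc <= 0 or astrometric_error_mas < 0:
        raise ValueError("distance must be positive and error non-negative")
    return star_distance_pc * (astrometric_error_mas / 1000.0)


# Approximate distances (assumed values, for illustration only).
for name, dist_pc in [("Proxima Centauri", 1.30), ("Wolf 359", 2.4)]:
    err = transverse_error_au(dist_pc, astrometric_error_mas=5.0)
    print(f"{name}: about {err:.4f} au for a 5 mas measurement")
```

With errors of a few milli-arcseconds on stars one to three parsecs away, the result lands around a hundredth of an au, in line with the paper's extrapolation.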

The paper on this work is Lauer et al., “A Demonstration of Interstellar Navigation Using New Horizons,” accepted at The Astronomical Journal and available as a preprint.

I’m reminded that celestial readings were used for navigation, competing with mechanical clocks for the “Longitude Prize”. I hadn’t realized that celestial navigation was used into the 19th century according to this article based on Dava Sobel’s “Longitude”. It seems that celestial navigation has a similar romantic hold on humans as travel by sailing ships using only the wind, and incidentally, is being slowly rediscovered to reduce costs and pollution from shipping.

One advantage New Horizons and future spacecraft will have over pilots navigating by the stars is the absence of obscuring clouds and increasing light pollution. Do pilots still use the stars in an emergency? In the age of radio fixes and now GPS, I was surprised that dead reckoning was still an option on the USS Hornet, now moored in San Francisco Bay as an attraction. I presume dead reckoning was used in the WWII period. In that time, submarine navigation in the oceans must have been even more difficult.

As a fan of the old Dan Dare comic in Britain’s Eagle magazine of my youth, I still find charm in that space age strip with interplanetary (and later interstellar) flight, where navigation still appears to use celestial cues, rather than radio navigation, at least in deep space. New Horizons seems to be making what is old new again. Dare’s batman, and space navigator Digby, would be pleased.

Hi, Alex

Yes, celestial navigation is still used at sea, as a backup to satellite systems like the Global Positioning System (GPS). During my time on a missile frigate in the late 1960s I was trained on the basics of the art, although most of our navigation was done by LORAN. Celestial was only employed when we were out of range of the LORAN transmitters, far offshore, and then only by the officers and senior enlisted men.

Today, celestial is mostly used by yachtsmen and hobbyists.

https://goodoldboat.com/wp-content/uploads/2018/03/50-53_GOB97_JA97_Sextant.pdf

Last time I was on a small Boston Whaler design boat, GPS was used. The owner just set the desired coordinates and the boat took us to the location! Look, ma, no hands! “Self-driving” cars do the same, but use maps to stay on the road!

IIUC, the LORAN system was a more sophisticated version of radio beam navigation used by WWII night flying bombers. The German Luftwaffe used 2 radio beams with different frequencies to triangulate their position on bombing raids over Britain. Unlike Paul’s experience using lit cities, blackouts meant the land was dark, with only rivers and lakes reflecting moonlight to give hints of location.

Notably, the German V2 used a system of dead reckoning with a crude inertial guidance system. Feedback was from British news reports of V2 impacts. The Brits cleverly faked the impact reports (disinformation) to upset the guidance calculations.

I think civil aircraft were using inertial navigation for trans oceanic flight at one time. More recently, I read of more accurate inertial systems to measure the travel of subsurface trains as the tunnels blocked GPS signals.

If we lose our electronic systems in a conflict, no doubt these older systems of navigation will be brushed off and repurposed. I have an image of mechanical-only robots using celestial navigation to navigate.

Thank you for that lovely story, Henry; I never actually knew the procedures you described.

And thanks for mentioning Morse code…just as my own proficiency exceeded the speed for my Extra Class license, wouldn’t you know that a year later code was substantially deprecated? Time moves on…taking code and sextants along for the ride.

Parallax also plays a role in maritime navigation.

The locations on the celestial sphere of the Sun, Mars and Venus are affected by the location on Earth from which the mariner observes them with his sextant. Because of their distance from Earth, the correction for these bodies is tiny and can be ignored for most applications. For Jupiter, Saturn and the stars, the distance is so great that the introduced error is beyond the precision of the human eye and a hand-held instrument.

The Moon, however, is another story. Our satellite’s orbital semi-major axis is approximately 60 Earth radii, so parallax is substantial (up to about one degree) and must be corrected for, depending on the Moon’s distance from the horizon and the time of the observation. The Nautical Almanac lists the lunar parallax (HP) for every hour of every day to a precision of 0′.1 and the navigator must enter this argument into the tables he uses to correct his sextant reading.

Ignoring lunar parallax can result in an error in position of about a degree of arc or about 60 nautical miles (111 km).
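The arithmetic behind those figures is short enough to sketch in Python. Horizontal parallax is the angle subtended by Earth's radius at the Moon's distance, and the standard first-order correction scales it by the cosine of the Moon's apparent altitude. The function names are mine, the 60 Earth radii figure is the round number quoted above, and the sketch ignores refinements such as Earth's oblateness.

```python
import math


def horizontal_parallax_arcmin(distance_earth_radii: float) -> float:
    """Horizontal parallax (HP) in arcminutes for a body at the given
    distance, expressed in Earth radii: HP = asin(1 / distance)."""
    if distance_earth_radii <= 1.0:
        raise ValueError("body must be farther away than one Earth radius")
    return math.degrees(math.asin(1.0 / distance_earth_radii)) * 60.0


def parallax_in_altitude_arcmin(hp_arcmin: float, apparent_altitude_deg: float) -> float:
    """First-order parallax correction: HP * cos(apparent altitude).
    Largest with the body on the horizon, zero with it at the zenith."""
    return hp_arcmin * math.cos(math.radians(apparent_altitude_deg))


hp = horizontal_parallax_arcmin(60.0)
print(f"HP at 60 Earth radii: {hp:.1f} arcmin")
for altitude_deg in (0, 30, 60, 90):
    corr = parallax_in_altitude_arcmin(hp, altitude_deg)
    print(f"altitude {altitude_deg:2d} deg: correction {corr:.1f} arcmin")
```

Since one arcminute of altitude corresponds to about one nautical mile in the resulting line of position, an uncorrected HP of just under a degree is where the figure of roughly 60 nautical miles comes from.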

On Earth, we assume the stars are fixed, but we know that they move over time. The Gaia satellite has given us the best data on these movements. We have had posts on the relative movements of stars, especially close encounters with our sun, over long periods.

But now we are also thinking about long interstellar journeys. These timeframes of 500+ years will result in both stellar parallax and stellar movement during the voyage. Using just the stellar parallax would result in incorrect positioning during the voyage. The use of pulsars might well be better for positioning, but even they move, so I wonder what errors would be introduced in a voyage even using these stars as location beacons.

OTOH, movement can be useful. The SciFi TV series Beacon 23 is an artificial space station, like an interstellar lighthouse and way station. It is supposedly fixed in location. Known stellar movement could be used to triangulate position over a known period. [Rather than triangulate between 2 known, fixed objects, use time to allow movement of an object to triangulate position, at least in a 2D plane.]

I remember one science fiction author (I’m afraid I don’t remember who) solved the problem of cross-galactic navigation neatly without resorting to external landmarks and bearings for piloting and dead reckoning.

The spacecraft (FTL of course, and apparently not affected by relativistic effects) simply took periodic pictures astern as it plunged into the countless multitudes of stars ahead. Each exposure was different from those preceding and following, but still similar enough that the navigators could reconstruct the star fields they would see out the bridge windows on the voyage home. Of course, they would have no choice but to follow the exact same path back as they did on the way out, no matter how many twists and turns they took.

As much as I love Star Trek and the view screen of stars passing by, we would not see anything at FTL speeds. One can only see stars at sublight speeds according to my thought experiments of the physics of the Alcubierre Warp drive using general and special relativity. For example, there is only a free fall geodesic when the space warp is turned on at both the front and back of the ship, so that space is expanding in back and contracting in front. If only one part of it were working, the front only or the back only, then its occupants would feel inertial effects of special relativity and therefore it would be a sublight drive.

Now turn them both on simultaneously, and we immediately are in a free fall geodesic. It depends on the amplitude and intensity of the gravity and anti gravity waves for the speed of acceleration, but the spacecraft would eventually reach C or faster due to runaway motion like a diametric drive, bondi and bonner 1957. Wikipedia, negative mass. The moment it reaches C, the speed of light and faster, an event horizon forms and the sky is completely black without any light. That event horizon would prevent any radio transmissions outside the pocket of space the AWD was trapped inside. Starlight also can’t get inside which is one of the interesting effects of being in hyperspace. Star Wars got it right.

https://vixra.org/abs/1106.0053

I understand the New Horizons guys are looking for one last target for a flyby before it is lost to the void and/or the heliophysics guys.

Instead of peering ahead, perhaps they can work with the Sun-study crowd–after all, SOHO and others find all kinds of comets being slung outwards.

I am thinking that at least some of these things–extrasolar or not–will be thrown towards NH’s path.

In the same way you need to catch a NEO early enough to have it gradually deviate so as to miss Earth– by looking for fast moving targets close it–it allows NH to gradually veer towards the path of an object on its way out of the solar system.

Knowing where you are means knowing where you are at a certain moment in time. This means choosing external landmarks: a bell tower, a lighthouse, a star, etc., and eventually counting the time from the starting point. Until the invention of the marine chronometer, people weren’t in too much of a hurry, and a few nautical miles of error didn’t bother anyone.

Technology has imposed greater precision: you can go round in circles for a while with a 747, but when it runs out of kerosene, you have no choice but to land it or fall off…ask Sully ;) We’ve achieved remarkable precision on earth with GPS, especially as we now know our planet so well.

With space exploration, we’ve already had to invent another form of navigation. Paul pointed out Mariner; I’d add Apollo 11 to the headache NASA had to solve when it came to creating ANOTHER precise coordinate system for landing the LEM: from memory, an earth-moon-sun triangulation.

** The question of navigation involves the goal and the degree of precision we wish to achieve **.

During the launch phase, we set a course without “great precision”; during the flight, we try to keep this course towards the goal, to which we apply corrections according to the environment and the hazards of the flight (drift; behavior of the mobile; number of glasses of vodka swallowed etc.:) On the other hand, landing requires precision. The same applies to a LEM; a U-boat torpedo; an atomic bomb…

There is therefore not one, but several degrees of precision to be obtained on a trajectory, which requires several often complementary technologies: I.L.S., radio, visual, GPS, etc. Ultimate precision with a single technology on a single trajectory would be highly uncertain.

Which raises the question of data. All systems must first and foremost be fed with information, as we ourselves are constantly being fed. Sirius was one of the first landmarks of ancient civilizations: one of the first pieces of “information” that the human species was able to exploit.

What about future interstellar travel ?

The further away we are from our cradle, the more we’ll have to find new reference points and therefore modernize or reinvent our positioning systems. I think it’s our ability – or that of machines – to synthesize information that will condition interstellar travel. Over “short” distances, parallax will be used. For longer distances, cepheids or pulsars will do the trick. The precision required will require not one, but several complementary positioning systems. But what’s next?

How will we find our bearings if we reach FTL speeds where time and distance have nothing in common with what we know?

We can also imagine that the mobile will have to pass through one or more environments with different reference frames (radio shadow zone; hyper-gravity and, why not, black holes or other exotic objects)?

One last remark: it’s been said that if you’re caught in an avalanche, you have to urinate to find your way back. What does this tell us? That you have to eject something to find your way in an environment. Isn’t there a lesson to be learned from this?

Doesn’t the mobile have to send something into its environment to get information back? Doesn’t it remind you of radar? ;)

I might call the big Cold Spot 0/0 for the largest scales :)

Not a true center of the universe (no such animal) but it will do for a start.

“Brian May, known for his guitar skills with the band Queen as well as his knowledge of astrophysics”

Now that’s a line you don’t see every day!

May is an unusual character, that’s for sure. And he knows his stuff when it comes to astrophysics.

Look for his nice book “Bang” with P. Moore ;)

https://www.amazon.com/Bang-Complete-Universe-Brian-May/dp/0233004807

Explain to me again that remark about urinating in an avalanche.

I have a feeling that, in my case, that would be a completely involuntary response.

Really, Henry ? you pee and gravity does the rest, allowing you to find your way up and down again. I learned this from a mountaineer in the Alps :D

BTW I’m not sure that many yachtsmen on the French coasts still know how to draw a height line … you press the GPS and hop! On the other hand, I think our Navy is still teaching its officers.

That never occurred to me. At first I thought the rescue dogs were trained to seek out the scent of human body fluids.

Hi Paul

Yes, amazing work by New Horizons, and good to see the graphics in the post too, giving you an idea of the change. I haven’t seen the latest political news but I sure hope this mission is able to keep going.

“I vividly remember a night training flight in upstate New York when my instructor switched off our instrument panel by pulling a fuse and told me to find my way home.” This sounds like an interesting story and a topic for a post Paul :)

Cheers Edwin

Each spacecraft we send out with a camera will be able to do this parallax to give a more accurate position to stars in our neighbourhood. If Starshot is sent out it should be able to do this as well, so in effect science can be done straight away before even entering the target star system.