Centauri Dreams

Imagining and Planning Interstellar Exploration

Exoplanet Atmospheres: Recalibrating Our Models

We may be measuring planetary temperatures with less-than-optimal tools. Calling it a “new phenomenon,” Cornell University’s Nikole Lewis described the background of a just-published paper looking into hot Jupiter temperatures. Lewis had been increasingly puzzled by earlier work on the matter, which produced temperatures colder than scientists expected. The deputy director of the Carl Sagan Institute, Lewis joined colleagues Ryan MacDonald and Jayesh Goyal in looking for the reason, reporting their results in Astrophysical Journal Letters.

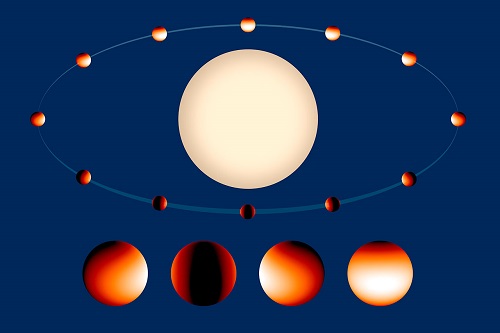

What emerged was the need to fine-tune our analysis of exoplanet atmospheres as delivered by transmission spectroscopy, the technique in which the light of a parent star is filtered through a planetary atmosphere during a transit. Consider, for example, the illustration of the hot Jupiter WASP-43b as it transits its star. Scientists have been able to construct temperature maps for this planet and to probe its atmosphere for clues to its molecular chemistry. But the data on atmospheric composition have to be interpreted correctly.

Image: In this artist’s illustration the Jupiter-sized planet WASP-43b orbits its parent star with a year lasting just 19 hours. The planet is tidally locked, meaning it keeps one hemisphere facing the star, just as the Moon keeps one face toward Earth. The color scale on the planet represents the temperature across its atmosphere. This is based on data from a 2014 study (not the Lewis paper) that mapped the temperature of WASP-43b in more detail than had been done for any other exoplanet at that time. Credit: NASA, ESA, and Z. Levay (STScI).

Hot Jupiters orbit close enough to their star to become tidally locked, and the intense tidal forces at work can stretch the planet into an egg shape. What we would expect is a wide range of temperatures, varying by thousands of degrees, between the blistering dayside of the planet and the frigid nightside turned away from the star. Averaging temperatures like these can be a problem, according to the Cornell researchers, and such a temperature range can also promote entirely different chemistry on the two sides.

“When you treat a planet in only one dimension, you see a planet’s properties – such as temperature – incorrectly,” Lewis said. “You end up with biases. We knew the 1,000-degree differences were not correct, but we didn’t have a better tool. Now, we do.”

Let’s dig into this. We have over 40 examples of hot Jupiters with transmission spectra, meaning that the science of exoplanet atmospheres is becoming established — eventually, we’ll drill down to smaller rocky worlds to learn about possible biosignatures, but for now, we’re detecting various atoms and molecules in the atmospheres of close-in gas giants. Observations of high enough precision can use transmission spectroscopy to learn about temperatures at the terminator, the zone where day meets night and the temperature contrast can be huge.

Both optical and near-infrared data are needed to draw accurate conclusions. The anomaly the authors address is that almost all retrieved temperatures for hot Jupiters are cooler than the planets’ equilibrium temperatures. In fact, for most hot Jupiters the retrieved temperatures fall between 200 and 600 K below what the equilibrium temperature says they ought to be. The equilibrium temperature, commonly denoted Teq, is the temperature at which a planet would emit as much thermal energy as it absorbs from its star.
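For reference, a common textbook form of the equilibrium temperature assumes the planet absorbs starlight with Bond albedo A and re-radiates it evenly over its whole surface: Teq = T★ √(R★ / 2a) (1 − A)^(1/4). The sketch below simply evaluates that expression. The full-redistribution assumption, the function name and the example values are ours; the paper may adopt different conventions (dayside-only redistribution, for instance, raises Teq).

```python
import math

R_SUN = 6.957e8          # nominal solar radius, m
AU = 1.495978707e11      # astronomical unit, m

def equilibrium_temperature(t_star_k, r_star_m, a_m, bond_albedo=0.0):
    """Teq = T_star * sqrt(R_star / (2 a)) * (1 - A)**0.25.

    Assumes absorbed starlight is redistributed evenly over the whole planet.
    """
    return t_star_k * math.sqrt(r_star_m / (2.0 * a_m)) * (1.0 - bond_albedo) ** 0.25

# Sanity check with Sun-Earth values (Bond albedo about 0.3).
t_earth = equilibrium_temperature(5772.0, R_SUN, AU, bond_albedo=0.3)

# A hypothetical hot Jupiter: Sun-like star, 0.05 AU orbit, zero albedo.
t_hot = equilibrium_temperature(5772.0, R_SUN, 0.05 * AU)

print(f"Earth-like Teq: {t_earth:.0f} K")
print(f"hypothetical hot Jupiter Teq: {t_hot:.0f} K")
```

The Earth case lands near the familiar 255 K. The hypothetical hot Jupiter comes out near 1,250 K, so a retrieval biased 200 to 600 K cold would report something like 650 to 1,050 K for it.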

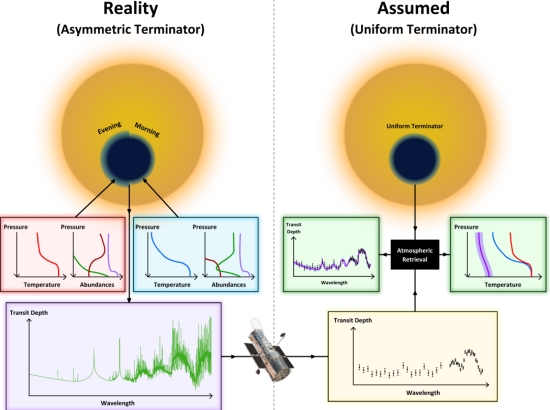

The authors propose that the colder temperatures being found via transmission spectra are the result of the use of 1-dimensional models. The atmosphere at the terminator is more complex than the 1D model can show. They call for more complex 3D general circulation models (GCM) to replace them. Sets of differential equations are put to work in a GCM, with the planet divided into a 3-dimensional grid and analyzed in terms of winds, heat transfer, radiation and other factors, taking into account their interactions with other parts of the grid. The difference is striking, as the image below, drawn from the paper, demonstrates.

Image: This is Figure 1 from the paper. Caption: Schematic explanation of the cold retrieved temperatures of exoplanet terminators. Left: a transiting exoplanet with a morning-evening temperature difference (observer’s perspective). Differing temperature and abundance profiles encode into the planet’s transmission spectrum. Right: the observed spectrum is analysed by retrieval techniques assuming a uniform terminator. The retrieved 1D temperature profile required to fit the observations is biased to colder temperatures. Credit: MacDonald et al.

Thus the earlier models bias the results toward colder temperatures. And this can be significant in our evaluation of planetary atmospheres, as the paper notes:

Those [chemical] species exhibiting compositional differences have retrieved 1D abundances biased lower than the true terminator-averaged values. Even species uniform around the terminator (here, H2O) are biased, though to higher abundances. Compositional biases become more severe as the retrieved P-T profile deviates further from the true terminator temperature. In the most extreme case, the retrieved H2O abundance is biased by over an order of magnitude, such that one would incorrectly believe a solar-metallicity atmosphere was 15 × super-solar at > 3σ confidence.

We need to resolve this matter, then, to conduct more accurate atmospheric work. The paper continues:

The retrieved cold temperatures of exoplanet terminators in the literature can be explained by inhomogenous morning-evening terminator compositions. The inferred temperatures arise from retrievals assuming uniform terminator properties. We have demonstrated analytically that the transit depth of a planet with different morning and evening terminator compositions, when equated to a 1D transit depth, results in a substantially colder temperature than the true average terminator temperature. This also holds for state-of-the-art retrieval codes, with the added complication that retrieved chemical abundances can also be significantly biased.
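To see why a uniform-terminator fit reads out a cold temperature, here is a deliberately simplified toy, not the authors’ derivation. It uses the standard isothermal approximation in which the opaque radius rises by one scale height H = kT/(μ m_u g) per e-fold of absorption cross-section. We assume (hypothetically) that the absorber is present only on the evening limb, that the morning limb is flat, and that each limb covers half of the annulus. A 1D model then interprets the diluted spectral slope as a single, smaller scale height. In this toy the morning temperature only enters through the true average we compare against; μ, g and the planet radius are illustrative values.

```python
import math

K_B = 1.380649e-23    # Boltzmann constant, J/K
AMU = 1.66053907e-27  # atomic mass unit, kg

def scale_height(temp_k, mu=2.3, gravity=10.0):
    """Atmospheric scale height H = k T / (mu m_u g), in metres."""
    return K_B * temp_k / (mu * AMU * gravity)

def fit_slope(xs, ys):
    """Ordinary least-squares slope of ys against xs."""
    x_bar = sum(xs) / len(xs)
    y_bar = sum(ys) / len(ys)
    num = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    den = sum((x - x_bar) ** 2 for x in xs)
    return num / den

def toy_retrieved_temperature(t_evening, base_radius=7.0e7, mu=2.3, gravity=10.0):
    # Hypothetical grid of ln(cross-section) values across an absorption band.
    ln_sigma = [0.5 * i for i in range(11)]
    # Evening limb: absorber present, radius grows by H per e-fold in sigma.
    h_even = scale_height(t_evening, mu, gravity)
    r_even = [base_radius + h_even * s for s in ln_sigma]
    # Morning limb: assumed to lack the absorber, so its radius stays flat.
    r_morn = [base_radius for _ in ln_sigma]
    # Each limb covers half the annulus, so the transit depth averages R^2.
    r_eff = [math.sqrt((rm ** 2 + re ** 2) / 2) for rm, re in zip(r_morn, r_even)]
    # A uniform-terminator (1D) model reads dR/dln(sigma) as a single H.
    h_1d = fit_slope(ln_sigma, r_eff)
    return h_1d * mu * AMU * gravity / K_B

t_morning, t_evening = 1000.0, 1500.0
t_true_avg = (t_morning + t_evening) / 2
t_1d = toy_retrieved_temperature(t_evening)
print(f"true terminator average {t_true_avg:.0f} K, 1D retrieval {t_1d:.0f} K")
```

Because the absorber’s features are diluted by the featureless limb, the fitted scale height is roughly half the evening value, and the 1D temperature lands well below the true terminator average.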

Using the older models has meant that the temperatures measured for hot Jupiters thus far may be biased low by hundreds of degrees, and by as much as 1000 K in the case of ultra-hot Jupiters. One implication is that the chemistry derived from the older models is less reliable. The authors call for more sophisticated retrieval tools, acknowledging the greater computational overhead of 3D approaches but arguing that such models will yield more accurate atmospheric temperatures and compositions.

The paper is MacDonald et al., “Why Is it So Cold in Here? Explaining the Cold Temperatures Retrieved from Transmission Spectra of Exoplanet Atmospheres,” Astrophysical Journal Letters Vol. 893, No. 2 (23 April 2020). Abstract / preprint.

Into the Magellanics

Somehow it feels as if the Hubble Space Telescope has been with us longer than the 30 years now being celebrated. But it was, in fact, on April 24, 1990 that the instrument was launched aboard the space shuttle Discovery, to be deployed the following day. Some 1.4 million observations have followed, with data used in more than 17,000 peer-reviewed papers. It’s safe to say that Hubble’s legacy will extend for decades as future researchers tap its archives. That’s good reason to celebrate with a 30th anniversary image.

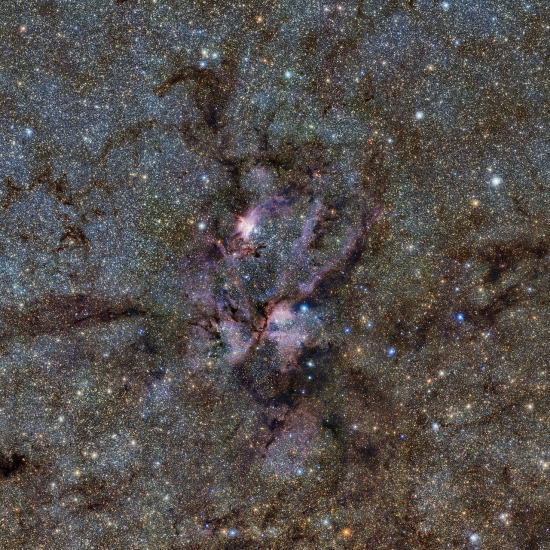

I’m reminded that the recent work we looked at on the interstellar comet 2I/Borisov drew on Hubble as part of the effort that detected the highest levels of carbon monoxide ever seen in a comet so close to the Sun. Hubble data are simply a given wherever feasible. And given yesterday’s article on star formation and on conditions in the Sun’s birth cluster, which may have left material from other stellar systems still orbiting our star, it’s worth noting the portrait Hubble just took of two extraordinary nebulae that are part of a star-formation complex.

Image: This image is one of the most photogenic examples of the many turbulent stellar nurseries the NASA/ESA Hubble Space Telescope has observed during its 30-year lifetime. The portrait features the giant nebula NGC 2014 and its neighbor NGC 2020 which together form part of a vast star-forming region in the Large Magellanic Cloud, a satellite galaxy of the Milky Way, approximately 163,000 light-years away. Credit: NASA, ESA, and STScI.

Here we’re deep in the Large Magellanic Cloud. This visible-light image shows NGC 2014 and NGC 2020, which, although they appear separate here, are part of a large star-forming region filled with stars much more massive than the Sun, many of them with lifetimes of little more than a few million years. Dominating the image, a group of stars at the center of NGC 2014 has shed the hydrogen gas (shown in red) and dust of its birth, so that the cluster’s ultraviolet light illuminates nearby regions while its stellar winds sculpt and erode the gas cloud above and to the right of the young stars. Bubble-like structures within the gas churn with the debris of starbirth.

At the left, NGC 2020 is dominated by a Wolf-Rayet star 200,000 times more luminous than the Sun and 15 times as massive. Here, too, stellar winds have cleared out the area near the star. According to the ESA/Hubble Information Center, the blue color of the nebula comes from oxygen gas heated to about 11,000 degrees Celsius, far hotter than the surrounding hydrogen gas. We should keep in mind when viewing such spectacular imagery that massive stars of this kind are relatively uncommon, but the processes at work in this image show how strong a role their stellar winds and supernova explosions play in shaping the cosmos.

Inevitably I’m reminded of science fictional treatments of the Magellanics, especially Olaf Stapledon’s Star Maker (1937), in which a symbiotic race living in the Large Magellanic Cloud (LMC) is the most advanced life form in the galaxy. But let’s also remember that when Arthur C. Clarke’s starship leaves the Sun in Rendezvous with Rama (1973), it’s headed for the LMC. Among scores of other references, from Haldeman’s The Forever War to Blish’s Cities in Flight, I’m particularly fond of Robert Silverberg’s less known Collision Course, probably because a shorter version fired my imagination as a boy in a copy of Amazing Stories I still possess.

Image: The brilliant Cele Goldsmith was editing Amazing Stories by the time the July 1959 issue containing “Collision Course” came out. The full novel would be published in 1961.

There is something about what you would see in the night sky from a satellite galaxy, as opposed to our own view of the Milky Way from within its disk, that fires the imagination. For an entirely different view of the galaxy, read Poul Anderson’s World Without Stars (1966), as discussed in these pages back in 2014 in The Milky Way from a Distance.

Identifying Asteroids from Other Stars

Objects of interstellar origin in our own Solar System continue to draw attention. Comets from other stars like 2I/Borisov give us the chance to delve into the composition of different stellar systems, while the odd ‘Oumuamua still puzzles astronomers. Comet? Asteroid?

Now we have a paper from Fathi Namouni (Observatoire de la Côte d’Azur, France) and Maria Helena Morais (Universidade Estadual Paulista, Brazil) targeting what the duo believe to be a population of asteroids captured from other stars in the distant past. Published in Monthly Notices of the Royal Astronomical Society, the paper relies on a high-resolution statistical search for stable orbits, ‘unwinding’ these orbits back in time to explain the location of certain Centaurs: asteroids moving perpendicular to the orbital plane of the planets and of other asteroids.
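‘Perpendicular to the orbital plane’ means an orbital inclination near 90 degrees. As a reminder of how that number is defined (this is basic orbital geometry, not the authors’ backward-integration method), the inclination is the angle between the orbit’s angular momentum vector h = r × v and the pole of the reference plane. The ±10 degree window used below to call an orbit ‘polar’ is an arbitrary, illustrative choice.

```python
import math

def inclination_deg(r, v):
    """Orbital inclination from position r and velocity v (3-tuples) given in
    a frame whose x-y plane is the reference plane of the planets."""
    # Specific angular momentum h = r x v is perpendicular to the orbital plane.
    hx = r[1] * v[2] - r[2] * v[1]
    hy = r[2] * v[0] - r[0] * v[2]
    hz = r[0] * v[1] - r[1] * v[0]
    h = math.sqrt(hx * hx + hy * hy + hz * hz)
    # Clamp guards against tiny floating-point excursions beyond [-1, 1].
    cos_i = max(-1.0, min(1.0, hz / h))
    return math.degrees(math.acos(cos_i))

def describe(inc_deg, polar_window_deg=10.0):
    """Label an inclination; the +/-10 degree 'polar' window is arbitrary."""
    if abs(inc_deg - 90.0) <= polar_window_deg:
        return "polar"
    return "prograde" if inc_deg < 90.0 else "retrograde"

print(describe(inclination_deg((1, 0, 0), (0, 1, 0))))  # prograde
print(describe(inclination_deg((1, 0, 0), (0, 0, 1))))  # polar
```

Inclinations above 90 degrees mean retrograde motion, the case of Jupiter’s co-orbital asteroid Ka‘epaoka‘awela mentioned below.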

Centaurs, most of which do not occupy such extreme positions, are a population of asteroids moving between the outer planets in what have until now been considered unstable orbits. The ones Namouni and Morais focus on are not recent interstellar ‘interlopers’ like ‘Oumuamua and Borisov, but objects that may have been drawn into the Sun’s gravitational pull at a time when the Solar System was still in formation, some 4.5 billion years ago. In those days, the Sun would have been part of a star cluster, with many young stars in close proximity. The authors believe they can identify 19 asteroids that once orbited other stars and now orbit the Sun.

Image: A stellar nursery in the Lobster Nebula (NGC 6357), where star systems exchange asteroids as our Solar System is thought to have done 4.5 billion years ago. Credit: ESO / VVV Survey / D. Minniti. Acknowledgement: Ignacio Toledo (CC BY 4.0).

The authors delve into the dynamical evolution of Centaurs through statistical searches for stable orbits, to find out whether any could have survived from the days of the Solar System’s formation. In 2018, Namouni and Morais produced a paper using these methods that led to the identification of a retrograde co-orbital asteroid of Jupiter called (514107) Ka‘epaoka‘awela as an object of likely interstellar origin. The new study extends their simulations to the past orbits of 17 high-inclination Centaurs and two trans-Neptunian objects (2008 KV42 and (471325) 2011 KT19) with polar orbits. From the paper:

The statistical distributions show that their orbits were nearly polar 4.5 Gyr in the past, and were located in the scattered disc and inner Oort cloud regions. Early polar inclinations cannot be accounted for by current Solar System formation theory as the early planetesimal system must have been nearly flat in order to explain the low-inclination asteroid and Kuiper belts. Furthermore, the early scattered disc and inner Oort cloud regions are believed to have been devoid of Solar system material as the planetesimal disc could not have extended far beyond Neptune’s current orbit in order to halt the planet’s outward migration. The nearly polar orbits of high-inclination Centaurs 4.5 Gyr in the past therefore indicate their probable early capture from the interstellar medium.

The conclusion that these high-inclination Centaurs had polar inclinations at the time of the Solar System’s formation can be tested by further observations that firm up their orbits for comparison with the simulated results. It’s a natural leap from that possibility to the Sun’s birth cluster of stars, which would have supplied plentiful source material in the form of asteroids and comets available for capture. Thus the idea that all Centaurs are on unstable orbits is challenged by 4.5-billion-year orbits for high-inclination Centaurs, as well as for some lower-inclination Centaurs like Chiron, which the authors also factored into their computations:

Either Chiron is an outlier that belonged to the planetesimal disc and whose cometary activity by some unknown mechanism increased its inclination far above the planetesimal disc’s mid-plane, or it could be itself of interstellar origin. Asteroid capture in the Sun’s birth cluster does not necessarily favour objects whose orbits have or evolve to polar or high-inclination retrograde motion (Hands et al. 2019). An astronomical illustration of the principle may be found in the distribution of the irregular satellites of the giant planets. Applying the high-resolution statistical stable orbit search to low-inclination Centaurs is likely to shed light on the possible common capture events that occurred in the early Solar system.

The paper is Namouni and Morais, “An Interstellar Origin for High-Inclination Centaurs,” Monthly Notices of the Royal Astronomical Society Volume 494, Issue 2 (May 2020), pp. 2191–2199. Abstract / preprint. And on the interesting question of Jupiter’s retrograde co-orbital asteroid and its possible interstellar origins, the paper is Namouni and Morais, “An interstellar origin for Jupiter’s retrograde co-orbital asteroid,” Monthly Notices of the Royal Astronomical Society: Letters 21 May 2018 (abstract).

An Image of Proxima Centauri c?

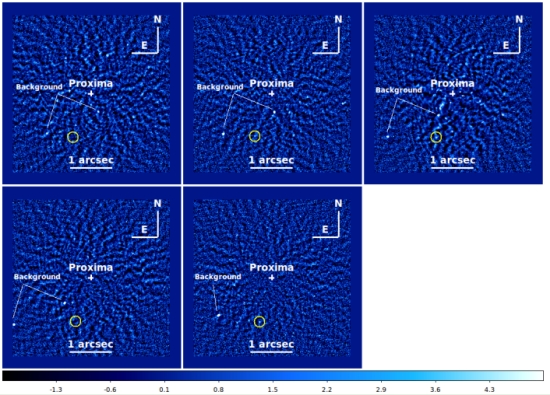

I’m keeping an eye on the recent attention being paid to Proxima Centauri c, the putative planet whose image may have been spotted by careful analysis of data from the SPHERE (Spectro-Polarimetric High-Contrast Exoplanet Research) imager mounted on the European Southern Observatory’s Very Large Telescope. A detection by direct imaging of a planet found first by radial velocity methods would be a unique event, and the fact that this might be a planet in the nearest star system to our own makes the story even more interesting.

I hasten to add that this is not Proxima b, the intriguing planet in the star’s habitable zone, but the much larger candidate world, likely a mini-Neptune, that has been identified but not yet confirmed. Proxima Centauri c could use a follow-up to establish its identity, and this direct imaging work would fit the bill if it holds up. But for now, the planet is still a candidate rather than a known world. From the paper:

While we are not able to provide a firm detection of Proxima c, we found a possible candidate that has a rather low probability of being a false alarm. If our direct NIR/optical detection of Proxima c is confirmed (and the comparison with early Gaia results indicates that we should take it with extreme caution), it would be the first optical counterpart of a planet discovered from radial velocities. A dedicated survey to look for RV planets with SPHERE lead to non-detections (Zurlo et al. 2018b).

But we’re not far enough along to spend much time on this in these pages; a great deal of follow-up work will be needed to nail down what is at best an unlikely catch. I call it that because the object (if indeed it is a planet) is far brighter than a world of Proxima c’s mass should be, with a luminosity that seems to demand something like a huge ring system to explain it. Other explanations will need to be ruled out, and that’s going to take time. The radial velocity work on Proxima Centauri c points to a world of at least six Earth masses, orbiting 1.5 AU out. But if this direct imaging work has indeed identified (and confirmed) Proxima c, it is a planet with a most unusual makeup:

If real, the detected object (contrast of about 16-17 mag in the H-band) is clearly too bright to be the RV [radial velocity] planet seen due to its intrinsic emission; it should then be circumplanetary material shining through reflected star-light. In this case we envision either a conspicuous ring system (Arnold & Schneider 2004), or dust production by collisions within a swarm of satellites (Kennedy & Wyatt 2011; Tamayo 2014), or evaporation of dust boosting the planet luminosity (see e.g. Wang & Dai 2019). This would be unusual for extrasolar planets, with Fomalhaut b (Kalas et al. 2008), for which there is no dynamical mass determination, as the only other possible example. Proxima c candidate is then ideal for follow-up with RVs observations, near IR imaging, polarimetry, and millimetric observations.
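A quick way to see why the paper reaches for rings or dust: a magnitude contrast converts to a flux ratio as F_p/F★ = 10^(−0.4 Δm), while a bare planet in reflected light gives roughly C = A_g Φ (R_p/a)². The sketch below compares the two. The Neptune-like radius, the geometric albedo of 0.5 and the full-phase value Φ = 1 (the most favorable case) are our own illustrative assumptions, not values from the paper; only the 16 to 17 magnitude contrast and the 1.5 AU orbit come from the text.

```python
AU_M = 1.495978707e11  # astronomical unit, m

def mag_to_flux_ratio(delta_mag):
    """Planet/star flux ratio for a given magnitude contrast."""
    return 10 ** (-0.4 * delta_mag)

def reflected_contrast(radius_m, orbit_au, geometric_albedo, phase=1.0):
    """Reflected-light contrast C = A_g * phase * (R_p / a)**2."""
    return geometric_albedo * phase * (radius_m / (orbit_au * AU_M)) ** 2

observed = mag_to_flux_ratio(16.5)        # midpoint of the quoted 16-17 mag
bare_planet = reflected_contrast(
    radius_m=2.46e7,       # assumed: roughly Neptune's radius
    orbit_au=1.5,          # orbital distance from the RV work
    geometric_albedo=0.5,  # assumed, fairly generous
)
area_factor = observed / bare_planet
print(f"observed contrast ~{observed:.1e}, bare planet ~{bare_planet:.1e}")
print(f"reflecting area needed: ~{area_factor:.0f}x the planet's disk")
```

Under these assumptions the object would need a reflecting area around 40 times that of a Neptune-sized disk, which is the sense in which a conspicuous ring system or a dust cloud becomes the candidate explanation.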

So good for Raffaele Gratton (INAF – Osservatorio Astronomico di Padova, Italy) and colleagues for pursuing this investigation and for suggesting the numerous ways it can be approached with various follow-up methods. And kudos to Mario Damasso (Astrophysical Observatory of Turin) as well. Damasso was lead author of the discovery paper on Proxima Centauri c, and it was he who suggested to Gratton that the SPHERE instrument might just be able to detect it.

Now we have a wait on our hands before we have anything definitive. At this point we have a possible detection that is tantalizing but no more than tentative. Meanwhile, here’s a figure from the paper that gives an idea of what Gratton and team are talking about.

Image: This is Figure 2 from the paper. The SPHERE images were acquired over four years through a survey called SHINE, and as the authors note, “We did not obtain a clear detection.” The figure caption in the paper reads as follows: Fig. 2. Individual S/N maps for the five 2018 epochs. From left to right: Top row: MJD 58222, 58227, 58244; bottom row: 58257, 58288. The candidate counterpart of Proxima c is circled. Note the presence of some bright background sources not subtracted from the individual images. However, they move rapidly due to the large proper motion of Proxima, so that they are not as clear in the median image of Figure 1. The colour bar is the S/N. S/N detection is at S/N=2.2 (MJD 58222), 3.4 (MJD 58227), 5.9 (MJD 58244), 1.2 (MJD 58257), and 4.1 (MJD 58288). Credit: Gratton et al.

The paper is Gratton et al., “Searching for the near infrared counterpart of Proxima c using multi-epoch high contrast SPHERE data at VLT,” accepted at Astronomy & Astrophysics (abstract).

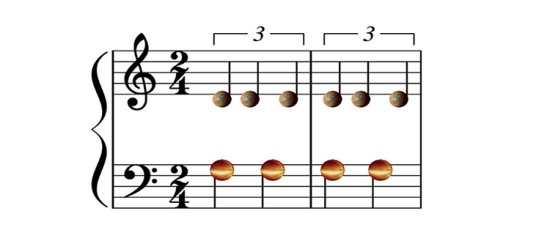

HD 158259: 6 Planets, Slightly Off-Tune

What an exceptional system the one around HD 158259 is! Here we have six planets, uncovered with the SOPHIE spectrograph at the Haute-Provence Observatory in the south of France, with the innermost world also confirmed through space-based TESS observations. Multiple things jump out about this system. For one thing, all six planets are close to, but not quite in, a 3:2 resonance. That ‘close to’ tells the tale, for researchers believe there are clues to the formation history of the system within their observations of this resonance.

Image: In the planetary system HD 158259, all pairs of adjacent planets are close to the 3:2 resonance: the inner one completes about three orbits as the outer completes two. Credit & Copyright: UNIGE/NASA.

The primary, HD 158259, is itself interesting: it’s a G-class star about 88 light years out, just a little more massive than our Sun. Yet all six of the planets discovered thus far are tucked well within the distance of Mercury from the Sun. In fact, the outermost planet orbits about 2.6 times closer to its star than Mercury is to the Sun, making this a compact arrangement indeed. Five of the planets are considered ‘mini-Neptunes,’ while the sixth is a ‘super-Earth.’
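To put ‘2.6 times closer than Mercury’ in terms of time, Kepler’s third law in solar units (P² in years = a³ in AU divided by the star’s mass in solar masses) gives a rough orbital period. The 1.1 solar-mass figure below is our assumed stand-in for ‘a little more massive than our Sun,’ and the planet’s own mass is neglected.

```python
import math

MERCURY_A_AU = 0.387  # Mercury's semi-major axis, AU

def period_days(a_au, star_mass_msun):
    """Kepler's third law in solar units: P[yr]^2 = a[AU]^3 / M[M_sun]."""
    return math.sqrt(a_au ** 3 / star_mass_msun) * 365.25

a_outer = MERCURY_A_AU / 2.6          # "2.6 times closer than Mercury"
p_outer = period_days(a_outer, 1.1)   # 1.1 M_sun is an assumed stand-in
print(f"outermost planet: a ~ {a_outer:.3f} AU, P ~ {p_outer:.0f} days")
```

Even the outermost planet, on this rough estimate, circles its star in about three weeks.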

The innermost world has about twice the mass of Earth, while the five outer planets weigh in at about six Earth masses each. The 3:2 resonance runs through the entire set of planets: as the planet closest to the star completes three orbits, the next one out completes two, or close to it (remember, this is an ‘almost resonant’ situation). And so on: the second planet completes three orbits while the third completes about two.
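‘Close to’ a resonance is usually quantified by taking the ratio of consecutive periods and measuring its fractional departure from the exact commensurability, here ratio/(3/2) − 1. The sketch below does that for a list of periods; the periods used are hypothetical, chosen only to illustrate a near-3:2 chain, and are not the measured values for HD 158259.

```python
def period_ratios(periods):
    """Ratios of consecutive orbital periods, outer / inner."""
    return [outer / inner for inner, outer in zip(periods, periods[1:])]

def offset_from_resonance(ratio, p=3, q=2):
    """Normalized departure from a p:q commensurability: ratio / (p/q) - 1."""
    return ratio / (p / q) - 1.0

# Hypothetical periods in days, chosen only to illustrate a near-3:2 chain.
periods = [2.0, 3.03, 4.56, 6.9]
for ratio in period_ratios(periods):
    print(f"ratio {ratio:.3f}, offset from 3:2 {offset_from_resonance(ratio):+.4f}")
```

A positive offset means the outer planet’s period is slightly longer than an exact 3:2 would require; the size and sign of such offsets are the ‘wealth of information’ Hara refers to below.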

Nathan Hara (University of Geneva), who led the study, likens the resonance to music, saying “This is comparable to several musicians beating distinct rhythms, yet who beat at the same time at the beginning of each bar.” The researchers (who, by the way, used the same telescope that Michel Mayor and Didier Queloz used for their groundbreaking 1995 detection of 51 Pegasi b, now fitted with the SOPHIE spectrograph in place of the original ELODIE) believe that the ‘almost resonances’ here point to a history in which the planets were locked into tight resonances as they migrated together, and were later nudged slightly out of them.

In other words, the six planets would have formed farther out from the star and then moved inward together. As Hara puts it:

“Here, ‘about’ is important. Besides the ubiquity of the 3:2 period ratio, this constitutes the originality of the system. Furthermore, the current departure of the period ratios from 3:2 contains a wealth of information. With these values on the one hand, and tidal effect models on the other hand, we could constrain the internal structure of the planets in a future study. In summary, the current state of the system gives us a window on its formation.”

And here’s how the paper deals with the issue:

…period ratios so close to 3:2 are very unlikely to stem from pure randomness. It is therefore probable that the planets underwent migration in the protoplanetary disk, during which each consecutive pair of planets was locked in 3:2 MMR [mean-motion orbital resonance]. The observed departure of the ratio of periods of two subsequent planets from exact commensurability might be explained by tidal dissipation, as was already proposed for similar Kepler systems (e.g., Delisle & Laskar 2014). Stellar and planet mass changes have also been suggested as a possible cause of resonance breaking (Matsumoto & Ogihara 2020). The reasons behind the absence of three-body resonances, which are seen in other resembling systems (e.g., Kepler-80, MacDonald et al. 2016), are to be explored.

It’s interesting that while other systems with compact planets in near-resonance conditions have been detected (TRAPPIST-1 is the outstanding example, I suppose, but as we see above, Kepler-80 also fits the bill), this is the first to have been found through radial velocity methods. According to the authors, the method demands a large number of data points and careful accounting for possible instrumental or stellar noise in the signal. In this work, 290 radial velocity measurements were taken and supplemented by TESS transit data on the inner planet.

Compact systems with multiple planets on close orbits do not appear only around M dwarfs like TRAPPIST-1. Kepler-223, for example, is a G-class star with four known planets, whose orbital periods are roughly 7, 10, 15 and 20 days. The Dispersed Matter Planet Project (DMPP) has turned up data on an F-class star (HD 38677) with four massive planets with orbital periods ranging from 2.9 to 19 days. The ancient Kepler-444 is a K-class star with five evidently rocky worlds, each completing an orbit in less than ten days.

At least one recent paper argues that metallicity, rather than the size of the star, is a key factor in producing compact systems (Brewer et al. 2018, “Compact multi-planet systems are more common around metal-poor hosts,” Astrophysical Journal Letters 867, L3). Clearly we have much to learn about planet formation and migration in compact systems. Such systems are near or below the current detection limits of radial velocity surveys, which is where the work on HD 158259 truly stands out, but they are good targets for transit studies. It will be instructive to see what TESS comes up with as it continues its work.

The paper is Hara et al., “The SOPHIE search for northern extrasolar planets. XVI. HD 158259: A compact planetary system in a near-3:2 mean motion resonance chain,” Astronomy & Astrophysics Vol. 636, L6 (April 2020). Abstract / preprint.

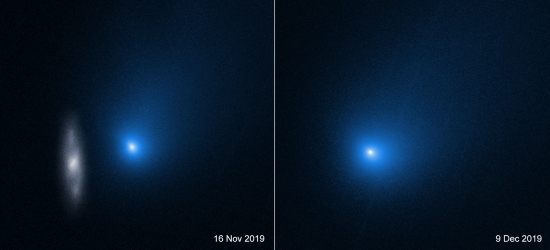

A Look into the Origins of Interstellar Comet 2I/Borisov

We’re learning interesting things about 2I/Borisov, the first interstellar comet discovered entering our Solar System (‘Oumuamua may have been a comet as well, but the lack of an active gas and dust coma makes it hard to say for sure). Moving at 33 kilometers per second, 2I/Borisov is on a trajectory clearly indicating an interstellar origin. Now two different studies have shown that in terms of composition, the visiting object is unlike most of the comets found in our own system.
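What makes a trajectory ‘clearly interstellar’ is orbital energy: an object moving faster than the Sun’s local escape speed, √(2GM/r), is unbound and follows a hyperbola. The text does not say whether 33 km/s is the speed far from the Sun (the hyperbolic excess speed, v∞) or the speed at some particular point, so the sketch below treats it as v∞; that is an assumption. It uses the vis-viva relation v² = v∞² + 2GM/r and the hyperbolic eccentricity e = 1 + q v∞²/GM, where q is the perihelion distance; the q = 2 AU used here is purely illustrative.

```python
import math

GM_SUN = 1.32712440018e20  # solar gravitational parameter, m^3 s^-2
AU = 1.495978707e11        # astronomical unit, m

def escape_speed(r_m):
    """Speed needed to escape the Sun from distance r_m."""
    return math.sqrt(2 * GM_SUN / r_m)

def speed_at_distance(v_inf, r_m):
    """Vis-viva for a hyperbolic orbit: v^2 = v_inf^2 + 2 GM / r."""
    return math.sqrt(v_inf ** 2 + escape_speed(r_m) ** 2)

def specific_energy(v, r_m):
    """Orbital energy per unit mass; positive means unbound."""
    return v ** 2 / 2 - GM_SUN / r_m

def hyperbolic_eccentricity(v_inf, q_m):
    """e = 1 + q v_inf^2 / GM for an orbit with perihelion distance q."""
    return 1 + q_m * v_inf ** 2 / GM_SUN

v_inf = 33e3  # assumed to be the hyperbolic excess speed
v_2au = speed_at_distance(v_inf, 2 * AU)
print(f"speed at 2 AU: {v_2au / 1e3:.1f} km/s (escape {escape_speed(2 * AU) / 1e3:.1f} km/s)")
print(f"energy > 0: {specific_energy(v_2au, 2 * AU) > 0}, "
      f"e = {hyperbolic_eccentricity(v_inf, 2 * AU):.2f}")
```

Any nonzero v∞ gives positive energy and e > 1, which is the signature of an object that did not originate around the Sun.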

Both the Hubble Space Telescope and the Atacama Large Millimeter/submillimeter Array (ALMA) have found higher levels of carbon monoxide (CO) than expected, a concentration greater than in any comet yet detected within 2 AU of the Sun (about 300 million kilometers). The ALMA team finds CO to be somewhere between 9 and 26 times more abundant than in inner-system comets, while Hubble finds it at least 50 percent more abundant than the average for comets in the inner system. Dennis Bodewits (Auburn University) is lead author of the paper on the Hubble work, which appears in Nature Astronomy:

“The amount of carbon monoxide did not drop as expected as the comet receded from the Sun. This means that we are seeing the primitive layers of the comet, which really reflect what this object is made of. Because of the abundance of carbon monoxide ice that survived so close to the Sun, we think that comet Borisov comes from a much colder place and from a very different debris disk around a star than our own.”

Image: Comet 2I/Borisov, captured here in two separate images from NASA’s Hubble Space Telescope, including one with a background galaxy (left), is the second interstellar object known to enter our Solar System. New analysis of Borisov’s bright, gas-rich coma indicates the comet is much richer in carbon monoxide gas than water vapor, a characteristic very unlike comets from our Solar System. Credit: NASA, ESA and D. Jewitt (UCLA).

2I/Borisov isn’t completely off the charts: one Oort Cloud comet, the recently discovered C/2016 R2 (PANSTARRS), showed even higher CO levels than Borisov when it reached a distance of 2.8 AU from the Sun. But the Borisov results are intriguing and give us a preliminary benchmark against which to measure interstellar objects discovered in the future. The Hubble work was performed with the telescope’s ultraviolet-sensitive Cosmic Origins Spectrograph, with data taken in four periods from December 2019 to January 2020. NASA’s Swift satellite confirmed Hubble’s readings with measurements taken over the same period.

Carbon monoxide is volatile enough that it begins to sublimate (turn from ice to gas) far out in the Solar System, perhaps as much as three times the distance between Pluto and the Sun. Water, on the other hand, remains an ice until somewhere between Mars and the inner edge of the main asteroid belt, and water is the prevalent signature of comets in the inner system. Adds Bodewits: “Borisov’s large wealth of carbon monoxide implies that it came from a planet formation region that has very different chemical properties than the disk from which our solar system formed.”
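A rough way to see why CO ice turns to gas so far out: a fast-rotating, non-reflective black body at distance r from the Sun sits near T ≈ 278 K / √(r/AU), so ice with sublimation temperature T_sub starts to vaporize inside r ≈ (278 K / T_sub)² AU. The ~25 K value used for CO below is an assumed, approximate figure, and real cometary nuclei (with albedo, rotation and dust mantles) depart from this crude picture.

```python
import math

T_AT_1AU = 278.0    # K, approx. equilibrium temp of a fast-rotating black body at 1 AU
PLUTO_A_AU = 39.5   # Pluto's mean distance from the Sun, AU

def blackbody_temperature(r_au):
    """Crude equilibrium temperature at heliocentric distance r_au."""
    return T_AT_1AU / math.sqrt(r_au)

def sublimation_onset_au(t_sub_k):
    """Distance inside which the crude temperature exceeds t_sub_k."""
    return (T_AT_1AU / t_sub_k) ** 2

co_onset = sublimation_onset_au(25.0)  # assumed CO sublimation temperature
print(f"CO onset ~{co_onset:.0f} AU, ~{co_onset / PLUTO_A_AU:.1f}x Pluto's distance")
```

This back-of-the-envelope estimate lands at a bit over 120 AU, consistent with the text’s ‘perhaps as much as three times the distance between Pluto and the Sun.’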

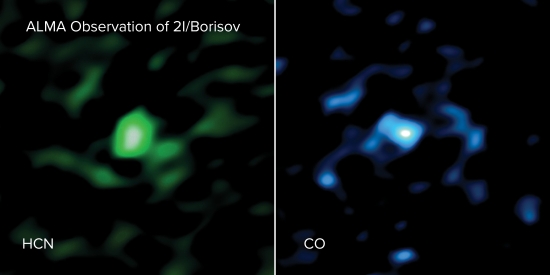

In a second study reported in the same issue of Nature Astronomy, scientists using ALMA data collected in the same time frame as Hubble’s (15-16 December 2019) detected both hydrogen cyanide (HCN) and CO in Borisov. The HCN showed up at unsurprising levels, but the CO amounts set researchers speculating about the object’s origins. Martin Cordiner and Stefanie Milam of NASA’s Goddard Space Flight Center (GSFC) led the international team:

“Most of the protoplanetary disks observed with ALMA are around younger versions of low-mass stars like the Sun. Many of these disks extend well beyond the region where our own comets are believed to have formed, and contain large amounts of extremely cold gas and dust. It is possible that 2I/Borisov came from one of these larger disks… This is the first time we’ve ever looked inside a comet from outside our solar system, and it is dramatically different from most other comets we’ve seen before.”

Image: ALMA observed hydrogen cyanide gas (HCN, left) and carbon monoxide gas (CO, right) coming out of interstellar comet 2I/Borisov. The ALMA images show that the comet contains an unusually large amount of CO gas. ALMA is the first telescope to measure the gases originating directly from the nucleus of an object that travelled to us from another planetary system. Credit: ALMA (ESO/NAOJ/NRAO), M. Cordiner & S. Milam; NRAO/AUI/NSF, S. Dagnello.

The Hubble team considers a carbon-rich circumstellar disk around a cool red dwarf as one possibility for 2I/Borisov’s birthplace. Here, gravitational interactions with a planet orbiting in the outer reaches of the system could have ejected the comet. “These stars have exactly the low temperatures and luminosities where a comet could form with the type of composition found in comet Borisov,” says John Noonan (Lunar and Planetary Laboratory, University of Arizona, Tucson).

This is interesting speculation, but the real news here is that this is the first measurement of the carbon monoxide content of a comet from another star. The work points to an entirely new area of exoplanetology now opening up: the study of debris from other stellar systems, which will be further enabled as new telescopes and survey methods come online. Future objects should help us clarify how to use such data as we explore planet formation around other stars.

The papers are Bodewits et al., “The carbon monoxide-rich interstellar comet 2I/Borisov,” Nature Astronomy 20 April 2020 (abstract) and Cordiner et al., “Unusually high CO abundance of the first active interstellar comet,” in the same issue of Nature Astronomy (full text).