Finding small and possibly habitable worlds around M-dwarfs has already proven controversial, as we’ve seen in recent work on Gliese 581. The existence of Gl 581d, for example, is contested in some circles, but as Guillem Anglada-Escudé argues below, sound methodology turns up a robust signal for the world. Read on to learn why as he discusses the early successes of the Doppler technique and its relevance for future work. Dr. Anglada-Escudé is a physicist and astronomer who did his PhD work at the University of Barcelona on the Gaia/ESA mission, working on the mission simulator and data reduction prototype. His first serious observational venture, using astrometric techniques to detect exoplanets, was with Alan Boss and Alycia Weinberger during a postdoctoral period at the Carnegie Institution for Science. He began working on high-resolution spectroscopy for planet searches around M-stars during that time in collaboration with exoplanet pioneer R. Paul Butler. In a second postdoc, he worked at the University of Goettingen (Germany) with Prof. Ansgar Reiners, participating in the CRIRES+ project (an infrared spectrometer for the VLT/ESO), and joined the CARMENES consortium. Dr. Anglada-Escudé is now a Lecturer in Astronomy at Queen Mary University of London, working on detection methods for very low-mass extrasolar planets around nearby stars.

by Guillem Anglada-Escudé

The Doppler technique has been the driving force for the first fifteen years of extrasolar planet detection. The method is most sensitive to close-in planets and many of its most exciting results come from planets around low-mass stars (also called M-dwarfs). Although these stars are substantially fainter than our Sun, the noise floor seems to be imposed by stellar activity rather than instrumental precision or brightness, meaning that small planets are more easily detected here than around Sun-like stars. In detection terms, the new leading method is space-transit photometry, brilliantly demonstrated by NASA’s Kepler mission.

Despite its efficiency, the transit method requires a fortunate alignment of the orbit with our line of sight, so planets around the closest stars are unlikely to be detected this way. In the new era of space-photometry surveys and given all the caveats associated with accurate radial velocity measurements, the most likely role of the Doppler method for the next few years will be the confirmation of transiting planets, and detection of potentially habitable super-Earths around the nearest M-dwarfs. It is becoming increasingly clear that the Doppler method might be unsuitable to detect Earth analogs, even around our closest sun-like neighbors. Unless there is an unexpected breakthrough in the understanding of stellar Doppler variability, nearby Earth-twin detection will have to wait a decade or two for the emergence of new techniques such as direct imaging and/or precision space astrometry. In the meantime, very exciting discoveries are expected from our reddish and unremarkable stellar neighborhood.

The Doppler years

We knew stars should have planets. After the Copernican revolution, it had been broadly acknowledged that Earth and our Sun occupy unremarkable places in the cosmos. Our solar system has 9 planets, so it was only natural to expect them around other stars. After years of failed or ambiguous claims, the first solid evidence of planets beyond the Solar system arrived in the early 90’s. First came the pulsar planets (PSR B1257+12). Despite the claims of their existence being well consolidated, these planets were regarded as space oddities. That is, a pulsar is the remnant core of an exploded massive star, so the recoil of planets after such an event is unlikely to be the most universal channel for planet formation.

In 1995, the first planets around main sequence stars were reported. The hot Jupiters came by the hand of M. Mayor and D. Queloz (51 Peg, 1995), and shortly thereafter a series of gas giants were announced by the competing American duo G. Marcy and P. Butler (70 Vir, 47 UMa, etc.). These were days of wonder and the Doppler method was the norm. In a few months, the count grew from nothing to several worlds. These discoveries became possible thanks to the ability to measure the radial velocities of stars at ~3 meters-per-second (m/s) precision, that is, human running speed. 51 Peg b periodically moves its host star at 50 m/s and 70 Vir b changes the velocity of its parent star by 300 m/s, so these became easily detectable once precision reached that level.

Lighter and smaller planets

Given the technological improvements, and solid proof that planets were out there in possibly large numbers, the exoplanet cold war ramped up. Large planet-hunting teams built up around Mayor & Queloz (Swiss) and Marcy & Butler (Americans) in a strongly competitive environment. Precision kept improving and, when combined with longer time baselines, a few tens of gas giants were already reported by 2000. Then the first exoplanet transiting in front of its host star was detected. Unlike the Doppler method, the transit method measures the dip in brightness caused by a planet crossing in front of the star. Such alignment happens randomly, so a large number of stars (10 000+) needs to be monitored simultaneously to find planets using this technique.

Plans to engage in such surveys suddenly started to consolidate (TrES, HAT, WASP) and small (COROT) to medium-class space missions (NASA’s Kepler, Eddington/ESA – cancelled later) started to be seriously considered. By 2002, the Doppler technique led to the first reports of hot Neptunes (GJ 436b) and the first so-called super-Earths (GJ 876d, M ~ 7 Mearth) came onto the scene. Let me note that the first discoveries of such ‘smaller’ planets were made around the even more unremarkable small stars called M-dwarfs.

While not obvious at that moment, such a trend would later have serious consequences. Several hot Neptunes and super-Earths followed during the mid-2000’s, mostly coming from the large surveys led by the Swiss and American teams. By then the first instruments specifically designed to hunt for exoplanets had been built, such as the High Accuracy Radial velocity Planet Searcher (or HARPS), by a large consortium led by the Geneva observatory and the European Southern Observatory (ESO). While the ‘American method’ relied on measuring the stellar spectrum simultaneously with the spectral features of Iodine gas, the HARPS concept consisted in stabilizing the hardware as much as possible. After 10 years of operation of HARPS, it has become clear that the stabilized instrument option outperforms the Iodine designs, as it significantly reduces the data-processing effort needed to obtain accurate measurements (~1 m/s or better). Dedicated Iodine spectrometers are now in operation delivering comparable precisions (APF, PFS), which seems to point towards a fundamental limit in the stars rather than in the instruments.

Sun-like stars (G dwarfs) were massively favoured in the early Doppler surveys. While many factors were folded into target selection, there were two main reasons for this choice. First, sun-like stars were considered more interesting due to the similarity to our host star (search for planets like our own) and second, M-dwarfs are intrinsically fainter so the number of bright enough targets is quite limited. For main sequence stars, the luminosity of the stars grows as the 4th power of the mass and their apparent brightness falls as the square of the distance.

As a result, one quickly runs out of intrinsically faint objects. Most of the stars we see in the night sky have A and F spectral types, some are distant supergiants (eg. Betelgeuse), and only a handful of sun-like G and K dwarfs are visible (Alpha Centauri binary, Tau Ceti, Epsilon Eridani, etc). No M-dwarf is bright enough to be visible to the naked eye. By setting a magnitude cut-off of V ~ 10, early surveys included thousands of yellow G-dwarfs, a few hundred orange K dwarfs, and a few tens of red M-dwarfs. Even though M-dwarfs were clearly disfavoured in numbers, many ‘firsts’ and most exciting exoplanet detection results come from these ‘irrelevant’ tiny objects.
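To see how quickly the faint stars run out, here is a rough sketch of the scaling described above. It assumes luminosity goes exactly as the 4th power of mass and treats the brightness cut as bolometric, whereas real surveys cut on V-band magnitude, where M-dwarfs fare even worse. The function name is only illustrative.

```python
def relative_survey_volume(mass_msun, exponent=4.0):
    """Volume within which a star of this mass passes a fixed brightness cut,
    relative to a 1 Msun star (assumes L ~ M^exponent, flux ~ L / d^2)."""
    luminosity = mass_msun ** exponent      # in solar units
    max_distance = luminosity ** 0.5        # flux limit: d_max ~ sqrt(L)
    return max_distance ** 3                # volume ~ d_max^3


for mass in (1.0, 0.7, 0.5, 0.3):
    print(f"M = {mass:.1f} Msun -> relative volume {relative_survey_volume(mass):.4f}")
```

Under these assumptions the surveyed volume falls as the 6th power of the stellar mass, which is why a brightness-limited target list contains so few M-dwarfs even though they are the most common stars.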

M-dwarfs have masses between 0.1 and 0.5 Msun and radii between 0.1 and 0.5 Rsun. Since temperatures are known from optical to near infrared photometry (~3500 K, to be compared to 5800 K of the Sun) the basic physics of blackbody radiation shows that their luminosities are between 0.1% and 5% that of the Sun. As a result, orbits at which planets can keep liquid water on their surface are much closer-in and have shorter periods. All things combined, one finds that ‘warm’ Earth mass planets would imprint wobbles of 1-2 m/s on an M-dwarf (0.1 m/s Earth/Sun), and the same planet would cause a ~0.15% dip in the star-light during transit (0.01% Earth/Sun).
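The chain of reasoning in this paragraph can be reproduced with a few lines of arithmetic. The sketch below is an order-of-magnitude check, not the calculation behind the quoted figures. It assumes a circular, edge-on orbit, places the ‘warm’ orbit where the planet receives the same flux as Earth (a = sqrt(L) AU), and uses stellar masses, radii and temperatures picked from the ranges quoted above purely for illustration.

```python
import math

G = 6.674e-11        # gravitational constant, m^3 kg^-1 s^-2
M_SUN = 1.989e30     # kg
R_SUN = 6.957e8      # m
M_EARTH = 5.972e24   # kg
R_EARTH = 6.371e6    # m
AU = 1.496e11        # m
DAY = 86400.0        # s
T_SUN = 5800.0       # K, the solar value quoted in the text


def luminosity(radius_rsun, teff_k):
    """Blackbody luminosity in solar units: L ~ R^2 T^4."""
    return radius_rsun ** 2 * (teff_k / T_SUN) ** 4


def warm_orbit_au(lum_lsun):
    """Distance receiving Earth-like flux (assumption: a = sqrt(L) AU)."""
    return math.sqrt(lum_lsun)


def period_days(a_au, mstar_msun):
    """Kepler's third law for a planet of negligible mass."""
    a = a_au * AU
    return 2 * math.pi * math.sqrt(a ** 3 / (G * mstar_msun * M_SUN)) / DAY


def doppler_amplitude(period_d, mstar_msun, mplanet_mearth=1.0):
    """Stellar wobble in m/s for a circular, edge-on orbit."""
    p = period_d * DAY
    mp = mplanet_mearth * M_EARTH
    ms = mstar_msun * M_SUN
    return (2 * math.pi * G / p) ** (1 / 3) * mp / (ms + mp) ** (2 / 3)


def transit_depth(rstar_rsun, rplanet_rearth=1.0):
    """Fractional dip in starlight: (Rp / R*)^2."""
    return (rplanet_rearth * R_EARTH / (rstar_rsun * R_SUN)) ** 2


def summarize(mstar_msun, rstar_rsun, teff_k):
    """Return (luminosity, period in days, wobble in m/s, transit depth)."""
    lum = luminosity(rstar_rsun, teff_k)
    p = period_days(warm_orbit_au(lum), mstar_msun)
    return lum, p, doppler_amplitude(p, mstar_msun), transit_depth(rstar_rsun)


sun = summarize(1.0, 1.0, T_SUN)
small_m_dwarf = summarize(0.1, 0.1, 3500.0)   # illustrative low-mass end
large_m_dwarf = summarize(0.5, 0.5, 3500.0)   # illustrative high-mass end

cases = [("Sun", sun), ("0.1 Msun M-dwarf", small_m_dwarf), ("0.5 Msun M-dwarf", large_m_dwarf)]
for name, (lum, p, k, depth) in cases:
    print(f"{name}: L = {100 * lum:.2f}% Lsun, P = {p:.0f} d, "
          f"K = {k:.2f} m/s, depth = {100 * depth:.3f}%")
```

The exact numbers depend on the stellar parameters chosen, but the trend is the point: the lower the stellar mass, the shorter the ‘warm’ orbit, the larger the wobble and the deeper the transit for the same Earth-like planet.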

Two papers by the Swiss group from 2007 and 2009 (Udry et al., http://adsabs.harvard.edu/abs/2007A%26A…469L..43U, Mayor et al. http://adsabs.harvard.edu/abs/2009A%26A…507..487M) presented evidence for the first super-Earth with realistic chances of being habitable orbiting around the M-dwarf GJ 581 (GJ 581d). Although its orbit was considered too cold in a first instance, subsequent papers and climatic simulations (for example, see Von Paris et al. 2010, http://cdsads.u-strasbg.fr/abs/2010A%26A…522A..23V) indicated that there was no reason why water could not exist on its surface given the presence of reasonable amounts of greenhouse gases. As of 2010, GJ 581d is considered the first potentially habitable planet found beyond the Solar system. The word potentially is key here. It just acknowledges that, given the known information, the properties of the planet are compatible with having a solid surface and sustainable liquid water over its life-time. Theoretical considerations about the practical habitability of these planets are – yet another – source of intense debate.

GJ 581 was remarkable in another important way. Its Doppler data could be best explained by the existence of (at least) 4 low-mass planets in orbits with periods shorter than ~2 months (orbit of Mercury). A handful of other similar systems were known (or reported) during those days, including HD 69830 (3 Neptunes, G8V), HD 40307 (3 super-Earths, K3V) and 61 Vir (3 sub-Neptunes). These and many other Doppler planet reports from the large surveys led to the first occurrence rate estimates for sub-Neptune mass planets by ~2010. According to those (for example, see http://adsabs.harvard.edu/abs/2010Sci…330..653H ), at least ~30% of the stars hosted one super-Earth within the orbit of our Mercury. Simultaneously, the COROT mission started to produce its first hot-rocky planet candidates (eg. COROT 7b) and the Kepler satellite was slowly building up its high quality space-based light curves.

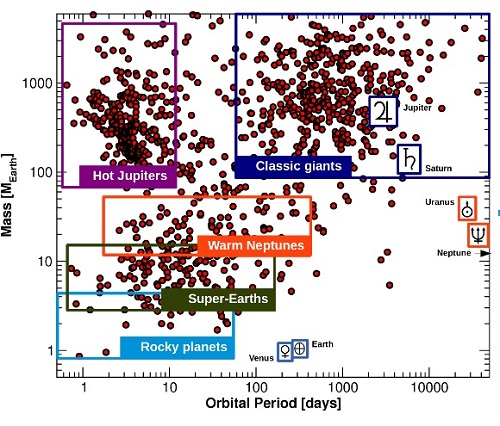

What Kepler was about to reveal was even more amazing. Not only did 30% of stars host ‘hot’ super-Earths, but at least ~30% of the stars hosted compact (highly co-planar) planetary systems with small planets, again with orbits interior to our Mercury. Thanks to this unexpected overabundance of compact systems, the Kepler reports of likely planets came in the thousands (famously known as Kepler Objects of Interest, or KOIs), which in astronomy means we can move from interesting individual objects to a fully mature discipline where statistical populations can be drawn. Today, the exoplanet portrait is smoothly covered by ~2000 reasonably robust detections, extending down to sub-Earth sized planets in orbits down to a few hours (eg. Kepler 78) up to thousands of days for those Jupiter analogs that (at the end of the day) have been found to be rather rare (<5% of the stars). Clustering of objects in the different regions of the mass-period diagram (see Figure 1) encodes the tale of planet formation and the origin. This is where we are now in terms of detection techniques.

Figure 1: The exoplanet portrait (data extracted from exoplanet.eu, April 1st 2015). Short period planets are generally favoured by the two leading techniques (transits and Doppler spectroscopy), which explains why the left part of the diagram is the most populated. The ‘classic’ gas giants are on the top right (massive and long periods), and the bottom left is the realm of the hot Neptunes and super-Earths. The relative paucity of planets in some areas of this diagram tells us about important processes that formed them and shaped the architectures of the systems. For example, the horizontal gap between the Neptunes and the Jupiters is likely caused by the runaway accretion of gas once a planet grows a bit larger than Neptune in the protoplanetary nebula, quickly jumping into the Saturn mass regime. The large abundance of hot Jupiters on the top left is an observational bias due to the high detection efficiency (large planets and short period orbits), but the gap between the hot Jupiters and the classical gas giants is not well understood and probably has to do with the migration process involved in dragging the hot Jupiters so close to the star. Detection efficiency quickly drops to the right (longer periods) and bottom (very small planets).

Having reached this point, and given the wild success of Kepler, we might ask ourselves what is the relevance of the Doppler method as a detection method for small planets. The transit method requires a lucky alignment of the orbit. Using statistical arguments one can easily find out that most transiting planets will be detected around distant stars. Instead, the Doppler technique can achieve great precision on individual objects and detect the planets irrespective of their orbital inclination (except in the rare cases when the orbits are close to face-on). Therefore, the hunt for nearby planets remains the niche of the Doppler technique. Small planets around nearby stars should enable unique follow-up opportunities (direct imaging attempts in 10-20 years) and transmission spectroscopy in the rare cases of transits.
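The statistical argument can be sketched with the standard geometric estimate that a randomly oriented circular orbit transits with probability of roughly R*/a. The function names and the two example orbits below are illustrative choices, not values from a specific survey.

```python
R_SUN = 6.957e8   # m
AU = 1.496e11     # m


def transit_probability(rstar_rsun, a_au):
    """Geometric chance that a randomly oriented circular orbit transits (~R*/a)."""
    return (rstar_rsun * R_SUN) / (a_au * AU)


def stars_needed(rstar_rsun, a_au):
    """Average number of planet-hosting stars to monitor per transiting system."""
    return 1.0 / transit_probability(rstar_rsun, a_au)


p_hot_jupiter = transit_probability(1.0, 0.05)   # Sun-like star, 0.05 AU orbit
p_earth_analog = transit_probability(1.0, 1.0)   # Sun-like star, 1 AU orbit

print(f"hot Jupiter:  p = {p_hot_jupiter:.3f}, ~{stars_needed(1.0, 0.05):.0f} hosts per transit")
print(f"Earth analog: p = {p_earth_analog:.4f}, ~{stars_needed(1.0, 1.0):.0f} hosts per transit")
```

Because only a small fraction of systems are aligned, and the number of stars available grows roughly with the cube of the distance surveyed, the transiting systems that do turn up are drawn mostly from the large population of distant stars rather than from the few nearest ones.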

However, there are other reasons why nearby stars are really exciting. These are brand new worlds next to ours that might be visited one day. Nearby stars trigger the imagination of the public, science fiction writers, filmmakers and explorers. While the scientific establishment tends to deem this quality irrelevant, many of us still find such motivation perfectly valid. As in many other areas, this is not only about pure scientific knowledge but about exploration.

For those who prefer a more results-per-dollar oriented approach, the motivational aspect of nearby exoplanets cannot be ignored either. Modern mathematics and physical sciences were broadly motivated by the need to improve our understanding of observations of the Solar system. Young scientists keep being attracted to space sciences and technology because of this (combined with the push from the film and video-game industry). A nearby exoplanet is not one more point in a diagram. It represents a place, a goal and a driver. Under this scope, reports and discoveries of nearby Doppler detections (even if tentative) still rival or surpass the social relevance of those exotic worlds in the distant Kepler systems. As long as there is public support and wonder for exploration, we will keep searching for evidence of nearby worlds. And to do this we need spectrometers.

Why is GJ 581 d so relevant?

We have established that nearby M-dwarfs are great places to look for small planets. But there is a caveat. The rotation periods of mature stars are in the 20-100 days range, meaning that spots or features on the stellar surface will generate apparent Doppler signals in the same range. After some years of simulation and solar observations, we think that these spurious signals will produce Doppler amplitudes between 0.5 and 3 m/s, even for the quietest stars (highly object dependent). Moreover, this variability is not strictly random, which causes all sorts of difficulties. In technical terms, structure in the noise is often referred to as correlated noise (or red-noise, activity-induced variability, etc.).

Detecting a small planet is like trying to measure the velocity of a pan filled with boiling water, by looking at its wiggling surface. If we can wait long enough, the surface motion averages out. However, consecutive measurements over fractions of seconds will not be random and can be confused with periodic variability on these same timescales. The same happens with stars. We can get arbitrarily great precision (down to cm/s) but our measurements will also be tracing occasional flows and spectral distortions caused by the variable surface.
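A toy simulation makes the boiling-pan point concrete. The sketch below compares uncorrelated (white) noise with a simple correlated (red) noise model, a first-order autoregressive process, and measures how well averaging 20 consecutive measurements beats each one down. The AR(1) model, its memory parameter of 0.9 and the bin size are assumptions made for illustration; real stellar activity is more complicated.

```python
import math
import random


def ar1_series(n, phi, rng):
    """Unit-variance AR(1) series: x[i] = phi * x[i-1] + sqrt(1 - phi^2) * e[i].
    phi = 0 gives white noise; phi close to 1 gives strongly correlated noise."""
    scale = math.sqrt(1.0 - phi ** 2)
    x = [rng.gauss(0.0, 1.0)]
    for _ in range(n - 1):
        x.append(phi * x[-1] + scale * rng.gauss(0.0, 1.0))
    return x


def scatter_of_bin_means(x, bin_size):
    """Standard deviation of the averages of consecutive, non-overlapping bins."""
    means = [sum(x[i:i + bin_size]) / bin_size
             for i in range(0, len(x) - bin_size + 1, bin_size)]
    mu = sum(means) / len(means)
    return math.sqrt(sum((m - mu) ** 2 for m in means) / (len(means) - 1))


rng = random.Random(42)                 # fixed seed so the run is repeatable
white = ar1_series(20000, 0.0, rng)
red = ar1_series(20000, 0.9, rng)

white_scatter = scatter_of_bin_means(white, 20)
red_scatter = scatter_of_bin_means(red, 20)

print(f"white noise, 20-point averages: scatter = {white_scatter:.2f}")
print(f"red noise,   20-point averages: scatter = {red_scatter:.2f}")
```

Both series have the same point-to-point scatter, yet the averages of the correlated series remain several times noisier than the 1/sqrt(N) improvement seen for white noise. Taking more measurements in quick succession helps much less than one would naively expect.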

Going back to the boiling water example, we could in principle disentangle the true velocity from the jitter if we have access to more information, such as the temperature or the density of the water at each time. Our hope is that this same approach can be applied to stars by looking at the so-called ‘activity indicators’. In the case of Gliese 581d, Robertson et al. subtracted an apparent correlation of the velocities with a measure of a chromospheric activity index. As a result, the signal of GJ 581d vanished, so they argued the planet was unlikely to exist (http://adsabs.harvard.edu/abs/2014Sci…345..440R).

However, in our response to that claim, we argued that one cannot just remove possible effects relevant to the observations. Instead, one needs to fold in all the information in a comprehensive model of the data (http://adsabs.harvard.edu/abs/2015Sci…347.1080A). When doing that, the signal of GJ 581d shows up again as very significant. This is a subtle point with far-reaching consequences. The activity-induced variability is in the 1-3 m/s regime, and the amplitude of the planetary signal is about 2 m/s. Unless activity is modeled at the same level as the planetary signal, there is no hope of obtaining a robust detection. By comparison, the amplitude of the signal induced by Earth on the Sun is 10 cm/s while the Solar spurious variability is in the 2-3 m/s range. With a little more effort, we are likely going to detect many potentially habitable planets around M-stars using new generation spectrometers. Once we can agree on the way to do that, we can try to go one step further and attempt similar approaches with Sun-like stars.
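The difference between subtracting an activity correlation first and fitting everything together can be illustrated with a deliberately simple, noise-free toy. This is not the analysis of either paper: the data are synthetic, the activity index is a plain sinusoid, and the 66-day and 130-day periods, the 100-day baseline and all function names are illustrative assumptions.

```python
import math


def solve_linear(a, b):
    """Solve a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for i in reversed(range(n)):
        x[i] = (m[i][n] - sum(m[i][j] * x[j] for j in range(i + 1, n))) / m[i][i]
    return x


def least_squares(columns, y):
    """Linear least squares through the normal equations; one column per regressor."""
    a = [[sum(u * v for u, v in zip(ci, cj)) for cj in columns] for ci in columns]
    b = [sum(u * v for u, v in zip(ci, y)) for ci in columns]
    return solve_linear(a, b)


P_PLANET, P_ROT = 66.0, 130.0   # days (illustrative)
K_TRUE, C_TRUE = 2.0, 1.5       # injected planet amplitude and activity coupling, m/s

t = [float(d) for d in range(100)]                       # one point per day
sin_p = [math.sin(2 * math.pi * ti / P_PLANET) for ti in t]
cos_p = [math.cos(2 * math.pi * ti / P_PLANET) for ti in t]
activity = [math.sin(2 * math.pi * ti / P_ROT) for ti in t]
rv = [K_TRUE * s + C_TRUE * a for s, a in zip(sin_p, activity)]

# Route 1: subtract the apparent RV-activity correlation, then look for the planet.
(c_seq,) = least_squares([activity], rv)
residual = [v - c_seq * a for v, a in zip(rv, activity)]
k_sequential = math.hypot(*least_squares([sin_p, cos_p], residual))

# Route 2: fit the planet and the activity term in one model.
a_j, b_j, c_joint = least_squares([sin_p, cos_p, activity], rv)
k_joint = math.hypot(a_j, b_j)

print(f"injected amplitude:      {K_TRUE:.2f} m/s")
print(f"subtract-then-fit gives: {k_sequential:.2f} m/s")
print(f"joint fit gives:         {k_joint:.2f} m/s")
```

In this toy the joint fit returns the injected amplitude, while the subtract-first route returns a smaller one, because over a finite baseline the activity term is partly correlated with the planetary sinusoid and absorbs some of it. Real data add noise, uneven sampling and imperfect activity indicators, so the sketch only shows the direction of the effect.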

The debate is on and the jury is still out, but clarifying all these points is essential to the viability of the Doppler technique and future plans for new instruments (What’s the need for more precise machines if we have already hit the noise floor?)

This same boiling pan effect sets the physical noise floor for other techniques as well, but the impact on the detection sensitivity can be rather different. For example, photometric measurements (eg. Kepler) are now mostly limited by the noise floor set by the Sun-like stars which, on average, have been found to be twice as active as our Sun. However, the transit ‘signal’ (short box-like feature, strictly periodic) is harder to emulate by stellar variability. It is only a matter of staring longer on target to be sure the transit-like feature repeats itself at a very precise time. The Kepler mission had been extended to 3.5 years to account for this, and it would have probably succeeded if its reaction wheel hadn’t failed (note most ‘warm Earth-sized’ objects are around K and M-stars). The PLATO/ESA mission (http://sci.esa.int/plato/) will likely finish the job and detect a few dozen Earth twins, among many other things.

So, what’s next?

New generation spectrometers will become available soon. Designed to reach similar or better hardware stability than HARPS, these instruments will extend the useful wavelength range towards the red and near-infrared part of the spectrum. A canonical example is the CARMENES spectrometer (https://carmenes.caha.es/), which will cover from 500 nm up to 1.7 microns (HARPS covers from 380 to 680 nm). CARMENES is expected to go into the telescope this summer. In addition to collecting more photons, access to other regions of the spectrum will enable the incorporation of many more observables in the analysis. In the meantime, a series of increasingly ambitious space-photometry missions will keep identifying planet-sized objects by the thousands. In this context, a careful use of Doppler instruments will provide confirmation and mass measurements for transiting exoplanet candidates.

In parallel, the high follow-up potential and the motivational component of nearby stars justify the continued use of precision spectrometers, at least on low-mass stars. In addition to this, stabilized spectrometers ‘might’ play a key role in atmospheric characterization of transiting super-Earths around nearby M-dwarf stars. Concerning the nearest Sun-like stars, alternative techniques such as direct imaging or astrometry should be viable once dedicated space missions are built, maybe in the next 15-20 years. However, given the trend towards stagnant economies and increasingly long technological cycles for space instrumentation, we might need to hope for the era of space industrialization (or something as dramatic as a technological singularity taking over the hard work) to catch a glimpse of the best targets for interstellar travel.

Being a long-time observer and commentator on the search for extrasolar planets and planetary habitability, I really enjoyed reading this piece by Dr. Anglada-Escudé. But there is another point that needs to be made in regards to the current controversy surrounding GJ 581d. While Dr. Anglada-Escudé and his colleague, Mikko Tuomi, were certainly correct in their recent critique of some of the data analysis methods employed by Robertson et al., there is still the issue that the rotational period of GJ 581 derived by Robertson et al. (130 days) is almost exactly twice the orbital period of GJ 581d (66 days). This sort of whole number ratio with the stellar rotation period casts doubt on the planetary interpretation of the observed 66-day periodicity in the radial velocity variation and has been sufficient cause to reject a planetary interpretation in the past:

http://www.drewexmachina.com/2015/03/09/habitable-planet-reality-check-gj-581d/

A similar issue has also been raised in a new paper just submitted for publication by Robertson et al. in regards to Kapteyn’s Star. In this new work, Robertson et al. found that the rotational period of Kapteyn’s Star is 143 days. This is almost exactly three times longer than the reported orbital period of 48 days for Kapteyn b whose discovery had made headlines just a year ago. Once again, this whole number relation in the stellar rotational period with the observed periodicity in the radial velocity casts doubt on a planetary interpretation.

http://www.drewexmachina.com/2015/05/14/kapteyn-b-has-another-habitable-planet-disappeared/

While I am looking forward to seeing how this all plays out, I was pleased to see Dr. Anglada-Escudé discuss the issues with reaching the noise floor of current Doppler velocity measurements (an issue I have raised) and plans for the future. Thanks for a great essay!

It’s probably worth mentioning that Robertson et al. did respond to the comment on their analysis of Gliese 581.

Robertson et al. (2015) ‘Response to Comment on “Stellar activity masquerading as planets in the habitable zone of the M dwarf Gliese 581”‘

Sure Andrew. All these planets can be activity. We are not delusional, but I am also convinced all HZ super-Earths detected by Doppler can be questioned (last I heard is that Prof. Gregory had doubts on GJ 667Cc as well), so I take an optimistic approach to the problem (periodic Doppler signal + unconvincing correlation with activity -> likely planet candidate). Plus, I see the Kepler statistics on the transiting cool KOIs and I know the ice is not that thin. The problem is that the discussion is not occurring at a constructive level. One of the points of our Science comment/responses is that coincidence or correlation does not imply causality. We have well defined tools to deal with decision making (statistical tests and model comparison) to assist us on that. Statistics does not imply certainty, but at least it is methodological and provides objective criteria. If I have to overthink too much to justify a result, then something is likely amiss…

For Kapteyn (and the others), we will do additional checks, hopefully get more data, and keep moving forward. The one thing I agree on is that more data will always be welcome. Detecting these planets is hard enough and data analysis comes cheap in comparison to obtaining more data. A bit more rigour and the benefit of the doubt would be appreciated.

PS. As a side note, and given you explicitly mention it, just note that the activity indices as presented in the new Robertson et al. manuscript on Kapteyn’s Star actually favour a long period trend rather than the 140-ish day signal (just look at the periodograms). A rather sophisticated argument is built around that, and statistical objectivity is once again thrown to the recycle bin…

With ESPRESSO on the way, the potential noise floor for RV drawing closer, and the astrometry needed to resolve such issues for even nearby planets at least ten years away, are we going to see more and more disagreements over RV-based discoveries? Transit photometry is far from perfect either, yet we will be required to rely on both these techniques when TESS goes live in just two years’ time. What can be done to avoid near-weekly disagreements on existence even before we get to JWST atmospheric constraints? I ask for a solution, gentlemen.

FYI: I went to the link in this article about Peter van de Kamp where I see that the twentieth anniversary of his death is coming up on May 18.

What is really sad is that the first exoplanet around a main sequence star was found just a few months later, on October 6, 1995:

http://www.solstation.com/stars2/51pegasi.htm

At least van de Kamp got to learn about the pulsar planets discovered in 1992:

http://www2.astro.psu.edu/users/alex/pulsar_planets.htm

@Guillem Anglada-Escude May 15, 2015 at 16:11

Thank you for taking the time for your thoughtful reply to my comment.

I am not advocating the extreme view that the low-level radial velocity signatures of all extrasolar planets are suspect nor is anyone else. But when the period of the radial velocity variation is a whole number ratio of the period of stellar rotation, it raises a red flag about a planetary interpretation of that RV signal regardless of what the statistics might suggest about any obvious correlation with stellar activity. This same argument has been used before to discount a planetary interpretation in other instances including recently for a signal with a 9.2-day periodicity in RV data for GJ 3543 (N. Astudillo-Defru et al., “The HARPS search for southern extra-solar planets XXXV. Planetary systems and stellar activity of the M dwarfs GJ 3293, GJ 3341, and GJ 3543”, arXiv 1411.7048).

As for your observation about the power at longer periods in the periodograms of activity tracers for Kapteyn’s Star, yes, there are certainly peaks present at these longer periods. But Robertson et al. do state in their paper “periodograms of the activity indices also show power at very long periods, potentially indicative of a long-term trend or curvature; indeed, visual inspection of the activity series appears to show strong curvature as might be expected for a long-period cycle. However, as explained further below, we believe this is likely a spurious artifact caused by sparse sampling of the stellar rotation signal”.

Maybe this is indeed an instance where “statistical objectivity is once again thrown to the recycle bin” as you state. However, as an outside observer who has no skin in this game with either a planetary or stellar activity interpretation of the data for GJ 581, Kapteyn’s Star, GJ 667C, or what have you (as well as a scientist with a fair amount of data processing experience), I think it is safe to say that more data is required to pin down the true nature of these signals. In the meantime, I feel that some healthy skepticism is not unwarranted.

Good reply Andrew. And good questions too, which must be answered with so much ahead in the next decade. To be valid, good science must be falsifiable. We all agree on that.

Hello,

All the points raised are perfectly valid, but things are not as pretty as they appear on the surface. I am quite belligerent against papers like P. Robertson’s because they do have impact in higher level ‘policy’. Ok, some planet signals ‘might’ be rotation induced. I just don’t see the need for a paper every three months repeating the same (statements like ‘GJxxx does not exist’ do not help either). Acquiring more data for confirmation hasn’t worked well for us either. I want to share the last comment we received when we were trying to get more telescope time to improve sampling and deal with stellar activity (another star):

> Strength: The possibility of an Earth-like planet in the habitable zone
> around GJ… is tantalizing and appealing. The observing strategy appears
> adequate. Weaknesses: Such a planet is hinted at by RV measurements,
> but the result is uncertain because it relies heavily on a few high-cadence
> measurements, which could have correlated noise biasing the result.

sigh… and the orbital period is not even close to a fraction of the rotation.

You are also right in that there is much more data than what has been presented. Doppler searches of exoplanets are facing a textbook case of file drawer bias (http://en.wikipedia.org/wiki/Publication_bias ). There are thousands of HARPS public spectra (hundreds of stars, I am not bluffing) with all these uninteresting activity induced signals that remain unpublished. In my opinion, a more constructive approach would be going through these ‘uninteresting’ ones and trying to pull signals out of them (or improving those models for variability).

Skepticism driven by a constructive attitude is badly needed in this field. I’ll drink for van de Kamp and his ‘mistake’ on the 20th!

“I’ll drink for van de Kamp and his ‘mistake’ on the 20th!”

Looking forward to your anticipated news.

“I’ll drink for van de Kamp and his ‘mistake’ on the 20th!”

I am intrigued by this “hint” as well. Decades of astrometric and RV measurements have discounted the reality of van de Kamp’s purported Jupiter-size planets. Indeed, the presence of any planets orbiting Barnard’s Star with Msini values above about 1 to 10 times that of the Earth have been excluded by RV measurements in orbits with periods ranging from about one day to two years, respectively. For more details:

http://www.drewexmachina.com/2015/04/23/search-for-planets/

This was a fascinating entry, but I must ask:

It is May 21 — was anything announced on the 20th? I’ve checked sites ranging from arXiv.org to twitter, and haven’t seen any announcements related to Barnard’s star, Guillem Anglada-Escudé’s research, Peter van de Kamp’s claims, and so on.

Thank you for any updates, or at least, any further “hints”!

Hi Eric and all. Nothing to report on Barnard’s star from my side. I didn’t mean to imply we had some new results on it (unfortunately). Sorry about the confusion. I just wanted to highlight the boldness of van de Kamp, whom I deeply admire.

@Guillem Anglada May 22, 2015 at 5:27

Oh darn! I was hoping we had a scoop on some news for Barnard’s Star. And for the record, I am a long-time admirer of Peter van de Kamp as well. The planets he claimed to have found orbiting Barnard’s Star are part of the reason I got interested in the nearby stars, and they prompted me to write to Dr. van de Kamp in 1975 for more information. He kindly sent me a reprint of his review paper, “The Nearby Stars”, which I still have in my collection of research material even after forty years. While I had avidly consumed articles from periodicals like Sky & Telescope at the time, this paper was the first technical astronomical publication I had ever read. I was quite disappointed to discover when I was doing research for an astronomy project as an undergrad around 1982 that the case for these planets had unraveled.

On the Move with Barnard’s Star and 61 Cygni

By: Bob King | June 3, 2015

Stars may appear static, but they’re on the move. Put these two speed demons on your observing list this summer. When you return in a year or two, you’ll be pleasantly surprised.

Barnard’s Star would be an undistinguished red dwarf in Ophiuchus were it not for its rapid motion across the sky. It measures 1.9 times Jupiter’s diameter and lies only 6 light-years from Earth.

Last week we visited with mover-and-shaker star Arcturus in Boötes. Despite its great speed, it requires a minimum of a couple decades for us to see the orange giant shift against the more distant background stars in a telescope.

While that might make an excellent very-long-term observing project, most of us would prefer something a smidge more immediate. Fortunately, there are two stars visible this season to accommodate our wishes. The first, Barnard’s Star, is a 9.5-magnitude red dwarf in Ophiuchus, just 6 light-years from Earth. That makes it the second closest star to Earth after the Alpha Centauri system.

Discovered by American astronomer E. E. Barnard in 1916, it scoots faster across the sky than any other star in the heavens. Moving at a rate of 10.3″ per year, Barnard’s Star covers a quarter degree, or half a full Moon diameter, in a human lifetime.

Full article here:

http://www.skyandtelescope.com/observing/on-the-move-with-barnards-star-and-61-cygni06032105/