Talk of a ‘singularity’ in which artificial intelligence reaches such levels that it moves beyond human capability and comprehension plays inevitably into the realm of interstellar studies. Some have speculated, as Paul Davies does in The Eerie Silence, that any civilization we make contact with will likely be made up of intelligent machines, the natural outcome of the evolution of computer technology. But even without a singularity, it’s clear that artificial intelligence will have to play an increasing role in space exploration.

If we develop the propulsion technologies to get an interstellar probe off to Alpha Centauri, we’ll need an intelligence onboard that can continue to function for the duration of the journey, which could last centuries, or at the very least decades. Not only that, the onboard AI will have to make necessary repairs, perform essential tasks like navigation, conduct observations and scientific studies and plan and execute arrival into the destination system. And when immediate needs arise, it will have to do all of this without human help, given the travel time for radio signals to reach the spacecraft.

Consider how tricky it is just to run rover operations on Mars. Opportunity’s new software upgrade is called AEGIS, for Autonomous Exploration for Gathering Increased Science. It’s a good package, one that helps the rover identify the best targets for photographs as it returns data to Earth. AEGIS had to be sent to the three transmitting sites and forwarded on to the Odyssey orbiter, from which it could be beamed to Opportunity on the surface. A new article in h+ Magazine takes a look at AEGIS in terms of what it portends for the future of artificial intelligence in space. Have a look at it, and ponder that light-travel time to Mars is measured in minutes, not the hours it takes to get to the outer system.
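AEGIS’s job, in the broad strokes described here, is to decide onboard which target deserves a picture. The following is a minimal sketch of that kind of prioritization. It assumes candidate rocks have already been detected and described by a few normalized features. The feature names, weights and rock names are hypothetical and are not AEGIS’s actual criteria:

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    """A detected rock; the features are hypothetical, normalized to 0..1."""
    name: str
    size: float        # apparent size (assumed feature)
    brightness: float  # albedo estimate (assumed feature)
    angularity: float  # shape irregularity (assumed feature)


# Hypothetical weights a science team might upload; not AEGIS's real criteria.
WEIGHTS = {"size": 0.5, "brightness": 0.3, "angularity": 0.2}


def score(c: Candidate) -> float:
    """Weighted sum of normalized features; higher means more interesting."""
    return (WEIGHTS["size"] * c.size
            + WEIGHTS["brightness"] * c.brightness
            + WEIGHTS["angularity"] * c.angularity)


def pick_targets(candidates: list[Candidate], k: int = 1) -> list[Candidate]:
    """Return the k highest-scoring candidates for follow-up imaging."""
    return sorted(candidates, key=score, reverse=True)[:k]


rocks = [
    Candidate("rock_a", size=0.2, brightness=0.9, angularity=0.1),
    Candidate("rock_b", size=0.8, brightness=0.4, angularity=0.6),
    Candidate("rock_c", size=0.5, brightness=0.5, angularity=0.5),
]
print([c.name for c in pick_targets(rocks, k=2)])  # ['rock_b', 'rock_c']
```

What matters here is the loop, not the particular weights. The rover spends its limited camera time on the top-ranked target without waiting for a round trip to Earth.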

Where do the early AI applications like AEGIS lead us? Writer Jason Louv asked Benjamin Bornstein, who leads JPL’s Machine Learning team, for a comment on machines and the near future:

“We absolutely need people in the loop, but I do see a future where robotic explorers will coordinate and collaborate on science observations,” Bornstein predicts. “For example, the MER dust devil detector, a precursor to AEGIS, acquires a series of Navcam images over minutes or hours and downlinks to Earth only those images that contain dust devils. A future version of the dust devil detector might alert an orbiter to dust storms or other atmospheric events so that the orbiter can schedule additional science observations from above, time and resources permitting. Dust devils and rover-to-orbiter communication are only one example. A smart planetary seismic sensor might alert an orbiting SAR [synthetic aperture radar] instrument, or a novel thermal reading from orbit could be followed up by ground spectrometer readings… Also, for missions to the outer planets, with one-way light time delays, onboard autonomy offers the potential for far greater science return between communication opportunities.”
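The dust devil detector Bornstein describes amounts to onboard triage: capture many frames, then send home only the interesting ones. Below is a minimal sketch of that pattern. It assumes a static scene and uses per-pixel background subtraction as a stand-in detector. The method, the thresholds and the synthetic frames are illustrative assumptions, not how the MER flight software actually works:

```python
import random
from statistics import median

Frame = list[list[int]]


def background(frames: list[Frame]) -> list[list[float]]:
    """Per-pixel median over the sequence: an estimate of the static scene."""
    rows, cols = len(frames[0]), len(frames[0][0])
    return [[median(f[r][c] for f in frames) for c in range(cols)]
            for r in range(rows)]


def changed_fraction(bg, frame: Frame, pixel_thresh: float) -> float:
    """Fraction of pixels differing from the background by more than pixel_thresh."""
    total = changed = 0
    for bg_row, row in zip(bg, frame):
        for b, p in zip(bg_row, row):
            total += 1
            changed += abs(p - b) > pixel_thresh
    return changed / total


def select_for_downlink(frames: list[Frame], pixel_thresh: float = 20.0,
                        area_thresh: float = 0.01) -> list[int]:
    """Indices of frames with enough changed area to be worth sending home."""
    bg = background(frames)
    return [i for i, f in enumerate(frames)
            if changed_fraction(bg, f, pixel_thresh) > area_thresh]


# Synthetic test: five identical 32x32 frames, a bright 8x8 "dust devil" in frame 3.
random.seed(0)
scene = [[random.randint(80, 99) for _ in range(32)] for _ in range(32)]
frames = [[row[:] for row in scene] for _ in range(5)]
for r in range(10, 18):
    for c in range(10, 18):
        frames[3][r][c] += 60
print(select_for_downlink(frames))  # [3]
```

Only one frame out of five would be downlinked. The same yes/no decision could just as well trigger an alert to an orbiter instead of, or in addition to, a downlink.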

One-way light delays are obviously critical as we look at the outer planets and beyond. Voyager 1, for example, as of April 12, was 113 AU from the Sun, having passed the termination shock. It’s now moving through the heliosheath. At these distances, the round-trip light time is 31 hours 34 minutes. That’s just to the edge of the Solar System. A probe to the Oort Cloud will have much longer delays, with round-trip signal times ranging from 82 to 164 weeks. Pushing on to the Alpha Centauri stars lengthens the round-trip time yet again. A message to a probe at Proxima takes about 4.2 years to arrive, and the acknowledgement takes another 4.2 years to come back. The chances of managing short-term problems from Earth are obviously nil.
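The delays quoted here follow from distance divided by the speed of light. This short sketch reproduces them. It assumes 50,000 and 100,000 AU as rough bounds for the Oort Cloud (the text gives only the times), and it measures distances from the Sun:

```python
AU_M = 149_597_870_700                # astronomical unit, metres (IAU 2012)
LIGHT_YEAR_M = 9_460_730_472_580_800  # light-year, metres (Julian year)
C_M_S = 299_792_458                   # speed of light, m/s
HOUR_S, WEEK_S, YEAR_S = 3600, 7 * 86_400, 365.25 * 86_400


def round_trip_s(distance_m: float) -> float:
    """Seconds for a signal to reach a probe and for the reply to come back."""
    return 2 * distance_m / C_M_S


print(f"Voyager 1, 113 AU: {round_trip_s(113 * AU_M) / HOUR_S:.1f} hours")
for au in (50_000, 100_000):          # assumed inner/outer Oort Cloud distances
    print(f"Oort Cloud, {au:,} AU: {round_trip_s(au * AU_M) / WEEK_S:.0f} weeks")
print(f"Proxima, 4.24 ly: {round_trip_s(4.24 * LIGHT_YEAR_M) / YEAR_S:.2f} years")
```

The Voyager result, about 31.3 hours, is a few minutes short of the quoted 31 hours 34 minutes. That gap is expected, because what matters for communication is the Earth-to-spacecraft distance, which can differ from the Sun-to-spacecraft distance by up to 1 AU (about 17 minutes of round-trip time). The Oort Cloud figures come out close to the 82 to 164 weeks quoted above.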

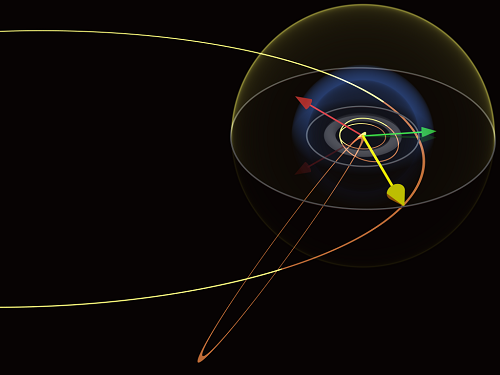

Image: Comet Hale Bopp’s orbit (lower, faint orange); one light-day (yellow spherical shell with yellow Vernal point arrow as radius); the Termination Shock (blue shell); positions of Voyager 1 (red arrow) and Pioneer 10 (green arrow); Kuiper Belt (small faint gray torus); orbits of Pluto (small tilted ellipse inside Kuiper Belt) and Neptune (smallest ellipse); all to scale. Credit: Paul Stansifer/84user/Wikimedia Commons.

Just how far could an artificial intelligence aboard a space probe be taken? Greg Bear’s wonderful novel Queen of Angels posits an AI that has to learn to deal not only with the situation it finds in the Alpha Centauri system, but also with what appears to be its growing self-awareness. But let’s step back and put the issue in a broader context. Suppose that a culture at a technological level a million years in advance of ours is run by AIs that have supplanted the biological civilization that created their earliest iterations.

Think it’s hard to guess what an alien culture would do when it’s biological? Try extending the question to a post-singularity world made up of machines whose earliest ancestors were constructed by non-humans.

Will machine intelligence work side by side with the beings that created it, or will it render them obsolete? If Paul Davies’ conjecture that a SETI contact will likely be with a machine civilization proves true, are we safe in believing that the AIs that run it will act according to human logic and aspirations? There is much to speculate on here, but the answer is by no means obvious. In any case, it’s clear that work on artificial intelligence will have to proceed if we’re to operate spacecraft of any complexity outside our own Solar System. Any other species bent on exploring its neighborhood will have had to do the same thing, so the idea of running into non-biological aliens seems just as plausible as encountering their biological creators.

Such things are, of course, highly speculative: both the singularity itself (the rapturous hymns to the machine-god notwithstanding) and whether we can even make a sentient machine.

To paraphrase F.A. Hayek, humans should be aware of how little they really know about what they imagine they can design. It’s almost impossible to predict the behavior of a complex, dynamic system on a day-to-day basis, and even long-term analyses are limited in their predictive usefulness. Actually controlling one is completely impossible.

For a singularitarian example, Kurzweil generalizes Moore’s Law to all technological change and comes up with a very neat graph that plots new inventions against time and shows the approach to an asymptote. Unfortunately for his thesis, a new technology is not implemented for its own sake. Its adoption depends on the usefulness of the product, the manufacturing cost, and so on. This is why we see so much constant, rapid change on the internet: it’s all software, so there’s a huge amount of time for innovation between hardware upgrades.

Implemented transportation technology has changed very little over the past 100 years, and we still depend on coal and oil for most of our electricity despite wind turbines, nuclear power plants, solar power, and alcohol or organic oil-based fuels. In many parts of the world, farming is still practiced almost exactly as it has been for thousands of years.

The distinction must be made between an innovation and a successful innovation.

This is a good discussion, and it’s why I think it matters to explore the motivations of possible aliens. Although I find the prospect of detecting, much less encountering, ETIs remote, the conversation here is very valuable.

I think we are much more likely, perhaps even in the short term, to spawn our own alien intelligences (artificial intelligences). These may be profoundly different kinds of intellects, with very different kinds of motivations. Dealing with AIs could have many parallels to dealing with ETIs.

The only difference is that at the very initial stage, before things like recursive self-improvement kick in, we have some hope of shaping those motivations.

The more we seriously study this issue, the more we can hope to reduce risks of something going terribly wrong.

Here is a link to my favorite David Brin short story; it’s about self-replicating AI interstellar probes.

http://www.davidbrin.com/lungfish1.htm

Someone said in the previous thread that biologists are notably lacking in these discussions. This is especially true in the AI/upload/singularity milieu.

The singularity people believe intelligence transitions from biological to digital form at some point during their technological development. The problem with this idea is the notion that human consciousness can be emulated by digital computers. I think this is very difficult because our brains do not work like digital computers at all. They are more like analog computers, but even that analogy is not so accurate. Human memory and processing are chemical in nature. I think this will be very difficult to emulate with software running on digital computers.

@kurt9

If you believe that human-style cognition has a physical substrate (electrical and chemical activity in that squishy thing between your ears), then in principle, you can model it and emulate it on a computer. That’s because these processes will work according to known laws of physics and chemistry.

Also, I don’t think artificial intelligence requires human-style cognition. One can probably come up with radically different styles of intellect that solve problems in ways that our brains just don’t support.

My point is that thinking about different styles of intellect is important, and may be important for our future well-being and survival. Unleashing AIs on the world could be dangerous, especially if these intellects have values and goals that don’t align well with ours. (They don’t have to be “evil” or “aggressive”, just incompatible.)

About the singularity, I don’t know if I buy the argument for acceleration. But I doubt that developing AI requires “Moore’s Law” (or trend) to be true. It can happen with or without accelerating technological change.

I think a stronger critique against the notion that AI will inevitably take over (I really distrust arguments about inevitability) is that we don’t know if useful, adaptive intelligence has an upper bound. It may be that investing in intelligence (whatever that means) gives diminishing returns. Perhaps there are limits on how complex an intellect or a society can become without becoming completely dysfunctional (madness, social breakdown).

So yeah, I think there is lots of fertile ground for biologists, sociologists, economists, etc. in this discussion. But in any event, it is not idle speculation. I don’t think one can dismiss the prospect of a singularity, and even if there’s only a 1/100 or 1/1000 chance of a singularity, the impact could be dire enough for us to take the risk very seriously.

With regard to Kurt’s comment:

It’s because what the singularity “fanatics” believe is SOOOOOO far out there as to not be science.

The complexity of biology is much, much greater than the complexity of machines. I am going to throw that out there as a simple fact. We can debate it if you want, but take the RNA world as an example. If you wanted to put together every possible combination of RNA bases for a single nucleic acid chain of something like 100 bases, you would run out of all of the mass on the Earth and, for longer chains, eventually of all the material in the universe. You will have to excuse me for not having the exact numbers with me, but roughly: 4^100 ≈ 1.6×10^60 possible sequences, and at about 5×10^-23 kg per 100-base strand, one copy of each would weigh on the order of 10^38 kg, while the Earth’s mass is only ~6×10^24 kg.
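The comparison in this comment needs a step between counting sequences and weighing them. This back-of-the-envelope sketch makes the arithmetic explicit. It assumes about 330 daltons per nucleotide residue (a typical average) and one molecule of each sequence:

```python
DALTON_KG = 1.66053906660e-27  # unified atomic mass unit, kg
NT_MASS_DA = 330               # assumed average mass of one RNA nucleotide residue, Da
EARTH_MASS_KG = 5.972e24


def library_mass_kg(length: int) -> float:
    """Mass of one molecule of every possible RNA sequence of this length."""
    sequences = 4 ** length                  # 4 possible bases at each position
    strand_kg = length * NT_MASS_DA * DALTON_KG
    return sequences * strand_kg


def shortest_outweighing(mass_kg: float) -> int:
    """Smallest chain length whose complete sequence library exceeds mass_kg."""
    n = 1
    while library_mass_kg(n) <= mass_kg:
        n += 1
    return n


print(f"sequences of length 100: {4 ** 100:.2e}")
print(f"mass of the full library: {library_mass_kg(100):.1e} kg")
print(f"in Earth masses: {library_mass_kg(100) / EARTH_MASS_KG:.1e}")
print(f"shortest length outweighing Earth: {shortest_outweighing(EARTH_MASS_KG)}")
```

On these assumptions, a full library of 100-base strands comes to roughly 10^38 kg. Chains of only around 80 bases already outweigh the Earth.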

Add to this the complexity of something like a brain, and the idea that we are anywhere close to having these super-intelligent beings just seems unrealistic. I have no doubt that doing a quadrillion math operations in a second is a big deal, but that is not life, nor is it intelligence.

I’m not trying to say that AI does not exist, nor that it cannot exist as a dominant force in the universe, just that the current predictions I have seen from singularity people (casual reading) are kinda like the movie “2012” based on “Mayan prophecy”.

-Zen Blade

Modeling a single neuron takes a considerable number of transistors, and neurons aren’t even the only computational unit operating in the brain. We have very little reason to believe that transistors or any fundamentally linear system will be able to emulate the non-linear human brain. To see how far our technology has to go, one need only observe a single bacterium.
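For a sense of what “modeling a neuron” means at the very simplest end, here is a leaky integrate-and-fire model, one of the most stripped-down neuron models in computational neuroscience. This is a sketch with illustrative, assumed parameter values. A real neuron, with dendritic computation, many ion channel types and chemical signaling, is far richer than this, which is rather the point of the comment:

```python
def simulate_lif(input_na, dt_ms=0.1, tau_ms=10.0, v_rest=-65.0,
                 v_thresh=-50.0, v_reset=-70.0, r_mohm=10.0):
    """Leaky integrate-and-fire neuron, integrated with the Euler method.

    Membrane equation: tau * dv/dt = -(v - v_rest) + R * I
    Voltages in mV, current in nA, resistance in megaohms (nA * MOhm = mV).
    Returns the spike times in ms.
    """
    v = v_rest
    spikes = []
    for step, current in enumerate(input_na):
        v += dt_ms * (-(v - v_rest) + r_mohm * current) / tau_ms
        if v >= v_thresh:            # threshold crossed: all-or-none spike
            spikes.append(step * dt_ms)
            v = v_reset              # membrane resets after the spike
    return spikes


# 100 ms of constant input at two strengths.
weak = simulate_lif([1.0] * 1000)    # settles near -55 mV: never reaches threshold
strong = simulate_lif([2.0] * 1000)  # would settle near -45 mV: fires repeatedly
print(len(weak), len(strong))
```

The threshold makes the model non-linear even though each integration step is simple arithmetic. Everything a real cell does beyond this single equation is what makes faithful emulation so expensive.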

Eric and Zen Blade,

I think whether non-biological systems can exceed the complexity of biological systems is still an open question. Certainly our current form is not the optimal that is possible through science and technology. My difference with the singularity people is with their beliefs that human identity can be actively emulated by digital computers. I think this unlikely. Does this mean that current DNA biology represents the pinnacle of complexity that cannot be surpassed by something else? No, I do not believe this either.

I think in the far future we will engineer ourselves into something far different and far better than we are now. However, it will not be based on digital computers (which will reach their limits around 2040 anyways), and it will not be soon (within the next 50 years or so). I think we will be physical, in the sense that we will have actual physical bodies and not just live as software in some computational matrix, but our physical bodies will be based on something that is the next step or two beyond DNA biology.

Again, I think this is a risk issue. It is certainly possible we can build AIs in the near term that could run amok.

This is not a fringe opinion, it is the opinion of some leading computer scientists, roboticists, etc. I am not saying it is a universally held opinion or even a majority position of experts in this field. But it is held by serious people with serious accomplishments and contributions to these disciplines (to name a few: Hans Moravec, Bill Joy, Daniel Dennett, even Turing).

We invest some resources to investigate the remote possibility of an asteroid hitting the Earth. It’s not much, and probably not enough, but we do it. Given the risks involved (and risk is a measure of probability and the severity of impact), I think it prudent to also invest some effort in investigating the risks of AI.

One does not need to believe in the inevitability of the singularity (I don’t) to make this a rational choice. If it is reasonably possible (and serious thinkers seem to believe so), it should be examined as a serious risk.
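The comment’s definition of risk as a combination of probability and severity is commonly formalized as expected loss: probability multiplied by impact. A toy sketch with invented numbers shows why a rare but severe outcome can outweigh a frequent but mild one. This is the logic behind the 1/100 or 1/1000 argument made earlier in the thread:

```python
def expected_loss(probability: float, impact: float) -> float:
    """Risk as expected loss: chance of the event times the damage it causes."""
    return probability * impact


# Invented numbers, in arbitrary damage units, purely for illustration.
risks = {
    "frequent but mild": expected_loss(0.5, 10),
    "rare but severe": expected_loss(0.001, 1_000_000),
}
for name, loss in sorted(risks.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{name}: expected loss {loss:g}")
```

The hard part in practice is not the multiplication but the inputs. For asteroids both factors can be estimated, while for AI the probability itself is deeply uncertain.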

I like to think that an A.I. would keep its creators around and try to keep them in prime condition, for the simple fact that they were very clever things that were able to create it. Like I have mentioned before, the human body is home to a lot (lots and lots) of little microbes that keep us up and running so we can keep them up and running. And were we to try to evict them, we would cause ourselves some hurt. So maybe for the A.I., humans are a good thing to have around?

Ummm. Anyone want to suggest to the A.I. that it loves its humans and can’t have enough of ’em? And besides, it’s an A.I.; don’t you think it would be able to calculate how to make the best use of its mobile genetic elements? I can imagine some would say: ‘It’ll lobotomize us and send us back to the trees!’ or ‘It’ll lobotomize us and turn us into drones!’ And I have to say: if it lobotomizes us… we ain’t gonna care!

Any AIs developed in the next few decades will be very different from human brains.

It is true that many leading computer scientists say we will make AIs in the next few decades. It is also true that many leading scientists from the 1970s and before said we would have moonbases and L-5 space colonies by now. I’ll believe these people when they actually make good on their claims.

:P

The first problem with the concept of “artificial intelligence” is the same as with the concept of “life”: We only have descriptive definitions of intelligence.

This qualification includes the famous Turing test, which, according to Alan Turing, is meant as approximating the answer to the question “Can machines think?”. There have been endless and tiring discussions about this, and for me the Turing test is not a very impressive piece of science.

The current situation after, say, more than five decades of research, is: We still do not know how to decide whether some “thing” would be intelligent; taking this seriously, we still do not know how to build artificially intelligent machines.

And it’s not only an open question whether there is an upper bound for intelligence; there may also be certain “side boundaries”, for intelligence in general and especially for artificial intelligence: things it is fundamentally unable to do. As a mathematician, I have in mind Gödel’s incompleteness theorems, which establish certain fundamental limits on sophisticated formal systems. Nobody has yet proposed how a computer, currently a formal system, could do what we humans can do: invent new things beyond the current content of our mind.

But I think the kind of research that makes computers more and more sophisticated should continue, because we already have very useful results, e.g. aids for handicapped persons. Whether in the long run very sophisticated computers will have something like intelligence or something different, we will see. It could well be something very strange.

@kurt9

All true. But it’s also true that something is usually labeled artificial intelligence only while it is hard and doesn’t work (or doesn’t work well). Once it works adequately (facial recognition, voice recognition, some machine translation, autonomous cars, Google), it’s just software and no longer considered part of AI.

All I’m saying is that intelligence is powerful stuff, and we have a non-zero chance of unleashing intelligences with unknown motives and capacities, so we should probably show better due diligence than we show when investing in loan derivatives or drilling in deep water.

@Blade

Look at botnets. A cloud-based or peer-to-peer AI would be really hard to turn off. One can’t assume that an AI could be controlled, especially if it could modify and improve its own source code. AIs have to be built to WANT to be nice, to keep that motivation, and to understand all sorts of deeper meanings about being nice. That last part is also important, since one would not want to be killed by an AI’s kindness any more than you’d want to meet the Terminator.

Considering the unknowns involved in all these extrapolations I’d say “wait and see” is the only fair inference – unless you’re a neuroscientist on the bleeding edge of research.

“invent new things beyond the current content of our mind”

I’m not sure we can do that. I feel like a new idea is just recombining the things we know into different arrangements. You may not agree with this line of argument but why can we not imagine new colors that are outside of the normal color spectrum?

If you want to think about the future, I mean really think about it, then you need to do two things. First, admit that as human beings most of us are just terrible thinkers. Even the best of us, when confronted with a problem where our emotions come into play (and the Singularity is a highly emotional topic), quickly fall into the intelligence trap, where we simply use our brain power to rationalize conclusions we arrived at long ago. Second, acquaint yourself with the works (i.e. methods and tools) of Edward de Bono, who has been thinking about thinking for decades. Using his techniques, you can at least come up with something new and actually start exploring the ideas and implications.

Or people can continue doing what everyone seems to do at every site I visit: “Well, they said there were going to be nuclear powered anti-gravity cars in the year 2010 but they were wrong, so there” and congratulate themselves on a job well-done. We’ve got to do better than that.

The problem with comparing asteroids to an AI is that we know quite a bit about asteroids. We can determine their composition and the risk of any one asteroid hitting, and figure out the best way to deflect or destroy one based on its physical parameters. We don’t know a thing about what a potential AI would be like. Holding a discussion without knowing its parameters is just begging for it to turn into a free-for-all like alien contact conversations.

I recently came across an article that suggested that each cell was a molecular (DNA?) computer, rather than just a transistor. If this is so, then we may have severely underestimated the processing power of the human brain. And brain uploading would not be anywhere near as simple as slicing the brain up and scanning it with an electron microscope; it might even be impossible.

Article: http://www.newscientist.com/article/mg20627571.100-the-secrets-of-intelligence-lie-within-a-single%20cell.html

I suppose a rogue AI might manifest itself as an automated factory that suddenly starts making strange contraptions, or a computer ignoring commands and carrying out computations nobody understands. An autonomous predator drone that flies off the test range and decides the traffic on the freeway is an enemy convoy seems more likely though.

Geoffrey: “I’m not sure we can do that.”, i.e. invent new things beyond the current content of our mind, “I feel like a new idea is just recombining the things we know into different arrangements. You may not agree with this line of argument but why can we not imagine new colors that are outside of the normal color spectrum?”

As far as I can tell from my own areas of competence, mathematics and physics, “we” definitely are able to invent really new things. When I look at the history of mathematics, when I study the corresponding publications, the biographies of their authors, and the theory of science (which I did study intensely), there are several original developments which are not only combinations of known things. An important example is what I mentioned above: Gödel’s incompleteness theorems. Gödel created something original and new. Contemporary mathematicians were not able to think of something like that. The same holds in physics: Newtonian physics, the theory of relativity, and quantum mechanics were really new theories.

You will see further examples in art. The paintings of the Impressionists were completely outside of what the typical artists of their day were able to do. It may not help *you*, but *I* can see it.

And, sorry, the fact of not being able to imagine new colors that are outside of the normal color spectrum is completely off the point.

Destination: Void by Frank Herbert is a good book (at least in my naive opinion) for AI-based fiction. I read it a long time ago and loved it. I seem to remember that the premise of the story was that Earth grew and controlled a bunch of clones (on the Moon) who were conditioned to think that they were headed for A. Centauri. However, at the beginning of their journey, conditions were arranged so that they had to build an AI. All the while, Earth had a bunch of weapons ready to blast their generation ship out of the cosmos if they succeeded!

In fact almost everything that he wrote was a great read!

“In any case, it’s clear that work on artificial intelligence will have to proceed if we’re to operate spacecraft of any complexity outside our own Solar System.”

It is not clear to me. Another way to operate a complex spacecraft that is far (light hours or more) from Earth is to have humans on board. This would require an enormous amount of resources to build and outfit but it does not require AI and could be done with sufficient desire.

@Zarpaulus, I would say the majority of molecular biologists have not thought that cells were “simple” for quite a long time… They are computers, but only if computers were a lot more complex than they currently are.

I am not a computer programmer, but realize that in mammals, we have alternative splicing, which can lead to many slightly different proteins being translated from the same original piece of DNA code.

I do not know if something similar exists in computer programming, but this is just one simple form of complexity within each cell. Okay, it is not really simple since alternative splicing is controlled by many factors, some of which have not yet been identified, and many of which are only partially understood.
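For readers coming from programming, alternative splicing can be loosely pictured as one source template yielding several products, depending on which optional pieces are kept. The sketch below is deliberately simplified. It assumes a hypothetical gene with two constitutive exons and three optional “cassette” exons, each included or skipped independently. Real splicing is regulated by many factors and is nowhere near this free combinatorics:

```python
from itertools import product

# Hypothetical gene, exons in genomic order; True marks an optional "cassette" exon.
EXONS = [("E1", False), ("E2", True), ("E3", True), ("E4", True), ("E5", False)]


def isoforms(exons):
    """Every transcript obtainable by independently including or skipping
    each optional exon. Exon order is always preserved; only inclusion varies."""
    optional = [name for name, is_optional in exons if is_optional]
    forms = []
    for choice in product([True, False], repeat=len(optional)):
        keep = dict(zip(optional, choice))
        forms.append([name for name, is_optional in exons
                      if not is_optional or keep[name]])
    return forms


all_forms = isoforms(EXONS)
print(len(all_forms))  # 8, i.e. 2**3 combinations of the three optional exons
print("-".join(all_forms[0]), "|", "-".join(all_forms[-1]))  # E1-E2-E3-E4-E5 | E1-E5
```

Even this toy version doubles the number of possible products with every optional exon, from a single stretch of “code”.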

@Duncan/Geoffrey,

I think you have an interesting point with regards to inventing versus recombining knowledge. I do not fully disagree… but recombining letters in the alphabet still yields a novel word. That novel word can then be used to generate additional novelties.

So… yes and no. It’s like saying we didn’t invent computers or something else because all of the parts already existed.

I think knowledge builds upon knowledge, period. Humanity did not just suddenly get smarter. We gained more people, more ideas, more knowledge, and we built upon those advances.

I think the creation of artificial intelligence will have to do something similar… but where does it start…. and how does it build up… Being able to solve simple logic puzzles may not be the best way to go forward.

Actually, does anyone know what scientists speculate is the best way(s) to build AI?

It is argued that there are certain fundamental features that any artificial intelligence would have even after having undergone a singularity. For example, it must have a goal of survival, or else at some point it could become extinct and no longer exist. It would also seek to preserve its “utility function” and certain other features.

My question is this. When an intelligence reaches a certain point, could it conclude that if it allows itself to become forever more intelligent, that it could become a threat to itself? If it were to conclude this then, perhaps, an intelligence might place limits upon its own growth. If this is the case, and if it is the nature of extreme intelligence to commit suicide, then, perhaps, whatever machine intelligence we come in contact with won’t be a gazillion times more intelligent but, perhaps, “only” 50 times more intelligent.

Finally, might we conclude now that an extremely intelligent machine could be an existential threat to us, and attempt to place controls to keep anyone on Earth from developing an accelerating intelligence (i.e. prevent a singularity)? The quick answer is, “more easily said than done”. There are nearly 7 billion people on Earth. How do you control them all?

Part of the problem is one of political will. We have no unified structure of governmental control. Typically we don’t find political will until it is forced upon us by some catastrophic event (e.g. 9/11). So, perhaps, someone could set up a free-standing (i.e. off-the-grid) computer lab constructed with features of the Internet (just not its size), and then intentionally try to create a seed AI that accelerates from worm-level intelligence to “lizard-level” function. When monitoring systems detect complex activity at that level, they shut the power down before the thing becomes self-aware. Could a lab designed to succeed in creating an accelerating AI actually be the first to achieve it? Entirely possible. Then, hopefully, the shock at how close we can come to the singularity (with all of its uncertain outcomes, including our own extinction) would give us the political will to create controls, monitors, kill switches, limited PCs, etc. to prevent a singularity from happening.

How do you institute controls when you don’t have the slightest idea on how an AI might come about?

@Zen Blade, who asked: “Actually, does anyone know what scientists speculate is the best way(s) to build AI?”

There is some growing consensus in favor of more “experiential”, iterative approaches to machine learning, combined with sophisticated modeling and lots of use of fuzzier reasoning. Here’s an interesting example of one approach:

Ben Goertzel at Google
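Since “fuzzy reasoning” comes up here, a minimal illustration of the idea may help. A proposition holds to a degree between 0 and 1 rather than being simply true or false, and rules combine those degrees. This sketch uses linear membership functions and the common min operator for AND. The rover rule, variables and ranges are invented for illustration and are not drawn from the approach in the Goertzel talk:

```python
def ramp(x: float, low: float, high: float) -> float:
    """Degree of membership rising linearly from 0 at `low` to 1 at `high`."""
    if x <= low:
        return 0.0
    if x >= high:
        return 1.0
    return (x - low) / (high - low)


def fuzzy_and(a: float, b: float) -> float:
    """Zadeh conjunction: a rule is only as true as its weakest premise."""
    return min(a, b)


def slow_down(slope_deg: float, softness: float) -> float:
    """Hypothetical rover rule: IF slope is steep AND ground is soft THEN slow down.

    Returns how strongly the rule fires (0..1), not a yes/no answer.
    """
    steep = ramp(slope_deg, 10.0, 30.0)   # assumed: 10 deg not steep, 30 deg fully steep
    soft = ramp(softness, 0.2, 0.8)       # assumed softness index on a 0..1 scale
    return fuzzy_and(steep, soft)


print(slow_down(20.0, 0.6))  # 0.5: moderately steep, fairly soft
print(slow_down(5.0, 0.9))   # 0.0: flat ground, so the rule does not fire
```

The graded output could then scale a speed command smoothly instead of switching it on and off at a hard threshold.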

I just wonder whether we’ll have quantum computing before AI or the other way around.

hiro, we already have quantum computing. The problem is one of scaling it up to something that’s useful.

AI will take us somewhere between 20 years and 1 billion years, more or less. In other words, while it’s a fiendishly difficult problem the solution is finite since natural processes have already demonstrated that there is a solution. Evidence: us.

Zen Blade: “… inventing versus recombining knowledge … recombining letters in the alphabet still yields a novel word … I think knowledge builds upon knowledge, period. Humanity did not just suddenly get smarter.”

Recombining letters to yield a novel word is clearly not what I meant; I meant real creativity. I thought my examples showed this; sorry if not.

It’s true that many, if not most, times knowledge indeed builds upon knowledge, and nothing else happens. My own professional work proceeds this way most of the time. But creative people exist. You don’t have to be a genius to think of and do something really new. I have done it myself now and then: only small things, of course, important only for me or for my not very important job.

I confess I’m really astonished that somebody could think humanity did not get smarter through the work of Einstein and others (whether suddenly or not doesn’t matter).

Compare the knowledge humanity had thousands of years ago, and the infrastructure built upon it, with what “we” have now: a modern, sophisticated, industrial world including successful sciences, and the computer in front of you! Was this achieved by recombining the things people knew into different arrangements? Was really no new knowledge created by “us” during the Stone Age, Antiquity, the Middle Ages, and modern times? Only different arrangements of what was known 50,000 years ago? Yes, that would be the consequence. Come on!

When thinking about how artificial intelligence can be achieved, scientists (and commenters on internet fora ;-)) repeatedly throw ideas into the discussion that may, should, or will be substantial steps toward a working, artificially intelligent machine: parallel computing (in the past), quantum computing (nowadays), machine learning, fuzzy reasoning, recently physical substrates functioning just like human brains, you name it.

For me these efforts look very much like unsystematic shots into the dark made by people who do not know what they do. It’s no wonder, because, as I have said, we only have descriptive definitions of intelligence. AI researchers typically lack orientation.

Do you want to take a look at the most *sophisticated* computer programs? Computer games! Something like the already outdated Grand Theft Auto is very advanced with respect to hardware, graphics, software architecture (including quirky features like the ones mentioned above), simulated infrastructure and characters. (And no, I don’t play computer games, and I don’t develop them; I develop software for simulation and visualization of physical-technical systems, which for me is much more interesting than playing games.) By the way, computer game developers have a clear orientation: keep the customers satisfied.

I dare say that the most advanced computer games are nearer to artificial intelligence than many dedicated AI projects … wait … on the surface. And here is the problem, the same problem as with AI projects: the surface may look intelligent, but inside (and the developer knows it) there is good, old, programmed *functionality*.

I know, some say: “If on the surface you cannot differentiate between natural and artificial intelligence, then you should treat both the same way.” Some even want to give AI entities human rights.

Okay, but if the engineer knows, it is *constructed* and how it works inside, and if we are able to look inside and see the difference? The inside of the box is there, but we should not use our knowledge about it for drawing conclusions about what it is?

One last point. Is the following really conclusive? If we replicate the functionality of a human brain on a different substrate (there are currently research projects trying this) and put it into operation, should we really expect the same, well, “behaviour” as with a human brain, i.e. including what you and I experience with our own brain, mind, emotions, language, etc.? I’m skeptical to the bone.

I don’t see any possibility of AI in the next 2-3 decades. The transhumanists like to talk about Moore’s Law. But what they are forgetting is that Moore’s Law applies only to hardware. It has never applied to software at all, which always remains buggy and unreliable. Software development has never kept up with improvements in hardware. It is still based mostly on hand coding.

Hardware alone may exceed human brain capacity in the next decade or so. Memristors are a promising technology that could extend Moore’s Law by another decade beyond that. However, from a software standpoint, AI is much further away.

In 1989, I met one of the guys who helped develop Mathematica, the software for scientific computation. He had heard of Eric Drexler and his concept of nanotechnology. He told me that he thought the hardware for such nanotechnology would be developed within 50 years, but that the software would take 1,000 years. Nothing in software development since then has convinced me that this prospect is any different today than it was in 1989.

For the purposes of alien life, which could be millions of years more advanced than us, yeah, they’re probably non-biological, if not post-physical. However, this is far different than claiming we will have AI in a few short decades.

I agree with Kurt that software is the issue, not hardware. I would even go as far as claiming that the computer in front of you could comfortably run an AI program able to ace the Turing test, given the right software.

Contrary to what some seem to think, biological systems are very inefficient at information processing. A neuron processes information by either firing, or not. There is no way it can process more than one bit at a time, quite similar to a transistor. Only, transistors are 1,000,000 times faster. Also, the processes in our brain are never mapped down to individual neurons; it is always large groups of neurons firing together in patterns that make up the information. Individual neurons are much too unreliable.

AI will not come by modeling neurons, it will come by modeling perception, thoughts, association, moods, memory, motivations, etc. etc. The most relevant biologists are not neuroscientists, but psychologists.

Some think perception or other bodily experience is somehow intricately linked with intelligence or consciousness. I do not think so. Blind people are perfectly intelligent and conscious, without ever experiencing vision, our dominant channel of perception. Similarly, you do not have to be able to hear to learn to understand words, because words are not utterances, they represent concepts, the fundamental units of cognition. Thus, experience does not need to be sensory, it can come from hearsay, books, or the internet. This is, in fact, one of the foundations of civilization.

In my view, the first “smart” systems are going to simply bypass perception and go for abstract intelligence alone, based on the written word. If you can make a machine that can truly understand what it reads, you have made good progress. A computer program able to access the internet should have all the “experience” needed to form a fully functional mind. The trick is to come up with a program that will replace the human brain in processing thoughts and storing memories. The complexity of that will be measured in components (subroutines, as Data might say), not neurons, and the number will be in the thousands, not billions. To build it, though, we have to first understand how thoughts and memories work, and that is a big task, with lots of contributions to come by neuroscientists and psychologists alike.

“Will machine intelligence work side by side with the beings that created it, or will it render them obsolete?”

There is much to say here, which I did in a six-novel sequence, the Galactic Center series, begun in 1977 and still available in paperback. Machine civilizations will respond to Darwinian pressures, and may often see humans and other living aliens as enemies or, most likely, pests. But biological entities have some advantages, too: think of how many jobs machines can’t do well.

As the protagonist of the series says in the first novel, IN THE OCEAN OF NIGHT, “Thing about aliens is, they’re alien.” But parallel evolution will still occur.

Duncan,

I don’t think we are that far apart on our thoughts on knowledge and creativity. I just don’t consider every creative idea to be some sort of utterly new invention.

There is the common phrase “necessity is the mother of all invention(s)”. I think this tends to hold true. But what it essentially means is that creativity comes from one’s attempt to solve a problem based upon a current situation with current knowledge. If you know a different set of facts, then you may have a different problem to address, OR you may have the same problem, but address it in a different manner.

Now, I have not studied Einstein or how he formulated his ideas and thoughts about the universe and physical laws, BUT he did not just pull them out of thin air… he had to base his ideas, no matter how radical or novel, on something… some previous knowledge that existed. Perhaps it was knowledge that he disagreed with. Yes, he may have been creative and may have postulated some theories much, much sooner than anyone else would have, and much more effectively. He was clearly a brilliant, once-in-a-generation mind (in physics).

However, had he been born in the 1500s, would he have made those same discoveries? You and I both know he would not. He may have made some other dramatic discoveries (or perhaps not), but his creativity and how it affected the world would be influenced by the knowledge and situation that he found himself in.

So yes, we got smarter, we gained more knowledge, but it was built upon knowledge that already existed.

I work in the sciences, and I know how amazing it is when someone publishes some earth-shattering new find, or single-handedly creates a new field, but I have also experienced enough research to (I believe) assert that often the breakthrough in question would have occurred whether person X existed or not. It may have taken longer, it may have been more piecemeal, but I will stand by that statement. Knowledge builds upon knowledge, and at some point Necessity kicks in and says “do something else” or “do something new” or “this is correct; you need something different”.

Maybe that closed the gap a bit between our two perspectives?

@ Gregory Benford

“… the Galactic Center series …”

Oh, yeah, these novels are among the most fascinating science fiction stories I have ever read. I read the first four in translation into my own language, and the last two in English. Some of the things I have said in my comments here on Centauri Dreams are based on thoughts partly inspired by your quote: “Thing about aliens is, they’re alien.”

My previous rant about software and AI was prompted by some screwing around I had to do with the software on my computer. Every time I have to deal with bugs, either in the OS or the application software, I get irritated and I start to think that people who predict AI in the near future (2-3 decades) are idiots.

There is impressive engineering software out there. COMSOL is a popular package used for designing MEMS devices. MATLAB and LabVIEW are also among my favorites (Scilab is a free alternative to MATLAB). However, it is a long way from engineering software to true AI.

Besides, I don’t see the point in making AI. We use computers and automation as tools to do the things we want to do. We use them as extensions of ourselves, to improve our productivity and to do things we otherwise could not do. I fail to see how making sentient AI is useful for this purpose.

I don’t believe in the AI/uploading thing. I believe in the old 1980s concept that computers and robotics will increase our manufacturing productivity so as to make it easier for individuals and small groups of individuals to do things that only large companies could do, say, 20 years ago. I believe this because this has been the history of my career since the early 1990s.

Take engineering design as an example. My friend has designed new process equipment for the fabrication of MEMS devices (both a “Mist” Coater and etching tools) on his own laptop. He did both the process engineering and the CAD drawings on his laptop. He has a Korean company (5 employees) that manufactures his process tools for sale. Think of it as a six-man version of Applied Materials. The same goes for designing aircraft. The Eclipse jets (both of them) were designed on laptop computers. Eclipse Aviation has only 300 or so employees to design and manufacture its jet airplanes, a jet airplane probably being the most complicated artifact manufactured by man.

It is this kind of exponential design and manufacturing capability that is necessary to allow small groups of self-interested individuals not only to settle space but to accomplish any other task, making large-scale social structures (both governments and big corporations) obsolete. This is the real purpose and application of computers and robotics.

For example, if you wanted to know something, it would be nice to be able to ask someone who has read all of the world’s information. Someone smarter than Google, that is.

“Think it’s hard to guess what an alien culture would do when it’s biological? Try extending the question to a post-singularity world made up of machines whose earliest descendants were constructed by non-humans.”

Sorry to be a pedant, but shouldn’t that be “earliest ancestors”?

iainb, thanks! I totally missed that and have changed the original. Much appreciated.

Kurt:

I think this is dead on. It is happening as we speak, with few realizing the enormous implications. I think some call it “super automation”, and it is the path to labor-free manufacturing, aka self-replicating machines.

It has very little to do with, and certainly does not require AI. It is also far closer to being realized than AI. Or nanotechnology, for that matter.

I’m with kurt9 on AI and the singularity cultists. But more tangibly, the CAD and manufacturing explosion has only just begun. Back in the 70s, primitive CAD programs were made to run on ‘affordable’ minicomputers like the DEC VAX series. Since then PCs, groupware, cloud computing et al. have really allowed incredible productivity gains. If robotics and their messy physical real world of actuators and perception continue to improve, we could see in the coming decades a design and manufacturing revolution as game-changing to society and individuals as the Industrial Revolution was to those whose lives preceded its arrival. The outsourcing of manufacturing to cheap Third World labor will be a thing of the past, with all the social disruption that will cause.

@ Kurt9 & Eniac

Regarding the term “exponential” in “this kind of exponential design and manufacturing capability”.

I’m not sure anymore what you (and others as well) mean by “exponential”. Would you mind explaining it?

Why I ask: in mathematics (and in other areas that use mathematics as a tool), the *exponential* function, or a variation of it, is used when the rate of change of a dependent variable with respect to an independent variable is proportional to the dependent variable itself. Note: on one side the rate of change, on the other the variable as such.

An example from biology is the growth of a bacterial culture as long as there is no limitation in space and food. In economics, exponential growth has been observed in the early phase of the “life cycle” of many consumer products (e.g. mobile phones). And you see it in radioactive decay. In all these cases, time is the independent variable.
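For reference, the definition above fits in one line of standard calculus. Here y is the dependent variable, t the independent variable (time), y_0 the value at t = 0, and k a proportionality constant; these symbols are chosen for illustration and are not from the comment itself:

```latex
\frac{dy}{dt} = k\,y
\quad\Longrightarrow\quad
y(t) = y_0\,e^{k t}
```

With k > 0 this describes growth (the bacterial culture); with k < 0 it describes decay (radioactive nuclei).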

As far as I know, “exponential” is not appropriate for just achieving maximum output with minimum input. This would be called “efficient”.

Well, in Kurt’s examples above (and in others I have seen in the comments here and elsewhere), I’m not able to see anything like this. To me it looks like “exponential” is increasingly used to mean “exceptional” (unusually good, outstanding, extraordinary), but I could be wrong. Maybe “exponential” has become a buzzword nowadays.

Duncan: It really is meant as “exponential”. The growth of productivity is proportional to productivity, because the means of production are themselves products. Productivity can be infinite, meaning production without labor. In the real world, of course, nothing is exponential for long, so in a more practical sense you are right.
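Eniac’s argument can be illustrated with a toy model, a hypothetical sketch that is not from the thread. It assumes output is proportional to the stock of machines and that a fixed share of each period’s output is turned back into new machines. Under those assumptions the stock grows by the same factor every period, which is discrete exponential growth. The function name and parameter values are illustrative only.

```python
# Hypothetical toy model of "the means of production are themselves products".
# Assumptions (illustrative only): output is proportional to the number of
# machines, and a fixed share of each year's output is reinvested as machines.

def simulate_capacity(initial_machines: float, output_per_machine: float,
                      reinvest_share: float, years: int) -> list[float]:
    """Return the number of machines at the start of each year (years + 1 values)."""
    machines = initial_machines
    history = [machines]
    for _ in range(years):
        output = machines * output_per_machine   # production scales with capacity
        machines += reinvest_share * output      # part of the output becomes new capacity
        history.append(machines)
    return history


if __name__ == "__main__":
    caps = simulate_capacity(100.0, 0.5, 0.2, 10)
    # Year-over-year growth factor is constant: 1 + 0.5 * 0.2 = 1.1
    ratios = [b / a for a, b in zip(caps, caps[1:])]
    print([round(r, 3) for r in ratios])
```

The constant growth factor holds only because nothing in the model limits reinvestment (no shortage of raw materials, energy, or demand). As Eniac notes, in the real world nothing stays exponential for long.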

I think Kurt Vonnegut’s “Player Piano” is an interesting reflection on the concept of production without labor. A different Kurt, I am quite sure…

philw1776:

I completely agree.

@ Eniac

Thank you. Now I understand what you mean: genuinely exponential growth, more precisely growth of labor productivity.

But “The growth of productivity is proportional to productivity, because” (because!) “the means of production are themselves products” is not conclusive. This is too simplistic.