Centauri Dreams

Imagining and Planning Interstellar Exploration

Where Does the Kuiper Belt End?

Looking for new Kuiper Belt targets for the New Horizons spacecraft pays off in multiple ways. While we can hope to find another Arrokoth for a flyby, the search also contributes to our understanding of the dynamics of the Kuiper Belt and the distribution of comets in the inner Oort Cloud. Looking at an object from Earth or near-Earth orbit is one thing, but when we can collect data on that same object with a spacecraft moving far from the Sun, we extend the range of discovery. And that includes learning new things about KBOs that are already cataloged, as a new paper on observations with the Subaru Telescope makes clear.

The paper, in the hands of lead author Fumi Yoshida (Chiba Institute of Technology) and colleagues, points to Quaoar as an example of how New Horizons data can spawn further research. A key aspect of this work is the solar phase angle, the angle between the Sun and the observer as seen from the object, which changes with the observer's position relative to the object.

One of the unique perspectives of observing KBOs from a spacecraft flying through the Kuiper Belt is that they can be observed with a significantly larger solar phase angle, and from a much closer distance, compared with ground-based or Earth-based observation. For example, the New Horizons spacecraft observed the large classical KBO (50000) Quaoar at solar phase angles of 51°, 66°, 84°, and 94° (Verbiscer et al. 2022). Ground-based observation can only provide data at small solar phase angles (≲2°). The combination of observations at large and small solar phase angles provides us with knowledge of the surface reflectance of the object and enables us to infer information about a KBO’s surface properties in detail (e.g., Porter et al. 2016; Verbiscer et al. 2018, 2019, 2022).
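To see why Earth-based observers are stuck at small phase angles, here is a minimal Python sketch of the geometry. It computes the solar phase angle (the Sun-object-observer angle) from heliocentric position vectors, plus the largest angle an observer on a circular 1 au orbit could ever reach. The 43 au distance is an assumed round figure close to Quaoar's distance, and the observer positions are illustrative, not New Horizons' actual trajectory.

```python
import math


def phase_angle_deg(obj, observer):
    """Solar phase angle (Sun-object-observer angle) in degrees.

    obj and observer are heliocentric (x, y, z) positions in au.
    """
    to_sun = [-c for c in obj]                          # object -> Sun
    to_obs = [o - c for o, c in zip(observer, obj)]     # object -> observer
    dot = sum(a * b for a, b in zip(to_sun, to_obs))
    cos_alpha = dot / (math.hypot(*to_sun) * math.hypot(*to_obs))
    cos_alpha = max(-1.0, min(1.0, cos_alpha))          # guard against rounding
    return math.degrees(math.acos(cos_alpha))


def max_phase_from_earth_deg(r_au):
    """Largest phase angle seen from a circular 1 au orbit for an object at
    heliocentric distance r_au (> 1): the line of sight is tangent to the orbit."""
    return math.degrees(math.asin(1.0 / r_au))


if __name__ == "__main__":
    kbo = (43.0, 0.0, 0.0)   # assumed KBO position, roughly Quaoar's distance
    print(phase_angle_deg(kbo, (1.0, 0.0, 0.0)))    # Earth at opposition: 0 degrees
    print(max_phase_from_earth_deg(43.0))           # about 1.3 degrees
    print(phase_angle_deg(kbo, (43.0, 1.0, 0.0)))   # hypothetical probe 1 au to the side: 90
```

For an object at this distance, a telescope on or near Earth can never do much better than about 1.3 degrees, consistent with the ≲2° limit quoted above, while a spacecraft near the object can view it from the side or even from behind.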

In the new paper, the Subaru Telescope’s Hyper Suprime-Cam (HSC) is the source of data that is sharpening our view of the Kuiper Belt through wide and deep imaging observations. Located at the telescope’s prime focus, HSC uses over 100 CCDs covering a 1.5-degree field of view. Early results from Yoshida’s work support the idea that we can think in terms of extending the Kuiper Belt, whose outer edge seems to end abruptly at around 50 AU. This adds weight to recent work with the New Horizons team’s Student Dust Counter (SDC), which has been measuring dust beyond Neptune and Pluto. The SDC results point to such an extension. See the citation below, and you might also want to check New Horizons: Mapping at System’s Edge in these pages.

Other planetary systems also raise the question of why our outer debris belt should be as limited as it has been thought to be. Says Yoshida:

“Looking outside of the Solar System, a typical planetary disk extends about 100 au from the host star (100 times the distance between the Earth and the Sun), and the Kuiper Belt, which is estimated to extend about 50 au, is very compact. Based on this comparison, we think that the primordial solar nebula, from which the Solar System was born, may have extended further out than the present-day Kuiper Belt.”

If it does turn out that our system’s early planetary disk was relatively small, this could be the result of outer objects like the much discussed (and still unknown) Planet 9. The distribution of objects in this region thus points to the evolution of the Solar System, with the implication that further discoveries will flesh out our view of the process.

The Subaru work was focused on two fields along New Horizons’ trajectory, an area of sky equivalent to about 18 full moons. Using these datasets, drawn from thirty half-nights of observations, the New Horizons science team has been able to find more than 240 objects. The new paper pushes these findings further by studying the same observations with different analytical tools, using JAXA software called the Moving Object Detection System, which is normally deployed for spotting near-Earth objects. Out of 84 KBO candidates, seven new objects have emerged whose orbits can be traced. Two of these have been assigned provisional designations by the Minor Planet Center of the International Astronomical Union.

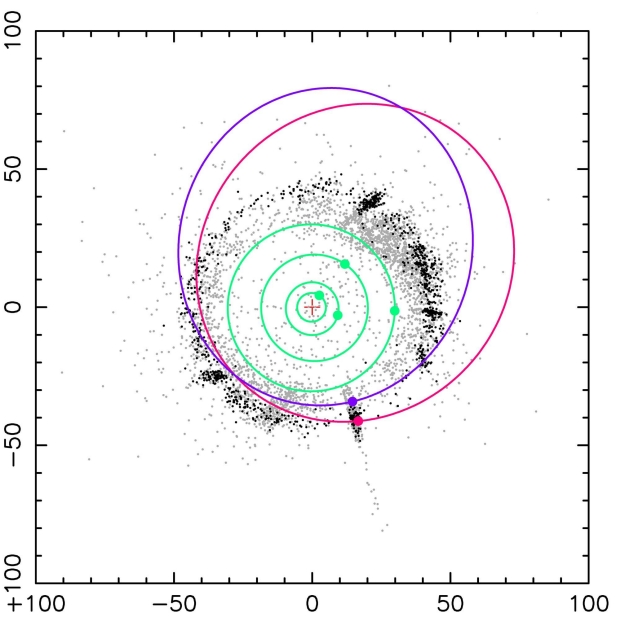

Image: Schematic diagram showing the orbits of the two discovered objects (red: 2020 KJ60, purple: 2020 KK60). The plus symbol represents the Sun, and the green lines represent the orbits of Jupiter, Saturn, Uranus, and Neptune, from the inside out. The numbers on the vertical and horizontal axes represent the distance from the Sun in astronomical units (au, one au corresponds to the distance between the Sun and the Earth). The black dots represent classical Kuiper Belt objects, which are thought to be a group of icy planetesimals that formed in situ in the early Solar System and are distributed near the ecliptic plane. The gray dots represent outer Solar System objects with a semi-major axis greater than 30 au. These include objects scattered by Neptune, so they extend far out, and many have orbits inclined with respect to the ecliptic plane. The circles and dots in the figure represent their positions on June 1, 2024. Credit: JAXA.

The semi-major axes of the two provisionally designated objects are greater than 50 AU, pointing to the possibility that as such observations continue, we will be able to extend the edge of the Kuiper Belt. Further work using the HSC is ongoing.

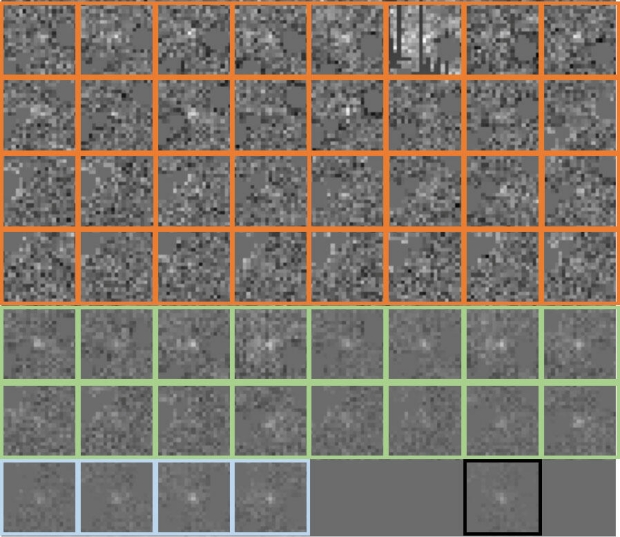

Image: An example of detection by JAXA’s Moving Object Detection System. Moving objects are detected from 32 images of the same field taken at regular time intervals (the images in the orange frames in the above figure). Assuming the velocity range of Kuiper Belt objects, each image is shifted slightly in any direction and then stacked. The green, light blue, and black framed images are the result of stacking 2 images each, 8 images each, and 32 images, respectively. If there is a light source in the center of a single image as well as each of the overlapping images, it is considered a real object. (Credit: JAXA)
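The stacking idea in the caption can be illustrated with a minimal shift-and-stack sketch in Python. This is a generic version of the technique, not JAXA's Moving Object Detection System: the frame size, the integer velocity grid and the synthetic data are all hypothetical. For each trial velocity, every frame is shifted back along the assumed motion and summed, so a faint object moving at that velocity piles up in one pixel while the noise averages down.

```python
import random


def stack(frames, times, vx, vy):
    """Sum frames after shifting each one back along a trial velocity.

    frames: list of 2D lists (rows of pixel values), all the same shape.
    times: observation time of each frame; vx, vy: trial motion in pixels per
    unit time. out[y][x] collects the light of an object that was at (x, y) at
    the first epoch and moved with (vx, vy).
    """
    h, w = len(frames[0]), len(frames[0][0])
    out = [[0.0] * w for _ in range(h)]
    for frame, t in zip(frames, times):
        dx = round(vx * (t - times[0]))
        dy = round(vy * (t - times[0]))
        for y in range(h):
            sy = y + dy
            if not 0 <= sy < h:
                continue                      # this row shifted off the detector
            for x in range(w):
                sx = x + dx
                if 0 <= sx < w:
                    out[y][x] += frame[sy][sx]
    return out


def best_track(frames, times, velocities):
    """Return (stacked_flux, (vx, vy), (x, y)) of the brightest stacked pixel."""
    best = None
    for vx, vy in velocities:
        stacked = stack(frames, times, vx, vy)
        for y, row in enumerate(stacked):
            for x, value in enumerate(row):
                if best is None or value > best[0]:
                    best = (value, (vx, vy), (x, y))
    return best


def synthetic_frames(n=8, size=20, seed=0):
    """Hypothetical test data: Gaussian noise plus a faint source of flux 1.0
    starting at (3, 5) and moving +1 pixel per frame in x."""
    rng = random.Random(seed)
    frames = []
    for t in range(n):
        frame = [[rng.gauss(0.0, 0.3) for _ in range(size)] for _ in range(size)]
        frame[5][3 + t] += 1.0
        frames.append(frame)
    return frames, list(range(n))


if __name__ == "__main__":
    frames, times = synthetic_frames()
    grid = [(vx, vy) for vx in range(-2, 3) for vy in range(-2, 3)]
    flux, velocity, start = best_track(frames, times, grid)
    # the injected source moves with (1, 0) starting from pixel (3, 5)
    print(velocity, start, round(flux, 2))
```

In any single synthetic frame the source is only about three times the noise level per pixel, but stacked along the right track its flux adds linearly while the noise grows only as the square root of the number of frames. That is the same reason the caption compares stacks of 2, 8 and 32 images.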

Observations of tiny objects at these distances are fraught with challenges at any time, but the authors take note of the fact that while their datasets cover the period between May 2020 and June 2021 (the most recently released datasets), their particular focus is on the June 2020 and June 2021 data. This marks the time when the observation field was close to opposition; i.e., directly opposite the Sun as seen from Earth. At opposition the objects should be brightest and their motion across the sky most accurately measured.
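A rough calculation shows why opposition is also convenient for a moving-object search. With the Earth and a distant object on the same side of the Sun, the object's apparent motion is dominated by the Earth's own orbital velocity, giving a predictable, mostly retrograde rate that a stacking search can target. The Python sketch below assumes circular, coplanar orbits and ignores inclination, so it is an approximation, not the velocity range the authors actually used.

```python
import math

AU_M = 1.495978707e11          # astronomical unit, m
V_EARTH = 29.78e3              # Earth's mean orbital speed, m/s
ARCSEC_PER_RAD = 206264.806


def opposition_rate_arcsec_per_hr(r_au):
    """Approximate retrograde sky rate of an object at opposition.

    Assumes circular, coplanar orbits: the object moves at V_EARTH / sqrt(r)
    (circular orbital speed scales as r^-1/2), and the angular rate is the
    relative velocity divided by the Earth-object distance (r - 1 au).
    """
    v_obj = V_EARTH / math.sqrt(r_au)
    distance_m = (r_au - 1.0) * AU_M
    rate_rad_per_s = (V_EARTH - v_obj) / distance_m
    return rate_rad_per_s * ARCSEC_PER_RAD * 3600.0


if __name__ == "__main__":
    for r in (30.0, 43.0, 50.0):
        print(r, "au:", round(opposition_rate_arcsec_per_hr(r), 2), "arcsec/hr")
```

For an object at 43 au this gives roughly 3 arcseconds per hour, so a Kuiper Belt object drifts only a few arcseconds over a night's exposures, and stacking along the expected rate is practical.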

Additional datasets are in the pipeline and will be analyzed when they are publicly released. The authors intend to adjust their software in a computationally intensive way that will bring more accurate results near field stars that can otherwise confuse a detection. They also plan to deploy a machine-learning framework as the effort continues. Meanwhile, New Horizons presses on, raising the question of its successor. Right now we have exactly one spacecraft in the Kuiper Belt. How and when will we build the probe that continues its work?

The paper is Yoshida et al., “A deep analysis for New Horizons’ KBO search images,” Publications of the Astronomical Society of Japan (May 29, 2024). Full text. The SDC paper is Doner et al., “New Horizons Venetia Burney Student Dust Counter Observes Higher than Expected Fluxes Approaching 60 au,” The Astrophysical Journal Letters Vol. 961, No. 2 (24 January 2024), L38 (abstract).

Remembering the Y2K ‘Flasher’

Transients have always been intriguing because whether at optical, radio or other wavelengths, they usually flag an object worth watching. Consider a supernova, or a Fast Radio Burst. But non-repeating transients can have astronomers both professional and amateur tearing their hair out. What was Henry Cordova, for instance, seeing in the Florida sky back in 1999? The date seems significant, as we were moving toward the Y2K event, and despite preparation, there was some concern about its effects in computer coding. Henry, a retired map maker and geographer as well as a dedicated astronomer, had a transient that did repeat, but only for a short time, and one that may well have been entangled in geopolitical events of the time. I’m reminded of our reliance on electronics, and the fact that some 60,000 commercial flights have encountered bogus GPS signals, according to The New York Times (strikingly, the U.S. has no civilian backup system for GPS). What goes on in orbit may keep us guessing as we begin to build a cislunar infrastructure. As witness this oddity.

by Henry Cordova

At about 11:30 PM, EST on 31 December 1999, I was taking out the garbage. I live in South Florida, so the weather was warm (I was wearing gym shorts, flip-flops and a t-shirt) and the sky was crystal clear. I paused to admire Orion, high in the sky and near the meridian, when I noticed a bright (about magnitude -1 or -2) strobe-like flash just south and west of Rigel. This sort of visual phenomenon is difficult to spatially locate with any precision; let’s just say it appeared to be roughly near the SW corner of the Hunter asterism. As for the flash itself, this is not a particularly unexpected event in my nighttime sky. I often see aircraft at high altitudes where their navigation lights are not visible, but their strobes stand out clearly.

I paused for a moment to see if I could catch it again, and sure enough, about a minute later it reappeared. The same bright flash, in the same general area. But unlike an aircraft, this time the light did not seem to move as you would expect a light attached to an airplane or satellite. It was in the same general area, I couldn’t judge exactly where, but it was definitely not moving. I continued observing and sure enough, about a minute later I saw it again, same flash, same place.

It had my attention. Over the next hour or so, I remained in the yard, next to my garbage cans, eyes riveted on Orion, high in the sky at my latitude. I missed the Times Square New Year’s festivities on TV. I contemplated going into the house to get my binoculars, or a watch, but decided against it. I did not want to risk missing anything or losing my eyes’ dark adaptation. I saw several more flashes, of the same brightness, at the same intervals, and at the same location. Sometime after midnight the show ended, and I went back in the house.

The following evening (1 Jan 2000!), at about the same time, while jogging, I saw the flasher again. It’s been over twenty years now, so I don’t remember how many times I saw it, or what time of night exactly, but it was in the same place and it looked the same. It flashed more than once, two or three times, about a minute apart, and then…nothing. I’ve never seen it again.

So what was it? A few possibilities can be immediately ruled out: it was not a high-flying aircraft or a satellite in low Earth orbit; I am familiar with those objects and that is not what I saw. The flashes did not move on the celestial sphere, so it must be something farther out. Another possibility is a solar reflection off a geosynchronous satellite; at that time and date, the solstice Sun, Earth and flasher were roughly aligned and the celestial equator runs through Orion’s belt.

I live just north of the Tropic of Cancer so the geometry for a reflection is certainly possible. But the flasher was bright, as bright as the old Echo satellites, or the ISS. I’ve heard of amateur astronomers photographing solar reflections off geosynchronous orbiters, but they are very faint, requiring specialized equipment and highly skilled observers. And it’s unlikely to be an accidental reflection, which would not occur repeatedly, and especially at the turn of the new Millennium.

I suppose the flashers could be the manifestation of some cosmological event in deep space, perhaps something like the “X-ray or gamma ray flashers”, but if so, I’ve never heard anything about them in the astronomical press. Head-on meteors are another possibility, but I think that’s highly unlikely.

My guess is that these are reflections off surveillance satellites, spies in the skies. The flashes were not solar, but beacons or reflections from powerful lasers, used in some ranging or calibration procedure, or perhaps the sign of an actual attack on the satellite–an attempt to blind or confuse its sensors.

I also recall reading somewhere that there is a gravitational “sweet spot” somewhere along longitude 90 W. The Earth’s gravitational field is not perfectly spherical. It has bumps and wrinkles, places inherently unstable or stable, respectively, to geosynchronous satellites. An object placed at one of the dips would have a tendency to remain there and not require constant fuel expenditure to remain on station.

Such spots would no doubt be reserved for national security orbiters. A geostationary satellite placed there would be able to continuously monitor radio traffic or missile launches in the eastern USA or the Caribbean basin. To an observer on Earth, such a satellite would appear to slowly wander east and west, and north and south, of a spot on the celestial equator. These would be highly secret missions, but I suspect these are big birds, perhaps with huge antennas deployed on them.

You will recall all the concern in those years about possible “Y2K” events, errors caused by programmers failing to code their software to accommodate the change to the new century/millennium. As it turns out, not much really happened, but there was a general fear that problems might occur. I even recall reading that there might be issues with our spy satellites. And I do recall reading in the general press a few weeks later that some “minor Y2K problems” occurred in our orbital reconnaissance vehicles, but that they were quickly taken care of. Hmmmm… even if this were true, why would they bother publicizing it?

I certainly don’t know what the flashers were, and I’ve never heard about anyone else seeing them. Perhaps someone reading these lines can suggest a solution to the mystery. Until then, I can continue to think that maybe, just maybe, I may have witnessed one of the last battles of the Cold War.

Space Butterfly: A Living Star Probe

Browsing through the correspondence that makes up Freeman Dyson’s wonderful Maker of Patterns: An Autobiography Through Letters (Liveright, 2018), I came across this missive, describing to his parents in 1958 why space exploration occupied his time at General Atomic, where he was working on Orion, the nuclear pulse concept that would explode atomic devices behind huge pusher plates to produce thrust. Dyson had no doubts about the value of humanity moving ever outward as it matured:

I am something of a fanatic on this subject. You might as well ask Columbus why he wasted his time discovering America when he could have been improving the methods of Spanish sheep farming. I think the parallel is a close one… We shall know what we go to Mars for only after we get there. The study of whatever forms of life exist on Mars is likely to lead to better understanding of life in general. This may well be of more benefit to humanity than irrigating ten Saharas. But that is only one of many reasons for going. The main purpose is a general enlargement of human horizons.

But here’s the thing, the driver for the entire Centauri Dreams effort these past twenty years. Just how do we go? And I mean that not only in terms of propulsion, the nuts and bolts of engines as well as the theory that drives them, but in terms of how we move outward carrying the cultural and scientific values of our species. Dyson thought deeply about these matters, as did Shklovskii and Sagan in their Intelligent Life in the Universe (Holden Day, 1966), where they mused that an advanced civilization might view interstellar travel as a driver for creativity and philosophical growth. Perhaps culture remakes itself with each new exploration.

Greg Matloff’s investigations of these matters in numerous papers and key books like Deep Space Probes (Springer, 2005) have laid out the propulsion options from Project Orion to beamed lightsails. His new paper, written with the artist C Bangs, draws on Dyson’s Astrochicken concept, first published in 1985, and is one of the few times I’ve seen that concept discussed in the literature (although I gave it a look in my 2005 Centauri Dreams book). Astrochicken was to be a one-kilogram probe to Uranus, a genetically engineered device powered by artificial intelligence.

As Dyson describes it in Infinite in All Directions (Harper & Row, 1988), “The plant component has to provide a basic life-support system using closed-cycle biochemistry with sunlight as the energy source. The animal component has to provide sensors and nerves and muscles with which it can observe and orient itself and navigate to its destination. The electronic component has to receive instructions from Earth and transmit back the results of its observations.”

Integrating all of this is artificial intelligence, creating a probe “…as agile as a hummingbird with a brain weighing no more than a gram.” Some years after Dyson introduced Astrochicken, Matloff discussed such a living probe, flitting from world to world, in Deep Space Probes, seeing elegance in the idea of wedding biology to technology. There he imagines a spacecraft like this fully fleshed out in the interstellar context, with a harvesting capability in the destination star system. He describes it thus:

…a living Astrochicken with miniaturized propulsion subsystems, autonomous computerized navigation via pulsar signals, and a laser communications link with Earth. The craft would be a bioengineered organism. After an interstellar crossing, such a living Astrochicken would establish orbit around a habitable planet. The ship (or being) could grow an incubator nursery using resources of the target solar system, and breed the first generation of human colonists using human eggs and sperm in cryogenic storage.

We have in this symbiosis of plant, animal and electronic components the possibility of leaving the Solar System and conceivably creating a von Neumann probe that combines engineering with the genetic manipulation of plant and animal DNA. Our probes need not be robotic, or at least entirely robotic, even if humans are not aboard.

Out of this seed comes Space Butterfly, aptly named for its large, thin wings that can unfurl for a close stellar pass for acceleration and trajectory adjustments. In the new paper in JBIS, Matloff notes that this is a spacecraft with an affinity for starlight, using its wings as solar panels to power up its suite of scientific and communications equipment. Driven by its AI brain, it would be capable of mining resources found in exoplanetary systems, moving between stellar systems in passages of millennial length. Here I’m reminded of the oft-cited fact that only a small fraction of the Sun’s projected lifetime would serve for such space butterflies to explore and fill the galaxy, even moving at velocities that exceed Voyager by only a small amount. Quoting the paper:

Using hyper thin all-metallic sails and close perihelion passes, Space Butterfly could traverse the separation between neighboring stars in a few millennia. If it elects to come to rest temporarily within a planetary system, it can decelerate by electromagnetically reflecting encountered interstellar photons and pointing the fully unfurled sail towards the destination star…

If ET elects to construct Space Butterflies with very long lives, many spare AI ‘brains’ could be carried. This should produce no major problem since these units could have masses well under one gram. Spare parts could also be carried to replace non-biological portions of Space Butterfly.

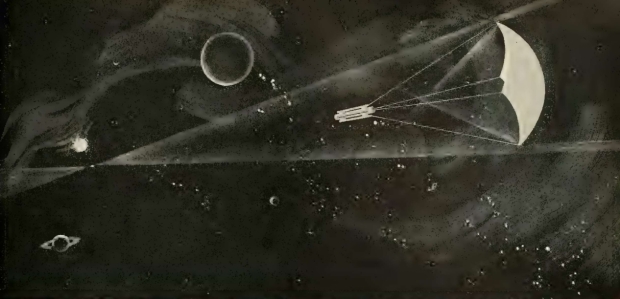

Image: Flitting from star to star, the Space Butterfly concept is perhaps more like a Space Moth, with its affinity to starlight. Credit: C Bangs.

Plugging in the known properties of the interstellar object ‘Oumuamua, Matloff speculates on how it would behave if it were a Space Butterfly, exploring the kinematics – trajectory, velocity, acceleration – of this kind of probe. He uses mathematical tools that have evolved for the analysis of sail technologies, including the lightness number of the sail (the ratio of the radiation pressure force on the sail to the solar gravitational force on the spacecraft) and the radiation pressure from the Sun at perihelion, given what we know about sail materials and thickness.
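The quantities named here can be made concrete with a short Python sketch. It uses textbook relations for an idealized flat sail held face-on to the Sun: the radiation pressure on a perfectly reflecting sail, the lightness number as the ratio of radiation force to solar gravity (both fall off as 1/r², so the ratio does not depend on distance), and the cruise speed of a sail that falls in on a parabolic orbit and unfurls at perihelion. Matloff's own equations may include refinements left out here, such as imperfect reflectivity, sail tilt and thermal limits, and the β = 1, 0.1 au case in the example is hypothetical.

```python
import math

L_SUN = 3.828e26            # solar luminosity, W
C = 2.99792458e8            # speed of light, m/s
GM_SUN = 1.32712440018e20   # solar gravitational parameter, m^3/s^2
AU = 1.495978707e11         # astronomical unit, m


def radiation_pressure(r_m, reflectivity=1.0):
    """Pressure (N/m^2) on a flat sail facing the Sun at distance r_m.

    reflectivity=1.0 is a perfect mirror (photon momentum delivered twice);
    0.0 is a perfect absorber.
    """
    flux = L_SUN / (4.0 * math.pi * r_m**2)
    return (1.0 + reflectivity) * flux / C


def lightness_number(areal_density, reflectivity=1.0):
    """Radiation force / solar gravity for a Sun-facing sail.

    areal_density is total spacecraft mass per square metre of sail (kg/m^2).
    Both forces scale as 1/r^2, so evaluating at 1 au is enough.
    """
    radiation = radiation_pressure(AU, reflectivity)   # force per m^2 of sail
    gravity = GM_SUN * areal_density / AU**2           # force per m^2 of sail
    return radiation / gravity


def v_infinity_after_perihelion(beta, perihelion_m):
    """Cruise speed (m/s) if the sail reaches perihelion on a parabolic orbit
    (speed sqrt(2 GM / r)) and then opens to lightness number beta.

    Sunlight cancels a fraction beta of gravity, so the specific energy after
    unfurling is v^2/2 - (1 - beta) GM / r = beta GM / r.
    """
    return math.sqrt(2.0 * beta * GM_SUN / perihelion_m)


if __name__ == "__main__":
    print(radiation_pressure(AU))                   # about 9.1e-6 N/m^2 at 1 au
    print(lightness_number(1.53e-3))                # about 1: ~1.5 g/m^2 balances gravity
    v = v_infinity_after_perihelion(1.0, 0.1 * AU)  # hypothetical beta = 1 at 0.1 au
    print(v / 1e3, "km/s")
```

At 0.1 au with β = 1 this idealized model gives roughly 130 km/s, about five times the 26 km/s figure discussed next, though a real sail would also have to survive the heating of so close a pass.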

If ‘Oumuamua were a sail, it would be a slow one, moving at an interstellar cruise velocity in the range of 26 kilometers per second, and thus requiring a solid 50,000 years for a crossing between the Sun and the Alpha Centauri stars, for example. A Space Butterfly should be able to do a good deal better than that, but we are still talking about crossings involving thousands of years. Civilizations interested in filling the galaxy with such probes clearly would have long lifetimes and attention spans.
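The 50,000-year figure follows from simple arithmetic, shown here as a Python sketch. Because a light year is the distance light covers in a year, a coasting time in years is just the distance in light years multiplied by c/v. The 4.37 light year distance to Alpha Centauri is the commonly quoted value; the 100,000 light year crossing at 30 km/s in the second example is a hypothetical round number chosen to echo the earlier point about filling the galaxy at speeds not far beyond Voyager's.

```python
C_KM_S = 299_792.458   # speed of light, km/s


def travel_time_years(distance_ly, speed_km_s):
    """Coasting time in years: light covers one light year per year, so the
    time is distance_ly multiplied by c / v. Acceleration phases are ignored."""
    return distance_ly * C_KM_S / speed_km_s


if __name__ == "__main__":
    # Sun to Alpha Centauri at the 26 km/s cruise speed discussed above
    print(round(travel_time_years(4.37, 26.0)))
    # hypothetical straight crossing of a 100,000 ly disk at 30 km/s
    print(travel_time_years(100_000, 30.0) / 1e9, "billion years")
```

The first call gives a little over 50,000 years. The second gives about a billion years for a single straight crossing, on the order of a tenth of the Sun's roughly ten-billion-year main-sequence lifetime, before adding any time spent in stopovers.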

Such timeframes challenge all our assumptions about a civilization’s survival and indeed the lifespan of the beings who operate within its strictures. Given that we know of no extraterrestrial civilizations, we can only speculate, and in my view the prospect of an advanced culture operating over millennial timeframes in waves of slow exploration is as likely as one patterned on the human model. A sentient probe carrying perhaps a post-biological consciousness not at the mercy of time’s dictates might find ranging the interstellar depths a matter of endless fascination. For such a being, the journey of discovery and contemplation may be of more value than any single arrival.

The paper is Matloff & Bangs, “Space Butterfly: Combining Artificial Intelligence and Genetic Engineering to Explore Multiple Stellar Systems,” Journal of the British Interplanetary Society Vol. 77 (2024), 16-19.

The Beamed Lightsail Emerges

If you look at Galaxy’s December, 1962 issue, which I have in front of me from my collection of old SF magazines, you’ll find a name that appears only once in the annals of science fiction publishing: George Peterson Field. The article, “Pluto – Doorway to the Stars,” is actually by Robert Forward, who was at that time indulging in a time-honored practice, concealing an appearance in a science fiction venue so as not to raise any eyebrows with management at his day job at Hughes Aircraft Company.

Aeronautical engineer Carl Wiley had done the same thing with an article on solar sails in Astounding back in May of 1951, choosing the pseudonym Russell Saunders as cover for his work at Goodyear Aircraft Corporation (later Lockheed Martin). Both these articles were significant, as they introduced propulsion concepts for deep space to a popular audience outside the scientific journals. While solar sails had been discussed by the likes of J. D. Bernal and Konstantin Tsiolkovsky, the idea of sails in space now begins to filter into popular fiction available on any newsstand.

But despite being frequently referenced in the literature, Forward’s foray into Galaxy did not focus on sail technologies at all. Instead, it dwells on an entirely different concept, one that Forward called a ‘gravitational catapult.’ This is itself entertaining, so let’s talk about it for just a moment before pushing on to the actual first appearance of laser beaming to a sail, which Forward would produce in a different journal in the same year.

Forward is the master of gigantic engineering projects. Pluto had caught his attention because its eccentric orbit matched up with what Percival Lowell had predicted for a planet beyond Neptune, but its size was far too small to account for its supposed effects. Lowell had calculated that it would mass about six times Earth’s mass, a figure later corroborated by W. H. Pickering. But given Pluto’s actual size, Forward found that if it were the outer system perturber Lowell had predicted, it would have to have a density hundreds of times greater than water.
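Forward's density argument is easy to reproduce. The Python sketch below divides a mass by the volume of a sphere and compares the result with water. The 6 Earth-mass figure comes from the text above and the diameter of about 2,377 km is the modern value from New Horizons; the 5,900 km diameter is a hypothetical larger size, treated here as an assumption, of the kind estimated before Pluto's true size was known. The answer depends strongly on which diameter one assumes.

```python
import math

EARTH_MASS_KG = 5.972e24
WATER_DENSITY = 1000.0   # kg/m^3


def density_vs_water(mass_earths, diameter_km):
    """Mean density of a sphere, expressed as a multiple of water's density."""
    radius_m = diameter_km * 1e3 / 2.0
    volume_m3 = 4.0 / 3.0 * math.pi * radius_m**3
    return mass_earths * EARTH_MASS_KG / volume_m3 / WATER_DENSITY


if __name__ == "__main__":
    print(density_vs_water(1.0, 12_742))   # sanity check, Earth: about 5.5
    print(density_vs_water(6.0, 5_900))    # hypothetical mid-century size: a few hundred
    print(density_vs_water(6.0, 2_377))    # modern diameter: about 5,000
```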

Remember, this was 1962, and in addition to being a physicist, Forward was a budding science fiction author playing with ideas in Galaxy, which had just passed from the editorship of H. L. Gold to that of Frederik Pohl, a man of lively imagination and serious SF chops himself. Why not play with the notion of Pluto as artifact? I think this was Forward’s first gigantic project. Thus:

…we can envision how such a gravitational catapult could be made. It would require a large, very dense body with a mass larger than the Earth, made of collapsed matter many times heavier than water. It would have to be whirling in space like a gigantic, fat smoke ring, constantly turning from inside out.

The forces it would exert on a nearby object, such as a spaceship, would tend to drag the ship around to one side, where it would be pulled right through the center of the ring under terrific acceleration and expelled from the other side. If the acceleration were of the order of 1000 g’s, then after a minute or so it would take to pass through, the velocity of the ship on the other side would be near that of light…

Forward imagined a network of such devices, each of them losing a bit of energy each time they accelerated a ship, but gaining it back when they decelerated an incoming ship. The Pluto reference is a playful speculation that what was then considered the ninth planet was actually one of these devices, which we would find waiting for us along with a note from the Galactic Federation welcoming us to use it. A sort of ‘coming out present’ to an emerging species. I can see the twinkle in his eye as he wrote this.

In any case, we have to change the history of beamed sails slightly to reflect the fact that the Galaxy appearance did not deal with sails, despite having a name similar to an article Forward published in the journal Missiles and Rockets in that same year. “Pluto – Gateway to the Stars” ran in the journal’s April, 1962 issue as part of a series by various authors on technologies for sending spacecraft to other planets. I had never seen the actual article until my friend Adam Crowl was kind enough to forward it the other day. Adam’s collection of interstellar memorabilia is formidable and has often fleshed out my set of early deep space papers.

Here what Forward latches onto is the most significant drawback to solar sailing, which relies on the momentum imparted by photons. This is the inverse square law, which tells us that the push we can get from solar photons decreases with the square of our distance from the Sun. Solar sails lose their punch somewhere around the orbit of Jupiter. What Carl Wiley first discussed in Astounding was the utility of sails for interplanetary exploration. Forward wanted to go a lot farther.
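The inverse square problem can be tabulated in a few lines of Python. The sketch assumes an idealized, perfectly reflecting sail facing the Sun and a solar flux of about 1,361 W/m² at 1 au; the distances are Earth's orbit, roughly Jupiter's (5.2 au) and roughly Neptune's (30 au).

```python
SOLAR_FLUX_1AU = 1361.0    # W/m^2 at 1 au (approximate solar constant)
C = 299_792_458.0          # speed of light, m/s


def sail_pressure(r_au, reflectivity=1.0):
    """Radiation pressure (N/m^2) on a Sun-facing sail at r_au astronomical units."""
    flux = SOLAR_FLUX_1AU / r_au**2            # inverse square law
    return (1.0 + reflectivity) * flux / C


if __name__ == "__main__":
    for r in (1.0, 5.2, 30.0):
        p = sail_pressure(r)
        print(f"{r:5.1f} au  {p:.2e} N/m^2  ({p / sail_pressure(1.0):.4f} of the 1 au value)")
```

By Jupiter's distance the push has fallen to under 4 percent of its value at 1 au, and at Neptune's to about a thousandth.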

“Pluto – Gateway to the Stars” ran through the options for deep space available to the imagination in 1962, homing in on antimatter and concluding “it would be a solution if you were a science fiction writer,” which of course Forward would become. But he noted “there are a few engineering details.” The first of these would be the problem of antimatter production. The second is storage. With both of these remaining huge problems today, it’s intriguing that Forward actually spends more of this article on antimatter than on his innovative laser concept, though in the end he runs aground on the problem of gamma radiation.

When the hydrogen-anti-hydrogen streams collide, the matter in the atoms will be transformed into pure energy, but the energy will be in the form of intense gamma radiation. We can stop the gamma rays in heavy lead shields and get our thrust this way, but the energy in the gamma rays will turn into heat energy in the shields and it will not be long before the whole rocket melts. What is needed is a gamma-ray reflector – and such a material does not exist. In fact, there are strong physical arguments against ever finding any such material since the wavelengths of the gamma rays are smaller than the atomic structure of matter.

But sails beckon, and here the innovation is clear: Leave the propellant behind. Forward made the case that standard reaction methods could not obtain speeds anywhere near the speed of light because their mass ratios would be appallingly high, not to mention thermal problems with any design carrying its own propellant plant and energy sources. For interstellar purposes, he mused, the energy source and the reaction mass would have to be external to the vehicle, as indeed they are in a solar sail. But to go interstellar, we have to get around the inverse square law. Hence the laser:
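Forward's point about mass ratios can be checked with the relativistic rocket equation, sketched here in Python. For a rocket with constant exhaust speed v_e, the rapidity gained is (v_e/c) ln R, so reaching a speed βc takes ln R = (c/v_e) artanh β. The code returns log10 R because the ratios overflow ordinary floating point. The exhaust speeds used (about 4.5 km/s for hydrogen-oxygen chemistry and a hypothetical 10,000 km/s fusion exhaust) are illustrative assumptions, not figures from Forward's article.

```python
import math

C_KM_S = 299_792.458   # speed of light, km/s


def log10_mass_ratio(beta, exhaust_km_s):
    """log10 of (initial mass / final mass) to reach beta*c from rest.

    Relativistic rocket equation with constant exhaust speed:
    ln R = (c / v_e) * artanh(beta). Returned as log10 to avoid overflow.
    """
    ln_r = (C_KM_S / exhaust_km_s) * math.atanh(beta)
    return ln_r / math.log(10.0)


if __name__ == "__main__":
    print(log10_mass_ratio(0.1, 4.5))           # chemical rocket to 0.1c: about 2,900
    print(log10_mass_ratio(0.1, 10_000.0))      # hypothetical fusion exhaust: about 1.3
    print(10 ** log10_mass_ratio(0.5, C_KM_S))  # ideal photon rocket to 0.5c: about 1.732
```

A chemical rocket would need a mass ratio with roughly 2,900 digits just to reach a tenth of light speed, which is the sense in which carrying one's own propellant becomes hopeless.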

There is a way to extend the idea of solar driven sails to the problem of interstellar travel at large distances from the sun. This is to use very large Lasers in orbits close to the sun. They would convert the random solar energy into intense, coherent, very narrow light beams that can apply radiation pressure at distances of light years.

However, since the Laser would have to be over 10 kilometers in diameter, this particular method does not look feasible for interstellar travel and other methods of supplying propulsive energy from fixed power plants must be found.

The editors of Missiles and Rockets seem to have raised their eyebrows at this early instance of Forward’s engineering, as witness the end of their caption to the image that accompanied the text.

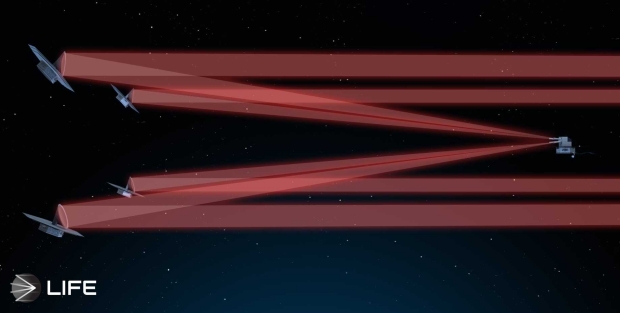

Image: This is the original image from the Missiles and Rockets article. Caption: Theoretical method for providing power for interstellar travel is use of a very large Laser in orbit close to sun. Laser would convert random solar energy into intense, very narrow light beams that would apply radiation pressure to solar sail carrying space cabin at distances of light years. Rearward beam from Laser would equalize light pressure. Author Forward observes, however, that the Laser would have to be over 10 kilometers in diameter. Therefore other means must be developed.
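Why would a laser need to be so large? A diffraction-limited circular aperture of diameter D spreads light of wavelength λ into a central spot roughly 2.44 λ d / D across at distance d. The Python sketch below applies that standard Airy-disk estimate; the 1 micrometre wavelength is an assumed value, and this is not a reconstruction of how Forward reached his 10 kilometre figure.

```python
LIGHT_YEAR_M = 9.4607304725808e15   # metres in one light year


def spot_diameter_m(wavelength_m, aperture_m, distance_m):
    """Diameter of the central Airy disk (to the first dark ring) for a
    diffraction-limited circular aperture."""
    return 2.44 * wavelength_m * distance_m / aperture_m


if __name__ == "__main__":
    wavelength = 1e-6   # assumed near-infrared laser, 1 micrometre
    for aperture in (1.0, 1_000.0, 10_000.0):
        d = spot_diameter_m(wavelength, aperture, LIGHT_YEAR_M)
        print(f"{aperture:>8.0f} m aperture -> {d / 1e3:,.0f} km spot at 1 light year")
```

Even a 10 km aperture leaves a spot roughly 2,300 km wide one light year out under these assumptions, which shows how quickly a beam spreads at interstellar range.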

At this point in his career, Forward’s thinking leaned toward fusion to solve the interstellar conundrum, but as events would prove, he would increasingly return to beamed sails of kilometer scale, and to power stations and lensing structures far beyond our capabilities today. But if we ever do create smart assemblers at the nanotech level, the idea of megastructures of our own devising may not seem quite so preposterous. And this 1962 introduction to beamed sails is to my knowledge their first appearance in the literature. Today the concept continues to inspire research on beaming technologies at various wavelengths and using cutting edge sail materials.

Finding a Terraforming Civilization

Searching for biosignatures in the atmospheres of nearby exoplanets invariably opens up the prospect of folding in a search for technosignatures. Biosignatures seem much more likely given the prospect of detecting even the simplest forms of life elsewhere – no technological civilization needed – but ‘piggybacking’ a technosignature search makes sense. We already use this commensal method to do radio astronomy, where a primary task such as observation of a natural radio source produces a range of data that can be investigated for secondary purposes not related to the original search.

So technosignature investigations can be inexpensive, which also means we can stretch our imaginations in figuring out what kind of signatures a prospective civilization might produce. The odds may be long but we do have one thing going for us. Whereas a potential biosignature will have to be screened against all the abiotic ways it could be produced (and this is going to be a long process), I suspect a technosignature is going to offer fewer options for false positives. I’m thinking of the uproar over Boyajian’s Star (KIC 8462852), where the false positive angles took a limited number of forms.

If we’re doing technosignature screening on the cheap, we can also worry less about what seems at first glance to be the elephant in the room, which is the fact that we have no idea how long a technological society might live. The things that mark us as tool-using technology creators to distant observers have not been apparent for long when weighed against the duration of life itself on our planet. Or maybe I’m being pessimistic. Technosignature hunter Jason Wright at Penn State makes the case that we simply don’t know enough to make statements about technology lifespans.

On this point I want to quote Edward Schwieterman (UC-Riverside) and colleagues from a new paper. They echo Wright’s view that the case for dismissing technosignatures as too short-lived to detect fails because its premise is untested: we don’t actually know whether non-technological biosignatures are the predominant way life presents itself. Consider:

In contrast to the constraints of simple life, technological life is not necessarily limited to one planetary or stellar system, and moreover, certain technologies could persist over astronomically significant periods of time. We know neither the upper limit nor the average timescale for the longevity of technological societies (not to mention abandoned or automated technology), given our limited perspective of human history. An observational test is therefore necessary before we outright dismiss the possibility that technospheres are sufficiently common to be detectable in the nearby Universe.

So let’s keep looking, which is what Schwieterman and team advocate in a paper focusing on terraforming. In previous articles on this site we’ve looked at the prospect of detecting pollutants like chlorofluorocarbons (CFCs), which emerge as byproducts of industrial activity, but like nitrogen dioxide (NO₂), these industrial products seem a transitory target, given that even in our time the processes that produce them are under scrutiny for their harmful effects on the environment. What the new paper proposes is that gases produced in efforts to terraform a planet would be longer-lived, persisting as an expanding civilization created new homes for its culture.

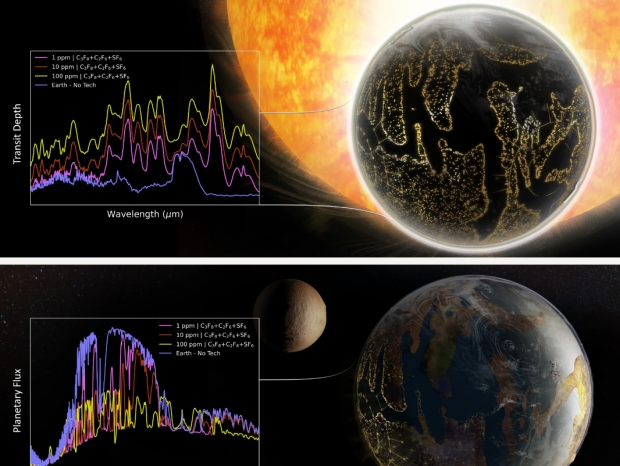

Enter the LIFE mission concept (Large Interferometer for Exoplanets), a proposed European Space Agency observatory designed to study the composition of nearby terrestrial exoplanet atmospheres. LIFE is a nulling interferometer working at mid-infrared wavelengths, one that complements NASA’s Habitable Worlds Observatory, according to its creators, by following “a complementary and more versatile approach that probes the intrinsic thermal emission of exoplanets.”

Image: The Large Interferometer for Exoplanets (LIFE), funded by the Swiss National Centre of Competence in Research, is a mission concept that relies on a formation of flying “collector telescopes” with a “combiner spacecraft” at their center to realize a mid-infrared interferometric nulling procedure. This means that the light signal originating from the host star of an observed terrestrial exoplanet is canceled by destructive interference. Credit: ETH Zurich.

In its search for biosignatures, LIFE will collect mid-infrared data that can also be screened for artificial greenhouse gases, with the resolution needed for studies in the habitable zones of K- and M-class stars. The Schwieterman paper analyzes scenarios in which this instrument could detect fluorinated versions of methane, ethane, and propane (molecules in which one or more hydrogen atoms have been replaced by fluorine atoms), along with other gases. The list includes tetrafluoromethane (CF₄), hexafluoroethane (C₂F₆), octafluoropropane (C₃F₈), sulfur hexafluoride (SF₆) and nitrogen trifluoride (NF₃). These gases would not be incidental byproducts of other industrial activity but would represent an intentional terraforming effort, a thought that has consequences.

After all, any attempt to transform a planet the way some people talk about terraforming Mars would of necessity involve long-lasting effects, and terraforming gases like these and others would be likely to persist not just for centuries but for the duration of the creator civilization’s lifespan. Adjusting a planetary atmosphere should produce a large and discernible spectral signature precisely in the infrared wavelengths LIFE will specialize in, and it’s noteworthy that gases like those studied here have long atmospheric lifetimes and could be replenished.
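The replenishment point can be made quantitative with a simple steady-state budget. As a sketch, assuming first-order removal with a single atmospheric lifetime, the production rate needed to hold a gas at a fixed level is its total atmospheric mass divided by that lifetime. The 1 ppm level matches the concentration Schwieterman mentions; the 10,000-year lifetime is a hypothetical placeholder, not a value from the paper, and real lifetimes differ from gas to gas.

```python
# Steady-state sketch: how fast must a terraforming gas be replenished?
# Assumes first-order loss with one atmospheric lifetime tau, so the loss
# rate is burden / tau; at steady state, production must equal loss.

ATM_MASS_KG = 5.15e18      # approximate mass of Earth's atmosphere, kg
AIR_MOLAR_MASS = 0.02897   # mean molar mass of dry air, kg/mol


def gas_burden_kg(mixing_ratio: float, molar_mass_kg_per_mol: float) -> float:
    """Total mass of a well-mixed trace gas at a given volume mixing ratio."""
    total_moles_air = ATM_MASS_KG / AIR_MOLAR_MASS
    return mixing_ratio * total_moles_air * molar_mass_kg_per_mol


def replenishment_kg_per_year(burden_kg: float, lifetime_years: float) -> float:
    """Production rate needed to hold the burden constant against decay."""
    return burden_kg / lifetime_years


if __name__ == "__main__":
    cf4_molar_mass = 0.0880   # kg/mol for CF4
    burden = gas_burden_kg(1e-6, cf4_molar_mass)  # 1 ppm in an Earth-like atmosphere
    tau = 10_000.0            # HYPOTHETICAL lifetime in years, illustration only
    print(f"burden:   {burden:.3g} kg")
    print(f"resupply: {replenishment_kg_per_year(burden, tau):.3g} kg/yr")
```

With these inputs, 1 ppm of CF₄ in an Earth-like atmosphere amounts to roughly 1.6 × 10¹³ kg; the longer the lifetime, the smaller the upkeep needed to maintain it.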

LIFE will work via direct imaging, but the study also takes in detection through transits by calculating the observing time needed with the James Webb Space Telescope’s instruments as applied to TRAPPIST-1 f. The results make the detection of such gases with our current technologies a clear possibility. As Schwieterman notes, “With an atmosphere like Earth’s, only one out of every million molecules could be one of these gases, and it would be potentially detectable. That gas concentration would also be sufficient to modify the climate.”
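“One out of every million molecules” is a volume mixing ratio of 1 ppm. To make that concrete, here is a rough sketch converting a mixing ratio into a column density (molecules above each square centimeter of surface), which is closer to what a spectrum actually senses. It assumes an Earth-like, well-mixed atmosphere in hydrostatic balance with standard surface pressure and gravity; it is an illustration, not the paper’s radiative-transfer calculation.

```python
# Column density of a well-mixed trace gas in an Earth-like atmosphere.
# Assumes hydrostatic balance: the air column above 1 m^2 weighs P_surface,
# so the number of air molecules per m^2 is P / (m_air * g).

SURFACE_PRESSURE_PA = 101_325.0
GRAVITY_M_S2 = 9.81
AMU_KG = 1.66053906660e-27
MEAN_AIR_MASS_AMU = 28.97


def air_column_per_cm2(pressure_pa: float = SURFACE_PRESSURE_PA,
                       g: float = GRAVITY_M_S2) -> float:
    """Total air molecules above one square centimeter of surface."""
    per_m2 = pressure_pa / (MEAN_AIR_MASS_AMU * AMU_KG * g)
    return per_m2 / 1e4  # 1 m^2 = 10^4 cm^2


def trace_column_per_cm2(mixing_ratio: float) -> float:
    """Column of a trace gas at a given volume mixing ratio (1e-6 = 1 ppm)."""
    return mixing_ratio * air_column_per_cm2()


if __name__ == "__main__":
    print(f"air column: {air_column_per_cm2():.3g} molecules/cm^2")
    print(f"1 ppm gas:  {trace_column_per_cm2(1e-6):.3g} molecules/cm^2")
```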

Indeed, working with transit detections for TRAPPIST-1 f produces positive results with JWST’s MIRI Low Resolution Spectrometer (LRS) and NIRSpec instrumentation (with “surprisingly few transits”). But while transit detections are feasible, transiting planets are comparatively scarce, whereas LIFE’s direct imaging in the infrared can take in numerous nearby stars.
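The scarcity of transiting planets follows from geometry: for a randomly oriented circular orbit, the chance that a planet crosses its star’s disk as seen from Earth is roughly the stellar radius divided by the orbital radius. A minimal sketch, assuming circular orbits and a planet much smaller than its star, shows that an Earth analog around a Sun-like star transits for only about one observer in two hundred.

```python
# Geometric transit probability for a circular orbit: p ~ R_star / a.
# Assumes a randomly oriented orbit and a planet much smaller than its star.

R_SUN_M = 6.957e8          # nominal solar radius, m
AU_M = 1.495978707e11      # astronomical unit, m


def transit_probability(r_star_m: float, a_m: float) -> float:
    """Approximate fraction of random viewing geometries that see a transit."""
    return r_star_m / a_m


if __name__ == "__main__":
    p = transit_probability(R_SUN_M, AU_M)
    print(f"Earth analog around a Sun-like star: {p:.2%}")
```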

From the paper:

We also calculated the MIR [mid infrared] emitted light spectra for an Earth-twin planet with 1, 10, and 100 ppm of CF₄, C₂F₆, C₃F₈, SF₆, and NF₃… and the corresponding detectability of C₂F₆, C₃F₈, and SF₆ with the LIFE concept mission… We find that in every case, the band-integrated S/Ns were >5σ for outer habitable zone Earths orbiting G2V, K6V, or TRAPPIST-1-like (M8V) stars at 5 and 10 pc and with integration times of 10 and 50 days. Importantly, the threshold for detecting these technosignature molecules with LIFE is more favorable than standard biosignatures such as O₃ and CH₄ at modern Earth concentrations, which can be accurately retrieved… indicating meaningfully terraformed atmospheres could be identified through standard biosignatures searches with no additional overhead.
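The quoted results are band-integrated S/Ns at two integration times. Two textbook noise-model relations help in reading such numbers, though they are assumptions here, not the paper’s actual retrieval pipeline: S/N from independent spectral bins combines in quadrature, and in the photon- or background-limited regime S/N grows with the square root of integration time. The per-bin values below are hypothetical.

```python
import math

# Two textbook relations (assumptions, not the paper's pipeline):
# 1) For independent spectral bins, band-integrated S/N adds in quadrature.
# 2) In the photon/background-limited regime, S/N grows as sqrt(time).


def band_integrated_snr(per_bin_snr):
    """Combine independent per-bin S/N values in quadrature."""
    return math.sqrt(sum(s * s for s in per_bin_snr))


def scale_snr_with_time(snr, t_old, t_new):
    """Rescale S/N from integration time t_old to t_new (same units)."""
    return snr * math.sqrt(t_new / t_old)


if __name__ == "__main__":
    bins = [2.0, 3.0, 2.5, 1.5]  # HYPOTHETICAL per-bin S/N across one band
    snr_10d = band_integrated_snr(bins)
    print(f"band S/N at 10 days: {snr_10d:.2f}")
    print(f"band S/N at 50 days: {scale_snr_with_time(snr_10d, 10, 50):.2f}")
```

Under these assumptions, going from 10 to 50 days of integration improves S/N by a factor of √5, or about 2.2.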

Image: Qualitative mid-infrared transmission and emission spectra of a hypothetical Earth-like planet whose climate has been modified with artificial greenhouse gases. Credit: Sohail Wasif/UCR.

The choice of TRAPPIST-1 is sensible, given that the system offers seven rocky planet targets aligned in such a way that transit studies are possible. Indeed, this is one of the most highly studied exoplanetary systems available. But the addition of the LIFE mission’s instrumentation shows that direct imaging in the infrared expands the realm of study well beyond transiting worlds. So whereas CFCs are short-lived and might flag transient industrial activity, the fluorinated gases discussed in this paper are chemically inert and represent potentially long-lived signatures of a terraforming civilization.

The paper is Schwieterman et al., “Artificial Greenhouse Gases as Exoplanet Technosignatures,” Astrophysical Journal Vol. 969, No. 1 (25 June 2024), 20 (full text).

Space Exploration and the Transformation of Time

Every now and then I run into a paper that opens up an entirely new perspective on basic aspects of space exploration. When I say ‘new’ I mean new to me; in the case of today’s paper, the relevant work has been ongoing ever since we began lofting payloads into space. But one aspect of our explorations that hadn’t occurred to me was the obvious question of how we coordinate time between Earth’s surface and craft as distant as Voyager, or moving as close to massive objects as Cassini. We are in the realm of ‘time transformations,’ and they’re critical to the operation of our probes.

Somehow considering all this in an interstellar sense was always much easier for me. After all, if we get to the point where we can push a payload up to relativistic speeds, the phenomenon of time dilation is well known and entertainingly depicted in science fiction all the way back to the 1930s. But I remember reading a paper from Roman Kezerashvili (New York City College of Technology) that analyzed the relativistic effects of a close solar pass upon a spacecraft, the so-called ‘sundiver’ maneuver. Kezerashvili and colleague Justin Vazquez-Poritz showed that without calculating the effects of General Relativity induced by the Sun’s mass at perihelion, the craft’s course could be seriously inaccurate, its destination even missed entirely. Let me quote this:

…we consider a number of general relativistic effects on the escape trajectories of solar sails. For missions as far as 2,550 AU, these effects can deflect a sail by as much as one million kilometers. We distinguish between the effects of spacetime curvature and special relativistic kinematic effects. We also find that frame dragging due to the slow rotation of the Sun can deflect a solar sail by more than one thousand kilometers.
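One way to see how small effects get magnified is to translate the quoted offsets at 2,550 AU into the equivalent pointing error as seen from the Sun. The sketch below uses the small-angle approximation and simply treats each offset as a lateral miss at that distance; this is an illustrative assumption, not the trajectory model Kezerashvili and Vazquez-Poritz used. A million-kilometer miss corresponds to only about half an arcsecond.

```python
import math

AU_M = 1.495978707e11                         # astronomical unit, m
RAD_TO_ARCSEC = 180.0 / math.pi * 3600.0      # radians -> arcseconds


def deflection_angle_rad(offset_m: float, distance_m: float) -> float:
    """Small-angle estimate of the pointing error implied by a lateral offset."""
    return offset_m / distance_m


if __name__ == "__main__":
    # Offsets taken from the abstract quoted above (upper and lower figures).
    cases = (("curvature + kinematics", 1.0e6), ("frame dragging", 1.0e3))
    for label, offset_km in cases:
        theta = deflection_angle_rad(offset_km * 1e3, 2550 * AU_M)
        print(f"{label}: {theta:.2e} rad = {theta * RAD_TO_ARCSEC:.3g} arcsec")
```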

Clearly, what seem like tiny effects get magnified as we examine their consequences on spacecraft moving under differing conditions of velocity and gravity. The measurement of time is a key aspect of this. And even the tiniest adjustments are critical if we are to build communication networks that operate accurately even in so close an environment as that between the Earth and the Moon. Thus the occasion for this musing, a paper from the Jet Propulsion Laboratory’s Slava Turyshev and colleagues that discusses how the effects of gravity and motion can be understood between the Earth and the network of assets we’re building around the Moon and on its surface. Exploration in this space will depend upon synchronizing our tools.

The Turyshev paper puts it this way:

As our lunar presence expands, the challenge of synchronizing an extensive network of assets on the moon and in cis-lunar space with Earth-based systems intensifies. To address this, one needs to establish a common system time for all lunar assets. This system would account for the relativistic effects that impact time measurement due to different gravitational and motion conditions, ensuring precise and efficient operations across cislunar space.

And in fact a recent memorandum from the White House Cislunar Technology Strategy Interagency Working Group was released on April 2 of this year, noting the “policy to establish time standards at and around celestial bodies other than Earth to advance the National Cislunar S&T [Science and Technology] Strategy.” So here is a significant aspect of our growth into a cislunar culture that is growing organically out of our current explorations, and will be critical as we expand deeper into the system. One day we may go interstellar, but we won’t do it without a Solar System-wide infrastructure.

As an avid space buff, I should have been aware of this all along, especially since gravitational time dilation is easily demonstrated. Time runs more slowly closer to a massive object, so a clock on the lunar surface, for example, runs a bit faster than a clock on Earth, and our GPS satellites have to deal with this effect all the time. Clearly, any spacecraft moving away from Earth experiences time in ways that vary according to its velocity and the gravitational fields it encounters during the course of its mission. These effects, no matter how minute, have to be plugged into operational software that adjusts for the variable passage of time.
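GPS makes a good worked example, and the same weak-field arithmetic extends to the Moon. The sketch below assumes circular orbits and mean radii and distances, and it ignores tides, Earth’s oblateness, and effects common to Earth and Moon such as the Sun’s potential. The ground clock uses the IAU constant L_G, which folds Earth’s gravity and rotation on the geoid into one number. Under those simplifications it gives about +38.6 microseconds per day for a GPS clock relative to the ground and about +56 microseconds per day for a clock on the lunar surface, in line with commonly cited estimates.

```python
# Weak-field clock-rate sketch in the geocentric frame:
# fractional rate offset = -(U + v^2/2) / c^2, with U = sum of GM/r (positive).
# Ignores tides, orbital eccentricity, Earth's oblateness, and effects common
# to Earth and Moon (the Sun's potential, their shared orbital motion).

C = 299_792_458.0
GM_EARTH = 3.986004418e14   # m^3/s^2
GM_MOON = 4.9028e12         # m^3/s^2
R_MOON = 1.7374e6           # mean lunar radius, m
EARTH_MOON = 3.844e8        # mean Earth-Moon distance, m
GPS_RADIUS = 2.656e7        # GPS orbital radius, m
L_G = 6.969290134e-10       # IAU constant: geoid potential (gravity + spin) / c^2
SECONDS_PER_DAY = 86_400.0


def rate_offset(potential: float, speed: float) -> float:
    """Fractional clock-rate offset relative to geocentric coordinate time."""
    return -(potential + 0.5 * speed**2) / C**2


def microseconds_per_day(fractional: float) -> float:
    return fractional * SECONDS_PER_DAY * 1e6


# Ground clock on the geoid.
ground = -L_G

# GPS satellite on a circular orbit, where v^2 = GM / r.
gps = rate_offset(GM_EARTH / GPS_RADIUS, (GM_EARTH / GPS_RADIUS) ** 0.5)

# Lunar-surface clock: Earth's potential at lunar distance, the Moon's own
# surface potential, and the Moon's orbital speed around Earth.
moon_speed = ((GM_EARTH + GM_MOON) / EARTH_MOON) ** 0.5
moon = rate_offset(GM_EARTH / EARTH_MOON + GM_MOON / R_MOON, moon_speed)

if __name__ == "__main__":
    print(f"GPS vs ground:  {microseconds_per_day(gps - ground):+.1f} us/day")
    print(f"Moon vs ground: {microseconds_per_day(moon - ground):+.1f} us/day")
```

The GPS figure is a net result: weaker gravity at orbital altitude speeds the satellite clock up by roughly 46 microseconds per day, while its orbital speed slows it by roughly 7.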

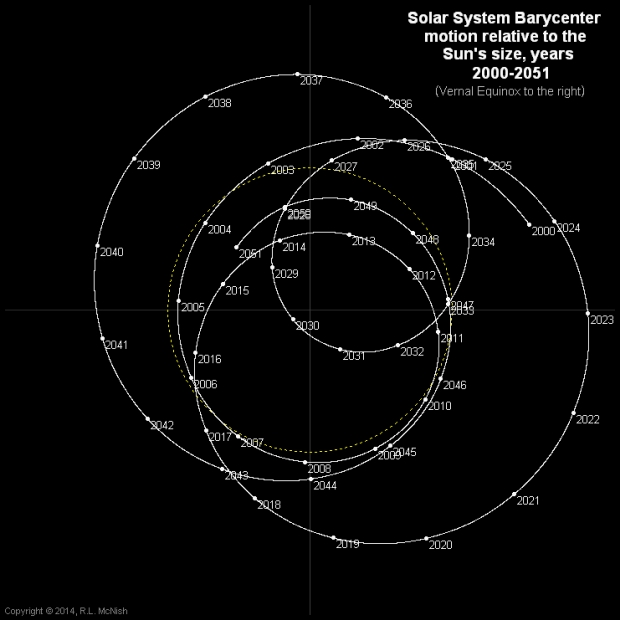

So to move from time and space coordinates in one reference frame (the Earth’s surface) to their counterparts in another (aboard a spacecraft), we need equations that make clocks synchronize accurately and hence enable essential navigation, not to mention communications and scientific measurements. These equations are known in the trade as ‘relativistic time transformations,’ and it’s critical to have a reference system like the Solar System Barycentric coordinate frame (SSB), built around the center of mass of the Solar System itself. This allows accurate trajectory calculations for space navigation.
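At the core of such a transformation, in the weak-field, slow-motion limit that applies throughout the Solar System, is a relation between a clock’s proper time τ and the coordinate time t of the chosen reference system. As a sketch (the standard textbook approximation, not the full IERS expression), with U the Newtonian gravitational potential taken positive (U = GM/r for a single body), v the clock’s speed in that frame, and τ = t at a starting epoch t₀:

```latex
\frac{d\tau}{dt} \approx 1 - \frac{1}{c^{2}}\left( U + \frac{v^{2}}{2} \right),
\qquad
\tau(t) \approx t - \frac{1}{c^{2}} \int_{t_0}^{t} \left( U + \frac{v^{2}}{2} \right) dt'
```

A clock deeper in a gravity well (larger U) or moving faster (larger v) accumulates proper time more slowly; integrating along a spacecraft’s trajectory gives the running offset that operational software has to apply.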

Image: The complexity of establishing reference systems for communications and data return is suggested by movements in the Solar System’s barycenter itself, shown here in a file depicting its own motion. Credit: Larry McNish / via Wikimedia Commons.

As you would guess, the SSB coordinate frame has been around for some time, becoming formalized as we began sending spacecraft to other planetary targets. It was a critical part of mission planning for the early Pioneer probes. Synchronization with resources on Earth occurs when data from a spacecraft are time-stamped using SSB time so that they can be converted into Earth-based time systems. Supervising all this is an international organization called the International Earth Rotation and Reference Systems Service (IERS), which maintains time and reference systems; its Central Bureau is hosted by Germany’s Federal Agency for Cartography and Geodesy (BKG) in Frankfurt, while its Earth Orientation Center is based at the Paris Observatory.

‘Systems’ is in the plural not just because we have an Earth-based time and a Solar System Barycentric coordinate frame, but also because there are other time scales. We use Coordinated Universal Time (UTC) as a global standard, and a familiar one. But there are others. There is, for example, International Atomic Time (TAI, from the French ‘Temps Atomique International’), a standard based on averaging atomic clocks around the world; UTC follows TAI but is adjusted with leap seconds to stay close to Earth’s rotation. There is also Terrestrial Time (TT), defined as TAI plus a fixed 32.184 seconds, which represents idealized time on the surface of the Earth and, unlike UTC, is unaffected by irregularities in Earth’s rotation.
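The offsets linking these three scales are simple constants, at least over a given stretch of time. TT minus TAI is fixed at 32.184 seconds by definition; TAI minus UTC has been 37 seconds since 1 January 2017, but it changes whenever the IERS announces a new leap second, so the sketch below treats it as an assumption valid only from 2017 until the next announcement. Note also that Python’s `datetime` cannot represent the leap second itself (23:59:60).

```python
from datetime import datetime, timedelta

# TT - TAI = 32.184 s exactly (by definition).
# TAI - UTC = 37 s since 2017-01-01 (ASSUMPTION: valid until the next leap second).
TT_MINUS_TAI = timedelta(seconds=32.184)
TAI_MINUS_UTC_SINCE_2017 = timedelta(seconds=37)


def utc_to_tai(utc: datetime) -> datetime:
    """Convert a naive UTC datetime to TAI (dates from 2017 onward only)."""
    if utc < datetime(2017, 1, 1):
        raise ValueError("leap-second table in this sketch starts in 2017")
    return utc + TAI_MINUS_UTC_SINCE_2017


def tai_to_tt(tai: datetime) -> datetime:
    """Convert TAI to Terrestrial Time by adding the fixed offset."""
    return tai + TT_MINUS_TAI


if __name__ == "__main__":
    utc = datetime(2024, 7, 1, 12, 0, 0)
    tt = tai_to_tt(utc_to_tai(utc))
    print("UTC:", utc.isoformat())
    print("TT: ", tt.isoformat())
```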

But we can’t stop there. Universal Time (UT) is tied to the Earth’s actual rotation; determining it involves the observer’s longitude and the polar motion of the Earth, both of which have relevance to celestial navigation and astronomical observations. Barycentric Coordinate Time (TCB, from ‘Temps Coordonné Barycentrique’) is the coordinate time of the reference system centered, as mentioned before, on the Solar System’s barycenter; it corresponds to an idealized clock free of the gravitational fields of the Sun and planets, and so it runs faster than clocks on Earth. Barycentric Dynamical Time (TDB, from ‘Temps Dynamique Barycentrique’) is a rescaled version of TCB that removes this rate difference so as to keep pace, on average, with Terrestrial Time. All of these transformations aim to account for relativistic and gravitational effects to keep observations consistent.
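The TCB/TDB relationship is, by IAU definition (IAU 2006 Resolution B3), a simple linear rescaling. The sketch below encodes that defining relation with Julian Dates in days. It is meant to show the size of the rate difference, not to replace the IERS Conventions or a maintained library such as the IAU’s SOFA routines, and it leaves out the small periodic terms needed to relate TDB to TT on Earth.

```python
# Linear relation between TCB and TDB per the IAU 2006 definition:
#   TDB = TCB - L_B * (JD_TCB - T0) * 86400 s + TDB0
# Here everything is expressed in days (Julian Dates).

L_B = 1.550519768e-8        # fractional rate by which TDB runs slow relative to TCB
T0 = 2443144.5003725        # 1977 January 1, 0h TAI, expressed as a Julian Date
TDB0_SECONDS = -6.55e-5     # small constant offset in the TDB definition, s
SECONDS_PER_DAY = 86_400.0


def tdb_from_tcb(jd_tcb: float) -> float:
    """Return the TDB Julian Date corresponding to a TCB Julian Date."""
    return jd_tcb - L_B * (jd_tcb - T0) + TDB0_SECONDS / SECONDS_PER_DAY


if __name__ == "__main__":
    jd = 2451545.0  # J2000.0, used here as a sample TCB date
    gap_s = (jd - tdb_from_tcb(jd)) * SECONDS_PER_DAY
    print(f"TCB - TDB at J2000.0: {gap_s:.3f} s")
```

At J2000.0 the sketch puts TCB about 11.25 seconds ahead of TDB, a gap that keeps growing by roughly half a second per year.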

These time transformations (i.e., the equations necessary for accounting for these differing and crucial effects) have been a part of our space explorations for a long time, but they hover beneath the surface and rarely make it into the news. Consider the complications of a mission like New Horizons, moving into the outer Solar System and needing to account both for the effects of its own motion and for gravitational time dilation during its encounter with Pluto and the not insignificant mass of Charon, all coordinated so that data returning to Earth can be precisely understood and referenced to Earth’s clocks.

The Turyshev paper focuses on the transformations between Barycentric Dynamical Time and time on the surface of the Moon, and the needed expressions to synchronize Terrestrial Time with Lunar Time (TL). We’re going to be building a Solar System-wide infrastructure one of these days, an effort that is already underway with the gradual push into cislunar space that will demand these kinds of adjustments. These relativistic corrections will be needed to work in this environment with complete coordination between Earth’s surface, the surface of the Moon, and the Solar System’s barycenter.

The paper develops a new Luni-centric Coordinate Reference System (LCRS). We are talking about a lunar presence involving numerous landers and rovers in addition to orbiting craft. A common time reference ensures accurate timing among all these vehicles and also allows autonomous systems to function while maintaining communication and data transmission. Moreover, the LCRS is needed for navigation:

An LCRS is vital for precise navigation on the Moon. Unlike Earth, the lunar surface presents unique challenges, including irregular terrain and the absence of a global magnetic field. A dedicated reference system allows for precise positioning and movement of landers and rovers, ensuring they can target and reach specific, safe landing sites. This is particularly important for resource utilization, such as locating and extracting water ice from the lunar poles, which requires high positional accuracy.
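The navigation argument comes down to the fact that radio position fixes are really timing measurements. A minimal sketch, assuming simple one-way ranging in which any unmodeled clock offset shows up directly as a range error (δr = c·δt), shows the scale: a nanosecond is about 30 centimeters. The 56-microsecond case uses a commonly cited estimate of how far a lunar-surface clock drifts from an Earth clock per day; left uncorrected, one day of that drift would corrupt ranges by kilometers.

```python
# One-way ranging sketch: an unmodeled clock error dt becomes a range error c*dt.

C = 299_792_458.0  # speed of light, m/s


def range_error_m(clock_error_s: float) -> float:
    """Range error in meters caused by a clock offset in seconds."""
    return C * clock_error_s


if __name__ == "__main__":
    cases = (
        ("1 nanosecond", 1e-9),
        ("1 microsecond", 1e-6),
        ("56 microseconds (approx. one day of lunar clock drift)", 56e-6),
    )
    for label, dt in cases:
        print(f"{label}: {range_error_m(dt):,.1f} m")
```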

Precise location information through these time and position transformations will be, clearly, a necessary step wherever we go in the Solar System, and a vital part of shaping the activity that will build that system-wide infrastructure so necessary if we are to seriously consider future probes into the Oort Cloud and to other stars. Turyshev and team refer to all this as the establishment of a ‘geospatial context’ within which the placement of instruments can be optimized, but the work also becomes vital for everything from the creation of bases to necessary navigational tools. For the immediate future, we are firming up the steps that will give us a foothold on the Moon.

The paper is Turyshev et al., “Time transformation between the solar system barycenter and the surfaces of the Earth and Moon,” now available as a preprint. If you want to really dig into time transformations, the IERS Conventions document is available online. The Roman Kezerashvili paper cited above is R. Ya. Kezerashvili and J. F. Vazquez-Poritz, “Escape Trajectories of Solar Sails and General Relativity,” Physics Letters B Volume 681, Issue 5 (16 November 2009), pp. 387-390 (abstract).