Centauri Dreams

Imagining and Planning Interstellar Exploration

Les Shepherd, RIP

There are so many things to say about Les Shepherd, who died on Saturday, February 18, that I scarcely know where to begin. Born in 1918, Leslie Robert Shepherd was a key player in the creation of the International Astronautical Federation (IAF), becoming its third president in 1957 — this was at the 8th Congress in Barcelona just a week after the launch of Sputnik — and in 1962 he would be called upon to serve as its president for a second time. A specialist in nuclear fission who became deeply involved in nuclear reactor technology, Shepherd was one of the founding members of the International Academy of Astronautics (IAA), and served as chairman of the Interstellar Space Exploration Committee, which met for the first time at the 1984 IAF Congress in Lausanne, Switzerland.

The IAF Congress in Stockholm the following year was the scene of the first ISEC symposium on interstellar flight, one whose papers were subsequently collected in one of the famous ‘red cover’ issues of the Journal of the British Interplanetary Society. But let’s go back a bit. Those ‘red cover’ issues might never have occurred were it not for the labors of Shepherd, who was an early member and, in 1954, the successor to Arthur C. Clarke as chairman of the organization. It was in 1952 that Shepherd’s paper “Interstellar Flight” first appeared in JBIS, a wide-ranging look at the potential for deep space journeys and their enabling technologies. He served again as BIS chairman (later president) between 1957 and 1960 and from 1965 to 1967.

I first learned about Shepherd’s career through conversations with Giancarlo Genta at the Aosta interstellar conference several years ago, a fitting place given his long association and friendship with Italian interstellar luminaries Giovanni Vulpetti, Claudio Maccone and Genta himself. It was only later that I learned that Shepherd was the organizer and first chairman of the Aosta sessions, which continue today. When I heard of Shepherd’s death, I wrote to both Vulpetti and Maccone for their thoughts, because from everything I could determine, the “Interstellar Flight” paper was one of the earliest scientific studies on how we might reach the stars. I considered it a driver for future investigations and suspected that it had a powerful influence on the next generation of scientists.

Dr. Vulpetti was able to confirm the importance of the work, which looked at nuclear fission and fusion as well as ion propulsion and went on to ponder the possibilities of antimatter. The latter is significant given how little antimatter propulsion had been studied at the time. Vulpetti goes on to say:

It may be interesting to consider the state of particle physics in which the paper of 1952 was written… For understanding some key aspects, we have to remember (a) that Dr. Shepherd was born in 1918, (b) the positron was discovered in 1932 by C. D. Anderson (USA), and (c) the antiproton was found in 1955 by E. Segré (Italy). Thus, [Shepherd] was aware of the prediction of antiproton existence made by P.A.M. Dirac in the late 1920s and 1930, but – when he wrote the paper – there existed no experimental evidence about the antiproton. Nevertheless, Dr. Shepherd realized that the matter-antimatter annihilation might have the capability to give a spaceship a high enough speed to reach nearby stars. In other words, the concept of interstellar flight (by/for human beings) may go out from pure fantasy and (slowly) come into Science, simply because the Laws of Physics would, in principle, allow it! This fundamental concept of Astronautics was accepted by investigators in the subsequent three decades, and extended/generalized just before the end of the 2nd millennium.

That the 1952 paper was ground-breaking should not minimize the contribution Shepherd made in other papers, including his 1949 and later collaborations with rocket engineer Val Cleaver on the uses of atomic energy in rocket technologies that not only examined nuclear-thermal propulsion but looked as well at the kind of nuclear-electric schemes we are now seeing actively used in operations like the Dawn mission to Vesta and Ceres. But it may be that his thoughts on antimatter in “Interstellar Flight” were his most provocative at the time — he published that paper a year before Eugen Sänger’s famous paper on photon rockets. Vulpetti adds that his work revealed the “important relationship between the mass of an annihilation-based rocket spaceship and its payload mass. This was confirmed in the 1970s, and generalized in the 1980s.”

Reading through the pages of “Interstellar Flight” is a fascinating exercise. Shepherd must confront not only the immense distances involved but the fact that at the time, we had no knowledge of any planetary systems other than our own. Yet even in this very early era of astronautics he is thinking through the implications of future technologies, and I suspect that a few science fiction stories may also have crossed his path as he pondered the likelihood of ‘generation ships’ that could take thousands of people on such journeys. From the paper:

It is obvious that a vehicle carrying a colony of men to a new system should be a veritable Noah’s Ark. Many other creatures besides man might be needed to colonize the other world. Similarly, a wide range of flora would need to be carried. A very careful control of population would be required, particularly in view of the large number of generations involved. This would apply alike to humankind and all creatures transported. Life would go on in the vehicle in a closed cycle, it would be a completely self-contained world. For this and many other obvious reasons the vehicle would assume huge proportions: it would, in fact, be a very small planetoid, weighing perhaps a million tons excluding the dead weight of propellants and fuel. Even this would be pitifully small, but clever design might make it a sufficiently varied world to make living bearable.

So wide-ranging is the “Interstellar Flight” paper that it also takes in relativistic flight (here there is no option, he believes, but antimatter for propulsion) and time dilation as experienced by the crew, and goes from there to an examination of the interstellar medium and the problems it could present to such a fast-moving vehicle. Shepherd saw early on that collisions with dust particles and interstellar gas had to be considered if a vehicle were moving at a substantial percentage of the speed of light, working out that at velocities of 200,000 kilometers per second or more, the oncoming flux would be about 10¹¹ times as intense as that found at the top of the Earth’s atmosphere. He saw that a considerable mass of material would have to shield the living quarters of any spacecraft moving at these velocities. Project Daedalus would, in the 1970s, re-examine the problem and consider various mechanisms for shielding its unmanned probe.
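To get a feel for the time dilation involved, here is a minimal sketch that evaluates the Lorentz factor at the 200,000 kilometers per second mentioned above. The function name and the ten-year example are my own illustrative choices, not anything taken from Shepherd's paper.

```python
import math

C_KM_S = 299_792.458  # speed of light in km/s (exact by definition)


def lorentz_factor(v_km_s: float) -> float:
    """Return gamma = 1 / sqrt(1 - (v/c)^2) for a speed given in km/s."""
    beta = v_km_s / C_KM_S
    if not 0 <= beta < 1:
        raise ValueError("speed must be non-negative and below c")
    return 1.0 / math.sqrt(1.0 - beta * beta)


speed = 200_000.0  # km/s, the figure quoted in the post
gamma = lorentz_factor(speed)
ship_years = 10.0 / gamma  # shipboard time elapsed during 10 Earth-frame years

print(f"beta  = {speed / C_KM_S:.3f}")
print(f"gamma = {gamma:.3f}")
print(f"ship years per 10 Earth-frame years = {ship_years:.2f}")
```

At this speed gamma comes out at roughly 1.34, so shipboard clocks would run about 25 percent slow relative to clocks on Earth.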

Leslie Shepherd had many collaborators and, as Dr. Vulpetti told me, encouraged wide studies in propulsion systems for deep space exploration (Vulpetti himself was one of Shepherd’s collaborators — I give the citation below). I thank Giovanni Vulpetti and Claudio Maccone for their thoughts on Shepherd. Thanks also to Kelvin Long and Robert Parkinson of the BIS for helpful background information. But we’re not done: Dr. Maccone was kind enough to send along some personal recollections of Shepherd and his work that I want to run tomorrow — I had originally intended to publish them today but they give such a good sense of the man that I want to run them in their entirety as a tribute to a great figure in astronautics whose loss is deeply felt.

Les Shepherd’s ground-breaking paper on interstellar propulsion is “Interstellar Flight,” JBIS, Vol. 11, 149-167, July 1952. His 1994 paper with Giovanni Vulpetti is “Operation of Low-Thrust Nuclear-Powered Propulsion Systems from Deep Gravitational Energy Wells,” IAA-94-A.4.1.654, IAF Congress, Jerusalem, October 1994.

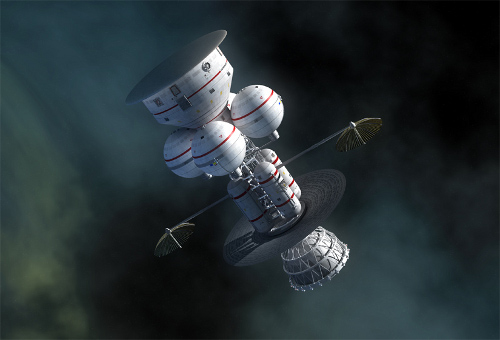

Project Icarus: Contemplating Starship Design

Andreas Tziolas, current leader of Project Icarus, gave a lengthy interview recently to The Atlantic’s Ross Andersen, who writes about starship design in Project Icarus: Laying the Plans for Interstellar Travel. Icarus encounters continuing controversy over its name, despite the fact that the Icarus team has gone to some lengths to explain the choice. Tziolas notes the nod to Project Daedalus leader Alan Bond, who once referred to “the sons of Daedalus, perhaps an Icarus, that will have to come through and make this a much more feasible design.”

I like that sense of continuity. After all, Icarus is the follow-on to the British Interplanetary Society’s Project Daedalus of the 1970s, the first serious attempt to engineer a starship. I also appreciate the Icarus team’s imaginative re-casting of the Icarus myth, which imagines a chastened Icarus washed up on a desert island planning to forge wings out of new materials so he can make the attempt again. But what I always fall back on is this quote from Sir Arthur Eddington which, since I haven’t run it for two years, seems ready for a repeat appearance:

In ancient days two aviators procured to themselves wings. Daedalus flew safely through the middle air and was duly honoured on his landing. Icarus soared upwards to the sun till the wax melted which bound his wings and his flight ended in fiasco. The classical authorities tell us, of course, that he was only “doing a stunt”; but I prefer to think of him as the man who brought to light a serious constructional defect in the flying-machines of his day. So, too, in science. Cautious Daedalus will apply his theories where he feels confident they will safely go; but by his excess of caution their hidden weaknesses remain undiscovered. Icarus will strain his theories to the breaking-point till the weak joints gape. For the mere adventure? Perhaps partly, this is human nature. But if he is destined not yet to reach the sun and solve finally the riddle of its construction, we may at least hope to learn from his journey some hints to build a better machine.

I love the bit about straining theories to the breaking point, and also ‘hints to build a better machine.’ Anyway, those unfamiliar with the Icarus project can use the search engine here to find a surfeit of prior articles, or check the Icarus Interstellar site for still more. You’ll also get the basics from the Andersen interview, which goes into numerous issues, not least of which is propulsion. Tziolas notes that Project Icarus has focused on fusion, although ‘the flavor of fusion is still up for debate.’ Seen as an extension of the Daedalus design, fusion is a natural choice here, because what Icarus is attempting to do is to re-examine what Daedalus did in light of more modern developments. But the He3 demanded by Daedalus is a problem because it would involve a vast operation to harvest the He3 from a gas giant’s atmosphere.

Image: Icarus project leader Andreas Tziolas. Credit: Icarus Interstellar.

As the team studies fusion alternatives, other options persist. Beamed propulsion strikes me as a solid contender if you’re in the business of starship design in a world where sustained fusion has yet to be demonstrated in the laboratory, much less in the tremendously demanding environment of a spacecraft. We’ve already had solar sails deployed, the Japanese IKAROS being the pathfinder, and laboratory work has likewise demonstrated that beamed propulsion via microwave or laser can drive a sail. But beam divergence is a problem, which is why Robert Forward envisioned giant lenses in the outer system demanding a robust space manufacturing capability. So the dismaying truth is that at present, both the fusion and beamed sail options look to be not only beyond our engineering, but well beyond the wildest dreams of our budgets.

Where we are clearly making the most progress in interstellar terms is in the choice of a destination. Obviously, we lack a current target, but within the next two decades it is well within our capabilities to launch the kind of ‘planet-finder’ spacecraft that can not only home in on an Earth analogue around another star but also study its atmosphere. It would be all to the good if we found that blue and green world we’re hoping for orbiting a nearby system like Centauri B, but we’re going to be learning very soon (depending on what gets budgeted for and when) which nearby stars have planets that might be suitable targets. Icarus is not just looking for any old rock — the goal is to design a craft that could reach a world that could be habitable for humans.

Image: One vision of the Icarus craft, by the superb space artist Adrian Mann.

Why that criterion? Survival of the species is a serious interstellar motivation. Tziolas asks whether, if humanity becomes capable of going to the stars and chooses not to, it wouldn’t deserve a Darwin Award, the kind of achievement that marks its recipient as doomed. But motivations cover a wide range. I’ve written about the human urge to explore on many occasions, and Tziolas talks about pushing back technological boundaries as another prime driver. Hard problems, in other words, drive us to push the envelope in terms of solutions:

In order to achieve interstellar flight, you would have to develop very clean and renewable energy technologies, because for the crew, the ecosystem that you launch with is the ecosystem you’re going to have for at least a hundred years. With our current projections, we can’t get this kind of journey under a hundred years. So in developing the technologies that enable interstellar flight, you could serendipitously develop the technologies that could help clean up the earth, and power it with cheap energy. If you look toward the year 2100, and assume that the 100 Year Starship Study has been prolific, and that Project Icarus has been prolific, at a minimum we’d have break-even fusion, which would give us abundant clean energy for millennia. No more fossil fuels.

And let’s not forget ongoing miniaturization, also a prime player in any starship technology:

We’d also have developed nanotechnology to the point where any type of technology that you have right now, anything technology-based, will be able to function the same way it does now, but it won’t have any kind of footprint, it will only be a square centimeter in size. Some people have characterized that as “nano-magic,” because everything around you will appear magical. You wouldn’t be able to see the structures doing it, but there would be light coming out of the walls, screens that are suspended that you can move around any surface, sensors everywhere — everything would be extremely efficient.

This is a lively and informative interview, one that circles back to the need to drive a shift in cultural attitudes as a necessary part of any long-haul effort. Some of this is simply practical — the creative souls who volunteered their engineering and scientific skills on Daedalus are retiring and some have already passed away. A new design requires a new generation of interstellar engineers, one recruited from that subset of the population that continues to take the long view of history, acknowledging that without the early and incremental steps, a great result cannot occur.

FTL Neutrinos: Closing In on a Solution

The news that the faster-than-light neutrino results announced to such widespread interest by the OPERA collaboration have now been explained has been spreading irresistibly around the Internet. But the brief piece in ScienceInsider that broke the news was stretching a point with a lead reading “Error Undoes Faster-Than-Light Neutrino Results.” For when you read the story, you see that a fiber optic cable connection is a possible culprit, though as yet an unconfirmed one.

Sean Carroll (Caltech) blogged on Cosmic Variance that while he wanted to pass the news along, he was reserving judgment until a better-sourced statement came to hand. I’ve thought since the beginning that a systematic error would explain the ‘FTL neutrino’ story, but I still was waiting for something with more meat on it than the ScienceInsider news. It came later in the day with an official CERN news release, and this certainly bears quoting:

The OPERA collaboration has informed its funding agencies and host laboratories that it has identified two possible effects that could have an influence on its neutrino timing measurement. These both require further tests with a short pulsed beam.

So we have not just one but two possibilities here, both with ramifications for the neutrino timing measurements and both needing further testing. And let’s go on with the news release:

If confirmed, one would increase the size of the measured effect, the other would diminish it. The first possible effect concerns an oscillator used to provide the time stamps for GPS synchronizations. It could have led to an overestimate of the neutrino’s time of flight. The second concerns the optical fibre connector that brings the external GPS signal to the OPERA master clock, which may not have been functioning correctly when the measurements were taken. If this is the case, it could have led to an underestimate of the time of flight of the neutrinos. The potential extent of these two effects is being studied by the OPERA collaboration. New measurements with short pulsed beams are scheduled for May.

Image: Detectors of the OPERA (Oscillation Project with Emulsion-tRacking Apparatus) experiment at the Italian Gran Sasso underground laboratory. Credit: CERN/AFP/Getty Images.

We may well be closing in on an explanation for a result many scientists had found inconceivable. Here’s a BBC story on the possibility of trouble with the oscillator and/or an issue with the optical fiber connection. We learn here that a new measurement of the neutrino velocity will be taken in 2012, taking advantage of international facilities ranging from CERN and the Gran Sasso laboratory in Italy to Fermilab and the Japanese T2K. The story quotes Alfons Weber (Oxford University), who is working on the Minos effort to study the neutrino measurements at Fermilab:

“I can say that Minos will quite definitely go ahead… We’ve already installed most of the equipment we need to make an accurate measurement. Even if Opera now publish that ‘yes, everything is fine’, we still want to make sure that we come up with a consistent, independent measurement, and I assume that the other experiments will go forward with this as well.”

So this is where we are: An anomalous and extremely controversial result is being subjected to a variety of tests to find out what caused it. If I were a betting man, I would put a great deal of money on the proposition that the FTL results will eventually be traced down to something as mundane as the optical fiber connector that is now the subject of so much attention. But we’ll know that when it happens, and this is the way science is supposed to work. OPERA conducted numerous measurements over a three-year period before announcing the FTL result. Let’s now give the further work time to sort out what really happened so we can put this issue to rest.

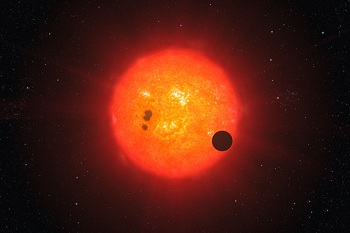

M-Dwarfs: A New and Wider Habitable Zone

I want to work a new paper on red dwarf habitability in here because it fits in so well with yesterday’s discussion of the super-Earth GJ1214b. The latter orbits an M-dwarf in Ophiuchus that yields a hefty 1.4 percent transit depth, meaning scientists have a strong lightcurve to work with as they examine this potential ‘waterworld.’ In transit terms, red dwarfs, much smaller and cooler than the Sun, are compelling exoplanet hosts because any habitable worlds around them would orbit close to their star, making transits frequent.

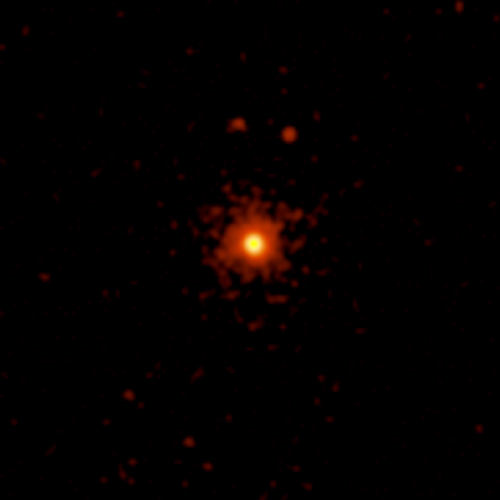

When I first wrote about red dwarfs and habitability in my Centauri Dreams book, it was in connection with the possibilities around Proxima Centauri, but of course we can extend the discussion to M-dwarfs anywhere, this being the most common type of star in the galaxy (leaving brown dwarfs out of the equation until we have a better idea of their prevalence). Manoj Joshi and Robert Haberle had published a paper in 1997 that described their simulations for tidally locked planets orbiting red dwarf stars, findings that held open the possibility of atmospheric circulation moderating temperatures on the planet’s dark side. There seemed at least some possibility for extraterrestrial life on such a world, although the prospect remains controversial.

Image: X-ray observations of Proxima Centauri, the nearest star to the Sun, have shown that its surface is in a state of turmoil. Flares, or explosive outbursts occur almost continually. This behavior can be traced to the star’s low mass, about a tenth that of the Sun. In the cores of low mass stars, nuclear fusion reactions that convert hydrogen to helium proceed very slowly, and create a turbulent, convective motion throughout their interiors. This motion stores up magnetic energy which is often released explosively in the star’s upper atmosphere where it produces flares in X-rays and other forms of light. X-rays from Proxima Centauri are consistent with a point-like source. The extended X-ray glow is an instrumental effect. The nature of the two dots above the image is unknown – they could be background sources. Credit: NASA/CXC/SAO.

A lot of work has been done on M-dwarfs and habitability in the years since, and we also have the problem of this class of stars emitting flares of X-ray or ultraviolet radiation, making the prospects for life still uncertain. It would be helpful, then, if we could find a way to back a planet off from its host star while still allowing it to be habitable. The flare problem would be partially mitigated, and tidal lock might not be a factor. Joshi, now studying planetary atmospheric models at the University of East Anglia, has recently published a new paper with Haberle (University of Reading) arguing that the habitable zone around M-dwarfs may actually extend as much as 30 percent further out from the parent star than had been previously thought.

At issue is the reflectivity of ice and snow. M-dwarfs emit a much greater fraction of their radiation at wavelengths longer than 1 μm than the Sun does, a part of the spectrum where the reflectivity (albedo) of snow and ice is smaller than at visible light wavelengths. The upshot is that more of the long-wave radiation emitted by these stars will be absorbed by the planetary surface instead of being reflected from it, thus lowering the average albedo and keeping the planet warmer. Joshi and Haberle modeled the reflectivity of ice and snow on simulated planets around Gliese 436 and GJ 1214, finding both the snow and ice albedos to be significantly lower given these constraints.
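The claim that M-dwarfs put out much more of their light beyond 1 μm can be illustrated by treating each star as an ideal blackbody, which real stars only approximate. The sketch below integrates the Planck function numerically. The two temperatures (5772 K for the Sun, 3000 K for a generic M-dwarf) are my own illustrative assumptions, not values from the Joshi and Haberle paper, and the function name is hypothetical.

```python
import math

HC_OVER_K_UM_K = 14_387.77  # second radiation constant hc/k, in micrometre-kelvin


def fraction_longward(wavelength_um: float, temp_k: float, steps: int = 20_000) -> float:
    """Fraction of a blackbody's total emission at wavelengths longer than wavelength_um.

    With x = hc / (lambda k T), the fraction equals
    (15 / pi^4) * integral from 0 to x0 of x^3 / (e^x - 1) dx,
    because longer wavelengths correspond to smaller x.
    """
    x0 = HC_OVER_K_UM_K / (wavelength_um * temp_k)
    dx = x0 / steps
    total = 0.0
    for i in range(steps):
        x = (i + 0.5) * dx  # midpoint rule; never evaluates the integrand at x = 0
        total += x ** 3 / math.expm1(x)
    return (15.0 / math.pi ** 4) * total * dx


sun_fraction = fraction_longward(1.0, 5772.0)    # assumed solar effective temperature
dwarf_fraction = fraction_longward(1.0, 3000.0)  # assumed, illustrative M-dwarf temperature

print(f"Sun-like blackbody, fraction beyond 1 um: {sun_fraction:.2f}")
print(f"M-dwarf blackbody,  fraction beyond 1 um: {dwarf_fraction:.2f}")
```

Under these assumptions a bit more than a quarter of the Sun-like output falls beyond 1 μm, against nearly three quarters for the cooler star, which is the contrast the albedo argument relies on.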

The finding has no bearing on the inner edge of the habitable zone, as the paper notes:

The effect considered here should not move the inner edge of the habitable zone, usually considered as the locus of orbits where loss rates of water become significant to dry a planet on geological timescales (Kasting et al 1993), away from the parent M-star. This is because when a planet is at the inner edge of the habitable zone, surface temperatures should be high enough to ensure that ice cover is small. For a tidally locked planet, this implies that ice is confined to the dark side that perpetually faces away from the parent star; such ice receives no stellar radiation, which renders albedo effects unimportant.

The dependence of ice and snow albedo on wavelength is small for wavelengths shorter than 1 μm, which is where the Sun emits most of its energy, so we see little effect on our own climate. But the longer wavelengths emitted by red dwarfs could keep snow and ice-covered worlds warmer than we once thought. How various atmospheric models would affect the absorption of the star’s light is something that will need more detailed work, say the authors, but they consider their extension of the outer edge of the habitable zone to be a robust conclusion.

The paper is Joshi and Haberle, “Suppression of the water ice and snow albedo feedback on planets orbiting red dwarf stars and the subsequent widening of the habitable zone,” accepted by Astrobiology (preprint).

A Waterworld Around GJ1214

I love the way Zachory Berta (Harvard-Smithsonian Center for Astrophysics) describes his studies of the transiting super-Earth GJ1214b. Referring to his team’s analysis of the planet’s atmosphere, Berta says “We’re using Hubble to measure the infrared color of sunset on this world.” And indeed they have done just this, discovering a spectrum that is featureless over a wide range of wavelengths, allowing them to deduce that the planet’s atmosphere is thick and steamy. The conclusion most consistent with the data is a dense atmosphere of water vapor.

Discovered in 2009 by the MEarth project, GJ1214b has a radius 2.7 times Earth’s and a mass 6.5 times that of our planet. It’s proven to be a great catch, because its host star, an M-dwarf in the constellation Ophiuchus, offers up a large 1.4 percent transit depth — this refers to the fractional change in brightness as the planet transits its star. Transiting gas giants, for example, usually have transit depths somewhere around 1 percent, while the largest transit depth yet recorded belongs to HD 189733b, a ‘hot Jupiter’ in Vulpecula that shows a depth of approximately 3 percent.
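For readers who want to see where these percentages come from, a rough sketch: if we ignore limb darkening and treat the planet as an opaque disc, the transit depth is simply the square of the planet-to-star radius ratio. The code below uses the 2.7 Earth-radius and 1.4 percent figures from this post. The radius conversion constants are approximate values I am supplying, and the function names are hypothetical.

```python
import math

R_SUN_IN_EARTH_RADII = 109.2     # approximate solar radius in Earth radii
R_JUPITER_IN_EARTH_RADII = 11.2  # approximate Jupiter radius in Earth radii


def transit_depth(planet_radius: float, star_radius: float) -> float:
    """Fractional dimming for an opaque disc crossing a uniform stellar disc.

    Both radii must be in the same units.
    """
    return (planet_radius / star_radius) ** 2


def star_radius_from_depth(planet_radius: float, depth: float) -> float:
    """Invert the depth relation to estimate the stellar radius."""
    return planet_radius / math.sqrt(depth)


# GJ1214b: radius 2.7 Earth radii, transit depth 1.4 percent (values from the post)
star_r_earth = star_radius_from_depth(2.7, 0.014)
star_r_sun = star_r_earth / R_SUN_IN_EARTH_RADII

# A Jupiter-size planet crossing a Sun-size star, for comparison
jupiter_depth = transit_depth(R_JUPITER_IN_EARTH_RADII, R_SUN_IN_EARTH_RADII)

print(f"Implied radius of GJ1214: {star_r_sun:.2f} solar radii")
print(f"Jupiter-Sun transit depth: {jupiter_depth:.3%}")
```

The implied stellar radius is about a fifth that of the Sun, which is why a planet less than three times the size of Earth can produce a deeper transit than a Jupiter-size planet does across a Sun-like star.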

Image: This artist’s impression shows how the super-Earth around the nearby star GJ1214 may look. Discovered by the MEarth project and investigated further by the HARPS spectrograph on ESO’s 3.6-metre telescope at La Silla, GJ1214b has now been the subject of close analysis using the Hubble Space Telescope, allowing researchers to learn not only about the nature of its atmosphere but also its internal composition. Credit: ESO/L. Calçada.

GJ1214b is also near enough (13 parsecs, or about 40 light years) that Berta’s follow-up studies on its atmosphere using the Hubble Space Telescope’s WFC3 instrument have proven fruitful. What the researchers are looking at is the tiny fraction of the star’s light that passes through the planet’s upper atmosphere before reaching us. The variations of the transit depth as a function of wavelength make up the planet’s transmission spectrum, which the team is able to use to examine the mean molecular weight of the planet’s atmosphere. Earlier work on GJ1214b’s atmosphere had been unable to distinguish between water in the atmosphere and a worldwide haze, but the new work takes us a good way farther and into the planet’s internal structure.
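Why should a transmission spectrum say anything about mean molecular weight? The size of spectral features scales with the atmospheric scale height, H = kT / (μ m_u g), so a heavier gas gives a more compact atmosphere and a flatter spectrum. The sketch below is a back-of-the-envelope estimate only. It uses the mass, radius and 230 degrees Celsius temperature quoted in this post, assumes an isothermal atmosphere, and compares pure H2 with pure H2O, which are my own simplified end cases and not the models used by Berta's team.

```python
K_B = 1.380649e-23       # Boltzmann constant, J/K
M_U = 1.66053907e-27     # atomic mass constant, kg
G_EARTH = 9.81           # Earth's surface gravity, m/s^2


def surface_gravity(mass_earths: float, radius_earths: float) -> float:
    """Surface gravity in m/s^2, scaling Earth's value by M / R^2."""
    return G_EARTH * mass_earths / radius_earths ** 2


def scale_height(temp_k: float, mean_molecular_weight: float, gravity: float) -> float:
    """Isothermal scale height in metres: H = k T / (mu * m_u * g)."""
    return K_B * temp_k / (mean_molecular_weight * M_U * gravity)


gravity = surface_gravity(6.5, 2.7)   # mass and radius from the post
temp_k = 230.0 + 273.15               # estimated temperature from the post

h_hydrogen = scale_height(temp_k, 2.016, gravity)   # pure H2 (illustrative end case)
h_water = scale_height(temp_k, 18.015, gravity)     # pure H2O (illustrative end case)

print(f"H2 scale height:  {h_hydrogen / 1000:.0f} km")
print(f"H2O scale height: {h_water / 1000:.0f} km")
print(f"ratio: {h_hydrogen / h_water:.1f}")
```

On these assumptions a hydrogen atmosphere would be puffed up roughly nine times more than a steam atmosphere, so its spectral features should be correspondingly larger. A featureless spectrum therefore points toward the heavier gas, or toward something like haze masking the features.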

The reason: A dense atmosphere rules out models that explain the radius of GJ1214b by the presence of a low-density gas layer. The researchers take this to its logical conclusion:

Such a constraint on GJ1214b’s upper atmosphere serves as a boundary condition for models of bulk composition and structure of the rest of the planet. It suggests GJ1214b contains a substantial fraction of water throughout the interior of the planet in order to obviate the need for a completely H/He- or H2-dominated envelope to explain the planet’s large radius. A high bulk volatile content would point to GJ1214b forming beyond the snow line and migrating inward, although any such statements about GJ1214b’s past are subject to large uncertainties in the atmospheric mass loss history…

If you examine the density of this planet (known because we have good data on its mass and size), you get about 2 grams per cubic centimeter, a figure that suggests GJ1214b has much more water than the Earth and a good deal less rock (Earth’s average density is 5.5 g/cm³). We can deduce a fascinating internal structure, one in which, as Berta says, “The high temperatures and high pressures would form exotic materials like ‘hot ice’ or ‘superfluid water’ – substances that are completely alien to our everyday experience.”
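The density figure is easy to check from the mass and radius given earlier, since bulk density scales as mass divided by radius cubed. This is a minimal sketch using the post's values of 6.5 Earth masses and 2.7 Earth radii. The Earth density constant and the function name are my own additions.

```python
EARTH_MEAN_DENSITY = 5.51  # g/cm^3, approximate mean density of Earth


def bulk_density(mass_earths: float, radius_earths: float) -> float:
    """Mean density in g/cm^3 for a planet given in Earth masses and Earth radii."""
    return EARTH_MEAN_DENSITY * mass_earths / radius_earths ** 3


gj1214b_density = bulk_density(6.5, 2.7)
print(f"GJ1214b mean density: {gj1214b_density:.2f} g/cm^3")
```

The result lands a little under 2 g/cm³, about a third of Earth's mean density and roughly twice that of liquid water, consistent with the figure quoted above.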

We wind up with a waterworld enveloped in a thick atmosphere, one orbiting its primary every 38 hours at a distance of some 2 million kilometers, with an estimated temperature of 230 degrees Celsius. I was delighted to see that Berta and colleagues have investigated the possibility of exomoons around this planet, looking for “shallow transit-shaped dimmings or brightenings offset from the planet’s transit light curve.” The team believes no moon would survive further than 8 planetary radii from GJ1214b, and indeed they find no evidence for Ganymede-size moons or greater, but the paper is quick to note that:

Due to the many possible configurations of transiting exomoons and the large gaps in our WFC3 light curve, our non-detection of moons does not by itself place strict limits on the presence of exo-moons around GJ1214b.

All of which is true, but what a pleasure to see the hunt for exomoons continuing to heat up.

The paper is Berta et al., “The Flat Transmission Spectrum of the Super-Earth GJ1214b from Wide Field Camera 3 on the Hubble Space Telescope,” accepted for publication in The Astrophysical Journal (preprint).

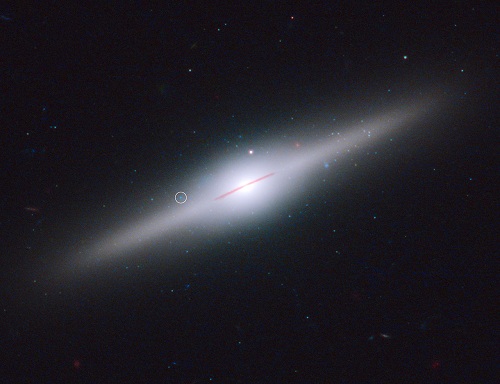

Black Hole Flags Galactic Collision

HLX-1 (Hyper-Luminous X-ray source 1) is thought to be a black hole, one that’s a welcome discovery for astronomers trying to puzzle out the mysteries of black hole formation. Located roughly 290 million light years from Earth and situated toward the edge of a galaxy called ESO 243-49, this black hole looks to be some 20,000 times the mass of the Sun, which makes it mid-sized when compared with the supermassive black holes at the center of many galaxies. The latter can have masses up to billions of times more than the Sun — the black hole at the center of our own galaxy is thought to comprise about four million solar masses.

Just how a supermassive black hole forms remains a subject for speculation, but study of HLX-1 is giving us clues that point in the direction of a series of mergers of small and mid-sized black holes. For it turns out that HLX-1, discovered by Sean Farrell (Sydney Institute for Astronomy in Australia and University of Leicester, UK) and team at X-ray wavelengths, shows evidence for a cluster of young, hot stars surrounding the black hole itself. Working in ultraviolet, visible and infrared light using the Hubble Space Telescope as well as in X-rays using the Swift satellite, the team found that the accretion disk alone could not explain the emissions they were studying.

All of this leads to an interesting supposition about the black hole’s origins, as Farrell notes:

“The fact that there’s a very young cluster of stars indicates that the intermediate-mass black hole may have originated as the central black hole in a very low-mass dwarf galaxy. The dwarf galaxy was then swallowed by the more massive galaxy.”

Image: This spectacular edge-on galaxy, called ESO 243-49, is home to an intermediate-mass black hole that may have been purloined from a cannibalised dwarf galaxy. The black hole, with an estimated mass of 20,000 Suns, lies above the galactic plane. This is an unlikely place for such a massive black hole to exist, unless it belonged to a small galaxy that was gravitationally torn apart by ESO 243-49. The circle identifies a unique X-ray source that pinpoints the black hole. Credit: NASA, ESA, and S. Farrell (University of Sydney, Australia and University of Leicester, UK).

The cluster of young stars appears to be about 250 light years across and encircles the black hole. We may be looking, then, at the remains of a galaxy that has been effectively destroyed by its collision with another galaxy, while the black hole at the dwarf’s center interacted with enough gaseous material to form the new stars. Farrell and Mathieu Servillat (Harvard-Smithsonian Center for Astrophysics) peg the cluster’s age at less than 200 million years, meaning that most of these stars would have formed after the dwarf’s collision with the larger galaxy. Says Servillat:

“This black hole is unique in that it’s the only intermediate-mass black hole we’ve found so far. Its rarity suggests that these black holes are only visible for a short time.”

The paper on this work discusses the process in greater detail:

Tidally stripping a dwarf galaxy during a merger event could remove a large fraction of the mass from the dwarf galaxy, with star formation triggered as a result of the tidal interactions. This could result in the observed IMBH [intermediate mass black hole] embedded in the remnant of the nuclear bulge and surrounded by a young, high metallicity, stellar population. It has been proposed that such accreted dwarf galaxies may explain the origin of some globular clusters, with the remnant cluster appearing more like a classical globular cluster as its stellar population ages.

HLX-1’s X-ray signature is still relatively bright (which is how Farrell found it in 2009 using the European Space Agency’s XMM-Newton X-ray space telescope). But as it depletes the supply of gas around it, the black hole’s X-ray signature will weaken. Its ultimate fate may indeed be to spiral into the center of ESO 243-49, to merge with the supermassive black hole there. Consider intermediate-sized black holes like this one the ‘missing link’ between stellar mass black holes and their supermassive counterparts at galactic center. The nuclei of dwarf galaxies (along with globular clusters) have previously been suggested as the likely environments for their formation, ideas which are given powerful support through the study of what’s happening in ESO 243-49.

The paper is Farrell et al., “A Young Massive Stellar Population Around the Intermediate Mass Black Hole ESO 243-49 HLX-1,” accepted for publication in The Astrophysical Journal Letters (preprint).