Centauri Dreams

Imagining and Planning Interstellar Exploration

A ‘Pinched’ Beam for Interstellar Flight

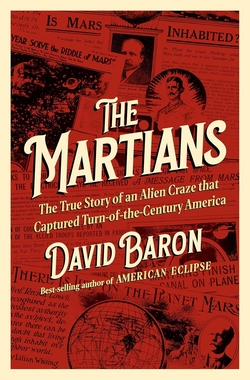

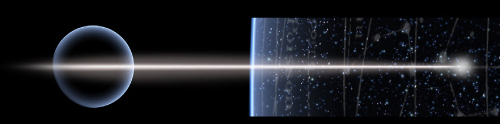

Take a look at the image below. It’s a jet coming off the quasar 3C273. I call your attention to the length of this jet, some 100,000 light years, which is roughly the distance across the Milky Way. Jeff Greason pointed out at the Montreal symposium of the Interstellar Research Group that images like this suggest it may be possible for humans to produce ‘pinched’ relativistic electron jets over the much smaller distances needed to propel a spacecraft out of the Solar System. This is an intriguing image if you’re interested in high-energy beams pushing payloads to nearby stars.

Greason is a self-described ‘serial entrepreneur,’ the holder of some 29 patents and chief technologist of Electric Sky, which is all about beaming energy to craft much closer to home. But he moonlights as chairman of the Tau Zero Foundation and is a well-known figure in interstellar studies. Placing beaming into context is a useful exercise, as it suggests alternative ways to generate and use a beam. In all of these, we want to carry little or no fuel aboard the craft, drawing our propulsion from the home system.

Image: Composite false-color image of the quasar jet 3C273, with emission from radio waves to X-rays extending over more than 100,000 light years. The black hole itself is to the left of the image. Colors indicate the wavelength region where energetic particles give off most of their energy: yellow contours show the radio emission, with denser contours for brighter emission (data from VLA); blue is for X-rays (Chandra); green for optical light (Hubble); and red is for infrared emission (Spitzer). Credit: Y. Uchiyama, M. Urry, H.-J. Röser, R. Perley, S. Jester.

Laser beaming to a starship comes first to mind, going back as it does to the days of Robert Forward and György Marx, who explored options in the infancy of the technology. Later work on laser as well as microwave beaming has included such luminaries as Geoffrey Landis, Gregory Matloff and James Benford, not to mention today’s intense laser effort via Breakthrough Starshot and the ongoing work at UC Santa Barbara under Philip Lubin. A separate track has followed beamed options using elementary particles or, indeed, larger particles; the name Clifford Singer comes first to mind here, though Landis has done key work. A major problem: the beam power required is inversely proportional to the beam’s effective range. If we’re after faster, bigger ships, we need to find a way to extend the range of whatever kind of beam we’re sending.

We’ve lost some of the scientists who have dug deeply into these matters. Dana Andrews died last January, and Jordin Kare left us some six years ago (I will have more to say about Dr. Andrews in a future post). Kare developed ‘sailbeam,’ which was a string of micro-sails sent as fuel fodder to a larger starship. Pushing neutral particles to the long ranges we need faces problems of beam divergence, and charged particle beams are even trickier, because like charges repel one another and spread the beam apart.

Greason outlined another possibility at Montreal, one he described as ‘no more than half of an idea,’ but one he’s hoping to provoke colleagues to explore. This beaming option uses the ‘pinch’ phenomenon, in which charged beams in a low-density plasma can confine themselves over long distances. The mechanism: A beam carrying a current creates a circular magnetic field around its own axis, which in turn confines the beam. ‘Pinching’ is a means of self-confinement of the beam that has been studied since the 1930s. A pinch forming a jet explains why solar proton events can strike the Earth despite the 1 AU distance, and why galaxy-spanning jets like that in the image above can form.

Image: Jeff Greason, chief technologist and co-founder of Electric Sky.

We normally hear about a ‘pinch’ in the context of fusion research, but here we’re more interested in the beam’s persistence than its ability to compress and heat a plasma. The beam persists until it loses energy through collisions, which weakens the current sustaining it until confinement is lost. Although Greason said that ion beams may prove feasible, he noted that we’re getting into territory where we simply lack the data to know what will work. Issues of charge neutralization and return currents from the beam come into play, as do long-range oscillations that can affect the beam. But the z-pinch, in which a current flowing along a specific axis generates the magnetic field that confines it, is well established. If we can create an electron beam using this method, we can resurrect the idea of using charged particle beams to push our starship.

How to use power beamed in this fashion once it arrives at the target craft is a significant question. Greason spoke of the beam striking a plasma-filled waveguide which can ‘couple to backwards plasma wave modes,’ in effect launching plasma in the opposite direction as reaction mass. This ties into existing work on plasma accelerators (so-called “wakefield” accelerators), which use similar physics. How much of the beamed energy can be converted into thrust in this way remains open to investigation.

The consequences of mastering pinched beaming technologies would be immense. If we can increase the range of a beam from 0.1 AU to 1000 AU, we open up the possibility of sending much larger spacecraft, up to 10⁵ times larger, at the same power levels. We go from a gram-sized spacecraft as contemplated by Breakthrough Starshot’s laser methods to one of 10 kilograms. In doing this we have also changed the acceleration time from minutes to months. That increased payload size is particularly useful if it leaves room for a braking system, enabling long-term study of the target.
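The ‘minutes to months’ claim follows from simple kinematics. Below is a rough sketch, assuming uniform acceleration from rest to 20 percent of lightspeed (the figure cited later in this post) and ignoring relativistic corrections. The mass-scaling part assumes an idealized beam of fixed power pushing a perfectly reflecting sail (thrust 2P/c), a stand-in model chosen for illustration rather than Greason’s electron-beam coupling; under it, launchable mass grows linearly with acceleration distance.

```python
AU_M = 1.495978707e11   # astronomical unit, meters
C_M_S = 2.99792458e8    # speed of light, m/s


def accel_time_s(distance_m: float, final_speed_m_s: float) -> float:
    """Time to reach final_speed from rest under uniform acceleration over distance_m.

    From v^2 = 2ad and v = at it follows that t = 2d / v (non-relativistic).
    """
    return 2.0 * distance_m / final_speed_m_s


def sail_mass_kg(power_w: float, distance_m: float, final_speed_m_s: float) -> float:
    """Launchable mass for an idealized, perfectly reflecting sail (thrust 2P/c).

    a = 2P / (m c) and v^2 = 2ad give m = 4 P d / (c v^2): linear in d.
    """
    return 4.0 * power_w * distance_m / (C_M_S * final_speed_m_s**2)


v = 0.2 * C_M_S                 # assumed cruise speed: 20 percent of lightspeed
short_range = 0.1 * AU_M
long_range = 1000.0 * AU_M

print(f"0.1 AU:  {accel_time_s(short_range, v) / 60:.1f} minutes")
print(f"1000 AU: {accel_time_s(long_range, v) / 86400:.1f} days")

# Same beam power, same final speed: the mass ratio is simply the range ratio.
power = 1e9  # hypothetical 1 GW beam; it cancels out of the ratio
mass_ratio = sail_mass_kg(power, long_range, v) / sail_mass_kg(power, short_range, v)
print(f"mass scaling factor: {mass_ratio:.0f}")
```

Under these assumptions the acceleration phase stretches from roughly 8 minutes to roughly 58 days, and the mass factor is 10⁴, the step from a gram to 10 kilograms.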

This method demands a space-based platform – these ideas do not apply to a ground installation beaming through the atmosphere. Beaming from a location near the Sun offers obvious access to power and could be made possible through a near-Solar statite, i.e., an installation that ‘hovers’ over the Sun at Parker Solar Probe distances. Greason adds that for maximum beam stability, the statite would have to transmit from a location between the Sun and the target star, i.e., the flow should be with the current of the solar wind as opposed to across the stream.

Image: Can we operate a statite at 0.05 AU from the Sun? This NASA visualization of the Parker Solar Probe highlights the kind of conditions the craft would be operating in.

The operative statite technology is thermionics, where electrons ‘boil’ out of a hot cathode and collect on a cold anode. Greason’s statite winds up with approximately 50 kilowatts per square meter of useful power; factoring in the thickness of the foils used in the installation, he calculates 150 kilowatts per kilogram. A 1 gigawatt electron beam results. So operating at about 11 solar radii, we can produce the beam we need, though we must also tackle the problem of keeping a statite in position. One possibility is a plasma magnet sail to make use of the supersonic solar wind, a notion Greason has been exploring for years. See Alex Tolley’s The Plasma Magnet Drive: A Simple, Cheap Drive for the Solar System and Beyond for more.
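The statite figures can be cross-checked with a few lines of arithmetic. This sketch takes the quoted 50 kW per square meter and 150 kW per kilogram at face value and asks what a 1 GW beam implies. It assumes all of the ‘useful power’ ends up in the beam, which flatters real performance since conversion losses, radiators and support structure are ignored.

```python
AU_M = 1.495978707e11   # astronomical unit, meters
R_SUN_M = 6.957e8       # nominal solar radius, meters

# Figures quoted from the talk (taken at face value here)
AREAL_POWER_W_M2 = 50e3       # ~50 kW of useful power per square meter
SPECIFIC_POWER_W_KG = 150e3   # ~150 kW per kilogram, foil mass included
BEAM_POWER_W = 1e9            # 1 GW electron beam


def au_to_solar_radii(distance_au: float) -> float:
    return distance_au * AU_M / R_SUN_M


area_m2 = BEAM_POWER_W / AREAL_POWER_W_M2        # collector area needed
mass_kg = BEAM_POWER_W / SPECIFIC_POWER_W_KG      # converter mass needed
areal_density_g_m2 = 1000 * AREAL_POWER_W_M2 / SPECIFIC_POWER_W_KG

print(f"0.05 AU is about {au_to_solar_radii(0.05):.1f} solar radii")
print(f"area: {area_m2:,.0f} m^2 (a square ~{area_m2 ** 0.5:.0f} m on a side)")
print(f"mass: {mass_kg:,.0f} kg, implying ~{areal_density_g_m2:.0f} g/m^2 of structure")
```

On these numbers a 1 GW statite needs about 20,000 square meters of converter massing roughly 6.7 tonnes, and 0.05 AU does indeed work out to about 11 solar radii.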

Greason’s tightly reasoned, no-nonsense approach makes him a hugely appealing speaker. He’s offering a concept that opens out into all kinds of research questions, and spurring interested parties to advance the construct. A symposium of like-minded scientists and engineers such as the one in Montreal is just the kind of venue to gin up that support. The prospect of reaching 20 percent of lightspeed with a multi-kilogram spacecraft is driver enough. A craft like that could begin exploring nearby stars from orbit around them, rather than blowing through the destination system within a matter of minutes. What smaller beam installations near Earth could do for interplanetary exploration is left to the imagination of the reader.

The Order of Interstellar Arrival

Writers have modeled the arrival of an extraterrestrial probe in our Solar System in a number of interesting science fiction texts, from Clarke’s Rendezvous with Rama (1973) to the enigmatic visitors of Ted Chiang’s “Story of Your Life,” which Hollywood translated into the film Arrival (2016). In between I might add the classic ‘saucer landing on the White House lawn’ trope of The Day the Earth Stood Still (1951), based on a Harry Bates short story. All these and many other stories raise the question: What if before we make a radio or optical SETI detection, an extraterrestrial scout actually shows up?

Graeme Smith (UC Santa Cruz) goes to work on the idea in a recent paper in the International Journal of Astrobiology, where he focuses on the mechanism of interstellar dispersion. The model has obvious ramifications for ourselves. We are beings who have begun probing nearby space with vehicles like Pioneer and Voyager, and in our early stages of exploration we could conceivably be reached by an extraterrestrial civilization (ETC) before we can make such journeys ourselves. Smith is asking what form such contact would take. His paper cautiously tries to quantify how interstellar exploration likely proceeds based on velocity and distance in a steadily advancing technological culture.

This takes us back to the so-called ‘Wait Equation’ explored by Andrew Kennedy in 2006, where he dug into what he called ‘the incentive trap of progress.’ Kennedy made the natural assumption that as an interstellar program of exploration proceeded, it would continue to produce faster travel speeds, so that one probe might be overtaken by another (thus A. E. van Vogt’s ‘Far Centaurus’ scenario, where a starship crew comes out of hibernation at Alpha Centauri to find a thriving civilization of humans, all of whom came by much speedier means while the original exploration team slumbered en route).
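Kennedy’s trade-off can be made concrete with a toy model. The sketch below is a simplified wait calculation, not Kennedy’s own formulation: it assumes, hypothetically, that achievable probe speed grows exponentially at a fixed annual rate r, so that time to arrival is T(t) = t + D / (v₀e^{rt}). Setting dT/dt = 0 gives an optimal wait of t* = ln(Dr/v₀)/r, and at that point the trip itself takes exactly 1/r years. The starting speed and growth rate below are illustrative assumptions.

```python
import math


def years_to_arrival(wait_yr: float, distance_ly: float, v0_c: float, growth_per_yr: float) -> float:
    """Years from now until arrival if launch is delayed by wait_yr.

    Hypothetical model: achievable speed grows as v0 * exp(r * t), capped below c.
    Distances in light years and speeds in units of c give times in years.
    """
    speed_c = min(v0_c * math.exp(growth_per_yr * wait_yr), 0.999)
    return wait_yr + distance_ly / speed_c


def optimal_wait_yr(distance_ly: float, v0_c: float, growth_per_yr: float) -> float:
    """Minimizer of t + D / (v0 e^(r t)), ignoring the speed cap: t* = ln(D r / v0) / r."""
    return max(0.0, math.log(distance_ly * growth_per_yr / v0_c) / growth_per_yr)


D, V0, R = 4.37, 5.7e-5, 0.02   # Alpha Centauri, Voyager-like start, assumed 2%/yr growth
t_star = optimal_wait_yr(D, V0, R)
print(f"launch now:        arrive in {years_to_arrival(0, D, V0, R):,.0f} years")
print(f"wait {t_star:.0f} years: arrive in {years_to_arrival(t_star, D, V0, R):,.0f} years")
```

With these made-up parameters the best launch date lands a few centuries out, and any probe sent earlier is eventually overtaken, the ‘Far Centaurus’ outcome in numerical form.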

The question, then, becomes whether we should postpone an interstellar launch until a certain amount of further progress can be made. And exactly how long should we wait? But Smith looks at this from a different angle: What kind of probes would be first to arrive in a planetary system where a civilization like ours can receive them? Would they be the ‘lurkers’ Jim Benford has written about, left by beings who expected them to report home on what evolved in our Solar System? Or might they be more overt, making themselves known in some way, and advanced well beyond our understanding?

Image: Is this really what we might expect if an ETC arrived on Earth? From the 1951 version of The Day the Earth Stood Still. Frank Lloyd Wright is said to have been involved in the design of the craft for this movie, though some believe this to be no more than a Hollywood legend. It’s an interesting one if so. And about that spacecraft: Is it too low-tech to be realistic? Read on.

In Smith’s parlance, a civilization like ours is ‘passive,’ a specific usage meaning that it is able to probe its own system with spacecraft but does not yet have interstellar capabilities. He imagines two ETCs, one in this passive state and one capable of interstellar flight. Smith’s calculations then consider probes launched by an extraterrestrial civilization that are followed by increasingly advanced probes over time. You can see from this that the farther away the sending civilization is, the more likely that what will arrive at the passive ETC will be one of its more advanced probes, the earlier ones being still in transit.

If an active ETC is evolving rapidly in technology, or is exceedingly distant, then a vehicle of relatively advanced state may be more likely to first reach a passive collecting civilization. In this case, there could be a considerable mismatch in the technology level of the first-arrival probe and that of the passive ETC that it encounters. This would presumably have ramifications for what might eventuate if an artefact from an ETC were to arrive within the Solar System and enable first-contact with terrestrials. Hypothetical reverse engineering, for example, might be difficult given the technology gap.

Assuming the probe speed scales linearly with launch date, Smith takes the Voyager probes as a starting point and spins out increasingly fast generations of probes, noting how many such generations will be required to reach first the closest stars and then stars farther out, and calculating the time that separates the first-encounter spacecraft from the initial, zero-generation probes. The situation accelerates if we assume probe speeds that scale exponentially with launch date. I send you to the paper for his equations, but the upshot is that this scenario heightens the likelihood that a first-encounter probe will display a major disparity in technology from what it finds at the receiving end.
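The first-arrival effect is easy to reproduce qualitatively. In this sketch every parameter is a hypothetical choice rather than Smith’s: a new probe generation launches every 10 years, generation n cruises at (n + 1) times a Voyager-like 3.6 AU per year (speed linear in launch date), and speeds are capped just below lightspeed. The function scans the generations and reports which one reaches the target first.

```python
VOYAGER_AU_PER_YR = 3.6     # roughly Voyager 1's speed (~17 km/s)
LY_IN_AU = 63241.1          # one light year in AU
C_AU_PER_YR = LY_IN_AU      # lightspeed in AU per year is numerically the same


def first_arrival(distance_ly, gap_yr, speed_au_per_yr, max_gen=5000):
    """Return (generation, arrival_year) of the first probe to reach the target.

    Generation n launches in year n * gap_yr and cruises at speed_au_per_yr(n),
    capped just below lightspeed.
    """
    d_au = distance_ly * LY_IN_AU
    best_gen, best_arrival = 0, float("inf")
    for n in range(max_gen):
        v = min(speed_au_per_yr(n), 0.99 * C_AU_PER_YR)
        arrival = n * gap_yr + d_au / v
        if arrival < best_arrival:
            best_gen, best_arrival = n, arrival
    return best_gen, best_arrival


def linear(n):
    # Hypothetical: each generation adds one Voyager speed (speed linear in launch date).
    return VOYAGER_AU_PER_YR * (n + 1)


for d in (4.37, 50.0):
    gen, year = first_arrival(d, gap_yr=10, speed_au_per_yr=linear)
    print(f"{d:5.2f} ly: generation {gen} arrives first, after {year:,.0f} years")
```

Moving the target from 4.37 to 50 light years pushes the first arrival from a generation under 100 to one near 300: the farther the target, the more advanced the first probe to get there. Swapping `linear` for an exponential rule such as `lambda n: 3.6 * 1.05 ** n` (again hypothetical) shows the faster-growth case.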

And depending on the distance of the sending civilization, the disparity between the ETC technology and our own could be such that we would have difficulty understanding, even comprehending, what we were looking at. Smith again (italics mine):

The key implication of this paper can be summarized as followed: if an actively space-faring ETC embarks on a program to send probes to interstellar destinations, and if the technology of this ETC advances with time, then the first probe to arrive at the destination of a less-advanced ETC is less likely to be one of the earliest probes launched, but one of more advanced capability. There may thus be a substantial disparity between the level of technology comprising the first-arrival probe and that developed by the receiving ETC, if it has no interstellar capability itself. The greater the initial separation of the two ETCs, or the greater the rate of probe development by the active ETC, the greater is the potential for a technological mismatch at first encounter.

Image: A language that can alter our perception of time, under study in the film Arrival, where the probes in question represent a technology that is baffling to Earth scientists.

The situation would change, of course, if the receiving civilization also possessed interstellar capabilities, in which case contact might not even occur on the home world or in the home system of the receiving culture. As we are a passive civilization in Smith’s terms, we are likely to encounter a markedly more advanced civilization if an artifact ever does show up in our system. David Kipping calls this kind of result ‘contact inequality,’ and as Smith puts it, “…increasing the distance Dmax of the first-contact horizon increases the likely generation number of a probe of first encounter, thereby enhancing a contact inequality with a passive ETC.”

Something entering our Solar System from another civilization should be highly sophisticated, well beyond our technological levels, and perhaps utterly opaque to our scrutiny. The scenario of, for example, the Strugatsky brothers’ Roadside Picnic seems more likely than that of The Day the Earth Stood Still. The 1972 novel depicts the ‘stalker’ Red Schuhart as he enters a ‘zone of visitation’ where an alien civilization has come to Earth and left behind bizarre and inexplicable traces. Now they’ve moved on. What is human culture to make of their detritus? From the novel:

He had never experienced anything like this before outside the Zone. And it had happened in the Zone only two or three times. It was as though he were in a different world. A million odors cascaded in on him at once—sharp, sweet, metallic, gentle, dangerous ones, as crude as cobblestones, as delicate and complex as watch mechanisms, as huge as a house and as tiny as a dust particle. The air became hard, it developed edges, surfaces, and corners, like space was filled with huge, stiff balloons, slippery pyramids, gigantic prickly crystals, and he had to push his way through it all, making his way in a dream through a junk store stuffed with ancient ugly furniture … It lasted a second. He opened his eyes, and everything was gone. It hadn’t been a different world—it was this world turning a new, unknown side to him. This side was revealed to him for a second and then disappeared, before he had time to figure it out.

Image: From the 1979 film Stalker, based loosely on the Strugatsky novel. No spacecraft, no aliens here, just the mystery of what they left and what it means.

It should hardly surprise us that an arriving interstellar probe would be well beyond our technology; otherwise, it couldn’t have gotten here. But if we factor in what Smith is saying, it appears that depending on how far away the sending ETC is, the technology gap between us and them becomes greater and greater. We’re talking about baffling and perplexing morphing into the all but unknowable. Indistinguishable from magic?

No grand arrivals, no opportunities for trade, no galactic encyclopedias. This is first contact as enigma, and if I had to put money on it, I suspect this is closer to what would happen if contact is achieved by a visitation to our planet. I return to Rendezvous with Rama, where odd geometric structures and a ‘cylindrical sea’ are found within the probe slingshotting around the Sun, and the vehicle departs as mysteriously as it came, leaving behind only one overwhelming fact: We are not alone.

The paper is Smith, “On the first probe to transit between two interstellar civilizations,” International Journal of Astrobiology 22 (2023), 185-196 (abstract).

To Build an Interstellar Radio Bridge

I sometimes imagine Claudio Maccone having a particularly vivid dream, a bright star surrounded by a ring of fire that all but grazes its surface. And from this ring an image begins to form behind him, kilometers wide, dwarfing him and carrying in its pixels the view of a world no one has ever seen. The dream is half visual, half diagrammatic, but it’s all about curving Einsteinian spacetime, so that light flows along the gravity well to be bent into a focus that extends into linear infinity.

My slightly poetic vision of what happens beyond 550 AU or so doesn’t do justice to the intrinsic beauty of the mathematics, which Maccone learned to unlock decades ago as he explored the concept of an ‘Einstein ring’ as fine-tuned by Von Eshleman at Stanford. When I met him (at one of Ed Belbruno’s astrodynamics conferences at Princeton in 2006), he and I, along with Greg Matloff and his wife C, talked about lensing at breakfast one morning. Even then he was afire with the concept. He’d been probing it since the late 1980s, and had submitted a mission proposal to the European Space Agency. He had written a short text that would later be expanded into the seminal Deep Space Flight and Communications (Springer, 2009).

Maccone said in his presentation at the Interstellar Research Group’s Montreal symposium that he was delighted to see the Sun’s gravitational focus moving into the hands of the next generation, citing the 2020 NASA grant to Slava Turyshev’s team at JPL, where a Solar Gravitational Lens mission is being worked out at the highest level of detail as an entrant into the sweepstakes known as the Heliophysics 2024 Decadal Survey. To see how far the concept has gone, have a look at, for example, Self-Assembly: Reshaping Mission Design, or A Mission Architecture for the Solar Gravity Lens, among numerous entries I’ve written on the JPL work.

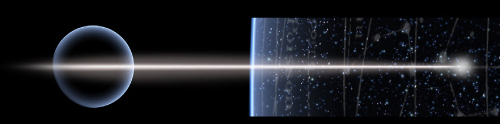

Image: A meter-class telescope with a coronagraph to block solar light, placed in the strong interference region of the solar gravitational lens (SGL), is capable of imaging an exoplanet at a distance of up to 30 parsecs with a few 10 km-scale resolution on its surface. The picture shows results of a simulation of the effects of the SGL on an Earth-like exoplanet image. Left: original RGB color image with (1024×1024) pixels; center: image blurred by the SGL, sampled at an SNR of ~10³ per color channel, or overall SNR of 3×10³; right: the result of image deconvolution. Credit: Turyshev et al., “Direct Multipixel Imaging and Spectroscopy of an Exoplanet with a Solar Gravity Lens Mission,” Final Report NASA Innovative Advanced Concepts Phase II.

The astounding magnification we could achieve by using bent starlight was what drew me instantly to the concept when I first learned about it – how else to actually see not just pixels from an exoplanet around its star, but actual continents, weather patterns, oceans and, who knows, even vegetation on the surface? But at Montreal, after his praise for the JPL effort that could become our first attempt to exploit the gravitational lens if adopted by the Decadal survey, Maccone took a much more futuristic look at what humans might do with lensing, delving into the realm of communications. What about building a radio ‘bridge’?

The concept is even more audacious than reaching 650 AU with the payloads we’ll need to deconvolve imagery from another star. In fact, it’s downright science fictional. Suppose we achieve the technologies needed to send humans to Alpha Centauri. We have there in the form of Centauri A a G-class star much like the Sun (although we could also use the K-class star Centauri B). Each of these stars has its own distance beyond which gravitational lensing occurs. Align your spacecraft properly to look back towards the Earth from Centauri A and you can now connect to the ‘relay’ at the lensing distance from the Sun. You’ve drastically changed the communications picture by using lensing in both directions.
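The lensing distance for any star follows from the deflection of light grazing its limb, θ = 4GM/(c²R), which comes to a focus no closer than R/θ = R²c²/(4GM). This sketch computes that minimum distance. The Centauri A and B masses and radii are approximate values assumed for illustration, and plasma effects of the stellar coronas, which matter at radio wavelengths, are ignored.

```python
G = 6.67430e-11        # gravitational constant, m^3 kg^-1 s^-2
C = 2.99792458e8       # speed of light, m/s
M_SUN = 1.98847e30     # solar mass, kg
R_SUN = 6.957e8        # solar radius, m
AU = 1.495978707e11    # astronomical unit, m


def min_focal_distance_au(mass_solar: float, radius_solar: float) -> float:
    """Closest focus for light grazing the limb: R / theta with theta = 4GM / (c^2 R)."""
    m = mass_solar * M_SUN
    r = radius_solar * R_SUN
    return r**2 * C**2 / (4 * G * m) / AU


# Approximate stellar parameters (assumed, for illustration only)
for name, mass, radius in [("Sun", 1.0, 1.0),
                           ("Centauri A", 1.1, 1.22),
                           ("Centauri B", 0.91, 0.86)]:
    print(f"{name:10s} focal line begins at ~{min_focal_distance_au(mass, radius):.0f} AU")
```

For the Sun this gives about 548 AU, the ‘550 AU or so’ mentioned earlier. Because the focal distance scales as R²/M, the larger Centauri A lands farther out and the smaller Centauri B closer in, and in each case the focal line then continues outward indefinitely.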

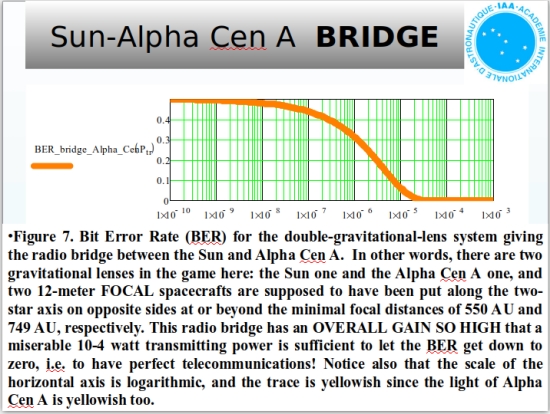

The consequences for contact and data transfer are enormous. Consider: If we want to talk to our crew now orbiting Centauri A and try to do so with one of the Deep Space Network’s 70-meter dish antennas using today’s standards for spacecraft communications, we’d have no usable signal to work with. Assume a transmitting power of 40 W and communications over the Ka band (32 GHz) at a rate of 32 kbps (these are the figures for the highest frequency used by the Cassini mission). The distances are too great; the power too weak. But if we factor in a receiver at the lensing point of Centauri A directly opposite to the Sun, we get the extraordinary gain shown in the diagram below.

This raises eyebrows. Bit Error Rate expresses the quality of the signal, being the number of erroneous bits received divided by the total number of bits transmitted. Using a spacecraft at the solar gravitational lens distance from the Sun talking to one on the other side of Centauri A (alignment, of course, is critical here), we have a signal so strong that we have to go over 9 light years out before it begins to degrade. A radio bridge like this would allow communications with a colony at Alpha Centauri using power levels and infrastructure we have in place today.
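Where does such an extraordinary gain come from? A commonly used expression for the on-axis gain of a gravitational lens is 4π²r_s/λ, where r_s = 2GM/c² is the lensing star’s Schwarzschild radius. The sketch below evaluates it at the 32 GHz Ka-band frequency mentioned above. Taking Centauri A as about 1.1 solar masses and treating the bridge gain as the simple product of the two lens gains are simplifying assumptions that ignore pointing, coronal and antenna effects.

```python
import math

G = 6.67430e-11        # gravitational constant, m^3 kg^-1 s^-2
C = 2.99792458e8       # speed of light, m/s
M_SUN = 1.98847e30     # solar mass, kg


def lens_gain(mass_solar: float, freq_hz: float) -> float:
    """On-axis gain of a gravitational lens, 4 pi^2 r_s / lambda, with r_s = 2GM/c^2."""
    r_s = 2 * G * mass_solar * M_SUN / C**2
    wavelength_m = C / freq_hz
    return 4 * math.pi**2 * r_s / wavelength_m


def to_db(ratio: float) -> float:
    return 10 * math.log10(ratio)


KA_BAND_HZ = 32e9
g_sun = lens_gain(1.0, KA_BAND_HZ)
g_cen_a = lens_gain(1.1, KA_BAND_HZ)   # Centauri A assumed ~1.1 solar masses
print(f"Sun lens:    {to_db(g_sun):.1f} dB")
print(f"Centauri A:  {to_db(g_cen_a):.1f} dB")
print(f"both lenses: {to_db(g_sun * g_cen_a):.1f} dB (assuming the gains simply multiply)")
```

The Sun alone contributes roughly 71 dB at 32 GHz, and the two lenses together on the order of 140 dB, which suggests why a link that fails outright with direct transmission becomes workable.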

Obviously, this is a multi-generational idea given travel times to and from Alpha Centauri. But it’s a step we may well need to take if we can solve all the problems involved in getting human crews to another star. Maccone told the audience at Montreal that in terms of channel capacity (as defined by Shannon information theory), the Sun used as a gravitational lens allows 190 gigabits per second in a radio bridge to Centauri A as opposed to the paltry 15.3 kilobits per second available without lensing.
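Shannon’s channel capacity is C = B log₂(1 + S/N), where B is the bandwidth and S/N the signal-to-noise ratio. The sketch below only illustrates the formula; the bandwidth, direct-link SNR and lensing boost in it are hypothetical values, not the parameters behind Maccone’s 190 Gbps and 15.3 kbps figures.

```python
import math


def shannon_capacity_bps(bandwidth_hz: float, snr_linear: float) -> float:
    """Shannon-Hartley channel capacity C = B * log2(1 + S/N), in bits per second."""
    return bandwidth_hz * math.log2(1.0 + snr_linear)


# Hypothetical link: 1 MHz of bandwidth and a very weak direct signal.
B = 1e6
snr_direct = 1e-3
snr_lensed = snr_direct * 1e14      # illustrative lensing boost of 10^14 (~140 dB)
print(f"direct: {shannon_capacity_bps(B, snr_direct):,.0f} bit/s")
print(f"lensed: {shannon_capacity_bps(B, snr_lensed):,.0f} bit/s")
```

In the weak-signal regime capacity grows almost linearly with SNR (log₂(1 + x) ≈ x / ln 2 for small x), while at high SNR it grows only logarithmically, so an enormous power gain does not translate one-for-one into data rate.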

Realizing that any star creates this possibility, Maccone has lately been working on the question of how a starfaring society of the future might use radio bridges to plot out expansion into nearby stars. He is in fact thinking about the best ‘trail of expansion’ humans might use to keep links being built and used between colonies at these stars. This turns out to be no easy task: The first goal must be to convert the list of nearby stars being studied (the number is arbitrary) into Cartesian coordinates centered on each star (their coordinates are currently given in terms of Right Ascension and Declination with respect to the Sun). Maccone calls this an exercise in spherical trigonometry, and it’s a thorny one.
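Maccone frames the recentering step as spherical trigonometry. An alternative that sidesteps most of the trigonometry, sketched below, is to convert each star’s right ascension, declination and distance into Sun-centered Cartesian coordinates once, then subtract vectors to recenter on any star. This is not presented as Maccone’s method; the positions are approximate J2000 values included for illustration, and proper motion is ignored.

```python
import math


def radec_to_xyz(ra_deg, dec_deg, dist_ly):
    """Sun-centered equatorial Cartesian coordinates (light years)."""
    ra, dec = math.radians(ra_deg), math.radians(dec_deg)
    return (dist_ly * math.cos(dec) * math.cos(ra),
            dist_ly * math.cos(dec) * math.sin(ra),
            dist_ly * math.sin(dec))


def recenter(catalog, origin):
    """Translate every position so that the star named `origin` sits at (0, 0, 0)."""
    ox, oy, oz = catalog[origin]
    return {name: (x - ox, y - oy, z - oz) for name, (x, y, z) in catalog.items()}


def separation_ly(a, b):
    return math.dist(a, b)   # Euclidean distance (Python 3.8+)


# Approximate J2000 positions: RA (deg), Dec (deg), distance (ly)
stars = {
    "Sun": (0.0, 0.0, 0.0),
    "Alpha Centauri": radec_to_xyz(219.90, -60.83, 4.37),
    "Barnard's Star": radec_to_xyz(269.45, 4.69, 5.96),
}

from_acen = recenter(stars, "Alpha Centauri")
for name, pos in from_acen.items():
    print(f"{name:15s} {separation_ly(pos, (0.0, 0.0, 0.0)):5.2f} ly from Alpha Centauri")
```

Once every star is a vector, distances between any pair are a single subtraction, which makes it straightforward to score candidate ‘trails of expansion’ across a catalog of arbitrary size.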

A network of radio bridges between stars could evolve into a kind of ‘galactic internet,’ a term Maccone uses with an ironic smile as it plays to the journalist’s need to write dramatic copy. Be that as it may, the SETI component is intriguing, given that older civilizations may even now be exploiting gravitational lensing. It would be an interesting thing indeed if we were to discover a bridge relay somewhere at our Sun’s gravitational lensing distance, for its placement would allow us to calculate where the receiving civilization must be located. Using a gravitational lens for communications is, after all, extraordinarily directional. Might we one day discover at the lensing distance from the Sun an artifact that can open access to a networked conversation on the interstellar scale?
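The directionality argument reduces to simple geometry: to use the Sun as a lens toward a given star, a receiver must sit on the line running from that star through the Sun, on the far side, so seen from the Sun the relay lies exactly opposite its partner on the sky. This short sketch captures that relationship; the 550 AU default is an assumed round figure for a point on the focal line, and the function names are illustrative.

```python
import math


def antipode(ra_deg, dec_deg):
    """Sky direction exactly opposite (ra_deg, dec_deg), in degrees."""
    return (ra_deg + 180.0) % 360.0, -dec_deg


def relay_position_au(star_ra_deg, star_dec_deg, distance_au=550.0):
    """Sun-centered position of a relay on the Sun's focal line for a given star.

    The relay sits opposite the star, at an assumed distance along the focal line.
    """
    ra, dec = (math.radians(a) for a in antipode(star_ra_deg, star_dec_deg))
    return (distance_au * math.cos(dec) * math.cos(ra),
            distance_au * math.cos(dec) * math.sin(ra),
            distance_au * math.sin(dec))


# Read in reverse: a hypothetical relay found at RA 39.9 deg, Dec +60.83 deg would
# point back toward RA 219.9 deg, Dec -60.83 deg, roughly toward Alpha Centauri.
print(antipode(39.9, 60.83))
```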

Human expansion to nearby stars would likely be a matter of millennia, but given the age of the galaxy, it would represent just a sliver of time. Whether humanity can survive for far shorter timeframes is an immediate question, but I think it’s refreshing indeed to look beyond the current work on reaching the solar gravitational lens to the implications that would follow from exploiting it. The radio bridge is great science fiction material – we might even call it the stuff of dreams – but solidly rooted in physics if we can find the tools to make it happen.

Atmospheric Types and the Results from K2-18b

The exoplanet K2-18b has been all over the news lately, with provocative headlines suggesting a life detection because of the possible presence of dimethyl sulfide (DMS), a molecule produced by life on our own planet. Is this a ‘Hycean’ world, covered with oceans under a hydrogen-rich atmosphere? Almost nine times as massive as Earth, K2-18b is certainly noteworthy, but just how likely are these speculations? Centauri Dreams regular Dave Moore has some thoughts on the matter, and as he has done before in deeply researched articles here, he now zeroes in on the evidence and the limitations of the analysis. This is one exoplanet that turns out to be provocative in a number of ways, some of which will move the search for life forward.

by Dave Moore

124 light years away in the constellation of Leo lies an undistinguished M3V red dwarf, K2-18. Two planets are known to orbit this star: K2-18c, a 5.6 Earth-mass planet orbiting 6 million miles out, and K2-18b, an 8.6 Earth-mass planet orbiting 16 million miles out. The latter planet transits its primary, so from its mass and size (2.6 x Earth’s radius) we have its density (2.7 g/cm³), which classes the planet as a sub-Neptune. The planet’s relatively large radius and its primary’s low luminosity make it a good target for obtaining atmospheric spectra, but what also makes this planet of special interest to astronomers is that its estimated irradiance of 1368 W/m² is almost the same as Earth’s (1380 W/m²).
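The density figure follows directly from mass and radius: in Earth units, ρ = ρ⊕ × M / R³. This sketch assumes Earth’s mean density of 5.51 g/cm³ and uses the mass and radius quoted above.

```python
EARTH_DENSITY_G_CM3 = 5.51   # Earth's mean density


def bulk_density_g_cm3(mass_earths: float, radius_earths: float) -> float:
    """Mean density from mass and radius in Earth units: rho = rho_earth * M / R^3."""
    return EARTH_DENSITY_G_CM3 * mass_earths / radius_earths**3


print(f"K2-18b: {bulk_density_g_cm3(8.6, 2.6):.1f} g/cm^3")   # prints 2.7
```

Because radius enters as a cube, a planet 2.6 times Earth’s size needs nearly 18 Earth masses just to match Earth’s density; at 8.6 Earth masses K2-18b ends up at about half of it, well below a rocky composition.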

Determining an exosolar planet’s atmospheric constituents, even with the help of the James Webb telescope, is no easy matter. For a detectable infrared spectrum, molecules like H2O, CH4, CO2 and CO generally need to have a concentration above 100 ppm. The presence of O3 can function as a stand-in for O2, but molecules such as H2 and N2, which have no permanent dipole moment, are much harder to detect.

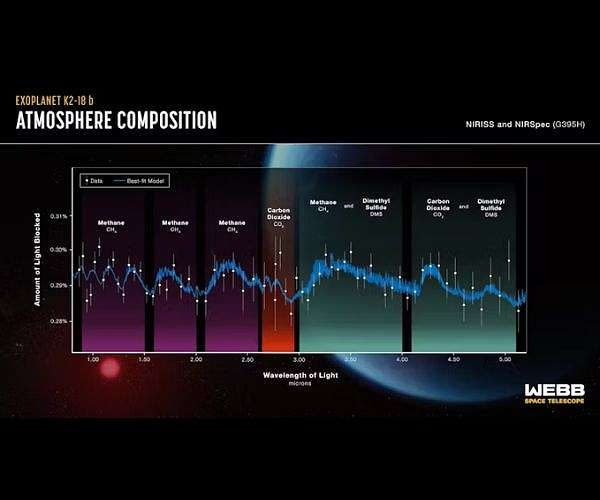

The Hubble telescope got a spectrum of K2-18b in 2019. Water vapor and H2 were detected, and it was assumed to have a deep H2/He/steam atmosphere above a high pressure ice layer over an iron/rocky core, much like Neptune. On September 11 of this year, the results of spectral studies by the James Webb telescope were announced: CH4 and CO2 were found as well as possible traces of DMS (dimethyl sulfide). No signal of NH3 was found, nor was there any sign of water vapor: the feature previously attributed to water vapor turned out to be a methane feature at the same wavelength.

Figure 1: Spectra of K2-18b obtained by the James Webb telescope

This announcement resulted in considerable excitement and speculation in the popular press. K2-18b was called a Hycean planet. It was speculated that it had an ocean, and the possible presence of DMS was taken as an indication of life because oceanic algae produce this chemical. But that was not what intrigued me. What caught my attention was the seemingly anomalous combination of CH4 and CO2 in the planet’s atmosphere. How could a planet have CH4, a highly reduced form of carbon, in equilibrium with CO2, the oxidized form of carbon? A search turned up a paper from February 2021: “Coexistence of CH4, CO2, and H2O in exoplanet atmospheres,” by Woitke, Herbort, Helling, Stüeken, Dominik, Barth and Samra.

The authors’ purpose for this paper was to help with the detection of biosignatures. To quote:

The identification of spectral signatures of biological activity needs to proceed via two steps: first, identify combinations of molecules which cannot co-exist in chemical equilibrium (“non-equilibrium markers”). Second, find biological processes that cause such disequilibria, which cannot be explained by other physical non-equilibrium processes like photo-dissociation. […] The aim of this letter is to propose a robust criterion for step one…

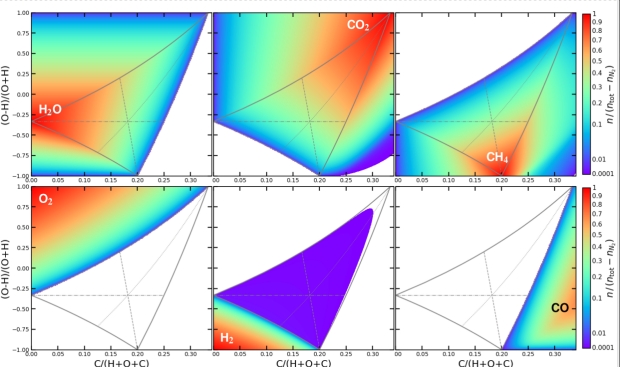

The paper presents an exhaustive study for the lowest energy state (Gibbs free energy) composition of exoplanet atmospheres for all possible abundances of Hydrogen, Carbon, Oxygen, and Nitrogen in chemical equilibrium. To do that, they ran thermodynamic simulations of varying mixtures of the above atoms and looked at the resulting molecular ratios. At low temperatures (T ≤ 600 K), they found that the only molecular species present in any abundance are H2, H2O, CH4, NH3, N2, CO2 and O2. At higher temperatures, the equilibrium shifts towards more H2, and CO begins to appear.

Some examples of their results:

If O > 0.5 x H + 2 x C ––> O2-rich atmosphere, no CH4

If H > 2 x O + 4 x C ––> H2-rich atmosphere, no CO2

If C > 0.25 x H + 0.5 x O ––> Graphite condensation, no H2O

They also used the equations to tell what partial pressures of the elemental mixture will produce equal pressures of the various molecules:

If H = 2 x O then the CO2 level will equal CH4

If 12 C = 2 x O + 3 x H then the CO2 level will equal H2O

If 12 C = 6 x O + H then the H2O level will equal CH4
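All of these boundaries and equal-concentration lines can be recovered from simple atom bookkeeping. The sketch below is an idealized, complete-reaction version and not the paper’s Gibbs free energy minimization: it ignores nitrogen and temperature, assumes H, C and O are fully locked into H2O, CH4 and CO2 inside the triangle, and maps the regions only loosely onto the paper’s Types A, B and C. Solving the three balance equations shows, for example, that CO2 equals CH4 exactly when H = 2 x O.

```python
def classify(h: float, c: float, o: float) -> str:
    """Idealized H/C/O regime from the boundary inequalities (nitrogen ignored)."""
    if o > 0.5 * h + 2 * c:
        return "O2-rich (Type B-like): no CH4"
    if h > 2 * o + 4 * c:
        return "H2-rich (Type A-like): no CO2"
    if c > 0.25 * h + 0.5 * o:
        return "graphite condensation: no H2O"
    return "Type C: H2O, CH4 and CO2 coexist"


def type_c_composition(h: float, c: float, o: float) -> dict:
    """Split the atoms among H2O, CH4 and CO2 by solving the balance equations.

    H = 2*H2O + 4*CH4,  C = CH4 + CO2,  O = H2O + 2*CO2.
    Only meaningful inside the Type C triangle, where all three results are >= 0.
    """
    co2 = (2 * o + 4 * c - h) / 8
    ch4 = (4 * c - 2 * o + h) / 8
    h2o = (2 * o - 4 * c + h) / 4
    return {"H2O": h2o, "CH4": ch4, "CO2": co2}


# H = 2 x O, so CO2 and CH4 should come out equal.
print(classify(2.0, 0.5, 1.0))             # Type C: H2O, CH4 and CO2 coexist
print(type_c_composition(2.0, 0.5, 1.0))   # {'H2O': 0.5, 'CH4': 0.25, 'CO2': 0.25}
```

Each composition formula turns negative exactly at one of the boundaries listed above (CO2 at the H2-rich line, CH4 at the O2-rich line, H2O at the graphite line), which is why the three-species triangle is bounded the way it is.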

To summarize, I quote from their abstract:

We propose a classification of exoplanet atmospheres based on their H, C, O, and N element abundances below about 600 K. Chemical equilibrium models were run for all combinations of H, C, O, and N abundances, and three types of solutions were found, which are robust against variations of temperature, pressure, and nitrogen abundance.

Type A atmospheres [which] contain H2O, CH4, NH3, and either H2 or N2, but only traces of CO2 and O2.

Type B atmospheres [which] contain O2, H2O, CO2, and N2, but only traces of CH4, NH3, and H2.

Type C atmospheres [which] contain H2O, CO2, CH4, and N2, but only traces of NH3, H2, and O2…

Type A atmospheres are found in the giant planets of our outer solar system. Type B atmospheres occur in our inner solar system: Earth, Venus and Mars all fall under this classification. But we don’t see any planets in our solar system with Type C atmospheres.

Below is a series of charts showing the results for each of the six main molecular species over a range of mixtures.

Figure 2: The vertical axis is the ratio of Hydrogen to Oxygen, starting at 100% Hydrogen at the bottom and running to 100% Oxygen at the top. The horizontal axis shows the proportion of Carbon in the total mixture (the ratio runs up to 35%). Molecular concentrations are in chemical equilibrium as a function of Hydrogen, Carbon, and Oxygen element abundances, calculated for T = 400 K and p = 1 bar. The blank regions are concentrations of < 10⁻⁴.

The central grey triangle marks the region in which H2O, CH4, and CO2 can coexist in chemical equilibrium. The thin grey lines bisecting the triangle indicate where two of the constituents are at equal concentration. These lines are hard to discern unless you can magnify the original image. For H2O and CO2 at equal concentration, it’s the dashed line (the near-vertical line running upwards from 0.2 on the horizontal scale). For CO2 and CH4, it’s the horizontal line. And for H2O and CH4, it’s the dotted line swooping upwards toward the top right-hand corner.

The color bars at the right-hand side of the charts both represent the concentration by color and show the proportion of Nitrogen tied up as N2, i.e. the Nitrogen that is not in NH3. Not surprisingly, the more Hydrogen there is in the mix, the higher the proportion of NH3.

Other Results from the Paper

In the region around the stoichiometric ratio for water, H2O production is at its maximum and supersaturation occurs. Clouds form and the water rains out. Therefore, you cannot get an atmosphere with very high concentrations of water vapor unless the temperature is above about 647 K (374°C), the critical point of water. Precipitation moves the atmospheric composition out of the region that gives CO2/CH4 mixtures.

Atmospheres with high carbon concentrations, and with Hydrogen and Oxygen near their stoichiometric ratio, have most of their atmospheric constituents tied up as water, so at a certain point carbon forms neither CO2 nor CH4 but rains out as soot. This, however, only precludes mixtures at the far right-hand side of the CO2/CH4 triangle.

Full-equilibrium condensation models show that outgassing from warm rock, such as mid-oceanic ridge basalt, can naturally produce Type C atmospheres.

Thoughts and Speculations

i) While it is difficult to argue with the man who coined the term, I still think Madhusudhan’s description of K2-18b as Hycean is too broad. In a YouTube interview, Madhusudhan refers to his paper “Habitability and Biosignatures of Hycean Worlds,” which suggests that ocean-covered planets under a Hydrogen atmosphere can exist within a zone that extends to irradiance levels slightly greater than Earth’s. However, he doesn’t mention the work by Lous et al. in their paper “Potential long-term habitable conditions on planets with primordial H–He atmospheres,” which showed that at irradiance levels equal to or greater than those at 2 au from our Sun, the Hydrogen atmosphere needed to maintain Earthlike temperatures without cooking the planet is so thin that it is lost quickly over geological timescales. (You can see this in more detail in my article Super Earths/Hycean Worlds.) I would therefore define a Hycean planet as a rocky world with a radius of up to 1.8 times Earth’s, receiving less irradiance than a planet 2 au from our Sun. K2-18b, being both larger than this and less dense than a rocky world, would fall, in my mind, firmly into the category of sub-Neptune.

ii) Another way of thinking of Type A, Type B and Type C atmospheres is to denote them as Hydrogen dominated, Oxygen dominated and Carbon dominated. In a Carbon dominated atmosphere, Hydrogen and Oxygen may still make up by far the bulk of the elements present; but because the enthalpy of the Hydrogen-Oxygen reaction is so much greater than that of the other reactions, when Hydrogen and Oxygen are close to their stoichiometric ratio they preferentially remove each other from the mix as water, leaving Carbon as the dominant constituent. There is no Nitrogen dominated atmosphere because over most of its range Nitrogen bonds to itself, forming N2, which is inert.

iii) The lack of H2O spectral lines is puzzling. Madhusudhan, in his interview, suggests that the spectrum was taken of a high, dry stratosphere. To cross-check the plausibility of this, I looked up the physical data on DMS. Dimethyl Sulfide boils at 37°C and freezes at -98°C, which is lower than CO2’s freezing point. It also has a much higher vapor pressure than water at below-freezing temperatures, so this does not contradict the assumption.

iv) I’m surprised this paper is not more widely known, as it not only provides a powerful tool for analyzing the atmospheric spectra of exoplanets but can also point to other aspects of a planet, such as its formation history.

After the Hubble results came out in 2019, papers were published to model the formation of K2-18b, and while a range of possibilities could match the planet’s characteristics, they all rested on the assumption that the planet began with the formation of a rocky/iron core followed by the accretion of large amounts of H2, Helium, and H2O gas. According to the coexistence paper, though, you cannot have large amounts of H2 and get a CO2/CH4 mix with no NH3. So to arrive at its present state, this planet must either never have accreted much gas in the first place or have lost large amounts of Hydrogen after it formed. This latter scenario would require the planet to gain a Hydrogen envelope while at less than full mass in a hot nebula and then, at full mass and in a cooler environment, lose most of its Hydrogen.

It is much easier to explain the planet’s characteristics by assuming it formed outside the snow line, never gained much of a gas envelope, and spiraled inward to its present position. If it was formed from icy bodies like Ganymede and Titan (density ~1.9 gm/cc), this would give a good match for its density (2.7 gm/cc), allowing for gravitational contraction. The snow line is also the zone where carbonaceous chondrites form, so this would give the planet a higher carbon content than a purely rocky/iron one.
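The density comparison above can be checked with a quick scaling from Earth’s mean density. This is a rough sketch, not the author’s calculation: the mass (about 8.63 Earth masses) and radius (about 2.61 Earth radii) are assumed published estimates for K2-18b that do not appear in this post, and their uncertainties are ignored.

```python
# Rough bulk-density check for K2-18b, scaling from Earth's mean density.
# ASSUMPTION: mass ~8.63 Earth masses and radius ~2.61 Earth radii are
# published estimates not quoted in this post; uncertainties are ignored.

EARTH_DENSITY_G_CM3 = 5.514  # Earth's mean density, g/cm^3


def bulk_density(mass_earths: float, radius_earths: float) -> float:
    """Mean density in g/cm^3: Earth's density scaled by M / R^3 (Earth units)."""
    return EARTH_DENSITY_G_CM3 * mass_earths / radius_earths ** 3


k2_18b_density = bulk_density(mass_earths=8.63, radius_earths=2.61)
icy_moon_density = 1.9  # Ganymede/Titan figure used in the text

print(f"K2-18b: {k2_18b_density:.2f} g/cm^3")
print(f"ratio to icy moons: {k2_18b_density / icy_moon_density:.2f}")
```

With these inputs the result lands close to the 2.7 gm/cc quoted in the text, and on the author’s icy-body assumption the ratio of roughly 1.4 is the compression that gravitational contraction would have to supply.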

v) Madhusudhan, again from his interview, seems to think that K2-18b is an ocean planet, but I’m dubious about this for two reasons:

The first is that, from the work done on Hycean planets by Lous et al., any depth of atmosphere, especially one with the potent greenhouse mix of CO2 and CH4, is likely to result in a runaway-greenhouse steam atmosphere inside the classically defined habitable zone (inside 2 au for our Sun).

The second is that the planet’s CO2/CH4 mix also points against this. From the paper, if there is a slight excess of Hydrogen over the stoichiometric ratio for water, then condensing H2O out, as either water or high-pressure ice, pushes the planet’s atmosphere towards a Type A Hydrogen excess, with the CO2 lines disappearing and NH3 lines appearing.

All of this would point towards a planet with a rocky/iron core overlaid by high-pressure ice, which would, at about the megabar level, transition to a gas atmosphere composed mainly of supercritical steam. This would make up a significant volume of the planet. At the top of this atmosphere, the water, now in the form of steam, would condense out as virga (rain that evaporates before reaching the ground), leaving a dry stratosphere consisting mainly of CO2, CH4, H2 and N2.

To test my assumption, I did a rough back-of-the-envelope calculation using online calculators and looked at the wet adiabatic lapse rate (the rate at which temperature increases when saturated air is compressed) per doubling of pressure, starting from 1 bar at 20°C. This rate (about 1.5°C/1000 ft) is considerably less than the rate for dry air (about 3°C/1000 ft).

It was all very ad hoc, but the first thing I noted was that with each pressure doubling the boiling point of water rises significantly (at 100 bar, water boils at roughly 310°C) until it reaches water’s critical point (374°C, about 221 bar), where the boiling curve ends. So as you go deeper and deeper into the atmosphere, the temperature rising along the lapse rate chases the boiling point of water; from my calculations, it catches water’s boiling point at 270°C and 64 bar. The calculations are crude (I was using Earth’s atmospheric composition and gravity), and small changes in the parameters can result in big changes in the crossover point; but what this does point to is that if the planet has an ocean, it could be a rather hot one under a dense atmosphere, and if the atmosphere has any great depth, the ocean is likely to be a supercritical fluid.
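The dry and saturated lapse rates quoted above follow from standard Earth-atmosphere formulas. The sketch below is an illustration under assumptions, not a reconstruction of the author’s online calculators: it uses Earth gravity, dry-air constants, a fixed latent heat, and Bolton’s (1980) approximation for the saturation vapor pressure of water. The saturated rate varies with temperature and pressure; at 20°C and 1 bar this form gives somewhat less than the commonly rounded 1.5°C/1000 ft.

```python
import math

# ASSUMPTION: standard textbook values for Earth gravity and dry air.
G = 9.81                 # gravitational acceleration, m/s^2
CP_DRY = 1004.0          # specific heat of dry air at constant pressure, J/(kg K)
R_DRY = 287.0            # specific gas constant of dry air, J/(kg K)
LV = 2.5e6               # latent heat of vaporization of water, J/kg (held fixed)
EPS = 0.622              # molar-mass ratio, water vapour / dry air
M_PER_1000_FT = 304.8    # metres in 1000 feet


def saturation_vapour_pressure_hpa(t_celsius: float) -> float:
    """Bolton (1980) approximation for saturation vapour pressure over water, hPa."""
    return 6.112 * math.exp(17.67 * t_celsius / (t_celsius + 243.5))


def dry_lapse_rate() -> float:
    """Dry adiabatic lapse rate g / c_p, in K per metre."""
    return G / CP_DRY


def saturated_lapse_rate(t_celsius: float, p_hpa: float) -> float:
    """Saturated (moist) adiabatic lapse rate, in K per metre."""
    t_k = t_celsius + 273.15
    e_s = saturation_vapour_pressure_hpa(t_celsius)
    r_s = EPS * e_s / (p_hpa - e_s)  # saturation mixing ratio, kg/kg
    numerator = G * (1.0 + LV * r_s / (R_DRY * t_k))
    denominator = CP_DRY + LV ** 2 * r_s * EPS / (R_DRY * t_k ** 2)
    return numerator / denominator


dry = dry_lapse_rate() * M_PER_1000_FT
wet = saturated_lapse_rate(20.0, 1000.0) * M_PER_1000_FT
print(f"dry: {dry:.2f} C/1000 ft")
print(f"saturated at 20 C, 1 bar: {wet:.2f} C/1000 ft")
```

Swapping in other gravity, composition, or starting-temperature values changes both numbers, which is part of why the crossover point in the text is so sensitive to the inputs.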

Also, for the atmosphere to be thin, the planet’s inventory of CO2, CH4 and H2 must be less than 1/10,000 of its H2O, which I don’t regard as likely, given what we know about the outer solar system.

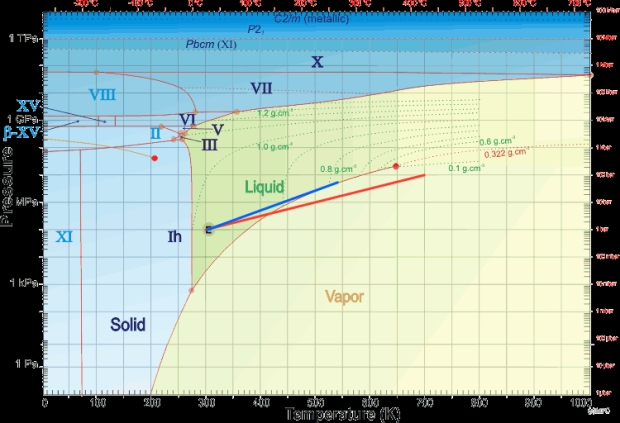

I’ll leave you with a phase diagram of water showing the dry adiabat of Venus, shifted 25°C cooler to represent a dry Earth (red line), and the wet adiabat I calculated (blue line). It’s also a handy diagram to play with, as it gives you an idea of how deep the ocean or supercritical-fluid layer will be at a given temperature before it turns into a layer of high-pressure ice.

vi) One final point, and this reinforces the purpose of the paper: we need to thoroughly understand planetary chemistry to eliminate false biomarkers. DMS is widely touted as a biomarker, but look at the most thermodynamically stable forms of Sulfur: in a Type A reducing atmosphere, it’s H2S; and in a wet, oxidizing Type B atmosphere, it’s the Sulfate (SO4²⁻) ion. Unfortunately, the authors of the paper did not extend their thermodynamic analysis to Sulfur, but DMS’s formula, (CH3)2S, makes it look an awful lot like a good candidate for the most thermodynamically stable form of Sulfur in a Type C atmosphere, not a biomarker.

References

Wikipedia: K2-18b

https://en.wikipedia.org/wiki/K2-18b

N. Madhusudhan, S. Sarkar, S. Constantinou, M. Holmberg, A. Piette, and J. Moses, Carbon-bearing Molecules in a Possible Hycean Atmosphere, Preprint, arXiv: 2309.05566v2, Oct 2023

https://esawebb.org/media/archives/releases/sciencepapers/weic2321/weic2321a.pdf

P. Woitke, O. Herbort, Ch. Helling, E. Stüeken, M. Dominik, P. Barth and D. Samra, Coexistence of CH4, CO2, and H2O in exoplanet atmospheres, Astronomy & Astrophysics, Vol. 646, A43, Feb 2021

https://doi.org/10.1051/0004-6361/202038870

N. Madhusudhan, M. Nixon, L. Welbanks, A. Piette and R. Booth, The Interior and Atmosphere of the Habitable-zone Exoplanet K2-18b, The Astrophysical Journal Letters, 891:L7 (6pp), 2020 March 1

https://doi.org/10.3847/2041-8213/ab7229

Super Earths/Hycean Worlds, Centauri Dreams 11 November, 2022

YouTube interview of Nikku Madhusudhan, “Is K2-18b a Hycean Exoworld?”, on Colin Michael Godier’s Event Horizon

Galactic Civilizations: Does N=1?

I don’t suppose that Frank Drake intended his famous Drake Equation to be anything more than a pedagogical device, or rather, an illustrative tool to explain what he viewed as the most significant things we would need to know to figure out how many other civilizations might be out there in the galaxy. This was back in 1961, and naturally the equation was all about probabilities, because we didn’t have hard information on most of the factors in the equation. Drake was already searching for radio signals at Green Bank, in the process inventing SETI as practiced through radio telescopes.

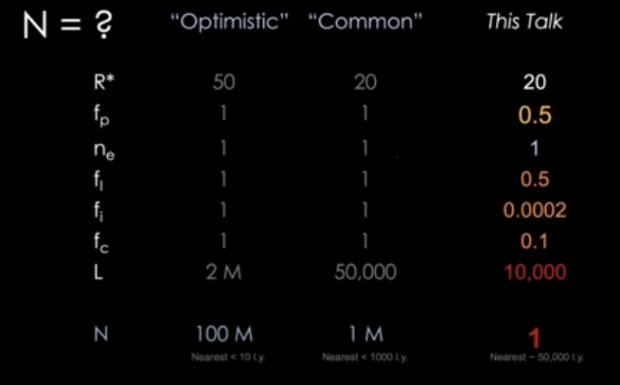


The factors here should look familiar to most Centauri Dreams readers, but let’s run through them, because among the old hands here we also get an encouraging number of students and people new to the field. N is the number of civilizations with communications potential in the galaxy, with R* the rate of star formation, fp the fraction of stars with planets, ne the number of planets that can support life per system, fl the fraction of planets that actually develop life, fi the fraction that develop intelligent life, fc the fraction that go on to communicate, and L the lifetime of a technological civilization.

The idea of course is that you can multiply all these things together to derive some idea of how many civilizations are out there whose signals might be detected on Earth. Multiplying all those factors obviously ratchets up the uncertainties, but given that we have been proceeding with our investigations, and fruitfully so, for decades since Drake addressed the 1961 meeting at Green Bank, it’s interesting to see just where we stand today. Bringing us up to date is what Pascal Lee did at the recent symposium of the Interstellar Research Group in Montreal, where he gave the talk you can access here.

Image: M31, the Andromeda galaxy. Are civilizations common in spirals like this one? The Drake Equation is one way of probing the question, with ever-changing results. Credit: Space Telescope Science Institute.

Lee (SETI Institute, NASA Ames) is all too aware that with Earth as our only datapoint, we run the risk of being eaten alive by our own assumptions. So he takes a conservative approach on each of the factors involved in the Drake Equation. I think some of the most interesting factors here relate to the nature of intelligence, which didn’t pop up until halfway through the life of our star. That’s assuming you posit, as Lee does, that the appearance of Homo erectus signifies this development, and this datapoint indicates that intelligence is not necessarily a common thing.

After all, the creatures that ran the show here on Earth for well over 200 million years do not seem to have developed intelligence, if we take Lee’s definition and say that this trait involves making technologies that did not exist earlier. A beaver dam is not a mark of intelligence because over the course of time it remains the same basic structure. True intelligence, by contrast, produces evolving and improving technologies. Thus primitive humans learn how to control fire. Then they make it portable. They start using tools. That this occurs so late causes Lee to give the fi factor a value of 0.0002, derived by dividing the median duration Homo erectus has been around, roughly one million years, by the age of our planet. That’s but a sliver of Earth’s geological history. We can reasonably argue that intelligence is circumstantial and fortuitous.

And what about intelligence developing into a technological civilization? This one is likewise interesting, and I like Lee’s idea of pegging 1865 as the time when humans became capable of electromagnetic communications because of James Clerk Maxwell’s equations. Thus we go from intelligence to communicative technologies and SETI possibilities in roughly a million years, from Homo erectus to Maxwell.

But that’s just Earth’s datapoint. What about ocean worlds, or life forms in gas giant atmospheres or in heavy gravity surface environments where attaining orbit is itself problematic and perhaps the stars are rarely visible? Intelligence may develop without producing advanced civilizations that can become SETI targets. Here we have to get arbitrary, but Lee’s choice for the fraction of intelligent life turning into communicating civilizations is 0.1. It happens, in other words, just one time in ten. He’s being deliberately conservative about this, and the result has a lot of play in the equation.

Take a look at the rest of Lee’s values, based on all the work on these matters since the Drake Equation was conceived. Here’s his summary slide of the current outlook. I won’t run through each of the factors, because we’re making progress on refining our numbers for those on the left side of the equation, whereas for the last two we’re still extrapolating from a serious shortage of data. That is certainly true of the last factor, L.

Obviously how we evaluate the longevity of a civilization determines the outcome, for if it is common for advanced technological cultures to destroy themselves, then we could be looking at a galaxy full of ruins rather than one with a flourishing network of intelligence. Our own threats are obvious enough: nuclear war, pandemics, runaway AI or nanotech, the ‘democratizing’ of potentially lethal technologies and more. Speculation on this matter runs the gamut, from a lifetime of 100 years to over a million, but historically human cultures last somewhere between 500 and 5000 years.

Is the Anthropocene to be little more than a thin layer of rust in the geological strata? Lee sees 10,000 years for the lifetime of a civilization as a generous estimate, given that we are global and have more than the capability of self-annihilation. If this estimate is correct, we arrive at the conclusion shown in the slide above: the number of civilizations in our galaxy most likely equals one. And that would be us.
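Lee’s three explicitly stated factors can be checked with a few lines of arithmetic. This is a minimal sketch: Earth’s age (4.5 billion years) is an assumption, and because the post does not quote Lee’s values for R*, fp, ne and fl, the code only computes what their product would have to be for N to come out near one; it does not reproduce his slide.

```python
def drake(r_star, f_p, n_e, f_l, f_i, f_c, lifetime):
    """Drake Equation: N = R* x fp x ne x fl x fi x fc x L."""
    return r_star * f_p * n_e * f_l * f_i * f_c * lifetime


# Lee's fi: roughly one million years of Homo erectus divided by Earth's age.
# ASSUMPTION: Earth's age is taken as 4.5 billion years.
fi_estimate = 1.0e6 / 4.5e9  # about 0.00022; Lee rounds this to 0.0002

# The three factors stated in the post.
F_I = 0.0002       # fraction that develop intelligent life
F_C = 0.1          # fraction of intelligent species that communicate
LIFETIME = 10_000  # years a communicating civilization lasts

# Product of the last three factors on their own.
tail = F_I * F_C * LIFETIME

# For N to come out near 1, the first four factors (R*, fp, ne, fl)
# must multiply to about 1 / tail.
head_needed = 1.0 / tail

# HYPOTHETICAL split: the whole head product is placed in R*, with
# fp = ne = fl = 1, purely to exercise the formula.
n = drake(head_needed, 1.0, 1.0, 1.0, F_I, F_C, LIFETIME)

print(f"fi estimate: {fi_estimate:.5f}")
print(f"fi * fc * L = {tail:.2f}; R* * fp * ne * fl needed for N = 1: {head_needed:.1f}")
print(f"N = {n:.2f}")
```

The point of the exercise is that the intelligence, communication and lifetime factors alone shrink the result by a factor of five, so the astronomical factors on the left only need to multiply to a modest number for N to land at one.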

I was once asked in the question session after a talk how many civilizations I thought were in the Milky Way right now. I remember hedging my bets by referring to everybody else’s estimates – the 1961 estimate from the Green Bank meeting had ranged from 1,000 to 100,000,000, whereas one recent paper pegged the number at 30. But my interlocutor pressed me: What was my estimate? My conservative nature came to the fore, and I heard myself answering: “Between 1 and 10.” That’s still my estimate, but as I told my audience then, it’s the hunch of a writer, not the conclusion of a scientist, so take it for what it is.

Now I find Lee reaching the same conclusion, but two things about this stick out. If a single civilization did somehow get past what Robin Hanson calls ‘the great filter’ to emerge as a star-faring species living on many worlds, then their presence could still make all our talk of an Encyclopedia Galactica relevant. We might one day find that there is indeed a thriving network of intelligence, but one based around the work of a single Ur-civilization whose works we had better learn about and emulate. It’s a pleasing thought that such a civilization might be biological as well as machine-based, but all bets are off.

The other point, and this is one Lee makes in his talk: If life is common but technological civilizations are rare, that still leaves room for a value for N that takes in not just the Milky Way but the entire universe. N=1 means that the visible universe should contain 10¹¹ civilizations (one for each of roughly 10¹¹ galaxies), a satisfyingly large number and one that keeps our SETI hopes alive. We had better, in this case, concentrate our attention on nearby galaxies to have the greatest chance of success. There are 60 of them within 2 million light years, and over a thousand within 33 million light years. M31, the Andromeda galaxy, may deserve more SETI attention than it has been getting.

SETI: A New Kind of Stellar Engine

The problem of perspective haunts SETI, and in particular that branch of SETI that has been labeled Dysonian. This discipline, based on Freeman Dyson’s original notion of spheres of power-gathering technology enclosing a star, has given rise to the ongoing search for artifacts in our astronomical data. The fuss over KIC 8462852 (Boyajian’s Star) a few years back involved the possibility that it was orbited by a megastructure of some kind, and thus a demonstration of advanced technology. Jason Wright and team at Penn State have led searches, covered in these pages, for evidence of Dyson spheres in other galaxies. The Dysonian search continues to widen.

I cite a problem of perspective in that we have no real notion of what we might find if we finally locate signs of extraterrestrial builders in our data. It’s so comfortable to be a carbon-based biped, but the entities we’re trying to locate may have other ways of evolving. Clément Vidal, a French philosopher and one of the most creative thinkers that SETI has yet produced, likes to talk not about carbon or silicon but rather ‘substrates.’ Where, in other words, might intelligence eventually land, and is that likely to be a matter of chemistry and biology or simple energy?

Vidal is currently at the UC Berkeley SETI Research Center, though he has deeper roots at the Free University of Brussels, from which he created his remarkable The Beginning and the End (Springer, 2014), along with a string of other publications. Try to come up with a definition of life and you may well emerge with something like this: Matter and energy in cyclical relationship using energy drawn from the environment to increase order in the system. I think that was Vidal’s starting point; it’s drawn from Gerald Feinberg and Robert Shapiro’s Life Beyond Earth (Morrow, 1980). No DNA there. No water. No carbon. Instead, we’re addressing the basic mechanism at work. In how many ways can it occur?

As Vidal reminded the audience at the recent Interstellar Research Group symposium in Montreal (video here), we are even now, at our paltry 0.72 rating on the Kardashev scale, creating increasingly interesting software that at least mimics intelligence to a rather high order. Making further advances that may exceed human intelligence is conceivably a matter of mere decades. If we consider intelligence embedded in a substrate of some kind, it makes sense that our planet may house fewer biological beings in the distant future than creatures we can call ‘artilects.’

The silicon-based outcome has been explored by thinkers like Martin Rees and Paul Davies in the recent literature. But the ramifications go much further than this. If we consider life as critically embedded in energy flows, the notion of life upon a neutron star swims into the realm of possibility. Frank Drake is one scientist who wrote about such things, as did Robert Forward in his novel Dragon’s Egg (Ballantine, 1980). If the underlying biology is of less importance and matter/energy interactions take precedence, we can further consider concepts like intelligence appearing wherever these interactions are at their most intense. Vidal has explored close binary systems as places where a civilization might mine energy, and for all we know, extract it to support a cognitive existence far removed from our notion of a habitable zone.

What about stars themselves? Greg Matloff has pointed to the low temperatures of red dwarf stars as allowing molecular interactions in which a primitive form of intelligence might emerge. Olaf Stapledon dreamed up civilizations using stellar energies in novel ways in Star Maker (Methuen, 1937) and mused on the emergence of stellar awareness. At Montreal, Vidal presented recent work on how an advanced civilization – in whatever substrate – might deploy a star orbited closely by a neutron star or black hole as a system of propulsion, with the ‘evaporation’ from the host star flowing to the compact companion and being directed by timing the pulsations to coincide with the orbital position of each. Far beyond our technologies, but then we’re at 0.72, as opposed to Kardashev civilizations at the far end of Kardashev II.
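The 0.72 figure is most likely based on Carl Sagan’s continuous version of the Kardashev scale, K = (log10 P − 6)/10 with P in watts; the post does not say how the number was derived, so treat that as an assumption. A minimal sketch of the formula and its inverse:

```python
import math


def kardashev_rating(power_watts: float) -> float:
    """Sagan's interpolation of the Kardashev scale: K = (log10 P - 6) / 10."""
    return (math.log10(power_watts) - 6.0) / 10.0


def power_for_rating(k: float) -> float:
    """Inverse of kardashev_rating: the power in watts corresponding to rating k."""
    return 10.0 ** (10.0 * k + 6.0)


today = power_for_rating(0.72)  # power implied by the 0.72 rating
type_i = power_for_rating(1.0)
type_ii = power_for_rating(2.0)

print(f"K = 0.72 -> {today:.1e} W")
print(f"Type I -> {type_i:.0e} W, Type II -> {type_ii:.0e} W")
print(f"Type II / today: {type_ii / today:.1e}")
```

Because the scale is logarithmic, each step of 0.1 is a tenfold increase in power, so reaching K = 2 alone means a power increase of nearly thirteen orders of magnitude over a 0.72 civilization.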

We have no idea how likely it is that such entities could emerge, but consider this. Charles Lineweaver’s work at Australian National University shows that the average Earth-like planet in the galaxy is on the order of 1.8 billion years older than Earth. The Serbian astronomer and writer Milan Ćirković has made the further point that this 1.8 billion year head-start is only an average. There must be planets considerably more than 1.8 billion years older than ours, and that makes for quite a few millennia for intelligence to develop and technologies to flourish.

Our first encounter with another civilization, then, is almost certainly going to be with one far older than our own. What, then, might we find one day in our astronomical data? I’ve quoted Vidal on this in the past and want to cite the same passage from The Beginning and the End today:

We need not be overcautious in our astrobiological speculations. Quite the contrary, we must push them to their extreme limits if we want to glimpse what such advanced civilizations could look like. Naturally, such an ambitious search should be balanced with considered conclusions. Furthermore, given our total ignorance of such civilizations, it remains wise to encourage and maintain a wide variety of search strategies. A commitment to observation, to the scientific method, and to the most general scientific theories remains our best touchstone.

The specific speculation Vidal tantalized the crowd with at Montreal is one he calls the ‘spider stellar engine,’ about which a quick word. Two types of ‘spider engines’ get his attention, the ‘redback’ and the ‘black widow.’ I assume Vidal is not necessarily an arachnophile, but rather a man aware of the current astrophysical jargon about extreme objects and pulsar binaries in particular. A redback refers to a rapidly rotating neutron star in tight orbit around a star massing up to 0.6 solar masses. A black widow has a much smaller companion star, and the term spider simply refers to the fact that the pulsar’s gravity draws material away from the larger star.

The larger star in such systems can be, spider-like, completely consumed, a useful marker as we study effects such as accretion disks and mass transfer between the two objects. Here is the energy gradient we are looking for in the question of a basic life definition, one that can be exploited by any beings that want to take advantage of it. A long-lived Kardashev II civilization, having feasted on the host star for its energies, could use what is left of the dwindling star at the end of its life to move to another host. The question for astronomers as well as philosophers is whether such a system would throw an observational signal that is detectable, and the question at this point remains unanswered.

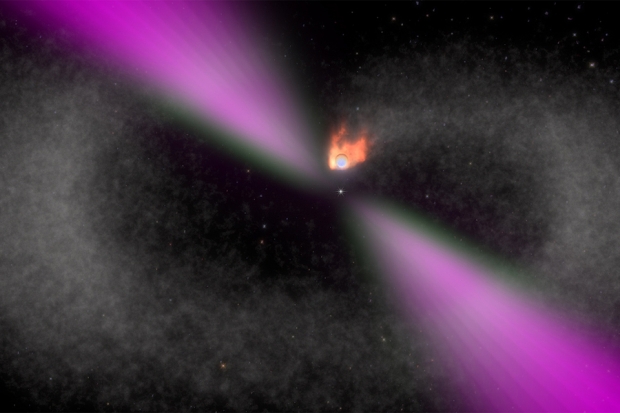

Image: An illustrated view of a black widow pulsar and its stellar companion. The pulsar’s gamma-ray emissions (magenta) strongly heat the facing side of the star (orange). The pulsar is gradually evaporating its partner. ZTF J1406+1222 has the shortest orbital period yet identified, with the pulsar and companion star circling each other every 62 minutes. Credit: NASA Goddard Space Flight Center/Cruz deWilde.

We do have some interesting systems to watch, however. Vidal cites the pulsar PSR B1957+20 as having pulsations between host star and pulsar that match the orbital period, but notes that of course there are other ways of explaining this effect. We may want to include this particular signature as an item to look for in our pulsar work related to SETI, however. Meanwhile, the question of stellar propulsion (I think also of the ‘Shkadov thruster,’ another type of hypothesized stellar engine), explored by Vidal in his Montreal talk, gives way to the broader question with which we began. Are our perspectives sufficient to look for the kind of astronomical signatures that might be pointing toward forms of life almost unimaginably beyond our own?