Centauri Dreams

Imagining and Planning Interstellar Exploration

Habitability: Similar Magnetic Activity Links Stellar Types

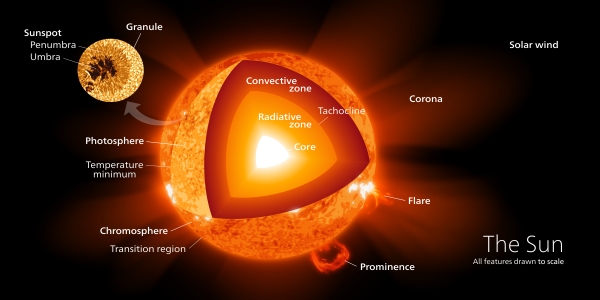

Looking at flare activity in young M-dwarf stars, as we did in the last post, brings out a notable difference between these fast-spinning stars and stars like the Sun. Across stellar classifications from M- to F-, G- and K-class stars, there is commonality in the fusion of hydrogen into helium in the stellar cores. But the Sun has a zone at which energy carried toward the surface as radiative photons is absorbed or scattered by dense matter.

At this point, convection begins as colder matter moves downward and hot matter rises. This radiative zone giving way to convection is distinctive — stars in the M-class range, a third of the mass of the Sun and lower, do not possess a radiative core, but undergo convection throughout their interior.

Image: Interior structure of the Sun. Credit: kelvinsong / Wikimedia Commons CC BY-SA 3.0.

If we’re going to account for magnetic phenomena like starspots, flares and coronal mass ejections, we can come up with a model that fits stars with a radiative core, but fully convective stars might be expected to have a different kind of magnetic dynamo. What stands out in the data, however, is that the relationship between the star’s rotation and its magnetic activity appears the same for stars on both sides of what I might call the ‘convective divide.’ In both cases, the magnetic dynamo seems to be efficient despite the fact that M-dwarfs are fully convective.

Digging further into the subject is a new paper out of Rice University, where modeling of these phenomena examines the linkage between the rotation of stars and the behavior of their surface magnetic flux. The flux in turn governs the luminosity of the star at X-ray wavelengths, giving us a way to probe magnetic activity and its potential effects on planets in these systems. The paper explaining the new model has just run in The Astrophysical Journal. Lead author Alison Farrish comments on the implications over time as rotation periods change:

“All stars spin down over their lifetimes as they shed angular momentum, and they get less active as a result. We think the sun in the past was more active and that might have affected the early atmospheric chemistry of Earth. So thinking about how the higher energy emissions from stars change over long timescales is pretty important to exoplanet studies.”

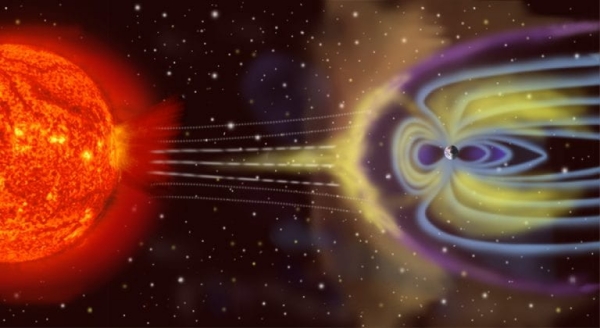

Image: Rice University scientists have shown that “cool” stars like the sun share dynamic surface behaviors that influence their energetic and magnetic environments. Stellar magnetic activity is key to whether a given star can host planets that support life. Credit: NASA.

Farrish and team used the Rossby number of our own star to model the behavior of other stars. This value measures stellar activity through the combination of rotational speed and subsurface fluid flows that influence how the magnetic flux is distributed on the stellar surface. Presumably the magnetic field in stars with a radiative zone is generated at the interface between the interior radiative region and the outer convective zone. The Rossby number relates the rotation of the star, determined through observation, to its internal convective activity.
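For readers who like to see the quantity written out, here is a minimal sketch of the usual definition: the Rossby number as the ratio of the rotation period to the convective turnover time. The function name and the turnover times below are illustrative placeholders of my own, not values taken from the Farrish et al. paper.

```python
def rossby_number(rotation_period_days: float, convective_turnover_days: float) -> float:
    """Rossby number as rotation period divided by convective turnover time.

    Both inputs must be in the same unit (days here). Smaller values mean
    rotation dominates over convection, which goes with stronger activity.
    """
    if rotation_period_days <= 0 or convective_turnover_days <= 0:
        raise ValueError("periods must be positive")
    return rotation_period_days / convective_turnover_days


# Placeholder inputs for illustration only (assumed, not from the paper):
# a slowly rotating Sun-like star and a fast-rotating young M-dwarf.
sun_like = rossby_number(rotation_period_days=27.0, convective_turnover_days=13.0)
young_m_dwarf = rossby_number(rotation_period_days=1.0, convective_turnover_days=70.0)

print(f"Sun-like star: Ro = {sun_like:.2f}")
print(f"Young M-dwarf: Ro = {young_m_dwarf:.3f}")
```

The point of the ratio is that two very different stars can be compared on one axis: the rotation period comes from observation, while the turnover time has to come from a stellar structure model.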

The results affirm that the mechanisms producing local ‘space weather’ are common across different stellar classes, meaning we can with some confidence examine planetary systems around M, F, G and K stars using the same model. The process generating a star’s magnetic field may thus turn out to be similar despite the presence, or lack, of a radiative core. Adds co-author Christopher Johns-Krull:

“A lot of ideas about how stars generate a magnetic field rely on there being a boundary between the radiative and the convection zones, so you would expect stars that don’t have that boundary to behave differently. This paper shows that in many ways, they behave just like the sun, once you adjust for their own peculiarities.”

Thus an M-dwarf with a Rossby number typical for its class shows magnetic behaviors close enough to the Sun for us to make predictions about their effect on its planets. The stellar magnetic field data are, in turn, affected by the activity cycle of individual stars, which the model does not include because this would demand lengthy observational study for each star. But from the perspective of magnetically active stars, the new model from Farrish and team can be applied to interactions within their systems.

We’re a long way from knowing whether M-dwarf systems like that at Proxima Centauri or the intriguing TRAPPIST-1 and L 98-59 could support living planets, but our models for their magnetic interactions can draw on what we see in our own Sun, despite its differences in age and stellar class. Refining that model for these systems will help us determine the most likely M-dwarf candidates for habitability.

The paper is Farrish et al., “Modeling Stellar Activity-rotation Relations in Unsaturated Cool Stars,” Astrophysical Journal Vol. 916, No. 2 (3 August 2021). Abstract.

Can M-Dwarf Planets Survive Stellar Flares?

We can learn a lot about stars by studying magnetic activity like starspots, flares and coronal mass ejections (CMEs). Starspots are particularly significant for scientists using radial velocity methods to detect planets, because they can sometimes mimic the signature of a planet in the data. But the astrobiology angle is also profound: Young M-dwarfs, known for flare activity, could be fatally compromised as hosts for life because strong flares can play havoc with planetary atmospheres.

Given the ubiquity of M-dwarfs — they’re the most common type of star in our galaxy — we’d like to know whether or not they are candidates for supporting life. A paper from Ekaterina Ilin and team at the Leibniz Institute for Astrophysics in Potsdam digs into the question by looking at the orientation of magnetic activity on young M-dwarfs.

The sample is small, though carefully chosen from the processing of over 3000 red dwarf signatures obtained by TESS, the Transiting Exoplanet Survey Satellite mission. The results are promising, indicating that the worst flare activity an M-dwarf can produce occurs along the poles of the star. If that is the case, then a young planetary system may remain unscathed. Here’s how Ilin describes this:

“We discovered that extremely large flares are launched from near the poles of red dwarf stars, rather than from their equator, as is typically the case on the Sun. Exoplanets that orbit in the same plane as the equator of the star, like the planets in our own solar system, could therefore be largely protected from such superflares, as these are directed upwards or downwards out of the exoplanet system. This could improve the prospects for the habitability of exoplanets around small host stars, which would otherwise be much more endangered by the energetic radiation and particles associated with flares compared to planets in the solar system.”

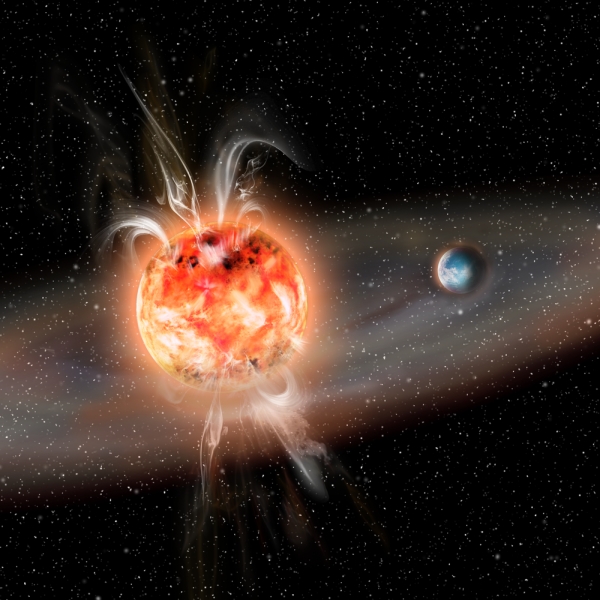

Image: Small stars flare actively and expel particles that can alter and evaporate the atmospheres of planets that orbit them. New findings suggest that large superflares prefer to occur at high latitudes, sparing planets that orbit around the stellar equator. Credit: AIP/ J. Fohlmeister.

This is provocative stuff, even if only four stars emerged from the TESS data as fitting the criteria the researchers were looking for. To understand how they winnowed these stars out, we need to look at PEPSI, the Potsdam Echelle Polarimetric and Spectroscopic Instrument, mounted at the Large Binocular Telescope (LBT) in Arizona. Feeding polarized light to the spectrograph, scientists using PEPSI have been able to use what is called the Zeeman effect (the splitting and polarization of spectral lines in the presence of a magnetic field) to analyze the geometry of a star’s magnetic field.

This earlier work has implied the existence of concentrated magnetic activity near the poles of fast rotating stars like young M-dwarfs, activity that emerges as spots and flares. While the Zeeman technique could reconstruct a stellar magnetic field, no observations of this polar clustering had previously been made — bear in mind that we cannot resolve the surface of the target stars. The Potsdam researchers were able to detect signs of polar clustering by analyzing white-light flares on their target stars, pinpointing the latitude of the flaring region from the shape of the light curve.

This works because modulations in brightness, caused by the young stars’ fast spin as the flare location rotates in and out of view on the stellar surface, carry useful information. M-dwarfs remain fast rotators much longer than stars like the Sun; in fact, the fast rotation enhances their magnetic and flare activity. The researchers were able to determine where on the star these flares occurred. According to the paper:

The exceptional morphology of the modulation allowed us to directly localize these flares between 55° and 81° latitude on the stellar surface. Our findings are evidence that strong magnetic fields tend to emerge close to the rotational poles of fast-rotating fully convective stars, and suggest a reduced impact of these flares on exoplanet habitability.
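The geometry behind reading a latitude off the modulation can be sketched in a few lines. This is a simplified illustration of my own, not the authors' fitting procedure: it treats the flaring region as a point on a rigidly rotating sphere and asks for what fraction of a rotation that point faces the observer. Inclination is assumed here to be the angle between the rotation axis and the line of sight (90° means we view the star equator-on).

```python
import math


def projection_factor(latitude_deg: float, inclination_deg: float, phase: float) -> float:
    """Cosine of the angle between the local surface normal and the line of sight.

    phase is the rotation phase in [0, 1); phase 0 puts the region on the
    observer-facing meridian. A positive value means the region is in view.
    """
    lat = math.radians(latitude_deg)
    inc = math.radians(inclination_deg)
    lon = 2.0 * math.pi * phase
    return math.cos(inc) * math.sin(lat) + math.sin(inc) * math.cos(lat) * math.cos(lon)


def visible_fraction(latitude_deg: float, inclination_deg: float, samples: int = 3600) -> float:
    """Fraction of one rotation during which the region faces the observer."""
    visible = sum(
        1
        for k in range(samples)
        if projection_factor(latitude_deg, inclination_deg, k / samples) > 0.0
    )
    return visible / samples


# Hypothetical viewing geometry: inclination of 60 degrees.
for latitude in (0.0, 30.0, 70.0):
    print(f"latitude {latitude:4.1f} deg: in view {visible_fraction(latitude, 60.0):.2f} of the rotation")
```

A region near the equator disappears behind the star for part of each rotation, while a region at sufficiently high latitude never fully sets and is only dimmed by foreshortening. That difference in the shape of the modulation is the kind of information a long-lived flare can carry.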

The kind of long-duration superflare activity considered most lethal for planetary atmospheres, in other words, occurs much closer to the pole than the weaker flares and spots found below 30°. On our own mature G-class star, sunspots and flares associated with them tend to occur near the equator. This paper offers, then, a continuing lifeline for those interested in the prospects for life around M-class stars, while also pointing to the need for what the authors call “the first fully empirical spatio-temporal flare reconstructions on low mass stars.” The emergence of such a model will help us draw broader conclusions on the impact of stellar magnetic activity on M-dwarf planets.

The paper is Ilin et al., “Giant white-light flares on fully convective stars occur at high latitudes,” accepted at Monthly Notices of the Royal Astronomical Society 05 August 2021 (abstract / Preprint). Thanks to Michael Fidler and Antonio Tavani for an early heads-up on this work.

Europa: Building the Clipper

Seeing spacecraft coming together is always exciting, and when it comes to Europa Clipper, what grabs my attention first is the radiation containment hardware. This is a hostile environment even for a craft that will attempt no landing, for flybys take sensitive electronics into the powerful radiation environment of Jupiter’s magnetosphere. 20,000 times stronger than Earth’s, Jupiter’s magnetic field creates a magnetosphere that affects the solar wind fully three million kilometers before it even reaches the planet, trapping charged particles from the Sun as well as Io.

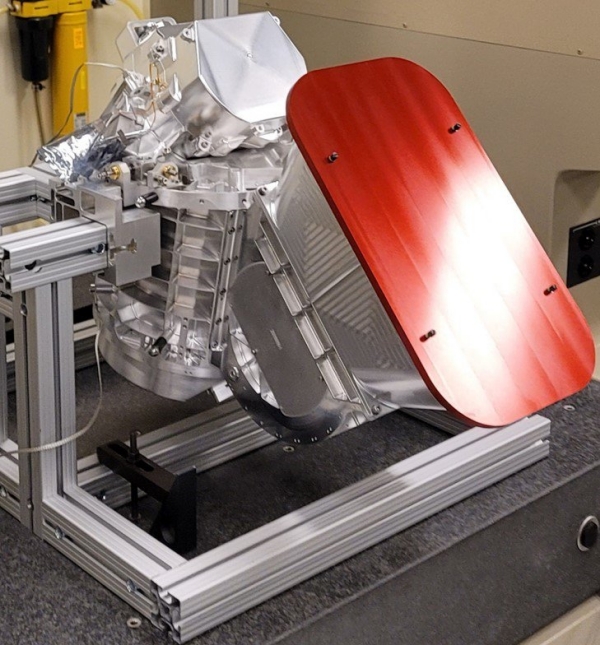

We have to protect Europa Clipper from the intense radiation emerging out of all this, and in the image below you can see what the craft’s engineers have come up with. Now nearing completion at the Jet Propulsion Laboratory, the aluminum radiation vault will ultimately be attached to the top of the spacecraft’s propulsion module, connecting via kilometers of cabling that will allow its power box and computer to communicate with systems throughout the spacecraft. The duplicate vault shown below is used for stress testing before final assembly of the flight hardware.

Image: Engineers and technicians in a clean room at NASA’s Jet Propulsion Laboratory display the thick-walled aluminum vault they helped build for the Europa Clipper spacecraft. The vault will protect the spacecraft’s electronics from Jupiter’s intense radiation. In the background is a duplicate vault. Credit: NASA/JPL-Caltech.

The ATLO phase (Assembly, Test, and Launch Operations) begins in the spring of 2022 at JPL, with the radiation vault being one of the first components in place as Europa Clipper enters its final stage of fabrication. The 3-meter tall propulsion module was recently moved from Goddard Space Flight Center in Greenbelt, Maryland to the Johns Hopkins Applied Physics Laboratory (APL) in preparation for the installation of electronics, radios, antennae and cabling. Science instruments, meanwhile, are being tested at the universities and other institutions contributing to the mission.

Jan Chodas (JPL) is Europa Clipper Project Manager:

“It’s really exciting to see the progression of flight hardware moving forward this year as the various elements are put together bit by bit and tested. The project team is energized and more focused than ever on delivering a spacecraft with an exquisite instrument suite that promises to revolutionize our knowledge of Europa.”

Before the ATLO phase begins, Europa Clipper will also be subject to a System Integration Review later this year, a process in which all instruments are inspected and plans for the assembly and testing of the spacecraft are finalized. The destination for all these components and instruments is the main clean room at JPL in Pasadena, where what NASA describes as the ‘choreography’ of building a flagship mission will draw together components from workshops and laboratories in the US and Europe.

Image: Contamination control engineers in a clean room at NASA’s Goddard Space Flight Center in Greenbelt, Maryland, evaluate a propellant tank before it is installed in NASA’s Europa Clipper spacecraft. The tank is one of two that will be used to hold the spacecraft’s propellant. It will be inserted into the cylinder seen at left in the background, one of two cylinders that make up the propulsion module. Credit: NASA/GSFC Denny Henry.

The travels of the propulsion module emphasize the collaborative nature of any complex spacecraft assembly. The two cylinders making up the module were built at the Applied Physics Laboratory and then shipped to JPL, where thermal tubing carrying coolant to regulate the spacecraft’s temperature in deep space was added. The cylinders then went to Goddard, where the propellant tanks were installed inside them and the craft’s sixteen rocket engines were attached to the outside. It then returned to APL for the installation of electronics and cabling mentioned above.

Image: Engineers and technicians in a clean room at NASA’s Goddard Space Flight Center in Greenbelt, Maryland, integrate the tanks that will contain helium pressurant onto the propulsion module of NASA’s Europa Clipper spacecraft. Credit: NASA/GSFC Barbara Lambert.

Connecting to the thermal tubing will be Europa Clipper’s radiator, which will radiate enough heat into space to keep the spacecraft in its operating temperature range. APL is now integrating the propulsion module and the radios, antennae and cabling for communications, while a company called Applied Aerospace Structures Corporation in Stockton, California is building the 3-meter high-gain antenna. By the spring of next year, the antenna will be in place at JPL for insertion in the ATLO process.

Nine science instruments will fly aboard Europa Clipper, all being assembled and undergoing testing at NASA centers as well as partner institutions and private vendors. The spacecraft is to investigate the depth of the internal ocean as well as its salinity and the thickness of the ice crust. The latter is obviously a huge factor in any future plans to sample the ocean beneath the ice, but so is the question of whether Europa vents subsurface water into space through plumes that may one day be sampled.

Image: NASA’s Jet Propulsion Laboratory in Southern California is building the spectrometer for the agency’s Europa Clipper mission. Called the Mapping Imaging Spectrometer for Europa (MISE), it is seen in the midst of assembly in a clean room at JPL. Pronounced “mize,” the instrument will analyze infrared light reflected from Jupiter’s moon Europa and will map the distribution of organics and salts on the surface to help scientists understand if the moon’s global ocean – which lies beneath a thick layer of ice – is habitable. Credit: NASA/JPL-Caltech.

We’ll finally be able to update those Galileo images that have served scientists so well in the study of Europa’s surface with new, detailed looks at the surface geology. Launch is currently planned for October, 2024 aboard a Falcon Heavy rocket. Europa Clipper isn’t a life detection mission, but we’ll learn a good deal more about Europa’s potential for supporting life. What kind of mission grows out of that is something it would be foolhardy to predict. One step at a time as Europa reveals its mysteries.

L 98-59 b: A Rocky World with Half the Mass of Venus

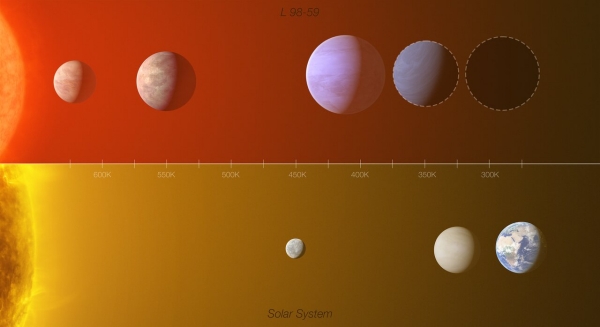

ESPRESSO comes through. The spectrograph, mounted on the European Southern Observatory’s Very Large Telescope, has produced data allowing astronomers to calculate the mass of the lightest exoplanet ever measured using radial velocity techniques. The star is L 98-59, an M-dwarf about a third of the mass of the Sun some 35 light years away in the southern constellation Volans. It was already known to host three planets in tight orbits of 2.25 days, 3.7 days and 7.5 days. The innermost world, L 98-59 b, has now been determined to have roughly half the mass of Venus.

What extraordinary precision from ESPRESSO (Echelle SPectrograph for Rocky Exoplanets and Stable Spectroscopic Observations). The three previously known L 98-59 planets were discovered in data from TESS, the Transiting Exoplanet Survey Satellite, which spots dips in the lightcurve from a star when a planet crosses its face.

Adding ESPRESSO’s data, and incorporating previous data from HARPS, has allowed Olivier Demangeon (Instituto de Astrofísica e Ciências do Espaço, University of Porto) and team to refine the planets’ masses. Because we already know their radii through transits, we can constrain the density of these rocky worlds. Intriguingly, 30% of L 98-59 d’s mass could be water.
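The step from mass and radius to density is simple enough to sketch. Working in Earth units, bulk density scales as mass divided by radius cubed. The function name and the example inputs below are placeholders of my own for illustration, not the measured values from the Demangeon et al. paper.

```python
EARTH_MEAN_DENSITY_G_CM3 = 5.51  # mean density of Earth


def bulk_density_g_cm3(mass_earth: float, radius_earth: float) -> float:
    """Bulk density of a planet given mass and radius in Earth units."""
    if mass_earth <= 0 or radius_earth <= 0:
        raise ValueError("mass and radius must be positive")
    return EARTH_MEAN_DENSITY_G_CM3 * mass_earth / radius_earth**3


# Illustrative (assumed) inputs: a small planet of 0.4 Earth masses
# and 0.85 Earth radii.
density = bulk_density_g_cm3(mass_earth=0.4, radius_earth=0.85)
print(f"bulk density: {density:.2f} g/cm^3")
```

Because the radius enters as a cube, a modest uncertainty in the transit radius translates into a much larger uncertainty in density, which is why composition estimates such as a water fraction come with wide error bars.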

What stands out here, though, is the confirmation of ESPRESSO’s capabilities as we continue to drill down into the centimeters-per-second range that will allow us to probe small rocky worlds around other stars. We’ve seen rapid growth in spectrography through ESPRESSO as well as NEID and, of course, HARPS (High Accuracy Radial Velocity Planet Searcher), which has long been in the forefront of the exoplanet hunt at ESO’s 3.6m telescope at La Silla Observatory in Chile.

ESPRESSO continues to push the boundaries of radial velocity planet detection. There is no hyperbole at all in the conclusion to the paper on this work, which notes that the refinement of mass for the planets in this system, particularly the innermost world:

…represents a new milestone which illustrates the capability of ESPRESSO to yield the mass of planets with RV signatures of the order of 10 cm s⁻¹ in multi-planetary systems even with the presence of stellar activity.
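To get a feel for how small these signals are, here is a rough sketch using the standard radial velocity semi-amplitude formula for a single planet. The inputs come from the figures quoted above (half a Venus mass, a 2.25-day orbit, a star of about a third of a solar mass); the edge-on, circular orbit and the function name are my own simplifying assumptions, and this is not the multi-planet fit performed in the paper.

```python
import math

G = 6.674e-11          # gravitational constant, m^3 kg^-1 s^-2
M_SUN = 1.989e30       # kg
M_EARTH = 5.972e24     # kg
SECONDS_PER_DAY = 86400.0


def rv_semi_amplitude_m_s(
    planet_mass_earth: float,
    period_days: float,
    star_mass_sun: float,
    sin_i: float = 1.0,
    eccentricity: float = 0.0,
) -> float:
    """Stellar reflex velocity semi-amplitude K in m/s for one planet."""
    m_p = planet_mass_earth * M_EARTH
    m_s = star_mass_sun * M_SUN
    period_s = period_days * SECONDS_PER_DAY
    return (
        (2.0 * math.pi * G / period_s) ** (1.0 / 3.0)
        * m_p * sin_i
        / (m_s + m_p) ** (2.0 / 3.0)
        / math.sqrt(1.0 - eccentricity**2)
    )


# Half the mass of Venus (Venus is about 0.815 Earth masses).
k_inner = rv_semi_amplitude_m_s(0.5 * 0.815, period_days=2.25, star_mass_sun=1.0 / 3.0)
# For comparison: Earth tugging on the Sun.
k_earth = rv_semi_amplitude_m_s(1.0, period_days=365.25, star_mass_sun=1.0)

print(f"inner planet: K = {100 * k_inner:.0f} cm/s")
print(f"Earth on Sun: K = {100 * k_earth:.0f} cm/s")
```

The short period and the low stellar mass both work in the observers' favor here, which is part of why M-dwarfs are such attractive targets for finding small planets.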

The ESPRESSO data also flag a fourth planet around this star, along with hints of a possible fifth, the latter of which would be in the star’s liquid water habitable zone. The detected planet e has an orbital period of 12.80 days with a minimum mass of 3 Earth masses, while the candidate fifth planet has a period of 23.2 days and a minimum mass of 2.46 Earth masses. It would be in the star’s habitable zone and thus of high interest if confirmed, although there remains the possibility that the signal in the data is the result of stellar activity.
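Orbital periods convert to distances through Kepler's third law, and a short sketch shows how compact this system is. I take the stellar mass as one third of the Sun's, following the description above, and ignore the planets' own masses. Whether a given distance falls in the habitable zone depends on the star's luminosity, which this sketch does not model.

```python
def semi_major_axis_au(period_days: float, stellar_mass_sun: float) -> float:
    """Semi-major axis in AU from Kepler's third law, a^3 = M * P^2.

    Uses units of AU, years and solar masses, and neglects the planet's mass.
    """
    period_years = period_days / 365.25
    return (stellar_mass_sun * period_years**2) ** (1.0 / 3.0)


STAR_MASS_SUN = 1.0 / 3.0  # assumed from "about a third of the mass of the Sun"

for label, period in (("planet e", 12.80), ("candidate fifth planet", 23.2)):
    a = semi_major_axis_au(period, STAR_MASS_SUN)
    print(f"{label}: P = {period} d, a = {a:.3f} AU")
```

Both orbits sit far inside where Mercury would be in our own system, which is what we expect for temperate planets around a dim M-dwarf.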

There are no signs of transits from either of these worlds. As this system is likely to become a benchmark for planetary analysis in nearby systems, we’ll keep an eye on the confirmation process for the planet candidate here.

Image: Comparison of the L 98-59 exoplanet system with the inner Solar System.

The three inner worlds at L 98-59 are candidates for atmospheric study through transmission spectrography, where astronomers examine light from the star as filtered through a planetary atmosphere during a transit. The astronomers note that in addition to potential analysis via the James Webb Space Telescope, the Extremely Large Telescope under construction in Chile’s Atacama Desert — scheduled to begin observations in 2027 — may be able to study the atmospheres of these planets from the ground.

In any event, further work with ESPRESSO, the Hubble Space Telescope, and future observatories like NIRPS (Near Infra Red Planet Searcher in Chile) and the Ariel space telescope (Atmospheric Remote-sensing Infrared Exoplanet Large-survey) should be available for atmospheric studies in this interesting system. Adds Demangeon:

“This system announces what is to come. We, as a society, have been chasing terrestrial planets since the birth of astronomy and now we are finally getting closer and closer to the detection of a terrestrial planet in the habitable zone of its star, of which we could study the atmosphere.”

An additional note relates to the tightness of planetary system configurations in multiple planet systems. This is from the paper’s conclusion:

According to exoplanet archive (Akeson et al. 2013), we currently know 739 multi-planetary systems. A large fraction of them (~ 60%) were discovered by the Kepler survey (Borucki et al. 2010; Lissauer et al. 2011). From a detailed characterization and analysis of the properties of the Kepler multiplanetary systems, Weiss et al. (2018, hereafter W18) extracted the “peas in a pod” configuration. They observed that consecutive planets in the same system tend to have similar sizes. They also appear to be preferentially regularly spaced. The authors also noted that the smaller the planets, the tighter their orbital configuration is… [W]e conclude that the L 98-59 system is closely following the “peas in a pod” configuration…

A useful fact, and one that, as the authors add, “further strengthens the universality of this configuration and the constraints that it brings on planet formation theories.”

The paper is Demangeon et al., “Warm terrestrial planet with half the mass of Venus transiting a nearby star,” accepted at Astronomy & Astrophysics (abstract).

A Stellar Analogue to the Young Sun

Vladimir Airapetian, senior astrophysicist in the Heliophysics Division at NASA’s Goddard Space Flight Center, has a somewhat unusual ambition. Most attention related to finding a ‘second Earth’ revolves around locating a world not only similar to ours in its characteristics but also similarly situated in terms of its host star’s evolution. In other words, a rocky world scorched by its star’s transition to red giant status isn’t a true analogue of our own, but a glimpse of what it will be at another stage.

What Airapetian has in mind, though, is going in the other direction. His projected Earth analogue is one that mimics what our planet was in its early days, not all that long after the birth of its stellar system. It’s an ambition that points to learning where we came from, and thus what we might expect when we see a system like ours evolving around other stars. It has led to a search for a star like the Sun in its infancy. Says Airapetian:

“It’s my dream to find a rocky exoplanet in the stage that our planet was in more than 4 billion years ago, being shaped by its young, active star and nearly ready to host life. Understanding what our Sun was like just as life was beginning to develop on Earth will help us to refine our search for stars with exoplanets that may eventually host life.”

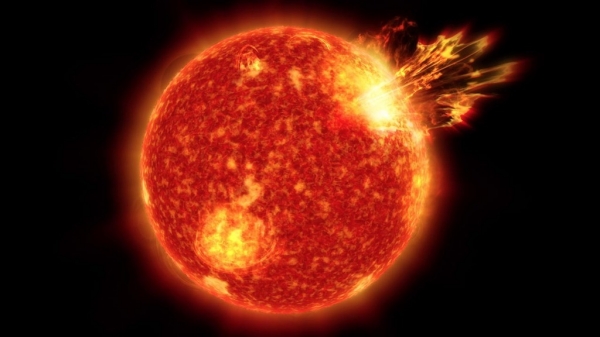

Image: Illustration of what the Sun may have been like 4 billion years ago, around the time life developed on Earth. Credit: NASA’s Goddard Space Flight Center/Conceptual Image Lab.

Space-based instruments have provided data, as the GSFC team studied existing solar models for insight into the characteristics of the young star Kappa 1 Ceti, about 30 light years away. Data input from Hubble, TESS (Transiting Exoplanet Survey Satellite), NICER (Neutron star Interior Composition Explorer, installed aboard the ISS) and the European Space Agency’s XMM-Newton observatory have refined the model.

Kappa 1 Ceti is a G-class star in the constellation Cetus with a rapid rotation of about nine days. Our Sun rotates in 27 days, but in its youth is thought to have rotated three times faster. The mass of the two stars is roughly the same, and Kappa 1 Ceti has 95 percent of the Sun’s radius, with about 85 percent of its luminosity. An early Sun would have had a stronger magnetic field and a powerful solar wind, which would have affected the magnetosphere and thus atmospheric chemistry on the young Earth.

Consider: Earth in its infancy, though receiving less solar heat than today, would have been subject to more high energy particles and radiation from the young Sun. High solar activity would cause particles to slam into Earth’s nitrogen atmosphere, wreaking molecular change: Breaking nitrogen molecules into atoms and turning carbon dioxide into its carbon and oxygen constituents. Nitrogen paired with free oxygen gives nitrous oxide, which produces a greenhouse effect boosting the planet’s temperatures and perhaps assisting the formation of life.

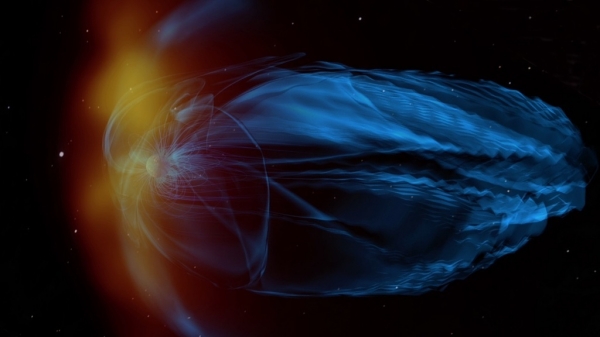

Image: An artist concept of a coronal mass ejection hitting the young Earth’s weak magnetosphere. Credit: NASA/GSFC/CIL.

Airapetian’s team used the Alfvén Wave Solar Model, developed within a larger model of space weather originally created at the University of Michigan, described in the paper as “a first-principles global model that describes the stellar atmosphere from the top of the chromosphere and extends it into the heliosphere beyond Earth’s orbit.”

Data inputs include known information about a star’s magnetic field and data on its ultraviolet emissions, both of which can be used to predict otherwise unobservable stellar wind activity. The team tested its model against data on the Sun to validate its predictions, finding that it successfully tracks the Sun’s solar wind and corona.

The model allows the researchers to predict the effects of a star’s stellar wind and corona on a planet’s magnetic shield, with consequences for potential habitability. The key finding: In addition to the kind of coronal mass ejection (CME) events that blow plasma outward from the star and can produce atmospheric loss on young planets, we also find what are known as Corotating Interaction Regions (CIRs) that can directly impact such worlds.

The young star produces a dynamic pressure 1380 times what is produced by these same CIRs in our own mature Sun. The effects would be significant:

This is a very important result as CIRs cause magnetic storms on Earth… The initiation of magnetic storms is associated with enhanced dynamic pressure that compress[es] the Earth’s magnetosphere and cause[s] the induction of ionospheric currents and results in Joule heating of the ionospheric and thermospheric layers of the planet.
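How much compression might that kind of pressure imply? A back-of-the-envelope sketch, using the textbook pressure balance between the wind's dynamic pressure and a dipole field, has the magnetopause standoff distance scaling as the dynamic pressure to the power of minus one sixth. This scaling is my own illustration with a fixed planetary dipole assumed; it is not a calculation from the Airapetian et al. paper.

```python
def standoff_compression(pressure_ratio: float) -> float:
    """Factor by which the magnetopause standoff distance shrinks.

    Assumes a fixed planetary dipole and simple pressure balance, so that
    the standoff distance scales as (dynamic pressure) ** (-1/6).
    """
    if pressure_ratio <= 0:
        raise ValueError("pressure ratio must be positive")
    return pressure_ratio ** (-1.0 / 6.0)


# The factor of 1380 quoted above, applied to the simple scaling.
factor = standoff_compression(1380.0)
print(f"standoff distance shrinks to {factor:.2f} of its reference value")
```

The one-sixth power makes magnetospheres surprisingly stiff: it takes a pressure increase of more than a thousandfold to push the boundary in to roughly a third of its reference distance.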

The changes in the magnetosphere produced by these CIRs are comparable to the strongest coronal mass ejections, with results including strong fluxes of electrons and protons into the upper atmosphere:

These processes can be crucial in evaluating the magnetospheric states of exoplanets around active stars because induced current dissipation will enhance the atmospheric escape from Earth-like exoplanets around active stars and can be critical for habitability conditions for rocky exoplanets in close-in habitable zones around red dwarfs.

Airapetian and team have developed a way of modeling the stellar environments in a range of young G- and M-class stars. We can look toward future mapping of stars at various stages of their lives. The researchers specifically mention their interest in EK Dra, a 100 million year old star 111 light years out that is producing more flares and plasma than Kappa 1 Ceti.

While Airapetian says his team’s work involves “looking at our own Sun, its past and its possible future, through the lens of other stars,” it also points in the other direction as a way of examining infant exoplanet systems where life may have the potential for developing, and thus guiding our list of targets for future observing efforts.

The paper is Airapetian et al., “One Year in the Life of Young Suns: Data-constrained Corona-wind Model of κ¹ Ceti,” Astrophysical Journal Vol. 916, No. 2 (3 August 2021), 96 (abstract / preprint).

Celebrating the Event Horizon Telescope

The X-ray ‘echoes’ from the Seyfert galaxy I Zwicky 1 occupied us on Friday, but today I want to explore the larger content of black hole research following the news about the relatively nearby active galaxy called Centaurus A. Whereas the X-ray work took data from two X-ray telescopes, NuSTAR and XMM-Newton, the Centaurus A investigation gives us another startling image from the instrument that to my mind has the coolest name of them all when it comes to observing tools — the Event Horizon Telescope.

It was the virtual EHT, of course, that produced the first image of a black hole, the supermassive object at the center of M87. The same observing campaign in 2017 produced the data used in the new paper on Centaurus A. At some 10-13 million light years, Centaurus A is — at radio wavelengths — one of the largest and brightest objects in the sky. Its central black hole is thought to mass about 55 million suns. By contrast, the EHT researchers have estimated the black hole in M87’s center to be 6.5 billion times more massive than the Sun, while our own Sgr A* — the Milky Way’s supermassive black hole — is thought to mass on the order of a ‘mere’ 4.3 million solar masses.
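Those masses translate directly into sizes. A quick sketch of the Schwarzschild radius, r = 2GM/c², for the three black holes just mentioned gives a sense of scale. The masses are the estimates quoted above; the calculation ignores spin, and the function names are my own.

```python
G = 6.674e-11      # gravitational constant, m^3 kg^-1 s^-2
C = 2.998e8        # speed of light, m/s
M_SUN = 1.989e30   # kg
AU = 1.496e11      # astronomical unit, m


def schwarzschild_radius_m(mass_solar: float) -> float:
    """Schwarzschild radius in meters for a non-rotating black hole."""
    return 2.0 * G * mass_solar * M_SUN / C**2


def schwarzschild_radius_au(mass_solar: float) -> float:
    """Schwarzschild radius expressed in astronomical units."""
    return schwarzschild_radius_m(mass_solar) / AU


black_holes = {
    "Sgr A*": 4.3e6,
    "Centaurus A": 55e6,
    "M87": 6.5e9,
}

for name, mass in black_holes.items():
    print(f"{name}: {schwarzschild_radius_au(mass):.2f} AU")
```

Because the radius grows linearly with mass, the three objects span more than three orders of magnitude in size, from well inside Mercury's orbit to larger than our entire planetary system.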

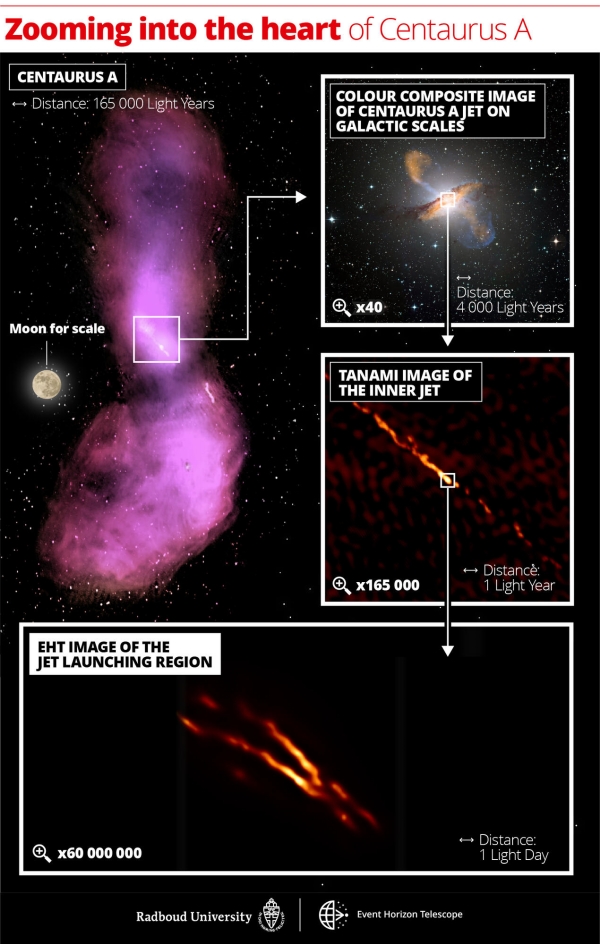

The Centaurus A study, led by Michael Janssen (Max Planck Institute for Radio Astronomy and Radboud University, Nijmegen) presents intriguing data on the galaxy’s enormous jet, as shown in the image below. Notice the range of observatories involved in this work, as listed in the caption. The Event Horizon Telescope data is here being supplemented by ground-based and space-based equipment, reminding us of the essential collaborative nature of the experiment when trying to mount a global interferometric effort and tease the greatest amount of information out of the result.

Image: Distance scales uncovered in the Centaurus A jet. The top left image shows how the jet disperses into gas clouds that emit radio waves, captured by the ATCA and Parkes observatories. The top right panel displays a color composite image, with a 40x zoom compared to the first panel to match the size of the galaxy itself. Submillimeter emission from the jet and dust in the galaxy measured by the LABOCA/APEX instrument is shown in orange. X-ray emission from the jet measured by the Chandra spacecraft is shown in blue. Visible white light from the stars in the galaxy has been captured by the MPG/ESO 2.2-metre telescope. The next panel below shows a 165,000x zoom image of the inner radio jet obtained with the TANAMI telescopes. The bottom panel depicts the new highest resolution image of the jet launching region obtained with the EHT at millimeter wavelengths with a 60,000,000x zoom in telescope resolution. Indicated scale bars are shown in light years and light days. Credit: Radboud Univ. Nijmegen; CSIRO/ATNF/I. Feain et al., R. Morganti et al., N. Junkes et al.; ESO/WFI; MPIfR/ESO/APEX/A. Weiß et al.; NASA/CXC/CfA/R. Kraft et al.; TANAMI/C. Müller et al.; EHT/M. Janßen et al.

Supermassive black holes cause the release of vast amounts of energy as some of the gas and dust near the accretion disk is blown into space in the form of jets. There is plenty to work with in the EHT data, and new questions raised, for only the outer edges of the jet appear to emit radiation, another reminder of how much we have to learn about the processes in play in this phenomenon. But let’s home in for now on the quality of the imagery.

For compared to previous work, we see the jet at Centaurus A at 16 times sharper resolution than ever before. The magnification factor here is one billion. EHT astronomers believe they can locate the black hole itself at the launching point of the jet, a location that will be explored in future observations at shorter wavelength and still higher resolution that will incorporate the use of space-based observatories.

The technique at Centaurus A, as it was at M87, was Very Long Baseline Interferometry (VLBI). If black hole jets occur at the stupendous scale we see here, it’s fitting that a planet-sized aperture would be used to see one of them up close. The Event Horizon Telescope originally joined eight telescopes around the globe to create this virtual capability, with other radio dishes later coming into play as observations continued. More than 300 researchers are involved in the effort worldwide.

We have to go back to 1971 to find the roots of the Event Horizon Telescope, as Heino Falcke points out in his book Light in the Darkness (HarperOne, 2021). This was when Donald Lynden-Bell and Martin Rees predicted that VLBI techniques on the scale of a continent could be used to discover a compact radio source like a black hole at the center of our own galaxy. Seyfert galaxies, bright with radio plasma, were suspected of hosting central black holes, part of a grouping of galaxies known to possess active galactic nuclei (AGN). It made sense that the Milky Way might have its own black hole. Three years later a compact radio source was discovered there.

Thus we learned about Sagittarius A*, a fascinating find but one whose image from Earth was compromised by the dust and hot gas along the galactic disk. The move toward shorter wavelength observation to study an object that was dark at almost every wavelength except that of radio frequency light makes for fascinating reading in Falcke’s book. Puzzles abounded. If Sagittarius A* were a black hole, it seemed a weak one. Quasar 3C 273, if placed in the Milky Way, would be 40 million times brighter.

Falcke wrote his doctoral thesis around the question of what he describes in his book as ‘starved black holes.’ If Sagittarius A* is no more than a weak glimmer compared to some, it implied that the black hole was simply not drawing in the matter needed to produce a stronger signature. If the average quasar consumed one sun per year, his calculations showed that our black hole must suck in ten million times less mass. That’s the equivalent of about three moons per year. From the book:

…contrary to popular belief, black holes generally aren’t wildly voracious monsters: they’re very well behaved and eat only what they’re served. In our imagination, black holes might be giant, but compared to an entire galaxy they’re just little chicks. And like chicks in the nest, black holes must wait for food, must wait for their mother galaxy to feed them with dust and stars. If this doesn’t happen, they waste away, go dark and quiet, and stop growing — just like Sagittarius A*. But they don’t die.
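
The 'three moons per year' figure cited just before the excerpt is easy to check. Here is a minimal sketch in Python, assuming standard reference values for the solar and lunar masses (these constants, and the function name, are mine and not from Falcke's book):

```python
# Rough check of the "three moons per year" accretion figure.
# Both masses are standard reference values, not numbers from the book.
SOLAR_MASS_KG = 1.989e30
MOON_MASS_KG = 7.342e22


def starved_accretion_in_moons(quasar_rate_suns_per_year=1.0,
                               reduction_factor=1e7):
    """Accretion rate in lunar masses per year for a black hole that
    takes in `reduction_factor` times less than an average quasar."""
    rate_kg_per_year = (quasar_rate_suns_per_year * SOLAR_MASS_KG
                        / reduction_factor)
    return rate_kg_per_year / MOON_MASS_KG


moons_per_year = starved_accretion_in_moons()
print(f"{moons_per_year:.1f} lunar masses per year")
```

One sun per year divided by ten million comes out a little under three lunar masses, consistent with the rounded figure in the text.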

How to build a globe-spanning interferometric network to study Sagittarius A*, or the huge M87* source at the center of that galaxy? With eight observatories around the planet, the radio signals have to be perfectly synchronized to allow the observation, the position of the observatories known to the millimeter and the arrival time of signals received measured with atomic clocks of picosecond precision. As Falcke points out, such a radio telescope is assembled on a computer. Algorithms build the image.

And money builds the interferometer or, at least, the effort needed to coordinate a planet-sized project like the EHT. As countless meetings and strategy sessions continued, both online and in person, and proposals were generated (we are talking a process years in the making, of course), M87 began to emerge as a viable target for the first attempt at an image of a black hole. Its black hole is two thousand times farther away than Sagittarius A*, 55 million light years out, but it’s also huge, and estimates of its size had continued to grow as work on the EHT concept continued.

The advantages were many: M87 was easier to see from the northern hemisphere, where most of the EHT’s telescopes were located. Nor does it lie along the line of sight through the Milky Way’s disk, making it a clearer target. It’s no surprise that, among the EHT’s sources, including Sgr A*, M87 should have become the one whose results were most anticipated.

Falcke brings to the evolution of the EHT the same intensity that Alan Stern brought to the scientific, political and financial effort to build New Horizons in his book Chasing New Horizons (Picador, 2018). His eye for detail makes the observing sites lively places indeed, while he clarifies the technical issues without jargon. This is a project collecting data at 32 gigabits per second, all recorded on hard drives for future processing. As Falcke puts it:

Only after a lengthy process does a tiny image emerge from the giant quantity of data — talk about data reduction! Really we’re only recording static: static from the sky, receiver static, and a small bit of static from the edge of the black hole. Thankfully a large part of the sky and receiver static can be filtered out when the data are processed afterward. The total energy of the static that such a telescope gathers from our cosmic radio source in one night is incomprehensibly small. It’s the equivalent of the energy produced by a strand of hair one millimeter long that falls from a height of half a millimeter in vacuum onto a glass plate. The impact will hardly scratch the glass, but we can measure it.

We know the outcome, but that doesn’t lessen the drama. In April of 2017, the eight EHT observatories (two in Chile, two in Hawaii, one in Spain, one in Mexico, another in Arizona and a last one at the South Pole) all pointed at the target in M87. Falcke heads for Málaga, test runs begin via the global network, the observations kick in. There is no way for all eight of the telescopes to measure simultaneously because of differences in location. The work has to be staggered, the data then synchronized. Calibration tests on quasars are conducted, then the first observations of M87 begin. At Málaga:

The telescope turns slowly toward the Virgo constellation, the second largest in the sky. We follow the motion on the display, transfixed. The telescope positions itself to find the right azimuth, or angle along the horizon; its elevation, or vertical angle, is perfect now as well. Same as with any other large movement, we have to do a little adjusting afterward. “Pico Veleta on source M87 and recording, pointing on nearby [quasar] 3C 273,” Krickbaum reports. The plots in the control room show plausible signal levels; the hard drives spin and fill up. A reassuring sign. On our instruments we see how the telescope follows the center of M87, moving counter to the Earth’s rotation. For hours now we swivel back and forth between M87 and a calibration quasar for scans of a few minutes each. Now everything seems to take care of itself.

As morning comes in Spain, the telescopes in Hawaii go into action observing M87, for a time simultaneously with Málaga before Spain shuts down. This is a distance of 10,907 kilometers, Falcke points out, the longest distance in the network. Arizona and Hawaii keep recording data for hours more. And this is only round one. The weather intervenes, then moderates. The words ‘go for VLBI’ take on an urgency like a rocket liftoff.

Consider that to make the Event Horizon Telescope work, you have to coordinate the position of each telescope relative to the sky as well as the time of arrival of the emissions being observed, which means modeling the motion of the Earth, taking into account pole-wandering due to the motion of its oceans and the effects of its atmosphere. Then a supercomputer goes to work looking for common oscillations. This means figuring out correlations between the received data to make sure they depict the same thing.
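
To get a feel for what 'looking for common oscillations' involves, here is a toy sketch in Python with NumPy. It is not the EHT correlator, which also has to handle the Earth model, delay rates and far larger data volumes. It only shows the core idea: two stations record the same faint sky signal buried in their own receiver static, and cross-correlating the recordings reveals the relative delay. The function name, signal length, noise level and delay are all invented for illustration.

```python
import numpy as np


def estimate_delay(station_a, station_b):
    """Return the lag, in samples, by which station_b trails station_a."""
    a = station_a - station_a.mean()
    b = station_b - station_b.mean()
    # corr[k] = sum_n b[n + k] * a[n]; it peaks at the true lag
    corr = np.correlate(b, a, mode="full")
    lags = np.arange(-(len(a) - 1), len(b))
    return int(lags[np.argmax(corr)])


# Hypothetical setup: a shared "sky" signal plus independent receiver noise
rng = np.random.default_rng(0)
N_SAMPLES = 8192
TRUE_DELAY = 37  # samples; station B receives the wavefront later

sky = rng.normal(size=N_SAMPLES + TRUE_DELAY)
a = sky[TRUE_DELAY:] + 2.0 * rng.normal(size=N_SAMPLES)  # noise > signal
b = sky[:N_SAMPLES] + 2.0 * rng.normal(size=N_SAMPLES)

recovered = estimate_delay(a, b)
print("recovered delay:", recovered, "samples")
```

Even with receiver noise twice as strong as the signal, the correlation peak stands out because the static at the two sites is independent while the sky signal is shared. The search gets expensive quickly as the range of candidate delays grows, which is the point behind the remark on timing errors that follows.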

As Falcke points out, being a millisecond off in your timing means searching through millions of alternatives, which is why the analysis of the EHT’s data takes far longer than making the observations. Using quasars for calibration and testing is critical. It turned out to take nine months just to correlate the observations that produced the famous image of M87’s black hole. Or I should say, its ‘shadow,’ for no light can escape the black hole itself. The imaging teams had to wrestle with the fact that there are numerous ways of turning these data into a final image. We wind up with an image with the resolution that would be produced with an aperture the size of the Earth despite technical glitches, variable weather, and the need to schedule observing times at all the observatories, no mean feat in itself.
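
What an Earth-sized aperture buys can be estimated from the diffraction limit, roughly the observing wavelength divided by the baseline. Here is a minimal Python sketch, assuming the EHT's 1.3 mm observing wavelength (a figure not given in this post) and using both the 10,907 km Hawaii-Spain baseline quoted above and the Earth's mean diameter. The function name is mine.

```python
import math

# Conversion factor from radians to microarcseconds
RAD_TO_MICROARCSEC = math.degrees(1.0) * 3600.0 * 1e6


def diffraction_limit_uas(wavelength_m, baseline_m):
    """Approximate angular resolution (lambda / D) in microarcseconds."""
    return wavelength_m / baseline_m * RAD_TO_MICROARCSEC


WAVELENGTH_M = 1.3e-3          # assumption: 1.3 mm, not stated in the post
LONGEST_BASELINE_M = 10_907e3  # Hawaii-Spain distance quoted by Falcke
EARTH_DIAMETER_M = 12_742e3    # mean diameter, standard reference value

res_baseline = diffraction_limit_uas(WAVELENGTH_M, LONGEST_BASELINE_M)
res_earth = diffraction_limit_uas(WAVELENGTH_M, EARTH_DIAMETER_M)
print(f"Hawaii-Spain baseline: {res_baseline:.1f} microarcseconds")
print(f"Full Earth diameter:   {res_earth:.1f} microarcseconds")
```

Both values land in the low tens of microarcseconds. The simple lambda / D ratio ignores array geometry and imaging technique, so treat it as an order-of-magnitude guide rather than the EHT's quoted performance.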

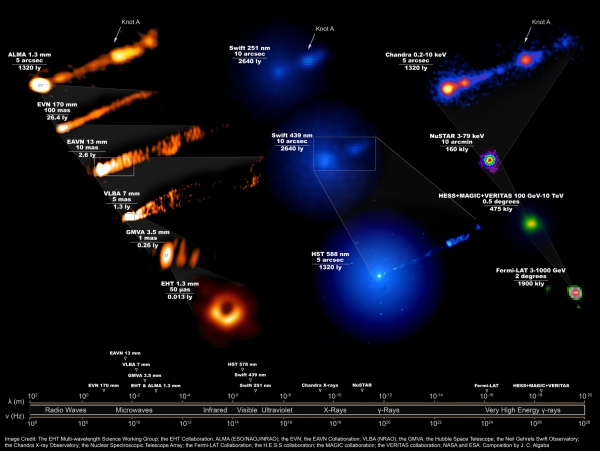

Image: Composite image showing how the M87 system looked, across the entire electromagnetic spectrum, during the Event Horizon Telescope’s April 2017 campaign to take the iconic first image of a black hole. Requiring 19 different facilities on the Earth and in space, this image reveals the enormous scales spanned by the black hole and its forward-pointing jet, launched just outside the event horizon and spanning the entire galaxy. Credit: the EHT Multi-Wavelength Science Working Group; the EHT Collaboration; ALMA (ESO/NAOJ/NRAO); the EVN; the EAVN Collaboration; VLBA (NRAO); the GMVA; the Hubble Space Telescope, the Neil Gehrels Swift Observatory; the Chandra X-ray Observatory; the Nuclear Spectroscopic Telescope Array; the Fermi-LAT Collaboration; the H.E.S.S. collaboration; the MAGIC collaboration; the VERITAS collaboration; NASA and ESA. Composition by J.C. Algaba.

It’s impressive that so many of the simulations the EHT team ran match the image of M87 that emerged. As Falcke notes, the size of the object scales directly with its mass, and the ring of light is brightest at the bottom, where gas rotating around the black hole near light speed moves towards us, its emission becoming focused and intensified. 6.5 billion solar masses gives us a shadow with a diameter of about 100 billion kilometers, cast by a black hole whose event horizon has a circumference four times as large as the orbit of Neptune.
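
These sizes follow from the mass alone. As a rough sketch, assuming a non-rotating (Schwarzschild) black hole, the horizon radius is 2GM/c² and the shadow seen by a distant observer is about √27 ≈ 5.2 times that radius across. The physical constants and Neptune's orbital radius below are standard reference values rather than figures from the book, and the function name is mine.

```python
import math

G = 6.674e-11                    # gravitational constant, m^3 kg^-1 s^-2
C = 2.998e8                      # speed of light, m/s
SOLAR_MASS_KG = 1.989e30
NEPTUNE_ORBIT_RADIUS_KM = 4.5e9  # about 30 AU, treated as a circle


def schwarzschild_radius_km(mass_solar):
    """Horizon radius of a non-rotating black hole, in kilometers."""
    return 2.0 * G * mass_solar * SOLAR_MASS_KG / C**2 / 1e3


r_s = schwarzschild_radius_km(6.5e9)
horizon_circumference = 2.0 * math.pi * r_s
neptune_circumference = 2.0 * math.pi * NEPTUNE_ORBIT_RADIUS_KM
shadow_diameter = math.sqrt(27.0) * r_s  # Schwarzschild shadow diameter

print(f"Horizon radius:        {r_s:.2e} km")
print(f"Horizon circumference: {horizon_circumference:.2e} km")
print(f"Ratio to Neptune orbit: "
      f"{horizon_circumference / neptune_circumference:.1f}")
print(f"Shadow diameter:       {shadow_diameter:.2e} km")
```

Under these assumptions the horizon circumference is a bit more than four times the length of Neptune's orbit, and the roughly 100-billion-kilometer figure corresponds to the width of the shadow rather than of the horizon itself. A spinning black hole would shift the numbers somewhat.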

The fact that we can make such measurements and produce such images doing astronomy at planet-wide scale is a testament to the power of scientific collaboration and raw human persistence. The EHT is indeed cause for celebration, as the new paper on Centaurus A affirms.

The paper on Centaurus A is Janssen et al., “Event Horizon Telescope observations of the jet launching and collimation zone in Centaurus A,” Nature Astronomy 19 July 2021 (abstract / full text).