Centauri Dreams

Imagining and Planning Interstellar Exploration

Happy New Year from Centauri Dreams

And for those of you who’ve been asking about the videos of presentations at the Tennessee Valley Interstellar Workshop, they’re now online. 2015, with New Horizons at Pluto/Charon and Dawn at Ceres, is shaping up to be an extraordinary year. Here’s to the continuing effort to advance the human and robotic effort in deep space.

Dawn: Beginning Approach to Ceres

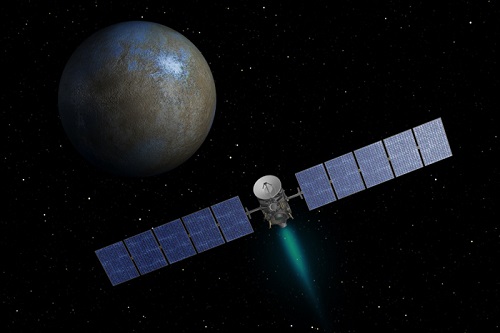

Speaking of spacecraft that do remarkable things, as we did yesterday in looking at the ingenious methods being used to lengthen the Messenger mission, I might also mention what is happening with Dawn. When the probe enters orbit around Ceres — now considered a ‘dwarf planet’ rather than an asteroid — in 2015, it will mark the first time the same spacecraft has ever orbited two targets in the Solar System. Dawn’s Vesta visit lasted for 14 months in 2011-2012.

We have the supple ion propulsion system of Dawn to thank for the dual nature of the mission. In the Dawn version of the technology, xenon gas is bombarded by an electron beam. The resulting xenon ions are accelerated through charged metal grids out of the thruster. JPL’s Marc Rayman, chief engineer and mission director for the mission, explained thruster design in one of the earliest of his Dawn Journal entries:

Because it is electrically charged, the xenon ion can feel the effect of an electrical field, which is simply a voltage. So the thruster applies more than 1000 volts to accelerate the xenon ions, expelling them at speeds as high as 40 kilometers/second… Each ion, tiny though it is, pushes back on the thruster as it leaves, and this reaction force is what propels the spacecraft. The ions are shot from the thruster at roughly 10 times the speed of the propellants expelled by rockets on typical spacecraft, and this is the source of ion propulsion’s extraordinary efficacy.
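Rayman's numbers can be checked with a back-of-the-envelope energy balance. The sketch below assumes a singly charged xenon ion that starts at rest and converts the full accelerating voltage into kinetic energy (qV = ½mv²); the function name and the physical constants are supplied here for illustration and are not from the Dawn Journal.

```python
import math

# Physical constants (SI units); not taken from the article.
ELEMENTARY_CHARGE = 1.602176634e-19   # coulombs
ATOMIC_MASS_UNIT = 1.66053906660e-27  # kilograms
XENON_MASS_U = 131.293                # standard atomic weight of xenon


def ion_exhaust_speed(voltage, charge_state=1, mass_u=XENON_MASS_U):
    """Speed in m/s of an ion accelerated from rest through `voltage` volts.

    Assumes all of the electrical potential energy q*V becomes kinetic
    energy, which ignores losses inside a real thruster.
    """
    energy_joules = charge_state * ELEMENTARY_CHARGE * voltage
    mass_kg = mass_u * ATOMIC_MASS_UNIT
    return math.sqrt(2.0 * energy_joules / mass_kg)


speed_1000v = ion_exhaust_speed(1000.0)
print(f"Exhaust speed at 1000 V: {speed_1000v / 1000:.1f} km/s")
```

Under these assumptions, 1000 volts gives roughly 38 kilometers per second, so "more than 1000 volts" is in line with the quoted figure of up to 40 kilometers per second.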

Slow but steady wins the race. For the same amount of propellant, a craft equipped with an ion propulsion system can achieve ten times the speed of a probe boosted by today’s conventional rocketry, says Rayman. Put the other way around, an ion-powered spacecraft can carry far less propellant to accomplish the same job, which is how missions like Dawn can be executed. The trade-off is thrust: one of Dawn’s thrusters pushes on the spacecraft with about the force of a piece of paper pushing on a human hand on Earth. Dawn isn’t exactly the spacecraft equivalent of a Ferrari; at full power, the vehicle would go from 0 to 60 miles per hour in a stately four days.
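Both halves of that comparison follow from the ideal (Tsiolkovsky) rocket equation, Δv = vₑ ln(m₀/m₁). The sketch below is an illustration, not Dawn's actual mission budget: the 40 km/s exhaust speed and the factor of ten come from the text above, while the mass ratio and the 10 km/s target velocity change are hypothetical values chosen only to show the scaling. The last lines work out the average acceleration implied by "0 to 60 miles per hour in four days."

```python
import math

ION_EXHAUST_M_S = 40_000.0                        # from the quoted 40 km/s
CHEMICAL_EXHAUST_M_S = ION_EXHAUST_M_S / 10.0     # "roughly 10 times" slower


def delta_v(exhaust_speed, mass_ratio):
    """Ideal rocket equation: velocity change for a given m0/m1."""
    return exhaust_speed * math.log(mass_ratio)


def propellant_fraction(target_delta_v, exhaust_speed):
    """Fraction of initial mass that must be propellant to reach target_delta_v."""
    return 1.0 - math.exp(-target_delta_v / exhaust_speed)


# Same propellant load (same mass ratio): delta-v scales with exhaust speed.
MASS_RATIO = 1.5  # hypothetical value, for illustration only
speed_gain = delta_v(ION_EXHAUST_M_S, MASS_RATIO) / delta_v(CHEMICAL_EXHAUST_M_S, MASS_RATIO)

# Same job (same delta-v): the ion craft needs a far smaller propellant share.
TARGET_DELTA_V_M_S = 10_000.0  # hypothetical value, for illustration only
ion_fraction = propellant_fraction(TARGET_DELTA_V_M_S, ION_EXHAUST_M_S)
chemical_fraction = propellant_fraction(TARGET_DELTA_V_M_S, CHEMICAL_EXHAUST_M_S)

# "0 to 60 mph in four days" expressed as an average acceleration.
MPH_TO_M_S = 0.44704
SECONDS_PER_DAY = 86_400
mean_acceleration = 60 * MPH_TO_M_S / (4 * SECONDS_PER_DAY)

print(f"Delta-v ratio for equal propellant: {speed_gain:.1f}x")
print(f"Propellant fraction, ion: {ion_fraction:.0%}; chemical: {chemical_fraction:.0%}")
print(f"Average acceleration: {mean_acceleration:.2e} m/s^2")
```

With these illustrative inputs the ion craft spends about a fifth of its launch mass on propellant where the chemical craft would need about nine tenths, and the four-day sprint to 60 mph corresponds to an acceleration of a bit under 0.0001 m/s².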

Fortunately, space is a zero-g environment without friction, so the minuscule thrust has a chance to build up. ‘Acceleration with patience’ is Rayman’s term. In addition to enhancing maneuverability, ion thrusters are also durable. Dawn’s three thrusters have completed five years of accumulated thrust time, more than any other spacecraft. If all goes well at Ceres, we can’t rule out an extended mission that might include other asteroid targets, just as we hope for a Kuiper Belt object encounter for New Horizons after its 2015 flyby of Pluto/Charon.

Image: An artist’s concept shows NASA’s Dawn spacecraft heading toward the dwarf planet Ceres. Dawn spent nearly 14 months orbiting Vesta, the second most massive object in the main asteroid belt between Mars and Jupiter, from 2011 to 2012. It is heading towards Ceres, the largest member of the asteroid belt. When Dawn arrives, it will be the first spacecraft to go into orbit around two destinations in our Solar System beyond Earth. Credit: NASA/JPL-Caltech.

Keep an eye on Rayman’s Dawn Journal as the Ceres encounter approaches. His latest entry goes through the historical background on the dwarf planet’s discovery, and includes the fact that the Dawn team has been working with the International Astronomical Union (IAU) to formalize a plan for names on Ceres that builds upon the name given to it by its discoverer. Astronomer Giuseppe Piazzi found Ceres in 1801 and named it after the Roman goddess of agriculture. The plan going forward is for surface detail like craters to be named after gods and goddesses of agriculture and vegetation, drawing on worldwide sources of mythology.

Deep space has been yielding unexpected results since the earliest days of our exploration, and with Dawn approaching Ceres it’s instructive to recall some of the discoveries the Voyagers made as they moved into Jupiter space, starting with the surprisingly frequent volcanic activity on Io. Ceres will doubtless yield data just as intriguing, says Christopher Russell (UCLA), principal investigator for the Dawn mission:

“Ceres is almost a complete mystery to us. Ceres, unlike Vesta, has no meteorites linked to it to help reveal its secrets. All we can predict with confidence is that we will be surprised.”

Not quite twice as large as Vesta, Ceres (diameter 950 kilometers) is the largest object in the asteroid belt, and unlike Vesta, it apparently has a cooler interior, one that may even include an ocean beneath a crust of surface ice. We’ll know more soon, for Dawn has emerged from solar conjunction and is communicating with Earth controllers, who have programmed the maneuvers for the next stage of operations, which includes the Ceres approach phase. At present, the spacecraft is 640,000 kilometers from the dwarf world, approaching it at 725 kilometers per hour.
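For a sense of scale, the distance and closing speed just quoted can be turned into a naive travel time. This is only a constant-speed estimate: Dawn's ion thrusting continuously reshapes its trajectory relative to Ceres, so the real approach will not follow this straight-line figure. The function name is a hypothetical helper.

```python
DISTANCE_KM = 640_000        # current distance to Ceres, from the text
SPEED_KM_PER_HOUR = 725      # current approach speed, from the text


def constant_speed_travel_days(distance_km, speed_km_per_hour):
    """Days needed to cover distance_km if the speed never changed."""
    hours = distance_km / speed_km_per_hour
    return hours / 24.0


days = constant_speed_travel_days(DISTANCE_KM, SPEED_KM_PER_HOUR)
print(f"Constant-speed travel time: about {days:.0f} days")
```

The result is a little over five weeks, which is best read as an order-of-magnitude guide rather than an arrival date.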

Long-Distance Spacecraft Engineering

I find few things more fascinating than remote fixes to distant spacecraft. We’ve used them surprisingly often, an outstanding case in point being the Galileo mission to Jupiter, launched in 1989. The failure of the craft’s high-gain antenna demanded that controllers maximize what they had left, using the low-gain antenna along with data compression and receiver upgrades on Earth to perform outstanding science. Galileo’s four-track tape recorder, critical for storing data for later playback, also caused problems that required study and intervention from the ground.

But as we saw yesterday, Galileo was hardly the first spacecraft to run into difficulties. The K2 mission, reviving Kepler by using sophisticated computer algorithms and photon pressure from the Sun, is a story in progress, with the discovery of super-Earth HIP 116454 b its first success. Or think all the way back to Mariner 10, launched in 1973 and afflicted with problems including flaking paint that caused its star-tracker to lose its lock on the guide star Canopus. The result: A long roll that burned hydrazine as thrusters tried to compensate for the motion. Controllers were able to use the pressure of solar photons on the spacecraft’s solar panels to create the torque necessary to counter the roll and re-acquire the necessary control.

The Messenger spacecraft also used pressure from solar photons as part of needed course adjustment on the way to Mercury, and now comes news of yet another inspired fix involving the same craft. Messenger was on course to impact Mercury’s surface by the end of March, 2015, having in the course of its four years in Mercury orbit (and six previous years en route) used up most of its propellant. But controllers will now use pressurization gas in the spacecraft’s propulsion system to raise Messenger’s orbit enough to allow another month of operation.

The helium in question was used to pressurize the propellant tanks aboard the spacecraft. Let me quote Stewart Bushman (JHU/APL), lead propulsion engineer for the mission, on just what is going on here:

“The team continues to find inventive ways to keep MESSENGER going, all while providing an unprecedented vantage point for studying Mercury. To my knowledge this is the first time that helium pressurant has been intentionally used as a cold-gas propellant through hydrazine thrusters. These engines are not optimized to use pressurized gas as a propellant source. They have flow restrictors and orifices for hydrazine that reduce the feed pressure, hampering performance compared with actual cold-gas engines, which are little more than valves with a nozzle.”

Bushman adds that stretching propellant use is not the norm:

“Propellant, though a consumable, is usually not the limiting life factor on a spacecraft, as generally something else goes wrong first. As such, we had to become creative with what we had available. Helium, with its low atomic weight, is preferred as a pressurant because it’s light, but rarely as a cold gas propellant, because its low mass doesn’t get you much bang for your buck.”

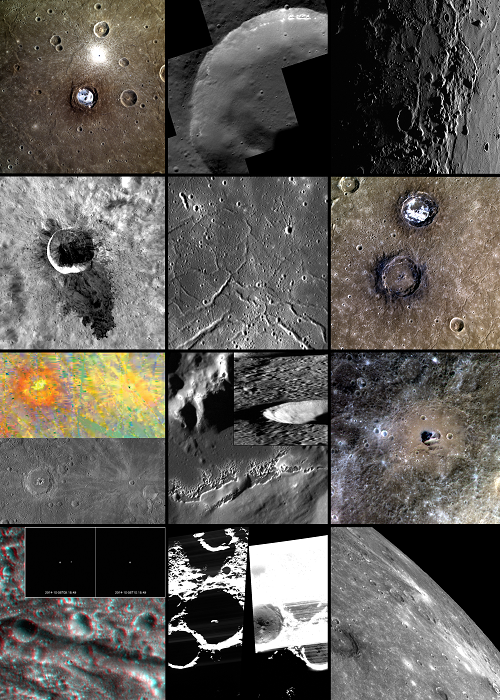

Image: A compilation of Messenger images from Mercury in 2014. Next April, Messenger’s operational mission will come to an end, as the spacecraft depletes its fuel and impacts the surface. However, the last few months of operations should be rich, including science data obtained closer to the planet’s surface than ever previously accomplished. Credit: JHU/APL.

So we gain an extra month to add to Messenger’s already impressive data on the closest planet to the Sun. The spacecraft’s most recent studies, begun this past summer, have involved a low altitude observation campaign looking for volcanic flow fronts, small scale tectonic effects, layering in crater walls and other features explained in this JHU/APL news release. Growing out of this effort will be the highest resolution images ever obtained of Mercury’s surface.

The additional month of operations will allow a closer look at Mercury’s magnetic field. “During the additional period of operations, up to four weeks, MESSENGER will measure variations in Mercury’s internal magnetic field at shorter horizontal scales than ever before, scales comparable to the anticipated periapsis altitude between 7 km and 15 km above the planetary surface,” says APL’s Haje Korth, the instrument scientist for the Magnetometer. Korth also says that at these lower altitudes, Messenger’s Neutron Spectrometer will be able to resolve water ice deposits inside individual impact craters at the high northern latitudes of the planet.

That’s a useful outcome and it grows out of sheer ingenuity in using existing resources. What’s fascinating in all these stories is that when we send a spacecraft out, we have frozen its technology level while our own continues to expand and accelerate. Think of the Voyagers, still operational after their 1977 launches, and imagine the kind of components we would use to build them today. The trick in resolving spacecraft problems and extending their missions is to keep the interface between our latest technology and their older tools as robust as possible. That involves, it’s clear, not just hardware and software, but the power of the human imagination.

Kepler: Thoughts on K2

As we start thinking ahead to the TESS mission (Transiting Exoplanet Survey Satellite), currently scheduled for launch in 2017, the exoplanet focus sharpens on stars closer to home. The Kepler mission was designed to look at a whole field of stars, 156,000 of them extending over portions of the constellations Cygnus, Lyra and Draco. Most of the Kepler stars are from 600 to 3000 light years away. In fact, fewer than one percent of these stars are closer than 600 light years, while stars beyond 3000 light years are too faint for effective transit signatures.

Kepler has proven enormously useful in helping us develop statistical models on how common planets are, with the ultimate goal, still quite a way off, of calculating the value of ηEarth (Eta_Earth), the fraction of stars orbited by planets like our own. Looking closer to home will be the mandate of TESS, which will be performing an all-sky survey rather than the ‘long stare’ Kepler has used so effectively. We should wind up with a growing catalog of nearby main-sequence stars hosting planets, a catalog that future missions will exploit.

But what of Kepler itself? It’s heartening to see a mission rescued when onboard problems have threatened its survival, and in the case of this doughty space telescope, the revival is almost as interesting as the latest results. The Kepler primary mission ended with the failure of the second of four reaction wheels that were used to stabilize the spacecraft. Kepler needed three reaction wheels for effective pointing accuracy, but ground controllers found a way to use the pressure of sunlight as a kind of ‘virtual’ reaction wheel to control the instrument.

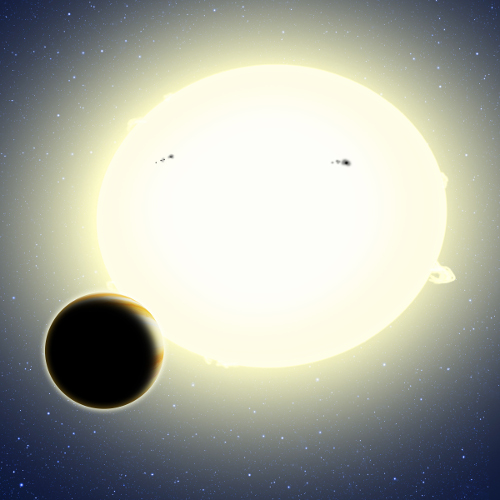

Thus we get K2, the extended Kepler mission. Software to correct for drift in the field of view allows the telescope to achieve about half the photometric precision of the original Kepler mission, according to this University of Hawaii news release. A new exoplanet find — HIP 116454 b — demonstrates that the method works and that Kepler is, at least to some extent, back in business. Unlike most of the stars in the original Kepler field of view, HIP 116454 is relatively nearby, at 180 light years from Earth in the constellation Pisces.

Image: This artist’s conception portrays the first planet discovered by the Kepler spacecraft during its K2 mission. A transit of the planet was teased out of K2’s noisier data using ingenious computer algorithms developed by a researcher at the Harvard-Smithsonian Center for Astrophysics (CfA). The newfound planet, HIP 116454 b, has a diameter of 32,000 kilometers. It orbits its star once every 9.1 days. Credit: CfA.

Working with K2 is exceedingly tricky business, with NASA likening the use of solar photons to stabilize the instrument to the act of balancing a pencil on a finger, a manageable but demanding task. Nor was HIP 116454 b an easy catch. For one thing, only a single transit was detected in the engineering data that was being used to prepare the spacecraft for the full K2 mission. That called for confirmation from ground-based observatories, which came from the Robo-AO instrument mounted on the Palomar 1.5-meter telescope and additional follow-up from the Keck II adaptive optics system on Mauna Kea.

We learn that HIP 116454 b is about two and a half times the size of Earth, with a diameter of about 32,000 kilometers and a mass twelve times that of our planet. The paper on this work speculates that we are either dealing with a water world or a mini-Neptune with a gaseous envelope. The planet’s orbital period around the K-class host is 9.1 days at a distance of 13.5 million kilometers. As a ‘super-Earth,’ it will be a high-value target for future observation:

HIP 116454 b could be important in the era of the James Webb Space Telescope (JWST) to probe the transition between ice giants and rocky planets. In the Solar system, there are no planets with radii between 1–3 R⊕ while population studies with Kepler data have shown these planets to be nearly ubiquitous… Atmospheric studies with transit transmission spectroscopy can help determine whether these planets are in fact solid or have a gaseous envelope, and give a better understanding on how these planets form and why they are so common in the Galaxy.

The planet will also be useful in relation to another similar world:

Also of interest is the fact that HIP 116454 b is very similar to HD 97658 b, in terms of its orbital characteristics (both are in ∼10 day low-eccentricity orbits), mass and radius (within 10% in radius, and within 25% in mass), and stellar hosts (both orbit K–dwarfs). Comparative studies of these two super–Earths will be valuable for understanding the diversity and possible origins of close–in Super–Earths around Sun–like stars.
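The size and mass quoted earlier for HIP 116454 b (a diameter of about 32,000 kilometers and twelve Earth masses) are enough for a rough bulk density, which is the quantity behind the "water world or mini-Neptune" question. The sketch below treats the planet as a uniform sphere; the Earth mass and Earth mean density are standard reference values rather than figures from the paper, and the function name is a hypothetical helper.

```python
import math

EARTH_MASS_KG = 5.972e24       # reference value, not from the paper
EARTH_MEAN_DENSITY = 5514.0    # kg/m^3, reference value, not from the paper


def bulk_density(mass_kg, diameter_km):
    """Mean density in kg/m^3 of a sphere with the given mass and diameter."""
    radius_m = diameter_km * 1000.0 / 2.0
    volume_m3 = (4.0 / 3.0) * math.pi * radius_m ** 3
    return mass_kg / volume_m3


# Rounded figures from the text: twelve Earth masses, 32,000 km across.
planet_density = bulk_density(12 * EARTH_MASS_KG, 32_000)
print(f"Bulk density: {planet_density / 1000:.1f} g/cm^3")
print(f"Relative to Earth: {planet_density / EARTH_MEAN_DENSITY:.2f}")
```

With these rounded inputs the density comes out near 4.2 g/cm³, below Earth's mean of about 5.5 g/cm³. A planet this massive made only of rock and iron would be compressed to a density above Earth's, so the lower figure points to a substantial share of lighter material such as water or gas, though a single number cannot tell those two cases apart.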

We know from this work that K2 can find transiting planets despite the loss of precision the telescope has suffered, and the paper points out that the engineering test field in which HIP 116454 b was found as well as other potential fields of view will enable observatories in both hemispheres to view the stars for follow-up, something that was not possible in the original Kepler mission. So with K2 capability, we can see HIP 116454 b, relatively nearby, as a kind of bridge between the original Kepler statistical studies and the nearby targets of the TESS mission to follow.

The paper is Vanderburg et al., “Characterizing K2 Planet Discoveries: A super-Earth transiting the bright K-dwarf HIP 116454,” accepted for publication in The Astrophysical Journal (preprint).

Have a Wonderful Holiday

I’m cooking all afternoon in anticipation of a family dinner tonight. The first fruits of my labors are in the photo below. I cultivated the sourdough starter I use for this bread three years ago — over the years, it has really developed some punch, and produces a fine, aromatic loaf. My afternoon now turns to large poultry, a country-sausage stuffing (with some of the sourdough bread as a key ingredient), various greens, beans and a chipotle-laden sweet potato dish I discovered last year. I leave it to my daughter to bring her usual spectacular salad and dessert.

I want to wish all of you the best, and hope your day is going as well as mine. It’s always a privilege to write for this audience.

An Internal Source for Earth’s Water?

The last time we caught up with Wendy Panero’s work, the Ohio State scientist was investigating, with grad student Cayman Unterborn, a possible way to widen the habitable zone. Slow radioactive decay in elements like potassium, uranium and thorium helps to heat planets from within and is perhaps a factor in plate tectonics. In 2012, Unterborn argued that planets with higher thorium content than the Sun would generate much more heat than the Earth, allowing a habitable zone with liquid water on the surface correspondingly farther out from the star.

You can read about that work and its implications in Widening the Habitable Zone. I was reminded of it because Panero reported at the recent American Geophysical Union meeting on her latest direction, a study involving the formation of the Earth’s water. Recall that analysis of data from the Rosetta probe implicated asteroids rather than comets as the main delivery mechanism for Earth’s oceans (see Rosetta: New Findings on Cometary Water). Panero’s new work indicates a substantial part of Earth’s water was made right here.

Hydrogen atoms trapped inside crystal defects and voids within minerals can bond with the oxygen already plentiful in these substances, which is how rock that appears dry can contain water. The potential for a great deal of water exists in mantle rock, given that the mantle makes up more than 80 percent of the total volume of the planet, according to this Ohio State news release. Working with doctoral student Jeff Pigott, Panero subjected mantle minerals to high pressures and temperatures to create conditions like those deep inside the Earth.

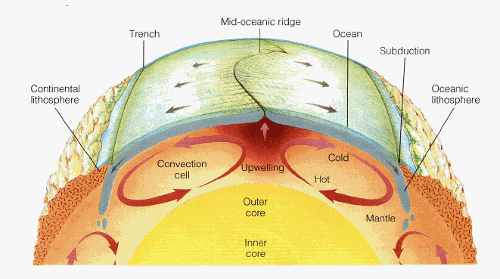

Changes to the crystal structures of these minerals as a result of compression helped the team gauge how much hydrogen the minerals can store. The minerals in play here include bridgmanite (a high-pressure form of olivine), which is abundant in the lower mantle, and ringwoodite (another form of olivine). At depths of 525 to 800 kilometers below the surface, both minerals exist in a ‘transition zone’ that can hold large amounts of water, which could be carried to the surface by convection of mantle rock, the same process that produces plate tectonics. The researchers’ tests indicated that bridgmanite contained too little hydrogen to be significant for water delivery, but ringwoodite emerged as a candidate for deep-earth water storage.

Image: This plate tectonics diagram from the Byrd Polar and Climate Research Center shows how mantle circulation delivers new rock to the crust via mid-ocean ridges. New research suggests that mantle circulation also delivers water to the oceans.

Panero and Pigott’s computer calculations helped to reveal the geochemical processes that would allow the minerals to rise through the mantle to the surface, the prerequisite for release of water into the oceans. Panero calls the relationship between surface water and plate tectonics “one of the great mysteries in the geosciences.” Her calculations with Pigott indicate that another mineral, garnet, may also play a role in delivering water from ringwoodite back down into the lower mantle, a circulation cycle enabled by plate tectonics that could maintain half as much water below the Earth as is currently flowing in today’s surface oceans.

A cycle like this would keep mantle water replenished even as it fed the oceans — the mantle would never exhaust its water supply. Says Panero:

“If all of the Earth’s water is on the surface, that gives us one interpretation of the water cycle, where we can think of water cycling from oceans into the atmosphere and into the groundwater over millions of years. But if mantle circulation is also part of the water cycle, the total cycle time for our planet’s water has to be billions of years.”

Such a process also relaxes the need for accounting for all of Earth’s water through bombardment from asteroids or comets. The latter surely played a role, but perhaps supplemented water that has been reaching the surface through plate tectonics ever since.