Centauri Dreams

Imagining and Planning Interstellar Exploration

Habitable Moons: Background and Prospects

While I’m in Houston attending the 100 Year Starship Symposium (about which more next week), Andrew LePage has the floor. A physicist and freelance writer specializing in astronomy and the history of spaceflight, LePage will be joining us on a regular basis to provide the benefits of his considerable insight. Over the last 25 years, he has had over 100 articles published in magazines including Scientific American, Sky & Telescope and Ad Astra as well as numerous online sites. He also has a web site, www.DrewExMachina.com, where he regularly publishes a blog on various space-related topics. When not writing, LePage works as a Senior Project Scientist at Visidyne, Inc. located outside Boston, Massachusetts, where he specializes in the processing and analysis of remote sensing data.

by Andrew LePage

Like many space exploration enthusiasts and professional scientists, I was inspired as a child by science fiction in films, television and print. Even as a young adult, I found that science fiction occasionally forced me to think outside the confines of my mainstream training in science and consider other possibilities. One example of this was the 1983 film Star Wars: Return of the Jedi, which is largely set on the forest moon of Endor. This was hardly the first time a science fiction story was set on a habitable moon. Still, as a college physics major increasingly interested in the science behind planetary habitability, I found that it got me thinking about what it would take for a moon of an extrasolar planet (or exomoon) to be habitable. And not “habitable” in the sense that Jupiter’s moon Europa potentially is, with a tidally-heated ocean that could provide an abode for life buried beneath kilometers of ice, but “habitable” like the Earth, with conditions that allow for the presence of liquid water on the surface for billions of years and the possibility of life and maybe a technological civilization evolving.

A dozen years later, the first extrasolar planet orbiting a normal star was discovered. A few months afterwards, on January 17, 1996, famed extrasolar planet hunters Geoff Marcy and Paul Butler announced the discovery of a pair of new extrasolar giant planets (EGPs), opening the floodgate of discoveries that continues to this day. One of these new EGPs, 47 UMa b, immediately caught my attention since it orbited right at the outer edge of its sun’s habitable zone based on the newest models by James Kasting (Penn State) and his colleagues published just three years earlier. While 47 UMa b was a gas giant with a minimum mass of about 2.5 times that of Jupiter and was therefore unlikely to be habitable, what about any moons it might have? If the size of exomoons scaled with the mass of their primary, one could expect 47 UMa b to sport a family of moons with minimum masses up to a quarter of Earth’s.

I was hardly the first to consider this possibility since it was frequently mentioned at this time by astronomers whenever new EGPs were found anywhere near the habitable zone. But this realization did get me seriously researching the scientific issues surrounding the potential habitability of exomoons and I started preparing an article on the subject for the short-lived SETI and bioastronomy magazine SETIQuest, whose editorial staff I had recently joined. While working on this article, I started corresponding with then-grad student Darren Williams (Penn State) who, it would turn out, was already preparing a paper on habitable moons with Dr. Kasting and Richard Wade (Penn State). Published in Nature on January 16, 1997, their paper titled “Habitable Moons Around Extrasolar Giant Planets” was the first peer-reviewed scientific paper on the topic. They showed that a moon with a mass greater than 0.12 times that of Earth would be large enough to hold onto an atmosphere and shield it from the erosive effect of an EGP’s radiation environment. In addition, tidal heating could potentially provide an important additional source of internal heat to drive the geologic activity needed for the carbonate-silicate cycle (which acts as a planetary thermostat) for much longer periods than would otherwise be possible for such a small body in isolation.

I published my fully-referenced article on habitable moons in the spring of 1997. In addition to incorporating the results from Williams et al. and related work by other researchers, I went so far as to make the first tentative estimate of the number of habitable moons orbiting EGPs and brown dwarfs in our galaxy based on the earliest results of extrasolar planet searches. I arrived at 47 million, compared to the best estimate at the time of about ten billion habitable planets in the galaxy (estimates that are in desperate need of revision after almost two decades of progress). Since my research showed it was likely that habitable moons would tend to come in groups of two or more, I further speculated about the possibilities of life originating on one of these moons being transplanted to a neighbor via lithopanspermia. And since I did not have to contend with scientific peer-review for this article, I even speculated about the effects multiple habitable moons would have on a spacefaring civilization in such a system with so many easy-to-reach targets for exploration and exploitation.

Image: An artist’s conception of a habitable exomoon (credit: David A. Aguilar, CfA).

After SETIQuest stopped publication and I published a popular-level article on habitable moons in the December 1998 issue of Sky & Telescope, my scientific and writing interests led me in other directions for the next decade and a half. But in the meantime, scientific work on the issues surrounding habitable bodies in general and habitable moons in particular has continued. The current state of knowledge has been thoroughly reviewed in the recent cover story of the September 2014 issue of the scientific journal Astrobiology, titled “Formation, Habitability, and Detection of Extrasolar Moons.” Its authors are a dozen scientists active in the field, including Dr. Darren Williams, one of the authors of the first paper on habitable exomoons.

Even after 17 years of new theoretical work and observations, the possibility of habitable exomoons still remains strong. The authors show that exomoons with masses between 0.1 and 0.5 times that of the Earth can be habitable. A review of the available literature shows that exomoons of this size could form around EGPs or could be captured much as Triton is believed to have been captured by Neptune in our own solar system. Calculations also show that such exomoons, habitable or otherwise, are detectable using techniques that are available today, especially direct detection by photometric means like that employed by Kepler and by more subtle techniques such as transit timing variations (TTV) and transit duration variations (TDV) of EGPs with exomoons. As the authors state in the closing sentence of their paper:
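To get a feel for why transit timing variations are a realistic exomoon signature, here is a minimal order-of-magnitude sketch of the barycentric TTV amplitude. It assumes circular, coplanar orbits and a moon much less massive than its planet. This is a textbook estimate, not the method used by Heller et al. The function name and the example system are hypothetical.

```python
import math

AU = 1.495978707e11  # metres

def barycentric_ttv_amplitude(p_planet_s, a_planet_m, a_moon_m, moon_to_planet_mass):
    """Approximate peak transit-timing offset (seconds) caused by a moon.

    Assumes circular, coplanar orbits and M_moon << M_planet: the planet sits
    about a_moon * (M_moon / M_planet) from the planet-moon barycentre, and it
    crosses that offset at its orbital speed 2*pi*a_planet / P_planet.
    """
    displacement = a_moon_m * moon_to_planet_mass
    orbital_speed = 2 * math.pi * a_planet_m / p_planet_s
    return displacement / orbital_speed

# Hypothetical example: an Earth-mass moon 1 million km from a Jupiter-mass
# planet on a one-year orbit (Earth/Jupiter mass ratio ~ 1/318).
dt = barycentric_ttv_amplitude(365.25 * 86400, 1.0 * AU, 1.0e9, 1 / 318)
print(f"peak TTV ~ {dt:.0f} s")
```

An offset of a minute or two per transit is small, but it is the kind of timing precision that Kepler-class photometry can in principle reach on bright targets.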

In view of the unanticipated discoveries of planets around pulsars, Jupiter-mass planets in orbits extremely close to their stars, planets orbiting binary stars, and small-scale planetary systems that resemble the satellite system of Jupiter, the discovery of the first exomoon beckons, and promises yet another revolution in our understanding of the universe.

The fully referenced review paper is René Heller et al., “Formation, Habitability, and Detection of Extrasolar Moons”, Astrobiology, Vol. 14, No. 9, September 2014 (preprint).

New Horizons: Hydra Revealed

Since we don’t yet have flight-ready systems for getting to the outer Solar System much faster than New Horizons, we might as well enjoy one of the benefits of long flight times. Look at it this way: For the next ten months, we can look forward to sharper and sharper images and an ever-increasing flow of data about Pluto/Charon and associated moons. It’s going to be a fascinating story that unfolds gradually, culminating in the July flyby next year, and then, of course, we can hope for further exploration of a Kuiper Belt object.

So New Horizons, launched in 2006, is going to be with us for a while, and it has already given us a brief look at asteroid 132524 APL and a shakeout of its science instruments during a gravitational assist maneuver at Jupiter. Now we’re getting down to much finer-grained imagery from Pluto. The first image distinguishing Pluto and Charon was returned in July of 2013. The latest imagery using the spacecraft’s Long Range Reconnaissance Imager (LORRI) shows Pluto’s diminutive moon Hydra, taken as part of a long-exposure strategy that controllers are using to search for other moons or debris near Pluto/Charon.

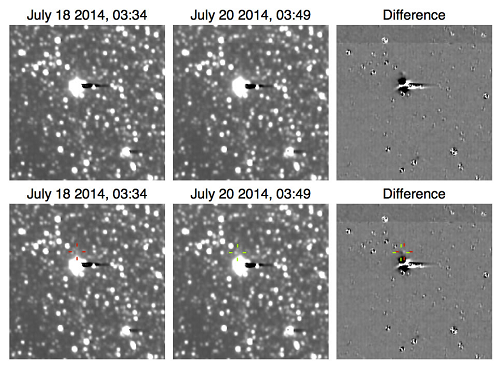

Interestingly, this report on the New Horizons site points out that the science team didn’t expect to detect Hydra until January. The spacecraft took forty-eight 10-second images on July 18 and repeated the process on July 20, combining them to reveal Hydra.

Image: Hydra revealed in summer data from New Horizons. Credit: Alan Stern/JHU/APL.

To untangle the image, start with the top row. With Pluto overexposed at image center, you can see a dark streak at the right that is an artifact of the imaging process. Both dates show similar images, but at the right is a ‘difference’ image that largely pulls out the background starfield. Here you can see Hydra as an overlapping smudge of bright and dark that appears immediately above Pluto in the image. In the bottom row are the same images, showing Hydra’s expected position on these dates as marked by red and green crosshairs. It’s tough to make Hydra out, but a close look at the enlarged image will identify it.

The JHU/APL article quotes Science Team member John Spencer as saying that at this point, Hydra is several times fainter than the faintest objects New Horizons’ camera is designed to detect. That’s good news overall because it speaks to the quality of the equipment as well as its operational status. It also confirms the efficacy of the plan to look for satellites and possibly hazardous smaller objects as the spacecraft approaches the system. Keeping New Horizons healthy and collision-free is obviously job number one — we only get one shot at this.

I also want to quote New Horizons co-investigator Randy Gladstone (Southwest Research Institute) on the question of how we’ll study Pluto’s atmosphere during the flyby. We know, of course, that it’s low in pressure, mostly made up of molecular nitrogen with small amounts of methane and carbon monoxide, but Gladstone points out that models of the atmosphere disagree because of the sparse data available. New Horizons will be engaged in survey observations that make few assumptions about what may be found, including a Pluto solar occultation operation:

The Alice ultraviolet spectrograph will watch the Sun set (and then rise again) as New Horizons flies through Pluto’s shadow, about an hour after closest approach. Watching how the different colors of sunlight fade (and then return) as New Horizons enters (and leaves) the shadow will tell us nearly all we could ask for about composition (all gases have unique absorption signatures at the ultraviolet wavelengths covered by Alice) and structure (how the absorption features vary with altitude will tell us about temperatures, escape rates and possibly about dynamics and clouds).

We’ve only known about this atmosphere since 1988, when Pluto occulted a distant star. The refracted starlight observed then was hard evidence for an atmosphere. Like the atmosphere on Neptune’s large moon Triton, Pluto’s is extremely thin, with a surface pressure of 30 to 100 microbars, or roughly 30 to 100 millionths of Earth’s surface pressure. Sublimation of ices on the surface is responsible for what little atmosphere Pluto has. As Pluto continues to move away from the Sun following its closest approach in 1989, condensation should take over, but New Horizons should arrive before the atmosphere has condensed back onto the surface.
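The conversion from microbars to a fraction of Earth’s surface pressure is a one-liner. Here is a small sketch, assuming Earth’s mean sea-level pressure of 1.01325 bar. The helper name is hypothetical.

```python
EARTH_SURFACE_PRESSURE_BAR = 1.01325  # mean sea-level pressure (assumed reference)

def microbar_to_earth_fraction(p_microbar):
    """Express a pressure in microbars as a fraction of Earth's surface pressure."""
    return p_microbar * 1e-6 / EARTH_SURFACE_PRESSURE_BAR

for p in (30, 100):
    frac = microbar_to_earth_fraction(p)
    print(f"{p} microbar ~ {frac * 1e6:.0f} millionths of Earth's surface pressure")
```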

And what about Charon? No evidence exists for an atmosphere there, and because the moon is so small, any atmosphere present would have to be considerably less dense than Pluto’s. While sublimation could produce gases that fed an atmosphere for a time, the tiny world would not be able to prevent their rapid dispersion into space.
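One simple way to see why Charon struggles to hold on to gas is to compare escape velocities and the Jeans escape parameter for nitrogen on the two worlds. The sketch below makes several assumptions. The masses and radii are approximate modern values, and the 40 K temperature is illustrative. It also evaluates everything at the surface rather than at the exobase, where escape actually happens, so treat the numbers as a relative comparison only.

```python
import math

G = 6.674e-11              # gravitational constant, m^3 kg^-1 s^-2
K_B = 1.380649e-23         # Boltzmann constant, J/K
M_N2 = 28.0 * 1.66054e-27  # mass of an N2 molecule, kg

# Approximate values, assumed for illustration.
BODIES = {
    "Pluto": {"mass_kg": 1.303e22, "radius_m": 1.188e6},
    "Charon": {"mass_kg": 1.586e21, "radius_m": 6.06e5},
}

def escape_velocity(mass_kg, radius_m):
    """Surface escape velocity in m/s."""
    return math.sqrt(2 * G * mass_kg / radius_m)

def jeans_parameter(mass_kg, radius_m, temp_k, particle_mass_kg):
    """Gravitational binding energy of one particle divided by kT.

    Larger values mean much slower thermal (Jeans) escape."""
    return G * mass_kg * particle_mass_kg / (K_B * temp_k * radius_m)

for name, b in BODIES.items():
    v = escape_velocity(b["mass_kg"], b["radius_m"])
    lam = jeans_parameter(b["mass_kg"], b["radius_m"], 40.0, M_N2)
    print(f"{name}: v_esc ~ {v:.0f} m/s, Jeans parameter for N2 at 40 K ~ {lam:.0f}")
```

Because Jeans escape scales roughly as exp(-λ), Charon’s several-times-smaller parameter translates into a far faster loss rate than Pluto’s.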

Crucible for Moon Formation in Saturn’s Rings

Hard to believe that it’s been ten years for Cassini, but it was all the way back in January of 2005 that the Huygens probe landed on Titan, an event that will be forever bright in my memory. Although it was the fourth space probe to visit Saturn, Cassini became the first to orbit the ringed planet when it arrived in 2004. Since then, the mission has explored Titan’s hydrocarbon lakes, probed the geyser activity on Enceladus, tracked the mammoth hurricane at Saturn’s north pole, and firmed up the possibility of subsurface oceans on both Titan and Enceladus.

I mentioned the Galileo probe last week, its work at Europa and its fiery plunge into Jupiter’s atmosphere to conclude the mission. Cassini has a similar fate in store after finishing its Northern Solstice Mission, which will explore the region between the rings and the planet. As discussed at the recent European Planetary Science Congress in Cascais, Portugal, the spacecraft’s final orbit will occur in September of 2017, taking Cassini to a mere 3000 kilometers above the planet on closest approach. A final Titan encounter will then provide the gravitational muscle to hurl the craft into Saturn’s atmosphere, where it will be vaporized.

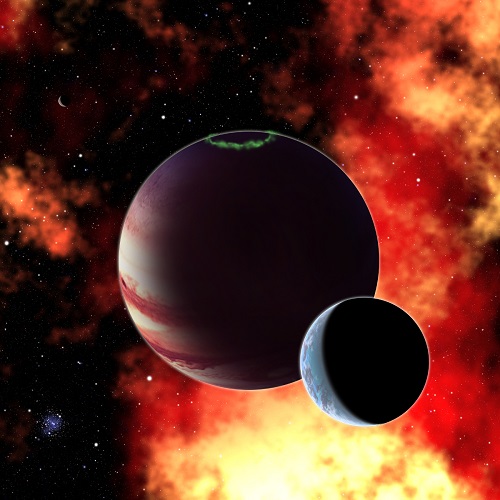

But of course we’re not quite through with Cassini yet. New work out of the SETI Institute delves into the creation and destruction of moons on extremely short time-scales within the Saturnian ring system. Robert French, Mark Showalter and team accomplished their studies by comparing the exquisite photographs Cassini has produced with pictures made during the Voyager mission. The upshot: The F ring has taken on a completely new look.

“The F ring is a narrow, lumpy feature made entirely of water ice that lies just outside the broad, luminous rings A, B, and C,” notes French. “It has bright spots. But it has fundamentally changed its appearance since the time of Voyager. Today, there are fewer of the very bright lumps. We believe the most luminous knots occur when tiny moons, no bigger than a large mountain, collide with the densest part of the ring. These moons are small enough to coalesce and then break apart in short order.”

Short order indeed. It appears that the bright spots can appear and disappear in the course of mere days and even hours. The explanation lies in the nature of the F ring itself, which sits near the critical distance known as the Roche limit. Inside this distance, the difference between Saturn’s gravitational pull on a moon’s near side and its pull on the far side can exceed the moon’s own gravity and tear the moon apart. What we seem to be seeing is moon formation, producing objects no more than 5 kilometers in size, quickly followed by gravitationally induced breakup.
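To see why the F ring is a natural place for this tug-of-war, we can compute the classical Roche limit around Saturn for a range of satellite densities. Here is a minimal sketch, assuming a uniform-density satellite. It uses the textbook coefficients of about 2.44 for a fluid body and 2^(1/3) for a rigid sphere. The ice densities and the F ring radius of roughly 140,180 km are approximate inputs, not values from French et al. Real clumps are loose aggregates, so this only shows that the ring lies in the transition region.

```python
import math

SATURN_MASS_KG = 5.683e26
F_RING_RADIUS_KM = 140_180  # approximate orbital radius of the F ring

def roche_limit_km(primary_mass_kg, satellite_density, fluid=True):
    """Classical Roche limit (km) for a satellite of uniform density (kg/m^3).

    Uses d = k * (3 M / (4 pi rho_s))**(1/3), which equals
    k * R_primary * (rho_primary / rho_s)**(1/3); k ~ 2.44 for a fluid
    satellite and 2**(1/3) ~ 1.26 for a rigid sphere.
    """
    k = 2.44 if fluid else 2 ** (1 / 3)
    length_m = (3 * primary_mass_kg / (4 * math.pi * satellite_density)) ** (1 / 3)
    return k * length_m / 1e3

for rho in (500, 700, 900):  # assumed densities from porous to solid water ice
    fluid = roche_limit_km(SATURN_MASS_KG, rho)
    rigid = roche_limit_km(SATURN_MASS_KG, rho, fluid=False)
    print(f"rho = {rho} kg/m^3: fluid ~ {fluid:,.0f} km, rigid ~ {rigid:,.0f} km")
```

For icy bodies the fluid limit brackets the F ring’s orbit, which is consistent with small aggregates being able to clump together there and then come apart again.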

Image: Cassini spied just as many regular, faint clumps in Saturn’s narrow F ring (the outermost, thin ring), like those pictured here, as Voyager did. But it saw hardly any of the long, bright clumps that were common in Voyager images. Credit: NASA/JPL-Caltech/SSI

Mark Showalter compares these small moons to bumper cars that careen through moon-forming material, taking form and then fragmenting as they go. Adding to the chaos is the moon Prometheus, a 100-kilometer object that orbits just within the F ring. Alignments of this moon that occur every 17 years exert a further gravitational influence that helps to trigger the formation of the tiny moonlets and propel them through their brief lives. Prometheus, then, should cause a periodic waxing and waning of the clumping activity in the ring.

Further work with the Cassini data should help the researchers firm up this theory, for the Prometheus influence should cause an increase in the clumping and breakup activity within the next few years. Cassini has a sufficient lifetime to test that prediction. What adds further interest to the story is the fact that we’re seeing in miniature some of the elementary processes that, 4.6 billion years ago, led to the formation of the Solar System’s planets. Consider Saturn’s F ring, then, a laboratory for processes we’d like to learn much more about as we turn our instruments to young stars and the planets coalescing around them.

The paper is French et al., “Analysis of clumps in Saturn’s F ring from Voyager and Cassini,” published online in Icarus on July 15, 2014 (abstract). This news release from the SETI Institute is also helpful.

‘Hot Jupiters’: Explaining Spin-Orbit Misalignment

Bringing some order into the realm of ‘hot Jupiters’ is all to the good. How do these enormous worlds get so close to their star, having presumably formed much further out beyond the ‘snowline’ in their systems, and what effects do they have on the central star itself? And how do ‘hot Jupiter’ orbits evolve so as to create spin-orbit misalignments? A team at Cornell University led by astronomy professor Dong Lai, working with graduate students Natalia Storch and Kassandra Anderson, has produced a paper that tells us much about orbital alignments and ‘hot Jupiter’ formation.

It’s no surprise that large planets — and small ones, for that matter — can make their stars wobble. This is the basis for the Doppler method that so accurately measures the movement of a star as affected by the planets around it. But something else is going on in ‘hot Jupiter’ systems. In our own Solar System the rotational axis of the Sun is more or less aligned with the orbital axis of the planets. But some systems with ‘hot Jupiters’ have shown a misalignment between the orbital axis of the gas giants and the rotational axis of the host star.
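The size of the wobble that the Doppler method measures is given by the standard radial-velocity semi-amplitude formula. The sketch below compares Jupiter’s pull on the Sun with that of a Jupiter-mass planet on a hypothetical 3-day orbit. It assumes an edge-on, circular orbit unless the optional arguments say otherwise.

```python
import math

G = 6.674e-11       # m^3 kg^-1 s^-2
M_SUN = 1.989e30    # kg
M_JUP = 1.898e27    # kg
YEAR_S = 365.25 * 86400

def rv_semi_amplitude(period_s, planet_mass_kg, star_mass_kg, inclination_deg=90.0, ecc=0.0):
    """Stellar radial-velocity semi-amplitude K (m/s) induced by one planet."""
    m_sin_i = planet_mass_kg * math.sin(math.radians(inclination_deg))
    return ((2 * math.pi * G / period_s) ** (1 / 3)
            * m_sin_i / (star_mass_kg + planet_mass_kg) ** (2 / 3)
            / math.sqrt(1 - ecc ** 2))

k_jup = rv_semi_amplitude(11.86 * YEAR_S, M_JUP, M_SUN)
k_hot = rv_semi_amplitude(3 * 86400, M_JUP, M_SUN)
print(f"Jupiter around the Sun: K ~ {k_jup:.1f} m/s")
print(f"Jupiter-mass planet on a 3-day orbit: K ~ {k_hot:.0f} m/s")
```

The order-of-magnitude jump for the close-in case is one reason ‘hot Jupiters’ dominated early Doppler discoveries.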

Image: ‘Hot Jupiters,’ large, gaseous planets in inner orbits, can make their suns wobble after they wend their way through their solar systems. Credit: Dong Lai/Cornell University.

The Cornell team went to work on simulations of such systems, working with binary star systems separated by as much as hundreds of AU. Their work shows that gas giants can be influenced by partner binary stars that cause them to migrate closer to their star. At play here is the Lidov-Kozai mechanism in celestial mechanics, an effect first described by Soviet scientist Michael Lidov in 1961 and studied by the Japanese astronomer Yoshihide Kozai. The effect of perturbation by an outer object is an important factor in the orbits of planetary moons, trans-Neptunian objects and some extrasolar planets in multiple star systems.

Thus the mechanism for moving a gas giant into the inner system, as described in the paper:

In the ‘Kozai+tide’ scenario, a giant planet initially orbits its host star at a few AU and experiences secular gravitational perturbations from a distant companion (a star or planet). When the companion’s orbit is sufficiently inclined relative to the planetary orbit, the planet’s eccentricity undergoes excursions to large values, while the orbital axis precesses with varying inclination. At periastron, tidal dissipation in the planet reduces the orbital energy, leading to inward migration and circularization of the planet’s orbit.
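The “sufficiently inclined” condition in the quoted passage has a well-known closed form in the simplest version of the Lidov-Kozai problem. This is the quadrupole-order, test-particle limit with no tides or additional precession, which is far simpler than the treatment in Storch, Anderson & Lai. The sketch below shows the critical inclination and the peak eccentricity for an initially circular planetary orbit. It also gives an order-of-magnitude cycle timescale with prefactors of order unity dropped. The example system is hypothetical.

```python
import math

def kozai_critical_inclination_deg():
    """Mutual inclination below which quadrupole Kozai cycles do not occur."""
    return math.degrees(math.acos(math.sqrt(3 / 5)))

def kozai_max_eccentricity(initial_inclination_deg):
    """Peak eccentricity for an initially circular inner orbit
    (test-particle, quadrupole-order approximation)."""
    cos_i = math.cos(math.radians(initial_inclination_deg))
    return math.sqrt(max(0.0, 1 - (5 / 3) * cos_i ** 2))

def kozai_timescale_yr(p_inner_yr, m_host, m_companion, a_inner_au, a_outer_au, e_outer=0.0):
    """Order-of-magnitude Kozai cycle timescale in years (prefactors dropped)."""
    return (p_inner_yr * (m_host / m_companion) * (a_outer_au / a_inner_au) ** 3
            * (1 - e_outer ** 2) ** 1.5)

print(f"critical inclination ~ {kozai_critical_inclination_deg():.1f} deg")
for inc in (30, 50, 70, 85):
    print(inc, round(kozai_max_eccentricity(inc), 3))
# Hypothetical system: planet at 5 AU (period ~ 11.2 yr) around a solar-mass
# star, with a solar-mass companion at 500 AU.
print(f"t_Kozai ~ {kozai_timescale_yr(11.2, 1.0, 1.0, 5.0, 500.0):.1e} yr")
```

Near-perpendicular companions drive the eccentricity close to 1, which brings periastron close enough to the star for the tidal dissipation described in the quote to take over.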

As the planet approaches the star, interesting things continue to occur. From the paper:

It is a curious fact that the stellar spin axis in a wide binary (~100 AU apart) can exhibit such a rich, complex evolution. This is made possible by a tiny planet (~10⁻³ of the stellar mass) that serves as a link between the two stars: the planet is ‘forced’ by the distant companion into a close-in orbit, and it ‘forces’ the spin axis of its host star into wild precession and wandering.

Moreover, “…in the presence of tidal dissipation the memory of chaotic spin evolution can be preserved, leaving an imprint on the final spin-orbit misalignment angles.”

The approach of the ‘hot Jupiter’ to the host star can, in other words, disrupt the previous orientation of the star’s spin axis, causing it to wobble something like a spinning top. The paper speaks of ‘wild precession and wandering,’ a point Lai emphasizes, likening the chaotic variation of the precession to chaotic phenomena such as weather systems. The spin-orbit misalignments we see in ‘hot Jupiter’ systems are thus an imprint of the stellar spin evolution driven by the planet’s inward migration.

The paper goes on to mention that we see examples of chaotic spin-orbit resonances in our own Solar System. Saturn’s satellite Hyperion experiences what the paper calls ‘chaotic spin evolution’ because of resonances between its spin and orbital precession periods. Even the rotation axis of Mars undergoes chaotic variation due to much the same mechanism.

The paper is Storch, Anderson & Lai, “Chaotic dynamics of stellar spin in binaries and the production of misaligned hot Jupiters,” Science Vol. 345, No. 6202 (12 September 2014), pp. 1317-1321 (abstract / preprint)

Emergence of the ‘Venus Zone’

In terms of habitability, it’s clear that getting a world too close to its star spells trouble. In the case of Gliese 581c, we had a planet that some thought would allow liquid water at the surface, but subsequent work tells us it’s simply too hot for life as we know it. With the recent dismissal of Gl 581d and g (see Red Dwarf Planets: Weeding Out the False Positives), that leaves no habitable zone worlds that we know about in this otherwise interesting red dwarf system.

I’m glad to see that Stephen Kane (San Francisco State) and his team of researchers are working on the matter of distinguishing an Earth-like world from one that is more like Venus. We’ve made so much of the quest to find something roughly the same size as the Earth that we haven’t always been clear to the general public about what that implies. For Venus is Earth-like in terms of size, but it’s clearly a far cry from Earth in terms of conditions.

Indeed, you would be hard-pressed to find a more hellish place than Venus’ surface. Kane wants to understand where the dividing line is between two planetary outcomes that could not be more different. Says the scientist:

“We believe the Earth and Venus had similar starts in terms of their atmospheric evolution. Something changed at one point, and the obvious difference between the two is proximity to the Sun.”

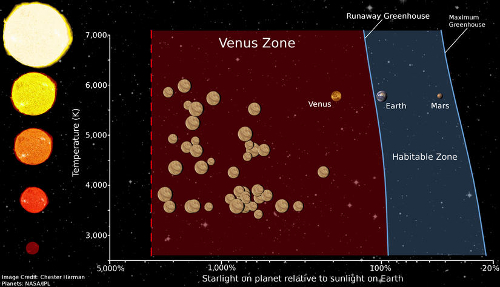

Kane and company’s paper on this will appear in Astrophysical Journal Letters and is already available on the arXiv server (citation below). At stake here is solar flux, the incoming energy from the planet’s star, which can be used to define an inner and an outer edge to what Kane calls the ‘Venus Zone.’ Venus is about 28 percent closer to the Sun than the Earth, and as a result it receives nearly twice as much solar flux. Get close enough to the star to trigger a runaway greenhouse effect (the result of which makes the surface of Venus the distinctive nightmare that it is) and you are at the outer edge of the Venus Zone.
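The flux comparison follows directly from the inverse-square law. Here is a small sketch, assuming Venus’s mean orbital distance of 0.723 AU. It also inverts the relation to show where the same flux falls around a dimmer star. The 0.05 solar-luminosity star is a hypothetical example, not a boundary taken from Kane et al.

```python
import math

def relative_flux(distance_au, luminosity_lsun=1.0):
    """Stellar flux relative to what Earth receives from the Sun (inverse-square law)."""
    return luminosity_lsun / distance_au ** 2

def distance_for_flux(relative_flux_value, luminosity_lsun=1.0):
    """Orbital distance (AU) at which a planet receives the given relative flux."""
    return math.sqrt(luminosity_lsun / relative_flux_value)

venus_au = 0.723
venus_flux = relative_flux(venus_au)
print(f"Venus is {100 * (1 - venus_au):.0f}% closer to the Sun than Earth")
print(f"and receives {venus_flux:.2f} times Earth's solar flux")
# Hypothetical dim star of 0.05 solar luminosities:
print(f"Venus-like flux around a 0.05 L_sun star: {distance_for_flux(venus_flux, 0.05):.2f} AU")
```

Because flux boundaries map to distances through a square root of luminosity, the Venus Zone moves inward and narrows around low-mass stars.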

Go further in toward the star and you can pick out the point where a planet’s atmosphere would begin to be eroded by the incoming flux. This is the inner edge of the Venus Zone, and by understanding the boundaries here, we can help future attempts to characterize Earth-sized worlds in the inner systems of their stars. Find an Earth-sized planet in the Venus Zone and there is reason to suspect that a runaway greenhouse effect is in play.

Image: This graphic shows the location of the “Venus Zone,” the area around a star in which a planet is likely to exhibit atmospheric and surface conditions similar to the planet Venus. Credit: Chester Harman, Pennsylvania State University.

The broader picture is an attempt to place our Solar System in context. The Kepler results have consistently demonstrated that any thought of our Solar System being a kind of template for what a system should look like must be abandoned. From the paper:

A critical question that exoplanet searches are attempting to answer is: how common are the various elements that we find within our own Solar System? This includes the determination of Jupiter analogs since the giant planet has undoubtedly played a significant role in the formation and evolution of our Solar System. When considering the terrestrial planets, the attention often turns to atmospheric composition and prospects of habitability. In this context, the size degeneracy of Earth with its sister planet Venus cannot be ignored and the incident flux must be carefully considered.

The study identifies 43 potential Venus analogs from the Kepler data, with occurrence rates similar to those for Earth-class planets, though, as the paper notes, with smaller uncertainties. After all, Kepler is more likely to detect shorter-period planets in the Venus Zone than Earth-class planets with longer orbital periods. Overall, the team estimates based on Kepler data that approximately 32% of low-mass stars have terrestrial planets that are potentially like Venus, while for G-class stars like the Sun, the figure reaches 45%.
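The detection bias toward Venus-like orbits can be made concrete with two simple factors. The first is the geometric transit probability, roughly R_star / a for a circular orbit. The second is the number of transits that fit into a roughly four-year observing baseline. This sketch assumes a Sun-like star, ignores data gaps and noise, and uses hypothetical helper names. It is not the completeness model used in the paper.

```python
R_SUN_AU = 0.00465  # solar radius in AU

def transit_probability(a_au, r_star_au=R_SUN_AU):
    """Geometric transit probability ~ R_star / a for a circular orbit."""
    return r_star_au / a_au

def transits_in_baseline(period_days, baseline_days=4 * 365.25):
    """Number of transits that fall within an observing baseline (ignoring gaps)."""
    return baseline_days / period_days

for name, a_au, period_days in (("Venus analog", 0.723, 224.7), ("Earth analog", 1.0, 365.25)):
    print(f"{name}: P(transit) ~ {transit_probability(a_au):.4f}, "
          f"~{transits_in_baseline(period_days):.1f} transits in 4 years")
```

A Venus analog is both more likely to transit and transits more often, so it builds up signal faster than an Earth analog around the same star.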

Kane notes that future missions will be challenged by the need to distinguish between the Venus and Earth model. We’ll also be looking at the question of carbon in a planet’s atmosphere and its effects on the boundaries of the Venus Zone, the assumption being that more carbon in the atmosphere would push the outer boundary further from the star.

The paper is Kane, Kopparapu and Domagal-Goldman, “On the frequency of potential Venus analogs from Kepler data,” accepted for publication in The Astrophysical Journal Letters and available as a preprint. Be aware as well of the team’s Habitable Zone Gallery, which currently identifies 51 planets as likely being within their star’s habitable zone.

Space Telescopes Beyond Hubble and JWST

Ashley Baldwin tracks developments in astronomical imaging with a passion, making him a key source for me in keeping up with the latest developments. In this follow-up to his earlier story on interferometry, Ashley looks at the options beyond the James Webb Space Telescope, particularly those that can help in the exoplanet hunt. Coronagraph and starshade alternatives are out there, but which will be the most effective, and just as much to the point, which are likely to fly? Dr. Baldwin, a consultant psychiatrist at the 5 Boroughs Partnership NHS Trust (Warrington, UK) and a former lecturer at Liverpool and Manchester Universities, gives us the overview, one that hints at great things to come if we can get these missions funded.

by Ashley Baldwin

Hubble is getting old.

It is due to be replaced in 2018 by the much larger James Webb Space Telescope. This is very much a compromise of what is needed in a wide range of astronomical and cosmological specialties, one that works predominantly in the infrared. The exoplanetary fraternity will get a portion of its (hoped-for) ten-year operating period. The JWST has coronagraphs on some of its instruments which will allow exoplanetary imaging, but as its angular resolution is actually lower than Hubble’s, its main contribution will be to characterise the atmospheres of discovered exoplanets.

It is for this reason that the designers of TESS (Transiting Exoplanet Survey Satellite) have made sure that much of its most prolonged viewing will overlap with that of the JWST. The JWST’s ability to characterise a given planet’s atmosphere will depend on several factors. These include the heat (infrared) the planet is giving out, its size, critically its atmospheric depth (the deeper the better), and the planet’s distance from us. The longer the telescope has to “stop and stare” at its target planet the better, but we already know lots of other experts want some of the telescope’s precious time, so this will be a big limiting factor.
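Why “the deeper the better”? In transmission spectroscopy, the extra transit depth contributed by an atmosphere scales roughly as 2·H·R_p / R_star², where H = kT / (μ m_H g) is the scale height. Here is a minimal sketch comparing a hot Jupiter with an Earth twin around a Sun-like star. The temperatures, mean molecular weights and gravities are assumed round numbers. Real spectral features typically span several scale heights.

```python
K_B = 1.380649e-23   # Boltzmann constant, J/K
M_H = 1.6735e-27     # mass of a hydrogen atom, kg
R_SUN = 6.957e8      # solar radius, m

def scale_height_m(temp_k, mean_molecular_weight, gravity_ms2):
    """Atmospheric scale height H = kT / (mu m_H g)."""
    return K_B * temp_k / (mean_molecular_weight * M_H * gravity_ms2)

def transit_signal_per_scale_height(h_m, planet_radius_m, star_radius_m=R_SUN):
    """Approximate change in transit depth from an annulus one scale height thick."""
    return 2 * h_m * planet_radius_m / star_radius_m ** 2

hot_jupiter = scale_height_m(1000, 2.3, 25.0)   # H2-dominated, assumed values
earth_like = scale_height_m(288, 28.97, 9.81)   # N2/O2 atmosphere
print(f"hot Jupiter: H ~ {hot_jupiter / 1e3:.0f} km, "
      f"signal ~ {1e6 * transit_signal_per_scale_height(hot_jupiter, 7.149e7):.0f} ppm")
print(f"Earth twin:  H ~ {earth_like / 1e3:.1f} km, "
      f"signal ~ {1e6 * transit_signal_per_scale_height(earth_like, 6.371e6):.2f} ppm")
```

The gap of more than two orders of magnitude is why long “stop and stare” observations, and small host stars, matter so much for smaller planets.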

Planet Hunting in Space

The big question is, where are the dedicated exoplanet telescopes? NASA had a mission called WFIRST planned for the next decade, with the predominant aim of studying dark energy. There was an add-on for the “micro-lensing” discovery of exoplanets. When a foreground star happens to pass in front of a more distant star, its gravity magnifies the background star’s light, and any planet orbiting the foreground star can show up as a brief “blip” in the background star’s light curve. When two telescopes built for the National Reconnaissance Office (NRO) were recently donated to NASA, it was suggested that one of their mirrors could be used for WFIRST instead.
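The smooth part of a microlensing event follows the standard single-lens (Paczyński) magnification curve. The planetary “blip” is a short deviation superimposed on this curve and is not modelled here. Here is a minimal sketch, assuming a point source and a lens moving in a straight line. The event parameters are hypothetical.

```python
import math

def point_lens_magnification(u):
    """Magnification of a background source by a single point lens.

    u is the lens-source separation in units of the Einstein radius."""
    return (u ** 2 + 2) / (u * math.sqrt(u ** 2 + 4))

def light_curve(t_days, t0_days, u0, t_e_days):
    """Single-lens light curve for uniform straight-line relative motion."""
    u = math.sqrt(u0 ** 2 + ((t_days - t0_days) / t_e_days) ** 2)
    return point_lens_magnification(u)

# Hypothetical event: closest approach u0 = 0.1 at day 0, Einstein time 20 days.
for t in (-40, -20, -5, 0, 5, 20, 40):
    print(t, round(light_curve(t, 0.0, 0.1, 20.0), 2))
```

A planet around the lens star adds a secondary caustic, which typically shows up as a deviation lasting hours to days on top of this weeks-long brightening.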

At 2.4 m in diameter, the NRO mirrors are much larger than the circa 1.5 m mirror originally proposed. They collect roughly two and a half times as much light and would therefore make the mission more powerful, especially since the mission’s “wide field” design lets it view far bigger areas of the sky. It was then suggested that the mission could be improved yet further by adding a “coronagraph” to the satellite’s instrument package. The savings made by using one of the “free” NRO mirrors would cover the coronagraph cost.

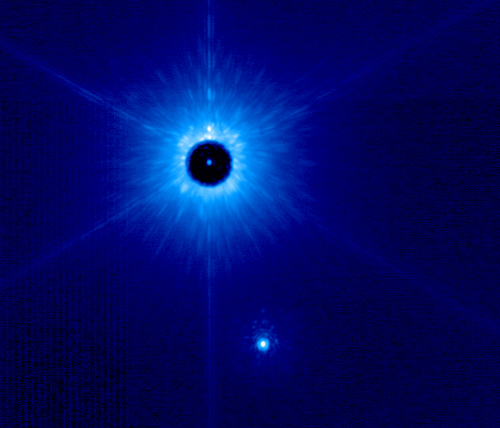

Coronagraphs block out starlight and were originally developed to allow astronomers to view the Sun’s atmosphere (safely!). Since then they have been placed in front of a telescope’s focal plane to cut out the light of more distant stars, allowing the much dimmer light of orbiting exoplanets to be seen. A decade of development at numerous research testbeds, such as those at the Jet Propulsion Laboratory, Princeton and the Subaru telescope on Mauna Kea, has refined the device to a high degree. When starlight strikes a planet, it can be reflected directly into space or absorbed and re-emitted as thermal infrared energy. The brightness contrast between a planet and its star is many orders of magnitude less extreme in the infrared than in the visible, which is why many telescopes seeking to image exoplanets work in the infrared. Even so, the difference can still be a billion times or so.

Thus the famous “firefly in the searchlight” metaphor. Any coronagraph must suppress starlight by a factor of a billion or more for an exoplanet first to be seen and then analysed spectroscopically. The latter is crucial, as it tells us about the planet and its atmosphere according to the factors described above. This light reduction technique is called “high contrast imaging”, and the achievable contrast is expressed as a negative power of ten, so a billion-fold reduction is written 10⁻⁹. This level of reduction should allow Jupiter-size planets, ice giants like Neptune and, at a push, “super earths” to be imaged. To see Earth-like, terrestrial planets, an extra order of magnitude, 10⁻¹⁰ or better, is necessary.
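Where do the 10⁻⁹ and 10⁻¹⁰ figures come from? In reflected light at full phase, the planet-to-star flux ratio is roughly A_g · (R_p / a)², where A_g is the geometric albedo. The sketch below applies this to Jupiter and Earth analogs around a Sun-like star. The albedos are approximate values. Real observations happen at partial phase, which lowers the contrast further.

```python
AU_M = 1.495978707e11  # metres per AU

def reflected_contrast(geometric_albedo, planet_radius_m, orbit_au):
    """Planet-to-star flux ratio in reflected light at full phase: A_g * (R_p / a)^2."""
    return geometric_albedo * (planet_radius_m / (orbit_au * AU_M)) ** 2

jupiter = reflected_contrast(0.52, 7.149e7, 5.2)   # approximate albedo
earth = reflected_contrast(0.37, 6.371e6, 1.0)     # approximate albedo
print(f"Jupiter analog: {jupiter:.1e}")
print(f"Earth analog:   {earth:.1e}")
```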

Image: A coronagraph at work. This infrared image was taken at 1.6 microns with the Keck 2 telescope on Mauna Kea. The star is seen here behind a partly transparent coronagraph mask to help bring out faint companions. The mask attenuates the light from the primary by roughly a factor of 1000. The young brown dwarf companion in this image has a mass of about 32 Jupiter masses. The physical separation here is about 120 AU. Credit: B. Bowler/IFA.

The Emergence of WFIRST AFTA

Telescope aperture is not absolutely critical (given a long enough exposure), with even small metre-sized scopes able to see exoplanets with the correct coronagraph. The problem is the inner working angle, or IWA. This is the smallest angular separation from the parent star at which the coronagraph blocks the star’s light effectively enough to image a planet with minimal interference. Conversely, the outer working angle, or OWA, determines how far from the star a planet can be seen. The IWA is particularly important for seeing and characterising planets in the habitable zone (HBZ) of sun-like stars. The IWA will need to shrink as the HBZ shrinks, as with M dwarfs, which would make direct imaging of any terrestrial planets found in the habitable zones of TESS discoveries very difficult. For bigger stars with wider HBZs, the IWA will be less of an issue.
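A quick way to see the IWA problem is to compare a planet’s angular separation with the telescope’s diffraction scale λ/D. By definition, 1 AU at 1 parsec subtends 1 arcsecond. The sketch below assumes an IWA of 3 λ/D at 550 nm with a 2.4 m mirror, both of which are illustrative choices rather than WFIRST AFTA specifications. It also places a Sun-like habitable zone at 1 AU and an M-dwarf habitable zone at 0.1 AU, both at a distance of 5 pc.

```python
import math

RAD_TO_MAS = 180 / math.pi * 3600 * 1000  # milliarcseconds per radian

def separation_mas(orbit_au, distance_pc):
    """Maximum star-planet angular separation; 1 AU at 1 pc subtends 1 arcsec."""
    return 1000 * orbit_au / distance_pc

def lambda_over_d_mas(wavelength_m, aperture_m):
    """Diffraction scale lambda/D in milliarcseconds."""
    return wavelength_m / aperture_m * RAD_TO_MAS

def inside_iwa(orbit_au, distance_pc, wavelength_m, aperture_m, iwa_lambda_d=3.0):
    """True if the planet falls inside an assumed IWA of iwa_lambda_d * lambda/D."""
    iwa = iwa_lambda_d * lambda_over_d_mas(wavelength_m, aperture_m)
    return separation_mas(orbit_au, distance_pc) < iwa

unit = lambda_over_d_mas(550e-9, 2.4)
print(f"lambda/D at 550 nm for a 2.4 m mirror ~ {unit:.0f} mas")
for label, a_au in (("Sun-like star HZ", 1.0), ("M dwarf HZ", 0.1)):
    sep = separation_mas(a_au, 5.0)
    print(f"{label} at 5 pc: {sep:.0f} mas, hidden inside a 3 lambda/D IWA: "
          f"{inside_iwa(a_au, 5.0, 550e-9, 2.4)}")
```

Under these assumptions the Sun-like case clears the IWA while the M-dwarf case does not, which is the shrinking-HBZ problem described above.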

So all of this effectively created a new direct imaging mission, WFIRST AFTA. Unfortunately, the NRO mirror was not made for this sort of purpose. It is a Cassegrain design, a so-called “on axis” telescope with the focal plane in line with the primary mirror’s incoming light and the secondary mirror reflecting that light back through a hole in the primary to whatever science instruments are required. In WFIRST AFTA this would mainly be a spectrograph.

The coronagraph would have to sit at the focal plane and, along with the secondary mirror, would further obscure the light striking the primary. It would also need to be squeezed between the “spider” vanes that support the secondary mirror (these produce the spikes in the classic ‘Christmas tree star’ images we are all familiar with from common telescopes).

Two coronagraphs are under consideration that should achieve a contrast of 10⁻⁹, which is good enough to view Jupiter-sized planets. Every effort is being made to improve on this and to get down to a level where terrestrial planets can be viewed: difficult and expensive, but far from impossible. WFIRST has by far the biggest mirror of the options under consideration by NASA, and hence the greatest light intake and imaging range. It may also be possible to put the necessary equipment on board to allow it to use a starshade at a later date. The original WFIRST budget came in at $1.6 billion, but that was before NASA came under increasing political pressure over the JWST’s (huge) overspend.

An independent cost review suggested WFIRST would come in at over $2 billion. Understandably concerned about the kind of “mission creep” seen in JWST’s development, NASA put the WFIRST AFTA design on hold until the budgetary statement of 2017, with no new building to begin until after JWST’s launch. So whatever is eventually picked, 2023 will be the earliest launch date. Same old story, but limited budgets sometimes lead to innovation. In the meantime, NASA commissioned two “Probe”-class alternative backup concepts to be considered in the “light” of the budgetary statement.

Exoplanet Telescope Alternatives

The first of these is EXO-C. This consists of a 1.5 m “off axis” telescope (the primary mirror is angled so that the focal plane and secondary mirror sit to the side of the telescope and don’t obscure the primary, thus increasing its light-gathering ability). Such scopes raise potential imaging issues and so cost more to build. EXO-C has a coronagraph and a spectrograph away from the optical plane. The issue for this concept is which coronagraph to choose. There are many designs, tested over a decade or more, with current “high contrast imaging” (see above) reaching contrasts between 10⁻⁹ and 10⁻¹⁰. So EXO-C is relatively low risk and should, at a push, even be able to see some Earth or Super-Earth planets in the HBZs of some nearby stars, as well as lots of “Jupiters”.
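How much light does an on-axis design give up to its secondary mirror? For a circular primary of diameter D with a central obscuration whose diameter is a fraction ε of D, the clear area is (π/4) D² (1 − ε²). The Python sketch below uses the apertures mentioned in the text, but the 0.3 linear obscuration assumed for the on-axis designs is a hypothetical round number, not a published specification, and spider vanes are ignored.

```python
import math


def collecting_area_m2(diameter_m: float, obscuration: float = 0.0) -> float:
    """Clear collecting area of a circular primary with a central obscuration.

    `obscuration` is the linear ratio of the secondary-mirror shadow diameter
    to the primary diameter; an off-axis design has none. Spider vanes are ignored.
    """
    return math.pi / 4 * diameter_m ** 2 * (1 - obscuration ** 2)


# Apertures from the text; the 0.3 obscuration ratio is a hypothetical assumption.
designs = {
    "WFIRST AFTA (2.4 m, on-axis)": collecting_area_m2(2.4, 0.3),
    "EXO-C (1.5 m, off-axis)": collecting_area_m2(1.5),
    "EXO-S (1.1 m, on-axis)": collecting_area_m2(1.1, 0.3),
}
for name, area in designs.items():
    print(f"{name}: {area:.2f} m^2")
```

Under this assumption the 2.4 m mirror still collects more than twice the light of the 1.5 m off-axis design, which is why WFIRST’s larger mirror stays attractive despite the obstruction.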

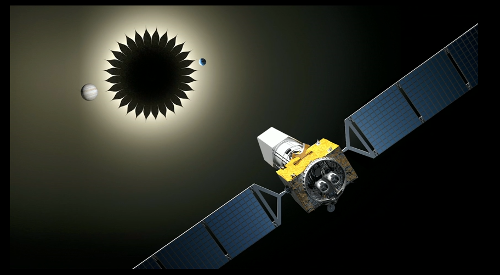

The other Probe mission, even more exciting, is EXO-S. This combines a self-propelled “on axis” 1.1 m telescope with a “starshade”. The starshade is a flower-like satellite (it even has petals); the choice of a flower shape rather than a round one reduces image-spoiling “Fresnel” diffraction from the starshade edges. The shade sits between the telescope and the star to be examined for planets. It casts a shadow in space, and the telescope propels itself into that shadow at the correct distance for observation (tens of thousands of kilometres).

Like a coronagraph, the starshade blocks the star’s light, but without the difficulty of squeezing an extra device into the telescope. The hard part is that telescope and shade need radio or laser communication to hold EXACT positioning throughout the telescopic “stare”, which requires tight formation flying. The telescope carries propellant for between 3 and 5 years. With several days needed to move into position for each target, this allows around 100 separate “stop and stare” observations. The starshade concept means two spacecraft instead of one, although both can be squeezed into one conventional launch vehicle and separated later in the mission.
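The “around 100” figure is simple arithmetic: mission lifetime divided by the time per retarget-and-observe cycle. This Python sketch assumes hypothetical timings of 8 days to move the formation into position and 3 days of staring per star; the real schedule would depend on target geometry and propellant use.

```python
DAYS_PER_YEAR = 365.25


def targets_observed(mission_years: float, slew_days: float, stare_days: float) -> int:
    """Number of complete stop-and-stare visits that fit in the mission lifetime."""
    cycle_days = slew_days + stare_days
    return int(mission_years * DAYS_PER_YEAR // cycle_days)


# Hypothetical timings: 8 days to reposition, 3 days of observation per target.
for years in (3, 5):
    print(f"{years}-year mission: {targets_observed(years, 8, 3)} visits")
```

With these assumptions a 3-year propellant supply gives about 100 visits and a 5-year supply stretches that to roughly 160.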

Image: The starshade concept in action. Credit: NASA/JPL.

The good news is that with a starshade the inner working angle depends on the distance between telescope and starshade rather than on the telescope itself. The price of this is that the further apart the two are, the more precise their placement must be. The distances involved depend on the size of the starshade: for EXO-S’s 35 m starshade, the separation is in excess of thirty-seven thousand kilometres. Despite its small mirror, EXO-S will be able to view and spectroscopically characterise terrestrial planets around suitable nearby stars, and Jupiter-sized planets considerably further out.
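Geometrically, the starshade’s IWA is just its radius R as seen from the telescope, R/z for separation z. Starshade designs are also commonly compared by their Fresnel number, R²/(λz), so holding that number fixed while enlarging the shade pushes z outward. This Python sketch uses the 35 m and 37,000 km figures from the text; the 550 nm wavelength is an assumption for illustration.

```python
import math

RAD_TO_MAS = 180 / math.pi * 3600 * 1000  # radians to milliarcseconds


def starshade_iwa_mas(diameter_m: float, separation_m: float) -> float:
    """Geometric inner working angle: the starshade radius seen from the telescope."""
    return (diameter_m / 2) / separation_m * RAD_TO_MAS


def fresnel_number(diameter_m: float, separation_m: float, wavelength_m: float) -> float:
    """Fresnel number R^2 / (lambda * z), a standard figure of merit for starshades."""
    return (diameter_m / 2) ** 2 / (wavelength_m * separation_m)


# 35 m shade and ~37,000 km separation from the text; 550 nm is an assumed wavelength.
d, z, lam = 35.0, 37_000e3, 550e-9
print(f"IWA ~ {starshade_iwa_mas(d, z):.0f} mas")
print(f"Fresnel number ~ {fresnel_number(d, z, lam):.1f}")
```

On these numbers the geometric IWA is close to 100 mas, independent of the 1.1 m telescope’s own λ/D.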

Achieving Space Interferometry

“Formation flying” of telescopes is an entirely new concept that hasn’t been tried before, so it is potentially riskier, especially as its development lags well behind that of coronagraph telescopes. If it works, though, it opens the gate to fantastic discoveries across a much wider area than EXO-S. This is just the beginning. If you can get two spacecraft to fly in formation, why not 3 or 30 or even more? In the recent review I wrote for Centauri Dreams on heterodyne interferometers, I described how 30 or so large telescopes could be linked up to deliver the resolution of a telescope with an aperture equal to the largest gap between the unit scopes of the interferometer (a diluted aperture). Adding scopes increases both the light intake (the brightness of the image) and the number of “baselines”, the gaps between constituent scopes in the array, which deliver detail across the diluted aperture of the interferometer.
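Two quick numbers show why more scopes help. N telescopes form N(N − 1)/2 distinct pairs, each sampling one baseline, and the finest detail scales roughly as λ/B for the longest baseline B. This Python sketch assumes a mid-infrared wavelength of 10 µm and a hypothetical 1 km array purely for comparison with a single 2.4 m mirror; it ignores the 1.22 factor usually quoted for a filled circular aperture.

```python
import math

RAD_TO_MAS = 180 / math.pi * 3600 * 1000  # radians to milliarcseconds


def baseline_count(n_telescopes: int) -> int:
    """Distinct telescope pairs, each of which samples one baseline."""
    return n_telescopes * (n_telescopes - 1) // 2


def resolution_mas(wavelength_m: float, longest_gap_m: float) -> float:
    """Approximate resolution lambda / B of a diluted aperture with longest baseline B."""
    return wavelength_m / longest_gap_m * RAD_TO_MAS


print(baseline_count(30))  # pairs in a 30-telescope array
# Hypothetical comparison at 10 microns: one 2.4 m mirror vs a 1 km diluted aperture.
print(f"{resolution_mas(10e-6, 2.4):.0f} mas vs {resolution_mas(10e-6, 1000):.1f} mas")
```

A 30-scope array gives 435 baselines, and stretching the longest one from metres to a kilometre improves resolution by a factor of several hundred.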

We’re in early days here, but this is heading in the direction of a space interferometer with resolution orders of magnitude greater than any New Worlds telescope. A terrestrial planet finder, yes, but more importantly, with a good spectrograph, a terrestrial planet characteriser interferometer: TPC-I. To actually “see” detail on an exoplanet would require hundreds of large space telescopes spread over hundreds of kilometres, so that’s one for Star Trek. Detailed atmospheric characterisation, however, is almost as good, and not so far in the future if EXO-S gets the go-ahead and the Planet Formation Imager evolves on the ground before migrating into space. All roads lead to space.
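The “hundreds of kilometres” scale can be checked by asking what baseline makes λ/B equal to a small fraction of an exoplanet’s disc. This Python sketch assumes a hypothetical Earth twin at 10 pc, imaged 10 resolution elements across at 550 nm, and uses the small-angle approximation.

```python
EARTH_DIAMETER_M = 1.2742e7
PARSEC_M = 3.0857e16


def baseline_to_resolve_m(diameter_m: float, distance_pc: float,
                          pixels_across: int, wavelength_m: float) -> float:
    """Longest baseline B for which lambda / B equals one 'pixel' on the planet's disc."""
    disc_angle_rad = diameter_m / (distance_pc * PARSEC_M)  # small-angle approximation
    pixel_angle_rad = disc_angle_rad / pixels_across
    return wavelength_m / pixel_angle_rad


# Hypothetical case: an Earth twin at 10 pc, 10 resolution elements across, at 550 nm.
b_km = baseline_to_resolve_m(EARTH_DIAMETER_M, 10, 10, 550e-9) / 1000
print(f"~{b_km:,.0f} km baseline")
```

Even this crude 10-pixel portrait needs a baseline of roughly 130 km, and longer wavelengths or finer detail push it further out.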

As an addendum, EXO-S has a yet-to-be-described backup that could best be seen as WFIRST AFTA-S. Here the starshade has the propulsion system, but the telescope is built around the NRO 2.4 m mirror, potentially making it the most potent of the three designs. Putting the drive system on the starshade, with a radio link to the telescope, is a concept even newer than the conventional EXO-S, but it is potentially feasible. We await a cost estimate in the final reports the design concept groups must submit.

In the meantime, various private ventures, such as the BoldlyGo Institute run by Jon Morse, formerly of NASA, are hoping to fund and launch a 1.8 m off-axis telescope with BOTH an internal coronagraph AND a starshade. Sadly, the two methods have been found not to work in combination, but a coronagraph telescope can observe stars while its starshade moves into position, increasing critical viewing time over a 3-year mission.

By way of comparison, coronagraphs have been used with increasing effectiveness on ground-based scopes such as Gemini South. Because of atmospheric interference, the best contrast achievable, even with one of the new ELTs being built, is expected to be around 10⁻⁹. Thanks to their huge light-gathering capacity, they too might just discover terrestrial planets around nearby stars, but probably not in the HBZ.

The future holds exciting developments, tantalisingly close. In the meantime, it is important to keep up the momentum of development. The two Probe design groups recognise that their ideas, whilst capable of exciting science as well as just “proof of concept”, fall a long way short of what could and should be done. The JWST, for all its overspend, will hopefully be a resounding success and act as a pathfinder for a large New Worlds telescope of 16 m or more that will start the exoplanet characterisation TPC-I will complete. Collapsible, segmented telescopes will be shown to fit into and work from available launch vehicles, such as the upcoming Space Launch System (SLS) or one of the new Falcon Heavy rockets. New materials such as silicon carbide will reduce telescope costs. The lessons learned from JWST will make such concepts economically viable and deliver ground-shaking findings.

How ironic it would be if we discovered life in another star system before we find it in our own!