Centauri Dreams

Imagining and Planning Interstellar Exploration

100 Year Starship Site Launches

You’ll want to bookmark the 100 Year Starship Initiative’s new site, which just came online. From the mission statement:

100 Year Starship will pursue national and global initiatives, and galvanize public and private leadership and grassroots support, to assure that human travel beyond our solar system and to another star can be a reality within the next century. 100 Year Starship will unreservedly dedicate itself to identifying and pushing the radical leaps in knowledge and technology needed to achieve interstellar flight while pioneering and transforming breakthrough applications to enhance the quality of life on earth. We will actively include the broadest swath of people in understanding, shaping, and implementing our mission.

And check here for news about the 2012 public symposium, which will be held in Houston from September 13-16. Quoting from that page:

This year, 2012, DARPA gave its stamp of approval to and seed funded —100 Year Starship (100YSS)—a private organization to achieve perhaps the most daring initiative ever in space exploration: human travel beyond our solar system to another star!

Meeting the challenge of 100YSS will be as or even more transformative to our global world as Sputnik or DARPA’s commercialization of the ARPA net that became the Internet. Make no mistake; this is not your grandfathers’ space program. 100YSS—An Inclusive, Audacious Journey Transforms Life Here on Earth and Beyond.”

Join us in Houston, September 13-16, 2012 at the 100YSS Public Symposium as the journey begins!

The Proxima Centauri Planet Hunt

Although we haven’t yet found any planets around Proxima Centauri, it would be a tremendous spur to our dreams of future exploration if one turned up in the habitable zone there. That would give us three potential targets within 4.3 light years, with Centauri A and B conceivably the home to interesting worlds of their own. And the issue we started to look at yesterday — whether Proxima Centauri is actually part of the Alpha Centauri system or merely passing through the neighborhood — has a bearing on the planet question, not only in terms of how it might affect the two primary stars, but also because it would tell us something about Proxima’s composition.

A Gravitationally Bound System

Greg Laughlin makes this case in the systemic post I referred to yesterday. It was Laughlin and Jeremy Wertheimer (UCSC) who used data from ESA’s Hipparcos mission to conclude that Proxima was indeed bound to Centauri A and B. Here I want to quote the conclusion of the duo’s paper on the matter, which takes us into some interesting ground indeed:

The availability of Hipparcos data has provided us with the ability to implement a significant improvement over previous studies of the α Cen system. Our results indicate that it is quite likely that Proxima Cen is gravitationally bound to the Cen A-B pair, thus suggesting that they formed together within the same birth aggregate and that the three stars have the same ages and metallicities. As future observations bring increased accuracy to the kinematic measurements, it will likely become more obvious that Proxima Cen is bound to the Cen A-B binary and that Proxima Cen is currently near the apastron of an eccentric orbit…

First of all, the idea of Proxima Centauri as part of a triple star system makes sense given Proxima’s small velocity relative to Centauri A and B (0.53 ± 0.14 km/s). The star lies 15,000 ± 700 AU from the binary pair [and note that I incorrectly posted this distance yesterday, though the error is now corrected to reflect these figures]. Laughlin and Wertheimer calculate that the likelihood of this configuration occurring by chance is less than 10⁻⁶ (one in a million), adding that “…based on this incredibly improbable arrangement it has been suspected that the stars constitute a bound triple system ever since Proxima’s discovery…”

Note too that Proxima Centauri would then have been born out of the same molecular cloud as its close neighbors, which would imply that all three have the same age and metallicity. Now that’s interesting. We know a lot about Centauri A and B, and in particular we know that both stars are richer in metals than the Sun, with recent work by Jeff Valenti and Debra Fischer indicating a metallicity of about 150 percent of the Sun’s, and this may be a low-ball estimate, given other recent work on the matter. The link between metals (elements heavier than hydrogen and helium) and planets is well established for gas giants and may well extend to small, rocky worlds, as we saw the other day in Falguni Suthar and Christopher McKay’s paper on habitable zones in elliptical galaxies. A metal-rich Proxima Centauri would boost its chances for planets.

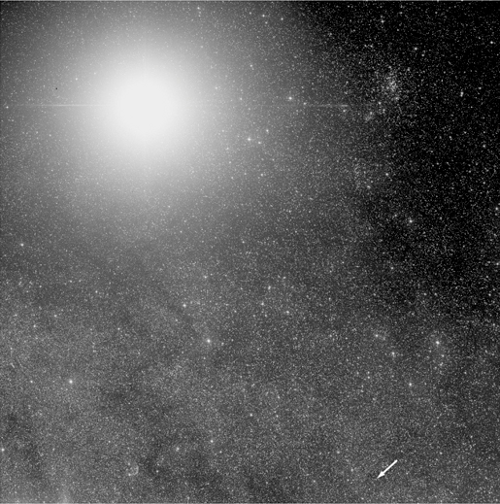

Image: Light takes only 4.22 years to reach us from Proxima Centauri. This small red star, captured in the center of the above image, is so faint that it was only discovered in 1915 and is visible only through a telescope. Recent research is revealing much about its composition and the likelihood of planets there. Credit & Copyright: David Malin, UK Schmidt Telescope, DSS, AAO.

If we thought Proxima were not bound to Centauri A and B, we’d have a problem, because as Laughlin noted on systemic, it’s tricky to figure out the metallicity of a solitary red dwarf:

Metallicities for red dwarf stars are notoriously difficult to determine. Low-mass red dwarfs are cool enough so that molecules such as titanium oxide, water, and carbon monoxide are able to form in the stellar atmospheres. The presence of molecules leads to a huge number of lines in the spectra, which destroys the ability to fix a continuum level, and makes abundance determinations very difficult.

But if you have a red dwarf within a multiple star system, you can use the metallicity of the more massive primary star(s) to infer the metallicity of the red dwarf, a method that has produced a metallicity calibration for red dwarfs that has so far proven useful. All of this means that if Proxima Centauri is indeed bound to Centauri A and B, its metallicity should be comparable to theirs. When Xavier Bonfils (Observatoire de Grenoble) and colleagues went to work on red dwarf metallicity in 2008, they examined 20 red dwarfs whose metallicity could be estimated in this way. Of those 20 stars, reports Laughlin, only five were more metal-rich than the Sun, and only one star, GL 324, proved to be as rich in metals as Proxima Centauri.

Proxima Planets: What We Can Exclude

From the standpoint of metals, then, we’d expect the Alpha Centauri stars to have the materials needed for the formation of rocky planets, but what have we observed around Proxima Centauri? The most recent work from Michael Endl (UT-Austin) and Martin Kürster (Max-Planck-Institut für Astronomie) draws on seven years of high-precision radial velocity data from the UVES spectrograph at the European Southern Observatory. These observations, made between March 2000 and March 2007, found that no planet of Neptune mass or above exists there out to about 1 AU from the star.

Let’s pause for a moment. These are radial velocity studies of a star whose planetary system, if one exists, has an unknown orientation. If there are planets here, we could be looking at the system from almost any angle, so any mass calculations have a lot of play in them. Consider a gas giant whose orbit we see nearly face-on: most of its star’s reflex motion lies in the plane of the sky, so it could produce a radial velocity signature roughly similar to that of a much smaller planet whose orbit we see edge-on. In other words, the radial velocity technique is insensitive to the reflex velocity in the plane of the sky, giving us only a lower limit to a planet’s mass (the quantity m sin i, where i is the inclination of the orbit).
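To make the mass ambiguity concrete, here is a minimal Python sketch of the standard radial velocity semi-amplitude formula. The stellar mass (0.12 solar masses) and the 10-day orbital period are illustrative assumptions, not values from Endl and Kürster; the point is only that a 10 Earth-mass planet seen nearly face-on (sin i = 0.1) and a 1 Earth-mass planet seen edge-on produce essentially the same signal.

```python
import math

G = 6.674e-11        # gravitational constant, m^3 kg^-1 s^-2
M_SUN = 1.989e30     # solar mass, kg
M_EARTH = 5.972e24   # Earth mass, kg
DAY = 86400.0        # seconds per day


def rv_semi_amplitude(m_planet, sin_i, period_days, m_star, ecc=0.0):
    """Stellar reflex semi-amplitude K in m/s for a single planet (masses in kg)."""
    period = period_days * DAY
    return ((2.0 * math.pi * G / period) ** (1.0 / 3.0)
            * m_planet * sin_i
            / (m_star + m_planet) ** (2.0 / 3.0)
            / math.sqrt(1.0 - ecc ** 2))


# Illustrative assumptions: a 0.12 solar-mass red dwarf and a 10-day circular orbit.
m_star = 0.12 * M_SUN
k_edge_on = rv_semi_amplitude(1.0 * M_EARTH, 1.0, 10.0, m_star)   # 1 Earth mass, i = 90 deg
k_face_on = rv_semi_amplitude(10.0 * M_EARTH, 0.1, 10.0, m_star)  # 10 Earth masses, i ~ 5.7 deg

print(f"1 Earth mass, edge-on:      K = {k_edge_on:.2f} m/s")
print(f"10 Earth masses, sin i=0.1: K = {k_face_on:.2f} m/s")
```

Both cases come out near 1.2 m/s. The data constrain only the product m sin i; breaking the degeneracy needs independent information, such as a transit or astrometry.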

All that is by way of saying that a planet larger than Neptune could conceivably still orbit Proxima Centauri, but the odds do not favor it. We also learn from Endl and Kürster that super-Earths larger than about 8.5 Earth masses can be excluded in orbits with periods of less than 100 days. As for the habitable zone of this star, thought to lie between 0.022 and 0.054 AU (orbital periods of 3.6 to 13.8 days), we can rule out super-Earths of 2-3 Earth masses in circular orbits. Here we pause again: the authors stress that their mass limits apply only to planets in circular orbits. Planets above these mass thresholds could still exist on eccentric orbits around this star.
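The conversion from habitable-zone distance to orbital period is Kepler’s third law. A minimal sketch, assuming a stellar mass of 0.11 solar masses (our assumption, not stated in the post; it is simply the value that reproduces the quoted periods) and neglecting the planet’s mass:

```python
import math


def period_days(a_au, m_star_msun):
    """Kepler's third law in solar units, P[yr]^2 = a[AU]^3 / M[Msun]; returns days."""
    return math.sqrt(a_au ** 3 / m_star_msun) * 365.25


M_PROXIMA = 0.11                   # assumed stellar mass in solar masses
hz_inner, hz_outer = 0.022, 0.054  # habitable zone edges in AU, from the text

print(f"inner edge: {period_days(hz_inner, M_PROXIMA):.1f} days")  # 3.6
print(f"outer edge: {period_days(hz_outer, M_PROXIMA):.1f} days")  # 13.8
```

Because the period scales as a^(3/2), a red dwarf’s habitable zone corresponds to orbits of days to weeks, so a radial velocity campaign can cover many orbits in a few observing seasons.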

So no planets yet around Proxima Centauri, and we’re beginning to rule out entire categories of planet here. We also have the possibility of smaller worlds in interesting orbits. The encouraging thing is that the radial velocity work on Proxima is getting better and better, and the authors see us closing in on planets of Earth size:

With the results from this paper we demonstrate that the discovery of m sin i ~ 1 M⊕ [one Earth mass] is within our grasp. Since sensitivity is a function of RV [radial velocity] precision, number of measurements and sampling, adding more points to the existing data string in a pseudo-random fashion, will allow us to improve the detection sensitivity over time.
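The dependence of sensitivity on precision and number of measurements can be illustrated with a common rule of thumb: for white noise and good phase coverage, the uncertainty on a fitted sinusoid’s amplitude falls roughly as σ√(2/N). This Python sketch is that rule of thumb, not the authors’ detection-limit method; the 4σ threshold, the 2 m/s and 1 m/s per-point precisions and the 1.2 m/s signal are illustrative assumptions.

```python
import math


def amplitude_uncertainty(sigma_rv, n_obs):
    """Approximate 1-sigma error on a fitted RV amplitude (known period, white noise,
    well-sampled phases): sigma_K ~ sigma_rv * sqrt(2 / N)."""
    return sigma_rv * math.sqrt(2.0 / n_obs)


def observations_needed(k_signal, sigma_rv, threshold=4.0):
    """Smallest N for which K / sigma_K reaches the chosen detection threshold."""
    return math.ceil(2.0 * (threshold * sigma_rv / k_signal) ** 2)


K = 1.2  # hypothetical signal amplitude, m/s
for sigma in (2.0, 1.0):  # hypothetical per-measurement precisions, m/s
    n = observations_needed(K, sigma)
    print(f"sigma = {sigma} m/s -> about {n} measurements "
          f"(sigma_K = {amplitude_uncertainty(sigma, n):.2f} m/s)")
```

Halving the per-point error cuts the required number of measurements by about a factor of four, which is why both instrument precision and the patient accumulation of data matter.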

On the broader question of M-dwarf planetary systems in general, Endl and Kürster note the ambiguity inherent in radial velocity studies in terms of mass. One way we can supplement our red dwarf studies is to find low-mass planets inside the habitable zone of such stars, where their close orbits make them more likely to transit the star than planets on wider orbits. Moreover, such transits are easier to detect: because an M dwarf is small, a given planet blocks a larger fraction of its disk, producing a greater ‘transit depth’ (the drop in brightness as the planet crosses the star) than it would around a larger star. As we discover transiting super-Earths in close orbits around M-dwarfs, then, we’ll be able to put them on a mass/radius diagram that will help us understand what’s happening around other such stars.
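Both advantages follow from simple geometry. A minimal sketch, assuming circular orbits and randomly oriented orbital planes: the transit depth is (Rp/R*)², and the chance that a given orbit transits is about R*/a. The red dwarf radius of 0.15 solar radii and the 0.04 AU orbit are illustrative assumptions, not figures from the paper.

```python
R_SUN_KM = 695_700.0       # solar radius
R_EARTH_KM = 6_371.0       # Earth radius
AU_KM = 149_597_870.7      # astronomical unit


def transit_depth(r_planet_km, r_star_km):
    """Fractional dimming while the planet's disk is fully in front of the star."""
    return (r_planet_km / r_star_km) ** 2


def transit_probability(r_star_km, a_km):
    """Geometric chance that a circular orbit of random orientation transits: ~R*/a."""
    return r_star_km / a_km


# Earth-sized planet at 1 AU from a Sun-like star.
sun_depth = transit_depth(R_EARTH_KM, R_SUN_KM)
sun_prob = transit_probability(R_SUN_KM, 1.0 * AU_KM)

# Earth-sized planet at 0.04 AU from an assumed 0.15 solar-radius red dwarf.
r_dwarf = 0.15 * R_SUN_KM
dwarf_depth = transit_depth(R_EARTH_KM, r_dwarf)
dwarf_prob = transit_probability(r_dwarf, 0.04 * AU_KM)

print(f"Sun-like star: depth {sun_depth * 1e6:.0f} ppm, probability {sun_prob:.2%}")
print(f"red dwarf:     depth {dwarf_depth * 1e6:.0f} ppm, probability {dwarf_prob:.2%}")
```

The same planet produces a transit roughly 44 times deeper around the small star, and its close-in orbit is several times more likely to be aligned for a transit.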

But we’re still not through with Proxima Centauri and its nearby companions. Just how old are these stars? More on that tomorrow, when I’ll consider the question in terms of what’s around us in the stellar neighborhood.

The paper is Endl and Kürster, “Toward Detection of Terrestrial Planets in the Habitable Zone of Our Closest Neighbor: Proxima Centauri,” Astronomy and Astrophysics, Volume 488, Issue 3, 2008, pp. 1149-1153 (abstract).

Proxima Centauri: Looking at the Nearest Star

Let’s start the week with a reminder about Debra Fischer’s work on Alpha Centauri, which we talked about last week. There are several ongoing efforts to monitor Centauri A and B for planets and, given the scrutiny the duo have received for the past several years, we should be getting close to learning whether there are rocky worlds in this system or not. Fischer’s continuing work at Cerro Tololo involves 20 nights of observing time that her grant money can’t cover. Private donations are the key — please check the Planetary Society’s donation page to help if you can.

While interest in the Alpha Centauri system is high, the small red dwarf component of that system has been getting relatively little press lately. But I don’t want to neglect Proxima Centauri, which as far as we know is the closest star to the Earth (some 4.218 light years away, compared to Centauri A and B’s 4.39 light years). From a planet around Centauri B, it would be hard to know that Proxima (also known as Alpha Centauri C) was even associated with the primary stars. It’s fully 15,000 AU out (about 400 times Pluto’s distance from the Sun), and only a check on its large parallax would reveal its true nature. The photo below makes this point as well as anything. Proxima Centauri is not a star that immediately grabs the attention.

Image: Proxima Centauri (shown by the arrow) in relation to Centauri A and B. The latter appear as a single bright object at upper left. Credit: European Southern Observatory.

In his book Alpha Centauri: The Nearest Star (New York: William Morrow & Company, 1976), Isaac Asimov imagined what Proxima Centauri would look like from a planet around either of the binary stars. He worked out that Proxima’s magnitude would be a fairly dim 3.7, making it a naked eye object but not a particularly noticeable one. Its proper motion would be about 1 second of arc per year. As always, Asimov writes entertainingly:

Neither its brightness nor its proper motion would attract much attention, and stargazers might look at the sky forever and not suspect this dim star of belonging to their own system. The only giveaway would come when astronomers decided to make a routine check of the parallaxes of the various visible stars in the sky. After a month or so, they would begin to get a hint of an extraordinarily large parallax and in the end they would measure one of 20 seconds of arc, which would be so much higher than that of any other star that they would at once suspect it of being a member of their own system.
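Asimov’s parallax argument is easy to check with the small-angle formula: an orbit of radius b seen from distance d subtends about 206,265 × b/d seconds of arc. A minimal sketch, assuming the observer’s planet orbits at 1 AU (an assumption; the result scales directly with that orbital radius) and using the 15,000 AU separation and the 4.39 light-year distance between Alpha Centauri and the Sun quoted in these posts:

```python
ARCSEC_PER_RADIAN = 206_264.806
AU_PER_LIGHT_YEAR = 63_241.077


def parallax_arcsec(distance_au, orbit_radius_au=1.0):
    """Annual parallax (small-angle approximation) for an observer whose planet
    orbits at orbit_radius_au, looking at a star distance_au away."""
    return ARCSEC_PER_RADIAN * orbit_radius_au / distance_au


proxima = parallax_arcsec(15_000.0)               # Proxima seen from A or B
sun = parallax_arcsec(4.39 * AU_PER_LIGHT_YEAR)   # our Sun seen from A or B

print(f"Proxima: {proxima:.1f} arcsec")
print(f"Sun:     {sun:.2f} arcsec")
```

With a 1 AU orbit the 15,000 AU separation gives roughly 14 arcseconds, the same order as Asimov’s 20 (his figure implies a closer separation or a wider orbit), and either value dwarfs the Sun’s parallax of under one arcsecond from that vantage point.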

When Asimov wrote this, it was generally accepted that Proxima Centauri was gravitationally bound to Centauri A and B, but that finding has come in for serious review in the years since. In 1993, Robert Matthews and Gerard Gilmore (Cambridge University) took a hard look at the kinematic data and found that Proxima Centauri was on the borderline for an object in a bound orbit around Centauri A and B. But a later study by Jeremy Wertheimer and Gregory Laughlin (UCSC) firmed up the case using data from the European Space Agency’s Hipparcos satellite and concluded that Proxima was indeed bound to the system and not just passing in the night.

All this can have big implications. To understand why, read what Laughlin says on his systemic site about potential planets around Centauri A and B:

At first glance, one expects that the Alpha Centauri planets will be very dry. The period of the AB binary pair is only 79 years. The orbital eccentricity, e=0.52, indicates that the stars come within 11.2 AU of each other at close approach. Only refractory materials such as silicates and metals would have been able to condense in the protoplanetary disks around Alpha Centauri A and B. To reach the water, you need to go out to the circumbinary disk that would have surrounded both stars. With only A and B present, there’s no clear mechanism for delivering water to the parched systems of terrestrial planets.
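The figures in that passage hang together through Kepler’s third law. A minimal sketch, assuming a combined mass of about 2.0 solar masses for Centauri A and B (an approximate value we supply, not one from the post):

```python
def semi_major_axis_au(period_yr, total_mass_msun):
    """Kepler's third law in solar units: a[AU] = (M[Msun] * P[yr]^2)^(1/3)."""
    return (total_mass_msun * period_yr ** 2) ** (1.0 / 3.0)


P_AB = 79.0   # orbital period of the A-B pair in years (from the quote)
E_AB = 0.52   # orbital eccentricity (from the quote)
M_AB = 2.0    # assumed combined mass of Centauri A and B, solar masses

a = semi_major_axis_au(P_AB, M_AB)
periastron = a * (1.0 - E_AB)   # closest approach
apastron = a * (1.0 + E_AB)     # widest separation

print(f"semi-major axis {a:.1f} AU, closest {periastron:.1f} AU, widest {apastron:.1f} AU")
```

The closest approach comes out near the quoted 11.2 AU; the small difference reflects the assumed mass.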

But now let’s assume that Proxima Centauri is indeed bound to this system in a million-year orbit. Given our age estimates of these stars, that would mean Proxima has orbited Centauri A and B roughly 6500 times. Its presence, note Laughlin and Wertheimer, introduces a mechanism for dislodging comets from outer orbits and pushing them into the inner system(s), allowing for the water they might otherwise lack. Even in terms of astrobiology, then, Proxima Centauri may play a role in making planets around Centauri A and B interesting, not to mention what it offers up in its own right.

In thinking about planets around Proxima itself, we might start with metallicity, a major factor in planet formation. Centauri A and B have higher levels of metallicity than the Sun, but calculating red dwarf metallicities is tricky, for reasons I want to get into tomorrow, when we’ll look more closely at Proxima Centauri as a possible home for planets. We’re learning more about this intriguing star all the time, and any planets there would play into our thinking with regard to future interstellar probes, something to keep in mind as tomorrow’s discussion of Proxima proceeds.

The Matthews and Gilmore paper is “Is Proxima really in orbit about Alpha CEN A/B?,” Monthly Notices of the Royal Astronomical Society Vol. 261, No. 2 (1993), pp. L5-L7 (abstract). The Wertheimer and Laughlin paper is “Are Proxima and Alpha Centauri Gravitationally Bound?,” The Astronomical Journal 132:1995-1997 (2006), available online.

Habitable Zones in Other Galaxies

We often speak of habitable zones around stars, most commonly referring to the zone in which a planet could retain liquid water on its surface. But the last ten years have also seen the growth of a much broader idea, the galactic habitable zone (GHZ). A new paper by Falguni Suthar and Christopher McKay (NASA Ames) digs into galactic habitable zones as they apply to elliptical galaxies, which are generally made up of older stars and marked by little star formation. Ellipticals have little gas and dust as compared to spirals like the Milky Way and are often found to have a large population of globular clusters. Are they also likely to have abundant planets?

The answer is yes, based on the authors’ comparison of metallicity — in nearby stars and stars with known planets — to star clusters in two elliptical galaxies. While many factors have been considered that could affect a galactic habitable zone, Suthar and McKay focus tightly on metallicity, with planet formation dependent upon the presence of elements heavier than hydrogen and helium. Results from Kepler have backed the notion that metallicity and planets go together, although it’s a correlation that has so far been established only for large planets like the gas giants that are the easiest for us to detect. We can’t be sure that the correlation goes all the way down to planets the size of the Earth, but the idea seems logical.

After all, we think Jupiter-class planets formed by the accretion of gases around a rocky core, and the formation of that core may be similar to the formation of Earth-sized planets. The authors think the correlation between stellar metallicity and planets will extend to smaller worlds, while recent exoplanet discoveries help us explore the relationship and extend our thinking to elliptical galaxies. The galaxies in question are M87 and M32, and the investigation invokes the history of star-forming materials: because heavy elements are produced inside stars, the concentration of metals depends critically on earlier generations of stars.

From the paper:

First generation stars would have low metallicity, whereas stars that form from material that has been through many generations of previous stars would have a high metallicity. Metallicity is important for planet formation because in the hot protoplanetary disc surrounding a star, the formation of protoplanetary bodies (small planetesimals) depends exclusively on high atomic weight elements since the protoplanetary masses are too small to retain hydrogen or helium. Earth-like planets are composed virtually entirely of compounds that are high in atomic number, Z (silicates) or bound to a high Z atom (H2O). Thus, it is reasonable that the metallicity should correlate with planet formation.

Metallicity can be expressed as [Fe/H], the logarithm of a star’s iron-to-hydrogen abundance ratio relative to the Sun’s (so the Sun has [Fe/H] = 0). The authors use this as their indicator, obtaining its value for a variety of nearby stars including many with known planets. Interestingly enough, the metallicity of exoplanet host stars as shown by [Fe/H] peaks at a value well above that of the Sun, leading the authors to comment “…the Sun may be a typical star, but it is not a typical planet-hosting star… It may be that we are lucky to be here.” The metallicity distribution is then extended to gauge habitability in the two ellipticals.
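Because [Fe/H] is logarithmic, modest-looking values hide sizeable abundance differences. A minimal sketch of the definition; the inputs are illustrative multiples of the solar iron-to-hydrogen ratio, including the roughly 150 percent figure cited for Centauri A and B:

```python
import math


def fe_h(fe_to_h_star, fe_to_h_sun):
    """[Fe/H] in dex: log10 of the star's Fe/H number ratio minus the Sun's."""
    return math.log10(fe_to_h_star) - math.log10(fe_to_h_sun)


# Express the star's iron abundance as a multiple of the solar ratio (Sun = 1.0).
for multiple in (0.5, 1.0, 1.5, 2.0):
    print(f"{multiple:.1f} x solar -> [Fe/H] = {fe_h(multiple, 1.0):+.2f}")
```

So 150 percent of solar corresponds to [Fe/H] of about +0.18, a star at +0.3 has roughly twice the Sun’s iron abundance, and one at -0.3 roughly half.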

Why these two galaxies? M87 is an active galaxy: it hosts an active galactic nucleus (AGN), thought to be powered by a supermassive black hole, along with two relativistic jets and a wide variety of emissions from the accretion disk. M32 is a compact dwarf elliptical, a low-luminosity satellite of M31, the Andromeda galaxy. Here we have a stellar population that is younger and more metal-rich at the core than M87’s. The two are markedly different, giving us the chance to make comparisons between active and non-active galaxies.

Image: A composite of visible (or optical), radio, and X-ray data of the giant elliptical galaxy, M87. M87 lies at a distance of 60 million light years and is the largest galaxy in the Virgo cluster of galaxies. Bright jets moving at close to the speed of light are seen at all wavelengths coming from the massive black hole at the center of the galaxy. It has also been identified with the strong radio source, Virgo A, and is a powerful source of X-rays as it resides near the center of a hot, X-ray emitting cloud that extends over much of the Virgo cluster. The extended radio emission consists of plumes of fast-moving gas from the jets rising into the X-ray emitting cluster medium. Credit: X-ray: NASA/CXC/CfA/W. Forman et al.; Radio: NRAO/AUI/NSF/W. Cotton; Optical: NASA/ESA/Hubble Heritage Team (STScI/AURA), and R. Gendler.

So what do we get in terms of metallicity distribution in these galaxies? The outer layers of M87, from 10 to 15 kpc from the galactic center, show metallicity consistent with planet formation. In fact, this region of M87 is shown to be more favorable for planet formation than the distribution of nearby stars. Note that any habitable region here must lie well away from the jet, whose surroundings would have their star-making materials blown outward and would be bathed in radiation.

The younger M32 galaxy has metallicity conditions somewhat less favorable than nearby stars, but still shows a large fraction of stars with Sun-like metallicity values. The distributions the authors are working with are based not on individual stars but on star clusters, with the assumption that the metallicity values are accurate when thus extended. Overall, the older M87 is likely to be rich in planetary systems but both galaxies should support habitable zones:

We have compared the metallicity distribution of nearby stars that have planets to the metallicity distribution of outer layers of elliptical galaxies, M87 and M32. From this comparison, we conclude that the stars in these elliptical galaxies are likely to have planetary systems and could be expected to have the same percentage of Earth-like habitable planets as those in the neighbourhood of the Sun.

The only other paper I know about that studied galactic habitable zones in other galaxies worked specifically on barred galaxies, and to my knowledge this is the first time the concept has been extended to ellipticals. It would be interesting to see the GHZ studied more closely in terms of radiation, because Suthar and McKay are concerned only with planets that would support microbial life, which might not be extinguished by the same radiation that would destroy more complex life-forms. For them, metals leading to planet formation are the story. In fact, note this comment:

Complex life forms are sensitive to ionizing radiation and changes in atmospheric chemistry that might result. However, microbial life forms, e.g. Deinococcus radiodurans, can withstand high doses of radiation and are more flexible in terms of atmospheric composition. Furthermore, microbial life in subsurface environments would be effectively shielded from space radiation. Thus, while a high level of radiation from nearby supernovae may be inimical to complex life, it would not extinguish microbial life.

We’re early in the study of galactic habitable zones, but other factors like nearby supernovae, gamma ray bursts (GRBs), encounters with nearby stars and gravitational perturbations all play into the habitability picture when we start going beyond the microbial stage, studies it will be fascinating to see applied to types of galaxy other than our own.

The paper is Suthar and McKay, “The Galactic Habitable Zone in Elliptical Galaxies,” International Journal of Astrobiology, published online 16 February 2012 (abstract). Thanks to Andrew Tribick for the pointer to this one.

Closing in on Alpha Centauri

Alpha Centauri is irresistible, a bright beacon in the southern skies that captures the imagination because it is our closest interstellar target. If we learn there are no planets in the habitable zones around Centauri A and B, we then have to look further afield, where the next candidate is Barnard’s Star, at 5.9 light years. Centauri A and B are far enough away at 4.3 light years; that next stretch adds a full 1.6 light years, and takes us to a red dwarf that may or may not have planets. Still further out are Tau Ceti (11.88 light years) with its problematic cometary cloud, and Epsilon Eridani (10.48 light years), a young system, though one thought to have at least one planet.

A warm and cozy planet around the K-class Centauri B would be just the ticket, and the planet hunt continues. One thing we’ve learned in the past decade is that neither Centauri A nor B is orbited by a gas giant; planets of this size should have shown up in the data by now. We’ve also learned that stable orbits reach out maybe 2 AU from either star. Remember that while Centauri A and B are separated by about 36 AU at their widest point, they close to within 11 AU, disrupting outer orbits, as computer simulations have demonstrated. We should expect planets, if they exist, to be no further out than the main asteroid belt in our own system.

Helping the Centauri Planet Hunt

Debra Fischer (Yale University) has been working on the Alpha Centauri problem at Cerro Tololo (Chile) in addition to her efforts at improving instrument sensitivity for planet hunting at the Keck and Lick observatories. The goal is to reach the precision needed to turn up planets the size of the Earth with radial velocity methods. If we’re going to get a Centauri detection, the odds favor Centauri B: A does not seem to be as stable as B, which is more likely to be the first to yield what Fischer calls the ‘tiny whisper’ that would flag an Earth-like world. Usefully, the orbital plane of these stars is inclined 79 degrees to the plane of the sky, so planets in this system, assuming they share this tilt, should produce reflex motion directed mostly along our line of sight.
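How much does a 79-degree inclination cost? Radial velocity yields m sin i, so a minimal Python check, assuming (as the text does) that planets share the binary’s inclination, shows how close the measured minimum mass comes to the true mass:

```python
import math

INCLINATION_DEG = 79.0  # inclination of the A-B orbit (from the text); planets assumed coplanar

sin_i = math.sin(math.radians(INCLINATION_DEG))
print(f"sin i = {sin_i:.3f}")
print(f"m sin i captures {sin_i:.1%} of the true planetary mass")
print(f"true mass = (m sin i) x {1.0 / sin_i:.3f}")
```

At this tilt the radial velocity minimum mass would understate the true mass by only about 2 percent.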

Image: The view from the Cerro Tololo Inter-American Observatory, where Debra Fischer’s work continues. Credit: San Francisco State University.

Radial velocity methods, in other words, should work here if we can attain sufficient sensitivity. The detection effort calls for telescope time at the Cerro Tololo Inter-American Observatory this spring and summer, and The Planetary Society is campaigning to raise money to support the effort. What Fischer needs is 20 nights of observing time, but the team’s NASA and NSF grants cannot be used to pay for telescope time, which at Cerro Tololo runs to $1650 per night. A total of $33,000 will do it, then, money the community should be able to raise. Have a look at the Planetary Society’s donation page and let’s see if we can’t make this happen.

Anyone involved with The Planetary Society is probably already aware of Fischer’s work with astronomer and Tau Zero practitioner Geoff Marcy (UC-Berkeley) on FINDS Exo-Earths (Fiber-optic Improved Next generation Doppler Search for Exo-Earths). The collaboration has resulted in a high-end optical system installed on the 3-meter Lick Observatory telescope and is now feeding the FINDS 2 effort to provide advanced optics for the Keck Observatory in Hawaii. Marcy and Fischer are working with a fiber optics array that adjusts light entering the telescope’s spectrometer and an adaptive optics system that offers the best signal to noise ratio.

FINDS worked out well at the Lick Observatory, improving Doppler velocity precision from the pre-existing 5 meters per second to the 1 meter per second range, allowing us to detect smaller planets. Fischer and Marcy are hopeful of attaining precisions down to 0.5 meters per second with their work at Keck, which should get us into the range of Earth-sized planets. FINDS 2 will then be used with Keck to provide follow-up data about planets found by the Kepler mission, ruling out false positives in the ongoing hunt for planets like our own. The work on FINDS has led directly into the commissioning of a new spectrometer at Cerro Tololo.
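It helps to see how small these velocity shifts are. To first order the Doppler effect shifts a spectral line by Δλ/λ = v/c. A minimal sketch; the 550 nm reference wavelength is an arbitrary assumption for illustration:

```python
C = 299_792_458.0       # speed of light, m/s
WAVELENGTH_NM = 550.0   # assumed reference wavelength (visible light)


def fractional_shift(v_m_s):
    """First-order (non-relativistic) Doppler shift: delta_lambda / lambda = v / c."""
    return v_m_s / C


for v in (5.0, 1.0, 0.5):  # precisions mentioned in the text, m/s
    frac = fractional_shift(v)
    shift_fm = frac * WAVELENGTH_NM * 1e6  # 1 nm = 1e6 femtometers
    print(f"{v:>4} m/s -> delta_lambda/lambda = {frac:.2e}, shift at 550 nm = {shift_fm:.2f} fm")
```

A 1 m/s precision means tracking line positions to about three parts in a billion, a couple of femtometers at visible wavelengths.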

The Cerro Tololo instrument is now tuned up and perfectly positioned for the study of Centauri A and B. The system is a valuable target whether or not we find a habitable world (or even two) there. We don’t know whether a rocky world around Centauri A or B would have oceans, because the mechanisms for delivering water to inner planets in a binary system are unknown. What Alpha Centauri provides on our doorstep is a look at planet formation in a close binary system. Recall that as many as half of all stars are in binary pairs, so these are questions with a profound relevance to our understanding of the galactic distribution of planets.

What we find around Alpha Centauri may also drive future space research. We’re gaining good information about the statistical spread of planets in the galaxy through the Kepler mission, but the logical follow-on is a mission to investigate the closest stars to learn whether habitable planets are to be found within 100 light years, and ultimately to gather spectroscopic data about their atmospheres. The discovery of an interesting world 4.3 light years away would encourage these plans and, in the long term, energize the idea of interstellar probes. But the near term is now, and we may have results on Centauri A and B in short order. Please join in the effort to fund Debra Fischer and team as the Alpha Centauri hunt continues.

Neutrino Communications: An Interstellar Future?

The news that a message has been sent using a beam of neutrinos awakened a flood of memories. Back in the late 1970s I was involved with the Society for Amateur Radio Astronomers, mostly as an interested onlooker rather than as an active equipment builder. Through SARA’s journal I learned about Cosmic Search, a magazine that ran from 1979 through 1982 specializing in SETI and related issues. I acquired the entire set, and went through all 13 issues again and again. I was writing sporadically about SETI then for Glenn Hauser’s Review of International Broadcasting and later, for the SARA journal itself.

Cosmic Search is a wonderful SETI resource despite its age, and the recent neutrino news out of Fermilab took me right back to a piece in its third issue by Jay Pasachoff and Marc Kutner on the question of using neutrinos for interstellar communications. Neutrinos are hard to manipulate because they hardly ever interact with other matter. On average, neutrinos can penetrate four light years of lead before being stopped, so detecting them requires snaring a tiny fraction out of a vast number of incoming neutrinos. Pasachoff and Kutner noted that this was how Frederick Reines and Clyde Cowan, Jr. detected antineutrinos in 1956, using a stream of particles emerging from the Savannah River reactor.

The Problem of Detection

In his work at Brookhaven National Laboratory, Raymond Davis, Jr. was using a 400,000-liter tank of perchloroethylene to detect solar neutrinos, and that’s an interesting story in itself. The tank had to be shielded from other particles that could cause reactions, and thus it was buried underground in a gold mine in South Dakota, where Davis was getting a neutrino interaction about once every six days out of the trillions of neutrinos passing through the tank. We’ve had a number of other neutrino detectors since, from the Sudbury Neutrino Observatory in Ontario to the Super Kamiokande experiments near the city of Hida, Japan, and MINERvA (Main Injector Experiment for ν-A), the detector used in the Fermilab communications experiment.

The point is, these are major installations. Sudbury, for example, involves 1000 tonnes of heavy water contained in an acrylic vessel some 6 meters in radius, the detector being surrounded by normal water and some 9600 photomultiplier tubes mounted on the apparatus’ geodesic sphere. Super Kamiokande is 1000 meters underground in a mine, involving a cylindrical stainless steel tank 41 meters tall and almost 40 meters in diameter, containing 50,000 tons of water. You get the idea: Neutrino detectors are serious business requiring many tons of matter, and even with the advantages of these huge installations, our detection methods are still relatively insensitive.

Image: Scientists used Fermilab’s MINERvA neutrino detector to decode a message in a neutrino beam. Credit: Fermilab.

But Pasachoff and Kutner had an eye on neutrino possibilities for SETI detection. The idea resonates when we consider that our terrestrial civilization is already growing darker in many frequency bands as we turn to cable television and other non-broadcast technologies. We may have just lived through a lively century of radio and television broadcasting, but 100 years is a vanishingly short window in the development of a technological civilization. Hence the growing interest in optical SETI and other ways of detecting signs of an advanced civilization, one that may be going about its business without necessarily building beacons at obvious wavelengths for us to investigate.

Neutrinos might fit the bill as a communications tool of the future. From the Cosmic Search article:

Much discussion of SETI has been taken up with finding a suitable frequency for radio communication. Interesting arguments have been advanced for 21 centimeters, the water hole, and other wavelengths. It is hard to reason satisfactorily on this subject; only the detection of a signal will tell us whether or not we are right. Neutrino detection schemes, on the other hand, are broad band, that is, the apparatus is sensitive to neutrinos of a wide energy range. The fact that neutrinos pass through the earth would also be an advantage, because detectors would be omnidirectional. Thus, the whole sky can be covered by a single detector. It is perhaps reasonable to search for messages from extraterrestrial civilizations by looking for the neutrinos they are transmitting, and then switch to electromagnetic means for further conversations.

The First Message Using a Neutrino Beam

Making this possible will require advances in our ability to detect neutrinos, and the task is clearly a tricky one. The recent neutrino message at Fermilab, created by researchers from North Carolina State University and the University of Rochester, is a case in point. Fermilab's NuMI beam (Neutrinos at the Main Injector) fired pulses at MINERvA, a 170-ton detector in a cavern some 100 meters underground. The team had encoded the word 'neutrino' into binary form, with the presence of a pulse standing for a '1' and the absence of a pulse standing for a '0'.
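The on-off keying described above can be sketched in a few lines of Python. This is an illustration only: it assumes plain 8-bit ASCII, which turns 'neutrino' into 64 pulse slots, whereas the team's actual message ran to 25 pulses, so their encoding was evidently more compact. The helper names are hypothetical.

```python
def to_pulse_schedule(message: str) -> list[int]:
    """On-off keying: 1 means fire a beam pulse in that slot, 0 means skip it.

    Plain 8-bit ASCII is an illustrative assumption, not the team's encoding.
    """
    return [int(bit) for byte in message.encode("ascii") for bit in f"{byte:08b}"]


def from_pulse_schedule(slots: list[int]) -> str:
    """Invert the mapping: regroup slots into 8-bit bytes and decode as ASCII."""
    chunks = [slots[i:i + 8] for i in range(0, len(slots), 8)]
    return bytes(int("".join(map(str, chunk)), 2) for chunk in chunks).decode("ascii")


schedule = to_pulse_schedule("neutrino")
print(len(schedule))                  # 64 slots
print(schedule[:8])                   # [0, 1, 1, 0, 1, 1, 1, 0], the letter 'n'
print(from_pulse_schedule(schedule))  # neutrino
```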

The 25-pulse message was repeated 3454 times over a span of 142 minutes, for a transmission rate of 0.1 bits per second with an error rate of 1 percent. Of the trillions of neutrinos in the beam, an average of just 0.81 were detected for each pulse, but that was enough to deliver the message. Fermilab's NuMI neutrino beam and the MINERvA detector have thus demonstrated digital communications using neutrinos, pushing the signal through several hundred meters of rock. It's also clear that neutrino communications are in their infancy.
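Why repeat the message so many times? If detections per pulse follow Poisson statistics with the quoted mean of 0.81, a pulse that was actually sent still produces zero detected events with probability exp(−0.81), or about 44 percent. The Python sketch below, which assumes Poisson counts and no background events in empty slots (both simplifications, not details from the paper), shows how summing several repeats of the same bit drives that miss rate down.

```python
import math

MEAN_EVENTS_PER_PULSE = 0.81  # average detections per pulse, from the text


def miss_probability(repeats: int, mean: float = MEAN_EVENTS_PER_PULSE) -> float:
    """Chance that a transmitted '1' yields zero detections across all repeats.

    Assumes Poisson counts, so the total over `repeats` pulses is
    Poisson(repeats * mean) and P(0) = exp(-repeats * mean). Empty slots are
    assumed to produce no background events, so a missed '1' is the only error.
    """
    return math.exp(-repeats * mean)


for n in range(1, 8):
    print(f"{n} repeat(s): P(miss a '1') = {miss_probability(n):.4f}")
```

Under these idealized assumptions, five or six repeats per bit bring the miss rate down to around 1 percent; real backgrounds and detector efficiencies would shift these numbers.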

From the paper on the Fermilab work:

…long-distance communication using neutrinos will favor detectors optimized for identifying interactions in a larger mass of target material than is visible to MINERvA and beams that are more intense and with higher energy neutrinos than NuMI because the beam becomes narrower and the neutrino interaction rate increases with neutrino energy. Of particular interest are the largest detectors, e.g., IceCube, that uses the Antarctic icepack to detect events, along with muon storage rings to produce directed neutrino beams.
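The paper's point about energy can be turned into a rough scaling rule. The sketch below assumes, as textbook approximations rather than figures from the paper, that the interaction cross-section grows linearly with neutrino energy and that the beam's solid angle shrinks as 1/E², so the on-axis event rate per neutrino grows roughly as E³. It also holds the total number of neutrinos fixed, which a real accelerator at fixed beam power would not.

```python
def relative_event_rate(energy_ratio: float) -> float:
    """Rough gain in on-axis event rate when neutrino energy is scaled by energy_ratio.

    Assumptions (illustrative, not from the Fermilab paper):
      * cross-section proportional to energy      -> factor energy_ratio
      * beam solid angle proportional to 1/E**2   -> on-axis flux factor energy_ratio**2
      * same total number of neutrinos in the beam
    """
    cross_section_gain = energy_ratio
    focusing_gain = energy_ratio ** 2
    return cross_section_gain * focusing_gain


for ratio in (2.0, 10.0, 100.0):
    print(f"energy x{ratio:g}: event rate x{relative_event_rate(ratio):,.0f}")
```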

Thinking about future applications, I asked Daniel Stancil (NCSU), lead author of the paper on this work, about the possibilities for communications in space. Stancil said that such systems were decades away at the earliest and noted the problem of detector size — you couldn’t pack a neutrino detector into any reasonably sized spacecraft, for example. But get to a larger scale and more things become possible. Stancil added “Communication to another planet or moon may be more feasible, if local material could be used to make the detector, e.g., water or ice on Europa.”

A Neutrino-Enabled SETI

Still pondering the implications of the first beamed neutrino message, I returned to Pasachoff and Kutner, who also looked ahead to improvements in the technology in their 1979 article. What kind of detector, they asked, would be needed to match the rate Raymond Davis, Jr. was getting from solar neutrinos (one interaction every six days) with a signal sent across interstellar distances? The authors calculated that a 1 trillion electron volt proton beam would demand a detector ten times the mass of the Earth if located at the distance of Tau Ceti (11.88 light years). That's one vast detector, but higher proton beam energies can reduce the required detector mass dramatically. I wrote to Dr. Pasachoff yesterday to ask for a comment on the resurgence of his interstellar neutrino thinking. His response:

We are such novices in communication, with even radio communications not much different from 100 years old, as we learned from the Titanic’s difficulties with wireless in 1912. Now that we have taken baby steps with neutrino communication, and checked neutrino oscillations between distant sites on Earth, it is time to think eons into the future when we can imagine that the advantages of narrow-beam neutrinos overwhelm the disadvantages of generating them. As Yogi Berra, Yankee catcher of my youth, is supposed to have said, “Prediction is hard, especially about the future.” Still, neutrino beams may already be established in interstellar conversations. I once examined Raymond Davis’s solar-neutrino records to see if a signal was embedded; though I didn’t find one, who knows when our Earth may pass through some random neutrino message being beamed from one star to another–or from a star to an interstellar spaceship.
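Pasachoff and Kutner's Tau Ceti estimate can be rescaled to other targets. Here is a minimal Python sketch, assuming the beam spreads with a fixed angle so that the detector mass needed for a fixed event rate grows as the square of the distance, and using 4.37 light years for Alpha Centauri (a value not given in the article).

```python
TAU_CETI_LY = 11.88
MASS_AT_TAU_CETI = 10.0  # Earth masses, 1 TeV proton beam (Pasachoff and Kutner)


def required_detector_mass(distance_ly: float) -> float:
    """Rescale the Tau Ceti estimate to another distance.

    Assumes a fixed beam divergence, so the beam's footprint, and with it the
    detector mass needed for the same event rate, grows as distance squared.
    """
    return MASS_AT_TAU_CETI * (distance_ly / TAU_CETI_LY) ** 2


for name, distance in [("Alpha Centauri", 4.37), ("Tau Ceti", 11.88), ("40 ly", 40.0)]:
    print(f"{name:>14}: ~{required_detector_mass(distance):.1f} Earth masses")
```

Distance alone does little to tame the problem: even at Alpha Centauri this assumption still calls for more than an Earth mass of detector, which is why the beam-energy improvements the authors point to matter so much.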

Neutrino communications, as Pasachoff and Kutner remarked in their Cosmic Search article, have lagged radio communications by about 100 years, and we can look forward to better neutrino methods driven by near-term applications such as communicating with submerged submarines, a tricky task with current technologies. From a SETI perspective, reception of a strong modulated neutrino signal would flag an advanced civilization. The prospect the authors suggest, of an initial neutrino detection followed by a dialogue developed through electromagnetic signals, is one that continues to resonate.

The Pasachoff and Kutner paper is "Neutrinos for Interstellar Communication," Cosmic Search Vol. 1, No. 3 (Summer, 1979), available online. The Fermilab work is described in Stancil et al., "Demonstration of Communication using Neutrinos," Modern Physics Letters A 27 (2012) 1250077 (preprint).