Centauri Dreams

Imagining and Planning Interstellar Exploration

Updating the Gravitational Wave Hunt

The Laser Interferometer Space Antenna mission (LISA), slated for launch later this decade, will test one of Einstein’s key predictions: that gravitational waves should emanate from exotic objects like black holes. Detectors like the Laser Interferometer Gravitational Wave Observatory (LIGO) have operated on Earth’s surface, where they are subject to seismic noise that disturbs the observations in some of the key frequency ranges. The hope is that the space-based LISA will be able to go after the low-frequency gravitational wave spectrum to detect such things as the collision of black holes, the merger of galaxies or the interactions of neutron star binary systems.

The good news out of the European Space Agency is that the LISA Pathfinder precursor mission, planned for a 2014 launch, is showing the accuracy needed to demonstrate what the more sophisticated LISA mission should be able to do. LISA Pathfinder is a small spacecraft unable to make a direct detection of gravitational waves, but if all goes well it should be able to test whether objects moving through space without external influences trace out a slight curve — a geodesic — because of the effect of gravity upon spacetime. That would take us one step closer to the actual detection of gravitational waves using even more sensitive technologies in the full LISA mission.

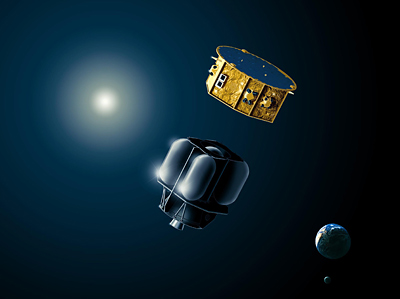

Image: LISA Pathfinder will pave the way for the LISA mission by testing in flight the very concept of gravitational wave detection. It will put two test masses in near-perfect gravitational free-fall and control and measure their motion with unprecedented accuracy. This is achieved through state-of-the-art technology comprising the inertial sensors, the laser metrology system, the drag-free control system and an ultra-precise micro-propulsion system. Credit: Astrium.

The early results on LISA Pathfinder are promising. Detecting ripples in spacetime requires measurements with an accuracy of 10 billionths of a degree, yet thermal vacuum tests are revealing that the spacecraft’s subsystems can deliver this kind of performance. Under space-like test conditions with an almost complete spacecraft, LISA Pathfinder has been demonstrating 2-picometer accuracy (a picometer is about a hundredth the size of an atom), and expectations are that once in space the observatory will be able to do even better.

Aboard the spacecraft will be two test masses, each a cube 4.6 cm on a side, that will float within two chambers 35 cm apart in the core of the observatory. Their relative positions are to be tracked by a laser measuring system, and any gravitational disturbances should cause minuscule changes in those positions. Bengt Johlander, payload engineer for LISA Pathfinder, describes the system:

“We are seeking to keep the geodesic motion of our test masses as pure as possible. The masses are free to move – and if our experiment is set up correctly they should move together in concert – while the surrounding spacecraft is guided by their motion to follow in the same direction. It is impossible to have zero perturbations, but we have fixed a precise budget for disturbances we will stay within.”

The perturbations that must be avoided are vanishingly small, but any of them could compromise the experiment. They include solar radiation pressure, slight magnetic influences or vibrations from the body of the spacecraft and, remarkably, the internal gravitational pull of the spacecraft structure itself. LISA Pathfinder is to operate 1.5 million kilometers from the Earth at the L1 Lagrange point. The success of its systems paves the way for the later LISA mission, which will involve a trio of spacecraft flying 5 million kilometers apart while linked by laser beams. ESA offers a useful backgrounder on LISA Pathfinder and the subsequent LISA mission. And be aware of this graduate-level Web-based course on gravitational waves that Caltech makes available.
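Johlander’s description of a spacecraft "guided by" the motion of its test masses is the drag-free principle: the test mass floats freely, and thrusters keep the spacecraft centered around it so that outside pushes act on the craft rather than on the mass. The toy one-dimensional simulation here is a minimal sketch of that idea, not ESA’s actual control system. The proportional-derivative control law, the gains, the time step and the constant disturbance (standing in for something like solar radiation pressure) are all hypothetical choices made for illustration.

```python
# Toy one-dimensional drag-free loop: a sketch of the principle only.
# Gains, time step and disturbance level are hypothetical illustration values.

def simulate_drag_free(kp, kd, steps=20_000, dt=0.01, disturbance=1e-6):
    """Return the final offset (test mass minus spacecraft), in metres.

    kp (1/s^2) and kd (1/s) are gains of a proportional-derivative law that
    turns the measured offset into thruster acceleration. `disturbance`
    (m/s^2) is a constant push on the spacecraft only, standing in for
    effects such as solar radiation pressure.
    """
    mass_pos = mass_vel = 0.0    # free-falling test mass: no force acts on it
    craft_pos = craft_vel = 0.0  # spacecraft housing that surrounds the mass
    for _ in range(steps):
        # The sensor measures the mass's position and velocity relative to the craft.
        gap = mass_pos - craft_pos
        gap_rate = mass_vel - craft_vel
        # Thrusters accelerate the craft toward the mass to null the offset.
        thrust_acc = kp * gap + kd * gap_rate
        craft_acc = disturbance + thrust_acc
        # Semi-implicit (symplectic) Euler step for the spacecraft.
        craft_vel += craft_acc * dt
        craft_pos += craft_vel * dt
        # The test mass just coasts along its unperturbed path.
        mass_pos += mass_vel * dt
    return mass_pos - craft_pos


controlled = simulate_drag_free(kp=4.0, kd=4.0)    # critically damped gains
uncontrolled = simulate_drag_free(kp=0.0, kd=0.0)  # thrusters switched off
print(f"with control:    {controlled:.3e} m")
print(f"without control: {uncontrolled:.3e} m")
```

With control on, the offset settles at the small constant value -disturbance/kp, a known steady-state limitation of pure proportional-derivative control (an integral term would remove it). With control off, the spacecraft simply drifts away from the mass, by about 2 cm after 200 simulated seconds here.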

Can Project Orion Be Re-Born?

Project Orion keeps surfacing in propulsion literature and making the occasional appearance on the broader Internet. A case in point is a vigorous defense of Orion-style engineering by Gary Michael Church on the Lifeboat Foundation blog. Church is rightly taken with the idea of propelling payloads massing thousands of tons around the Solar System, but he’s also more than mindful of the realities, both political and economic, that have kept Orion-class missions in the realm of the theoretical. It was, after all, the nuclear test ban treaties of the Cold War era that brought the original project to a close, and anti-nuclear sentiment remains strong in the public today.

But the Orion idea won’t go away because it is so tantalizing. Church runs through the relevant information, much of it familiar to old Centauri Dreams hands. Freeman Dyson and Ted Taylor were working on a concept in which a large percentage of the energy of a nuclear explosion — these are small nukes of the kind Taylor specialized in — could be concentrated in one direction. If you can do that, you can blow that energy into a propellant slab to create thrust, assuming that a massive pusher plate would be present to absorb the blast without melting in the process.

Image: An Orion-class mission under power. Credit: Adrian Mann.

Remarkably, the research indicated that the pusher plate could survive because each blast would last such a tiny amount of time as the craft, boosted by each subsequent bomb, accelerated through space. It’s hard to describe what riding aboard such a craft would be like, though Church likens it to repeated aircraft carrier catapult launches. My own guess is that even the most highly advanced pusher plate/shock absorber combination would have trouble making this ride anything but a nightmarish if potentially survivable experience. But Dyson and Taylor had numbers showing that it was worth pursuing, and there were grand thoughts of flying an Orion-class mission all the way to Saturn’s moon Enceladus by the late ’60s.

Defending Orion Through Radiation

A defense of Orion will need to produce reasons for its resurrection, and Church offers several, beginning with the radiation that bathes deep space. Some kinds of cosmic rays pose a serious threat. From the essay:

The presence of a small percentage of highly damaging and deeply penetrating particles, the heavy nuclei component of galactic cosmic rays, makes a super powerful propulsion system mandatory. The tremendous power of atomic bomb propulsion is certainly able to propel the heavily shielded capsules required to protect space travelers. The great mass of shielding makes chemical engines, inefficient nuclear thermal rockets, the low thrust forms of electrical propulsion, and solar sails essentially worthless for human deep space flight. Which is why atomic bomb propulsion is left as the only “off the shelf” viable means of propulsion. For the foreseeable future, high thrust and high ISP to propel heavy shielding to the required velocities is only possible using bombs.

It’s true that the bulk of the radiation we’ve so far had experience with in long-duration spacecraft has been more manageable, largely because a venue like the International Space Station is shielded by the Earth’s magnetic field. But as we start talking about multi-year missions, our thinking has to turn toward practical methods of shielding far beyond Earth’s orbit. Church sees the need for shields massing hundreds of tons that could hardly be propelled by conventional methods, whereas an Orion-style craft, bulking itself up with lunar ice deposits from the Moon’s north pole, could boost out of lunar orbit with a full and robust radiation shield. In his view, then, only Orion can take us on manned missions to other planets, to say nothing of the kind of interstellar missions sometimes contemplated for this technology.
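Part of Church’s argument, that heavy shielding demands high specific impulse, rests on the rocket equation, in which the propellant required grows exponentially as exhaust velocity falls. This sketch makes that concrete for a hypothetical 500-tonne shielded module given 10 km/s of velocity change. The 450 s figure is typical of hydrogen/oxygen engines; the 2000 s figure is an assumed round number standing in for pulsed nuclear propulsion, not a value from Church’s essay, and treating Orion as a rocket with an effective specific impulse is itself a simplification. Tanks, engines and structure are ignored.

```python
import math

G0 = 9.80665  # standard gravity in m/s^2, converts Isp (s) to exhaust velocity (m/s)


def propellant_tonnes(payload_t, delta_v, isp):
    """Propellant needed to give `payload_t` tonnes a velocity change `delta_v` (m/s).

    Ideal Tsiolkovsky rocket equation, m0/mf = exp(delta_v / (isp * G0)).
    Dry mass other than the payload is ignored, so real needs are higher.
    """
    mass_ratio = math.exp(delta_v / (isp * G0))
    return payload_t * (mass_ratio - 1.0)


# Hypothetical mission: a 500-tonne shielded crew module given 10 km/s.
PAYLOAD_T = 500.0
DELTA_V = 10_000.0
chemical = propellant_tonnes(PAYLOAD_T, DELTA_V, isp=450.0)  # roughly hydrogen/oxygen
pulsed = propellant_tonnes(PAYLOAD_T, DELTA_V, isp=2000.0)   # assumed illustrative figure

print(f"chemical (Isp 450 s):        {chemical:,.0f} t of propellant")
print(f"pulsed nuclear (Isp 2000 s): {pulsed:,.0f} t of propellant")
```

Even under these generous assumptions the chemical case needs roughly 13 times more propellant (over 4,000 tonnes versus about 330), and the gap widens quickly as the mission’s velocity change grows.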

But shielding a spacecraft, at least within the Solar System, may not be as demanding as this, or so at least one space scientist believes. Robert Zubrin has been working out the basics of Mars Direct for some time now, and the radiation issue is a major concern for him as well. Where Church says “An appreciation of the heavy nuclei component of galactic cosmic radiation, as well as solar events, will put multi-year human missions beyond earth orbit on hold indefinitely until a practical shield is available,” Zubrin (in the latest edition of The Case for Mars) refers to this kind of talk as ‘scaremongering’:

Cosmic rays deliver about half the radiation dose experienced throughout life by people on the surface of the Earth, with those living or working at high altitude receiving doses that are quite significant. For example, a trans-Atlantic airline pilot making one trip per day five days a week would receive about a rem per year in cosmic-ray doses. Over a twenty-five-year flying career, he or she would get more than half the total cosmic-ray dose experienced by a crew member of a two-and-one-half-year Mars mission.
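Zubrin’s comparison can be unpacked with simple arithmetic. Taking his figures at face value (about 1 rem per year over a twenty-five-year career, and a pilot total exceeding half the Mars crew’s dose), the implied ceiling on the Mars crew’s cosmic-ray exposure works out as follows:

```latex
\begin{aligned}
D_{\text{pilot}} &\approx 1~\text{rem/yr} \times 25~\text{yr} = 25~\text{rem},\\
D_{\text{pilot}} > \tfrac{1}{2}\,D_{\text{Mars}}
  \;&\Longrightarrow\; D_{\text{Mars}} < 2 \times 25~\text{rem} = 50~\text{rem},\\
\frac{D_{\text{Mars}}}{2.5~\text{yr}} &< 20~\text{rem/yr}.
\end{aligned}
```

This is an upper bound implied by the quoted figures, not a number Zubrin states directly.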

As the issue continues to be debated, we also have to look toward future interstellar missions, no matter what kind of propulsion system we commit to the task. If we’re talking human missions, it will clearly be essential to learn more about the shielding effects of the heliosphere on galactic cosmic rays to find out what kind of shielding will be needed for a crew that moves into interstellar space. Dana Andrews (Andrews Space), who has collaborated in the past with Zubrin on magsails, is just one researcher who has examined the issue. See his “Things to Do While Coasting Through Interstellar Space,” AIAA-2004-3706, 40th AIAA/ASME/SAE/ASEE Joint Propulsion Conference and Exhibit, Fort Lauderdale, Florida, July 11-14, 2004. You may find my article on Andrews’ work, “Dust Up Between the Stars,” helpful as well.

The Imperative of Species Survival

The real motivator for Orion technology may not be crew protection — much of our future work in the outer system and beyond may well be robotic — but the need to protect the planet from asteroids or comets on dangerous trajectories. This is Church’s ultimate justification, the one he hopes will trump the anti-nuclear lobby and bring clarity to the issue, and he points out that an Orion-class mission would be just the ticket if we had to send a human crew to an errant object deep in the Solar System to begin adjustments to its course. Thus this:

It is within our power to defend Earth from the very real threat of an impact, and at this time self-defense is the only valid reason to go into space instead of spending the resources on Earth improving the human condition. Protecting our species from extinction is the penultimate moral high ground above all other calls on public funds.

And this final thrust:

A powerful force of nuclear powered, propelled, and armed spaceships cannot guarantee Earth will not suffer a catastrophe. The best insurance for our species is to establish, in concert with a spaceship fleet, several independent self-supporting off world colonies in the outer solar system. The first such colony would mark the beginning of a new age.

Church’s essay is well thought out and deserves more attention than it seems to have been getting, especially considering that Orion offers a way around a basic problem of advanced propulsion: how to harness massive energies within an internal engine without melting the engine. Uncontained nuclear-generated plasma acting on a pusher plate could resolve that problem. Of course, George Dyson explains all this in his wonderful account of his father’s and Taylor’s work, Project Orion: The True Story of the Atomic Spaceship (2002), but I’m always thinking in terms of keeping deep space concepts in front of the public, and Church lays out the basics in ways that might attract a Net-reading generation familiar mostly with chemical rockets.

Image: An Orion vehicle departs for Mars. Credit: Adrian Mann.

The question then becomes, if we were to explore this technology again, would the drivers Church mentions be enough to get us past the anti-nuclear lobby to begin testing the concept meaningfully? Because right now we have only the sketchiest knowledge of how adaptable Orion might prove to be, and only the reassurances of its proponents that a human crew would survive the ride. Developing an Orion prototype would demand testing it off the planet, and that presupposes lofting a great deal of nuclear material into space. We all remember the fuss kicked up by the plutonium carried aboard the Cassini probe to Saturn. Can the planetary insurance imperative become a credible enough issue to turn the prevailing view of nukes around?

An Asteroid Born Near the Earth

This morning we stick with the planetary migration theme begun on Friday, when the subject was a possible ice giant that was expelled from the Solar System some 600 million years after formation. We have a lot to learn about the mechanisms that could force such ejections, but it’s becoming clear that objects up to planet size do indeed migrate, as the latest findings on the asteroid Lutetia attest. Using spectral information put together from space- and ground-based telescopes as well as ESA’s Rosetta spacecraft, scientists have concluded that Lutetia is a fragment of the same inner system material that went into the inner rocky planets. A gravitational encounter somewhere in the inner system would then have propelled it outward to the Main Belt.

The observations pulled together data in visible, ultraviolet, near-infrared and mid-infrared wavelengths. The only meteorites whose spectra match Lutetia’s exactly are the enstatite chondrites, known to be material from the early Solar System and thought to have been a major constituent in the formation of the Earth, Venus and Mercury. Isotope measurements show that enstatite chondrites are the only group of chondrites whose isotopic composition matches that of the Earth and Moon, and their chemistry and mineralogy support the idea that they formed close to the Sun. Mercury seems to have accreted from these materials.

Image: New observations indicate that the asteroid Lutetia is a leftover fragment of the same original material that formed the Earth, Venus and Mercury. Credit: ESA/Rosetta.

If Lutetia formed in the inner system, it would belong to the less than 2 percent of bodies from the region where the Earth formed that wound up in the asteroid belt. So in this case we have not a planetary ejection but an asteroid migration: an object about 100 kilometers across propelled outward through an encounter with one of the inner planets. The migration scenario could involve more than just the rocky planets of the inner system, as the paper on this work notes:

The question naturally arises as to how Lutetia escaped accretion into one of the terrestrial planets and ultimately reached the main belt. The dynamical mechanism is likely to be similar to the one explaining the origin of iron meteorites as remnants of differentiated planetesimals formed in the terrestrial planet region… Extended dynamical simulations reveal that, at the time when terrestrial accretion was ongoing, a small fraction (<2%) of the planetesimals residing in the 0.5-1.5 AU region were scattered out by emerging protoplanets… and/or by the migration of Jupiter… and achieved main-belt orbits, thus becoming dynamically indistinguishable from the rest of the main-belt population.

But Lutetia would be carrying evidence for a far different interior than most asteroids:

According to this scenario, planetesimals of the size of Lutetia (D ~ 100 km) that formed in the 0.5-1.5 AU region experienced significantly more heating by short-lived nuclides than asteroids formed in the main belt. A direct consequence is that most of these planetesimals are likely to be differentiated in accordance with iron meteorites having formed in this region.

All of this adds to the buzz about an asteroid that, in terms of color and surface properties, was already considered unusual. It wasn’t long ago that we discussed Lutetia’s once having had a hot metallic core, based on evidence from the Rosetta encounter in July of 2010. The suggestion was that although it had a battered, unmelted surface, Lutetia may have had a differentiated interior, making it a primordial planetesimal. That would make it a highly interesting relic of the period of planetary formation, and the new work adds to the case for more intense study of the asteroid, as ESO’s Pierre Vernazza, lead author of the paper on the new work, comments:

“Lutetia seems to be the largest, and one of the very few, remnants of such material in the main asteroid belt. For this reason, asteroids like Lutetia represent ideal targets for future sample return missions. We could then study in detail the origin of the rocky planets, including our Earth.”

The paper is Vernazza et al., “Asteroid (21) Lutetia as a remnant of Earth’s precursor planetesimals,” accepted for publication in Icarus (preprint).

A Gas Giant Ejected from our System?

Free-floating planets — planets moving through interstellar space without stars — may not be unusual. If solar systems in their epoch of formation go through chaotic periods when the orbits of their giant planets are affected by dynamical instability, then ejecting a gas giant from the system entirely is a plausible outcome. David Nesvorny (SwRI) has been studying the possibilities for such ejections in our Solar System, using computer simulations of the era when the system was no more than 600 million years old. Clues from the Kuiper Belt and the lunar cratering record had already suggested a scattering of giant planets and smaller bodies then.

An ejected planet makes sense. Studies of giant planets interacting with the protoplanetary disk show that they tend to migrate and wind up in a configuration where pairs of neighboring planets are locked in a mean motion resonance. Such a resonance occurs when the orbital periods of two bodies form a ratio of small whole numbers, so that they exert a regular, periodic gravitational influence on each other (there is a 2:3 resonance, for example, between Pluto and Neptune, with Pluto completing 2 solar orbits for every 3 of Neptune). Current work suggests that these resonant systems then become dynamically unstable once the gas of the protoplanetary disk disappears. Just how this happens is what the new work is all about.
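Kepler’s third law makes the Pluto-Neptune example concrete. For bodies orbiting the Sun, P² = a³ when the period P is in years and the semi-major axis a is in astronomical units (planetary masses are neglected, which is fine at this precision). The sketch plugs in rounded semi-major axes of about 30.07 AU for Neptune and 39.48 AU for Pluto, values supplied here for illustration rather than taken from Nesvorny’s paper, and recovers a period ratio close to 3:2.

```python
# Kepler's third law in solar units: P**2 == a**3 with P in years, a in AU.
# Planet masses are neglected; semi-major axes are rounded illustrative values.

def period_years(a_au):
    """Orbital period in years for a body circling the Sun at a_au AU."""
    return a_au ** 1.5


NEPTUNE_A_AU = 30.07
PLUTO_A_AU = 39.48

ratio = period_years(PLUTO_A_AU) / period_years(NEPTUNE_A_AU)
print(f"Neptune: {period_years(NEPTUNE_A_AU):.1f} yr, Pluto: {period_years(PLUTO_A_AU):.1f} yr")
print(f"Pluto/Neptune period ratio: {ratio:.3f} (exact 3:2 would be 1.500)")
```

The result lands within a fraction of a percent of 3/2; bodies in resonance oscillate about the exact ratio rather than sitting precisely on it.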

From Nesvorny’s paper:

To stretch to the present, more relaxed state, the outer solar system most likely underwent a violent phase when planets scattered off of each other and acquired eccentric orbits… The system was subsequently stabilized by damping the excess orbital energy into the transplanetary disk, whose remains survived to this time in the Kuiper belt. Finally, as evidenced by dynamical structures observed in the present Kuiper belt, planets radially migrated to their current orbits by scattering planetesimals…

The scattering outlined here suggests that Jupiter moved inward in its orbit and scattered smaller bodies both outward and inward, some to take up residence in the Kuiper Belt, others to cause impacts on the inner planets and Earth’s Moon. The problem: A slow change to Jupiter’s orbit driven by interactions with small bodies would have thoroughly disrupted the inner system.

A faster change of orbit due to interactions with Uranus and Neptune would have caused both of those planets to be ejected from the system. At this point Nesvorny added another giant planet to the model, working with an initial state in which all the giant planets reached resonant orbits in the protoplanetary disk within some 15 AU of the Sun. As the gas disk dispersed, Uranus and Neptune would have been scattered by the gas giants, reaching their current orbits and in turn scattering the planetesimals in that region into the Kuiper Belt.

The final consequence is the ejection of the fifth giant planet. An additional planet between Saturn and the ice giants, a world that was ultimately ejected from the system entirely, leaves us with a simulation that models the four giant planets we see today. Nesvorny’s simulations put a range of different masses for the planetesimal disk into play, with a total of 6000 scattering simulations, each following a system for 100 million years, by which time the planetesimal disk was depleted and planetary migration at an end. And it turns out that you are roughly ten times more likely to wind up with an analog to our Solar System if you start with five giant planets rather than four.

This scenario solves a variety of problems. Understanding how Uranus and Neptune formed is difficult because at their present distances of roughly 20 and 30 AU, accretion would have required too long a timescale. Nesvorny points out that the ice giants form readily at 15 AU or less, and the five-planet resonant system his work discovered accounts for their movement outward. The results shift depending on whether one assumes an initial 3:2 resonance between Jupiter and Saturn or a 2:1 resonance, with the latter pushing the outer ice giant to a problematic 18-20 AU for formation. This makes the 3:2 resonance the most likely, but the scientist notes the need for more work on the question.
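The same law shows why the choice of initial Jupiter-Saturn resonance matters for where the ice giants end up. If the inner planet completes p orbits while the outer completes q, then a_outer/a_inner = (p/q)^(2/3). This sketch compares the two cases Nesvorny considers; the function name is ours, and it computes only the Jupiter-Saturn spacing, not the full five-planet chain.

```python
def axis_ratio(inner_orbits, outer_orbits):
    """a_outer / a_inner for a resonance in which the inner planet completes
    `inner_orbits` circuits of the Sun while the outer one completes `outer_orbits`.
    From Kepler's third law, a is proportional to P**(2/3)."""
    period_ratio = inner_orbits / outer_orbits  # P_outer / P_inner
    return period_ratio ** (2.0 / 3.0)


three_two = axis_ratio(3, 2)  # Jupiter 3 orbits : Saturn 2 orbits
two_one = axis_ratio(2, 1)    # Jupiter 2 orbits : Saturn 1 orbit
print(f"3:2  a_Saturn/a_Jupiter = {three_two:.3f}")
print(f"2:1  a_Saturn/a_Jupiter = {two_one:.3f}")
print(f"2:1 spacing is {two_one / three_two:.2f}x the 3:2 spacing")
```

The 2:1 case places Saturn about 21 percent farther from Jupiter than the 3:2 case (1.587/1.310 ≈ 1.21). If the resonances farther out in the chain stayed the same, every outer planet would be pushed outward by that factor, which is the qualitative reason a 2:1 start moves the outer ice giant toward the problematic 18-20 AU range.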

In the meantime, we’re left with the vision of an even more interesting early Solar System than we thought, and the possibility that the ejection of that fifth giant planet may be what spared the inner system — and our Earth — from complete disruption. We’re also given another look at the processes that produce dark, interstellar wanderers, planets with no star to light them, as Nesvorny notes:

“The possibility that the solar system had more than four giant planets initially, and ejected some, appears to be conceivable in view of the recent discovery of a large number of free-floating planets in interstellar space, indicating the planet ejection process could be a common occurrence.”

The paper is Nesvorny, “Young Solar System’s Fifth Giant Planet?” Preprint available.

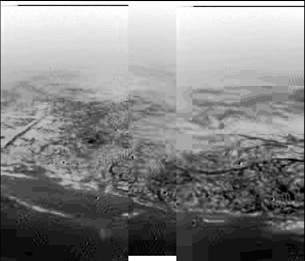

A Look at Methane-Based Life

Could life exist on a world with a methane rather than a water cycle? The nitrogen-rich atmosphere of Titan, laden with hydrocarbon smog, is a constant reminder of the question. Cassini has shown us the results of ultraviolet radiation from the Sun interacting with atmospheric methane, and we’ve had radar glimpses of lakes as well as the haunting imagery from the descending Huygens probe. Our notion of a habitable zone depends upon water, but adding methane into the mix would extend the region where life could exist much farther out from a star. Chris McKay and Ashley Gilliam (NASA Ames) have been actively speculating on the possibilities around red dwarfs and have published a recent paper on the subject.

It’s intriguing, of course, that methane on Titan sits close to its ‘triple point’, the particular temperature and pressure at which a material can coexist in liquid, solid and gaseous form. That makes Titan ‘Earthlike’ in the sense that our initial view showed a landscape with the clear signs of running liquid, but this is a world where temperatures dip to 94 K (-179 Celsius) and water is the local analog of rock. In a fine essay on McKay’s work in Astrobiology Magazine, Keith Cooper notes an earlier McKay paper that suggested a potential life mechanism in this kind of environment: Local methanogens would consume hydrogen, acetylene and ethane while exhaling methane. That mechanism would be useful to astrobiologists because it would leave a particular signature: the depletion of hydrogen, acetylene and ethane at the surface.

Image: This composite was produced from images returned on 14 January 2005, by ESA’s Huygens probe during its successful descent to land on Titan. It shows the boundary between the lighter-coloured uplifted terrain, marked with what appear to be drainage channels, and darker lower areas. These images were taken from an altitude of about 8 kilometres with a resolution of about 20 metres per pixel. Credits: ESA/NASA/JPL/University of Arizona.

But the fact that Titan does show signs of such depletion isn’t necessarily indicative of life, for these signs are themselves dependent on atmospheric models that are still in play, and in any case we know little about other processes that could mimic the same characteristics without implications for life. Exo-Titans may be relatively common, for all we know, but to find them we are going to have to first establish that life can exist in this environment and then work out an atmospheric signature we can search for. Cooper quotes Lisa Kaltenegger (Max Planck Institute) on the issue:

“We just don’t know what the tell-tale signs for life would be in such an atmosphere because it is so vastly different from ours. That said, it will change in a flash if Chris [McKay] finds life on Titan and can tell us what it produces and what we could look for remotely with a telescope.”

That makes future probes of Titan all the more interesting, and adds to the desirability of a long-term presence on the moon, either through a surface rover or an aerostat that could range high over the surface and give us a highly focused look. As for those red dwarfs McKay studied in his recent paper, a methane habitable zone should exist between 0.63 and 1.66 astronomical units (94 million and 248 million kilometers) around the star Gliese 581, that frequently invoked site of habitable planet speculation. Unfortunately, while we do have four planets confirmed in the system (with two others considered controversial), none lies in the methane sweet spot.
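The kilometer figures in this section are plain unit conversions. A small helper, assuming the IAU’s defined value of 1 AU = 149,597,870.7 km, reproduces them for both the Gliese 581 zone and the M4 dwarf zone discussed next; it puts the 0.63 AU inner edge at roughly 94 million km.

```python
AU_KM = 149_597_870.7  # IAU-defined astronomical unit, in kilometers


def au_to_million_km(au):
    """Convert a distance in astronomical units to millions of kilometers."""
    return au * AU_KM / 1e6


# Methane habitable zone edges as quoted in the text, in AU.
zones = {
    "Gliese 581, inner edge": 0.63,
    "Gliese 581, outer edge": 1.66,
    "M4 dwarf, inner edge": 0.084,
    "M4 dwarf, outer edge": 0.23,
}
for name, au in zones.items():
    print(f"{name}: {au} AU = {au_to_million_km(au):.1f} million km")
```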

While Gliese 581 is an M2.5V dwarf, the authors also calculate the numbers for an M4 dwarf, finding a closer methane habitable zone between 0.084 AU and 0.23 AU (12.6 million kilometers to 34.4 million kilometers). The beauty of studying habitable environments (water or methane) around M-dwarfs is that the orbital distances involved are small, which makes detecting planets through radial velocity and transit methods somewhat easier. But what happens on the surface of such a planet is another matter. Much depends on how the atmosphere is affected by stellar conditions, as Cooper notes:

Titan’s atmosphere is opaque to blue and ultraviolet light, but transparent to red and infrared light, and red dwarfs produce more of the latter than the former. If Titan orbited a red dwarf, more red light would seep through to its surface, warming the planet and extending the range of the liquid methane habitable zone. (Interestingly, a red giant, which is close to the endpoint in the life cycle of a Sun-like star, produces light of similar red wavelengths. When our Sun expands into a bloated red giant in about five billion years, engulfing all the planets up to Earth and possibly Mars, Titan may well reap the benefits – for a short while at least before the red giant puffs away to leave behind a white dwarf star.)

Countering this warming are the large stellar flares frequent on younger red dwarfs, which could also affect evolving life. McKay’s work suggests that such active M-dwarfs would dissociate atmospheric molecules on a Titan-like world, making the place more and more smoggy and reducing the surface temperature. The net effect would be to move the methane habitable zone closer to the star. Clearly we have a long way to go before we can actively search for methane-based life outside our own Solar System, and probably decades to go before we get back to Titan.

For the time being, then, a methane habitable zone is sheer speculation, but it’s interesting to ponder the life that might appear on such worlds. One thing seems sure: The temperatures at which liquid methane exists would produce creatures with slow metabolisms. Will a future Titan probe find life? Given our relatively greater understanding of life’s relation to liquid water, we’re obviously going to keep the focus there, but a ‘second genesis’ on Titan would change the equation considerably as we ponder how frequently life can form and with what constituents.

The paper is McKay and Gilliam, “Titan under a red dwarf star and as a rogue planet: requirements for liquid methane,” Planetary and Space Science Volume 59, Issue 9, pp. 835-839 (July 2011). Abstract available.

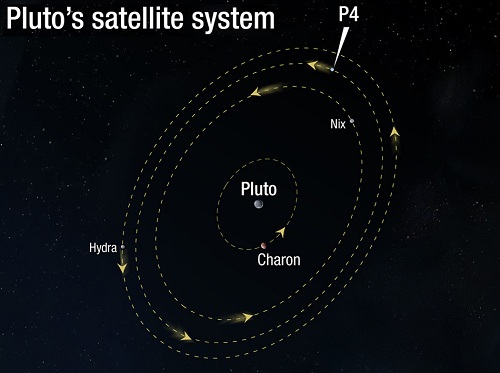

Pluto/Charon: A Dangerous Arrival?

We’ve often considered the effect of interstellar dust on a spacecraft moving at a substantial percentage of the speed of light. The matter becomes even more acute when we consider an interstellar probe arriving at the destination solar system. A flyby mission moving at ten percent of the speed of light is going to encounter a far more dangerous environment just as it sets about its critical observations, which is why various shielding concepts have been in play to protect the vehicle. But even at today’s velocities, spacecraft can have unexpected surprises when they arrive at their target.
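To see why arrival is so hazardous at interstellar speeds, compare the kinetic energy of a single dust grain at 10 percent of light speed with the same grain at 2 km/s, the upper end of the Kuiper Belt impact speeds mentioned next. The 1-microgram grain mass is a hypothetical round number. The function uses the relativistic formula, though at 0.1c the classical ½mv² is within about 1 percent.

```python
import math

C = 299_792_458.0     # speed of light, m/s
TNT_J_PER_KG = 4.184e6  # conventional TNT energy equivalent, J/kg


def kinetic_energy_j(mass_kg, speed_ms):
    """Relativistic kinetic energy (gamma - 1) m c^2, in joules.

    Written as m v^2 / (s (1 + s)) with s = sqrt(1 - v^2/c^2), which is
    algebraically identical but avoids cancellation at everyday speeds.
    """
    s = math.sqrt(1.0 - (speed_ms / C) ** 2)
    return mass_kg * speed_ms ** 2 / (s * (1.0 + s))


GRAIN_KG = 1e-9  # hypothetical 1-microgram dust grain
probe = kinetic_energy_j(GRAIN_KG, 0.1 * C)   # flyby at 10% of light speed
slow = kinetic_energy_j(GRAIN_KG, 2_000.0)    # same grain at 2 km/s
print(f"at 0.1c:   {probe:.3e} J (~{probe / TNT_J_PER_KG:.2f} kg of TNT)")
print(f"at 2 km/s: {slow:.3e} J (ratio {probe / slow:.2e})")
```

At 0.1c the grain carries roughly 450 kJ, on the order of a tenth of a kilogram of TNT and over 200 million times its energy at 2 km/s, which is why shielding concepts loom so large for interstellar flybys.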

We’re now looking toward a 2015 encounter at Pluto/Charon. New Horizons is potentially at risk because debris in the Pluto system may not be confined to a plane but could take the form of a thick torus or even a spherical cloud around the system. We don’t yet know how much of a factor impactors from the Kuiper Belt may be, but strikes at 1-2 kilometers per second would kick up fragments moving at high velocity, generating debris rings or clouds we have yet to see. For that matter, are there undiscovered satellites in this system that could pose a threat?

Image: Pluto’s most recently discovered moon, P4, orbits between Nix and Hydra, both of which orbit beyond Charon. Finding out whether there are other moons or potential hazards near Pluto/Charon was the subject of a recent workshop convened to gauge the dangers involved in the encounter. Credit: Alan Stern/New Horizons.

Working on these questions is the job of a team that met at the Southwest Research Institute (Boulder, CO) in early November. The New Horizons Pluto Encounter Hazards Workshop had plenty on its agenda. As principal investigator Alan Stern notes in this report on the New Horizons mission, the group was composed of about 20 of the leading experts in ring systems, orbital dynamics and the astronomical methods used to observe objects at the edge of the Solar System.

The Hubble Space Telescope will play a role in the search for undiscovered moons and possible rings, aided by ground-based telescopes that will study the environment between Pluto and Charon, space through which New Horizons is slated to move. Stern also notes that the ALMA (Atacama Large Millimeter/submillimeter Array) radio telescope will be able to make thermal observations of the system, all of which should give us a better idea of the situation ahead even as plans go forward to consider alternate routes in case the current trajectory starts to look too dangerous. From Stern’s report:

Studies presented at the Encounter Hazards Workshop indicate that a good ‘safe haven bailout trajectory’ (or SHBOT) could be designed to target a closest-approach aim point about 10,000 kilometers farther than our nominal mission trajectory. More specifically, a good candidate SHBOT aim point would be near Charon’s orbit, but about 180 degrees away from Charon on closest-approach day. Why this location? Because Charon’s gravity clears out the region close to it of debris, creating a safe zone.

New Horizons is now approaching 22 AU out and has been brought out of hibernation until November 15 for regular maintenance activities. Tracking a spacecraft on its way to a dwarf planet we have never visited is intriguing enough, but the recent workshop was inspired at least partially by discoveries made after launch, such as the existence of the moon P4, which was found this summer. Stern mentions some evidence for still fainter moons that have not yet been confirmed, but it’s clear that space ahead may have more surprises in store.

Stern adds “it is not lost on us that there is a certain irony that the very object of our long-held scientific interest and affection may, after so many years of work to reach her, turn out to be less hospitable than other planets have been.” Indeed. Then factor in how much work went into getting this mission funded, built and flown: the payload aboard New Horizons is a precious thing. We can only hope, and assume, that the observing campaign to verify the path ahead will be successful.