Centauri Dreams

Imagining and Planning Interstellar Exploration

A Hybrid Interstellar Mission Using Antimatter

Epsilon Eridani has always intrigued me because in astronomical terms, it’s not all that far from the Sun. I can remember as a kid noting which stars were closest to us – the Centauri trio, Tau Ceti and Barnard’s Star – wondering which of these would be the first to be visited by a probe from Earth. Later, I thought we would have quick confirmation of planets around Epsilon Eridani, since it’s a scant (!) 10.5 light years out, but despite decades of radial velocity data, astronomers have only found one gas giant, and even that confirmation was slowed by noise-filled datasets.

Even so, Epsilon Eridani b is confirmed. Also known as Ægir (named for a figure in Old Norse mythology), it’s in a 3.5 AU orbit, circling the star every 7.4 years, with a mass somewhere between 0.6 and 1.5 times that of Jupiter. But there is more: We also get two asteroid belts in this system, as Gerald Jackson points out in his new paper on using antimatter for deceleration into nearby star systems, as well as another planet candidate.

Image: This artist’s conception shows what is known about the planetary system at Epsilon Eridani. Observations from NASA’s Spitzer Space Telescope show that the system hosts two asteroid belts, in addition to previously identified candidate planets and an outer comet ring. Epsilon Eridani is located about 10 light-years away in the constellation Eridanus. It is visible in the night skies with the naked eye. The system’s inner asteroid belt appears as the yellowish ring around the star, while the outer asteroid belt is in the foreground. The outermost comet ring is too far out to be seen in this view, but comets originating from it are shown in the upper right corner. Credit: NASA/JPL-Caltech/T. Pyle (SSC).

This is a young system, estimated at less than one billion years. For both Epsilon Eridani and Proxima Centauri, deceleration is crucial for entering the planetary system and establishing orbit around a planet. The amount of antimatter available will determine our deceleration options. Assuming a separate method of reaching Proxima Centauri in 97 years (perhaps beamed propulsion getting the payload up to 0.05c), we need 120 grams of antiproton mass to brake into the system. A 250 year mission to Epsilon Eridani at this velocity would require the same 120 grams.

Thus we consider the twin poles of difficulty when it comes to antimatter, the first being how to produce enough of it (current production levels are measured in nanograms per year), the second how to store it. Jackson, who has long championed the feasibility of upping our antimatter production, thinks we need to reach 20 grams per year before we can start thinking seriously about flying one of these missions. But as both he and Bob Forward have pointed out, there are reasons why we produce so little now, and reasons for optimism about moving to a dedicated production scenario.

Past antiproton production was constrained by the need to produce antiproton beams for high energy physics experiments, requiring strict longitudinal and transverse beam characteristics. Their solution was to target a 120 GeV proton beam into a nickel target [41] followed by a complex lithium lens [42]. The world record for the production of antimatter is held by the Fermilab. Antiproton production started in 1986 and ended in 2011, achieving an average production rate of approximately 2 ng/year [43]. The record instantaneous production rate was 3.6 ng/year [44]. In all, Fermilab produced and stored 17 ng of antiprotons, over 90% of the total planetary production.

Those are sobering numbers. Can we cast antimatter production in a different light? Jackson suggests using our accelerators in a novel way, colliding two proton beams in an asymmetric collider scenario, in which one beam is given more energy than the other. The result will be a coherent antiproton beam that, moving downstream in the collider, is subject to further manipulation. This colliding beam architecture makes for a less expensive accelerator infrastructure and sharply reduces the costs of operation.

The theoretical costs for producing 20 grams of antimatter per year are calculated under the assumption that the antimatter production facility is powered by a square solar array 7 km x 7 km in size that would be sufficient to supply all of the needed 7.6 GW of facility power. Using present-day costs for solar panels, the capital cost for this power plant comes in at $8 billion (i.e., the cost of 2 SLS rocket launches). $80 million per year covers operation and maintenance. Here’s Jackson on the cost:

…3.3% of the proton-proton collisions yields a useable antiproton, a number based on detailed particle physics calculations [45]. This means that all of the kinetic energy invested in 66 protons goes into each antiproton. As a result, the 20 g/yr facility would theoretically consume 7.6 GW of electrical power (assuming 100% conversion efficiencies). Operating 24/7 this power level corresponds to an energy usage of 67 billion kW-hrs per year. At a cost of $0.01 per kW-hr the annual operating cost of the facility would be $670 million. Note that a single Gerald R. Ford-class aircraft carrier costs $13 billion! The cost of the Apollo program adjusted for 2020 dollars was $194 billion.
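The arithmetic behind these figures is easy to check. The sketch below is only a back-of-the-envelope calculation, not anything taken from the paper: it takes the 7.6 GW facility power, the $0.01 per kW-hr price and the 7 km x 7 km array from the text, and assumes round-the-clock operation over a 365.25-day year. The function names are made up for illustration.

```python
# Back-of-the-envelope check of the facility energy and cost figures.
# Inputs come from the text; the 365.25-day year is an assumption.
HOURS_PER_YEAR = 24 * 365.25  # 8766 hours


def annual_energy_kwh(power_gw):
    """Energy used in one year of continuous operation, in kW-hrs."""
    return power_gw * 1e6 * HOURS_PER_YEAR  # 1 GW = 1e6 kW


def annual_cost_usd(power_gw, usd_per_kwh):
    """Yearly electricity bill at a flat price per kW-hr."""
    return annual_energy_kwh(power_gw) * usd_per_kwh


def array_power_density_w_per_m2(power_gw, side_km):
    """Average delivered power per square meter of a square array."""
    area_m2 = (side_km * 1000.0) ** 2
    return power_gw * 1e9 / area_m2


energy = annual_energy_kwh(7.6)
cost = annual_cost_usd(7.6, 0.01)
density = array_power_density_w_per_m2(7.6, 7.0)

print(f"Energy per year: {energy / 1e9:.1f} billion kW-hrs")
print(f"Cost per year:   ${cost / 1e6:.0f} million")
print(f"Array output:    {density:.0f} W per square meter")
```

Run as written, this lands close to the quoted 67 billion kW-hrs and $670 million per year, and it implies the array would have to deliver roughly 155 watts per square meter on average.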

Science Along the Way

Launching missions that take decades, and in some cases centuries, to reach their destination calls for good science return wherever possible, and Jackson argues that an interstellar mission will determine a great deal about its target star just by aiming for it. Past missions like New Horizons could count on the positions of targets like Pluto and Arrokoth being programmed into the spacecraft computers, with the preliminary positioning information uploaded to the craft coming from Earth observation. Our interstellar craft will need more advanced tools. It will have to be capable of making its own astrometrical observations, sending its calculations to the propulsion system for deceleration into the target system and orbital insertion, thus refining exoplanet parameters on the fly.

Remember that what we are considering is a hybrid mission, using one form of propulsion to attain interstellar cruise velocity, and antimatter as the method for deceleration. You might recall, for example, the starship ISV Venture Star in the film Avatar, which uses both antimatter engines and a photon sail. What Jackson has added to the mix is a deep dive into the possibilities of antimatter for turning what would have been a flyby mission into a long-lasting planet orbiter.

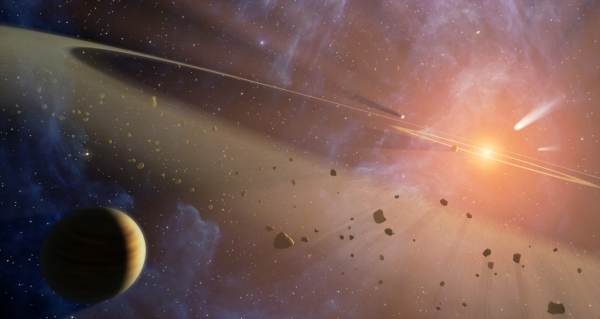

Let’s consider what happens along the line of flight as a spacecraft designed with these methods makes its way out of the Solar System. If we take a velocity of 0.02c, our spacecraft passes the outgoing Voyager and Pioneer spacecraft in two years, and within three more years it passes into the gravitational lensing regions of the Sun beginning at 550 AU. A mere five years has taken the vehicle through the Kuiper Belt and moved it out toward the inner Oort Cloud, where little is currently known about such things as the actual density distribution of Oort objects as a function of radius from the Sun. We can also expect to gain data on any comparable cometary clouds around Proxima Centauri or Epsilon Eridani as the spacecraft continues its journey.

By Jackson’s calculations, when we’re into the seventh year of such a mission, we are encountering Oort Cloud objects at a pretty good clip, with an estimated 450 Oort objects within 0.1 AU of its trajectory based on current assumptions. Moving at 1 AU every 5.6 hours, we can extrapolate an encounter rate of one object per month over a period of three decades as the craft transits this region. Jackson also notes that data on the interstellar medium, including the Local Interstellar Cloud, will be prolific, including particle spectra, galactic cosmic ray spectra, dust density distributions, and interstellar magnetic field strength and direction.

Image: This is Figure 7 from the paper. Caption: Potential early science return milestones for a spacecraft undergoing a 10-year acceleration burn with a cruise velocity of 0.02c. Credit: Gerald Jackson.
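To get a feel for these early milestones, here is a rough sketch of distance covered versus time. It assumes, purely for illustration, a constant acceleration over the 10-year burn up to the 0.02c cruise velocity given in the caption; the paper's actual thrust profile may well differ, and the function name and constant are my own.

```python
# Rough distance-versus-time sketch for the outbound leg.
# ASSUMPTION: constant acceleration during the burn (not from the paper).
C_AU_PER_YEAR = 63241.077  # speed of light in AU per Julian year


def distance_au(t_years, cruise_fraction_c=0.02, burn_years=10.0):
    """Distance from the Sun in AU after t_years (non-relativistic)."""
    v_cruise = cruise_fraction_c * C_AU_PER_YEAR  # AU per year
    accel = v_cruise / burn_years                 # AU per year squared
    if t_years <= burn_years:
        return 0.5 * accel * t_years ** 2
    # After the burn: distance covered while accelerating, plus coasting.
    return 0.5 * accel * burn_years ** 2 + v_cruise * (t_years - burn_years)


for years in (2, 5, 7, 10):
    print(f"{years:>2} years: about {distance_au(years):,.0f} AU")
```

Under that assumption the craft is a little over 250 AU out at two years, beyond 1,500 AU at five, and around 3,100 AU at seven, which is in line with the sequence of milestones described here.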

It’s interesting to compare science return over time with what we’ve achieved with the Voyager missions. Voyager 2 reached Jupiter about two years after launch in 1977, and passed Saturn in four. It would take twice that time to reach Uranus (8.4 years into the mission), while Neptune was reached after 12. Voyager 2 crossed the heliopause after 41.2 years of flight, and as we all know, both Voyagers are still returning data. For purposes of comparison, the Voyager 2 mission cost $865 million in 1973 dollars.

Thus, while funding missions demands early return on investment, there should be abundant opportunity for science in the decades of interstellar flight between the Sun and Proxima Centauri, with surprises along the way, just as the Voyagers occasionally throw us a curveball – consider the twists and wrinkles detected in the Sun’s magnetic field as lines of magnetic force criss-cross, and reconnect, producing a kind of ‘foam’ of magnetic bubbles, all this detected over a decade ago in Voyager data. The long-term return on investment is considerable, as it includes years of up-close exoplanet data, with orbital operations around, for example, Proxima Centauri b.

It will be interesting to see Jackson’s final NIAC report, which he tells me will be complete within a week or so. As to the future, a glimpse at one aspect of it is available in the current paper, which revisits what the original NIAC project description called “a powerful LIDAR system…to illuminate, identify and track flyby candidates” in the Oort Cloud. But as the paper notes, this now seems impractical:

One preliminary conclusion is that active interrogation methods for locating 10 km diameter objects, for example with the communication laser, are not feasible even with megawatts of available electrical power.

We’ll also find out in the NIAC report whether or not Jackson’s idea of using gram-scale chipcraft for closer examination of, say, objects in the Oort has stood up to scrutiny in the subsequent work. This hybrid mission concept using antimatter is rapidly evolving, and what lies ahead, he tells me in a recent email, is a series of papers expanding on antimatter production and storage, and further examining both the electrostatic trap and electrostatic nozzle. Because both drastically increasing antimatter production and learning how to maximize small amounts are critical for our hopes to someday create antimatter propulsion, I’ll be tracking this report closely.

Antimatter-driven Deceleration at Proxima Centauri

Although I’ve often seen Arthur Conan Doyle’s Sherlock Holmes cited in various ways, I hadn’t chased down the source of this famous quote: “When you have eliminated all which is impossible, then whatever remains, however improbable, must be the truth.” Gerald Jackson’s new paper identifies the story as Doyle’s “The Adventure of the Blanched Soldier,” which somehow escaped my attention when I read through the Sherlock Holmes corpus a couple of years back. I’m a great admirer of Doyle and love both Holmes and much of his other work, so it’s good to get this citation straight.

As I recall, Spock quotes Holmes to this effect in one of the Star Trek movies; this site’s resident movie buffs will know which one, but I’ve forgotten. In any case, a Star Trek reference comes into useful play here because what Jackson (Hbar Technologies, LLC) is writing about is antimatter, a futuristic thing indeed, but also in Jackson’s thinking a real candidate for a propulsion system that involves using small amounts of antimatter to initiate fission in depleted uranium. The latter is a by-product of the enrichment of natural uranium to make nuclear fuel.

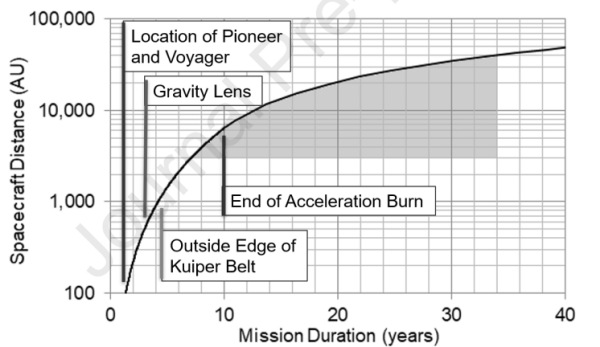

Both thrust and electrical power emerge from this, and in Jackson’s hands, we are looking at a mission architecture that can not only travel to another star – the paper focuses on Proxima Centauri as well as Epsilon Eridani – but also decelerate. Jackson has been studying the matter for decades now, and has presented antimatter-based propulsion concepts for interstellar flight at, among other venues, symposia of the Tennessee Valley Interstellar Workshop (now the Interstellar Research Group). In the new paper, he looks at a 10-kilogram scale spacecraft with the capability of deceleration as well as a continuing source of internal power for the science mission.

Image: Depiction of the deceleration of interstellar spacecraft utilizing antimatter concept. Credit: Gerald Jackson.

On the matter of the impossible, the quote proves useful. Jackson applies it to the propulsion concepts we normally think of in terms of making an interstellar crossing. This is worth quoting:

Applying this Holmes Method to space propulsion concepts for exoplanet exploration, in this paper the term “impossible” is re-interpreted arbitrarily to mean any technology that requires: 1) new physics that has not been experimentally validated; 2) mission durations in excess of one thousand years; and 3) material properties that are not currently demonstrated or likely to be achievable during this century. For example, “warp drives” can currently be classified as impossible by criterion #1, and chemical rockets are impossible due to criterion #2. Breakthrough Starshot may very well be impossible according to criterion #3 simply because of the needed material properties of the accelerating sail that must survive a gigawatt laser beam for 30 minutes. Though traditional nuclear thermal rockets fail due to criterion #2, specific fusion-based propulsion systems might be feasible if breakeven nuclear fusion is ever achieved.

Can antimatter supply the lack? The kind of mission Jackson has been analyzing uses antimatter to initiate fission, so we could consider this a hybrid design, one with its roots in the ‘antimatter sail’ Jackson and Steve Howe have described in earlier technical papers. For the background on this earlier work, you can start by looking at Antimatter and the Sail, one of a number of articles here on Centauri Dreams that has explored the idea.

In this paper, we move the antimatter sail concept to a deceleration method, with the launch propulsion being handed off to other technologies. The sail’s antimatter-induced fission is not used only to decelerate, though. It also provides a crucial source of power for the decades-long science mission at target.

If we leave the launch and long cruise of the mission up to other technologies, we might see the kind of laser-beaming methods we’ve looked at in other contexts as part of this mission. But if Breakthrough Starshot can develop a model for a fast flyby of a nearby star (moving at a remarkable 20 percent of lightspeed) via a laser array, various problems emerge, especially in data acquisition and return. On the former, the issue is that a flyby mission at these velocities allows precious little time at target. Successful deceleration would allow in situ observations from a stable exoplanet orbit.

That’s a breathtaking idea, given how much energy we’re thinking about using to propel a beamed-sail flyby, but Jackson believes it’s a feasible mission objective. He gives a nod to other proposed deceleration methods, which have included using a ‘magnetic sail’ (magsail) to brake against a star’s stellar wind. The problem is that the interstellar medium is too tenuous to slow a craft moving at a substantial percentage of lightspeed for orbital insertion upon arrival – Jackson considers the notion in the ‘impossible’ camp, whereas antimatter may come in under the wire as merely ‘improbable.’ That difference in degree, he believes, is well worth exploring.

The antimatter concept described generates a high specific impulse thrust, with the author noting that approximately 98 percent of antiprotons that stop within uranium induce fission. It turns out that antiproton annihilation on the nucleus of any uranium isotope – and that includes non-fissile U238 – induces fission. In Jackson’s design, about ten percent of the annihilation energy released is channeled into thrust.

Jackson analyzes an architecture in which the uranium “propagates as a singly-charged atomic ion beam confined to an electrostatic trap.” The trap can be likened in its effects to what magnetic storage rings do when they confine particle beams, providing a stable confinement for charged particles. Antiprotons are sent in the same direction as the uranium ions, reaching the same velocity in the central region, where the matter/antimatter annihilation occurs. Because the uranium is in the form of a sparse cloud, the energetic fission ‘daughters’ escape with little energy loss.

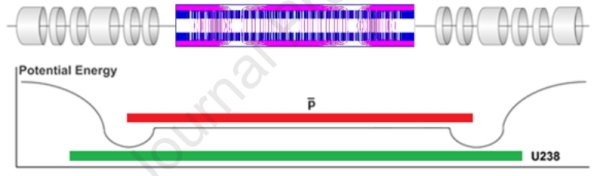

Here is Jackson’s depiction of an electrostatic annihilation trap. In this design, both the positively charged uranium ions and the negatively charged antiprotons are confined.

Image: This is Figure 1 from the paper. Caption: Axial and radial confinement electrodes (top) and two-species electrostatic potential well (bottom) of a lightweight charged-particle trap that mixes U238 with antiprotons.

A workable design? The author argues that it is, saying:

Longitudinal confinement is created by forming an axial electrostatic potential well with a set of end electrodes indicated in figure 1. To accomplish the goal of having oppositely charged antiprotons and uranium ions traveling together for the majority of their motion back and forth (left/right in the figure) across the trap, this electrostatic potential has a double-well architecture. This type of two-species axial confinement has been experimentally demonstrated [53].

The movement of antiprotons and uranium ions within the trap is complex:

The antiprotons oscillate along the trap axis across a smaller distance, reflected by a negative potential “hill”. In this reflection region the positively charged uranium ions are accelerated to a higher kinetic energy. Beyond the antiproton reflection region a larger positive potential hill is established that subsequently reflects the uranium ions. Because the two particle species must have equal velocity in the central region of the trap, and the fact that the antiprotons have a charge density of -1/nucleon and the uranium ions have a charge density of +1/(238 nucleons), the voltage gradient required to reflect the uranium ions is roughly 238 times greater than that required to reflect the antiprotons.
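The factor of roughly 238 follows from simple energy bookkeeping: at equal velocity, kinetic energy scales with mass, and both species carry a single unit of charge, so the potential needed to stop each one scales with its mass. The sketch below illustrates that reasoning only. The speed is a hypothetical placeholder (the ratio does not depend on it), the function name is my own, and it compares stopping potentials rather than modeling the actual trap geometry.

```python
# Why the uranium ions need a much larger reflecting potential than the
# antiprotons when both move at the same speed.
# ASSUMPTION: the speed below is a placeholder, not a value from the paper.
ELEMENTARY_CHARGE_C = 1.602176634e-19
ATOMIC_MASS_UNIT_KG = 1.66053906660e-27
ANTIPROTON_MASS_U = 1.007276  # same mass as the proton
U238_MASS_U = 238.0508        # singly charged ion, electron mass neglected


def stopping_potential_volts(mass_u, speed_m_s, charge_e=1):
    """Potential difference that brings the particle to rest: m v^2 / (2|q|)."""
    kinetic_j = 0.5 * mass_u * ATOMIC_MASS_UNIT_KG * speed_m_s ** 2
    return kinetic_j / (abs(charge_e) * ELEMENTARY_CHARGE_C)


speed = 1.0e5  # m/s, hypothetical common velocity in the central region
v_pbar = stopping_potential_volts(ANTIPROTON_MASS_U, speed, charge_e=-1)
v_u = stopping_potential_volts(U238_MASS_U, speed, charge_e=+1)
ratio = v_u / v_pbar

print(f"Uranium-to-antiproton potential ratio: {ratio:.1f}")
```

Using measured masses rather than nucleon counts gives a ratio near 236, consistent with the "roughly 238" in the quoted passage. If both species are reflected over comparable distances, the same ratio applies to the voltage gradient.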

The design must reckon with the fact that the fission daughters escape the trap in all directions, which is compensated for through a focusing system in the form of an electrostatic nozzle that produces a collimated exhaust beam. The author is working with a prototype electrostatic trap coupled to an electrostatic nozzle to explore the effects of lower-energy electrons produced by the uranium-antiproton annihilation events as well as the electrostatic charge distribution within the fission daughters.

Decelerating at Proxima Centauri in this scheme involves a propulsive burn lasting ten years as the craft sheds kinetic energy on the long arc into the planetary system. Under these calculations, a 200 year mission to Proxima requires 35 grams of total antiproton mass. Upping this to a 56-year mission moving at 0.1 c demands 590 grams.

Addendum: I wrote ’35 kilograms’ in the above paragraph before I caught the error. Thanks, Alex Tolley, for pointing this out!

Current antimatter production remains in the nanogram range. What to do? In work for NASA’s Innovative Advanced Concepts office, Jackson has argued that despite minuscule current production, antimatter can be vastly ramped up. He believes that production of 20 grams of antimatter per year is a feasible goal. More on this issue, to which Jackson has been devoting his life for many years now, in the next post.

The paper is Jackson, “Deceleration of Exoplanet Missions Utilizing Scarce Antimatter,” in press at Acta Astronautica (2022). Abstract.

An Abundance of Technosignatures?

What expectations do we bring to the hunt for life elsewhere in the universe? Opinions vary depending on who has the podium, but we can neatly divide the effort into two camps. The first looks for biosignatures, spurred by our remarkably growing and provocative catalog of exoplanets. The other explicitly looks for signs of technology, as exemplified by SETI, which from the start hunted for signals produced by intelligence.

My guess is that a broad survey of those looking for biosignatures would find that they are excited by the emerging tools available to them, such as new generations of ground- and space-based telescopes, and the kind of modeling we saw in the last post applied to a hypothetical Alpha Centauri planet. We use our growing datasets to examine the nature of exoplanets and move beyond observation to model benchmarks for habitable worlds, including their atmospheric chemistry and even geology.

Technosignatures are a different matter, and it’s fascinating to read through a new paper from Jason Wright and colleagues – Jacob Haqq-Misra, Adam Frank, Ravi Kopparapu, Manasvi Lingam and Sofia Sheikh – discussing just how. The intent is to show that technosignatures offer a vast search space that in a sense dwarfs the hunt for biosignatures. That’s not what you would expect, as the latter are usually described as a kind of all-encompassing envelope within which technosignatures would be a subset.

On the contrary, write the authors, “there is no incontrovertible reason that technology could not be more abundant, longer-lived, more detectable, and less ambiguous than biosignatures.” How this potential is unlocked impacts how the search proceeds, and it also sends out a call for collaboration among all those hunting for life elsewhere.

Image: Photo of the central region of the Milky Way. Credit: UCLA SETI Group/Yuri Beletsky, Carnegie Las Campanas Observatory.

Technosignatures as Subset?

Remember that technosignatures do not require an intent to communicate, but are evidence of technologies in use or even long abandoned, perhaps found in already existing datasets needing re-examination, or in results from upcoming observatories. Check your own assumptions here, based on the Drake equation, in which factors include the fraction of habitable planets that develop life, the fraction that produce species that are intelligent and can communicate, and so on. Traditional thinking sees technosignatures as an embedded feature within a broader spectrum of life.

Reasonably enough, then, we might decide that if intelligence is a rare subset within biological systems, technosignatures would prove even rarer. Our own planet seems to exemplify this, with our species having become communicative only within roughly a century of today, despite 4.6 billion years in which to evolve. But Wright and team make the case that technology cannot be bounded in this way. Its emergence may be rare, but once it appears, it is possible that it will outlive its biological creators.

Biology may confine itself to a single habitable planet, but why should technosignatures be thus limited? In our own Solar System, we are producing, the authors argue, technosignatures for multiple worlds right now, especially at Mars, where we have our combined force of landers and orbital assets taking data and communicating results back to Earth. Such signals should increase as we follow through on plans to explore Mars with human crews and robotic spacecraft. As we spread into the Solar System, new technosignatures will emerge at each venue we study.

Why, too, should technology not spread through self-replication, perhaps not under the control of the biological beings who set it into motion? For that matter, why should we confine technology to planets? Places with no biology may prove extremely useful for our species, as for example the asteroid belt for resource extraction. We might expect technosignatures to emerge from these operations, another separate appearance of technology that grows ultimately out of the single planetary source. Moreover, this diaspora is unlikely to confine itself to a single star system, as the authors point out:

There is also no reason to think that technological life in the galaxy cannot spread beyond its home planetary system (see Mamikunian & Briggs 1965; Drake 1980). While interstellar spaceflight of the sort needed to settle a nearby star system is beyond humanity’s current capabilities, the problem is one being seriously considered now, and there are no real physical or engineering obstacles to such a thing happening (e.g., Mauldin 1992; Ashworth 2012; Lingam & Loeb 2021). Even if we cannot envision it happening for humans in the near future, it is not hard to imagine it transpiring in, say, 10,000 or 100,000 yr.

What a shift in thinking in the above paragraph, which to us merely states the obvious, when compared to a mere 75 years ago, a time when the idea of interstellar flight was considered science fictional in the extreme, and we were only beginning to probe the physics of the engines that might make crossing to another star possible. Today we’re more likely to be thinking about interstellar journeys as expeditions awaiting new generations of technology and engineering rather than a mystical new physics. We also factor artificial intelligence into an interstellar future that may be exclusively robotic.

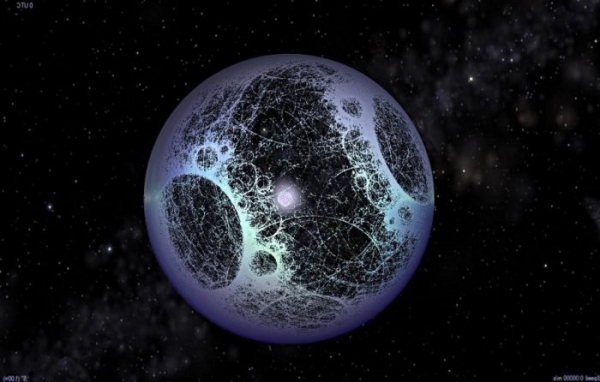

Image: A rendering of a potential Dyson sphere, collecting stellar energy on a system-wide scale for highly advanced civilizations. How many separate technosignatures might have emerged out of a single biological source in the building of such a thing? Credit: sentientdevelopments.com.

Recall our recent discussion of von Neumann probes. While the average distance between stars is vast, Greg Matloff looked at the problem in an exceedingly practical way. Suppose, he said, we confine ourselves to times when stars are within a single light year of each other, which happens to our Sun every 500,000 years or so. If we launch a self-replicating probe only every 500,000 years, we nonetheless set up a process of such crossings that fills a large percentage of stellar systems in the galaxy within a time frame of tens of millions of years. All of these can produce technosignatures.
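A toy doubling model gives a sense of the timescale. This is not Matloff's calculation, just a simplified sketch: it assumes every occupied system sends one probe to a new system at each close stellar pass, so the number of occupied systems doubles every 500,000 years, and it takes 100 billion stars as an order-of-magnitude figure for the galaxy. Both assumptions and the function names are mine.

```python
# Toy doubling model for self-replicating probes.
# ASSUMPTIONS (not from Matloff's work): the occupied-system count doubles
# once per close stellar pass, and the galaxy holds about 1e11 stars.
import math


def generations_to_fill(n_stars):
    """Doublings needed for a single origin system to reach n_stars systems."""
    return math.ceil(math.log2(n_stars))


def fill_time_years(n_stars, years_per_generation):
    """Total elapsed time if each doubling takes years_per_generation."""
    return generations_to_fill(n_stars) * years_per_generation


generations = generations_to_fill(1e11)
total_years = fill_time_years(1e11, 500_000)

print(f"Doublings needed: {generations}")
print(f"Elapsed time:     {total_years / 1e6:.1f} million years")
```

Even with a launch only once per half-million years, fewer than forty doublings are needed, because the growth is exponential while the wait between launches stays fixed.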

Thus even the most conservative assumptions for interstellar flight using speeds not much beyond what we can achieve with a Jupiter gravity assist today still create the opportunity for technology to spread far beyond the planet of its origin. As the authors are quick to point out, the Drake equation cannot capture this spreading, and the search space for technosignatures could vastly outnumber that for biological life.

Lifetimes Civilizational and Technological

Looming over discussion of the Drake equation has always been the issue of the lifetime of a technological civilization, the L factor. How likely would we be to pick up a signal from another civilization if our own is threatened at this comparatively early stage of its growth by factors like nuclear or biological war? The Fermi question may be answered simply enough by saying that no technological species lives very long.

Here it’s fair to ask how much we are projecting human tendencies onto our extraterrestrial counterparts. This gets intriguing. The collapse of civilization would be a dire event, but absent actual extinction, our species might recover or, indeed, re-develop the technologies that once proliferated. The time between catastrophe and potential recovery is not known, but such events do not put a fixed limit on a civilization’s lifetime. Even if we assume that technological civilizations will roughly track our own, we may understand our own only imperfectly. From the paper:

…humanity is the first species on Earth that can prevent its own extinction with technology, for instance by diverting asteroids, stopping or mitigating pandemics, or building “lifeboat” settlements elsewhere in the solar system or beyond (Baum et al. 2015; Turchin & Green 2017; Turchin & Denkenberger 2018). This means that the upper limit on our technology’s survival is essentially unlimited in theory, even in the face of inevitable natural catastrophes. Apart from these modern examples, Earth-analogs from human history teach us that a technological downshift—to temporarily become less technological until circumstances improve—is a common and healthy adaptation to catastrophe in human history and that technology and longevity are in this way inextricably linked…

Nor can we rule out the possibility that the Earth could develop other species beyond our own in the future that can produce a technological society following humanity’s extinction. For that matter, are we so sure about our past? If there have been prior periods of technology on Earth, the processes of time over millions of years would likely have eradicated them. Thus using our experience on Earth as the model for the Drake L factor is inadvisable because of how little we know about L for our own planet.

Technosignatures can outlast the beings that create them, and as the authors point out, the ones we produce are already on a par with Earth’s biosignatures in terms of detectability. While we would not be able to detect the biosignatures of Earth from Alpha Centauri’s distance, the final iteration of the Square Kilometre Array should be sensitive enough to pick up our radars at distances of several parsecs, and an advanced space telescope within our engineering capabilities now (such as the proposed LUVOIR) might be able to detect atmospheric pollution at 10 parsecs.

It seems a safe assumption that if our biosignatures and technosignatures are roughly comparable in terms of detectability today, the advance of technology as a species continues to innovate should produce ever more robust technosignatures. We cannot, in other words, assume a biology-like trajectory, as implicit in the Drake equation, for the evolution of technosignatures and their detectability through SETI. Indeed:

…the spread of technology could reasonably imply that the number of sites of technosignatures might be larger than that of biosignatures, potentially by a factor of as much as > 10^10 if the galaxy were to be virtually filled with technology.

No wonder some authors have considered adding a ‘spreading factor’ to the Drake equation, which accounts for the possibility of technologies moving far beyond their home worlds. Thus one technosphere produces myriad technosignatures, while the Drake equation in its classic form inevitably does not account for such growth. If the equation assumes life emerges and stays on its home world, the authors of this paper see technology as having a separate evolutionary arc which potentially takes it far into the galaxy in ever proliferating form.
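To make the idea concrete, here is a minimal sketch of the classic Drake product with a multiplicative spreading factor bolted on. How such a factor should actually enter the equation is an assumption on my part, and every numerical value below is a placeholder chosen only to make the arithmetic visible; none of them comes from the paper.

```python
# Classic Drake product plus a hypothetical multiplicative spreading factor.
# ASSUMPTION: all parameter values are placeholders, not estimates.


def drake_number(r_star, f_p, n_e, f_l, f_i, f_c, lifetime_years):
    """Number of communicating civilizations in the classic formulation."""
    return r_star * f_p * n_e * f_l * f_i * f_c * lifetime_years


def technosignature_sites(n_civilizations, spreading_factor):
    """Sites showing technosignatures if each technosphere spreads to many."""
    return n_civilizations * spreading_factor


n_civ = drake_number(r_star=2.0, f_p=1.0, n_e=0.5, f_l=0.1, f_i=0.1,
                     f_c=0.1, lifetime_years=10_000)
sites_home_only = technosignature_sites(n_civ, spreading_factor=1)
sites_spread = technosignature_sites(n_civ, spreading_factor=1_000)

print(f"Civilizations (placeholder inputs): {n_civ:.0f}")
print(f"Sites with no spreading:            {sites_home_only:.0f}")
print(f"Sites with a 1000x spreading factor: {sites_spread:.0f}")
```

The point is structural rather than numerical: the classic product counts points of origin, while a spreading term counts places where technology ends up.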

While the search for biosignatures continues, it makes sense given all these factors for technosignatures to remain under active investigation, and to encourage the astrobiology and SETI communities to engage with each other in the common pursuit of extraterrestrial life. Comparative and cooperative analysis should enhance the work of both disciplines.

The paper is Wright et al., “The Case for Technosignatures: Why They May Be Abundant, Long-lived, Highly Detectable, and Unambiguous,” Astrophysical Journal Letters 927, L30 (10 March 2022). Full text.

A New Title on Extraterrestrial Intelligence

Just a quick note for today as I finish up tomorrow’s long post. But I did want you to be aware of this new title, Extraterrestrial Intelligence: Academic and Societal Implications, which has connections with recent topics and will again tomorrow, when we discuss a new paper from Jason Wright and SETI colleagues on technosignatures. As with the recent biography of John von Neumann, I haven’t had the chance to read this yet, but it’s certainly going on the list. The book is out of Cambridge Scholars Publishing. Here’s the publisher’s description:

What are the implications for human society, and for our institutions of higher learning, of the discovery of a sophisticated extraterrestrial intelligence (ETI) operating on and around Earth? This book explores this timely question from a multidisciplinary perspective. It considers scientific, philosophical, theological, and interdisciplinary ways of thinking about the question, and it represents all viewpoints on how likely it is that an ETI is already operating here on Earth. The book’s contributors represent a wide range of academic disciplines in their formal training and later vocations, and, upon reflection on the book’s topic, they articulate a diverse range of insights into how ETI will impact humankind. It is safe to say that any contact or communication with ETI will not merely be a game changer for human society, but will also be a paradigm changer. This means that it makes sense for human beings to prepare themselves now for this important transition.

Important indeed, but how demoralizing to see another title at a stiff tariff: £63.99 (that’s about $84 US). I will spare you my thoughts on the academic side of publishing, and in the meantime see if I can get a review copy, as I assume most Centauri Dreams readers aren’t going to want to pony up this amount for a book they know little about (although if you live near a good academic library, this one should turn up there).

Modeling a Habitable Planet at Centauri A/B

Why is it so difficult to detect planets around Alpha Centauri? Proxima Centauri is one thing; we’ve found interesting worlds there, though this small, dim star has been a tough target, examined through decades of steadily improving equipment. But Centauri A and B, the G-class and K-class central binary here, have proven impenetrable. Given that we’ve found over 4500 planets around other stars, why the problem here?

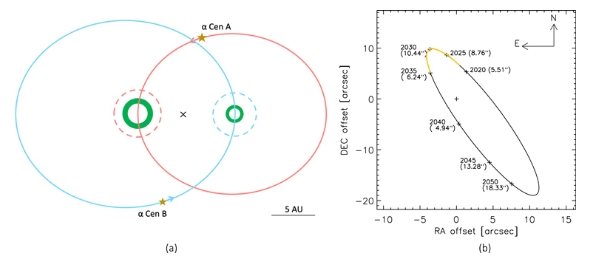

Proximity turns out to be a challenge in itself. Centauri A and B are in an orbit around a common barycenter, angled such that the light from one will contaminate the search around the other. It’s a 79-year orbit, with the distance between A and B varying from 35.6 AU to 11.2 AU. You can think of them as, at their furthest, separated by the Sun’s distance from Pluto (roughly), and at their closest, by about the distance to Saturn.
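For readers who like to check the numbers, here is a minimal sketch of what those figures imply, assuming that 35.6 AU and 11.2 AU are the farthest and closest separations of the relative orbit and that the period is 79 years. The function name is my own invention for illustration. Kepler's third law in solar units (AU, years, solar masses) then gives a rough combined mass for the pair.

```python
# Rough orbital elements for Centauri A/B from the figures quoted above.
# Assumption: 35.6 AU and 11.2 AU are the apoapsis and periapsis
# separations of the relative orbit, and the period is 79 years.

def orbit_from_extremes(r_max_au, r_min_au, period_yr):
    """Return (semi-major axis in AU, eccentricity, total mass in solar masses)."""
    # The semi-major axis is the mean of the extreme separations.
    a = (r_max_au + r_min_au) / 2.0
    # Eccentricity follows from r_max = a(1 + e) and r_min = a(1 - e).
    e = (r_max_au - r_min_au) / (r_max_au + r_min_au)
    # Kepler's third law in solar units: M_total = a^3 / P^2.
    total_mass = a**3 / period_yr**2
    return a, e, total_mass

a, e, m = orbit_from_extremes(35.6, 11.2, 79.0)
print(f"semi-major axis: {a:.1f} AU")
print(f"eccentricity:    {e:.2f}")
print(f"combined mass:   {m:.2f} solar masses")
```

The result is a semi-major axis of about 23.4 AU, an eccentricity near 0.52, and a combined mass of roughly two Suns, which is what we would expect for a G-class and K-class pair.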

The good news is that we have a window from 2022 to 2035 in which, even as our observing tools continue to improve, the parameters of that orbit as seen from Earth will separate Centauri A and B enough to allow astronomers to overcome light contamination. I think we can be quite optimistic about what we’ll find within the decade, assuming there are indeed planets here. I suspect we will find planets around each, but whether we find something in the habitable zone is anyone’s guess.

Image: This is Figure 1 from today’s paper. Caption: (a) Trajectories of α-Cen A (red) and B (blue) around their barycenter (cross). The two stars are positioned at their approximate present-day separation. The Hill spheres (dashed circles) and HZs (nested green circles) of A and B are drawn to scale at periapsis. (b) The apparent trajectory of B centered on A, with indications of their apparent separation on the sky over the period from CE 2020 to 2050. The part of trajectory in yellow indicates the coming observational window (CE 2022–2035) when the apparent separation between A and B is larger than 6″ and the search for planets around A or B can be conducted without suffering significant contamination from the respective companion star. Credit: Wang et al.

If we don’t yet have a planet detection around the binary Centauri stars, we continue to explore the possibilities even as the search continues. Thus a new paper from Haiyang Wang (ETH Zurich), who along with colleagues at the university has been modeling the kind of rocky planet in the habitable zone that we hope to find there. The idea is to create the benchmarks that predict what this world should look like.

The numerical modeling involved examines the composition of the hypothetical world, drawing on what we do know, based on spectroscopic measurements, of the chemical composition of Centauri A and B. Here there is a great deal of information to work with, especially on so-called refractory elements, the iron, magnesium and silicon that go into rock formation. Centauri A and B are among the Gaia “benchmark stars” for which stellar properties have been carefully calibrated, and up to 22 elements have been found in high-quality spectra, so we know a lot about their chemical makeup.

But a key issue remains. While rocky planets are known to have rock and metal chemical compositions similar to that of their host stars, there is no necessary correspondence when it comes to the readily vaporized volatile elements. The authors suggest that this is because the process of planetary formation and evolution quickly does away with key telltale volatiles.

The researchers thus develop their own ‘devolatilization model’ to project the possible composition of a supposed habitable zone planet around Centauri A and B, linking stellar composition with both volatile and refractory elements. The model grew out of Wang’s work with Charley Lineweaver and Trevor Ireland at the Australian National University in Canberra, and it continues at Wang’s current venue at ETH. This is fundamentally new ground that extends our notions of exoplanet composition.

Wang and team call their imagined world ‘α-Cen-Earth,’ delving into its internal structure, mineralogy and atmospheric composition, all factors in evolution and habitability. The findings reveal a planet that is geochemically similar to Earth, with a silicate mantle, although carbon-bearing species like graphite and diamond are enhanced. Water storage in the interior is roughly the same as Earth’s, but the deduced world has a somewhat larger iron core along with a possible lack of plate tectonics. Indeed, “…the planet may be in a Venus-like stagnant-lid regime, with sluggish mantle convection and planetary resurfacing, over most of its geological history.”

As to the atmosphere of the hypothetical world that grows out of Wang’s model, its early era shows an envelope rich in carbon dioxide, methane and water, which harks back to the Earth’s atmosphere in the Archean era, between 4 and 2.5 billion years ago. That gives life a promising start if we assume abiogenesis occurring in a similar environment.

Image: α Centauri A (left) and α Centauri B viewed by the Hubble Space Telescope. At a distance of 4.3 light-years, the α Centauri group (which includes also the red dwarf α Centauri C) is the nearest star system to Earth. Credit: ESA/Hubble & NASA.

How far can we take a model like this? We may soon have data to measure it against, but it’s worth remembering what the paper’s authors point out. After noting that planets around the “Sun-like” Centauri A and B cannot be extrapolated from the already known planets around the red dwarf Proxima Centauri, they go on to say:

Second, although α Cen A and B are “Sun-like” stars, their metallicities are ~72% higher than the solar metallicity (Figure 3). How this difference would affect the condensation/evaporation process, and thus the devolatilization scale, is the subject of ongoing work (Wang et al. 2020b).

That’s a big caveat and a useful pointer to the needed clarification that further work on the matter should bring – metallicity is obviously significant. The paper adds:

Third, we ignore any potential effect of the “binarity” of the stars on their surrounding planetary bulk chemistry during planet formation, even though we highlight that, dynamically, the planetary orbits in the HZ around either companion are stable. Finally, we have yet to explore a larger parameter space, e.g., in mass and radius, but have only benchmarked our analysis with an Earth-sized planet, which would otherwise have an impact on the interior modeling…

So we’re in early days with planet modeling using these methods, which are being examined and extended through the team’s collaborations at Switzerland’s National Centre of Competence in Research PlanetS. Note too that the authors do not inject any catastrophic impact into their model of the sort that could affect a planet’s mantle, its atmosphere, or both, with dramatic consequences for the outcome. We know from the Earth’s experience in the Late Heavy Bombardment that this can be a factor.

With all this in mind, it’s fascinating to see the lines of observation and theory converging on the Alpha Centauri binary pair. Finding a habitable zone planet around Proxima Centauri was exhilarating. How much more so to go beyond the many imponderables of red dwarf planet habitability to two stars much more like our Sun, each of which might have a planet in its habitable zone? The Alpha Centauri triple system may turn out to be a bonanza, showing us both red dwarf and Sun-like planetary outcomes in a single system that just happens to be the closest to us.

The paper is Wang et al., “A Model Earth-sized Planet in the Habitable Zone of α Centauri A/B,” The Astrophysical Journal Vol. 927, No. 2 (10 March 2022). Abstract/Full Text. Preprint also available.

Why Fill a Galaxy with Self-Reproducing Probes?

We can’t know whether there is a probe from another civilization – a von Neumann probe of the sort we discussed in the previous post – in our own Solar System unless we look for it. Even then, though, we have no guarantee that such a probe can be found. The Solar System is a vast place, and even if we home in on the more obvious targets, such as the Moon, and near-Earth objects in stable orbits, a well hidden artifact a billion or so years old, likely designed not to draw attention to itself, is a tricky catch.

As with any discussion of extraterrestrial civilizations, we’re left to ponder the possibilities and the likelihoods, acknowledging how little we know about whether life itself is widely found. One question opens up another. Abiogenesis may be spectacularly rare, or it may be commonplace. What we eventually find in the ice moons of the outer system should offer us some clues, but widespread life doesn’t itself translate into intelligent, tool-making life. But for today, let’s assume intelligent toolmakers and long-lived societies, and ponder what their motives might be.

Let’s also acknowledge the obvious. In looking at motivations, we can only peer through a human lens. The actions of extraterrestrial civilizations, and certainly their outlook on existence itself, would be opaque to us. They would possibly act in ways we consider inexplicable, for reasons that defy the logic we apply to human decisions. But today’s post is a romp into the conjectural, and it’s a reflection of the fact that being human, we want to know more about these things and have to start somewhere.

Motivations of the Probe Builders

Greg Matloff suggests in his paper on von Neumann probes that one reason a civilization might fill the galaxy with these devices is the possibly universal wish to transcend death. A walk through the Roman ruins scattered around what was once the province of Gaul gave weight to the concept when my wife and I prowled round the south of France some years back. Humans, at least, want to put down a marker. They want to be remembered, and their imprint upon a landscape can be unforgettable.

But in von Neumann terms, I have trouble with this one. I stood next to a Roman wall near Saint-Rémy-de-Provence on a late summer day and felt the poignancy of all artifacts worn by time, but the Romans were decidedly mortal. They knew death was a horizon bounding a short life, and could transcend it only through propitiations to their gods and monuments to their prowess. A civilization that is truly long-lived, defined not by centuries but aeons, may have less regard for personal aggrandizement and even less sense of a coming demise. Life might seem to stretch indefinitely before it.

Image: Some of the ruins of the Roman settlement at Glanum in Saint-Rémy-de-Provence, recovered through excavations beginning in 1921. Walking here caused me to reflect on how potent memorials and monuments would be to a species that had all but transcended death. Would the impulse to build them be enhanced, or would it gradually disappear?

Probes as a means of species reproduction, another Matloff suggestion, ring more true to me, and I would suggest this may flag a biological universal, the drive to preserve the species despite the death of the individual. Here we’re in familiar science fiction terrain in which biological material is preserved by machines and flung to the stars, to be activated upon arrival and raised to awareness by artificial intelligence. Or we could go further – Matloff does – to say that biological materials may prove unnecessary, with computer uploads of the minds of the builders taking their place, another SF trope.

I can go with that as a satisfactory motivator, and it’s enough to make me want to at least try to find what Jim Benford calls ‘lurkers’ in our own corner of the galaxy. Another motivator that deeply satisfies me because it’s so universal among humankind is simple curiosity. A long-lived, perhaps immortal civilization that wants to explore can send von Neumann probes everywhere possible in the hope of learning everything it can about the universe. Encyclopedia Galactica? Why not? Imputing any human motive to an extraterrestrial civilization is dangerous, of course, but we have little else to go on. And centuries of human researchers and librarians attest to the power of this one.

Would such probes be configured to establish communication with any societies that arise on the planets under observation? This is the Bracewell probe notion that extends von Neumann self-reproduction to include this much more immediate form of SETI, with potential knowledge stored at planetary distances. Obviously, 2001: A Space Odyssey comes to mind as we recall the mysterious monoliths found on the early Earth and, much later, on the Moon, and the changes to humanity they portend.

But are long-lived civilizations necessarily friendly? Fred Saberhagen’s ‘berserker’ probes key off the Germanic and particularly Norse freelance bodyguards and specialized troops that became fixtures at the courts of royalty in early medieval times (the word is from the Old Norse word meaning ‘bearskin’). These were not guys you wanted to mess with, and associations with their attire of bear and wolfskins seem to have contributed to the legend of werewolves. Old Norse records show that they were prominent at the court of Norway’s king Harald I Fairhair (reigned 872–930).

Because they made violence into a way of life, we should hope not to find the kind of probe that would be named after them, which might be sent out to eliminate competition. Thus Saberhagen’s portrayal of berserker probes sterilizing planets just as advanced life begins to appear. The fact that we have not yet been sterilized may be due to the possibility that such a probe does not yet consider us ‘advanced,’ but more likely implies we have no berserker probes nearby. Let’s hope to keep it that way.

Or what about the spread of life itself? If abiogenesis does turn out to be unusually rare, it’s possible that any civilization with the power to do so would decide to seed the cosmos with life. In this case, we’re not sending uploaded intelligence or biological beings in embryonic form in our probes, but rather the most basic lifeforms that can proliferate on any planets offering the right conditions for their development. Perhaps there emerges an imperative – written about, for example, by Michael Mautner and Matloff himself – to spread life as a way to transform the cosmos. Milan Ćirković continues to explore the implications of just such an effort.

In an interesting post in Sentient Developments, Canadian futurist George Dvorsky points out that self-reproduction has more than an outward-looking component. Suppose a civilization interested in building a megastructure – a Dyson sphere, let’s say – decides to harness self-reproduction to supply the needed ‘worker’ devices that would mine the local stellar system and create the object in question.

At a truly cosmic level, Matloff speculates, self-replicating probes might be deployed to build megastructures that could alter the course of cosmic evolution. We’re in Stapledon territory now, freely mixing philosophy and wonder. We’re also in the arena claimed by Frank Tipler in his The Physics of Immortality (Doubleday, 1994).

We’ll want to search the Earth Trojan asteroids and co-orbitals for any indication of extraterrestrial probes, though it’s also true that the abundant resources of the Kuiper Belt might make operations there attractive to this kind of intelligence. One of the biggest questions has to do with the size of such probes. Here I’ll quote Matloff:

In a search for active or quiescent von Neumann probes in the solar system, human science would contend with great uncertainty regarding the size of such objects. Some science fiction authors contend that these devices might be the size of small planetary satellites (see for example L. Johnson, Mission to Methone and A. Reynolds, Pushing Ice). On the other hand, Haqq-Misra and Kopparapu (2012) believe that they may be in the 1-10 m size range of contemporary human space probes and these might be observable.

But there may be a limit to von Neumann probe detection. If they can be nano-miniaturized as suggested by Tipler (1994), the solar system might swarm with them and detection efforts would likely fail.

I remember having a long phone conversation two decades ago with Robert Freitas on this very point. Freitas had originally come up with a self-reproducing probe concept at the macro-scale called REPRO, but went on to delve into the implications of nano-technology. He made Matloff’s point in our discussion: If probe technologies operate at this scale, the surface of planet Earth itself could be home to an observing network about which we would have no awareness. Self-reproductive probes will be hard to rule out, but looking where we can to screen for the obvious makes sense.

The paper is Matloff, “Von Neumann probes: rationale, propulsion, interstellar transfer timing,” International Journal of Astrobiology, published online by Cambridge University Press 28 February 2022 (abstract).