Centauri Dreams

Imagining and Planning Interstellar Exploration

A Stellar Analogue to the Young Sun

Vladimir Airapetian, senior astrophysicist in the Heliophysics Division at NASA’s Goddard Space Flight Center, has a somewhat unusual ambition. Most attention related to finding a ‘second Earth’ revolves around locating a world not only similar to ours in its characteristics but also similarly situated in terms of its host star’s evolution. In other words, a rocky world scorched by its star’s transition to red giant status isn’t a true analogue of our own, but a glimpse of what it will be at another stage.

What Airapetian has in mind, though, is going in the other direction. His projected Earth analogue is one that mimics what our planet was in its early days, not all that long after the birth of its stellar system. It’s an ambition that points to learning where we came from, and thus what we might expect when we see a system like ours evolving around other stars. It has led to a search for a star like the Sun in its infancy. Says Airapetian:

“It’s my dream to find a rocky exoplanet in the stage that our planet was in more than 4 billion years ago, being shaped by its young, active star and nearly ready to host life. Understanding what our Sun was like just as life was beginning to develop on Earth will help us to refine our search for stars with exoplanets that may eventually host life.”

Image: Illustration of what the Sun may have been like 4 billion years ago, around the time life developed on Earth. Credit: NASA’s Goddard Space Flight Center/Conceptual Image Lab.

Space-based instruments supplied the data as the GSFC team adapted existing solar models to study the young star Kappa 1 Ceti, about 30 light years away. Inputs from Hubble, TESS (Transiting Exoplanet Survey Satellite), NICER (Neutron star Interior Composition Explorer, installed aboard the ISS) and the European Space Agency’s XMM-Newton observatory refined the model.

Kappa 1 Ceti is a G-class star in the constellation Cetus with a rapid rotation period of about nine days. Our Sun rotates in 27 days, but in its youth it is thought to have rotated three times faster. The two stars have roughly the same mass, and Kappa 1 Ceti has 95 percent of the Sun’s radius and about 85 percent of its luminosity. An early Sun would have had a stronger magnetic field and a powerful solar wind, which would have affected the magnetosphere and thus the atmospheric chemistry of the young Earth.

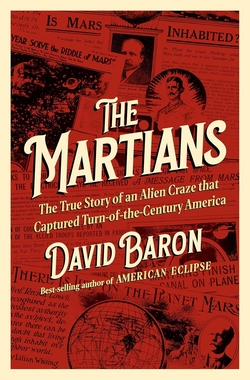

Consider: Earth in its infancy, though receiving less solar heat than today, would have been subject to more high-energy particles and radiation from the young Sun. High solar activity would cause particles to slam into Earth’s nitrogen atmosphere, wreaking molecular change: breaking nitrogen molecules into atoms and splitting carbon dioxide into carbon and oxygen. Nitrogen combined with free oxygen yields nitrous oxide, a greenhouse gas that would boost the planet’s temperatures and perhaps assist the formation of life.

Image: An artist concept of a coronal mass ejection hitting the young Earth’s weak magnetosphere. Credit: NASA/GSFC/CIL.

Airapetian’s team used the Alfvén Wave Solar Model, developed within a larger model of space weather originally created at the University of Michigan, described in the paper as “a first-principles global model that describes the stellar atmosphere from the top of the chromosphere and extends it into the heliosphere beyond Earth’s orbit.”

Data inputs include known information about a star’s magnetic field and data on its ultraviolet emissions, both of which can be used to predict otherwise unobservable stellar wind activity. The team tested its model against data on the Sun to validate its predictions, finding that it successfully tracks the Sun’s solar wind and corona.

The model allows the researchers to predict how a star’s stellar wind and corona affect a planet’s magnetic shield, and thus its potential habitability. The key finding: in addition to the coronal mass ejection (CME) events that blow plasma outward from the star and can produce atmospheric loss on young planets, the model reveals Corotating Interaction Regions (CIRs) that can directly impact such worlds.

CIRs from the young star produce a dynamic pressure 1380 times that of the CIRs generated by our own mature Sun. The effects would be significant:

This is a very important result as CIRs cause magnetic storms on Earth… The initiation of magnetic storms is associated with enhanced dynamic pressure that compress[es] the Earth’s magnetosphere and cause[s] the induction of ionospheric currents and results in Joule heating of the ionospheric and thermospheric layers of the planet.
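To get a rough feel for what a 1380-fold jump in dynamic pressure means, the textbook Chapman-Ferraro pressure balance helps. In that approximation, the magnetopause standoff distance of a dipole field scales as the inverse sixth power of the upstream dynamic pressure, P = n·m_p·v². The sketch below assumes illustrative present-day solar wind values (5 protons per cm³ at 400 km/s), a present-day standoff near 10 Earth radii, and an unchanged planetary dipole. All of these are my assumptions, not inputs from the paper, whose model is far more detailed.

```python
"""Rough pressure-balance scaling of magnetopause compression.

Assumptions (illustrative, not from the paper): a fixed dipole field,
the Chapman-Ferraro scaling r ~ P**(-1/6), and typical present-day
solar wind parameters.
"""

PROTON_MASS_KG = 1.67262192e-27


def dynamic_pressure_pa(density_per_cm3: float, speed_km_s: float) -> float:
    """Dynamic pressure P = n * m_p * v**2 in pascals (protons only)."""
    n_per_m3 = density_per_cm3 * 1e6
    v_m_s = speed_km_s * 1e3
    return n_per_m3 * PROTON_MASS_KG * v_m_s ** 2


def compressed_standoff(r0_earth_radii: float, pressure_ratio: float) -> float:
    """Standoff distance after the dynamic pressure rises by pressure_ratio."""
    return r0_earth_radii * pressure_ratio ** (-1.0 / 6.0)


if __name__ == "__main__":
    p_now = dynamic_pressure_pa(5.0, 400.0)  # assumed typical solar wind
    ratio = 1380.0                           # CIR pressure ratio quoted above
    print(f"present-day dynamic pressure ~ {p_now * 1e9:.2f} nPa")
    print(f"young-star CIR pressure     ~ {p_now * ratio * 1e9:.0f} nPa")
    print(f"standoff: 10.0 R_E -> {compressed_standoff(10.0, ratio):.1f} R_E")
```

Under these assumptions the standoff shrinks from about 10 to about 3 Earth radii. That helps convey, in very rough terms, why the authors compare CIR effects to those of the strongest CMEs.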

The changes in the magnetosphere produced by these CIRs are comparable to the strongest coronal mass ejections, with results including strong fluxes of electrons and protons into the upper atmosphere:

These processes can be crucial in evaluating the magnetospheric states of exoplanets around active stars because induced current dissipation will enhance the atmospheric escape from Earth-like exoplanets around active stars and can be critical for habitability conditions for rocky exoplanets in close-in habitable zones around red dwarfs.

Airapetian and team have developed a way of modeling the stellar environments of a range of young G- and M-class stars, which opens the way to future mapping of stars at various stages of their lives. The researchers specifically mention their interest in EK Dra, a 100-million-year-old star 111 light years out that is producing more flares and plasma than Kappa 1 Ceti.

While Airapetian says his team’s work involves “looking at our own Sun, its past and its possible future, through the lens of other stars,” it also points in the other direction. It offers a way of examining infant exoplanet systems where life may have the potential to develop, and thus of guiding our list of targets for future observing efforts.

The paper is Airapetian et al., “One Year in the Life of Young Suns: Data-constrained Corona-wind Model of κ1 Ceti,” Astrophysical Journal Vol. 916, No. 2 (3 August 2021), 96 (abstract / preprint).

Celebrating the Event Horizon Telescope

The X-ray ‘echoes’ from the Seyfert galaxy I Zwicky 1 occupied us on Friday, but today I want to explore the larger context of black hole research following the news about the relatively nearby active galaxy called Centaurus A. Whereas the X-ray work took data from two X-ray telescopes, NuSTAR and XMM-Newton, the Centaurus A investigation gives us another startling image from the instrument that, to my mind, has the coolest name of any observing tool: the Event Horizon Telescope.

It was the virtual EHT, of course, that produced the first image of a black hole, the supermassive object at the center of M87. The same observing campaign in 2017 produced the data used in the new paper on Centaurus A. At some 10-13 million light years, Centaurus A is — at radio wavelengths — one of the largest and brightest objects in the sky. Its central black hole is thought to mass about 55 million suns. By contrast, the EHT researchers have estimated the black hole in M87’s center to be 6.5 billion times more massive than the Sun, while our own Sgr A* — the Milky Way’s supermassive black hole — is thought to mass on the order of a ‘mere’ 4.3 million solar masses.

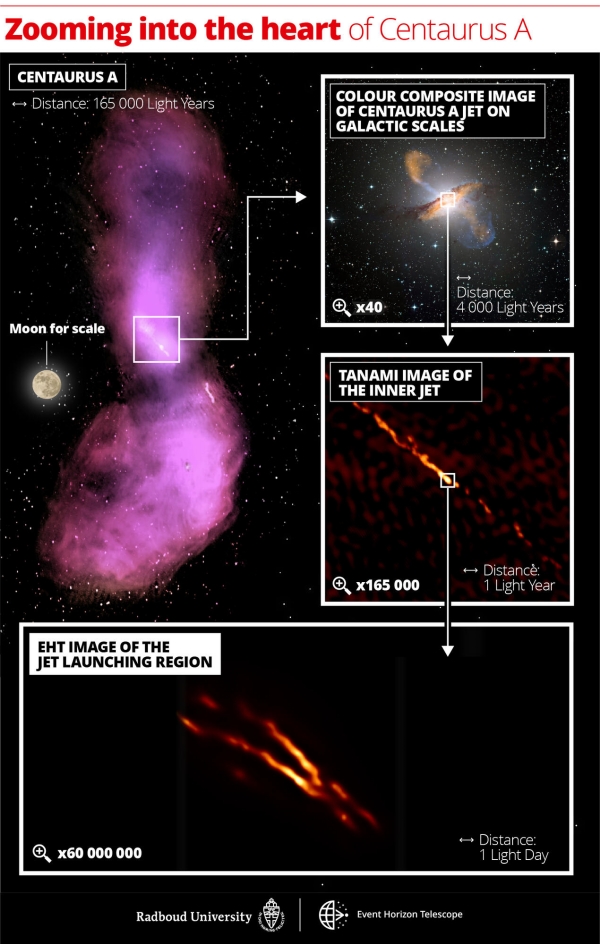

The Centaurus A study, led by Michael Janssen (Max Planck Institute for Radio Astronomy and Radboud University, Nijmegen), presents intriguing data on the galaxy’s enormous jet, as shown in the image below. Notice the range of observatories involved in this work, as listed in the caption. Here the Event Horizon Telescope data are supplemented by ground-based and space-based equipment, a reminder of how essential collaboration is when mounting a global interferometric effort and teasing the greatest amount of information out of the result.

Image: Distance scales uncovered in the Centaurus A jet. The top left image shows how the jet disperses into gas clouds that emit radio waves, captured by the ATCA and Parkes observatories. The top right panel displays a color composite image, with a 40x zoom compared to the first panel to match the size of the galaxy itself. Submillimeter emission from the jet and dust in the galaxy measured by the LABOCA/APEX instrument is shown in orange. X-ray emission from the jet measured by the Chandra spacecraft is shown in blue. Visible white light from the stars in the galaxy has been captured by the MPG/ESO 2.2-metre telescope. The next panel below shows a 165,000x zoom image of the inner radio jet obtained with the TANAMI telescopes. The bottom panel depicts the new highest resolution image of the jet launching region obtained with the EHT at millimeter wavelengths with a 60,000,000x zoom in telescope resolution. Indicated scale bars are shown in light years and light days. Credit: Radboud Univ. Nijmegen; CSIRO/ATNF/I. Feain et al., R. Morganti et al., N. Junkes et al.; ESO/WFI; MPIfR/ESO/APEX/A. Weiß et al.; NASA/CXC/CfA/R. Kraft et al.; TANAMI/C. Müller et al.; EHT/M. Janßen et al.

Supermassive black holes drive the release of vast amounts of energy as some of the gas and dust near the accretion disk is blown into space in the form of jets. There is plenty to work with in the EHT data, and new questions are raised: only the outer edges of the jet appear to emit radiation, another reminder of how much we have to learn about the processes at play in this phenomenon. But let’s home in for now on the quality of the imagery.

Compared to previous work, we now see the jet at Centaurus A at 16 times sharper resolution than ever before. The magnification factor here is one billion. EHT astronomers believe they can locate the black hole itself at the launching point of the jet. Future observations at shorter wavelengths and still higher resolution, incorporating space-based observatories, will explore that location.

The technique at Centaurus A, as at M87, was Very Long Baseline Interferometry (VLBI). If black hole jets occur at the stupendous scale we see here, it’s fitting that a planet-sized aperture would be used to see one of them up close. The Event Horizon Telescope originally joined eight telescopes around the globe to create this virtual capability, with other radio dishes coming into play as observations continued. More than 300 researchers are involved in the effort worldwide.

We have to go back to 1971 to find the roots of the Event Horizon Telescope, as Heino Falcke points out in his book Light in the Darkness (HarperOne, 2021). This was when Donald Lynden-Bell and Martin Rees predicted that VLBI techniques on the scale of a continent could be used to discover a compact radio source like a black hole at the center of our own galaxy. Seyfert galaxies, bright with radio plasma, were suspected of hosting central black holes, part of a grouping of galaxies known to possess active galactic nuclei (AGN). It made sense that the Milky Way might have its own black hole. Three years later a compact radio source was discovered there.

Thus we learned about Sagittarius A*, a fascinating find but one whose image from Earth was compromised by the dust and hot gas along the galactic disk. Falcke’s account of the move toward shorter-wavelength observations of an object that was dark at almost every wavelength except radio makes for fascinating reading. Puzzles abounded. If Sagittarius A* were a black hole, it seemed a weak one. Quasar 3C 273, if placed in the Milky Way, would be 40 million times brighter.

Falcke wrote his doctoral thesis around the question of what he describes in his book as ‘starved black holes.’ If Sagittarius A* is no more than a weak glimmer compared to some, the implication is that the black hole is simply not drawing in the matter needed to produce a stronger signature. If the average quasar consumed one sun per year, his calculations showed, our black hole must take in ten million times less mass. That’s the equivalent of about three moons per year. From the book:

…contrary to popular belief, black holes generally aren’t wildly voracious monsters: they’re very well behaved and eat only what they’re served. In our imagination, black holes might be giant, but compared to an entire galaxy they’re just little chicks. And like chicks in the nest, black holes must wait for food, must wait for their mother galaxy to feed them with dust and stars. If this doesn’t happen, they waste away, go dark and quiet, and stop growing — just like Sagittarius A*. But they don’t die.
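Falcke’s figures are easy to check. The sketch below takes the quoted quasar rate of one solar mass per year and the ten-million-fold reduction. It uses standard values for the masses of the Sun and Moon, which are my inputs rather than figures from the book.

```python
"""Check the 'three moons per year' arithmetic for a starved black hole."""

SOLAR_MASS_KG = 1.989e30
MOON_MASS_KG = 7.342e22


def moons_per_year(quasar_rate_msun_per_yr: float, suppression: float) -> float:
    """Accretion rate, in lunar masses per year, of a black hole that
    swallows `suppression` times less than a quasar does."""
    rate_kg_per_yr = quasar_rate_msun_per_yr * SOLAR_MASS_KG / suppression
    return rate_kg_per_yr / MOON_MASS_KG


print(f"{moons_per_year(1.0, 1e7):.1f} lunar masses per year")
```

The result comes out a little under three lunar masses per year, consistent with Falcke’s round figure.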

How do you build a globe-spanning interferometric network to study Sagittarius A*, or the huge M87* source at the center of that galaxy? With eight observatories around the planet, the radio signals have to be perfectly synchronized. The position of each observatory must be known to the millimeter, and the arrival time of the signals measured with atomic clocks of picosecond precision. As Falcke points out, such a radio telescope is assembled on a computer. Algorithms build the image.

And money builds the interferometer, or at least pays for the effort needed to coordinate a planet-sized project like the EHT. Countless meetings and strategy sessions took place, both online and in person, and proposals were generated, a process years in the making. Over that time M87 began to emerge as a viable target for the first attempt at imaging a black hole. Its black hole is two thousand times farther away than Sagittarius A*, some 55 million light years out, but it is also huge, and estimates of its size had continued to grow as work on the EHT concept went on.

The advantages were many: M87 was easier to see from the northern hemisphere, where most of the EHT’s telescopes were located. It was also not located along the line of sight of the Milky Way’s disk, making it a clearer target. It’s no surprise that, among the EHT’s sources, including Sgr A*, M87 should have become the one whose results were most anticipated.

Falcke brings to the evolution of the EHT the same intensity that Alan Stern brought to the scientific, political and financial effort to build New Horizons in his book Chasing New Horizons (Picador, 2018). His eye for detail makes the observing sites lively places indeed, while he clarifies the technical issues without jargon. This is a project collecting data at 32 gigabits per second, all recorded on hard drives for future processing. As Falcke puts it:

Only after a lengthy process does a tiny image emerge from the giant quantity of data — talk about data reduction! Really we’re only recording static: static from the sky, receiver static, and a small bit of static from the edge of the black hole. Thankfully a large part of the sky and receiver static can be filtered out when the data are processed afterward. The total energy of the static that such a telescope gathers from our cosmic radio source in one night is incomprehensibly small. It’s the equivalent of the energy produced by a strand of hair one millimeter long that falls from a height of half a millimeter in vacuum onto a glass plate. The impact will hardly scratch the glass, but we can measure it.
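The 32 gigabits per second recording rate gives a sense of the data volumes involved. The sketch below assumes that this rate applies to each station and that an observing night lasts a hypothetical 10 hours. Both are illustrative assumptions, not figures from Falcke’s book.

```python
"""Back-of-envelope data volume for a VLBI station recording at a fixed rate."""

BITS_PER_BYTE = 8


def terabytes_recorded(rate_gbit_s: float, hours: float) -> float:
    """Terabytes (10**12 bytes) written at rate_gbit_s over `hours` hours."""
    total_bits = rate_gbit_s * 1e9 * hours * 3600
    return total_bits / BITS_PER_BYTE / 1e12


per_station = terabytes_recorded(32.0, 10.0)  # hypothetical 10-hour night
print(f"one station, one night: ~{per_station:.0f} TB")
print(f"eight stations:         ~{8 * per_station / 1000:.2f} PB")
```

At that pace a single night fills on the order of a hundred terabytes per station. This helps explain why the data were written to hard drives for later processing.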

We know the outcome, but that doesn’t lessen the drama. In April 2017, the eight EHT observatories (two in Chile, two in Hawaii, one in Spain, one in Mexico, another in Arizona and a last one at the South Pole) all pointed at the target in M87. Falcke heads for Málaga, test runs begin via the global network, and the observations kick in. Because of their different locations, the eight telescopes cannot all observe simultaneously, so the work has to be staggered and the data synchronized afterward. Calibration tests on quasars are conducted, then the first observations of M87 begin. At Pico Veleta:

The telescope turns slowly toward the Virgo constellation, the second largest in the sky. We follow the motion on the display, transfixed. The telescope positions itself to find the right azimuth, or angle along the horizon; its elevation, or vertical angle, is perfect now as well. Same as with any other large movement, we have to do a little adjusting afterward. “Pico Veleta on source M87 and recording, pointing on nearby [quasar] 3C 273,” Krickbaum reports. The plots in the control room show plausible signal levels; the hard drives spin and fill up. A reassuring sign. On our instruments we see how the telescope follows the center of M87, moving counter to the Earth’s rotation. For hours now we swivel back and forth between M87 and a calibration quasar for scans of a few minutes each. Now everything seems to take care of itself.

As morning comes in Spain, the telescopes in Hawaii begin observing M87, for a time simultaneously with Pico Veleta before Spain shuts down. That Spain-Hawaii baseline spans 10,907 kilometers, Falcke points out, the longest distance in the network. Arizona and Hawaii keep recording data for hours more. And this is only round one. The weather intervenes, then moderates. The words ‘go for VLBI’ take on an urgency like a rocket liftoff.
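An interferometer’s diffraction-limited resolution is roughly the observing wavelength divided by its longest baseline. The sketch below uses the EHT’s 1.3-millimeter observing wavelength and the 10,907-kilometer baseline Falcke cites. The Earth’s 12,742-kilometer diameter is included for comparison. This is a minimal order-of-magnitude estimate, not the EHT’s formal beam calculation.

```python
"""Approximate angular resolution of an interferometer: theta ~ lambda / B."""

import math

MICROARCSEC_PER_RADIAN = math.degrees(1.0) * 3600 * 1e6


def resolution_microarcsec(wavelength_m: float, baseline_m: float) -> float:
    """Diffraction-limited resolution (lambda / B) in microarcseconds."""
    return wavelength_m / baseline_m * MICROARCSEC_PER_RADIAN


WAVELENGTH_M = 1.3e-3  # EHT observing wavelength (230 GHz)
for label, baseline_km in [("Spain-Hawaii", 10_907), ("Earth diameter", 12_742)]:
    theta = resolution_microarcsec(WAVELENGTH_M, baseline_km * 1e3)
    print(f"{label:15s} {baseline_km:6d} km -> ~{theta:.0f} microarcseconds")
```

Both cases land at a couple of dozen microarcseconds, the same order as the roughly 40-microarcsecond ring the EHT reported for M87.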

Consider that to make the Event Horizon Telescope work, you have to coordinate the position of each telescope relative to the sky as well as the time of arrival of the emissions being observed, which means modeling the motion of the Earth, taking into account pole-wandering due to the motion of its oceans and the effects of its atmosphere. Then a supercomputer goes to work looking for common oscillations. This means figuring out correlations between the received data to make sure they depict the same thing.
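The core correlation step can be illustrated with synthetic data. In the toy sketch below, two hypothetical stations record the same random ‘sky noise’ with an unknown relative delay, each adding its own independent receiver noise. An FFT-based cross-correlation then recovers the delay as the lag of the correlation peak. The sketch requires NumPy. It does not model anything a real EHT correlator also handles, such as Earth-rotation fringe rates, station clock models or gigahertz sampling. The sample counts and noise levels are arbitrary choices.

```python
"""Toy VLBI correlation: recover the delay between two noisy recordings."""

import numpy as np


def find_delay(a: np.ndarray, b: np.ndarray) -> int:
    """Return the lag (in samples) by which `b` trails `a`, found from
    the peak of their circular cross-correlation computed via FFT."""
    n = len(a)
    spectrum = np.fft.fft(b) * np.conj(np.fft.fft(a))
    xcorr = np.fft.ifft(spectrum).real
    lag = int(np.argmax(xcorr))
    return lag if lag <= n // 2 else lag - n  # map to a signed lag


rng = np.random.default_rng(seed=1)
n_samples = 4096
true_delay = 137  # hypothetical delay in samples

sky = rng.normal(size=n_samples)  # common signal from the source
station_a = sky + 2.0 * rng.normal(size=n_samples)  # plus receiver noise
station_b = np.roll(sky, true_delay) + 2.0 * rng.normal(size=n_samples)

print("recovered delay:", find_delay(station_a, station_b))
```

Each station’s own noise is stronger than the shared signal, yet the peak stands out. Summing over thousands of samples builds up the common component, while the independent noise tends to average out.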

As Falcke points out, being a millisecond off in your timing means searching through millions of alternatives, which is why analyzing the EHT’s data takes far longer than making the observations. Using quasars for calibration and testing is critical. It took nine months just to correlate the observations that produced the famous image of M87’s black hole, or I should say its ‘shadow,’ for no light can escape the black hole itself. The imaging teams also had to wrestle with the fact that there are numerous ways of turning these data into a final image. The observers faced technical glitches, variable weather and the need to schedule observing time at every observatory, no mean feat in itself. Despite all this, the result is an image with the resolution an aperture the size of the Earth would produce.

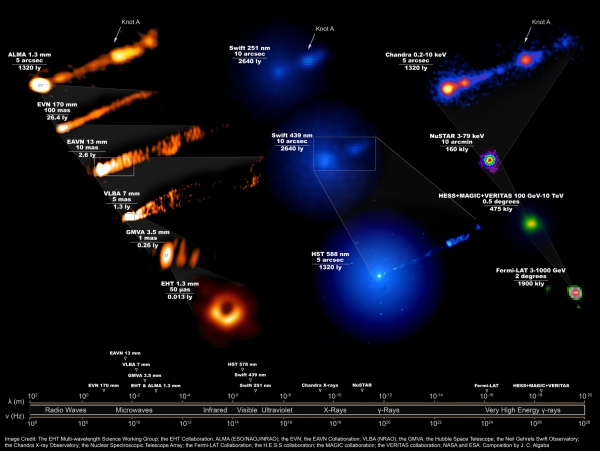

Image: Composite image showing how the M87 system looked, across the entire electromagnetic spectrum, during the Event Horizon Telescope’s April 2017 campaign to take the iconic first image of a black hole. Requiring 19 different facilities on the Earth and in space, this image reveals the enormous scales spanned by the black hole and its forward-pointing jet, launched just outside the event horizon and spanning the entire galaxy. Credit: the EHT Multi-Wavelength Science Working Group; the EHT Collaboration; ALMA (ESO/NAOJ/NRAO); the EVN; the EAVN Collaboration; VLBA (NRAO); the GMVA; the Hubble Space Telescope, the Neil Gehrels Swift Observatory; the Chandra X-ray Observatory; the Nuclear Spectroscopic Telescope Array; the Fermi-LAT Collaboration; the H.E.S.S. collaboration; the MAGIC collaboration; the VERITAS collaboration; NASA and ESA. Composition by J.C. Algaba.

It’s impressive how closely so many of the EHT team’s simulations match the image of M87 that emerged. As Falcke notes, the size of the object is directly tied to its mass. The ring of light is brightest at the bottom, where gas rotating around the black hole at nearly light speed moves towards us, so that its emission is focused and intensified. A mass of 6.5 billion suns gives a black hole whose shadow is about 100 billion kilometers across, with an event horizon whose circumference is four times that of Neptune’s orbit.
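The size-mass relation can be sketched with textbook formulas for a non-spinning (Schwarzschild) black hole. In that case the event horizon radius is 2GM/c², and the shadow seen by a distant observer has a diameter of about 2√27·GM/c² ≈ 10.4 GM/c². The sketch below assumes those formulas and a Neptune orbital radius of 30 AU. For comparison, it places Sgr A* at about 27,500 light years, the distance implied by the ‘two thousand times farther’ figure for M87. These inputs are my assumptions, not the EHT’s published analysis.

```python
"""Schwarzschild estimates for a black hole's horizon and shadow."""

import math

G = 6.674e-11            # m^3 kg^-1 s^-2
C = 2.998e8              # m/s
M_SUN = 1.989e30         # kg
AU = 1.496e11            # m
LIGHT_YEAR = 9.461e15    # m
MICROARCSEC_PER_RAD = math.degrees(1.0) * 3600 * 1e6


def gravitational_radius(mass_msun: float) -> float:
    """GM/c^2 in meters."""
    return G * mass_msun * M_SUN / C ** 2


def horizon_circumference(mass_msun: float) -> float:
    """Circumference of the Schwarzschild horizon, 2*pi*(2GM/c^2), in m."""
    return 2 * math.pi * 2 * gravitational_radius(mass_msun)


def shadow_diameter(mass_msun: float) -> float:
    """Shadow diameter 2*sqrt(27)*GM/c^2 for a non-spinning hole, in m."""
    return 2 * math.sqrt(27) * gravitational_radius(mass_msun)


def shadow_microarcsec(mass_msun: float, distance_ly: float) -> float:
    """Angular shadow diameter (small-angle approximation) in microarcsec."""
    distance_m = distance_ly * LIGHT_YEAR
    return shadow_diameter(mass_msun) / distance_m * MICROARCSEC_PER_RAD


m87 = 6.5e9
neptune_orbit = 2 * math.pi * 30 * AU
print(f"M87* shadow diameter: {shadow_diameter(m87) / 1e12:.0f} billion km")
print(f"horizon / Neptune orbit: {horizon_circumference(m87) / neptune_orbit:.1f}")
print(f"M87* at 55 Mly:     {shadow_microarcsec(m87, 55e6):.0f} microarcsec")
print(f"Sgr A* at 27.5 kly: {shadow_microarcsec(4.3e6, 27.5e3):.0f} microarcsec")
```

Both shadows come out at a few tens of microarcseconds. In this picture, that is why the two targets appear comparably sized on the sky despite a roughly 1,500-fold difference in mass.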

The fact that we can make such measurements and produce such images doing astronomy at planet-wide scale is a testament to the power of scientific collaboration and raw human persistence. The EHT is indeed cause for celebration, as the new paper on Centaurus A affirms.

The paper on Centaurus A is Janssen et al., “Event Horizon Telescope observations of the jet launching and collimation zone in Centaurus A,” Nature Astronomy 19 July 2021 (abstract / full text).

‘Echoes’ from the Far Side of a Black Hole

The first direct observation of light from behind a black hole has just been described in a paper in Nature. What is striking in this work is not so much the confirmation, yet again, of Einstein’s General Relativity, but the fact that we can observe the effect in action in this environment. Having just read Heino Falcke’s Light in the Darkness: Black Holes, the Universe, and Us (HarperOne 2021), I have been thinking a lot about observing what was once thought unobservable, as Falcke and the worldwide interferometric effort called the Event Horizon Telescope managed to do when they produced the first image of a black hole.

The famous image from that work, which went worldwide in the media, showed the supermassive black hole at the center of the galaxy M87. The new paper, which offers no image but rather data on telltale X-ray emissions, covers a galaxy called I Zwicky 1 (I Zw 1), a Seyfert galaxy 800 million light years from the Sun. Seyfert galaxies are active galaxies with supermassive black holes at their centers and quasar-like nuclei. The data on this one were drawn from observations of I Zw 1 in early 2020 by the X-ray telescopes NuSTAR and XMM-Newton.

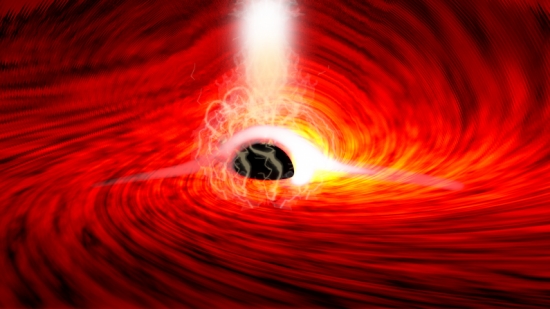

Image: The image of the black hole at the center of M87 shows the effect of the accretion disc as well as the black hole’s shadow. Credit: Akiyama et al. and ApJL.

Dan Wilkins, lead author of the paper on this work in Nature, is a research scientist at the Kavli Institute for Particle Astrophysics and Cosmology at Stanford and SLAC National Accelerator Laboratory. He explains how the malleability of spacetime makes the observations of I Zw 1’s black hole possible:

“Any light that goes into that black hole doesn’t come out, so we shouldn’t be able to see anything that’s behind the black hole. The reason we can see that is because that black hole is warping space, bending light and twisting magnetic fields around itself.”

Image: Researchers observed bright flares of X-ray emissions, produced as gas falls into a supermassive black hole. The flares echoed off of the gas falling into the black hole, and as the flares were subsiding, short flashes of X-rays were seen – corresponding to the reflection of the flares from the far side of the disk, bent around the black hole by its strong gravitational field. Credit: Dan Wilkins.

Material moving in the innermost regions of the accretion disk around a supermassive black hole forms a compact and variable corona of X-ray light near the object that allows scientists to map and characterize the accretion disk. Here superheated gas creates a magnetized plasma caught in the black hole’s spin. The magnetic field twists around itself, eventually breaking. The X-rays are reflected from the accretion disk, which gives us a look at events just outside the black hole’s event horizon.

All of this fits current thinking about how the black hole corona forms, but the researchers then detected a series of smaller X-ray flashes, reflections from the inner part of the accretion disk with calculable reverberation time delays. Wilkins calls these ‘echoes’ because they are the same X-ray flares but reflected from the back of the disk. In essence, this gives us the first information we have to characterize the far side of a black hole, and follows from Wilkins’ earlier research. The scientist adds:

“This magnetic field getting tied up and then snapping close to the black hole heats everything around it and produces these high energy electrons that then go on to produce the X-rays. I’ve been building theoretical predictions of how these echoes appear to us for a few years. I’d already seen them in the theory I’ve been developing, so once I saw them in the telescope observations, I could figure out the connection.”

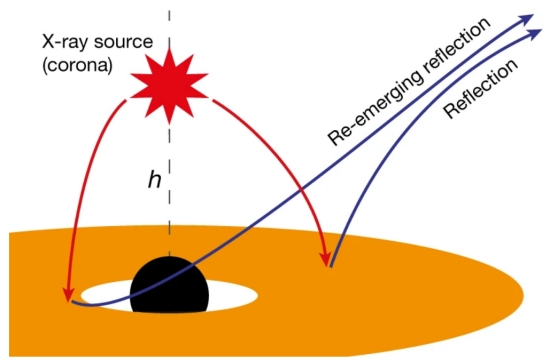

Image: This is Figure 2 from the paper, labelled “Schematic of the X-ray reverberation model.” Caption: X-rays are emitted from a corona of energetic particles close to the black hole. Some of these rays reach the observer directly, but some illuminate the inner regions of the accretion disk and are observed reflected from the disk. Strong light bending in the gravitational field around the black hole focuses the rays towards the black hole and onto the inner regions of the disk. Rays reflected from the back of the disk can be bent around the (spinning) black hole, allowing the re-emergence of X-rays from parts of the disk that would classically be hidden behind the black hole. Credit: Wilkins et al.

What I find extraordinary is that we are getting a glimpse of the accretion disk at work to a fine level of detail. The flares in this black hole’s corona “reveal the temporal response of the illuminated accretion disk,” as the paper puts it, going on to explain the strong Doppler effects at play here:

Emission line photons from different parts of the disk experience different Doppler shifts, due to the variation in the line-of-sight velocity across the disk, and also experience gravitational redshifts, which increase closer to the black hole. The energy shifts of the line photons therefore contain information about the positions on the accretion disk from which they were emitted. The light travel time varies according to the distance of each part of the disk from the corona, and the line emission at different energy shifts is expected to respond to the flare at different times.

Data from the coronal flares and their reverberations allow the team to measure the height of the X-ray source above the disk and also to measure the mass of the black hole itself, which turns out to be on the order of 30 million solar masses. The authors go into detail about the factors at play in the mass estimate, and as the paper is available online, I’ll leave that analysis for those interested in following it up themselves.
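Some scale helps here. For a black hole of about 30 million solar masses, light crosses one gravitational radius (GM/c³) in about two and a half minutes. The sketch below combines that figure with a deliberately simplified flat-space ‘lamp-post’ geometry: a point corona at height h on the spin axis above a flat disk viewed at inclination i. It illustrates why reflections from the far side of the disk arrive last. It ignores the light bending that is central to Wilkins’ result. The height, radius and inclination are hypothetical values, not the paper’s fit.

```python
"""Flat-space lamp-post reverberation delays (no light bending)."""

import math

G = 6.674e-11       # m^3 kg^-1 s^-2
C = 2.998e8         # m/s
M_SUN = 1.989e30    # kg


def gravitational_time_s(mass_msun: float) -> float:
    """Light-crossing time of one gravitational radius, GM/c^3, in seconds."""
    return G * mass_msun * M_SUN / C ** 3


def reflection_delay(h: float, r: float, phi: float, incl_deg: float) -> float:
    """Extra path (in gravitational radii) of corona -> disk -> observer
    relative to corona -> observer, in Euclidean geometry.

    Corona at height h on the axis; disk point at radius r and azimuth phi,
    with phi = 0 on the near side (toward the observer).
    """
    i = math.radians(incl_deg)
    return math.hypot(h, r) + h * math.cos(i) - r * math.sin(i) * math.cos(phi)


t_g = gravitational_time_s(3e7)       # mass on the order of the paper's estimate
h, r, incl = 4.0, 6.0, 30.0           # hypothetical geometry
for label, phi in [("near side", 0.0), ("far side", math.pi)]:
    lag = reflection_delay(h, r, phi, incl) * t_g
    print(f"{label}: ~{lag:.0f} s after the direct flare")
```

In this crude picture the far-side reflection always trails the near-side one. The paper’s point is that, once light bending is included, X-rays from that hidden far side can reach us at all.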

Once again black holes provide a way of seeing what had been thought unobservable. Co-author Roger Blandford, also at Stanford, takes note of how far we’ve come:

“Fifty years ago, when astrophysicists started speculating about how the magnetic field might behave close to a black hole, they had no idea that one day we might have the techniques to observe this directly and see Einstein’s general theory of relativity in action.”

Pushing yet deeper will be the European Space Agency’s X-ray observatory Athena (Advanced Telescope for High-ENergy Astrophysics). Wilkins is part of the team developing the Wide Field Imager detector for the telescope, scheduled for launch in 2031.

The paper is Wilkins et al., “Light bending and X-ray echoes from behind a supermassive black hole,” Nature 595 (2021), 657-660 (abstract).

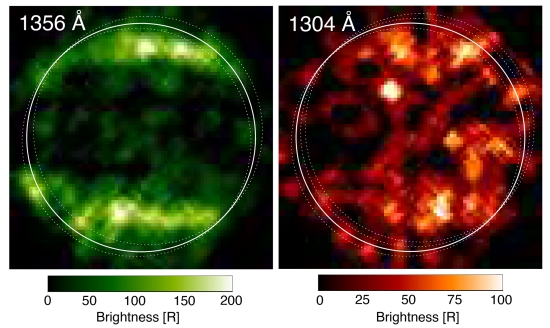

Sublimation Producing Water Vapor on Ganymede

Hubble observations from the past two decades have recently been re-examined to investigate what is happening in the tenuous atmosphere of Ganymede, the largest moon in the Solar System. In 1998, the telescope’s Space Telescope Imaging Spectrograph took the first images of Ganymede at ultraviolet wavelengths. They showed auroral bands (ribbons of electrified gas) that reinforced earlier evidence that the moon has a weak magnetic field. Now we have news of sublimated water vapor in the atmosphere, confirming an earlier prediction.

Ganymede’s atmosphere, such as it is, is the result of charged particles and solar radiation eroding its icy surface. The process produces molecular oxygen (O2) and atomic oxygen (O) as well as H2O, with molecular oxygen long thought to be the most abundant constituent overall. Surface temperatures are as extreme as you would expect, roughly between 80 K and 150 K (-193 °C to -123 °C).

In 2018, a team led by Lorenz Roth (KTH Royal Institute of Technology in Stockholm) again turned to Hubble, this time in support of the ongoing Juno mission. The goal was to measure Ganymede’s atomic oxygen (O) to resolve the differences seen in the 1998 ultraviolet observations, which were thought to reflect higher concentrations of atomic oxygen in some parts of the atmosphere. The result was surprising.

Roth’s team used data from Hubble’s Cosmic Origins Spectrograph along with archival data from the Space Telescope Imaging Spectrograph taken in 1998 and 2010. They found little trace of atomic oxygen in Ganymede’s atmosphere, so the differences in the auroral images must have some other explanation. The relative distribution of the aurorae allowed the scientists to map them against the surface regions expected to release water vapor, where ice sublimates as the equator warms. The phenomenon has no connection with the moon’s subsurface ocean, thought to be buried 150 kilometers beneath the surface.

The fit is strong: The area where water vapor would be expected in Ganymede’s atmosphere, around noon at the equator, correlates with the differences in the ultraviolet images. Roth thinks water vapor produced by sublimation (ice turning directly to vapor with no intervening liquid state) is the explanation:

“So far only the molecular oxygen had been observed. This is produced when charged particles erode the ice surface. The water vapor that we measured now originates from ice sublimation caused by the thermal escape of water vapor from warm icy regions.”
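Why should sublimation concentrate near the subsolar point? The vapor pressure of water ice rises extremely steeply with temperature. The sketch below compares the two ends of the 80 to 150 K range using the Clausius-Clapeyron relation. It assumes a constant latent heat of sublimation of about 51 kJ/mol, anchored at water’s triple point (611.657 Pa at 273.16 K). This is an order-of-magnitude approximation, not the atmosphere models the paper relies on.

```python
"""Clausius-Clapeyron estimate of water-ice vapor pressure at Ganymede-like
temperatures (constant latent heat assumed; order-of-magnitude only)."""

import math

R = 8.314                # J mol^-1 K^-1
L_SUB = 51.0e3           # J/mol, approximate latent heat of sublimation
T_TRIPLE = 273.16        # K
P_TRIPLE = 611.657       # Pa


def ice_vapor_pressure_pa(temp_k: float) -> float:
    """Saturation vapor pressure over ice, integrated from the triple point."""
    return P_TRIPLE * math.exp(-L_SUB / R * (1.0 / temp_k - 1.0 / T_TRIPLE))


cold, warm = 80.0, 150.0
p_cold, p_warm = ice_vapor_pressure_pa(cold), ice_vapor_pressure_pa(warm)
print(f"{cold:.0f} K: {p_cold:.1e} Pa")
print(f"{warm:.0f} K: {p_warm:.1e} Pa")
print(f"ratio: ~10^{math.log10(p_warm / p_cold):.0f}")
```

In this approximation the warmest ice has a vapor pressure some fifteen orders of magnitude higher than the coldest. That fits the picture of a water atmosphere tied to the warm, sunlit, low-latitude regions.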

Image: In 1998, Hubble’s Space Telescope Imaging Spectrograph took these first ultraviolet images of Ganymede, which revealed a particular pattern in the observed emissions from the moon’s atmosphere. The moon displays auroral bands that are somewhat similar to aurora ovals observed on Earth and other planets with magnetic fields. This was illustrative evidence for the fact that Ganymede has a permanent magnetic field. The similarities in the ultraviolet observations were explained by the presence of molecular oxygen. The differences were explained at the time by the presence of atomic oxygen, which produces a signal that affects one UV color more than the other. Credit: NASA, ESA, Lorenz Roth (KTH).

The paper goes on to point out the significance of the observation (italics mine):

The low oxygen emission ratios in the center of Ganymede’s observed hemispheres are consistent with a locally H2O-dominated atmosphere. With phase angles around 10° …the disk centers are close to the sub-solar points…. A viable source for H2O in Ganymede’s atmosphere can be sublimation in the low-latitude sub-solar regions, where an H2O-dominated atmosphere was indeed predicted by atmosphere models. Our derived H2O mixing ratios are in agreement with these predictions. While previously detected tenuous atmospheres around icy moons in the outer solar system were consistent with surface sputtering (or active outgassing) as source for the neutrals our analysis provides the first evidence for a sublimated atmosphere on an icy moon in the outer solar system.

Image: This image of the Jovian moon Ganymede was obtained by the JunoCam imager aboard NASA’s Juno spacecraft during its June 7, 2021, flyby of the icy moon. At the time of closest approach, Juno was within 1,038 kilometers of its surface – closer to Jupiter’s largest moon than any other spacecraft has come in more than two decades. Credit: NASA/JPL-Caltech/SwRI/MSSS.

So we have an atmosphere with what the paper describes as “a pronounced day/night asymmetry.” It’s an important finding for future missions, for atmospheric asymmetries will turn up in data on the magnetosphere and space plasma, meaning numerical simulations and data analysis for future missions have to incorporate them. This points to JUICE, the Jupiter Icy Moons Explorer mission, which will put eleven science instruments past Ganymede in a series of flybys and later orbital operations.

That itself is a stunning thought to someone who grew up thinking about Ganymede as a Poul Anderson novel setting. We’ll have a spacecraft orbiting the moon for a minimum of 280 days, if all goes according to plan, giving scientists abundant data about both the surface and atmosphere. The Roth paper provides significant information about the role of sublimation that will refine the JUICE observing plan.

The paper is Roth et al., “A sublimated water atmosphere on Ganymede detected from Hubble Space Telescope observations,” Nature Astronomy 26 July 2021 (abstract / preprint).

A Path to Planet Formation in Binary Systems

How planets grow in double-star systems has always held a particular fascination for me. The reason is probably obvious: In my younger days, when no exoplanets had been discovered, the question of what kind of planetary systems were possible around multiple stars was wide open. And there was Alpha Centauri in our southern skies, taunting us by its very presence. Could a life-laden planet be right next door?

What Kedron Silsbee and Roman Rafikov have been working on extends well beyond Alpha Centauri, usefully enough, and helps us look into how binaries like Centauri A and B form planets. Says Rafikov (University of Cambridge), “A system like this would be the equivalent of a second Sun where Uranus is, which would have made our own solar system look very different.” How true. In fact, imagining how differently our system would work with a star among the outer planets raises wonderful questions.

Could we have a habitable world around each star in such a binary? And if so, wouldn’t the incentive to develop spaceflight take hold early among the denizens of such a world? We used to imagine a habitable Mars by stretching Percival Lowell’s observations of what Giovanni Schiaparelli described as ‘canali’ (‘channels’) to their limit. How much more would a green and blue world with clouds and oceans beckon?

Image: Artist’s impression of a hypothetical planet around Alpha Centauri B. Credit: ESO/L. Calçada/N. Risinger.

But back to Rafikov, whose paper with Silsbee (Max Planck Institute for Extraterrestrial Physics) has been accepted at Astronomy & Astrophysics. The two researchers have refined our picture of planet formation in binary systems through a series of simulations, with Alpha Centauri in mind as well as the tight binary Gamma Cephei, a K-class star with a red dwarf companion and a planet orbiting the primary. Silsbee explains the problem they were trying to solve: How does the companion star affect the protoplanetary disk of the other? He adds:

“In a system with a single star the particles in the disc are moving at low velocities, so they easily stick together when they collide, allowing them to grow. But because of the gravitational ‘eggbeater’ effect of the companion star in a binary system, the solid particles there collide with each other at much higher velocity. So, when they collide, they destroy each other.”

Gamma Cephei is a case in point: The system yields planetesimal collision velocities of several kilometers per second at the 2 AU distance of the system’s known planet, which should be enough, the authors note, to destroy even planetesimals hundreds of kilometers across. This problem appears in the literature as the fragmentation barrier, and it looms large even when taking into account the aerodynamic drag induced by the gases of the protoplanetary disk. Collision velocities here remain high.

And there go the planetesimals, which should, according to core accretion theory, grow out of dust particles as they gradually begin to bulk up into larger solid bodies. Given that we now know about numerous exoplanets in binary systems, how did they emerge? Were they all ‘rogue’ planets that ambled into the gravitational influence of the binary pair? And if that idea seems unlikely, how then do we explain their growth?

Rafikov and Silsbee show through their simulations that, given realistic processes and the mathematics to describe them, such worlds can emerge. Incorporated in the resulting model is a new look at the question of gas drag and its effects. They find that drag in the disk (Silsbee likens it to a kind of wind) can indeed alter planetesimal dynamics and can offset the gravitational influence of the nearby stellar companion.

Although a number of earlier studies included gas drag in their models, their calculations ignored the effect of disk gravity, which according to the authors changes the dynamics of the planetesimal population. Including it, Silsbee and Rafikov are able to identify quiet zones in the disk in which planetesimals can grow into planets. And they believe their model fully accounts for planetesimal dynamics throughout the young system. Among their conclusions:

The gravitational effect of the protoplanetary disk plays the key role in lowering the minimum initial planetesimal size necessary for sustained growth by a factor of four. This reduction can be achieved in protoplanetary disks apsidally aligned with the binary, in which a dynamically quiet zone appears within the disk provided that the mass-weighted mean disk eccentricity ≲ 0.05…

And this:

For most disk parameters considered in this paper, planet formation in binaries such as γ Cephei can successfully occur provided that the initial planetesimal size is ≲ 10 km; however, for favorable disk parameters, this minimum initial size can go down to ≲ 1 km.

We should expect, then, that planets could form in systems like Alpha Centauri, where the hunt for worlds around the Centauri A and B pair continues. This can occur if the planetesimals can reach this minimum size, and it assumes a protoplanetary disk that is close to circular. Given those parameters, planetesimal relative velocities are slow enough in certain parts of the disk to allow planet formation to take place.

How to get planetesimals to the minimum size needed? The streaming instability model of planetesimal formation, in which planetesimals form rapidly, may come into play here. In this model, drag in the disk slows solid particles and leads to their swift agglomeration into clumps that can gravitationally collapse. Streaming instability is thus a rapid alternative to the theory of planetesimals growing steadily through coagulation alone. In fact, the paper cites a timescale of tens of local orbital periods, rapidly producing a population of ‘seed’ planetesimals.
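The paper states its timescale in orbital periods rather than years. As a rough illustration only, Kepler’s third law in solar units (P in years, a in AU, M in solar masses) converts ‘tens of local orbital periods’ into years at the 2 AU distance of Gamma Cephei’s known planet. The primary’s mass of about 1.2 solar masses is an assumption for this sketch (published estimates vary), and the companion’s perturbations and the planet’s own mass are ignored.

```python
import math


def orbital_period_years(a_au: float, m_star_msun: float) -> float:
    """Kepler's third law in solar units: P[yr] = sqrt(a[AU]^3 / M[Msun]).

    Ignores the orbiting body's mass and any perturbation from a stellar companion.
    """
    return math.sqrt(a_au ** 3 / m_star_msun)


# a = 2 AU is the known planet's distance quoted in the text.
A_AU = 2.0
# Assumption: an approximate mass for Gamma Cephei A, not a value taken from the paper.
M_PRIMARY_MSUN = 1.2

period = orbital_period_years(A_AU, M_PRIMARY_MSUN)

# "Tens of local orbital periods" expressed in years for a few sample orbit counts.
for n_orbits in (10, 30, 100):
    print(f"{n_orbits:>3} orbits ~ {n_orbits * period:6.1f} years")
```

With these inputs one orbit takes roughly 2.6 years, so tens of orbits amount to a few decades to a couple of centuries, an eyeblink compared with protoplanetary disk lifetimes of a few million years.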

Whether or not streaming instability does offer a pathway to planets is a question that is still unresolved, though the theory has implications for planet formation around single stars as well. It certainly eases formation in the binaries considered here.

The paper is Silsbee & Rafikov, “Planet Formation in Stellar Binaries: Global Simulations of Planetesimal Growth,” accepted at Astronomy and Astrophysics (abstract / preprint).

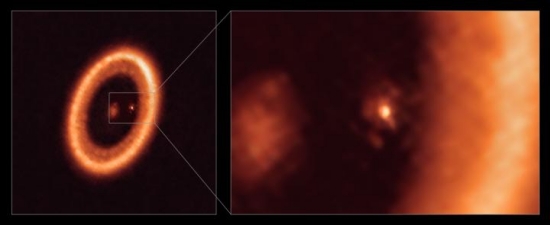

The Case of PDS 70 and a Moon-forming Disk

The things we look for around other stars do not necessarily surprise us. I think most astronomers were thinking we’d find planets around a lot of stars when the Kepler mission began its work. The question was how many. Kepler was to give us a statistical measurement of the planet population within its field of stars, and it succeeded brilliantly. These days it seems clear that we can find planets around most stars, in all kinds of sizes and orbits, as we continue to seek an Earth 2.0.

The continuing news about the star PDS 70, a young T Tauri star about 400 light years away in Centaurus, fits the same mold. Here we’re talking not just about planets but their moons. No exomoons have been confirmed, but there seems no reason to assume we won’t begin to find them — surely the process of forming moons is as universal as that of planet formation. The interest is in the observation, how it is made, and what it implies about our ability to move forward in characterizing planetary systems.

The process takes time, and results can be ambiguous. Back in 2019, PDS 70 was the subject of work performed at Monash University (Australia), led by Valentin Christiaens. The story received an exomoon splash in the press: The researchers believed they were looking at a circumplanetary disk around one of two gas giants forming in this system (see Exoplanet Moons in Formation?).

Everything pointed to a moon-forming disk around one of two young gas giants in the system, though the conclusion could only be considered tentative. I want to mention this because work from the European Southern Observatory that we’ll discuss today also finds a circumplanetary disk at PDS 70, though not around the same still-forming planet examined in the Christiaens et al. study. What an intriguing system this is!

While Christiaens and team looked at PDS 70b, the ESO work examines new high-resolution images of the second gas giant, PDS 70c, using data obtained through the Atacama Large Millimetre/submillimetre Array (ALMA). Led by Myriam Benisty (University of Grenoble and University of Chile), the international team now declares the detection of a circumplanetary disk — though not yet a moon — unambiguous. Says Benisty:

“Our work presents a clear detection of a disk in which satellites could be forming. Our ALMA observations were obtained at such exquisite resolution that we could clearly identify that the disk is associated with the planet and we are able to constrain its size for the first time.”

Image: This image shows wide (left) and close-up (right) views of the moon-forming disk surrounding PDS 70c, a young Jupiter-like planet nearly 400 light-years away. The close-up view shows PDS 70c and its circumplanetary disk center-front, with the larger circumstellar ring-like disk taking up most of the right-hand side of the image. The star PDS 70 is at the center of the wide-view image on the left. Two planets have been found in the system, PDS 70c and PDS 70b, the latter not being visible in this image. They have carved a cavity in the circumstellar disk as they gobbled up material from the disk itself, growing in size. In this process, PDS 70c acquired its own circumplanetary disk, which contributes to the growth of the planet and where moons can form. This disk is as large as the Sun-Earth distance and has enough mass to form up to three satellites the size of the Moon. Credit: ALMA (ESO/NAOJ/NRAO)/Benisty et al.

The high-resolution data allow Benisty and team to state that the circumplanetary disk has a diameter of about 1 AU, with enough mass to form up to three moons the size of our own Moon. The planetary system forming around this star is reminiscent of the Jupiter and Saturn configuration in our own Solar System, though notice the size differential. The disk around PDS 70c is 500 times larger than Saturn’s rings. The two planets are also at much larger distances from the host star, and appear to be migrating inward. We are seeing the system in the process of formation, which should offer insights into how not just moons but planets themselves form around infant stars.
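As a back-of-the-envelope check on these scale figures, the short Python sketch below compares a 1 AU disk diameter with the diameter of Saturn’s main ring system and expresses three lunar masses in Earth masses. The outer radius used for the main rings (about 136,775 km, the outer edge of the A ring) is an assumption; including Saturn’s faint outer rings would change the comparison considerably.

```python
# Rough scale checks for the PDS 70c circumplanetary disk figures.
AU_KM = 149_597_870.7             # IAU definition of the astronomical unit, in km
SATURN_A_RING_OUTER_KM = 136_775  # assumption: approximate outer edge of Saturn's A ring

disk_diameter_km = 1.0 * AU_KM                # "a diameter of about 1 AU"
rings_diameter_km = 2 * SATURN_A_RING_OUTER_KM  # main ring system, edge to edge
size_ratio = disk_diameter_km / rings_diameter_km

MOON_MASS_KG = 7.342e22
EARTH_MASS_KG = 5.972e24
# Mass of three Moon-sized satellites, expressed in Earth masses.
three_moons_earth_masses = 3 * MOON_MASS_KG / EARTH_MASS_KG

print(f"disk / main-ring diameter ratio ~ {size_ratio:.0f}")
print(f"three lunar masses ~ {three_moons_earth_masses:.3f} Earth masses")
```

The ratio comes out near 550, consistent with the rounded ‘500 times’ figure, and three Moon-sized satellites amount to less than 4 percent of an Earth mass.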

Interestingly, the second world here, PDS 70b, does not show evidence of a circumplanetary disk in the ALMA data. One supposition is that it is being starved of dusty material by PDS 70c, although other mechanisms are possible. Here’s a bit more on this from the paper, noting an apparent transport mechanism between disk and forming planet:

These ALMA observations shed new light on the origin of the mm emission close to planet b. The emission is diffuse with a low surface brightness and is suggestive of a streamer of material connecting the planets to the inner disk, providing insights into the transport of material through a cavity generated by two massive planets.

And as to PDS 70b:

The non-detection of a point source around PDS 70 b indicates a smaller and/or less massive CPD [circumplanetary disk] around planet b as compared to planet c, due to the filtering of dust grains by planet c preventing large amount of dust to leak through the cavity, or that the nature of the two CPDs differ. We also detect a faint inner disk emission that could be reproduced with small 1 µm dust grains, and resolve the outer disk into two substructures (a bright ring and an inner shoulder).

The Monash University team in Australia imaged PDS 70b in the infrared and, like the ESO astronomers, found a spiral arm that seems to feed a circumplanetary disk, while making the case for PDS 70b as the world with the disk. Remember that the two teams were working with different instrumentation and at different wavelengths: the Monash researchers worked in the infrared, analyzing the planet’s spectrum as recorded by SINFONI (Spectrograph for INtegral Field Observations in the Near Infrared) at the Very Large Telescope in Chile, while the ESO team used data from ALMA.

So do we have one or two circumplanetary disks in this system? We’ll see how this is resolved as the investigation of the planets around PDS 70 continues through a variety of instruments. For the importance of the system is clear, as the Benisty paper argues:

Detailed studies of the circumplanetary disks, and of the leakage of material through the cavity, will provide strong constraints on the formation of satellites around gas giants, and on the ability to provide the mass reservoir needed to form terrestrial planets in the inner regions of the disk. Upcoming studies of the gas kinematics and chemistry of PDS 70 will complement the view provided by this work, serving as a benchmark for models of satellite formation, planet-disk interactions and delivery of chemically enriched material to planetary atmospheres.

The paper is Benisty et al., “A Circumplanetary Disk around PDS70c,” Astrophysical Journal Letters Vol. 916, No. 1 (22 July 2021). Abstract.