Reminiscing about some of Robert Forward’s mind-boggling concepts, as I did in my last post, reminds me that it was both Forward and the Daedalus project that convinced many people to look deeper into the prospect of interstellar flight. Not that there weren’t predecessors – Les Shepherd comes immediately to mind (see The Worldship of 1953) – but Forward was able to advance a key point: Interstellar flight is possible within known physics. He argued that the problem was one of engineering.

Daedalus made the same point. When the British Interplanetary Society came up with a starship design that grew out of the efforts of freelance scientists and engineers working on their own dime in a friendly pub, the notion was not to actually build a starship that would bankrupt an entire planet for a simple flyby mission. Rather, it was to demonstrate that even with technologies that could be extrapolated in the 1970s, there were ways to reach the stars within the realm of known physics. Starflight would be incredibly hard and expensive, but if it was possible, we could try to figure out how to make it feasible.

And if figuring it out takes centuries rather than decades, what of it? The stars are a goal for humanity, not for individuals. Reaching them is a multi-generational effort that builds one mission at a time. At any point in the process, we do what we can.

What steps can we take along the way to start moving up the kind of technological ladder that Phil Lubin and Alexander Cohen examine in their recent paper? Because you can’t just jump to Forward’s 1000-kilometer sails pushed by a beam from a power station in solar orbit that feeds a gigantic Fresnel lens constructed in the outer Solar System between the orbits of Saturn and Uranus. The laser power demand for some of Forward’s missions is roughly 1000 times our current power consumption. That is to say, 1000 times the power consumption of our entire civilization.

Clearly, we have to find a way to start at the other end, looking at just how beamed energy technologies can produce early benefits through far smaller-scale missions right here in the Solar System. Lubin and Cohen hope to build on those by leveraging the exponential growth we see in some sectors of the electronics and photonics industries, which gives us that tricky moving target we looked at last time. How accurately can you estimate where we’ll be in ten years? How stable is the term ‘exponential’?

These are difficult questions, but we do see trends here that are sharply different from what we’ve observed in chemical rocketry, where we’re still using launch vehicles that anyone watching a Mercury astronaut blast off in 1961 would understand. Consumer demand doesn’t drive chemical propulsion, but in terms of power beaming, we obviously do have electronics and photonics industries in which the consumer plays a key role. We also see exponential growth in capability paralleled by exponential decreases in cost in areas that can benefit beamed technologies.

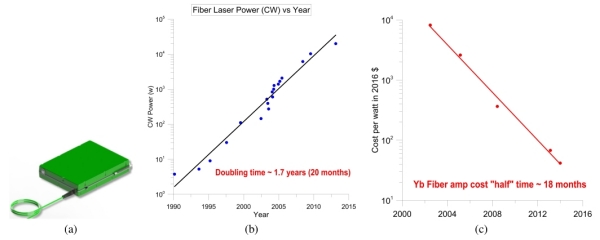

Lubin and Cohen see such growth as the key to a sustainable program that builds capability in a series of steps, moving ever outward in terms of mission complexity and speed. Have a look at trends in photonics, as shown in Figure 5 of their paper.

Image: This is Figure 5 from the paper. Caption: (a) Picture of current 1-3 kW class Yb laser amplifier which forms the baseline approach for our design. Fiber output is shown at lower left. Mass is approx 5 kg and size is approximately that of this page. This will evolve rapidly, but is already sufficient to begin. Courtesy Nufern. (b) CW fiber laser power vs year over 25 years showing a “Moore’s Law” like progression with a doubling time of about 20 months. (c) CW fiber lasers and Yb fiber laser amplifiers (baselined in this paper) cost/watt with an inflation index correction to bring it to 2016 dollars. Note the excellent fit to an exponential with a cost “halving” time of 18 months.
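To get a feel for what the doubling and halving times in panels (b) and (c) imply, here is a back-of-envelope sketch. It assumes the fitted trends simply continue unchanged, which is precisely the assumption that gets questioned later; the function name is illustrative only.

```python
def growth_factor(years: float, doubling_months: float) -> float:
    """Multiplicative change after `years` for a quantity that doubles
    (or, read inversely, halves) every `doubling_months` months."""
    return 2.0 ** (years * 12.0 / doubling_months)


# Panel (b): CW fiber laser power, doubling roughly every 20 months over 25 years.
power_gain = growth_factor(25, 20)

# Panel (c): cost per watt halving roughly every 18 months, projected 20 years ahead.
cost_fraction = 1.0 / growth_factor(20, 18)

print(f"power gain over 25 years: {power_gain:,.0f}x")
print(f"cost per watt after 20 years: {cost_fraction:.2e} of today's")
```

That works out to a factor of 2^15 = 32,768 in power, and a cost per watt of roughly one ten-thousandth of today's, if and only if the trends hold.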

Such growth makes developing a cost-optimized model for beamed propulsion a tricky proposition. We’ve talked in these pages before about the need for such a model, particularly in Jim Benford’s Beamer Technology for Reaching the Solar Gravity Focus Line, where he presented his analysis of cost optimized systems operating at different wavelengths. That article grew out of his paper “Intermediate Beamers for Starshot: Probes to the Sun’s Inner Gravity Focus” (JBIS 72, pg. 51), written with Greg Matloff in 2019. I should also mention Benford’s “Starship Sails Propelled by Cost-Optimized Directed Energy” (JBIS 66, pg. 85), and note that Kevin Parkin authored “The Breakthrough Starshot System Model” (Acta Astronautica 152, 370-384) in 2018. So resources are there for comparative analysis on the matter.

But let’s talk some more about the laser driver that can produce the beam needed to power space missions like those in the Lubin and Cohen paper, remembering that while interstellar flight is a long-term goal, much smaller systems can grow through such research as we test and refine missions of scientific value to nearby targets. The authors see the photon driver as a phased laser array, the idea being to replace a single huge laser with numerous laser amplifiers in what is called a “MOPA (Master Oscillator Power Amplifier) configuration with a baseline of Yb [ytterbium] amplifiers operating at 1064 nm.”

Lubin has been working on this concept through his Starlight program at UC-Santa Barbara, which has received Phase I and II funding through NASA’s Innovative Advanced Concepts program under the headings DEEP-IN (Directed Energy Propulsion for Interstellar Exploration) and DEIS (Directed Energy Interstellar Studies). You’ll also recognize the laser-driven sail concept as a key part of the Breakthrough Starshot effort, for which Lubin continues to serve as a consultant.
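Why phase locking matters for a phased laser array can be seen with a toy model: treat each amplifier as a unit-amplitude emitter and add their fields at a point on the beam axis. In-phase emitters give an intensity of N², random phases give only about N on average, and small residual Gaussian phase errors of width σ cost a factor of roughly exp(-σ²). This is a generic coherent-combining sketch under idealized assumptions (equal amplitudes, far field, on axis), not the authors' array model; the emitter count and jitter value are illustrative.

```python
import cmath
import math
import random


def on_axis_intensity(phases):
    """Intensity on the beam axis from unit-amplitude emitters with the given phases (radians)."""
    field = sum(cmath.exp(1j * p) for p in phases)
    return abs(field) ** 2


rng = random.Random(42)  # fixed seed so the run is reproducible
n = 1000                 # number of amplifiers (illustrative)

# Perfect phase lock: all fields add in step, giving n**2.
phased = on_axis_intensity([0.0] * n)

# Unlocked array: average over many random-phase trials, which comes out near n.
trials = 200
unlocked = sum(
    on_axis_intensity([rng.uniform(0.0, 2.0 * math.pi) for _ in range(n)])
    for _ in range(trials)
) / trials

# Residual Gaussian phase jitter of 0.3 rad: close to exp(-sigma**2) of perfect phasing.
sigma = 0.3
jittered = on_axis_intensity([rng.gauss(0.0, sigma) for _ in range(n)])

print(f"phased / n^2   = {phased / n**2:.3f}")
print(f"unlocked / n   = {unlocked / n:.2f}")
print(f"jittered / n^2 = {jittered / n**2:.3f} (exp(-sigma^2) = {math.exp(-sigma**2):.3f})")
```

The gap between N² and N is the whole payoff of the phased approach, which is why keeping many amplifiers locked is a central engineering issue.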

Crucial to the laser array concept in economic terms is that the array replaces conventional optics with numerous low-cost optical elements. The idea scales in interesting ways, as the paper notes:

The basic system topology is scalable to any level of power and array size where the tradeoff is between the spacecraft mass and speed and hence the “steps on the ladder.” One of the advantages of this approach is that once a laser driver is constructed it can be used on a wide variety of missions, from large mass interplanetary to low mass interstellar probes, and can be amortized over a very large range of missions.

So immediately we’re talking about building not a one-off interstellar mission (another Daedalus, though using beamed energy rather than fusion and at a much different scale), but rather a system that can begin producing scientific returns early in the process as we resolve such issues as phase locking to maintain the integrity of the beam. The authors liken this approach to building a supercomputer from a large number of modest processors. As it scales up, such a system could produce:

- Beamed power for ion engine systems (as discussed in the previous post);

- Power to distant spacecraft, possibly eliminating onboard radioisotope thermoelectric generators (RTGs);

- Planetary defense systems against asteroids and comets;

- Laser scanning (LIDAR) to identify nearby objects and analyze them.

Take this to a full-scale 50 to 100 GW system and you can push a tiny payload (like Starshot’s ‘spacecraft on a chip’) to perhaps 25 percent of lightspeed using a meter-class reflective sail illuminated for a matter of no more than minutes. Whether you could get data back from it is another matter, and a severe constraint upon the Starshot program, though one that continues to be analyzed by its scientists.
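For a sense of the "no more than minutes" figure, here is an idealized photon-pressure estimate. It assumes a perfectly reflecting sail at normal incidence that catches the whole beam, and a total sail-plus-chip mass of one gram (a hypothetical round number, not taken from the paper). It ignores relativistic Doppler losses and diffraction, so it understates the real illumination time.

```python
C = 299_792_458.0  # speed of light, m/s
G0 = 9.80665       # standard gravity, m/s^2


def reflector_acceleration(power_w: float, mass_kg: float) -> float:
    """Acceleration of a perfect reflector: thrust 2P/c divided by mass."""
    return 2.0 * power_w / (C * mass_kg)


def time_to_speed(power_w: float, mass_kg: float, speed_m_s: float) -> float:
    """Non-relativistic time to reach speed_m_s under constant acceleration."""
    return speed_m_s / reflector_acceleration(power_w, mass_kg)


power = 100e9   # W, upper end of the 50-100 GW range in the text
mass = 1e-3     # kg, hypothetical 1 g sail plus chip
target = 0.25 * C

a = reflector_acceleration(power, mass)
t = time_to_speed(power, mass, target)
print(f"acceleration: {a:,.0f} m/s^2 (about {a / G0:,.0f} g)")
print(f"time to 0.25c: {t:.0f} s (about {t / 60:.1f} minutes)")
```

Under these assumptions the answer is roughly two minutes of illumination at tens of thousands of g. A real system needs longer, because the push weakens as the sail recedes and the reflected light is redshifted.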

But let me dwell on closer possibilities: A system like this could also push a 100 kg payload to 0.01 c and – the one that really catches my eye – a 10,000 kg payload to more than 1,000 kilometers per second. At this scale of mass, the authors think we’d be better off going to IDM methods, with the beam supplying power to onboard propulsion, but the point is we would have startlingly swift options for reaching the outer Solar System and beyond with payloads allowing complex operations there.
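One way to see why heavy payloads favor beaming power to onboard propulsion is to compare the beam energy a pure light sail needs with the kinetic energy it ends up with. A perfect reflector gains at most 2/c of momentum per joule of light, so at low speed the beam must deliver about c/v times the payload's kinetic energy. This is a rough, non-relativistic illustration of my own, not the authors' reasoning, and it generously assumes the sail intercepts the entire beam throughout (diffraction would prevent that over long pushes).

```python
C = 299_792_458.0  # speed of light, m/s


def sail_beam_energy(mass_kg: float, speed_m_s: float) -> float:
    """Minimum beam energy (J) for a perfect reflector: momentum m*v at 2/c per joule."""
    return mass_kg * speed_m_s * C / 2.0


def kinetic_energy(mass_kg: float, speed_m_s: float) -> float:
    """Non-relativistic kinetic energy (J)."""
    return 0.5 * mass_kg * speed_m_s ** 2


beam_power = 100e9  # W, upper end of the 50-100 GW range
cases = {
    "100 kg at 0.01c": (100.0, 0.01 * C),
    "10,000 kg at 1,000 km/s": (1.0e4, 1.0e6),
}

for label, (m, v) in cases.items():
    e_beam = sail_beam_energy(m, v)
    ratio = e_beam / kinetic_energy(m, v)
    days = e_beam / beam_power / 86_400
    print(f"{label}: beam energy {e_beam:.2e} J, {ratio:.0f}x the kinetic energy, "
          f"{days:.1f} days at full power")
```

In this idealized picture the 10-tonne case needs about half a year of continuous full-power illumination and turns only about 1/300 of the beam energy into motion. Feeding the same power to an efficient onboard thruster can, in principle, convert a far larger share.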

If we can build it, a laser array like this can be modular, drawing on mass production for its key elements and thus achieving economies of scale. It is an enabler for interstellar missions but also a tool for building infrastructure in the Solar System:

There are very large economies of scale in such a system in addition to the exponential growth. The system has no expendables, is completely solid state, and can run continuously for years on end. Industrial fiber lasers have MTBF in excess of 50,000 hours. The revolution in solid state lighting including upcoming laser lighting will only further increase the performance and lower costs. The “wall plug” efficiency is excellent at 42% as of this year. The same basic system can also be used as a phased array telescope for the receive side in the laser communications as well as for future kilometer-scale telescopes for specialized applications such as spectroscopy of exoplanet atmospheres and high redshift cosmology studies…
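The quoted efficiency and reliability figures translate into concrete engineering loads. The sketch below assumes a 100 GW optical output, 1 kW per amplifier (the low end of the 1-3 kW class in Figure 5, so the unit count is hypothetical), a constant failure rate equal to 1/MTBF, and prompt replacement of failed units. The function names are illustrative.

```python
HOURS_PER_YEAR = 365.25 * 24  # 8,766 h


def electrical_input(optical_w: float, wall_plug_eff: float) -> float:
    """Grid power needed to deliver optical_w at the given wall-plug efficiency."""
    return optical_w / wall_plug_eff


def failures_per_year(n_units: int, mtbf_hours: float) -> float:
    """Expected failures per year for n_units with a constant failure rate 1/MTBF."""
    return n_units * HOURS_PER_YEAR / mtbf_hours


optical = 100e9      # W, hypothetical full-scale array output
eff = 0.42           # wall-plug efficiency quoted in the text
per_amp = 1e3        # W per amplifier (assumed)
n_amps = int(optical / per_amp)

grid = electrical_input(optical, eff)
heat = grid - optical  # everything not emitted as light ends up as waste heat
# MTBF is "in excess of" 50,000 h, so this failure count is an upper bound.
fails = failures_per_year(n_amps, 50_000)

print(f"amplifiers: {n_amps:,}")
print(f"grid power: {grid / 1e9:.0f} GW, waste heat: {heat / 1e9:.0f} GW")
print(f"failures: {fails:,.0f} per year (about {fails / 365.25:,.0f} per day)")
```

Even as an upper bound, tens of thousands of amplifier swaps per day points to maintenance logistics as a design driver, and the waste heat exceeds the optical output itself.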

Such capabilities have to be matched against the complications inevitable in such a design. These ideas are reliant on the prospect of industrial capacity catching up, a process that can be accelerated by finding technologies driven by other sectors or produced in mass quantities so as to reach the needed price point. A major issue: Can laser amplifiers parallel what is happening in the current LED lighting market, where costs continue to plummet? A parallel movement in laser amplifiers would, over the next 20 years, reduce their cost enough that they would no longer dominate the overall system cost.

This is problematic. Lubin and Cohen point out that LED costs are driven by the large volume needed. There is no such demand in laser amplifiers. Can we expect the exponential growth to continue in this area? I asked Dr. Lubin about this in an email. Given the importance of the issue, I want to quote his response at some length:

There are a number of ways we are looking at the economics of laser amplifiers. Currently we are using fiber based amplifiers pumped by diode lasers. There are other types of amplification that include direct semiconductor amplifiers known as SOA (Semiconductor Optical Amplifier). This is an emerging technology that may be a path forward in the future. This is an example of trying to predict the future based on current technology. Often the future is not just “more of the same” but rather the future often is disrupted by new technologies. This is part of a future we refer to as “integrated photonics” where the phase shifting and amplification are done “on wafer” much like computation is done “on wafer” with the CPU, memory, GPU and auxiliary electronics all integrated in a single element (chip/wafer).

Lubin uses the analogy of a modern personal computer as compared to an ENIAC machine from 1943, as we went from room-sized computers that drew 100 kW to something that, today, we can hold in our hands and carry in our pockets. We enjoy a modern version that is about 1 billion times faster and features a billion times the memory. And he continues:

In the case of our current technique of using fiber based amplifiers the “intrinsic raw materials cost” of the fiber laser amplifier is very low and if you look at every part of the full system, the intrinsic costs are quite low per sub element. This works to our advantage as we can test the basic system performance incrementally and as we enlarge the scale to increase its capability, we will be able to reduce the final costs due to the continuing exponential growth in technology. To some extent this is similar to deploying solar PV [photovoltaics]. The more we deploy the cheaper it gets per watt deployed, and what was not long ago conceivable in terms of scale is now readily accomplished.
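Lubin's computing comparison implies a growth rate that can be set beside Figure 5. Taking the "about 1 billion times faster" figure at face value, and assuming it accrued between the 1943 start date used above and an assumed present-day year of 2022, the implied doubling time is:

```python
import math


def implied_doubling_months(total_factor: float, years: float) -> float:
    """Doubling time (months) that compounds to total_factor over the given years."""
    return years * 12.0 / math.log2(total_factor)


months = implied_doubling_months(1e9, 2022 - 1943)
print(f"implied doubling time: {months:.1f} months")
```

That comes to roughly 32 months, somewhat slower than the 18 to 20 months fitted to fiber lasers in Figure 5; the comparison is only as good as the round "billion" figure.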

Hence the need to find out how to optimize the cost of the laser array that is critical to a beamed energy propulsion infrastructure. The paper is offered as an attempt to produce such a cost function, to take in the wide range of system parameters and their complex connections. Comparing their results to past NASA programs, Lubin and Cohen point out that exponential technologies fundamentally change the game, with the cost of the research and development phase being amortized over decades. Moreover, directed energy systems are driven by market factors in areas as diverse as telecommunications and commercial electronics in a long-term development phase.

An effective cost model generates the best cost given the parameters necessary to produce a product. A cost function that takes into account the complex interconnections here is, to say the least, challenging, and I leave the reader to explore the equations the authors develop in the search for cost minimums, relating system parameters to the physics. Thus speed and mass are related to power, array size, wavelength, and so on. The model also examines staged system goals – in other words, it considers the various milestones that can be achieved as the system grows.
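To make the idea of a cost minimum concrete, here is a deliberately simple two-term model, far cruder than the one Lubin and Cohen develop. It assumes capital cost is a price per watt of laser power plus a price per square meter of array (treated as a square of side D), and that a flat, perfectly reflecting sail is pushed only until the diffraction-limited spot grows to the sail's size, which makes final speed scale as √(P·D). Every number below (sail size, mass, prices) is hypothetical.

```python
import math

C = 299_792_458.0  # speed of light, m/s


def final_speed(power_w, aperture_m, sail_m, mass_kg, wavelength_m):
    """Speed of a perfect flat reflector pushed until the diffraction-limited
    spot (~2 * wavelength * L / aperture) grows to the sail size."""
    boost_length = sail_m * aperture_m / (2.0 * wavelength_m)  # L0 = d D / (2 lambda)
    accel = 2.0 * power_w / (mass_kg * C)                      # a = 2P / (m c)
    return math.sqrt(2.0 * accel * boost_length)               # v^2 = 2 a L0


def required_power(v_target, aperture_m, sail_m, mass_kg, wavelength_m):
    """Invert final_speed: power needed to reach v_target with a given aperture."""
    return v_target ** 2 * mass_kg * C * wavelength_m / (2.0 * sail_m * aperture_m)


def system_cost(aperture_m, v_target, sail_m, mass_kg, wavelength_m,
                usd_per_watt, usd_per_m2):
    """Toy capital cost split into (laser cost, optics cost)."""
    laser = usd_per_watt * required_power(v_target, aperture_m, sail_m, mass_kg, wavelength_m)
    optics = usd_per_m2 * aperture_m ** 2
    return laser, optics


def optimal_aperture(v_target, sail_m, mass_kg, wavelength_m, usd_per_watt, usd_per_m2):
    """Minimize a*K/D + b*D^2, where K = P*D is fixed by the speed target."""
    k = required_power(v_target, 1.0, sail_m, mass_kg, wavelength_m)  # P*D in W*m
    return (usd_per_watt * k / (2.0 * usd_per_m2)) ** (1.0 / 3.0)


# Hypothetical inputs, chosen only to exercise the model (1064 nm as in the paper's baseline).
params = dict(v_target=0.2 * C, sail_m=4.0, mass_kg=2e-3, wavelength_m=1.064e-6)
prices = dict(usd_per_watt=0.1, usd_per_m2=1e4)

d_opt = optimal_aperture(**params, **prices)
laser, optics = system_cost(d_opt, **params, **prices)
print(f"optimal array side: {d_opt:,.0f} m")
print(f"laser ${laser / 1e9:.1f}B, optics ${optics / 1e9:.1f}B, ratio {laser / optics:.2f}")
```

Setting the derivative of a·K/D + b·D² to zero gives D³ = aK/(2b), so in this toy model the optimum always spends twice as much on lasers as on optics. A different speed scaling or cost structure changes that split, which is one reason a realistic model needs the many parameters the authors include.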

Bear in mind that this is a cost model, not a cost estimate, which the authors argue would not be credible given the long-term nature of the proposed program. But it’s a model based on cost expectations drawn from existing technologies. We can see that the worldwide photonics market is expected to exceed $1 trillion by this year (growing from $180 billion in 2016), with annual growth rates of 20 percent.

These are numbers that dwarf the current chemical launch industry; Lubin and Cohen consider them to reveal the “engine upon which a DE program would be propelled” through the integration of photonics and mass production. While fundamental physics drives the analytical cost model, it is the long term emerging trends that set the cost parameters in the model.
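A quick compounding check on the market figures, taking "this year" to be 2022 (an assumption): 20 percent a year from $180 billion in 2016 does not reach $1 trillion in six years, so the two figures are not mutually consistent as stated. The growth rate implied by the endpoints is closer to 33 percent.

```python
def project(start: float, annual_rate: float, years: float) -> float:
    """Value after compounding start at annual_rate for the given number of years."""
    return start * (1.0 + annual_rate) ** years


def cagr(start: float, end: float, years: float) -> float:
    """Compound annual growth rate that turns start into end over the given years."""
    return (end / start) ** (1.0 / years) - 1.0


years = 2022 - 2016
print(f"$180B at 20%/yr for {years} years: ${project(180, 0.20, years):.0f}B")
print(f"growth rate needed to reach $1,000B: {cagr(180, 1000, years):.1%}")
```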

Today’s paper is Lubin & Cohen, “The Economics of Interstellar Flight,” to be published in a special issue of Acta Astronautica (preprint).

As the saying goes, “Trends continue, until they don’t”. Moore’s Law of doubling transistors per chip is close to the limits of current silicon technology. Ideas to extend it require different layouts (3D instead of 2D) and a shift to new materials, such as graphene, to name but two. CPU clock speeds have long since peaked. Multiple-core architecture is the current game (who still has a single-core CPU at the heart of their computer?), but there are limits to that if the cores must communicate.

Every technology goes through a logistic growth phase, with early exponential growth, an inflection point, and slowing growth toward an asymptote. Commercial jet aircraft are slightly slower than they used to be, although tickets are much cheaper. But changes are marginal now. The performance of a current-generation passenger jet is fairly similar to that of a Boeing 747 design from 50 years ago.

On this blog we have made similar trend forecasts for economic growth, assuming X% growth for the next few hundred years before we can launch a large starship. As the last few years have shown, the increasing need for energy and material to support this growth is creating a climate crisis, extensive environmental damage, and loss of biodiversity. A fully decarbonized energy system will push the inevitable unsustainable heat load on the planet into the future, but not a far future. Exponential growth changes things very rapidly, as we see in the current omicron-variant Covid infection rates.

All this is to say we should beware of the seductive temptation to extrapolate exponential growth patterns. They can come to a sudden slowdown, hit a hard constraint, or even reverse.
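The logistic point can be made concrete. In the sketch below, an exponential and a logistic curve start at the same value with the same growth rate; they are nearly indistinguishable early on, but the logistic bends over at its inflection point (half its ceiling) and levels off while the exponential keeps climbing. The starting value, rate (a 20-month doubling) and ceiling are hypothetical.

```python
import math


def exponential(t, n0, r):
    """Unbounded exponential growth from n0 at rate r."""
    return n0 * math.exp(r * t)


def logistic(t, n0, r, k):
    """Logistic growth from n0 at initial rate r toward the ceiling k."""
    return k / (1.0 + (k - n0) / n0 * math.exp(-r * t))


def inflection_time(n0, r, k):
    """Time at which the logistic curve reaches k/2 and growth starts to slow."""
    return math.log((k - n0) / n0) / r


n0, r, k = 1.0, math.log(2) / (20 / 12), 1e4  # 20-month doubling, ceiling of 10,000x
t_star = inflection_time(n0, r, k)
for t in (2, 10, t_star, 30):
    print(f"t={t:5.1f} y  exponential={exponential(t, n0, r):12,.0f}  "
          f"logistic={logistic(t, n0, r, k):8,.0f}")
```

Anyone fitting only the first decade of data would see two curves that agree to within a fraction of a percent, which is exactly why the ceiling is so hard to spot in advance.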

Lubin might get his cheap-enough laser arrays through cost reductions and performance increases, or he may not. It is not impossible that we are on the brink of our current global civilizational upper bound and that it might even reverse, with a declining population, lower outputs, very slow technology development except in specialized areas, reduced GDP/capita, etc. Think of the disruption to Ancient Rome when the empire reached its limits, started to retract, and the Western empire fell. Living conditions in the outer reaches of the empire, such as Britannia, fell as Rome abandoned this corner of the empire, and that was well before the sacking of the city of Rome itself. At its peak, Rome had a constant flow of grain shipments from Egypt to maintain its population, not unlike the container ships of today and the supply chains we have established, which are now somewhat damaged. Global trade in the early 20th century peaked in 1914 and didn’t recover to that level until the 1970s. One can see the effects in consumption patterns as a result, and of course, the depression between the two world wars made it so much worse.

So just as the 1960s predictions of shiny technology and space projects failed to materialize, so might the current projections of a similar shiny future fail to materialize. Autonomous cars instead of flying cars. Beamed propulsion instead of nuclear propulsion. Humanoid robots…well, they never happened, and may take longer to appear than we think, unless a breakthrough appears.

So I hope we get beamed propulsion, especially in the solar system, as that seems to be a good piece of technology infrastructure to really explore and exploit our system, allowing economic growth to continue by shifting much of the growth off-planet. (The anti-METI folks must be freaking out at the thought of multi-GW laser beams firing into the void unambiguously signaling our presence to the stars.) It may be a shiny future made stillborn by the reality of conditions on Earth.

“the increasing need for energy and material to support this growth is creating a climate crisis”

LOL. 1.2 degrees in 120 years is not a climate crisis.

You have made it quite plain in the past that you are a climate crisis denier. However, your opinion is at odds with so many scientists in so many disciplines. It is even obvious to the layman at this point. Further warming is already “baked in” even if we stopped emitting any excess CO2/CH4 today, which no one intends to do, given a “net zero by 2050” goal (and even that lacks universal commitment).

Space Solar Power is both an answer to climate change and power beaming…as solar powersats are SEPs without the xenon. They can double as sunshades…which is better than acid-rain-producing injections of sulfur dioxide aerosols that, with Tonga’s recent eruption, could have gotten us too cold. Dyson-Harrop cables might even help drain a future Carrington event. Space Solar Power gets spaceflight into the energy sector where the real money is, and can be the rising tide to lift all boats. So, no, NASA bills should not be co-opted into other things, and NASA needs budget increases with defense cuts going to SSP. Ground-based wind and solar kill birds…rectennas won’t. Having ground based Green power and laser beaming compete for Federal funds.

Space Solar Power, starwisps and grid protection projects use the SAME funds, help red space states…give green power for blue states.

I suggest going on Rogan and having the “problem solver caucus” lead this effort.

We need positive news damn it!

Don’t laugh, Antonio. You should take it seriously. The Pliocene was only 2 to 3 degrees centigrade warmer than pre-industrial periods. This is the average worldwide increase, not the increase by location. For example, “latitudes, as much as 10–20 °C warmer than today above 70°N.” The Pliocene, Wikipedia. https://en.wikipedia.org/wiki/Pliocene_climate

Our carbon dioxide levels are 415 parts per million, which is the same level as in the Pliocene, so at some point in the future our temperatures and sea level rise will be the same as in the Pliocene. The sea level was 30 meters (about 100 feet) higher in the Pliocene. The carbon dioxide levels of the past are established by ice core samples and by drilled sea-floor sediment cores, which use the shells of microscopic organisms. Scientists use boron-11/boron-10 as well as oxygen O18/O16 isotope ratios to reconstruct CO2 levels, temperature, ice cover and rainfall.

https://today.tamu.edu/2021/06/14/ancient-deepsea-shells-reveal-66-million-years-of-carbon-dioxide-levels/

https://pubs.usgs.gov/fs/0117-95/report.pdf
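For readers unfamiliar with how such isotope ratios are reported: they are normally given in delta notation, the per-mil deviation of a sample's ratio from that of an agreed reference standard (for oxygen in carbonate shells, commonly VPDB). A minimal sketch; the ratios below are made-up values, and turning a delta value into a temperature or CO2 level requires separate, proxy-specific calibrations that are not shown.

```python
def delta_permil(r_sample: float, r_standard: float) -> float:
    """Delta value in per mil: (R_sample / R_standard - 1) * 1000."""
    return (r_sample / r_standard - 1.0) * 1000.0


# Made-up ratios for illustration: a sample enriched by 0.15% relative to its standard.
r_std = 0.002
r_smp = r_std * 1.0015
print(f"delta = {delta_permil(r_smp, r_std):+.2f} per mil")
```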

Since we are here, we must have survived the ‘deadly…the skies are falling’ cough, cough Pliocene climate change era. I wonder what the West will do when the snow and ice return to Europe!

Who is “we”? The Pliocene period was 5.332 million to 2.588 million years ago. During that time our ancestors were Australopithecus sp. We were living as hunter-gatherers, with no civilization or agriculture. We also know that subsequent to that time our numbers were reduced to a genetic bottleneck by some environmental event while we were still in the pre-agricultural, pre-civilizational state. No complex supply chains for food, or manufactured items like chipped flint or obsidian. No huge population sizes dependent on high energy inputs for industrialized agriculture. Able to “mass” migrate if the local climate changed.

If the Gulf Stream slows sufficiently so that northern Europe experiences Siberian winters, the effect on the populations there will be devastating. It will make the recent heat deaths look like a small blip. Populations will freeze and starve, just as they did in Stalin’s Russia. And due to climate impacting agriculture globally, there will not be huge shipments from elsewhere to mitigate the food [and fuel] shortages. And what is supposed to happen to those living in tropical regions that become unlivable, or in places where water has run out? Where do they migrate to?

Arguments that it was hotter/colder in the past, that humans lived during periods of different climates, are irrelevant to the current situation where we have expanded to [and beyond] the sustainable population of the planet, relying on a stable climate for agriculture patterns. Any rapid change over decades is too fast to adjust to, and ecosystems cannot migrate quickly either. When California’s extensive supply of fruit and veg to the USA is curtailed due to lack of water, what are you going to do, eat cornflakes or tortillas at every meal? Maybe Soylent [Green]?

Corn needs water too. I hope you like peas and lentils.

Beans also, but even beans, peas and lentils still need some water.

I think you understand my point. CA supplies very large fractions of the fruit and veg consumption in the US. As CA dries out, output of those products will decline further. The farming community’s switch to nut exports only worsens the problem. Grass crops like corn can produce on lower water requirements, but who wants to live on a diet of corn and related grass products? As the Midwest climate becomes more variable too, even these crops will fail.

Maybe we can spend the $ and cover the cropland in greenhouses to retain moisture, as they are doing in the Middle East. Desalination of seawater would need to be a heroic effort, but mitigated somewhat with greenhouse technologies.

In any case, climate change is already worsening crop production all over the world, and this will lead to mass starvation. The baby boomer generation has never experienced severe food rationing. This may be influencing our reaction to the climate. A market-based food supply economy with scarce supplies is going to look ugly. Imagine the 2020 shortages in the grocery stores going on indefinitely. In WWII Britain, there were severe penalties for farmers holding back food for their own consumption. The pandemic has given us a good taste of how many Americans regard community responsibility, and it takes little imagination to foresee the possible outcomes.

I find it interesting, Alex, that you are laying out in graphic detail all the tremendous shortages that will arise from this climate change. Several months ago I wrote on here that perhaps the best way to approach this problem would have been a drastic curtailing of the human population by lowering birth rates throughout the world. At that time you (not so subtly) accused me of being misanthropic when I suggested that this might be a prudent course of action.

Now you seem to be changing your tune drastically because of perceived overpopulation and the inability to support these now burgeoning populations. Yet I had suggested this, as well as preached that large quantities of crops and domestic livestock be harvested and freeze-dried for storage just for such an occasion. I hate to say I told you so, but I told you so!!

Reducing the population by birth control. Let’s run some BoE numbers. Assume the average life span is 70 years. Assume that global births go to zero, as in “Children of Men”. In 35 years, the population falls by 50%, but with a demographic crisis as the population is now 35-70 years old. So even with extreme population control, you have a disaster on your hands, and it still takes 35 years to get there, long after food shortages are apparent. @Antonio (?) once scolded me, correctly as it turned out, about China’s population growth decline. I read today that China is now instituting a 3-child policy to stem population growth decline! The experience in China and India with population control resulted in a massive sex ratio imbalance, favoring boys. What do you do when your male population becomes frustrated because there are insufficient women to marry? The usual answer is to find a way to reduce the imbalance by “rapid lead poisoning”. Again, not good. The bottom line: birth control is far too late to meaningfully mitigate food supply reductions due to droughts.
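The back-of-envelope figure in that comment can be checked with a toy cohort model. It keeps the comment's assumptions (births stop entirely, everyone lives exactly 70 years) and adds one more simplification of its own: equal numbers of people at every age from 0 to 69, a rectangular age pyramid that real populations do not have.

```python
def after_zero_births(lifespan: int, elapsed: int):
    """Return (surviving fraction, youngest surviving age) for a rectangular
    age pyramid with no new births and death exactly at `lifespan`."""
    ages_now = [age + elapsed for age in range(lifespan)]  # one equal cohort per starting age
    survivors = [age for age in ages_now if age < lifespan]
    fraction = len(survivors) / lifespan
    youngest = min(survivors) if survivors else None
    return fraction, youngest


fraction, youngest = after_zero_births(70, 35)
print(f"after 35 years: {fraction:.0%} remain, youngest is {youngest}")
```

Under these assumptions half the population remains after 35 years, and nobody in it is younger than 35, matching the comment's figures.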

Historically, famines are mostly created by people reacting badly to changing circumstances. Sometimes it is set off by droughts. It has long been known that even during the famines of the past, the problem was not supply, but distribution. In CA, we are seeing a resurgence of the “water wars” where pro-ag business politicians pander to the demands of ag for “more water”, and the farmers demand the population pay for reservoirs and dams, use the very cheap water they get, and increasingly follow the profits by exporting their crops. Not even the barest touch of regulations on what can be farmed and how the crops are to be distributed.

Eating higher up the food chain increases farmland use. Increased meat consumption results in more primary production of animal feed like soybeans. Reduce meat consumption, avoid the typical 10:1 energy loss, and existing cropland could feed more people – if the food were distributed.

IOW, we could change the rules on consumption and distribution to offset the climate problem, and this would take effect almost immediately, just as wartime rationing did in Britain in WWII.

But as Heinlein exhorted his readers, we need to accept human nature as it is, not as we would like it to be. Droughts will cause food shortages, the food distribution will remain market-based to ensure the richer parts of the world remain reasonably fed, and violence and famine will increase elsewhere.

Unless there are good solutions to increase food supply that can be implemented within just a short time, we will be unleashing the Four Horsemen to balance supply and demand. I trust that is not your preferred solution.

Bottom line is that we need to try to offset global heating rather than accept it.

At this point I’m just willing to throw my hands up and say you win! It seems like you grasp at straws to bolster your arguments simply so that you can win your arguments. Now you’re saying that everything is because of “supply chain” issues which can possibly cause in some cases starvation. But if you saved food and you have reduced population then I can’t really imagine that the supply chain is the bottleneck in the entire process. But you think so and I don’t, so there!

I’m only attempting to think of a way to maximize one part of the problem and minimize another. That’s how I view all problems: as max-min problems.

I thought my 1st paragraph demonstrated why population reduction through birth control would not work over the required time scales. Over the long term, sure, but that is happening anyway. But as Keynes said, in the long run, we are all dead.

” Grass crops like corn can produce on lower water requirements, but who wants to live on a diet of corn and related grass products? ”

Nobody, and that’s why mankind releasing CO2 was a good thing, not bad. Without some extra CO2 returned to the biosphere, the next glacial period could easily have resulted in an extinction event for C3 photosynthesis plants. Basically everything but the grasses, on land.

Even today C3 plants are suffering from CO2 starvation, which is why we have extensive grass lands.

Biomes are mainly the result of water and temperature. That is why we have deserts, and not forests where water is scarce. As CO2 levels are approximately the same all over the planet, you would need to explain why grasslands don’t exist everywhere if CO2 was the limiting factor for C3 plants where grasslands exist and why grasslands do not dominate boreal forest regions. Let’s not ignore over a century’s worth of biological study explaining geographical patterns of plant growth.

“Greenland is famous for its massive glaciers, but the region was relatively free of ice until about 2.7 million years ago, according to a new study. Before then, the Northern Hemisphere had been mostly ice-free for more than 500 million years, the researchers said.

The Greenland ice sheet began building after plate tectonics and the Earth’s shifting tilt reshaped the region, the researchers found. The team narrowed the cause down to three factors: plate tectonics that lifted the region, creating soaring snow-capped mountain peaks; a northward drift from plate tectonics; and a shift in the Earth’s axis that caused Greenland to move farther north, away from the sun’s warmth. ”

https://www.livescience.com/49361-greenland-ice-glacier-formation.html

“According to the latest findings, the transition from actual green land to a large mass covered in ice began approximately 3 million years ago. At that time, there were probably some areas of Greenland that were covered in ice, but it is believed that there was nothing as large or thick as the Greenland ice sheet. Additionally, the vast majority of the island would have been ice-free.”

https://oceanwide-expeditions.com/blog/how-and-when-did-greenland-become-covered-in-ice

And what is your point? That the world was warmer 3 mya when “we” were a tiny population of Australopithecines?

Pliocene and Eocene provide best analogs for near-future climates

My point was that the higher sea level was related to the lack of glacial ice in Greenland compared with present levels. Also, the world has changed so much that one can’t compare past sea levels with modern ones to gauge how warm the world was. It was slightly warmer than today.

Or, we are living in an ice age, called the Late Cenozoic Ice Age, and the last 2 million years have been cooler, which is related to ice sheets forming in Greenland within this recent part of the 34 million years of our icehouse global climate.

Of course, as is commonly taught, during this time humans were evolving as forests gave way to grasslands in Africa due to this global cooling.

But how is this in any way relevant to our current situation? Climate change will cause large disruptions to our food and water supply. The current global population is dependent on our current farming patterns. We cannot simply “agilely adjust” to changes. If the Greenland glaciers melt, Europe might well suffer extremely cold winters again. Sea level rises will be very costly to mitigate. In CA, a meter of sea level rise will start causing severe saltwater intrusions into the Central Valley aquifers, slowly shutting down that source of water in farms outwards from the Sacramento River delta.

You seem to imply that global warming is measured by sea-level rise. Nothing can be further from the truth. We can see warming is occurring from a variety of indications, from paleoclimate impacts on the biomes and ecosystems over time, the geology indicating continental glacier retreat, and yes, sea-level rise and land uplift to restore isostatic equilibrium as the glacial mass was removed (IIRC, Britain’s south coast is still rising in response to the retreat of the glaciers that reached London). Within a few decades, we can see today the retreat of glaciers around the world, and this is not on geologic time scales, but within living memories.

We have enough data recording to compare atmospheric gas compositions with local climate and weather patterns, and can use these to compare with deep time data to extrapolate what the world might be like in the near future.

“Alex Tolley January 17, 2022, 15:20

But how is this in any way relevant to our current situation? Climate change will cause large disruptions to our food and water supply. ”

Human civilization has experienced climate change before.

One has to admit that the Sahara turning into a desert was massive climate change. Yes?

When was Sahara Desert green?

I will google it:

“14,500 to 5,000 years ago

About 14,500 to 5,000 years ago, North Africa was green with vegetation and the period is known as the Green Sahara or African Humid Period. Jun 2, 2021”

This period is called the Holocene Optimum:

https://en.wikipedia.org/wiki/Holocene_climatic_optimum

And the Sahara is being mined for its “fossil water”. It has “fossil water” because it was once a lot wetter. There is endless other evidence that it had rivers, lakes, and forests, and was almost entirely grassland, with perhaps small parts being desert. AND it’s not a one-off: it occurs in every interglacial period, during the peak of the interglacial.

I would guess our ocean was around 4 C then, and for the last 5000 years it’s been about 3.5 C. During the “Little Ice Age” the ocean cooled by a tenth of a degree or two.

Sea level fell a bit. The ocean has warmed up since the end of the Little Ice Age, with sea level rising about 7″, of which about 2″ is due to ocean thermal expansion.

I would guess we would get about 1 foot of ocean expansion if it warmed to about 4 C.

And:

Change over time

“More than 90 percent of the warming that has happened on Earth over the past 50 years has occurred in the ocean.”

https://www.climate.gov/news-features/understanding-climate/climate-change-ocean-heat-content

I’m glad you are now using evidence that is relevant to our human civilization. So yes, the Sahara was once grassland. Egypt was also the grain basket of the Mediterranean, supplying the ancient city of Rome with grain using convoys of grain ships, much as oil tankers supply our oil today. As I said, vegetation is dependent on rainfall, so what do the climate models tell us? Explainer: What climate models tell us about future rainfall is a brief, simplified version of rain and snowfall changes. Best to start with the paired high and low rainfall maps that show rather different forecasts for the Sahara, and the last map that shows where the average model has a consensus. The Mediterranean will dry out, including the ME bordering the Med, Israel, Lebanon. SW US and Mexico will dry out, as will SW Australia and S. Africa. Canada, N. Europe, Russia, the Himalayas will get wetter.

So despite the increase in temperature, Egypt’s Nile delta will not be a grain-producing region again, nor will Jordan’s rock city of Petra regain its water and become a wealthy trade route city. The consensus shows a lot of increased rainfall in the Eastern Sahara. Does this mean that grassland will appear again over this time frame? No. Cropland requires soil, and this requires organic carbon buildup, a rich soil biota, including worms, and fungi. Just pouring freshwater onto desert sand won’t work. The sands and poor soils will need to be worked, just as Israel demonstrated over decades of arid farming. With technology, we could try increasing soil formation with local composting of plant wastes (shipped in from where?), biochar, and building surface dams, as well as pumping the aquifers. We might just make the Sahara green again. Or…the worst scenarios are correct and the Sahara dries out even more, and the effort becomes almost futile. With lots of technology and resources, we could try greenhousing the Sahara, use aquaponics, and hope to at least grow fruits and vegetables. (England now has huge greenhouse facilities to grow high-value fruits like strawberries, as the climate warms. Real wine can now be made again, no more elderflower wine! That medieval warming is returning.) So that is possible, but will it happen? As CA dries out, one might think that such a rich state will be well ahead in this regard. Smart farmers will recognize that water is scarce, and therefore conserve it, growing edible crops for sale. But this is not happening at all. All that is happening is some experimentation with water application, changing crops for export, and using political muscle to grab a larger share of the available water. This isn’t to say that as goes CA, so goes the world, but I do think that the profit driver looks for the easiest solutions first, however destructive in the long term. Subsistence farmers don’t have room to experiment.

Lastly, rainfall totals are not the whole story; it is rainfall patterns. 2021 saw extensive flooding in England and Germany. Lots of water, but not helpful. Hurricanes will supply plenty of transient water for the US East Coast, but again not helpful. CA is expected to experience heavy rainfall from atmospheric rivers, but the annual supply over the summer from melting Sierra snowpack will disappear. This means farms dependent on that supply will die, and be bought by big ag businesses that can mine the aquifers while fighting for the surface water share.

The bottom line, IMO, a warming world will not likely adjust well in the coming decades of this century. Wait a few hundred years, or a thousand, and civilization will surely adapt. Population centers will change, diets will change, and hopefully, technology will continue to advance. Or it may look like the collapse of the Western Roman Empire on a near-global scale, with a slow climb back after a millennium or so, without the benefit of readily extractable fossil fuels if that civilization is forced into an energy reset.

“The bottom line, IMO, a warming world will not likely adjust well in the coming decades of this century. ”

Well, what was asked is what is going to happen in the next decade with regard to interstellar travel. Making a solar shade is quite easy compared to interstellar travel. If we wanted to cool Earth, we could do it. Likewise, if we wanted to cool Venus, we could do it. I don’t think we want to cool Earth or Venus. But if we wanted to, it would not cost much; it’s nowhere near the cost of traveling to a star.

But what we can do now is explore the Moon and then Mars, which is pocket change. Or it’s just a matter of steering the current NASA budget in the correct direction, maybe even with a lower NASA budget.

But adding 1 billion dollars to NASA now could push NASA in the right direction. Apparently, NASA is having funding problems with making new space suits, or at least that was given as one reason for delaying the exploration of the Moon.

It seems to me that NASA has wasted decades, not exploring the Moon.

Is there or is there not mineable water on the Moon, and if lunar water is mineable, where is the best place thought to be to mine it? There is no shortage of billionaires who could invest in doing this (in order to make more billions of dollars).

But as I said, if you wanted to throw 1 trillion dollars at it, one could make a solar shade now, and in the future it could cost much less than this, assuming you wanted to cool an Earth which is in an ice age.

Half a degree of cooling is worse than half a degree of warming; everyone knows this.

In terms of the Sahara Desert, I think African countries will start making it green within a couple of decades. It’s mostly a political problem rather than anything else. But political problems are hard to solve.

@gbaikie

The cheapest solution for shading the earth is to increase high albedo cloud cover using SO2.

However we shade the Earth, this is a bandaid as the CO2 emissions are acidifying the oceans and both solutions require constant maintenance.

The best solution is to stop raising the CO2 level in the first place, lower the atmospheric CO2 and let the oceanic reservoir slowly release its CO2 over the next millennium back into the atmosphere where we can properly sequester it.

Having said that, I suspect that in desperation we will end up geoengineering with shading. Countries really suffering from the heat will do this regardless of the consequences, simply as self-preservation if they can afford to do it.

It is back to human nature again. Resist change, and do the easiest things to offset the need for change, with tribalism determining who benefits and who loses.

Quote by gbaikie. “Also the world has changed so much one can’t compare sea level with modern sea levels to reflect how warm the world was.” This makes no sense. It sounds like mysterianism idea that our climate is a mystery. Scientists know what our past climate was and the carbon dioxide levels go up the further back in time we look.

Scientists don’t just use sea level, but also carbon dioxide levels and the Milankovitch cycles, which clearly show that in our ancient long-term climate, when atmospheric CO2 (in ppm) goes up, the temperature goes up, and when the CO2 level goes down, the temperature goes down.

When the air temperature goes up, the polar ice caps melt, not just Greenland.

The science of sea floor sediment cores is infallible. It uses a mass spectrometer, which measures the masses of atoms to determine their isotopic composition. The sea absorbs CO2 where our atmosphere is in contact with it; its capacity to absorb CO2 goes down when there is already a lot of CO2 in the ocean, and goes up when there is less CO2 in the atmosphere and the ocean. The amount of CO2 in the ocean depends on the CO2 in the air. Scientists have determined our past climate from the carbon in sea shells, which gives a snapshot of the CO2 in the sea at the time the animal made its calcium carbonate shell, and hence of the CO2 in the air. Also, the boron 11B/10B ratio in shells goes down when the CO2 level goes up (because the ocean becomes more acidic) and goes up when the CO2 goes down. The temperature, rainfall, sea level, and ice cover (glacial or interglacial) can also be determined from the oxygen isotope 18O/16O ratio, which is found in the shells of marine animals as well as in drilled ice core samples. Radiometric dating can confirm the time period.
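The oxygen-isotope ratios mentioned above are usually reported in "delta" notation: the per-mil deviation of a sample's ratio from a reference standard. Below is a minimal Python sketch of that formula, assuming the commonly cited VSMOW reference ratio of 2005.20 × 10^-6 for 18O/16O; the sample ratio is hypothetical. Turning a delta value into a temperature or ice-volume estimate needs archive-specific calibrations, which this sketch does not attempt.

```python
# A minimal sketch of delta notation for stable-isotope ratios (per mil).
# Assumption: the VSMOW 18O/16O reference ratio is taken as 2005.20e-6.
VSMOW_18O_16O = 2005.20e-6


def delta_permil(sample_ratio: float, standard_ratio: float = VSMOW_18O_16O) -> float:
    """Return (R_sample / R_standard - 1) * 1000, the per-mil deviation from the standard."""
    return (sample_ratio / standard_ratio - 1.0) * 1000.0


# Hypothetical sample whose 18O/16O ratio is 0.5 percent below the standard.
sample_ratio = VSMOW_18O_16O * 0.995
print(f"delta-18O = {delta_permil(sample_ratio):.1f} per mil")
```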

125,000 years ago, the sea level was 6 meters (18 feet) higher because the carbon dioxide level was at 310 ppm, which is 30 ppm higher than the preindustrial level of 1850 and earlier. In the past it took only about 20 ppm of CO2 to change our global temperature, raise the sea level 18 feet, and end a glacial period until the next ice age. 5 ppm is equal to about 39 gigatons of CO2.
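For anyone wanting to check ppm-to-gigatonne figures like the one above, here is a rough Python sketch of the conversion. It assumes the commonly cited factor of about 2.13 gigatonnes of carbon per ppm of atmospheric CO2 (roughly 7.8 Gt of CO2 per ppm), and it only counts CO2 that stays in the air; in reality part of any emission is taken up by the ocean and land.

```python
# Sketch: converting atmospheric CO2 concentration changes (ppm) to mass.
# Assumption: about 2.13 gigatonnes of carbon (GtC) per ppm, a commonly cited factor.
GTC_PER_PPM = 2.13
CO2_PER_C = 44.01 / 12.011  # ratio of the molar masses of CO2 and C
GT_CO2_PER_PPM = GTC_PER_PPM * CO2_PER_C  # roughly 7.8 Gt CO2 per ppm


def ppm_to_gt_co2(ppm: float) -> float:
    """Mass of CO2 (gigatonnes) corresponding to a concentration change in ppm."""
    return ppm * GT_CO2_PER_PPM


def gt_co2_to_ppm(gt_co2: float) -> float:
    """Concentration change (ppm) corresponding to a mass of CO2 in gigatonnes."""
    return gt_co2 / GT_CO2_PER_PPM


for ppm in (5, 30, 135):
    print(f"{ppm:>4} ppm is about {ppm_to_gt_co2(ppm):7.1f} Gt CO2")
```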

Looking at the past 800,000 years, we can clearly see that the warmest times fall in the interglacial periods, which have come every 100,000 years or so; the temperature was higher, yet the carbon dioxide level never went over about 300 ppm. The preindustrial level of 280 ppm is important because it predates the increase in CO2 from coal power, which was new technology in 1850. The glacial periods coincided with atmospheric CO2 between about 180 ppm and 280 ppm, and the interglacial periods with 280 to at most 300 ppm. Basically, it takes 50,000 years for the ocean, the carbon cycle, and plants through photosynthesis to remove 100 parts per million of CO2, which always happens before every ice age and glacial period. The Milankovitch cycles, through obliquity (axial tilt) and eccentricity (the shape of Earth’s orbit, which changes how far the Earth is from the Sun), initiate the ice ages by reducing sunlight in the polar regions, but it is the feedback processes that drive the change: the greenhouse gases CO2, CH4, and N2O acting together with water vapor, and the albedo feedback from the polar ice caps and their loss. The sea absorbs a lot of light, 60 to 96 percent depending on the angle of sunlight, reflecting only 4 to 40 percent, whereas ice reflects 75 to 85 percent of sunlight, keeping the polar regions cool.

Finally, today’s 415 ppm is the same as in the Pliocene, which means we will get the same temperature and sea level rise as in the Pliocene. If we want to keep today’s sea level or less, then we have to remove some of the CO2 from our air and get the level down from 415 ppm to 280 ppm. This can be done with carbon air filters, which remove the CO2 and turn it into solid bicarbonate. It might be a good idea to start that now instead of waiting until we are living in the exact Pliocene climate, or worse, an earlier climate without any polar ice caps and with high sea level and temperature.

https://climate.nasa.gov/climate_resources/24/graphic-the-relentless-rise-of-carbon-dioxide/

–Geoffrey Hillend January 17, 2022, 17:34

Quote by gbaikie. “Also the world has changed so much one can’t compare sea level with modern sea levels to reflect how warm the world was.” This makes no sense. It sounds like mysterianism idea that our climate is a mystery.–

As compared to the study of global climate, the theory of plate tectonics is fairly new.

Our climate is not mystery.

Our global climate is an icehouse climate. The definition of an icehouse climate, from Wikipedia:

“Throughout Earth’s climate history (Paleoclimate) its climate has fluctuated between two primary states: greenhouse and icehouse Earth. Both climate states last for millions of years and should not be confused with glacial and interglacial periods, which occur as alternate phases within an icehouse period and tend to last less than 1 million years. There are five known Icehouse periods in Earth’s climate history, which are known as the Huronian, Cryogenian, Andean-Saharan, Late Paleozoic, and Late Cenozoic glaciations.”

https://en.wikipedia.org/wiki/Greenhouse_and_icehouse_Earth

Icehouse climates have cold oceans; the average temperature of our ocean is about 3.5 C. If the ocean were 5 C, it would still be a cold ocean.

As far as I know, our ocean has not been 5 C within the last 2 million years.

That is, not since the icehouse climate cooled and Greenland formed a “permanent” ice sheet. But during past interglacial periods the ocean has warmed to about 4 C.

Having an ocean at 4 C or warmer means a very warm world compared to our present one. But a global greenhouse climate has an ocean of about 10 C. A 10 C ocean has not been seen in our age; if it were, it would not be an icehouse climate. And one would have massive ocean thermal expansion with a 10 C ocean.

No one thinks a 10 C ocean is possible within millions of years, if not tens of millions of years. It is not even discussed as worth talking about; or one could say that would be a different Earth, quite literally a different world.

The graph in this paper is more accurate. As we can see, the temperature changes precisely with the carbon dioxide levels. The last interglacial period, 125,000 years ago (125 K), coincided with 310 ppm, only 30 ppm more than the 280 ppm of the preindustrial interglacial, and had a six-meter sea level rise.

https://www.carbonbrief.org/explainer-how-the-rise-and-fall-of-co2-levels-influenced-the-ice-ages

Sorry, I’ll continue laughing. That “climate crisis”:

– has lengthened the growing season in the US by tens of days in the 20th century:

https://i0.wp.com/live-nr-2017.pantheonsite.io/wp-content/uploads/2016/05/chat-epa-growing-seasons-5.jpg?resize=660%2C720&ssl=1

– has not submerged any port city

– didn’t prevent the world’s vegetated area from increasing by an area equivalent to twice the continental United States in 35 years

https://www.nasa.gov/feature/goddard/2016/carbon-dioxide-fertilization-greening-earth

So I’ll continue not to be terrified by such a dangerous existential crisis.

Show your data source. This source indicates a different situation.

Forests, and land use over time

I already showed it.

If you are referring to the NASA leaf analysis from satellite data, then if it is correct, there is a difference of analysis between the 2 analytic sources.

What surprises me about the NASA data is that the greening is happening in areas that are also where cities are expanding. The only explanation is that the existing vegetation that was torn up is being replaced by leafy garden shrubs, or tree planting in cities. Otherwise, this makes no sense at all.

However, it is also quite possible that what is being measured is leafy crops being planted. Note as well that despite concerns over Amazon rainforest deforestation, the Amazon is increasing its leaf index. Somewhat counterintuitive.

Look at southern Europe, from Spain, across to Italy, Greece, and Turkey. All greening at high levels. It is almost as if ground truth about conditions is turned on its head – and these are all areas where water is getting scarcer.

The one region where the data makes sense is the Sahel region in Africa, showing a degreening at the southern edge of the Sahara. This is a long-standing problem due to rainfall patterns and overgrazing of livestock.

A good explanation for NASA’s data would be helpful, because the details seem counterintuitive to what we [think] we observe is happening.

I concede that the Earth is greening. A more recent NASA piece refers to this paper about the causes.

The oceans absorb 30 to 50 percent of the carbon dioxide. Photosynthesis and weathering take up the rest. The problem is that our past climate clearly shows that these do not remove enough CO2 to override the Milankovitch cycles and an 18-foot sea level rise every 100,000 years during the interglacial period. Consequently, with our power production and our use of fossil fuels such as oil and coal, we have put carbon dioxide into our air much faster than natural processes (photosynthesis, the carbon cycle, and the oceans) can remove it. The graph in my earlier post clearly shows that it takes 50,000 years for nature to remove 100 parts per million from our atmosphere. Extrapolating, even if we stop most CO2 production and convert to solar power, we will not have an ice age or glacial period in 40,000 years, but will have to wait for the next one, 140,000 years from now. This was written by a NASA scientist on the NASA forum, explaining why the Milankovitch cycles alone are NOT responsible for current climate change, which must also include our CO2 emissions.

Also, we will be stuck with a 90-foot sea level rise for 50,000 years. Even if we stop all carbon dioxide production right now, nature will remove only 100 ppm, so in 50,000 years the CO2 will be at 315 ppm (415 ppm today minus 100 ppm), which still gives an 18-foot sea level rise. Consequently, it is easy to see why we have to “draw down the CO2” by removing it from our atmosphere, so that we have only 280 ppm instead of 415 ppm. I don’t know how much plankton and plants could speed this up, but a fast drawdown would need at least 36 billion tons of removal annually, which would take 27 years to remove 127 ppm, and we would have to produce very little CO2.
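The drawdown arithmetic above can be sketched in Python as well. This is a simplification under stated assumptions: the same ~7.8 Gt CO2 per ppm factor (2.13 GtC per ppm), a constant removal rate, and no CO2 coming back out of the ocean as the air is drawn down, which in practice would slow things down. The 36 Gt per year, 127 ppm, and 100 ppm per 50,000 years figures are taken from the comments.

```python
import math

# Sketch: how long a CO2 drawdown takes at a constant net removal rate.
# Assumptions: ~7.8 Gt CO2 per ppm (2.13 GtC/ppm), no ocean outgassing,
# and a constant removal rate; all of these are simplifications.
GT_CO2_PER_PPM = 2.13 * 44.01 / 12.011


def years_to_remove(delta_ppm: float, removal_gt_per_year: float,
                    emissions_gt_per_year: float = 0.0) -> float:
    """Years to lower atmospheric CO2 by delta_ppm at a constant net removal rate."""
    net_removal = removal_gt_per_year - emissions_gt_per_year
    if net_removal <= 0:
        return math.inf  # emissions match or exceed removal: the level never falls
    return delta_ppm * GT_CO2_PER_PPM / net_removal


# Engineered removal: 36 Gt CO2 per year to take out 127 ppm (figures from the comment).
print(f"engineered: {years_to_remove(127, 36):.1f} years")

# Natural removal, using the comment's figure of 100 ppm per 50,000 years.
natural_ppm_per_year = 100 / 50_000
print(f"natural: {(415 - 280) / natural_ppm_per_year:,.0f} years to go from 415 to 280 ppm")
```

Passing a nonzero `emissions_gt_per_year` shows how continued emissions stretch the timeline, since only net removal counts.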

Humans exhale 3 billion tons of CO2, but plants and photosynthesis easily already take that up, so the idea that we need to be less populated is not valid. The fact that the Earth is greening does not necessarily mean that it could mitigate climate change. The density and height of the greenery matter, since it is the forests that remove a lot of CO2. If we spread out the leaves of every tree, they would already cover a lot of the land surface. I agree with the idea of greening to reduce atmospheric CO2, but I don’t think it will be enough without carbon capture machines, at least not fast enough.

If we pass 600 ppm, the tipping point, then there will be no ice ages, no polar caps, and a 240-foot sea level rise for one million years, considering how long it took the snow to build the two-mile-thick ice on Antarctica.

We do have the technology to modify our weather for better or for worse. Let’s do it for the better intentionally.

Agree with almost every point. We should note that greening is not an unalloyed good. Just this morning I was reading about birch trees encroaching on the Norwegian tundra regions. Apart from disrupting the reindeer herds that are the livelihoods for the Sami people, the result is increased warming feedback as the snow and ice cover is reduced.

Elsewhere, it turns out that peatlands are huge stores of carbon. Draining them to plant trees results in a net loss of carbon and a concomitant release of CH4. Greening these peatlands with tree planting is therefore counterproductive.

@Antonio’s simplistic “GW extends the growing season” ignores the disruption of the natural world in favor of industrial agriculture growing seasons equated with increased production [but ignoring the energy requirements and nutrient replacement increases.]

“Humans exhale 3 billion tons of CO2, but plants and photosynthesis easily already take that up, so the idea that we need to be less populated is not valid. ”

Silly. Fewer humans means a smaller population, and so less CO2 produced in total.

So much for all that greening and longer growing seasons. The UN report indicates that farming is increasingly degrading the soil and reducing output.

Both croplands and forests have declined since 2000.

https://www.fao.org/3/cb7654en/online/src/html/chapter-1-1.html

A large part of Moore’s law was getting more done with less energy in a smaller package. Obviously inapplicable to scaling products where the energy out is exactly what you’re aiming for, and the package size is dictated by optics.

I tried to take into account the imminent end of exponential growth on Earth in my Bottleneck Effect paper (JBIS, 2016). I agree that exponential growth is only the first phase of an overall logistic growth pattern. See: https://bis-space.com/shop/product/the-bottleneck-effect-on-interplanetary-and-interstellar-growth-over-the-coming-millennium/

Any chance of getting a non-paywalled copy?

This book review is from many moons ago (looking at those blogs feels like the Jurassic era or something); Catton is gone, and Mobus & Hagens have faded away.

Book review on The Oil Drum of Bottleneck: Humanity’s Impending Impasse, by William R. Catton, Jr.

So Catton is placing us in the class of societies (actually global civilization) that will collapse. (Collapse: How Societies Choose to Fail or Succeed)

James Lovelock has also given up hope, but at least he also won’t live to see it. IDK whether we are coming up to the cliff or whether we have done a Wile E. Coyote and gone off the edge but not yet fallen. My sense is that our various national responses to the coronavirus pandemic are a perfect indicator of how nations will respond once the impacts of heating become impossible to ignore, as crop production declines and even crop failures become more common. Our tribalism is exposed. We seem to be in a multiplayer “Prisoner’s Dilemma” with the outcome almost a foregone conclusion. :(

Maybe I’ve missed something…But I am having trouble getting too excited about these tiny, laser-propelled interstellar probes.

I’m ready to concede that perhaps there are potential laser/maser technologies that can propel lightsail nanovehicles to extreme velocities. I can only foresee insuperable problems with targeting and aiming, especially, with the lightspeed/feedback delays involved over long distances. But let us assume for the sake of argument there are ways around these obstacles.

What I can’t understand is how these flying microchips will be able to gather any meaningful data once they get there, much less send it back to us.

I’m not being facetious here. I’m well aware we have the capability of packing enormous processing power into very tiny packages, and that we are getting better at it every day. But power sources, sensors and radios are bulky items, and that’s what has to go there to do the job.

You may change your mind after reading this paper by Maccone and Antonietti:

Radio bridges of the future between Solar system and the nearest 50 stars

[While this is a new paper, IIRC, we had a post about this in 2021.]

One can see that a large antenna (perhaps much greater than 12 meters) can be placed in the SGL by sending the 10 MT craft to the SGL at 0.01c. The small 0.2c craft are sent to Alpha Cen in a stream, capturing images and other data with micro-cameras and other micro sensors; they then travel on to Alpha Cen’s SGL and transmit back to Earth, using the sail reconfigured as a radio dish and thin-film solar cells or another source to power the transmitter (possibly using several sails to handle the task). The data is then transmitted and received over the SGL bridge and sent on to Earth.
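Some rough numbers for the scenario above, as a Python sketch. The focal line of the solar gravitational lens starts where light grazing the Sun's limb, deflected by 4GM/(c²R), crosses the axis, at a distance of R²c²/(4GM), around 550 AU for the Sun. The constants are standard values; the 4.37 light-year distance to Alpha Centauri is an approximation, and the cruise times assume constant speed with no acceleration or deceleration phase.

```python
# Sketch: where the solar gravitational lens (SGL) focal region begins,
# and naive cruise times at constant speed (no acceleration phase).
# Assumptions: standard values for the Sun's radius and GM; 4.37 ly to Alpha Centauri.
C = 299_792_458.0                # speed of light, m/s
GM_SUN = 1.32712440018e20        # solar gravitational parameter, m^3/s^2
R_SUN = 6.957e8                  # nominal solar radius, m
AU = 1.495978707e11              # astronomical unit, m
LIGHT_YEAR = 9.4607304725808e15  # m
YEAR = 365.25 * 86400.0          # Julian year, s


def min_focal_distance(radius_m: float, gm: float) -> float:
    """Closest focal distance: a ray grazing the limb is bent by 4GM/(c^2 R),
    so it crosses the axis at R^2 c^2 / (4 GM)."""
    return radius_m ** 2 * C ** 2 / (4.0 * gm)


def cruise_years(distance_m: float, beta: float) -> float:
    """Travel time in years at a constant speed of beta * c."""
    return distance_m / (beta * C) / YEAR


focal = min_focal_distance(R_SUN, GM_SUN)
print(f"Sun's SGL begins about {focal / AU:.0f} AU out")
print(f"reaching it at 0.01c takes about {cruise_years(focal, 0.01):.2f} years")
print(f"4.37 ly at 0.2c takes about {cruise_years(4.37 * LIGHT_YEAR, 0.2):.1f} years")
```

The same `min_focal_distance` function applies to the far end of the bridge, given the target star's own radius and GM.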

Yes, this is hardly a simple matter to achieve, and likely not achievable in decades, but physics says the engineering is possible to achieve this. If it is theoretically doable, that at least removes the “impossible” from the project and shifts the issue to how to achieve it with the technology that is, or could shortly be, available.

It’s an interesting paper, but it does assume a 12 meter aperture at *both* ends of the link, which is pretty optimistic if the far one is a StarShot probe.

Launching the receiver on our end is relatively unproblematic, because we could get fairly substantial payloads out to any given focal line in a reasonable time, once we start using nuclear power, or beamed propulsion.

It’s the transmitter at the other end, which has to be fairly large, and perfectly aligned on the far side of the destination star from our Sun, that concerns me. The positioning has to be precise to a matter of meters for the calculations to work. If it’s off the line you lose the gain.

I suppose you could have a very large array of transmitters at our end sending out a navigational transmission, so that the far end probe would only have to pick that up, and do course corrections to end up on the right vector. But that does imply substantial delta-V would be available, and it would still have to be pretty close to the right vector to begin with, (Because the gain drops off VERY fast off axis!) and it can’t start out on that vector, because there was a star in the way of that particular route.

All in all, I think it’s probably feasible, but I have a hard time seeing it accomplished by a Starshot-scale probe.

As you understand, the authors were trying for a standard module size to make a galactic network, but for single bridges from Earth to various stars, these dish sizes can be asymmetric, just as our huge ground stations dwarf satellite antennae.

The alignment is very difficult, which is why I caveated it with the understatement: “This is hardly a simple matter to achieve”. However, the paper shows it is possible, and therefore doable, if the engineering can be worked out. Personally, I think the paper does a good job of undermining the argument that small chip sails are useless for interstellar data acquisition due to the communication problem. That now has one theoretical solution, even if technically difficult to achieve.

“Maybe I’ve missed something…But I am having trouble getting too excited about these tiny, laser-propelled interstellar probes”

Yeah, I feel like you, not only by the small amount of information these approaches can provide about the target system, if any, but because they could never transport us to the stars. I can’t be a fan of them. I prefer fusion rockets.

The thing is, if you can launch tiny, laser propelled interstellar probes in really large quantities, you can use them as a kind of “smart” mass beam, capable of course corrections, to push something much larger.

Sounds like Jordin Kare’s ‘sailbeam’ concept.

SailBeam: A Conversation with Jordin Kare

https://centauri-dreams.org/2017/07/25/sailbeam-a-conversation-with-jordin-kare/

BTW — I add this because someone always corrects my spelling of Jordin’s name — ‘Jordin’ is the correct spelling. Very creative thinker who died just a few years back. A real loss.

I’ve had this loopy idea for a long while that we could just have factories in the asteroid belt, or maybe the poles of Mercury, continually churning out small automated solar sails. Any time you need thrust, you light up a beacon, and any sails in a position to provide it accelerate in your direction, and kamikaze against your pusher plate.

For interstellar missions you add a big laser to allow them to follow you further out and reach higher velocities.

Doesn’t seem loopy to me. ;) Robotic factories on Mercury churning out cartridges of foil sails by the megaton, with nearby solar PV arrays to power ground-based beamers to fire the sails in the calculated trajectories needed to propel larger ships. If the foils were charged, the pusher plates might be huge magnetic fields, allowing for large targets but with a small mass and no damaging impacts at fractional c velocities. I wonder if that might also be a way to propel a Plasma Magnet/Wind Rider to give it an extra boost and a faster terminal velocity once it outruns the solar wind?

There’s lots of ways to do it, once you have the infrastructure. For instance, a mass beam propulsion system where it’s a beam of fusion fuel, and after capturing the momentum, you burn the fuel. A fusion rocket with no need to carry fuel could probably sustain a pretty high acceleration.

As I have mentioned before, each alternate sail can be slowed down either by a laser onboard the craft or by using the laser light from the home system via a reflector on the spacecraft. They can then collide to form an ionised gas, or even impact the craft directly. At high enough velocities fission or fusion can occur. It’s a very viable way to get heavy craft moving.

These heavy craft could potentially be useful in laying down a stream of matter as a braking runway at a target system, which a craft then uses to slow down.

If you need to slow the sails to low speed, then the ship’s energy supply has to be at least commensurate with the beamer, therefore negating the value of the external fuel.

Yes, the ship could use a reflector to slow the mini sails, but then the reduced energy at reflection means that the deceleration has to take longer (and over a longer distance) than the acceleration.

You could adjust the velocity and number of mini sails depending on the ship’s velocity, using large numbers of slow sails early on, and fewer high-velocity sails later as the ship’s velocity increases, to minimize the ship’s need for energy to extract the momentum, and to maintain a constant beamer power consumption. If the accelerator could rapid fire subcritical-mass slugs of Pu239, the ship should eject enough mass/Pu239 to allow the slugs to impact each other behind the ship and fission to act like an Orion. But again, the ship must carry some mass to do this and have enough energy to eject that mass fast enough. Arguably, one would be better to fire the atom-bombs at the ship and have them remotely detonated as they reach the optimum distance from the ship, thus avoiding carrying the a-bomb load. The ship then just becomes the pusher plate mechanism and life-support habitat. The cost is that the accelerator must either increase its energy to eject the bombs at increasing speed, or decrease the ejection rate as the ship accelerates.

It seems to me (without having done the calculations) that one is still better off using high energy em beams, with light-speed velocity, and either a sail to reach high velocity with a small payload, or a propellant load to provide thrust for a larger payload. Krafft Ehricke once proposed a solar thermal ship using inflatable mirrors to heat water as a propellant, and just sunlight as the energy input. Lubin’s beamers could provide the energy, allowing for either smaller reflectors, or power conversion devices to energize a propellant like water. Several engines based on microwave heating can generate and accelerate plasmas with an Isp around 1000 seconds, making these craft comparable to NTRs without the radiation hazard to crews and required parasitic shielding mass. I would also store energy by electrolyzing water as a most readily available, safe, lightweight energy-dense material for when the ship was out of sight of the beam, for example in the shadow of a planet during an orbit, or for a high energy maneuvering burst that exceeded the power of the beamer. Conversion of laser light to electricity could be upwards of 50% at the spacecraft.
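To put the ~1000 s Isp figure in perspective, here is a small Python sketch relating specific impulse, exhaust velocity, delta-v (via the Tsiolkovsky rocket equation), and thrust per unit jet power. The mass ratio of 3 and the 1 MW received beam are hypothetical, and treating all converted power as jet kinetic energy is an idealization; real thrusters lose more along the way.

```python
import math

# Sketch: what an Isp of about 1000 s means for a beam-powered thermal/plasma engine.
# Assumptions (hypothetical): mass ratio of 3, 1 MW of beam power received, and all
# converted power ending up as jet kinetic energy (an idealization).
G0 = 9.80665  # standard gravity, m/s^2


def exhaust_velocity(isp_s: float) -> float:
    """Effective exhaust velocity from specific impulse: v_e = Isp * g0."""
    return isp_s * G0


def delta_v(isp_s: float, mass_ratio: float) -> float:
    """Tsiolkovsky rocket equation: dv = v_e * ln(m0 / mf)."""
    return exhaust_velocity(isp_s) * math.log(mass_ratio)


def thrust_from_power(jet_power_w: float, isp_s: float) -> float:
    """Ideal thrust for a given jet power: P = 0.5 * mdot * v_e^2 and F = mdot * v_e give F = 2P / v_e."""
    return 2.0 * jet_power_w / exhaust_velocity(isp_s)


beam_power_w = 1.0e6  # hypothetical received beam power
conversion = 0.5      # laser-to-electric efficiency quoted in the comment
print(f"v_e = {exhaust_velocity(1000):.0f} m/s")
print(f"delta-v at mass ratio 3 = {delta_v(1000, 3.0) / 1000:.1f} km/s")
print(f"thrust = {thrust_from_power(beam_power_w * conversion, 1000):.0f} N")
```

The F = 2P/v_e relation is the usual trade-off: for fixed beam power, a higher Isp saves propellant but gives proportionally less thrust.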

While Lubin’s design for his asteroid-vaporizing DE-STAR requires a huge amount of energy, I would argue that, given sufficient response time, it might be better to send an IDM craft to rendezvous with the asteroid and use the beam power to maintain thrust against the asteroid to deflect it. The craft may even be able to use the asteroid material (especially if it is a CC type) as propellant.

The key here, Alex, is that you can recycle photons better with accelerated discs, and at very high accelerations (millions of g’s) the runway is a lot shorter than the proposed acceleration path of Starshot. Recycled photons could potentially reduce the capital expenditure by thousands of times.

When you say “recycle photons,” do you mean a photonic drive where the light continues to bounce between two mirrors, one fixed and the other accelerating?
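The photon-recycling idea (light bouncing between a fixed mirror and an accelerating sail) can be sketched as a geometric series. A single perfect reflection of beam power P gives a force of 2P/c; if a fraction r of the circulating power survives each round trip, the thrust multiplier after N bounces is (1 − r^N)/(1 − r), capped at 1/(1 − r). This is an idealized sketch: it assumes normal incidence, ignores the Doppler loss on each bounce off a receding sail, and the names are hypothetical.

```python
"""Idealized photon-recycling thrust between a fixed mirror and a moving sail.

Assumptions (illustrative only): normal incidence, Doppler losses ignored (slow sail),
and a fraction `round_trip_retention` of the circulating power survives each round trip.
"""

C = 299_792_458.0  # speed of light, m/s


def recycled_thrust(laser_power_w: float, round_trip_retention: float, bounces: int) -> float:
    """Thrust (N) from `bounces` reflections off the sail.

    One perfect reflection of power P gives 2P/c; pass k carries P * r**k.
    """
    if not 0.0 <= round_trip_retention < 1.0:
        raise ValueError("retention must be in [0, 1)")
    r = round_trip_retention
    # Geometric series: sum_{k=0}^{N-1} r^k = (1 - r^N) / (1 - r)
    amplification = (1.0 - r**bounces) / (1.0 - r)
    return 2.0 * laser_power_w / C * amplification


def max_amplification(round_trip_retention: float) -> float:
    """Upper bound on the thrust multiplier for unlimited bounces: 1 / (1 - r)."""
    return 1.0 / (1.0 - round_trip_retention)
```

With a hypothetical retention of 0.999 per round trip, `max_amplification` gives about 1000, the scale of saving mentioned above. At high sail speeds each bounce redshifts the light and drains its energy into the sail, so this toy model overstates the gain there.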

If you place the system on Earth, you must contend with the atmosphere at the point of release (unless you can build a vacuum tube tens of kilometers high). To maintain a fixed base, probably the best place is the Moon: massive, stable, and with no atmosphere of any significance.

What sort of runway are you talking about?

[10^6 g to reach 0.1c implies t = 3 seconds, and a launch distance of 45000 km.]
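The bracketed figures follow from constant-acceleration kinematics (v = at and v² = 2ad). The sketch below just reproduces that arithmetic; it assumes constant acceleration and non-relativistic formulas, which are adequate at 0.1c where the Lorentz factor is only about 1.005. The function name is made up.

```python
"""Check the bracketed estimate: time and distance to reach 0.1c at 10^6 g.

Assumption: constant acceleration from rest, non-relativistic kinematics.
"""
G0 = 9.80665       # standard gravity, m/s^2
C = 299_792_458.0  # speed of light, m/s


def launch_profile(accel_g: float, target_fraction_c: float) -> tuple[float, float]:
    """Return (time in s, distance in m) to reach the target speed from rest."""
    a = accel_g * G0
    v = target_fraction_c * C
    t = v / a               # from v = a t
    d = v**2 / (2.0 * a)    # from v^2 = 2 a d
    return t, d


if __name__ == "__main__":
    t, d = launch_profile(1e6, 0.1)
    print(f"t = {t:.2f} s, d = {d / 1000:,.0f} km")
```

This gives roughly 3 s and about 46,000 km, in line with the bracketed numbers.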

What material can tolerate millions of g’s, and what could guide such a projectile?

The ground-based phased array beams the laser light to a recycler at a stationary point, say the geostationary point, or as near to it as possible. There the laser light is bounced between a system of mirrors and lenses. Perhaps the laser beam from the ground could be directed straight into the laser excitation chamber to be used as the power source.

It depends on what you want to transport. Adult humans 1.0 will not go with such small craft. Microscopic organisms can go. If we can develop “living” machinery that can develop from “seeds” and local resources, we might be able to send human DNA/fertilized eggs/embryos and develop them at the target after a suitable environment is constructed.

Maybe we have to send huge slow-boat worldships to such worlds, taking many millennia, which then become the environment for such pre-adult humans.

Maybe humans x.x will be partially digital and we can send just the information of their minds to be embodied on arrival.

Arguing that chip sails are not interesting is like saying that logs are uninteresting because they cannot transport humans across oceans to colonize distant islands or continents. They are just the proof of concept. Once we have the experience, technology, and power, larger sail ships can be developed and sent.

Whatever the technology (nanoprobes or huge telescopes), we need to map out and explore the potential target worlds for human/robot destinations. There is no point heading out into the dark without knowing the characteristics of the destination. Probes will always have the upper hand in information collection compared with telescopes, as they can collect both EM and physical data. The downside is that they are relatively expensive per target and take a long time to complete a mission. Just look at what we have achieved with probes in our own system: they have far exceeded our telescopes, both ground- and space-based.

At the same time, technology miniaturization continues. Arguments that “size matters” may not hold as well in the future, especially as computational means of emulating large instruments may make those instruments unnecessary. I don’t know how far this process can continue, but even a casual perusal of technology development shows extraordinary achievements in this regard, and new devices and techniques show no sign that we are past the exponential phase yet. Even with computers, while silicon is nearing the end of its miniaturization path, other technologies are waiting in the wings to replace it, possibly making our current machines look like old valve radios by comparison. Even 25 years ago I would not have thought I could hold a powerful computer in my palm with lots of useful software and a telephone to boot. 40 years ago we had Z80/6502/8088 chips in very primitive desktop and laptop computers. I had several. 30 years ago I had a 386 desktop with KBs of RAM and 30 MB of hard drive space running Windows 3.0/3.11. Compare that to the smartphone in your pocket.
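One way to make “are we still on the exponential?” concrete is to convert a growth factor over some span of years into an implied doubling time, then see what the same doubling time predicts for the next decade. This is a minimal sketch; the 1000-fold-over-30-years figure in the usage note is a hypothetical input chosen for round numbers, not measured data, and the function names are made up.

```python
"""Implied doubling time for a quantity that grew by some factor over some years.

Illustrative only: the inputs are whatever growth factor you believe; the usage
numbers are hypothetical, not measured data.
"""
import math


def doubling_time(growth_factor: float, years: float) -> float:
    """Years per doubling, assuming the growth was a steady exponential."""
    if growth_factor <= 1.0:
        raise ValueError("growth factor must exceed 1 to define a doubling time")
    return years / math.log2(growth_factor)


def projected_factor(doubling_years: float, horizon_years: float) -> float:
    """Growth factor after `horizon_years` if the same doubling time keeps holding."""
    return 2.0 ** (horizon_years / doubling_years)
```

For example, a hypothetical 1000-fold gain over 30 years implies a doubling roughly every 3 years, and carrying that forward predicts about a 10-fold gain in the next 10 years. The projection is only as good as the assumption that the doubling time stays fixed, which is exactly the stability question raised in the post.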

20 years ago DNA sequencers were the size of refrigerators. Today you can get a “good enough” sequencer about the size of a deck of cards that can be taken into the field.

The list goes on and on. Instruments we thought had to be of a certain size are being miniaturized – making them suitable for more applications and cheaper too – consumer items – as well as making them possible instruments to be added to space probes, surface rovers, and flyers.

By the time we might start sending probes to other stars with sensible mission times, the miniaturization of technology (and the capabilities that come with it) to accompany those probes might be extraordinary to our contemporary eyes. It isn’t science fiction, but rather the extrapolation of technology development we can see today.

“How accurately can you estimate where we’ll be in ten years?”

Not very well. I thought we would have suborbital travel, and it took more than 10 years to get a suborbital joyride. Things look rather bleak for suborbital travel at the moment. As for exploring the Moon, that is way off. And now it seems a lot of things depend on one guy, Elon Musk.

If you take the US off the table, and it doesn’t seem like the Democratic Party has any interest in space exploration (not that the Republican Party does), I don’t see much happening in 10 years. People are fairly excited about Starship, and I can see why, but it seems the FAA has failed, and one failure can lead to another. We are going to have a 3D-printed rocket launch this year, and that is pretty exciting, but such 3D printing might be even more important for other, non-space things.

But anyhow, we can imagine that Starship gets launched pretty soon and is fairly successful, and that within, say, 2 years Starship is operational in the sense that it can refuel in orbit and land successfully on Earth. That seems fairly optimistic. And it seems that would push lunar exploration to happen even sooner than the date to which the Biden administration has currently delayed it. From that point, it seems a lot of things could happen within 10 years from now, and 3D-printed rockets could even be “helped” along, so that within 10 years that is how all new rockets are made. 3D printing seems likely to be quite useful for using the Moon and Mars.

I think 3D printing could be quite useful for ocean settlements, but also for everything else. But despite such optimism, in 10 years’ time one might say things are “just getting started” rather than that anything amazing has been done. So mining the Moon, exploring Mars, and ocean settlements might be starting.

It seems more likely that something “unexpected” happens within 10 years that changes the prospects for star travel. Say, a fusion electrical power plant is built.

Finding out that you could perhaps build a fusion power plant after 10 years is something one can hope for, but actually building one within 10 years is largely unexpected.

Building one is unlikely, but something else unexpected happening within 10 years is more likely.

Beyond 10 years, one could have an explosion of things happening: 3D printing as the normal way things are made, mining the Moon, settlements on Mars, orbital refueling in Venus orbit, and ocean settlements, along with ocean mining and farming.

It seems to me that using Venus orbit would be important for using the outer solar system and for exploring beyond the solar system.

It seems that, from Venus orbit, chemical rockets become more relevant to exploring beyond our solar system.

Or rather, the Oberth effect and Hohmann transfers are the stuff of chemical rockets.
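Why the Oberth effect favors chemical rockets can be shown with one energy equation. If a craft moving at exactly local escape speed v_esc makes a short burn Δv along its velocity, its speed far from the body is v_∞ = √(Δv² + 2·Δv·v_esc), so the same burn buys more the deeper in the gravity well it is made. This minimal sketch assumes an instantaneous (high-thrust) burn at that point; low-thrust burns spread over long arcs lose much of the benefit. The function name is hypothetical.

```python
"""Oberth effect sketch: hyperbolic excess speed from an impulsive burn.

Assumptions (illustrative only): an instantaneous burn aligned with the velocity,
made where the craft is moving at exactly local escape speed.
"""
import math


def excess_speed_after_burn(delta_v: float, local_escape_speed: float) -> float:
    """Speed left far from the body: v_inf = sqrt(dv^2 + 2 * dv * v_esc)."""
    # Energy balance: v_inf^2 = (v_esc + dv)^2 - v_esc^2
    return math.sqrt(delta_v**2 + 2.0 * delta_v * local_escape_speed)
```

A 1 km/s burn made far from any mass leaves 1 km/s of excess speed, but the same burn made where escape speed is 10 km/s leaves √21, about 4.6 km/s.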

I would qualify that and say that this type of manufacturing is suited to very complex objects that are extremely hard or costly to make by conventional means, especially in metals, and particularly with biological materials like cells.

It will not replace the manufacture of simple things, from steel girders and extruded shapes to injection-molded items like plastic parts and toys, plate glass, etc.

But if you want single-piece rocket engines, artificial body parts made of living materials, or just a few items of a particular design, then 3D printing is the way to go.

Time will tell whether it becomes ubiquitous and a major method for custom items, or a niche method used only for some applications.

3D printing is alluring, but as you mentioned, it has serious limitations and high costs, especially in metal. With metal 3D printing, surface finishes are generally poor, and there are concerns over porosity and density. It’s not a Star Trek matter replicator.

3D-printed parts in stainless steel are an order of magnitude more expensive than fabrications or machined castings. Perhaps some geometries that cannot be cast can justify the cost.

For polymers, it can be really helpful, especially for manufacturing complex 3D impeller geometries that 5-axis machines simply cannot duplicate.

3D printing seems limited to one material at a time. It would be difficult to include, say, copper wires within the part. Nevertheless, our company will likely purchase a polymer printer for prototype work and possibly limited production applications. It will be fun.

SpaceX 3D-prints some parts of the Raptor engine. The rest of the engine and Starship itself are produced non-additively, because that is much cheaper. SuperDraco engines, however, are fully 3D-printed. And there are fully 3D-printed rockets out there from other companies, but it’s not yet clear whether they will be competitive.

If we made a concentrated single-beam laser into an ultra-powerful directed energy weapon instead of using it for sail technology, the beam would be very high intensity, but it could also be used for METI at the speed of light. Other countries might not like that, though, because it would make satellite-destroying missiles obsolete.

No one lives on Venus.

Venus is a key location in many respects.

My favorite science explainer mentioned that warp drives that move at sub-light speed do not violate known physics nor require exotic matter.

https://www.youtube.com/watch?v=YdVIBlyiyBA

Is that not good enough? Potentially large spacecraft moving at nearly the speed of light would seem a huge improvement over solar system sized engineering projects. Of course, it could be another false dawn or the energy needed to create the warp bubble is expressed in solar masses.

Yeah, that’s where my heart is too, Patient Observer. In every sense of the word, it seems the most practical of all solutions, if it turns out to have any ability to reach physicality. There has been a considerable amount of pushback from various physics authors who are chipping away at some of the foundations behind ‘positive energy’ solutions for warp-region-based technologies.

They are considerably beyond my limited knowledge of GR. I attempt to digest some of the information in the papers, but it’s a hopeless task unless you are young and able to spend the money to attend university. Perhaps someone out there who’s just starting out in life could take a run at it and bring it to fruition. Recently there have been suggestions that even if you can create a warp drive, it will of necessity be limited to sub-light velocities, though very high ones. I put my money on this.

This topic seemed like an opportunity to weave together some thoughts based on diverse influences…

By definition, none of us on this site are uninterested in the question of interstellar voyage or discovery. But for the next couple of decades, I strongly suspect that our rewards for inquiry will be received through other means.

There might be (at least) two exponential trends to consider here.

One is space propulsion; the other is observation and detection technology. When I get into circles that talk about launches to specific destinations, I end up thinking about how much more I would like to know about the destination before committing resources (or payload) along one particular acceleration vector toward one particular star and a planetary system partly identified by Doppler measurements or some transits. One could argue that previous periods of exploration did not proceed that way… But was that really the case? Famously and initially, the pitch for the trans-Atlantic crossing was to reach China and trade, not to discover an undeveloped continent. And at first, that continent was regarded as something that got in the way, what with all that searching for a Northwest Passage.

Would we be so lucky with miniaturized spacecraft accelerated to fractions of the speed of light for a very quick flyby?

My guess is that even more pressing than the race between early space probes and later-launched, faster ones is the race between an early-launched probe and the space-borne telescope and detector technology that will identify what the interstellar probes will find, decades before their arrival or maybe even weeks after they are launched. Dollar for dollar (bitcoin for bitcoin?), a passive collector antenna in space is going to get you more than an active beam pushing a pellet. And the people on the ground would feel more secure looking up at a few-kilowatt operation rather than the gigawatts that are likely needed for support past the first parsec.