Centauri Dreams

Imagining and Planning Interstellar Exploration

A Possible Proxima Centauri c

While we continue to labor over the question of planets around Alpha Centauri A and B, Proxima Centauri — that tiny red dwarf with an unusually interesting planet in the habitable zone — remains a robust source of new work. It’s surely going to be an early target for whatever interstellar probes we eventually send, and is the presumptive first destination of Breakthrough Starshot. Now we have news of a possible second planet here, though well outside the habitable zone. Nonetheless, Proxima Centauri c, if it is there, commands the attention.

A new paper offers the results of continuing analysis of the radial velocity dataset that led to the discovery of Proxima b, work that reflects the labors of Mario Damasso and Fabio Del Sordo, who re-analyzed these data using an alternative treatment of stellar noise in 2017. Damasso and Del Sordo now present new evidence, working with, among others, Proxima Centauri b discoverer Guillem Anglada-Escudé, and incorporating astrometric data from the Gaia mission’s Data Release 2 (DR2). The result of the new analysis is a possible planet with an orbital period of 5.2 years and a minimum mass of 5.8 ± 1.9 times the mass of the Earth.
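As a rough consistency check on these numbers, Kepler's third law connects the 5.2-year period to the roughly 1.5 AU orbital distance given in the paper's title. The sketch below is illustrative only: the stellar mass of 0.12 solar masses is an assumed round value for Proxima Centauri that does not appear in this post, and the function name is hypothetical.

```python
# Kepler's third law in solar units: a^3 = M * P^2
# (a in AU, P in years, M in solar masses; the planet's mass is neglected).

PROXIMA_MASS_MSUN = 0.12  # ASSUMED stellar mass, not quoted in the post


def semi_major_axis_au(period_years: float, stellar_mass_msun: float) -> float:
    """Return the orbital semi-major axis in AU for a given period."""
    return (stellar_mass_msun * period_years ** 2) ** (1.0 / 3.0)


a_c = semi_major_axis_au(5.2, PROXIMA_MASS_MSUN)
print(f"Proxima c candidate: a = {a_c:.2f} AU")
```

Under that assumed stellar mass the result lands close to 1.5 AU, so the period and the distance quoted for the candidate agree with each other.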

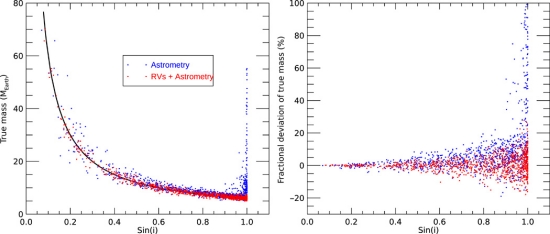

Image: This is Figure 5 from the paper. Caption: Outcomes of the combined analysis of the astrometric and RV datasets. Left: True mass of Proxima c versus the sine of the orbital inclination, as obtained from the astrometric simulations. The black line is the simulated exact solution, the blue dots represent the values derived from the Gaia astrometry alone, while the red dots are the values derived by combining the Gaia astrometry with the radial velocities. Right: Fractional deviation of the true mass (defined as the difference between the simulated and retrieved masses for Proxima c divided by the simulated value) versus sine of the orbital inclination. Credit: Damasso et al.

Remember that when dealing with radial velocity results, we can only draw conclusions about the minimum mass of the planet, because we don’t know how its orbit is inclined relative to our line of sight. The researchers find that by analyzing the photometric data and spectroscopic results, they cannot explain the planetary signal through stellar activity, but they also argue that a good deal of follow-up work is needed through a variety of means. The paper notes, for example, that Proxima was observed with the Atacama Large Millimeter/submillimeter Array (ALMA) in 2017, with an unknown source detected at 1.6 AU. Is this evidence for Proxima c?

It’s quite an interesting question, and one that involves more than a new planet:

ALMA imaging could corroborate the existence of Proxima c if the secondary 1.3-mm source is confirmed: In this sense, ALMA follow-up observations will be essential. In (28), the possible existence of a cold dust belt at ~30 AU, with inclination of 45°, is also mentioned. If Proxima c orbits on the same plane, its real mass would be mc = 8.2 M⊕
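The step from the minimum mass to the "real mass" in that passage is simple trigonometry: radial velocity measures m sin i, so dividing by the sine of the inclination recovers the true mass. Here is a minimal sketch using the 5.8 Earth-mass minimum and the 45° dust-belt inclination quoted above; the function name is hypothetical.

```python
import math


def true_mass(min_mass: float, inclination_deg: float) -> float:
    """Convert a radial velocity minimum mass (m sin i) to a true mass.

    inclination_deg is measured from the line of sight, so 90 degrees
    is an edge-on orbit, for which the true mass equals the minimum mass.
    """
    sin_i = math.sin(math.radians(inclination_deg))
    if sin_i <= 0.0:
        raise ValueError("inclination must be between 0 and 180 degrees, exclusive")
    return min_mass / sin_i


# Minimum mass from the post (Earth masses) at the dust-belt inclination.
print(f"{true_mass(5.8, 45.0):.1f} Earth masses")
```

At 45° this comes to about 8.2 Earth masses, matching the figure in the quoted passage. The smaller the inclination, the larger the true mass for the same radial velocity signal.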

Image: Artist’s impression of dust belts around Proxima Centauri. Discovered in data from the Atacama Large Millimeter/submillimeter Array (ALMA) in Chile, the cold dust appears to be in a region between one to four times as far from Proxima Centauri as the Earth is from the Sun. The data also hint at the presence of an even cooler outer dust belt and may indicate the presence of an elaborate planetary system. These structures are similar to the much larger belts in the Solar System and are also expected to be made from particles of rock and ice that failed to form planets. Such belts may also prove useful in helping us investigate the presence of a possible second planet around this star. Credit: ESO / M. Kornmesser.

But Gaia astrometry is also crucial, for there is some evidence of an anomaly in Proxima’s tangential velocity that, if confirmed, would be compatible with the existence of a planet with a mass in the 10 to 20 Earth range, and a distance between 1 and 2 AU. Further work with Gaia data is clearly in the cards:

Given the target brightness and the expected minimum size of the astrometric signature…, Gaia alone should clearly detect the astrometric signal of the candidate planet at the end of the 5-year nominal mission, all the more so in case of a true inclination angle significantly less than 90°. Proxima is one of the very few stars in the Sun’s backyard for which Gaia alone might be sensitive to an intermediate separation planetary companion in the super-Earth mass regime.

A final consideration is that while the flux contrast between the hypothetical Proxima c and the parent star (depending on albedo, among other things) is beyond the capabilities of our current direct imaging technologies, the apparent separation of planet and star should be accessible to future high-contrast imaging instruments, perhaps the European Extremely Large Telescope, which the paper mentions along with other ground- and space-based instruments. So we have what the authors describe as ‘a very challenging target,’ but one with huge interest for astronomers continuing to characterize this closest of all stellar systems.

It seems premature to get too far into a discussion of how Proxima c formed, since we have yet to confirm it. However, the authors make the case that if it is there, this planet would challenge us to explain how it formed so far beyond the snowline, where super-Earths could take advantage of the accumulation of ices. Perhaps the protoplanetary disk here was warmer than we’ve assumed. In any case, the apparent circularity of the orbit and the absence of more massive planets closer in make migration from the inner system unlikely. And I think we should leave formation issues there while we await new work, especially, the authors note, from ALMA.

The Damasso paper reanalyzing the Proxima Centauri radial velocity data in 2017 is “Proxima Centauri reloaded: Unravelling the stellar noise in radial velocities,” Astronomy & Astrophysics 599, A126 (2017) (abstract / preprint). The new Damasso et al. paper is “A low-mass planet candidate orbiting Proxima Centauri at a distance of 1.5 AU,” Science Advances Vol. 6, No. 3 (15 January 2020). Full text.


New Planets from Old Data

We rightly celebrate exoplanet discoveries from dedicated space missions like TESS (Transiting Exoplanet Survey Satellite), watching the work go from initial concept to first light in space and early results. But let’s not forget the growing usefulness of older data, tapped and analyzed in new ways to reveal hidden gems. Thus recent work out of the Carnegie Institution for Science, where Fabo Feng and Paul Butler have mined the archives of the Ultraviolet and Visual Echelle Spectrograph survey of 33 nearby red dwarf stars, a project operational from 2000 to 2007.

The duo have uncovered five newly discovered exoplanets and eight more candidates, all found orbiting nearby red dwarf stars. Two of these are conceivably in the habitable zone, putting nearby stars GJ180 and GJ229A into position as potential targets for next-generation instruments. Both of these stars host super-Earths (7.5 and 7.9 times the mass of Earth), with orbital periods of 106 and 122 days respectively. Like the other planets unveiled in the discovery paper in The Astrophysical Journal Supplement Series, these worlds were all found using radial velocity methods, uniquely powerful when deployed on low-mass red dwarfs.

Temperate super-Earths are interesting in their own right, but one of these has a particular claim to our attention, as lead author Feng explains:

“Many planets that orbit red dwarfs in the habitable zone are tidally locked, meaning that the period at which they spin around their axes is the same as the period at which they orbit their host star. This is similar to how our Moon is tidally locked to Earth, meaning that we only ever see one side of it from here. As a result, these exoplanets [have] a very cold permanent night on one side and very hot permanent day on the other—not good for habitability. GJ180d is the nearest temperate super-Earth to us that is not tidally locked to its star, which probably boosts its likelihood of being able to host and sustain life.”

But GJ229Ac is also intriguing, a possibly temperate super-Earth in a system where the host star has a brown dwarf companion. That object, GJ229B, was one of the first brown dwarfs to be imaged, making this system an interesting testbed for planet formation models. We also have a Neptune-class planet orbiting GJ433 well out of the habitable zone, far enough from its star that the authors see it as a realistic candidate for future direct imaging. The planet is the coldest Neptune-like world we’ve yet found around another star, and also the nearest to Earth.

Image: Artist’s concept of GJ229Ac, the nearest temperate super-Earth to us that is in a system in which the host star has a brown dwarf companion. Credit: Robin Dienel, courtesy of the Carnegie Institution for Science.

Efforts like this don’t stop with a single dataset, but rely on multiple follow-ups to increase the fidelity of the data. Thus Feng and Butler used the Planet Finder Spectrograph at Las Campanas (Chile), ESO’s HARPS spectrograph (High Accuracy Radial Velocity Planet Searcher) at La Silla, and HIRES (High Resolution Echelle Spectrometer) at the Keck Observatory in a combination that, in Butler’s words, “increases the number of observations and the time baseline, and minimizes instrumental biases.”

I think Feng and Butler are right to emphasize the utility of the UVES data. From the paper:

The most important observation in a precision velocity data set is the first observation because observers cannot go back in time. For most of the stars in the UVES M Dwarf program, these are the first observations taken with state-of-the-art precision. This data set is all the more remarkable for focusing on some [of] the nearest stars, and the stars most likely to harbor detectable potentially habitable planets. These observations will continue to be important in finding and constraining planets around these stars for decades to come. We do not expect this to be the final word on this remarkable data set. We look forward to future researchers reanalyzing this data set with a superior Doppler reduction package, and producing the surprises that emerge from better measurement precision.

All of which emphasizes how creative we are learning to be with the data that in recent decades have been cascading in quantity, quality and importance. Nice work by the UVES M Dwarf team. As the paper goes on to say: “Starting back in the infancy of precision velocity measurements, they boldly went straight to the heart of the most interesting and challenging problem, finding potentially habitable planets around the nearest stars.”

And kudos to Feng and Butler for dedicating their paper to Carnegie astronomer and system manager Sandy Keiser, who died suddenly in 2017 during the analysis of the data from which these results emerged, but not before she produced work critical to this paper.

The paper is Butler et al., “A Reanalysis of the UVES M Dwarf Planet Search Program,” Astronomical Journal Vol. 158, No. 6 (2 December 2019). Abstract.

A Satellite for Eurybates

3548 Eurybates is a Jupiter trojan, one of the family of objects that have moved within the Lagrange points around Jupiter for billions of years (the term is libration, meaning these asteroids actually oscillate around the Lagrange points). Consider them trapped objects, of consequence because they have so much to tell us about the early Solar System. The Lucy mission aims to visit both populations (the ‘Greeks’ and the ‘Trojans’) at Jupiter’s L4 and L5 Lagrangians when it heads for Jupiter following launch in 2021.

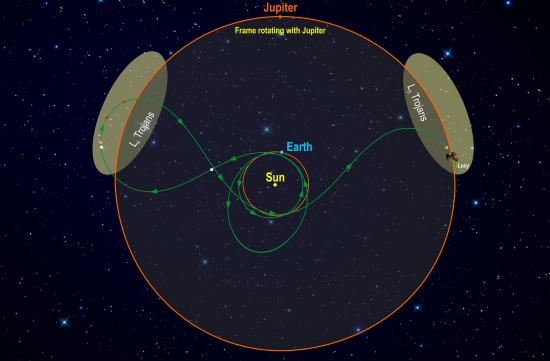

Image: During the course of its mission, Lucy will fly by six Jupiter Trojans. This time-lapsed animation shows the movements of the inner planets (Mercury, brown; Venus, white; Earth, blue; Mars, red), Jupiter (orange), and the two Trojan swarms (green) during the course of the Lucy mission. Credit: Astronomical Institute of CAS/Petr Scheirich (used with permission).

Right now the focus is on Eurybates as mission planning continues, for we’ve just learned, thanks to the Hubble Space Telescope’s Wide Field Camera 3, that this asteroid has a moon, an object more than 6,000 times fainter than Eurybates itself. According to mission principal investigator Hal Levison (Southwest Research Institute), that implies a diameter of less than 1 kilometer. The tiny moon will be among the smallest asteroids visited by Lucy, which is intended to perform flybys of six trojans, as well as a main belt asteroid along the way.
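The jump from "6,000 times fainter" to "less than 1 kilometer" follows from reflected light scaling with cross-sectional area. The sketch below assumes the moon has the same albedo as Eurybates and that both are roughly spherical, and it takes a diameter of about 64 km for Eurybates. None of these assumptions is stated in this post, and the function name is hypothetical.

```python
import math

EURYBATES_DIAMETER_KM = 64.0  # ASSUMED value, not given in the post


def satellite_diameter_km(primary_diameter_km: float, faintness_factor: float) -> float:
    """Estimate a satellite's diameter from how many times fainter it is.

    Assumes equal albedo and equal distance, so that reflected brightness
    is proportional to diameter squared.
    """
    return primary_diameter_km / math.sqrt(faintness_factor)


d_moon = satellite_diameter_km(EURYBATES_DIAMETER_KM, 6000.0)
print(f"Estimated satellite diameter: {d_moon:.2f} km")
```

Because the moon is more than 6,000 times fainter, this estimate is an upper bound under the stated assumptions, which is consistent with the sub-kilometer size Levison describes.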

Thomas Statler is a Lucy program scientist at NASA headquarters in Washington:

“There are only a handful of known Trojan asteroids with satellites, and the presence of a satellite is particularly interesting for Eurybates. It’s the largest member of the only confirmed Trojan collisional family – roughly 100 asteroids all traceable to, and probably fragments from, the same collision.”

It took three tries with Hubble to confirm the satellite’s existence, a tricky job given the object’s faintness and unknown orbit around the much brighter Eurybates. Now the task is to figure out when it will become visible again, for Eurybates won’t be observable until well clear of the Sun, which won’t happen until June. No major changes to the existing flight planning are needed to incorporate the small moon into the mission, but refining its orbit around the asteroid will help scientists schedule the best observing time during the Eurybates encounter.

Image: This diagram illustrates Lucy’s orbital path. The spacecraft’s path (green) is shown in a frame of reference where Jupiter remains stationary, giving the trajectory its pretzel-like shape. After launch in October 2021, Lucy has two close Earth flybys before encountering its Trojan targets. In the L4 cloud Lucy will fly by (3548) Eurybates (white), (15094) Polymele (pink), (11351) Leucus (red), and (21900) Orus (red) from 2027-2028. After diving past Earth again Lucy will visit the L5 cloud and encounter the (617) Patroclus-Menoetius binary (pink) in 2033. As a bonus, in 2025 on the way to the L4, Lucy flies by a small Main Belt asteroid, (52246) Donaldjohanson (white), named for the discoverer of the Lucy fossil. After flying by the Patroclus-Menoetius binary in 2033, Lucy will continue cycling between the two Trojan clouds every six years. Credit: Southwest Research Institute.

We already have one binary to look forward to, for Patroclus, in the L5 cloud, has a small satellite called Menoetius. Note how stuffed with interesting things these Lagrangian points seem to be. We have D-type asteroids like Patroclus, which likely have water ice in the interior, as well as C- and P-class asteroids, the latter darker and bearing more similarities to Kuiper Belt objects than main belt asteroids. All are thought to be rich in volatiles. Our explorations here should offer insights into primordial planet-building materials in the early Solar System.

Orange Dwarfs: ‘Goldilocks’ Stars for Life?

Our Sun is a G2V type star, or to use less formidable parlance, a yellow dwarf. It was inevitable that as we began considering planets around other stars (well before the first of these were discovered), we would imagine solar-class stars as the best place to look for life, but attention has swung to other possibilities in recent years, especially toward red dwarfs, which comprise a high percentage of all the stars in the galaxy. Now it seems that the problems of M-dwarfs are causing a reconsideration of the class in between, the K-class orange dwarfs.

Alpha Centauri B is such a star, although its proximity to Centauri A may raise problems in planet formation that we have yet to observe. Fortunately, our long-distance exploration of the Centauri stars is well underway, and we should have new information about what orbits the two primary stars here within a few short years. If we were to find a habitable zone rocky world around Centauri B, one thing that makes it interesting is the longevity of such stars.

Unlike our Sun, which is about halfway through its 10 billion year lifetime, orange dwarfs can live for tens of billions of years, offering abundant opportunity for life’s growth and evolution. While not as ubiquitous as M-dwarfs, K-dwarfs appear to be about three times more numerous than G-dwarfs like the Sun. These percentages are always being adjusted, of course, but I’ve seen estimates of G-dwarfs between 3 and 8 percent of the stellar population. A higher population of K-dwarfs, though, gives us plenty of search space for planets possibly bearing life.

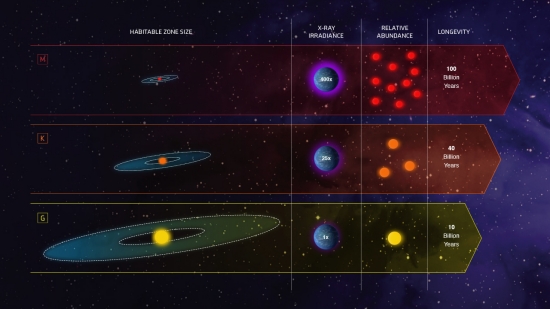

How likely are the various kinds of stars to produce habitable conditions around them? M-dwarfs give us fantastically longer lifetimes (into the trillions of years), but at the recent meeting of the American Astronomical Society in Hawaii, Edward Guinan and Scott Engle (Villanova University) described the extreme levels of UV and X-ray radiation through flares and coronal mass ejections that planets in the habitable zones of these stars can receive, with the real possibility of atmospheres being stripped away. “We’re not so optimistic anymore about the chances of finding advanced life around many M stars,” Guinan said.

Guinan and Engle have been engaged in a project called GoldiloKs at Villanova, in which they work with undergraduate students to measure factors like age, rotation rate, and radiation exposure in a sampling of stars ranging across primarily G- and K-class stars. The Hubble Space Telescope, the Chandra X-ray Observatory, and ESA’s XMM-Newton satellite are involved in the observations, with Hubble particularly useful for assessing radiation from the K-dwarfs. Because K-dwarfs lack the intense magnetic fields that power strong X-ray and UV emissions, a planet in the habitable zone of one of these stars receives a scant 1/100th as much of this radiation as a habitable zone world around an M-dwarf.

To Guinan, these orange dwarfs are indeed the Goldilocks stars:

“K-dwarf stars are in the ‘sweet spot,’ with properties intermediate between the rarer, more luminous, but shorter-lived solar-type stars (G stars) and the more numerous red dwarf stars (M stars). The K stars, especially the warmer ones, have the best of all worlds. If you are looking for planets with habitability, the abundance of K stars pump up your chances of finding life.”

Image: This infographic compares the characteristics of three classes of stars in our galaxy: Sunlike stars are classified as G stars; stars less massive and cooler than our Sun are K dwarfs; and even fainter and cooler stars are the reddish M dwarfs. The graphic compares the stars in terms of several important variables. The habitable zones, potentially capable of hosting life-bearing planets, are wider for hotter stars. The longevity for red dwarf M stars can exceed 100 billion years. K dwarf ages can range from 15 to 45 billion years. And, our Sun only lasts for 10 billion years. The relative amount of harmful radiation (to life as we know it) that stars emit can be 80 to 500 times more intense for M dwarfs relative to our Sun, but only 5 to 25 times more intense for the orange K dwarfs. Red dwarfs make up the bulk of the Milky Way’s population, about 73%. Sunlike stars are merely 6% of the population, and K dwarfs are at 13%. When these four variables are balanced, the most suitable stars for potentially hosting advanced life forms are K dwarfs. Credit: NASA, ESA, and Z. Levy (STScI).

We have about 1,000 orange dwarfs within 100 light years of the Sun, making these interesting targets for future study. Whereas our own planet will face a habitable zone that gradually moves outward as the Sun begins to swell — we’re in deep trouble in a billion years or so — K-dwarfs see much slower migration of the habitable zone, with an increase in brightness by about 10-15 percent over the Sun’s entire lifetime. No wonder Guinan and Engle single out K-star hosts like Kepler-442 and Epsilon Eridani for extra attention. Indeed, Kepler-442 b is a rocky world circling a K5 star that Guinan calls ‘a Goldilocks planet hosted by a Goldilocks star.’

Addendum: I had inexplicably included Tau Ceti above as a K-class star (and yes, I had already had my morning coffee, so I have no excuses). Thanks to readers Alan and Michal Barcikowski for pointing out the error. Tau Ceti is a cool G8 dwarf, with mass about 70 percent of the Sun’s.

All this reminds us of how our views of our own circumstances have changed over time. It was natural enough to believe that in seeking out life elsewhere in the universe, we would look for places like the one we knew supported it. But we’re beginning to ask whether, habitable though it obviously is, the Earth is as ideally habitable as it might be. Let me point you to René Heller (McMaster University) and John Armstrong (Weber State University), who raised similar issues in a 2014 paper in Astrobiology. The duo use the term ‘superhabitability,’ and, although looking primarily at planetary types, also ask about the host stars:

Higher biodiversity made Earth more habitable in the long term. If this is a general feature of inhabited planets, that is to say, that planets tend to become more habitable once they are inhabited, a host star slightly less massive than the Sun should be favorable for superhabitability. These so-called K-dwarf stars have lifetimes that are longer than the age of the Universe. Consequently, if they are much older than the Sun, then life has had more time to emerge on their potentially habitable planets and moons, and — once occurred — it would have had more time to ‘tune’ its ecosystem to make it even more habitable.

Back to Guinan and Engle, whose work over the past 30 years has included X-ray, UV and photometric studies of F- and G-class stars, a corresponding study of M-dwarfs that lasted a decade, and now the collection of similar data for K-dwarfs. My point here is that the K-dwarf work takes place within the context of a robust dataset painstakingly gathered across a wide range of spectral types, giving these two researchers’ conclusions substantial heft.

Is Earth, then, only ‘marginally habitable’ when compared to planets that could exist around stars more benign than our Sun? It’s a fascinating thought that demands we examine our own anthropocentrism while at the same time bolstering our target list for future observatories.

Heller and Armstrong’s paper is “Superhabitable Worlds,” Astrobiology Vol. 14, No. 1 (2014). Abstract available. I’m sure a paper from Guinan and Engle is in the works. For now, however, have a look at Cuntz & Guinan, “About Exobiology: The Case for Dwarf K Stars,” Astrophysical Journal Vol. 827, No. 1 (10 August 2016) (abstract).

New Entry in High Precision Spectroscopy

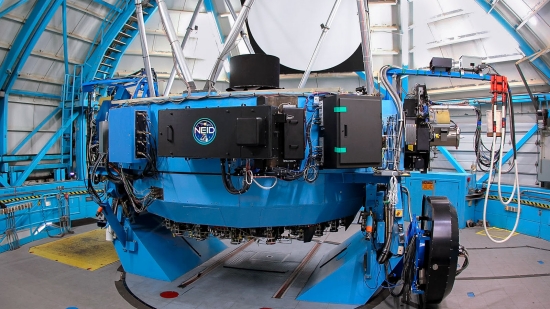

As if I don’t have enough trouble figuring out acronyms, I now have to figure out how to pronounce acronyms. The issue comes up because a new NASA instrument now in use at Kitt Peak National Observatory is a spectrograph built at Penn State called NEID. Now NEID stands for NN-EXPLORE Exoplanet Investigations with Doppler spectroscopy. Here we have an acronym within an acronym, for NN-EXPLORE itself stands for the NASA-NSF Exoplanet Observational Research partnership that funds NEID.

Here’s the trick: The acronym NEID is not pronounced ‘NEE-id’ or ‘NEED’ but ‘NOO-id.’ The reason: Kitt Peak is on land owned by the Tohono O’odham nation, and the latter pronunciation honors a verb that means something close to ‘to see’ in the Tohono O’odham language.

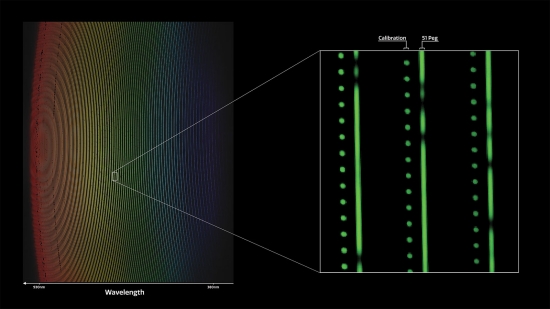

As a person fascinated with linguistics, I’m delighted to see this nod to a language whose very survival is threatened by the small number of speakers (count me as one infinitely cheered by the resurrection of Cornish, for example). And as one absorbed with exoplanet science, I note that NEID first light results were discussed at the recent meeting of the American Astronomical Society in Honolulu. The instrument is mounted on the WIYN 3.5-meter telescope at Kitt Peak. The first observations were of 51 Pegasi, the first main sequence star found to host an exoplanet.

Here we’re in the realm of using radial velocity measurements to ferret out the slight stellar motion that indicates planets. The better we get at doing radial velocity calibration, the better, not only because we can discover new planets, but because we can use the method to characterize already known worlds. Thus TOI 700 d, that interesting habitable zone world we looked at yesterday, is a case of discovery by transit methods, but having measured its size, we can now use followup radial velocity readings to get a read on its density.

Image: The NEID instrument, mounted on the 3.5-meter WIYN telescope at the Kitt Peak National Observatory. The NASA-NSF Exoplanet Observational Research (NN-EXPLORE) partnership funds NEID (short for NN-EXPLORE Exoplanet Investigations with Doppler spectroscopy). Credit: NSF’s National Optical-Infrared Astronomy Research Laboratory/KPNO/NSF/AURA.

With NEID, we continue the movement in radial velocity studies down to measurements well below 1 meter per second. Long-time Centauri Dreams readers will know that for years the HARPS spectrograph (High Accuracy Radial velocity Planet Searcher) at the ESO La Silla 3.6m telescope has been considered the gold standard, taking us down to 1 meter per second, meaning that scientists could discern via Doppler methods the tiny pull of a planet on the star, first towards us and then away from Earth. The ESPRESSO instrument (Echelle Spectrograph for Rocky Exoplanets and Stable Spectroscopic Observations), installed at the European Southern Observatory’s Very Large Telescope in Chile, takes us into the centimeters per second range, which is what detecting Earth-size habitable zone planets around Sun-like stars requires.
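To see why centimeters per second is the relevant threshold, it helps to compute the reflex velocity an Earth-mass planet in a one-year orbit induces on a Sun-like star. What follows is a minimal sketch using the standard radial velocity semi-amplitude formula for a circular, edge-on orbit; the physical constants are rounded textbook values and the function name is hypothetical.

```python
import math

G = 6.674e-11            # gravitational constant, m^3 kg^-1 s^-2
M_SUN_KG = 1.989e30      # solar mass, kg
M_EARTH_KG = 5.972e24    # Earth mass, kg
YEAR_S = 365.25 * 86400  # one Julian year, s


def rv_semi_amplitude(planet_mass_kg: float, star_mass_kg: float,
                      period_s: float, inclination_deg: float = 90.0,
                      eccentricity: float = 0.0) -> float:
    """Return the stellar reflex velocity semi-amplitude K in m/s."""
    sin_i = math.sin(math.radians(inclination_deg))
    total_mass = star_mass_kg + planet_mass_kg
    return ((2.0 * math.pi * G / period_s) ** (1.0 / 3.0)
            * planet_mass_kg * sin_i
            / total_mass ** (2.0 / 3.0)
            / math.sqrt(1.0 - eccentricity ** 2))


k_earth = rv_semi_amplitude(M_EARTH_KG, M_SUN_KG, YEAR_S)
print(f"Earth analog: K = {k_earth * 100:.1f} cm/s")
```

The result is roughly 9 centimeters per second, an order of magnitude below the 1 meter per second regime and in the range that the newest spectrographs are designed to reach.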

Image: The left side of this image shows light from the star 51 Pegasi spread out into a spectrum that reveals distinct wavelengths. The right-hand section shows a zoomed-in view of three wavelength lines from the star. Gaps in the lines indicate the presence of specific chemical elements in the star. Credit: Guðmundur Kári Stefánsson/Princeton University/NSF’s National Optical-Infrared Astronomy Research Laboratory/KPNO/NSF/AURA.

Can NEID likewise reach the realm of centimeters per second? At the AAS meeting, researchers described the instrument they are calling an ‘extreme precision Doppler spectrograph.’ Exploring radial velocity detection in this realm will demand the upgrades the NEID team has made to the observatory, allowing the spectrograph to achieve room temperature fluctuations below +/-0.2 degrees Celsius in the short term.

Vibration from the WIYN telescope also must be taken into account using high-precision accelerometers and speckle imaging data taken on-sky to achieve the needed precision. The team believes the instrument is capable of reaching 27 centimeters per second and perhaps lower. The goal of the ESPRESSO group is 10 centimeters per second. As we explore the capabilities of both instruments, we are revitalizing radial velocity and making it ever more relevant to the quest to discover and characterize small worlds that may support liquid water.

TOI 700 d: A Possible Habitable Zone Planet

Among the discoveries announced at the recent meeting of the American Astronomical Society in Hawaii was TOI 700 d, a planet potentially in the habitable zone of its star. TOI stands for TESS Object of Interest, and this is the first Earth-size planet the Transiting Exoplanet Survey Satellite has uncovered in its data whose orbit would allow the presence of liquid water on the surface. The Spitzer Space Telescope has confirmed the find, highlighting the fact that Spitzer itself, a doughty space observatory working at infrared wavelengths, is nearing the end of its operations. Thus Joseph Rodriguez (Center for Astrophysics | Harvard & Smithsonian):

“Given the impact of this discovery – that it is TESS’s first habitable-zone Earth-size planet – we really wanted our understanding of this system to be as concrete as possible. Spitzer saw TOI 700 d transit exactly when we expected it to. It’s a great addition to the legacy of a mission that helped confirm two of the TRAPPIST-1 planets and identify five more.”

Image: The three planets of the TOI 700 system, illustrated here, orbit a small, cool M dwarf star. TOI 700 d is the first Earth-size habitable-zone world discovered by TESS. Credit: NASA’s Goddard Space Flight Center.

TOI-700 is an M-dwarf star in the constellation Dorado, a southern sky object whose mass and size are roughly 40 percent that of the Sun, with half the Sun’s surface temperature. Remember that TESS monitors sky sectors in 27-day blocks, a period lengthy enough to spot the changes in stellar brightness that mark the transit of a planet across the star’s face as seen from Earth.

It’s interesting to note how any misclassification of stellar type can confound our conclusions about a transiting planet. In this case, the star was originally thought to be closer in size and type to the Sun, which would have meant planets that were larger and hotter than we now know are there. Correcting the problem revealed what looks to be a very interesting world.

“When we corrected the star’s parameters, the sizes of its planets dropped, and we realized the outermost one was about the size of Earth and in the habitable zone,” said Emily Gilbert, a graduate student at the University of Chicago. “Additionally, in 11 months of data we saw no flares from the star, which improves the chances TOI 700 d is habitable and makes it easier to model its atmospheric and surface conditions.”
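The reason the planets shrank along with the star is that a transit measures only the fraction of starlight blocked, which is the square of the planet-to-star radius ratio. The sketch below shows that dependence with a transit depth of 0.07 percent, a made-up illustrative value rather than the measured depth for TOI 700 d, and compares a Sun-size star with one 40 percent the Sun's size. The function name is hypothetical.

```python
import math

R_SUN_KM = 695_700.0   # nominal solar radius, km
R_EARTH_KM = 6_371.0   # mean Earth radius, km

ILLUSTRATIVE_DEPTH = 7.0e-4  # HYPOTHETICAL transit depth, not a measured value


def planet_radius_earths(transit_depth: float, star_radius_rsun: float) -> float:
    """Planet radius in Earth radii from depth = (R_planet / R_star)^2."""
    star_radius_km = star_radius_rsun * R_SUN_KM
    return star_radius_km * math.sqrt(transit_depth) / R_EARTH_KM


r_if_sunlike = planet_radius_earths(ILLUSTRATIVE_DEPTH, 1.0)
r_if_m_dwarf = planet_radius_earths(ILLUSTRATIVE_DEPTH, 0.4)
print(f"Star assumed Sun-size:   {r_if_sunlike:.2f} Earth radii")
print(f"Star at 0.4 solar radii: {r_if_m_dwarf:.2f} Earth radii")
```

The same dip in brightness implies a planet 2.5 times smaller once the star is recognized as 40 percent the Sun's size, which is how a world that first looked larger and hotter can turn out to be close to Earth-size.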

Video: NASA’s Transiting Exoplanet Survey Satellite (TESS) has discovered its first Earth-size planet in its star’s habitable zone, the range of distances where conditions may be just right to allow the presence of liquid water on the surface. Scientists confirmed the find, called TOI 700 d, using NASA’s Spitzer Space Telescope and have modeled the planet’s potential environments to help inform future observations. Credit: NASA’s Goddard Space Flight Center.

What we now know about TOI 700 is that there are at least three planets here, with TOI 700 d being the outermost and the only one likely to be in the habitable zone. The planet is in a 37 day orbit and receives 86 percent of the insolation that the Sun gives the Earth. Here again we look to Spitzer, for its data allowed researchers not only to confirm the existence of TOI 700 d but also to tighten the constraints on its orbital period by 56% and its size by 38%. Further observations from the Las Cumbres Observatory network also tightened the orbital period.

The other worlds in the TOI 700 system are TOI 700 b, about Earth size and probably rocky, orbiting the star every 10 days, and TOI 700 c, 2.6 times larger than Earth and in a 16 day orbit. As to the intriguing TOI 700 d, let’s keep in mind that it’s relatively close at just over 100 light years, making it a potential target for follow-up observations by future space observatories, although not the James Webb Space Telescope, as I’ll explain in a moment. TOI 700 d is also likely to be, along with its planetary companions, in tidal lock with the star, keeping one side constantly in daylight, the other in perpetual night.

This nearby star appears to have low flare activity, adding to the potential that if TOI 700 d is truly in its habitable zone, any life developing there would not have to cope with severe doses of UV and X-rays. We should be able to get radial velocity information on this system that could firm up our assumptions about the composition of the three planets by determining their density when contrasted with the transit data that gives us their size.

For the time being, researchers at NASA GSFC have modeled 20 potential environments for TOI 700 d, using 3D climate models that consider various surface types and atmospheric compositions. Led by Gabrielle Engelmann-Suissa (a USRA visiting research assistant at GSFC), the team simulated 20 spectra for the 20 modeled environments. Dry, cloudless worlds and ocean-covered surfaces showed the range of possibilities. Such simulations can be of high value, as the paper on this modeling points out:

While the detection threshold of the spectral signals for this particular planet are most likely unfeasible for near-term observing opportunities, the end-to-end atmospheric modeling and spectral simulation study that we have performed in this work is an illustrative example of how global climate models can be coupled with a spectral generation model to assess the potential habitability of any HZ terrestrial planets discovered in the future, as we have done here with the exciting new discovery, TOI-700 d. With more discoveries on the horizon with TESS and ground-based surveys, we hope that this methodology will prove useful for not only predicting the observability of HZ planets but also for interpreting actual observations in the years to come.

The paper on the modeling is one of three describing the work on TOI 700 d. It makes clear that the noise floor of JWST, which takes into account instrument noise aboard the telescope, makes it unlikely the observatory will be able to characterize TOI 700 d. Similarly, direct imaging even by next-generation extremely large telescopes (ELTs) is challenging. Thus the paper’s conclusion: “Significant characterization efforts will therefore require future space-based IR interferometer missions such as the proposed LIFE (Large Interferometer For Exoplanets) mission.”

It’s good, then, to have TOI 700 d in our catalogs, but it’s not going to be the first exoplanet whose atmosphere we can probe for potential biosignatures.

Three papers describe this work. They are Gilbert et al., “The First Habitable Zone Earth-sized Planet from TESS. I: Validation of the TOI-700 System,” submitted to AAS Journals (preprint); Rodriguez et al., “The First Habitable Zone Earth-Sized Planet From TESS II: Spitzer Confirms TOI-700 d,” submitted to AAS Journals (preprint); Engelmann-Suissa et al., “The First Habitable Zone Earth-sized Planet from TESS. III: Climate States and Characterization Prospects for TOI-700 d,” submitted to the Astrophysical Journal (preprint).