Centauri Dreams

Imagining and Planning Interstellar Exploration

Black Hole Propulsion as Technosignature

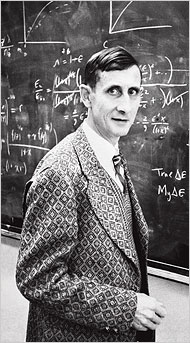

When he was considering white dwarfs and neutron stars in the context of what he called ‘gravitational machines,’ Freeman Dyson became intrigued by the fate of a neutron star binary. He calculated in his paper of the same name (citation below) that gradual loss of energy through gravitational radiation would bring the two neutron stars together, creating a gravitational wave event of the sort that has since been observed. Long before LIGO, Dyson was talking about gravitational wave detection instruments that could track the ‘gravitational flash.’

Image: Artist conception of the moment two neutron stars collide. Credit: LIGO / Caltech / MIT.

Observables of this kind, if we could figure out how to detect them (and we subsequently have), fascinated Dyson, who was in this era (the early 1960s) working out his ideas on Dyson spheres and the capabilities of advanced civilizations. As to the problematic merger of neutron stars in a ‘machine,’ he naturally wondered whether astrophysical evidence of manipulations of these would flag the presence of such cultures, noting that “…it would be surprising if a technologically advanced species could not find a way to design a nonradiating gravitational machine, and so to exploit the much higher velocities which neutron stars in principle make possible.”

He goes on in the conclusion to the “Gravitational Machines” paper to say this: “In any search for evidences of technologically advanced societies in the universe, an investigation of anomalously intense sources of gravitational radiation ought to be included.”

Searching for unusual astrophysical activity is part of what would emerge as ‘Dysonian SETI,’ or in our current parlance, the search for ‘technosignatures.’ It’s no surprise that since he discusses using binary black holes as the venue for his laser-based gravity assist, David Kipping should also be thinking along these lines. If the number of black holes in the galaxy were large enough to support a network of transportation hubs using binary black holes, what would be the telltale sign of its presence? Or would it be observable in the first place?

Remember the methodology: A spacecraft emits a beam of energy at a black hole that is moving towards it, choosing the angles so that the beam returns to the spacecraft (along the so-called ‘boomerang geodesic’). With the beam making the gravitational flyby rather than the spacecraft, the vehicle can nonetheless exploit the kinetic energy of the black hole for acceleration. Huge objects up to planetary size could be accelerated in such a way, assuming their mass is far smaller than the mass of the black hole. No fuel is spent aboard the spacecraft which, using stored energy from the beam, continues to accelerate up to terminal velocity.

Image: Simulated image of the two merging black holes detected by LIGO, viewed face-on. LIGO’s gravitational-wave detection is the first direct observation of such a merger. Credit: LIGO / AAS Nova.

Kipping likes to talk about the process in terms of a mirror. Because light loops around the approaching black hole and returns to the spacecraft, the black hole exhibits mirror-like behavior. Thus on Earth, if we bounce a ping-pong ball off a mirror, the ball returns to us. But if the mirror is moving towards us quickly, the ball returns faster because it has picked up momentum from the mirror. Light acts the same way, but light cannot return faster than the speed of light. Instead, in gaining momentum from the black hole, the light blueshifts.
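The mirror analogy can be put into numbers with the textbook result for light reflected straight back by a mirror approaching a stationary source at a fraction β of lightspeed: the returning frequency is boosted by (1 + β) / (1 − β). The sketch below is only that idealized case, not the full expression from Kipping's paper (where the spacecraft is itself moving); the function name `mirror_blueshift` is made up for illustration.

```python
def mirror_blueshift(beta_mirror: float) -> float:
    """Frequency ratio nu_f / nu_i for light bounced straight back by a
    mirror approaching a stationary source at beta_mirror (units of c).

    Idealized assumption: head-on reflection, source at rest.
    """
    if not 0.0 <= beta_mirror < 1.0:
        raise ValueError("beta_mirror must satisfy 0 <= beta < 1")
    return (1.0 + beta_mirror) / (1.0 - beta_mirror)


# The light never travels faster than c; it simply comes back bluer.
for beta in (0.01, 0.1, 0.5):
    ratio = mirror_blueshift(beta)
    print(f"mirror at {beta:4.2f} c  ->  nu_f / nu_i = {ratio:.4f}")
```

The faster the 'mirror' approaches, the more energy each returning photon carries, which is the gain the halo drive is meant to exploit.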

We exploit the gain in energy, and we can envision a sufficiently advanced civilization doing the same. If it can reach a black hole binary, it has gained an essentially free source of energy for continued operations in moving objects to relativistic speeds. Operations like these at a black hole binary result in certain effects, so there is a whisper of an observable technosignature.

I discussed the question with Kipping in a recent email exchange. One problem emerges at the outset, for as he writes: “The halo drive is a very efficient system by design and that’s bad news for technosignatures: there’s zero leakage with an idealized system.” But he goes on:

The major effect I considered in the paper is the impact on the black hole binary itself. During departure, one is stealing energy from the black binary, which causes the separation between the two dead stars to shrink slightly via the loss of gravitational potential energy. However, an arriving ship would cancel out this effect by depositing approximately the same energy back into the system during a deceleration maneuver. Despite this averaging effect, there is presumably some time delay between departures and arrivals, and during this interval the black hole binary is forced into a temporarily contracted state. Since the rate of binary merger via gravitational radiation is very sensitive to the binary separation, these short intervals will experience enhanced infall rates. And thus, overall, the binary will merge faster than one should expect naturally. It may be possible to thus search for elevated merger rates than that expected to occur naturally. In addition, if the highway system is not isotropic, certain directions are preferred over others, then the binary will be forced into an eccentric orbit which may also lead to an observational signature.

Tricky business, this, for a black hole binary in this formulation can be used not only for acceleration but deceleration. The latter potentially undoes the distortions caused by the former, though Kipping believes elevated merger rates between the binary pair will persist. Our technosignature, then, could be an elevated binary merger rate and excess binary eccentricity.
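To see why a temporarily contracted binary matters, it helps to recall the standard result (Peters, 1964) for a circular binary losing energy to gravitational waves: the time to merger scales as the fourth power of the separation. The sketch below uses that formula with assumed values (two 10-solar-mass black holes and an arbitrary starting separation, neither taken from Kipping's paper) to show how a 1 percent contraction shortens the remaining lifetime.

```python
G = 6.67430e-11        # gravitational constant, m^3 kg^-1 s^-2
C = 2.99792458e8       # speed of light, m/s
M_SUN = 1.98847e30     # solar mass, kg


def merger_time_s(m1_kg: float, m2_kg: float, separation_m: float) -> float:
    """Peters (1964) merger time for a circular binary, in seconds."""
    return (5.0 / 256.0) * C**5 * separation_m**4 / (
        G**3 * m1_kg * m2_kg * (m1_kg + m2_kg)
    )


# Assumed, illustrative values (not from the paper).
m1 = m2 = 10.0 * M_SUN
a0 = 1.0e9             # starting separation in metres

t_natural = merger_time_s(m1, m2, a0)
t_contracted = merger_time_s(m1, m2, 0.99 * a0)
ratio = t_contracted / t_natural

print(f"lifetime ratio after a 1% contraction: {ratio:.4f}")
```

Because of the fourth-power dependence, even a small contraction trims the remaining lifetime by about four times as much in percentage terms, which is the sensitivity Kipping refers to.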

I was also interested in directionality: was the spacecraft limited in where it could go? I learned that the halo drive would be most effective when moving in a direction that lies along the plane of the binary orbit. Traveling out of this plane is possible, though it would involve using onboard propellant to attain the correct trajectory. The potential of reaching the speed of the black hole itself remains, but excess stored energy would then need to be applied to an onboard thruster to make the course adjustment. Kipping says he has not run the numbers on this yet, but from the work so far he believes that a spacecraft could work with angles as high as 20 degrees out of the plane of the binary orbit and still reach an acceleration equal to that of the black hole.

For more on the halo drive, remember that Kipping has made available a video that you can access here. The other citations are Kipping, “The Halo Drive: Fuel-free Relativistic Propulsion of Large Masses via Recycled Boomerang Photons,” accepted at the Journal of the British Interplanetary Society (preprint); and Dyson, “Gravitational Machines,” in A.G.W. Cameron, ed., Interstellar Communication, New York: Benjamin Press, 1963, Chapter 12.

Investigating the ‘Halo Drive’

One of the interesting things about gravitational assists is their ability to accelerate massive objects up to high speeds, provided of course that the astrophysical object being used for the assist is moving at high speeds itself. Freeman Dyson realized, as we saw yesterday, that a pair of tightly rotating white dwarfs could offer such an opportunity, while a binary neutron star carried even more clout. When Dyson was writing his “Gravitational Machines” paper, neutron stars were still a theoretical concept, so he primarily focused the paper on white dwarfs.

Get two neutron stars in a tight enough orbit and the speeds they achieve would make it possible to accelerate a spacecraft making a gravity assist up to a substantial percentage of lightspeed. But what an adventure that close pass would be — the tidal forces would be extreme. I don’t recall seeing a neutron star propulsive flyby portrayed in science fiction (help me out here), though Gregory Benford offers a variant on the white dwarf idea in his early work Deeper than the Darkness (1970, later re-done as The Stars in Shroud, which ran in Galaxy in 1978), where a neutron star and an F-class star comprise a system he calls a ‘Flinger,’ with potential uses both for acceleration and deceleration.

David Kipping’s thoughts on extending Dyson to black holes were partially triggered by a fascinating paper from William Stuckey in 1993 entitled “The Schwarzschild Black Hole as a Gravitational Mirror” (citation below). The gravitational mirror in the title is what happens when photons skim near the event horizon and return to the source, a photon ‘boomerang’ that gains propulsive impact because the returning light rays receive a blueshift thanks to the black hole’s relative motion.

Thus the Kipping concept: Use laser beaming technologies to beam toward a precisely targeted member of a black hole binary. The light is returned in blue-shifted form to the spacecraft, the extra energy being used to push the craft. What’s especially intriguing here is that in this scenario, it is not the spacecraft that is making the messy plunge between two rapidly moving, tightly orbiting black holes, but rather the photon beam that is used for propulsion.

It is the light beam that gets the gravitational assist as photons are re-emitted and re-absorbed, for as the paper puts it, “…the halo drive transfers kinetic energy from the moving black hole to the spacecraft by way of a gravitational assist.” A less harrowing experience for any crew, to be sure, and one in which extreme time dilation can be avoided, not to mention the dangers of tidal disruption and radiation referred to above.

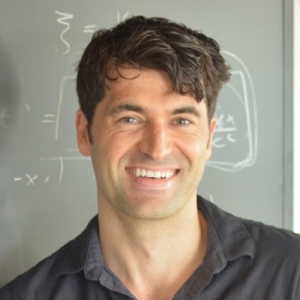

Image: David Kipping (Columbia University), creator of the ‘halo drive’ concept.

Kipping described the idea to me in an email recently:

The idea is essentially to perform a Dyson slingshot remotely, by firing a collimated particle/energy beam just to the side of the event horizon of a Schwarzschild black hole. If you choose the angle carefully, the beam loops around (like a halo) and comes back to you. If the black hole is moving towards you (I envisaged a compact binary like Dyson), then the beam returns blue shifted. When you initially fire the beam, your ship receives a small momentum kick and when the beam returns and strikes your ship you get another. This is how the ship is propelled, much like a light sail. But the beam actually returns with more energy than it departed, since it siphoned some of the kinetic energy from the black hole. So not only did you accelerate, but your ship actually gained stored energy.

Do this right and the speed of your spacecraft eventually matches that of the black hole, but the cumulative blue shifts allow the craft to continue firing the laser past that point. No new ‘free’ energy is gained but energy from the stored cells aboard the craft can keep the acceleration going up to, the author calculates, 4/3 the speed of the black hole. It’s interesting to note that we are not limited to small masses in such a calculation. Unlike the Breakthrough Starshot energy issue we discussed yesterday, we can drive arbitrarily large masses up to potentially relativistic speeds. All of this by exploiting the fantastic energies available through astrophysical objects.

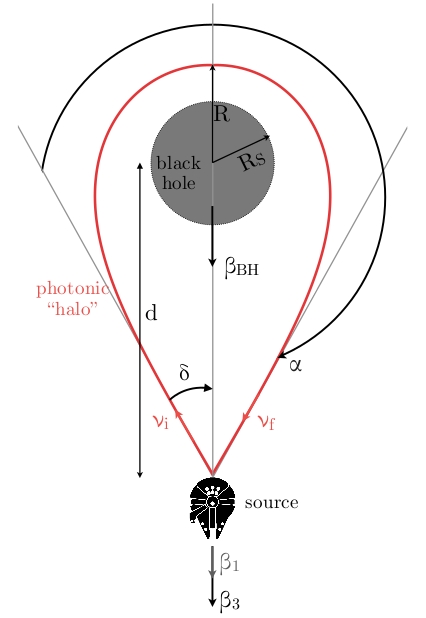

Image: This is Figure 1 from the paper. Caption: Figure 1. Outline of the halo drive. A spaceship traveling at a velocity βi emits a photon of frequency νi at a specific angle δ such that the photon completes a halo around the black hole, returning shifted to νf due to the forward motion of the black hole, βBH. Credit: David Kipping.

As you would imagine, for the gravitational mirror to function in this way, the beam must be precisely oriented. From the paper:

In order for the deflection to be strong enough to constitute a boomerang, this requires the light’s closest approach to the black hole to be within a couple of Schwarzschild radii, RS ≡ 2GM/c². Light which makes a closest approach smaller than 3GM/c² becomes trapped in orbit, known as the photon sphere, and thus typical boomerang geodesics skim just above this critical distance [italics mine].
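For a sense of scale, the two distances in that passage are easy to evaluate. The sketch below computes the Schwarzschild radius and the photon sphere radius for a black hole of a given mass; the 10-solar-mass example is an assumption chosen for illustration, not a value from the paper.

```python
G = 6.67430e-11        # gravitational constant, m^3 kg^-1 s^-2
C = 2.99792458e8       # speed of light, m/s
M_SUN = 1.98847e30     # solar mass, kg


def schwarzschild_radius_m(mass_kg: float) -> float:
    """Schwarzschild radius R_S = 2GM/c^2, in metres."""
    return 2.0 * G * mass_kg / C**2


def photon_sphere_radius_m(mass_kg: float) -> float:
    """Photon sphere radius 3GM/c^2 (1.5 R_S), in metres."""
    return 3.0 * G * mass_kg / C**2


mass = 10.0 * M_SUN    # assumed, illustrative black hole mass
rs_km = schwarzschild_radius_m(mass) / 1e3
rph_km = photon_sphere_radius_m(mass) / 1e3

print(f"Schwarzschild radius: {rs_km:.1f} km")
print(f"Photon sphere radius: {rph_km:.1f} km")
```

For a stellar-mass black hole the beam has to skim a target only tens of kilometers across, which gives some feel for the pointing precision involved.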

Kipping calls this a ‘halo drive’ because the photons returning to the craft appear as a halo around the black hole. Single black holes as well as binaries can be used (and evidently Kerr black holes as well as Schwarzschild black holes, though the author plans future work on this), but the paper notes that the potential for tight configurations at relativistic speeds makes binaries preferable. Some 10 million binary black holes are thought to exist in the galaxy [I’ve seen this figure reduced to 1 million recently; clearly, the issue is still open], raising the possibility of a network of starship acceleration points or, for that matter, deceleration stations.

A range of possible uses for binary black holes emerges, as the paper notes:

Although not the focus of this work, it is worth highlighting that halo drives could have other purposes besides just accelerating spacecraft. For example, the back reaction on the black hole taps energy from it, essentially mining the gravitational binding energy of the binary. Similarly, forward reactions could be used to not only decelerate incoming spacecraft but effectively store energy in the binary like a fly-wheel, turning the binary into a cosmic battery.

Another possibility is that the halos could be used to deliberately manipulate black holes into specific configurations, analogous to optical tweezers. This could be particularly effective if halo bridges are established between nearby pairs of binaries, causing one binary to excite the other. Such cases could lead to rapid transformation of binary orbits, including the deliberate liberation of a binary.

A natural question is why an interstellar civilization, one already capable of reaching a black hole binary and manipulating it in this manner, would need to establish a galactic network of transit points. A possible answer is that the amount of energy liberated from such a black hole binary is, as with other kinds of gravitational assist, arriving essentially ‘free’ at the spacecraft. Thus a transportation network on the cheap could be established between specific locations, a mechanism for cost-savings that may well be too efficient to ignore.

Freeman Dyson’s interest in using astrophysical objects for propulsion included, of course, his abiding fascination with the technosignatures of advanced civilizations. Would a transport infrastructure at work in the galaxy exhibit evidence of its use to distant astronomers? In my next post, I’ll look into the possibility.

The Dyson paper is “Gravitational Machines,” in A.G.W. Cameron, ed., Interstellar Communication, New York: Benjamin Press, 1963, Chapter 12. The Kipping paper is “The Halo Drive: Fuel-free Relativistic Propulsion of Large Masses via Recycled Boomerang Photons,” accepted at the Journal of the British Interplanetary Society (preprint). The Stuckey paper is “The Schwarzschild Black Hole as a Gravitational Mirror,” American Journal of Physics Vol. 61, Issue 5 (1993), pp. 448-456.

Pondering the ‘Dyson Slingshot’

Let’s start the week by talking about gravitational assists, where a spacecraft uses a massive body to gain velocity. Voyager at Jupiter is the classic example, because it so richly illustrates the ability to alter course and accelerate without propellant. Michael Minovitch was working on this kind of maneuver at UCLA as far back as the early 1960s, but it was considered even before this, as in a 1925 paper from Friedrich Zander. It took Voyager to put gravity assists into the public consciousness because the idea enabled the exploration of the outer planets.

Can we use this kind of maneuver to help us gain the velocity we need to make an interstellar crossing? Let’s consider how it works: We’re borrowing energy from a massive object when we do a gravity assist. From the perspective of the Voyager team, their spacecraft got something for ‘free’ at Jupiter, in the sense that no additional propellant was needed. What’s really happening is that the spacecraft gained energy at the expense of the planet. Jupiter being what it is, the change in its own status was invisible, but it lent enough energy to Voyager to prove enabling.

According to David Kipping (Columbia University), the maximum speed increase equals twice the velocity of the planet we’re using for the maneuver, and when you look at Jupiter’s orbital speed around the Sun (around 13.1 kilometers per second), you can see that we’re only talking about a fraction of what it would take to get us to interstellar speeds. But the principle is enticing, because traveling with little or no propellant is a longstanding goal, one that drives research into solar sails and their fast cousins, beamed lightsails. And it has been much on Kipping’s mind.
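The arithmetic behind that comparison is short enough to write out. The sketch below applies the 'twice the planet's velocity' ceiling to Jupiter's orbital speed and expresses the result as a fraction of lightspeed; the function name is hypothetical, and the ceiling is the idealized best case, not what any actual flyby achieves.

```python
C_KM_S = 299_792.458   # speed of light, km/s


def max_slingshot_gain_km_s(body_speed_km_s: float) -> float:
    """Idealized upper limit on the speed gained in a gravity assist:
    twice the speed of the body being used."""
    return 2.0 * body_speed_km_s


jupiter_speed = 13.1   # km/s, Jupiter's orbital speed as quoted above
gain = max_slingshot_gain_km_s(jupiter_speed)
fraction_of_c = gain / C_KM_S

print(f"maximum gain: {gain:.1f} km/s")
print(f"as a fraction of lightspeed: {fraction_of_c:.2e}")
```

Even in the best case, a Jupiter assist delivers less than a hundredth of a percent of lightspeed, which is why faster-moving objects become interesting.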

For gravitational assists from planets are only one aspect of the question, there being other kinds of astrophysical objects that can help us out. Depending on their orbital configuration, some of these are moving fast indeed. In the early 1960s, Freeman Dyson went to work on the physics of gravitational assists around binary white dwarf stars — he would ultimately go on to consider the case of neutron star binaries (back when neutron stars were still purely theoretical). Such concepts obviously imply an interstellar civilization capable of reaching the objects in the first place. But once there, the energies to be exploited would be spectacular.

While I want to begin with Dyson’s ideas, I’ll move tomorrow to Kipping’s latest paper, which addresses the question in a novel way. Kipping, well known for his work in the Hunt for Exomoons with Kepler project, has been pondering Dyson’s notions but also applying them to what would seem, on the surface of things, to be an entirely different proposition: Beamed propulsion. How he combines the two may surprise you as much as it did me, as we’ll see in coming days.

Image: An artist’s conception of two orbiting white dwarf stars. Credit: Tod Strohmayer (GSFC), CXC, NASA, Illustration: Dana Berry (CXC).

Nature of the Question

If we talk about manipulating astrophysical objects, a natural objection arises: Why should we study things that are impossible for our species today? After all, we can get to Jupiter, but getting to the nearest white dwarf, much less a white dwarf binary, is beyond us.

But big ideas can be productive. Consider Daedalus, conceived in the 1970s as the first serious design for a starship. The idea was to demonstrate that a spacecraft could be designed using known physics that could make a journey to another star. The massive two-stage Daedalus (54,000 tonnes) seems impossible today and doubtless will never be built. Was it worth studying?

The answer is yes, because once you’ve established that something is not impossible, you can go to work on ways to engineer a result that may differ hugely from the original. Breakthrough Starshot is built around the idea of using lasers to propel a different kind of spacecraft, not of 54,000 tonnes but of 1 gram, carried by a small lightsail, and designed to be sent not as a one-off mission but as a series of probes driven by the same laser installation.

Once again we’re stretching our thinking, but here the technologies to do such a thing may (or may not, depending on what Breakthrough Starshot’s analyses come up with) be no more than a few decades away. The current Breakthrough effort is all about finding out what is feasible.

Again we’re designing something before we’re sure we can do it. The challenges are obviously immense. Consider: To go interstellar with cruise times of several decades, we need to ramp up velocity, and that takes enormous amounts of energy. Kipping calculates that 2 trillion joules — the output of a nuclear power plant running continuously for 20 days — would be needed to send the Breakthrough Starshot ‘chip’ payload to Proxima Centauri. And that’s just for one ‘shot’, not for the multiple chips envisioned in what might be considered a ‘swarm’ of probes.
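The 2 trillion joule figure can be checked against the kinetic energy of the payload alone. The sketch below assumes the 1 gram mass mentioned earlier and a cruise speed of 20 percent of lightspeed, which is Breakthrough Starshot's stated goal but is not given in the text above; it ignores the sail and any losses in the laser system.

```python
import math

C = 2.99792458e8       # speed of light, m/s


def relativistic_kinetic_energy_j(mass_kg: float, beta: float) -> float:
    """Kinetic energy (gamma - 1) m c^2 for a body moving at beta * c."""
    gamma = 1.0 / math.sqrt(1.0 - beta**2)
    return (gamma - 1.0) * mass_kg * C**2


payload_mass = 1.0e-3  # 1 gram, from the Starshot description above
cruise_beta = 0.2      # assumed cruise speed (fraction of c)

energy = relativistic_kinetic_energy_j(payload_mass, cruise_beta)
print(f"kinetic energy of the payload: {energy:.2e} J")
```

The result comes out a little under 2 trillion joules, in line with the number quoted for a single 'shot'.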

Working with Massive Objects

Are there other ways to generate such energies? Freeman Dyson’s extraordinary white dwarf binary gravitational assist appears in “Gravitational Machines,” a short paper that ran in a book A.G.W. Cameron edited called Interstellar Communication (New York, 1963). Conventional gravity assists aren’t sufficient because to be effective, a gravitational ‘machine’ would have to be built on an astronomical scale. Fortunately, the universe has done that for us. So we should be thinking about the principles involved, and what they imply:

…if our species continues to expand its population and its technology at an exponential rate, there may come a time in the remote future when engineering on an astronomical scale will be both feasible and necessary. Second, if we are searching for signs of technologically advanced life already existing elsewhere in the universe, it is useful to consider what kinds of observable phenomena a really advanced technology might be capable of producing.

Dyson considers the question in terms of binary stars, specifically white dwarfs, but goes on to address even denser concentrations of matter in neutron stars. Now we’re talking about a kind of gravitational assist that has serious interstellar potential. A spacecraft could be sent into a neutron star binary system for a close pass around one of the stars, to be ejected from the system at high velocity. If 3,000 kilometers per second appears possible with a white dwarf binary, fully 81,000 kilometers per second (0.27 c) could occur with a neutron star binary.

Hence the ‘Dyson slingshot.’ (As an aside, I’ve always wondered what it must be like to have a name so famous in your field that everything from ‘Dyson spheres’ to ‘Dyson dots’ are named after you. The range of Dyson’s thinking on these matters certainly justifies the practice!).

The slingshot isn’t particularly effective with stars of solar class, where what you gain from a gravitational assist is still outweighed by the possibility of using stellar photons for propulsion. But as Dyson shows, once you get into white dwarf range and then extend the idea down to neutron stars, you’re ramping up the gravitational energy available to the spacecraft while at the same time reducing stellar luminosity. An advanced civilization, in ways Dyson explores, might tighten the orbital distance until the binary’s orbital period reached a scant 100 seconds.
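Kepler's third law gives a rough feel for what a 100-second orbital period implies. The sketch below assumes two equal stars of one solar mass each on a circular orbit (values chosen for illustration, not taken from Dyson's paper) and works out their separation and orbital speed.

```python
import math

G = 6.67430e-11        # gravitational constant, m^3 kg^-1 s^-2
M_SUN = 1.98847e30     # solar mass, kg


def binary_separation_m(total_mass_kg: float, period_s: float) -> float:
    """Separation of a circular binary from Kepler's third law."""
    return (G * total_mass_kg * period_s**2 / (4.0 * math.pi**2)) ** (1.0 / 3.0)


def equal_mass_orbital_speed_m_s(total_mass_kg: float, period_s: float) -> float:
    """Speed of each star in an equal-mass circular binary.
    Each star circles the center of mass at half the separation."""
    a = binary_separation_m(total_mass_kg, period_s)
    return math.pi * a / period_s


total_mass = 2.0 * M_SUN   # assumed: two one-solar-mass stars
period = 100.0             # seconds, the period mentioned above

separation_km = binary_separation_m(total_mass, period) / 1e3
speed_km_s = equal_mass_orbital_speed_m_s(total_mass, period) / 1e3

print(f"separation: {separation_km:,.0f} km")
print(f"orbital speed of each star: {speed_km_s:,.0f} km/s")
```

Under these assumptions each star moves at well over a thousand kilometers per second, roughly a hundred times faster than Jupiter, which is the regime where a gravity assist starts to matter for interstellar travel.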

Now a gravity assist has serious punch. In other words, there is the potential here for a civilization to manipulate astrophysical objects to achieve a kind of galactic network, where binary neutron stars offer transportation hubs for propelling spacecraft to relativistic speeds. As you would imagine, this plays to Dyson’s longstanding interest in searching for technological artifacts, and we’ll be talking about that possibility as we get into David Kipping’s new paper.

For Kipping will take Dyson several steps further, by looking not at neutron stars but black hole binaries and coming up with an entirely novel way of exploiting their energies, one in which a beam of light, rather than the spacecraft itself, gets the gravitational assist and passes those energies back to the vehicle. Kipping calls his idea the ‘Halo Drive,’ and we’ll begin our discussion of it, and a novel insight that inspired it, tomorrow.

The Dyson paper is “Gravitational Machines,” in A.G.W. Cameron, ed., Interstellar Communication, New York: Benjamin Press, 1963, Chapter 12. The Kipping paper is “The Halo Drive: Fuel-free Relativistic Propulsion of Large Masses via Recycled Boomerang Photons,” accepted at the Journal of the British Interplanetary Society (preprint). For those who want to get a head start, Dr. Kipping has also prepared a video on the Halo Drive that is available here.

Evidence of Passing Stars

The sheer range of possible outcomes in a planetary system is something we’re beginning to appreciate with each new exoplanet. Not long ago we looked at a possible collision between two large worlds in the young system Kepler 107, and the knowledge of how violent an evolving system can be informs our thinking about the formation of our own Moon and other Solar System phenomena. Now we’re learning to look for signs of another kind of early cataclysm, the migration of a planet caused by the close passage of one or more nearby stars.

None of this should be surprising when we think about the outer system today. We have a vast cloud made up of trillions of comets encircling a more disk-like belt of debris in the Kuiper Belt, and a host of small objects moving on orbits that challenge our theories of how they formed. Indeed, the orbits of ‘scattered disk’ objects influenced by Neptune and, even more intriguing, unusual trans-Neptunian objects like Sedna may implicate a yet undiscovered planet 9.

Some of what we are seeing may well be the result of a star passing near the Sun, and we know, for example, that the binary system known as Scholz’s star (WISE 0720-0846) passed through the Oort Cloud some 70,000 years ago. Close passes much earlier in the evolution of our protoplanetary disk could obviously have played a role in disrupting existing orbits.

An F-class star in the constellation Crux about 300 light years from Earth, HD 106906 may hold promising information about just such an event in another stellar system. The star is orbited by a directly imaged planet in a misaligned orbit that has been under investigation by UC-Berkeley’s Paul Kalas, working with Robert De Rosa (Kavli Institute for Particle Astrophysics and Cosmology). With a mass of about 11 Jupiters, the planet is tilted 21 degrees from the plane of the circumstellar disk. It’s also a whopping 738 AU out, 18 times farther from its star than Pluto from the Sun. That brings into doubt its in situ formation.

Image: Two binary stars, now far apart, skated by one another 2-3 million years ago, leaving a smoking gun: a disordered planetary system (left). Credit: Paul Kalas, UC Berkeley.

A closer look using the Gemini Planet Imager and the Hubble Space Telescope produced the finding that this star is orbited by a belt of comets in an equally lopsided orbit. The signs of disruption were clear, and Kalas and De Rosa trace out a tortured history for this unusual world. Through gravitational instability induced by too close a passage to the central binary star (a finding discussed by Grenoble Observatory researchers led by Laetitia Rodet in 2017), the planet would have gone interstellar but for the close passage of a pair of passing stars. Their gravitational influence left it in the remote outer regions of its system on an eccentric orbit.

Image: Simulation of a binary star flyby of a young planetary system. UC Berkeley and Stanford astronomers suspect that such a flyby altered the orbit of a planet (in blue) around the star HD 106906 so that it remained bound to the system in an oblique orbit similar to that of a proposed Planet Nine attached to our own solar system. Animation credit: Paul Kalas.

Kalas and De Rosa used data from the European Space Agency’s Gaia mission to firm up this hypothesis. The scientists collected information on 461 stars from Gaia DR2 astrometry, all of them in the stellar grouping known as the Scorpius–Centaurus (Sco–Cen) association. Incorporating ground-based radial velocity work as well, the team calculated the positions of these stars backward in time, revealing the binary stars — HIP 59716 and HIP 59721 — as candidates for the stars that altered the young system some 3 million years ago.
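The core of such a traceback can be sketched with straight-line motion: given one star's position and velocity relative to another today, find when and how closely the two passed. This is a simplification that assumes constant velocities and ignores the galactic potential and measurement errors, so it should not be read as the method of the paper; the function name and the sample numbers are made up for illustration.

```python
import math

KM_S_TO_PC_MYR = 1.0227   # 1 km/s is about 1.0227 parsecs per million years


def closest_approach(rel_pos_pc, rel_vel_km_s):
    """Time (Myr, negative = in the past) and distance (pc) of closest
    approach for straight-line relative motion."""
    vel = [v * KM_S_TO_PC_MYR for v in rel_vel_km_s]
    speed_sq = sum(v * v for v in vel)
    if speed_sq == 0.0:
        raise ValueError("relative velocity must be non-zero")
    # Minimize |r + v t|: the minimum falls at t = -(r . v) / (v . v).
    t_myr = -sum(r * v for r, v in zip(rel_pos_pc, vel)) / speed_sq
    dist_pc = math.sqrt(sum((r + v * t_myr) ** 2 for r, v in zip(rel_pos_pc, vel)))
    return t_myr, dist_pc


# Hypothetical present-day relative position (pc) and velocity (km/s).
t_close, d_close = closest_approach((3.0, 4.0, 0.0), (1.0, 0.0, 0.0))
print(f"closest approach: {d_close:.2f} pc, {abs(t_close):.2f} Myr ago")
```

Repeating a calculation like this for every star in a sample, with uncertainties folded in, is the kind of filtering that narrows hundreds of candidates down to a handful.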

“What we have done here is actually find the stars that could have given HD 106906 b the extra gravitational kick, a second kick so that it became long-lived, just like a hypothetical Planet Nine would be in our solar system,” Kalas said.

“Studying the HD 106906 planetary system is like going back in time to watch the Oort cloud of comets forming around our young sun. Our own giant planets gravitationally kicked countless comets outward to large distances. Many were ejected completely, becoming interstellar objects like ʻOumuamua, but others were influenced by passing stars. That second kick by a stellar flyby can detach a comet’s orbit from any further encounters with the planets, saving it from the prospect of ejection. This chain of events preserved the most primitive solar system material in a deep freeze far from the sun for billions of years.”

Image: Some 2 to 3 million years ago, in a young, newly formed planetary system, a planet was in danger of being kicked out of the system because of gravitational interactions with the central, binary star (left panel). A close pass by another binary star (not shown) within the same cluster gave the planet an extra kick that stabilized the orbit and rescued it from certain ejection (right panel). Credit: Paul Kalas.

The binary pair came into the system disk of HD 106906 on a trajectory that was within 5 degrees of the system disk, maximizing the extent of the encounter. From the paper:

HIP 59716 and HIP 59721 are the best candidates of the currently known members of Sco–Cen for a dynamically important close encounter with HD 106906 within the last 15 Myr. The flyby of these two stars fulfill many of the criteria for the stabilization scenario described in Rodet et al. (2017). Their trajectories are almost coplanar with the debris disk in its current orientation, their velocities relative to HD 106906 at closest approach are low (the change in velocity of the orbiting planet being inversely proportional to the relative velocity of the passing star at closest approach), and the distribution of closest approach distances for HIP 59716 is consistent with a dynamically significant encounter within 0.5 pc.

Continuing work on this system will investigate the relative radial velocities of the stars involved, which will mean future spectroscopic studies of the two candidate perturbers. The authors point out that the astrometry for each star will be improved with upcoming Gaia data releases. “We started with 461 suspects and discovered two that were at the scene of the crime,” says Kalas. “Their exact role will be revealed as we gather more evidence.”

The paper is De Rosa & Kalas, “A Near-coplanar Stellar Flyby of the Planet Host Star HD 106906,” accepted for publication at the Astronomical Journal (abstract).

Tuning Up HPF: The Habitable Zone Planet Finder

If you had a hot new instrument like the Habitable Zone Planet Finder (HPF) now mounted at the Hobby-Eberly Telescope (McDonald Observatory, University of Texas), how would you run it through its paces for fine-tuning and verification of its performance specs? The team behind HPF has chosen to deploy the instrument during its commissioning phase on a nearby target, Barnard’s Star, which for these purposes we can consider something of an M-dwarf standard.

Working at near-infrared wavelengths, HPF uses radial velocity methods to identify low-mass planets around nearby M-dwarf stars. The choice of wavelength is determined by the mission: M-dwarfs (also known as ‘red dwarfs’) are prey to substantial magnetic activity that shows up as spots and flares that disrupt instruments working in visible light, not to mention the fact that they are small to begin with and thus faint on the sky. In the near-infrared, close to but not in the visible spectrum, this category of star appears brighter and its surface activity more muted.

I mentioned Barnard’s Star as a kind of standard because it precisely suits astronomers’ needs for calibrating such an instrument. Here let me quote from a Penn State blog on HPF (Penn State built the instrument), which lays out the ideal for commissioning:

While the ultimate goal of any Doppler spectrograph is to find lots of exoplanets, boring is better during the commissioning phase. The only way to test the stability and precision of your end-to-end measurement system – from the telescope, through the fiber optics, and ultimately the optics and detector of the spectrograph – is to make repeated measurements of a star with little or no variability. That way, any variability seen in the measurements must be caused by the instrument, rather than the star itself. In other words, the less variability we measure in observations of our stable “standard star,” the better the instrument is performing.

Barnard’s Star fits the bill beautifully. For one thing, it’s close by, at about 6 light years, making it the second-closest system to the Sun. At 14 percent of the Sun’s mass, it’s also typical of the kind of stars HPF will survey. But the real value lies in its age, for Barnard’s Star is thought to be extremely old, possibly as old as the Milky Way itself. The star rotates slowly and shows little stellar activity of the kind that would mask the radial velocity signal in other M-dwarfs.

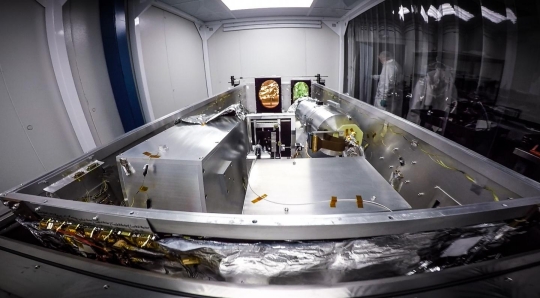

Image: The new Penn State-led Habitable Zone Planet Finder (HPF) provides the highest precision measurements to date of infrared signals from nearby stars. Pictured: The HPF instrument during installation in its clean-room enclosure in the Hobby-Eberly Telescope at McDonald Observatory. Credit: Guðmundur Stefánsson, Penn State.

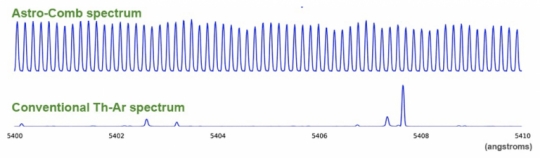

To increase precision at the HPF, Penn State has added a laser frequency comb (LFC) to the mix. Custom-built by the National Institute of Standards and Technology (NIST), the comb is a kind of ‘ruler’ that is used to calibrate the near-infrared signal from other stars. Work like this demands a calibration source because a spectrum from the observed star will ‘drift’ slightly, a movement that must be corrected when astronomers are looking for signals in the area of 1 meter per second to identify a small planet in the habitable zone of an M-dwarf. This is a kind of false Doppler effect likely due to physical issues in the instrument itself. Measuring the spectra of two sources at once — one of them being the stable frequency comb — allows the correction to be made, letting the true Doppler effect induced by planets around the star be observed.
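The correction described above can be sketched in a few lines of Python. This is only an illustration of the idea, not the HPF pipeline: the function names, the single-line treatment and the sample wavelengths are all assumptions made for the example, and a real reduction would fit thousands of comb lines across the detector.

```python
C_M_PER_S = 299_792_458.0  # speed of light in meters per second


def doppler_velocity(observed_nm, rest_nm):
    """Non-relativistic velocity (m/s) implied by a wavelength shift."""
    return C_M_PER_S * (observed_nm - rest_nm) / rest_nm


def drift_corrected_velocity(star_obs_nm, star_rest_nm, comb_obs_nm, comb_true_nm):
    """Remove instrumental drift, as measured on a comb line, from the
    apparent stellar velocity recorded in the same exposure."""
    apparent = doppler_velocity(star_obs_nm, star_rest_nm)
    drift = doppler_velocity(comb_obs_nm, comb_true_nm)
    return apparent - drift


# Hypothetical numbers: a 1 m/s stellar signal hidden under 5 m/s of drift.
true_signal, drift = 1.0, 5.0
star_rest, comb_true = 1000.0, 1000.5  # nm, illustrative near-infrared lines
star_obs = star_rest * (1 + (true_signal + drift) / C_M_PER_S)
comb_obs = comb_true * (1 + drift / C_M_PER_S)

print(drift_corrected_velocity(star_obs, star_rest, comb_obs, comb_true))
```

Because the comb line's true wavelength is known, any shift it shows can only come from the instrument, which is why observing both sources at once isolates the stellar signal.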

Atomic emission lamps have been used for such calibration in the past, but laser frequency combs produce spectra with finely calibrated emission lines that are stable and of uniform brightness. Adding a laser comb to HPF ensures maximum performance, says Suvrath Mahadevan (Penn State), who is principal investigator of the HPF project:

“The laser comb…separates individual wavelengths of light into separate lines, like the teeth of a comb, and is used like a ruler to calibrate the near-infrared energy from the stars. This combination of technologies has allowed us to demonstrate unprecedented near-infrared radial velocity precision with observations of Barnard’s Star, one of the closest stars to the Sun.”

Image: An example comparison of calibration spectra for astronomical spectrographs. Credit: HPF / Penn State.

Mahadevan adds that the technical challenges of reaching this level of precision are substantial. The instrument is highly sensitive to any infrared light emitted at room temperature, which means operations must take place at extremely cold temperatures. Thus far, the results speak for themselves, as discussed in a paper in Optica that describes the Barnard’s Star work (citation below).

The current data series on Barnard’s Star shows a stability of about 1.5 meters per second, which tops anything achieved by an infrared instrument. This is actually close to the best earlier measurements of the star, which have come from the renowned HARPS spectrograph working at visible wavelengths (378 nm – 691 nm); these come in at 1.2 meters per second. The HPF goal is 1 meter per second, not yet attained, though the team continues to refine its numbers while searching for possible instrumental issues that may play a role. From the blog:

We would be remiss if we did not emphasize that working all of the kinks out of an ultra-precise Doppler spectrograph is a years-long process, and we are far from done making improvements to the instrument and our analysis techniques. With that said, our early observations of Barnard’s star are extremely promising!

Can HPF confirm the Red Dots campaign’s super-Earth around Barnard’s Star? Not yet. Although the instrument has the precision to see Barnard’s Star b, a problem remains:

As it turns out, cosmic coincidence prevents us from having much information on Barnard b at this point. The orbit of the proposed planet is eccentric, which means the Doppler signal is more pronounced at some phases of its orbit than others. Through nothing but luck, our HPF-LFC observations completely missed the most dynamic section of the Barnard b phase curve. Thus, while our HPF measurements do not rule out the proposed planet, they cannot yet confirm it, either. This is just one of many examples of how exoplanet detection is a data-intensive process!

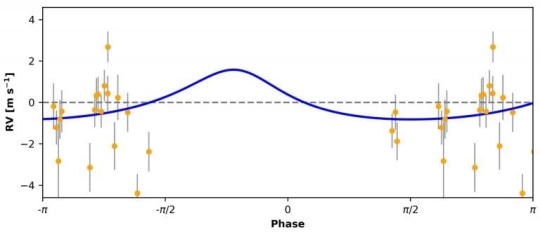

Image: The orbital model of Barnard b (blue), with HPF measurements (gold) folded to the orbital phase. Our measurements have not yet covered the maximum of the eccentric orbit. Credit: HPF team / Penn State.
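For readers who want to see what 'folding' measurements to orbital phase involves, here is a minimal Python sketch. The roughly 233-day period is the value reported for the candidate planet and is not given in this post, and the observation dates are invented, so treat both as assumptions; the function names are hypothetical as well.

```python
def fold_to_phase(times_days, period_days, t0_days=0.0):
    """Map observation times onto orbital phase in the range [0, 1)."""
    return [((t - t0_days) / period_days) % 1.0 for t in times_days]


def phase_coverage(phases, n_bins=10):
    """Return the set of phase bins holding at least one observation."""
    return {min(int(p * n_bins), n_bins - 1) for p in phases}


# Assumed period for the candidate planet (days) and made-up observation dates.
PERIOD_DAYS = 233.0
times = [0.0, 10.0, 20.0, 240.0, 250.0]

phases = fold_to_phase(times, PERIOD_DAYS)
covered = phase_coverage(phases)
missing = sorted(set(range(10)) - covered)
print("phase bins with data:", sorted(covered))
print("phase bins still unobserved:", missing)
```

Observations separated by a full orbit land at nearly the same phase, so even a long run of data can leave the steepest part of an eccentric orbit's velocity curve unsampled.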

The paper on applying laser frequency comb techniques to the HPF in studies of Barnard’s Star is Metcalf et al., “Stellar spectroscopy in the near-infrared with a laser frequency comb,” Optica Vol. 6, No. 2 (2019), pp. 233-239 (abstract).

Alternatives to DNA-Based Life

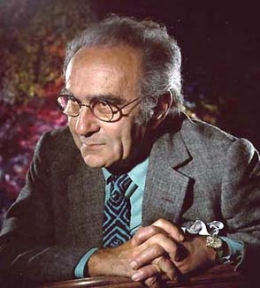

The question of whether or not we would recognize extraterrestrial life if we encountered it used to occupy mathematician and historian Jacob Bronowski (1908-1974), who commented on the matter in a memorable episode of his 1973 BBC documentary The Ascent of Man.

“Were the chemicals here on Earth at the time when life began unique to us? We used to think so. But the most recent evidence is different. Within the last few years there have been found in the interstellar spaces the spectral traces of molecules which we never thought could be formed out in those frigid regions: hydrogen cyanide, cyano acetylene, formaldehyde. These are molecules which we had not supposed to exist elsewhere than on Earth. It may turn out that life had more varied beginnings and has more varied forms. And it does not at all follow that the evolutionary path which life (if we discover it) took elsewhere must resemble ours. It does not even follow that we shall recognise it as life — or that it will recognise us.”

Bronowski wanted to show how human society had evolved as its conception of science changed; the title is a nod to Darwin’s The Descent of Man (1871). The sheer elegance of the production reflected the fact that the series was the work of David Attenborough, whose efforts had likewise led to the production of Kenneth Clark’s Civilisation (1969), among many other projects. If the interplay of art and science interests you, a look back at both these series will repay your time.

As to Bronowski, who died the year after The Ascent of Man was first aired, I can only imagine how fascinating he would have found new work out of the Foundation for Applied Molecular Evolution in Alachua, Florida. Led by Steven Benner, a team of scientists has addressed the question of alien life so unlike our own that we might not recognize it. Along the way, it has managed to craft a new informational system that, like DNA, can store and transmit genetic information. The difference is that Benner and team use eight, not four, key ingredients.

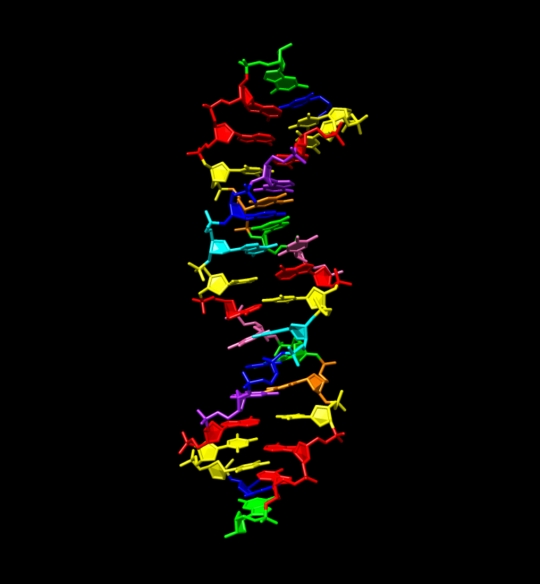

Image: This illustration shows the structure of a new synthetic DNA molecule, dubbed hachimoji DNA, which uses the four informational ingredients of regular DNA (green, red, blue, yellow) in addition to four new ones (cyan, pink, purple, orange). Credit: Indiana University School of Medicine.

DNA, a double-helix structure like the new “hachimoji DNA” (the Japanese term ‘hachi’ stands for ‘eight,’ while ‘moji’ means ‘letter’), is based upon four nucleotides that appear to be standard for life as we know it on Earth. ‘Hachimoji’ DNA likewise contains adenine, cytosine, guanine, and thymine, but puts four other nucleotides into play to store and transmit information.
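One way to see what doubling the alphabet buys is to count information per letter. The short Python sketch below does that arithmetic. The four extra letters are written here as P, Z, B and S; the post itself does not name them, so regard those labels as placeholders, and note that the calculation assumes every letter is equally likely.

```python
import math

STANDARD_DNA = "ACGT"
HACHIMOJI_DNA = "ACGTPZBS"  # four standard letters plus four assumed labels


def bits_per_letter(alphabet):
    """Maximum information per position, assuming equally likely letters."""
    return math.log2(len(set(alphabet)))


def possible_sequences(alphabet, length):
    """Number of distinct sequences of the given length."""
    return len(set(alphabet)) ** length


for name, alphabet in (("standard", STANDARD_DNA), ("hachimoji", HACHIMOJI_DNA)):
    print(name, bits_per_letter(alphabet), possible_sequences(alphabet, 10))
```

Four letters carry at most 2 bits per position and eight carry 3, so a hachimoji strand of a given length can in principle encode half again as much information as standard DNA.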

We begin to see alternatives to the ways life can structure itself, pointing to environments where a different kind of structure could survive whereas DNA-based life might not. That could be useful as we’re beginning to put spacecraft into highly interesting environments like Europa and Enceladus, but to get the most out of our designs, we need to have a sense of what we’re looking for. What kinds of molecules could store information in the worlds we’ll be exploring?

Thus Mary Voytek, senior scientist for astrobiology at NASA headquarters:

“Incorporating a broader understanding of what is possible in our instrument design and mission concepts will result in a more inclusive and, therefore, more effective search for life beyond Earth.”

Creating something unusual right here on Earth is one way to approach the problem, but of course there are others, and I am reminded of Paul Davies’ work and his own notions of what he calls ‘weird life.’ The Arizona State scientist, a prolific author in his own right, has examined the concept of a ‘second genesis,’ a fundamentally different kind of life that might already be here, having evolved on our planet and remaining on it in what we might call a ‘shadow biosphere.’

Finding alternate life on our own planet would relieve us of the burden of creating new mechanisms to make life work in our labs, so perhaps the thorough investigation of deep sea hydrothermal vents, salt lakes and high radiation environments may cut straight to the chase, if such life is there. In any case, finding a second genesis would make it far more likely that we’re going to find life on other worlds, and such life, as Davies reminds us, might be right under our noses. Like ‘hachimoji DNA,’ such life would challenge and stimulate all our assumptions.

The paper is Hoshika et al., “Hachimoji DNA and RNA: A genetic system with eight building blocks,” Science Vol. 363, Issue 6429 (22 Feb 2019), pp. 884-887 (abstract). Thanks to Byron Rogers for an early tip on this work.