Centauri Dreams

Imagining and Planning Interstellar Exploration

Science Fiction and the Symposium

Science fiction is much on my mind this morning, having just been to a second viewing of The Martian (this time in 3D, which I didn’t much care for), and having just read a new paper on wormholes that suggests a bizarre form of communication using them. More about both of these in a moment, but the third reason for the SF-slant is where I’ll start. The 100 Year Starship organization’s fourth annual symposium is now going on in Santa Clara (CA), among whose events is the awarding of the first Canopus Awards for Interstellar Writing.

A team of science fiction writers will anchor what the organization is calling Science Fiction Stories Night on Halloween Eve. Among the writers there, I’m familiar with the work of Pat Murphy, whose novel The Falling Woman (Tor, 1986) caught my eye soon after publication. I remember reading this tale of an archaeological dig in Central America and the ‘ghosts’ it evokes with fascination, although it’s been long enough back that I don’t recall the details. Joining Murphy will be short story writer Juliette Wade, novelist Brenda Cooper and publisher Jacob Weisman, whose Tachyon publishing is a well-known independent press.

As to the Canopus Awards, they’re to be an annual feature of the 100 Year Starship initiative aimed at highlighting “the importance of great story telling to propel the interstellar movement” (I’m quoting here from their press materials). In case you’re looking for some reading ideas, here are the Canopus finalists going into the event.

In the category of “Previously Published Long-Form Fiction” (40,000 words or more):

Other Systems by Elizabeth Guizzetti

The Creative Fire (Ruby’s Song) by Brenda Cooper

InterstellarNet: Enigma (Volume 3) by Edward M. Lerner

Aurora by Kim Stanley Robinson

Coming Home by Jack McDevitt

——-

In the category of “Previously Published Short-Form Fiction” (between 1,000 and 40,000 words):

“Race for Arcadia” by Alex Shvartsman

“Stars that Make Dark Heaven Light” by Sharon Joss

“Homesick” by Debbie Urbanski

“Twenty Lights to the Land of Snow” by Michael Bishop

“Planet Lion” by Catherynne M. Valente

“The Waves” by Ken Liu

“Dreamboat” by Robin Wyatt Dunn

——-

In the category of “Original Fiction” (1,000-5,000 words):

“Landfall” by Jon F. Zeigler

“Project Fermi” by Michael Turgeon

“Everett’s Awakening” by Ry Yelcho

“Groundwork” by G. M. Nair

“His Holiness John XXIV about Father Angelo Baymasecchi’s Diary” by Óscar Garrido González

“The Disease of Time” by Joseph Schmidt

——-

In the category of “Original Non-Fiction” (1,000-5,000 words):

“Why Interstellar Travel?” by Jeffrey Nosanov

“Finding Earth 2.0 from the Focus of the Solar Gravitational Lens” by Louis D. Friedman and Slava Turyshev

Of Martians and Wormholes

This will be the first 100YSS symposium I’ve missed and I’ll regret missing the chance to meet Pat Murphy and see Mae Jemison, Lou Friedman, Jill Tarter and many others who have made past events so enjoyable. I imagine Jack McDevitt will be there as well; he usually goes to these. His Canopus Award entry Coming Home (Ace, 2014) is another in the Alex Benedict series, featuring a future antiques dealer among whose many artifacts are ‘antiques’ that were crafted far in our own future. I mention Jack because I admire him, have read all the Alex Benedict novels, and thought Coming Home was one of his best.

As to The Martian, it’s a movie I loved for its attention to detail and the sheer bravura of its proceedings. For people who remember Apollo, the idea of a Mars exploration program of similar audacity is a wonderful morale-booster, a reminder that the spirit that took us to the Moon is still alive. It also makes for a jolting comparison between those days and today’s public apathy and budgetary dilemmas, all of which make Mars a target that always seems to be, like fusion, somewhere in the future. Movies like The Martian could do something to reach younger generations, and perhaps ignite interest in both government and private attempts to get to the Red Planet. But be aware that the regular version offers far more verisimilitude than the 3D, whose effects seem contrived and often distracting.

I don’t have time to dig deeply into Luke Butcher’s new paper on wormholes, but I do at least want to mention this effort as one that has caught the interest of wormhole specialist Matt Visser, and should intrigue science fiction authors for its plot possibilities. Working at the University of Cambridge, Butcher has been studying how to keep wormhole mouths open, the problem being that although people like Kip Thorne have speculated on using negative energy to do the trick, wormholes appear to be utterly unstable, closing before they can be used.

If wormholes do exist and we could find a way to use them, we might have a way to cross huge distances without contradicting Einstein’s limits on travel faster than light, using the wormhole’s ability to shortcut its way through spacetime itself. Butcher looks at negative energy in terms of Casimir’s parallel plates sitting close together in a vacuum. What if a wormhole’s own shape could generate such Casimir energies, thus holding it open long enough to use?

Image: Imagining a wormhole. Here we see a simulated traversable wormhole that connects the square in front of the physical institutes of University of Tübingen with the sand dunes near Boulogne sur Mer in the north of France. The image is calculated with 4D raytracing in a Morris-Thorne wormhole metric, but the gravitational effects on the wavelength of light have not been simulated. Credit: Wikimedia Commons.

Butcher can’t find ways to keep wormholes open for long, but he does offer the theoretical possibility that we might be able to keep one open long enough to get a beam of light into it. Get the picture? Communications moving through the wormhole, with the same effect as if they were moving faster than light, with all the interesting causal issues that raises. From the paper:

…the negative Casimir energy does allow the wormhole to collapse extremely slowly, its lifetime growing without bound as the throat-length is increased. We find that the throat closes slowly enough that its central region can be safely traversed by a pulse of light.

So there you are, science fiction writers, another plot possibility involving communications between starships or, for that matter, between planets in, say, a galaxy-spanning civilization of the far future. Make of it what you will. The delight of science fiction is that it can take purely theoretical constructs like this one and run down the endless chain of possibilities. In our era of deep space probes, astrobiology and exoplanet research, science fiction has truly moved out of the literary ghetto in which it once saw itself enclosed. Canopus Award winners take note: You’re starting to go mainstream.

The paper is Butcher, “Casimir Energy of a Long Wormhole Throat,” submitted to Physical Review D (preprint).

Where We Might Sample Europa’s Ocean

No one interested in the prospects for life on other worlds should take his or her eyes off Europa for long. We know that its icy surface is geologically active, and that beneath it is a global ocean. While water ice is prominent on the surface, the terrain is also marked by materials produced by impacts or by irradiation. Keep in mind the presence of Io, which ejects material like ionized sulfur and oxygen that, having been swept up in Jupiter’s magnetosphere, eventually reaches Europa. Irradiation can break molecular bonds to produce sulfur dioxide, oxygen and sulfuric acid. And we’re learning that local materials can be revealed by geology.

A case in point is a new paper that looks at infrared data obtained with the adaptive optics system at the Keck Observatory. The work of Mike Brown, Kevin Hand and Patrick Fischer (all at Caltech, where Fischer is a graduate student), suggests that the best place to look for compounds indicative of life would be in the jumbled areas of Europa called chaos terrain. Here we may have materials brought up from the ocean below.

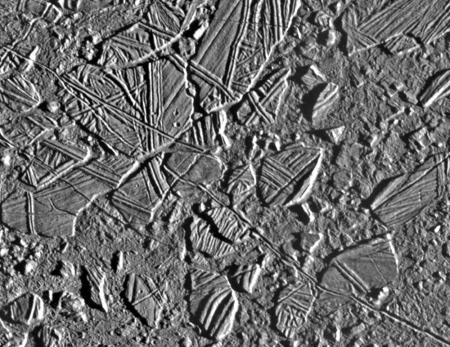

“We have known for a long time that Europa’s fresh icy surface, which is covered with cracks and ridges and transform faults, is the external signature of a vast internal salty ocean,” says Brown, and our imagery of these areas taken by Galileo shows us a shattered landscape, with great ‘rafts’ of ice that have broken, moved and later refrozen. The clear implication is that water from the internal ocean may have risen to the surface as these chaos areas shifted and cracked. And while a direct sampling of Europa’s ocean would be optimal, our best bet for studying its composition for now may well be a lander that can sample frozen deposits.

Image: On Europa, “chaos terrains” are regions where the icy surface appears to have been broken apart, moved around, and frozen back together. Observations by Caltech graduate student Patrick Fischer and colleagues show that these regions have a composition distinct from the rest of the surface which seems to reflect the composition of the vast ocean under the crust of Europa. Credit: NASA/JPL-Caltech.

Brown and team, whose work has been accepted at The Astrophysical Journal, examined data taken in 2011 using the OSIRIS spectrograph at Keck, which measures spectra at infrared wavelengths. Keck is also able to bring adaptive optics into play to sharply reduce distortions produced by Earth’s atmosphere. Spectra were produced for 1600 different locations on the surface of Europa, then sorted into major groupings using algorithms developed by Fischer. The results were mapped onto surface data produced by the Galileo mission.

The result: Three categories of spectra showing distinct compositions on Europa’s surface. From the paper:

The first component dominates the trailing hemisphere bullseye and the second component dominates the leading hemisphere upper latitudes, consistent with regions previously found to be dominated by irradiation products and water ice, respectively. The third component is geographically associated with large geologic units of chaos, suggesting an endogenous identity. This is the first time that the endogenous hydrate species has been mapped at a global scale.

We knew about Europa’s abundant water ice, and we also expected to find chemicals formed from irradiation. The third grouping, though, being particularly associated with chaos terrain, is intriguing. Here the chemical indicators did not identify any of the salt materials thought to be on Europa. The paper continues:

The spectrum of component 3 is not consistent with linear mixtures of the current spectral library. In particular, the hydrated sulfate minerals previously favored possess distinct spectral features that are not present in the spectrum of component 3, and thus cannot be abundant at large scale. One alternative composition is chloride evaporite deposits, possibly indicating an ocean solute composition dominated by the Na+ and Cl− ions.

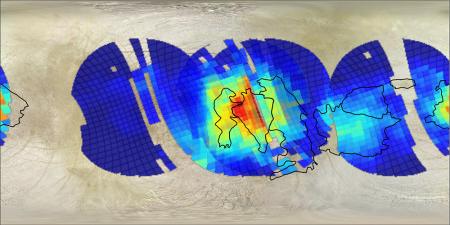

Image: Mapping the composition of the surface of Europa has shown that a few large areas have large concentrations of what are thought to be salts. These salts are systematically located in the recently resurfaced “chaos regions,” which are outlined in black. One such region, named Western Powys Regio, has the highest concentration of these materials presumably derived from the internal ocean, and would make an ideal landing location for a Europa surface probe.

Credit: M.E. Brown and P.D. Fischer/Caltech, K.P. Hand/JPL.

The association with chaos areas is significant. Because these spectra map to areas with recent geological activity, they are likely to be native to Europa, and conceivably material related to the internal ocean. In this Caltech news release, Brown speculates that a large amount of ocean water flowing out onto the surface and then evaporating could leave behind salts. As in the Earth’s desert areas, the composition of the salt can tell us about the materials that were dissolved in the water before it evaporated. Brown adds:

“If you had to suggest an area on Europa where ocean water had recently melted through and dumped its chemicals on the surface, this would be it. If we can someday sample and catalog the chemistry found there, we may learn something of what’s happening on the ocean floor of Europa and maybe even find organic compounds, and that would be very exciting.”

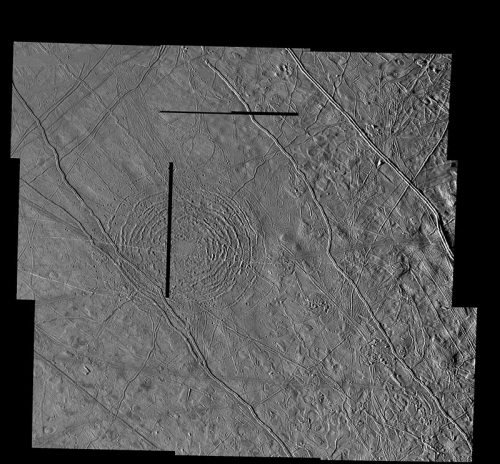

So we’re learning where a Europa lander should be able to do the most productive science in relation to astrobiology and the ocean beneath the ice. Keep your eye on the western portion of the area known as Powys Regio, where the Caltech team found the strongest concentrations of local salts. Powys Regio is just south of what appears to be an old impact feature called Tyre. The image below, with the concentric rings of Tyre clearly visible, reminds us that an ocean under a mantle of ice is vulnerable to surface activity and external strikes that would break through the ice and deposit ocean materials within reach of the right kind of lander.

Image: The feature called Tyre, showing signs of an ancient Europan impact. Credit: NASA/JPL-Caltech.

The paper is Fischer, Brown & Hand, “Spatially Resolved Spectroscopy of Europa: The Distinct Spectrum of Large-scale Chaos,” accepted at The Astrophysical Journal (preprint).

Catching Up with the Outer System

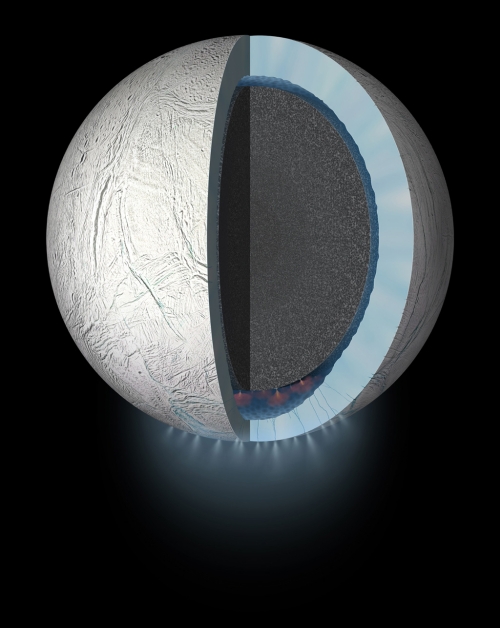

We now pivot from Dysonian SETI to the ongoing exploration of our own system, where lately there have been few dull moments. Today the Cassini Saturn orbiter will make its deepest dive ever into the plume of ice, water vapor and organic molecules streaming out of four major fractures (the ‘Tiger Stripes’) at Enceladus’ south polar region. The plume is thought to come from the ocean beneath the moon’s surface ice, and while Cassini is not able to detect life, it is able to study molecular hydrogen levels and more massive molecules including organics. Understanding the hydrothermal activity taking place on Enceladus helps us explore the possible habitability of the ocean for simple forms of life.

Image: This artist’s rendering shows a cutaway view into the interior of Saturn’s moon Enceladus. NASA’s Cassini spacecraft discovered the moon has a global ocean and likely hydrothermal activity. A plume of ice particles, water vapor and organic molecules sprays from fractures in the moon’s south polar region. Credit: NASA/JPL-Caltech.

Cassini’s cosmic dust analyzer (CDA) instrument can detect up to 10,000 particles per second, telling us how much material the plume is spraying into space from the internal ocean. Another key measurement in the flyby will be the detection of molecular hydrogen by the spacecraft’s INMS (ion and neutral mass spectrometer) instrument, says Hunter Waite (SwRI):

“Confirmation of molecular hydrogen in the plume would be an independent line of evidence that hydrothermal activity is taking place in the Enceladus ocean, on the seafloor. The amount of hydrogen would reveal how much hydrothermal activity is going on.”

We’re also going to get a better picture of the plume’s structure — individual jets or ‘curtain’ eruptions — that may clarify how material is making its way to the surface from the ocean below. Cassini will move through the plume at an altitude of 48 kilometers, which is about the distance between Baltimore and Washington, DC. “We go screaming by all this at speeds in excess of 19,000 miles per hour,” says mission designer Brent Buffington. “We’re flying the deepest we’ve ever been through this plume, and these instruments will be sensing the gases and looking at the particles that make it up.” Cassini has just one Enceladus flyby left, on December 19.

NASA’s online toolkit for the final Enceladus flybys can be found here.

Meanwhile, in the Kuiper Belt…

Although its mission at Pluto/Charon has been accomplished, there are a lot of reasons to be excited about New Horizons beyond the data streaming back from the spacecraft. On October 25th, mission controllers directed a targeting maneuver using the craft’s hydrazine thrusters, one that lasted about 25 minutes and was the largest propulsive maneuver ever conducted by New Horizons. The burn, the second in a series of four, adjusts the spacecraft’s trajectory toward Kuiper Belt object 2014 MU69.

If all goes well (and assuming NASA signs off on the extended mission), the encounter will occur on January 1, 2019. The science team intends to bring New Horizons closer to MU69 than the 12,500 kilometers that separated it from Pluto on closest approach. Two more targeting maneuvers are planned, one for October 28, the other for November 4. Every indication from data received at the Johns Hopkins University Applied Physics Laboratory is that the Sunday burn was successful. We now have a new destination a billion miles further out than Pluto.

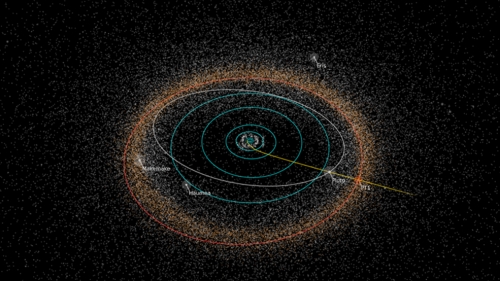

Image: Path of NASA’s New Horizons spacecraft toward its next potential target, the Kuiper Belt object 2014 MU69. Although NASA has selected 2014 MU69 as the target, as part of its normal review process the agency will conduct a detailed assessment before officially approving the mission extension to conduct additional science. Credit: NASA/Johns Hopkins University Applied Physics Laboratory/Southwest Research Institute/Alex Parker.

Remember that this is a spacecraft that was designed for operations in the Kuiper Belt, one that carried the extra hydrazine necessary for the extended mission, and one whose communications system was designed to operate at these distances. The science instruments aboard New Horizons were also designed to operate in much lower light levels than the spacecraft experienced at Pluto/Charon, and we should have enough power for many years.

Principal investigator Alan Stern commented on the choice of this particular Kuiper Belt object (also being called PT1 for ‘Potential Target 1’) at the end of the summer, noting its advantages:

“2014 MU69 is a great choice because it is just the kind of ancient KBO, formed where it orbits now, that the Decadal Survey desired us to fly by. Moreover, this KBO costs less fuel to reach [than other candidate targets], leaving more fuel for the flyby, for ancillary science, and greater fuel reserves to protect against the unforeseen.”

What we know about 2014 MU69 is that it is about 45 kilometers across, about ten times larger than the average comet, and 1000 times more massive. Even so, it’s only between 0.5 and 1 percent of the size of Pluto, and 1/10,000th as massive. Researchers consider objects like these the building blocks of dwarf worlds like Pluto. While we’ve visited asteroids before, Kuiper Belt objects like this one are thought to be well preserved samples of the early Solar System, having never experienced the solar heating that asteroids in the inner system encounter.

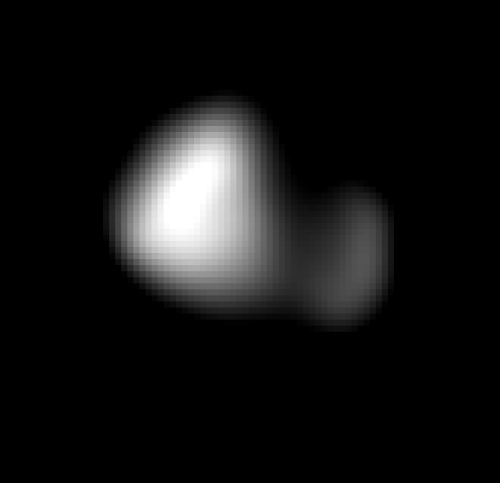

We also have a newly received image of Pluto’s tiny moon Kerberos, which turns out to be smaller and much more reflective than expected, with a double-lobed shape. The larger lobe is about 8 kilometers across, the smaller approximately 5 kilometers. Mission scientists speculate that the moon was formed from the merger of two smaller objects. Its reflectivity indicates that it is coated with water ice. Earlier estimates based on the gravitational influence of Kerberos on the other nearby moons were evidently incorrect — scientists had expected to find it darker and larger than it turns out to be, another intriguing surprise from the Pluto system.

Image: This image of Kerberos was created by combining four individual Long Range Reconnaissance Imager (LORRI) pictures taken on July 14, 2015, approximately seven hours before New Horizons’ closest approach to Pluto, at a range of 396,100 km from Kerberos. The image was deconvolved to recover the highest possible spatial resolution and oversampled by a factor of eight to reduce pixelation effects. Kerberos appears to have a double-lobed shape, approximately 12 kilometers across in its long dimension and 4.5 kilometers in its shortest dimension. Credit: NASA/Johns Hopkins University Applied Physics Laboratory/Southwest Research Institute.

Why SETI Keeps Looking

How do you feel about a universe that shows no signs of intelligent life? Let’s suppose that we pursue various forms of SETI for the next century or two and at the end of that time, find no evidence whatsoever for extraterrestrial civilizations. Would scientists of that era be disappointed or simply perplexed? Would they, for that matter, keep on looking?

I suspect they would keep looking, not because finding extraterrestrial civilizations would demonstrate that we’re not alone, but because in matters of great scientific interest, it’s the truth we’re after, not just the results we want to see. In my view, learning that there was no other civilization within our galaxy — at least, not one we can detect — would be a profoundly interesting result. It might imply that life itself is rare, or even more to the point, that any civilizations that do arise are short-lived. This is that tricky term in the Drake equation that refers to the lifespan of a technological civilization, and if that lifetime is short, then our own position is tenuous.

The anomalous light curve in the Kepler data from KIC 8462852 focuses this issue because on the one hand I’m hearing from critics that SETI researchers simply want to see extraterrestrials in their data, and thus misinterpret natural phenomena. An equally vocal group asks why people like me are so keen on looking for natural explanations when the laws of physics do not rule out other civilizations. All I can say is that we need to be dispassionate in the SETI search, looking for interesting signals (or objects) while learning how to distinguish their probable causes.

In other words, I don’t have a horse in this race. The universe is what it is, and the great quest is to learn as much as we can about it. I am not going to lose sleep if we discover a natural cause for the KIC 8462852 light curves because whatever is going on there is astrophysically interesting, and will help us as we deepen our transit studies of other stars. The recent paper from Wright et al. discusses how transiting megastructures could be distinguished from exoplanets, and goes on to describe the natural sources that could produce such signatures. The ongoing discussion is fascinating in its own right and sharpens our observational skills.

Image: The Kepler field of view, containing portions of the constellations Cygnus, Lyra, and Draco. Credit: NASA.

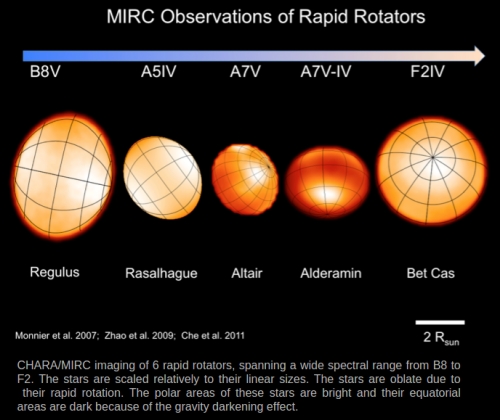

Yesterday’s post looked at ‘gravity darkening’ as a possible explanation for what we see at KIC 8462852, with reference to conversations we’ve been having in the comments section here. Gravity darkening appears in the Wright paper, though not with reference to KIC 8462852, and is also under study in other systems, particularly the one called PTFO 8-8695. But its prospects seem to be dimming when it comes to KIC 8462852, as Wright explained in a tweet.

@centauri_dreams @steinly0 This "solution" doesn't work: planets don't block 22% of stellar disk. Star spins fast, but not THAT fast.

— Jason Wright (@Astro_Wright) October 26, 2015

He went on to elaborate in yesterday’s comments section:

Gravity darkening might be a small part of the puzzle, but it does not explain the features of this star. Tabby’s star does not rotate fast enough to experience significant gravity darkening. That post also suggests that planets could be responsible, but planets are not large enough to produce the observed events, and there are too many events to explain with planets or stars.
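Wright’s point about the 22 percent figure can be checked with the standard transit-depth approximation, in which the fractional dimming is roughly the square of the ratio of the transiting body’s radius to the star’s radius. The sketch below is only an illustration under simplifying assumptions that are mine, not the paper’s: an opaque circular disk, no limb darkening or gravity darkening, and a Sun-sized star used as a stand-in because the radius of KIC 8462852 is not quoted here. The function names are hypothetical.

```python
import math

# Nominal radii in kilometers (assumed reference values for illustration).
R_SUN_KM = 695_700.0
R_JUPITER_KM = 69_911.0


def transit_depth(r_planet_km: float, r_star_km: float) -> float:
    """Fractional dimming when an opaque disk crosses a uniformly bright star."""
    return (r_planet_km / r_star_km) ** 2


def radius_for_depth(depth: float, r_star_km: float) -> float:
    """Radius of the opaque disk needed to produce a given fractional dimming."""
    return math.sqrt(depth) * r_star_km


# A Jupiter-sized planet crossing a Sun-sized star (stand-in star, see lead-in).
jupiter_depth = transit_depth(R_JUPITER_KM, R_SUN_KM)

# How large a disk a 22% dip would need, in Jupiter radii, for the same star.
needed_radius_rj = radius_for_depth(0.22, R_SUN_KM) / R_JUPITER_KM

print(f"Jupiter across a Sun-sized star: {jupiter_depth:.1%} dip")
print(f"Disk needed for a 22% dip: {needed_radius_rj:.1f} Jupiter radii")
```

Under these assumptions a Jupiter-sized planet dims a Sun-sized star by about 1 percent, while a 22 percent dip needs an opaque disk between four and five times Jupiter’s radius. Because the required radius scales with the star’s radius, a star larger than the Sun would need a larger disk still.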

The Wright paper lists nine natural causes of anomalous light curves in addition to gravity darkening, including planet/planet interactions, ring systems and debris fields, and starspots. Exomoons, the subject of continuing work by David Kipping and colleagues at the Hunt for Exomoons with Kepler project, also can play a role, with a sufficiently large moon producing its own transit events and leaving a signature in transit timing and duration variations.

We have examples of objects whose anomalies have been investigated and found to be natural, including the interesting CoRoT-29b, in which gravity darkening is likewise rejected. From the paper:

CoRoT-29b shows an unexplained, persistent, asymmetric transit — the amount of oblateness and gravity darkening required to explain the asymmetry appears to be inconsistent with the measured rotational velocity of the star (Cabrera et al. 2015). Cabrera et al. explore each of the natural confounders in Table 2.3 for such an anomaly, and find that none of them is satisfactory. Except for the radial velocity measurements of this system, which are consistent with CoRoT-29b having planetary mass, CoRoT-29b would be a fascinating candidate for an alien megastructure.

We can also assign a natural explanation to KIC 1255b, an interesting find because its transit depths vary widely even between consecutive transits, and its transit light curves show an asymmetry between ingress and egress. What we are apparently looking at here is a small planet that is disintegrating, creating a thick, comet-like coma and tail that is producing the asymmetries in the transit light curves. This is an intriguing situation, as the Wright paper notes, with the planet likely pared of 70 percent of its mass and reduced to an iron-nickel core.

We may well find a natural explanation that takes care of KIC 8462852 as well, and the large scope of the challenge will ensure that the object remains under intense scrutiny. Both CoRoT-29b and KIC 1255b are useful case studies because they show us how unusual transit signatures can be identified and explained. We also have to keep in mind that such signatures may not be immediately found because Kepler data assessment techniques are not tuned for them, as the paper notes:

…in some cases of highly non-standard transit signatures, it may be that only a model-free approach — such as a human-based, star-by-star light curve examination — would turn them up. Indeed, KIC 8462852 was discovered in exactly this manner. KIC 8462852 shows transit signatures consistent with a swarm of artificial objects, and we strongly encourage intense SETI efforts on it, in addition to conventional astronomical efforts to find more such objects (since, if it is natural, it is both very interesting in its own right and unlikely to be unique).

Thus we leave the KIC 8462852 story for now, although I would encourage anyone interested in Dysonian SETI to read through the Wright paper to get a sense of the range of transiting signatures that draw SETI interest. The paper is Wright et al., “The Ĝ Search for Extraterrestrial Civilizations with Large Energy Supplies. IV. The Signatures and Information Content of Transiting Megastructures,” submitted to The Astrophysical Journal (preprint).

KIC 8462852: Enter ‘Gravity Darkening’

Back from my break, I have to explain to those who asked about what exotic destination I was headed for that I didn’t actually go anywhere (the South Pacific will have to wait). The break was from writing Centauri Dreams posts in order to concentrate on some other pressing matters that I had neglected for too long. Happily, I managed to get most of these taken care of, all the while keeping an eye on interstellar news and especially the interesting case of KIC 8462852 (for those just joining us, start with KIC 8462852: Cometary Origin of an Unusual Light Curve? and track the story through the next two entries).

Whatever the explanation for what can only be described as a bizarre light curve from this star, KIC 8462852 is a significant object. While Dysonian SETI has been percolating along, ably studied by projects like Glimpsing Heat from Alien Technologies, the public has continued to see SETI largely in terms of radio and deliberate attempts to communicate. Tabetha Boyajian and team, who produced the first paper on KIC 8462852, have put an end to that, ensuring wide coverage of the object as well as the notion that detection of an extraterrestrial intelligence might occur through observing large artificial structures in our astronomical data.

Meanwhile, the delightful ‘Tabby’s Star’ is beginning to emerge as a replacement for the star’s unwieldy designation. Coverage of the story has been all over the map. The term ‘alien megastructures’ has appeared in various headlines, while others have focused on the natural explanations that could mimic the ETI effect. The tension between natural and artificial is going to persist, and it’s the subject of Jason Wright and colleagues in their recent paper (submitted to The Astrophysical Journal), which asks what kinds of signatures an alien civilization’s activities could create, and what natural phenomena could explain such signatures.

I think the Wright paper hits exactly the right note in its conclusion:

Invoking alien engineering to explain an anomalous astronomical phenomenon can be a perilous approach to science because it can lead to an “aliens of the gaps” fallacy (as discussed in §2.3 of Wright et al. 2014b) and unfalsifiable hypotheses. The conservative approach is therefore to initially ascribe all anomalies to natural sources, and only entertain the ETI hypothesis in cases where even the most contrived natural explanations fail to adequately explain the data. Nonetheless, invoking the ETI hypothesis can be a perfectly reasonable way to enrich the search space of communication SETI efforts with extraordinary targets, even while natural explanations are pursued.

Just so, and the lengthy discussions in the comments section here on the previous three articles on KIC 8462852 are much in that spirit. We do have the cometary hypothesis suggested in the original Boyajian paper as what had been considered the leading candidate, and Michael Million, a regular in these pages, has pointed to a paper from Jason Barnes (University of Idaho) and colleagues that looks at the phenomenon of gravity darkening and spin-orbit misalignment.

In this scenario, we have a star that is spinning fast enough to become oblate; i.e., it has a larger radius at the equator than it does at the poles, producing higher temperatures and ‘brightening’ at the poles, while the equator is consequently darkened. The transits of a planet in this scenario can produce asymmetrical light curves, a process the Wright paper notes, and one that Million began to discuss as early as the 17th in the comments here. That discussion was picked up in Did the Kepler space telescope discover alien megastructures? The mystery of Tabby’s star solved, which appeared in a blog called Desdemona Despair. The author sees the case as clear-cut: “There are four discrete events in the Kepler data for KIC 8462852, and planetary transits across a gravity-darkened disk are plausible causes for all of them.”

Image: Effects of rapid rotation on the shape of stars. Credit: Ming Zhao (Penn State).
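The oblate-star picture can be made a little more concrete with a rough calculation. In the classical von Zeipel treatment, the local effective temperature scales as the local effective gravity to the one-quarter power, so the equator, where centrifugal acceleration offsets some of the gravity, comes out cooler than the poles. The sketch below is a simplified illustration, not the method of any of the papers discussed: it treats the star’s gravity as that of a point mass, and the mass, radii and rotation period are hypothetical values chosen only to show the trend.

```python
import math

G = 6.674e-11      # gravitational constant, m^3 kg^-1 s^-2
M_SUN = 1.989e30   # solar mass, kg
R_SUN = 6.957e8    # solar radius, m


def surface_gravity(mass_kg, r_pole_m, r_eq_m, period_s):
    """Return (g_pole, g_eq) for a rotating star, point-mass approximation.

    At the pole there is no centrifugal term; at the equator the centrifugal
    acceleration omega^2 * R_eq is subtracted from the gravitational pull.
    """
    omega = 2.0 * math.pi / period_s
    g_pole = G * mass_kg / r_pole_m**2
    g_eq = G * mass_kg / r_eq_m**2 - omega**2 * r_eq_m
    return g_pole, g_eq


def equator_to_pole_temperature_ratio(g_pole, g_eq, beta=0.25):
    """von Zeipel-style scaling T_eff ~ g^beta (beta = 0.25 is the classical value)."""
    return (g_eq / g_pole) ** beta


# Hypothetical fast rotator: 1.5 solar masses, mildly oblate, 12-hour spin.
g_pole, g_eq = surface_gravity(
    mass_kg=1.5 * M_SUN,
    r_pole_m=1.5 * R_SUN,
    r_eq_m=1.6 * R_SUN,
    period_s=12 * 3600,
)
ratio = equator_to_pole_temperature_ratio(g_pole, g_eq)
print(f"Equator/pole temperature ratio: {ratio:.2f}")
```

With these made-up inputs the equator comes out several percent cooler than the poles, which is the darkening that a planet on a misaligned orbit would cross unevenly. A slowly rotating, nearly spherical star gives a ratio very close to one.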

Meanwhile, Centauri Dreams reader Jim Galasyn uncovered a paper by a team led by Shoya Kamiaka (University of Tokyo) studying gravity darkening of the light curves for the transiting system PTFO 8-8695, also studied by Barnes, which involves a ‘hot Jupiter’ orbiting a rapidly rotating pre-main-sequence star. Gravity darkening appears to be very much in play, and we can, as the Desdemona Despair blog does, cite the Barnes paper: “An oblique transit path across a gravity-darkened, oblate star leads to the long transit duration and asymmetric lightcurve evident in the photometric data [for the PTFO 8-8695 system].”

In Wright et al.’s “Signatures and Information Content of Transiting Megastructures” paper, which looks in depth at the natural sources of unusual light curves, these possibilities are discussed in relation to non-spherical stars, and this is worth quoting:

The dominant effect of a non-disk-like stellar aspect on transit light curves is to potentially generate an anomalous transit duration; the effects on ingress and egress shape are small. Gravity darkening, which makes the lower-gravity portions of the stellar disk dimmer than the other parts, can have a large effect on the transit curves of planets and stars with large spin-orbit misalignment, potentially producing transit light curves with large asymmetries and other in-transit features (first seen in the KOI-13 system, Barnes 2009; Barnes et al. 2011).

Another effect of a non-spherical star is to induce precession in an eccentric orbit. Wright also takes note of PTFO 8-8695, “which exhibits asymmetric transits of variable depth, variable duration, and variable in-transit shape.” Here astronomers were helped by the star’s age, which was soundly established by its association with the Orion star forming region. Wright adds that effects of this magnitude would not be expected for older, more slowly rotating objects.

The work on KIC 8462852 continues, and I also need to mention that the Allen Telescope Array focused in on this fascinating target beginning on October 16, even as the American Association of Variable Star Observers (AAVSO) published an Alert Notice requesting that astronomers begin observing the system. For more on this, see SETI Institute Undertakes Search for Alien Signal from Kepler Star KIC 8462852. Universe Today quotes the SETI Institute’s Gerald Harp as saying: “This is a special target. We’re using the scope to look at transmissions that would produce excess power over a range of wavelengths.” I’ll obviously be reporting on the paper that comes out of the ATA search.

The papers discussed today are Wright et al., “The Ĝ Search for Extraterrestrial Civilizations with Large Energy Supplies. IV. The Signatures and Information Content of Transiting Megastructures,” submitted to The Astrophysical Journal (preprint); Barnes et al., “Measurement of Spin-Orbit Misalignment and Nodal Precession for the Planet around Pre-Main-Sequence Star PTFO 8-8695 From Gravity Darkening,” accepted at The Astrophysical Journal (preprint) and Kamiaka et al., “Revisiting a gravity-darkened and precessing planetary system PTFO 8-8695: spin-orbit non-synchronous case,” accepted at Publications of the Astronomical Society of Japan (preprint).

No Posts Until 26 October

As mentioned in Friday’s post, I’m taking a week off. The next regular Centauri Dreams post will be on Monday the 26th. In the interim, I’ll check in daily for comment moderation. When I get back, we’ll be starting off with a closer look at Jason Wright’s recent paper out of the Glimpsing Heat from Alien Technologies project at Penn State, with a focus on interesting transiting lightcurve signatures and how to distinguish SETI candidates from natural phenomena.