Centauri Dreams

Imagining and Planning Interstellar Exploration

Near-Term Missions: What the Future Holds

Discussing the state of space mission planning recently with Centauri Dreams contributor Ashley Baldwin, I mentioned my concerns about follow-up missions to the outer planets once New Horizons has done its job at Pluto/Charon. No one is as plugged into mission concepts as Dr. Baldwin, and as he discussed what’s coming up both in exoplanet research as well as future planetary missions, I realized we needed to pull it all together in a single place. What follows is Ashley’s look at what’s coming down the road in exoplanetary research as well as planetary investigation in our own Solar System, an overview I hope you’ll find as useful as I have. Dr. Baldwin is a consultant psychiatrist at the 5 Boroughs Partnership NHS Trust (Warrington, UK) and a former lecturer at Liverpool and Manchester Universities. He is also, as his latest essay makes clear, a man with a passion for what we can do in space.

by Ashley Baldwin

We’ve come a long way since the discovery of the first “conventional” exoplanets in 1995 (of course, planets orbiting pulsars had been discovered several years earlier). Since then ground-based RV and transit surveys have discovered several hundred planets, supplemented by a thousand confirmed finds plus many more “candidates” from the miracle that is Kepler, both the original mission and K2, ably aided and abetted by CoRoT and the remodeled Hubble and Spitzer. Between these, several dozen larger examples have been “characterised” by a combination of RV, transit and transit spectroscopy. We are at a crossroads and stand at the edge of a golden age, this despite the austere times in which we live. But as they say, necessity is the mother of invention, and the innovation arising from lack of funds has led to a versatility in hardware use unimaginable twenty years ago (along with 1800 plus exoplanets!).

So what next? Lots of things. Before talking about obvious things like space telescopes and spectroscopy I feel I must make space for asteroseismology. A new science and still little known, but without it hardly any of the recent exoplanet advances would have been possible. It is the process by which kinetic energy or pulsations inside stars is converted into vibrations. In effect sound waves. Originating in the Sun with helioseismology, asteroseismology is basically the same process by which seismic waves of earthquakes are used to inform us about the details of the Earth’s interior.

Vibrations from different parts of stellar interiors expand outwards to the star’s surface where their nature and origin can be determined with increasing accuracy. There are three types of these vibrations:

- Gravity or ‘g’ waves originate from stellar cores and have been implicated in the movement of stellar material into other areas as well as contributing to the uniform rotation of the core, thus linking it with the outer convective zone of solar-mass stars. These waves only reach the surface in special circumstances.

- Pressure or ‘p’ waves, arise from the outer convective zone and are the main source of information on this crucial area of a star’s interior as they reach the surface.

- Finally, “f” waves are surface “ripples”. These vibrations help astronomers accurately calculate the mass, age, diameter and temperature of a star, with age in particular being crucially determined to an accuracy of 10%. Why so important? Well, apart from determining the nature of stars themselves, they also underpin the description of orbiting exoplanets.

Understand the star and you understand the planet. The more stars that are subject to this analysis, the more precise it becomes. This is a little known but crucial element of Kepler (and CoRoT) and will also be central to PLATO (Planetary Transits and Oscillations of stars) in the 2020s. TESS (Transiting Exoplanet Survey Satellite), sadly, is too small and its pointing times per star too brief to add a lot to the process despite its huge capabilities for such a low budget. Kepler has a dedicated committee that oversees the correlation of all asteroseismological data as will PLATO, and the huge amounts of stellar information collected will provide precision detail on exoplanets by the end of the next decade (and indeed before).

Near-Term Developments

Things start hotting up from next year with “first light” on the revolutionary RV spectrograph ESPRESSO (Echelle SPectrograph for Rocky Exoplanets and Stable Spectroscopic Observations) at the VLT in Chile. This device is an order of magnitude more powerful than previous devices like HARPS, and in combination with the large telescopes of the VLT can discover planets the same size as Earth in the habitable zones of Sun-like stars, the first instrument of its kind able to do so. Apart from positioning such planets for potential direct imaging and spectroscopic characterisation, it will also provide mass estimates with varying degrees of accuracy.

Meanwhile, nearby ALMA (Atacama Large Millimeter/submillimeter Array) will provide unprecedented images and detail on the all-important protoplanetary disks from which planets form, and which inform the nature of our own system’s evolutionary history. The Square Kilometer Array (SKA), due to become operational next decade in South Africa and Australia, will also do this in longer, radio wavelengths, and its enormous collecting area (quite literally a square kilometer) with moveable unit telescopes (as with ALMA too) will create a synthetic “filled” aperture on a par with a solid telescope of similar dimensions and consequent exquisite resolution.

Image: ALMA antennae on the Chajnantor Plateau. Credit: ALMA (ESO/NAOJ/NRAO), O. Dessibourg.

Submillimetre astronomy is often referred to as molecular imaging, as the wavelengths used are perfect, given their low energy and related cool temperatures, for picking up chemical molecules in the interstellar medium. They have been instrumental in showing the ubiquity of many of the materials needed for life, like amino acids, the building blocks of proteins, and PAHs (polycyclic aromatic hydrocarbons), which are key constituents of cell membranes as well as the long chain amines in the goo on Titan. ALMA has identified hydrogen cyanide and methyl cyanide, poisonous compounds on Earth, but critical progenitor molecules for protein and life building amino acids. No one has discovered life off Earth to date, but the commonest elements created by stars, carbon, hydrogen, oxygen and nitrogen (CHON), are amongst the main constituents of life and in conjunction with molecules like amino acids and PAHs suggest that the key components of life are ubiquitous.

Even greater accuracy can be achieved by combining all the radio telescopes dotted across the Earth and even in space to create a “diluted” aperture (not completely filled in but with equivalent width of its most remote elements) wider than the Earth itself. It isn’t difficult to guess the extent of such a device’s resolution! The SKA and ALMA, as with any new and sophisticated astronomical hardware, have a planned-out mission itinerary, but given their extreme capabilities have the added ability to make unexpected and exciting discoveries.

Returning to shorter wavelengths, ground based telescopes are being equipped with increasingly sophisticated adaptive optics (AO), in conjunction with high altitude sites, allowing them to image with increasing detail in wavelengths from optical to mid-infrared and bring to bear their large light gathering capacity without the huge expense of launch to and maintenance in space. This will culminate in the completion of the three extremely large telescopes (ELTs) between 2020 and 2024. Work is underway on 25-40 m apertures that will capture sufficient light in combination with AO to discover, image and characterise planets down even to Earth size.

Space-Based Observation

In the shorter term, hot on the heels of ESPRESSO and reliant on its discoveries is TESS, a small satellite with multiple telescope/cameras and sensors, due for launch to a specially designed widely elliptical orbit to maximise imaging of exoplanet transits round “bright” nearby stars, largely M dwarfs. These small stars’ planets orbit close in and their transits eclipse a larger portion of the star, creating so-called “deep transits” on a regular basis (including in the habitable zones which are only 0.25 AU for even the largest M-class stars) that can be added together, or “binned”, to produce a potent signal.
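As a rough illustration of why M dwarfs give “deep” transits and why binning helps, here is a minimal sketch. It assumes the standard geometric approximation that transit depth is the square of the planet-to-star radius ratio, an illustrative M dwarf of 0.3 solar radii, and independent noise between transits (so the stacked signal-to-noise grows as the square root of the number of transits). The function names are hypothetical, not taken from any mission pipeline.

```python
import math

R_SUN_KM = 695_700.0   # nominal solar radius
R_EARTH_KM = 6_371.0   # mean Earth radius

def transit_depth(planet_radius_km, star_radius_km):
    """Fractional dimming during transit, approximated as (Rp / Rs)^2."""
    return (planet_radius_km / star_radius_km) ** 2

def stacked_snr(single_transit_snr, n_transits):
    """SNR after combining n transits, assuming independent noise per transit."""
    return single_transit_snr * math.sqrt(n_transits)

# Earth-sized planet in front of a Sun-like star versus an assumed 0.3 R_sun M dwarf
sun_like = transit_depth(R_EARTH_KM, R_SUN_KM)
m_dwarf = transit_depth(R_EARTH_KM, 0.3 * R_SUN_KM)

print(f"Sun-like star depth: {sun_like * 1e6:.0f} ppm")
print(f"M dwarf depth:       {m_dwarf * 1e6:.0f} ppm")
print(f"Depth ratio:         {m_dwarf / sun_like:.1f}x")
print(f"SNR gain from 16 binned transits: {stacked_snr(1.0, 16):.1f}x")
```

Under these assumptions the same planet dims the small star about eleven times more than it would a Sun-like star, and because close-in planets transit often, many events can be stacked in a short observing window.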

Better still, TESS will work in concert with the James Webb Space Telescope following its launch a year later. JWST is largely an infrared telescope designed to look at extragalactic objects and cosmological concepts. Although, sadly, not an exoplanet imager, it has been optimised to spectroscopically analyse exoplanets, and with a 6.5m aperture it should do very well. It will image a transit and analyse the small amount of starlight passing through the outer atmosphere of the transiting planet in order to characterise it: A “transmission” spectrum. Alternatively, as the transit times can be calculated precisely, a spectrum of the combined planet and star light can be taken when they are next to each other and by subtracting the spectrum of the star alone whilst the planet is eclipsed behind it, a net planetary spectrum can be calculated.

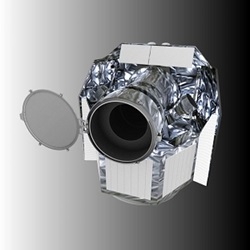

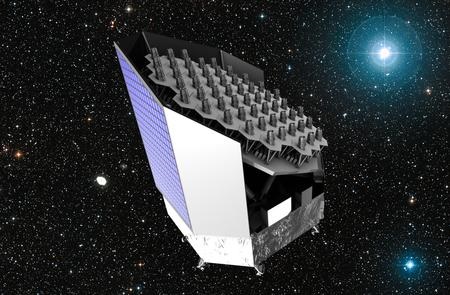

Image: The principal goal of the TESS mission is to detect small planets with bright host stars in the solar neighborhood, so that detailed characterizations of the planets and their atmospheres can be performed. Credit: MIT.

It’s likely that given the huge workload of JWST, its exoplanetary characterisation work will be limited to premier targets. Smaller mission funding pools will be utilised to produce small but dedicated exoplanet transit spectroscopy telescopes to characterise larger exoplanets, as suggested in the previously unsuccessful ESA and NASA concepts EChO and FINESSE.

TESS itself will look at about half a million stars across the whole sky over a two year period and, if it holds together, should get a much longer mission extension. Parts of its field of imaging around the ecliptic poles are designed to overlap with the JWST’s area of operation to maximise their synergy. The longest periods of “staring” also occur there to allow analysis of planets with the longest orbital periods in the habitable zones of the largest possible (most Sun-like) stars. Ordinarily three proven transits are required for proof of discovery but given the nearby target stars any discoveries can be followed up by ESPRESSO for proof, reducing required transits to just two. There is growing optimism that with JWST, TESS might make the ultimate discovery!

Launched in a similar timeframe to TESS, the small ESA telescope CHEOPS will look for transits predicted by RV discoveries, allowing accurate mass and density calculations of up to 500 planets of gas giant to mini-Neptune size to add to the growing list of planets characterised this way, thus helping build up a picture of planetary nature and distribution. At present, planets in this category have been grouped by Marcy et al. and the data suggest that Earth-like planets (rocky with a thin atmosphere) exist up to about 1.6 Earth radii or 5 Earth masses, with larger objects more likely to have a thick atmosphere and be more akin to “mini Neptunes”. The larger the sample, the greater the accuracy, hence the wider importance of CHEOPS, TESS and ESPRESSO in characterisation, which will also inform efficient future imaging searches.

Image: CHEOPS – CHaracterising ExOPlanet Satellite – is the first mission dedicated to searching for exoplanetary transits by performing ultra-high precision photometry on bright stars already known to host planets. Credit: ESA.

Into the Next Decade

Crunch time arrives in the 2020s. The beginning of the decade is the time of the routine Decadal survey that lays out NASA’s plans and priorities over the following ten years. It will determine the priority that exoplanets (and Solar System planets) are given. The JWST has left a huge hole in the budget that must be balanced and at a time when manned space flight, never cheap, is reappearing after its post Space Shuttle hiatus. There is room for plenty of optimism, though.

Unlike my dear old National Health Service here in Britain, year on year funding can be stored to be used at a later date. Any ATLAST (Advanced Technology Large Aperture Space Telescope), Terrestrial Planet Finder telescope or High Definition Space Telescope in the proportions necessary for detailed exoplanetary characterisation will cost upward of $15 billion. Huge, but not insurmountable, if funds are hoarded over 15 years ahead of a 2035 launch. Imagining a 16m monster like that! Quite a supposition.

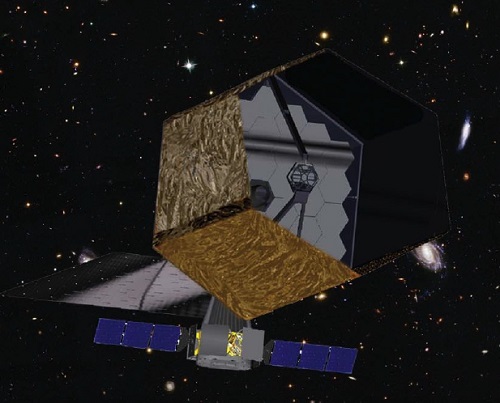

Image: The Advanced Technology Large Aperture Space Telescope (ATLAST) is a NASA strategic mission concept study for the next generation of UVOIR [near-UV to far-infrared] space observatory. ATLAST will have a primary mirror diameter in the 8m to 16m range that will allow us to perform some of the most challenging observations to answer some of our most compelling astrophysical questions. Credit: Space Telescope Science Institute.

What is more definite is the Wide-Field Infrared Survey Telescope, WFIRST, due for launch circa 2024, maybe a bit earlier. Originally planned as a dark energy mission, it has grown enormously thanks to the NRO donation of a Hubble dimension, high-quality wide-field mirror. At the same time, Princeton’s David Spergel made a compelling and successful case for inclusion of an internal starlight occulter or coronagraph at about half a billion dollars extra (much of which was covered by partner space agencies). This would be a “technological demonstrator” instrument. Large funds were released for advancing this largely theoretical technology to a useable level through experimental “Probe” concepts which also developed an external occulter technology.

The coronagraph has already massively exceeded all expectation of success and has at least 5 years more development time before the telescope development begins. That’s a lot of useful time. Its aim is to allow direct imaging of Jupiter-Neptune mass planets about as far out from a star as Mars. The coronagraph blocks out the much brighter starlight that swamps the feeble planet light. Already the technology has improved to the point where a few Super Earths or even smaller planets might be visualised. Sadly, not quite in the habitable zone, but the wider orbits will allow more accurate categorisation of the planetary cohort, thus telling us what to look for in the future. The final orbit of this telescope is yet to be decided and is crucial.

Given the long gap until ATLAST (envisioned as a flagship mission of the 2025 – 2035 period) and the finite life expectancy of Hubble and JWST, WFIRST is obviously intended to bridge the gap, and thus will need servicing like Hubble. To this effect it was felt necessary to keep it near to Earth (for convenience of data download too), but rapid advances in robotic servicing mean it could now be stationed as far afield as the ideal viewing spot, the Sun/Earth Lagrange point L2. It could possibly even be moved nearer to the Moon for servicing. This locale would allow the addition of an external occulter if funding was available. This technology allows closer imaging to the star than the coronagraph, even into the habitable zone. Whether WFIRST ends up with both internal and external occulters remains to be seen and will likely be decided by the 2020 Decadal study according to the political and financial climate of the day. Meantime, it’s great to know that such a useful planet hunter will be operational for a long time post 2024.

WFIRST does other useful exoplanetary work. It too will discover exoplanets by the transit method and also by the often forgotten microlensing principle. This involves a nearby star sitting in front of a further out star and effectively focusing its light via gravity, as described by Einstein in his relativity work. Exoplanets orbiting the nearer star stand out during this process and can have their radius and mass determined accurately. As this method works for further out planets, it provides a way of populating the full range of planetary orbits and characteristics, which we have seen is critical to establishing the nature of alien star system architecture. The downside of microlensing is that it is a one-off experience and can’t be revisited. Direct imaging, transiting, and microlensing make WFIRST one potent exoplanet observatory. What more can it do? The answer is a lot.

Image: The Wide-Field Infrared Survey Telescope (WFIRST) is a NASA observatory designed to perform wide-field imaging and slitless spectroscopic surveys of the near infrared (NIR) sky for the community. The current Astrophysics Focused Telescope Assets (AFTA) design of the mission makes use of an existing 2.4m telescope to enhance sensitivity and imaging performance. Credit: NASA.

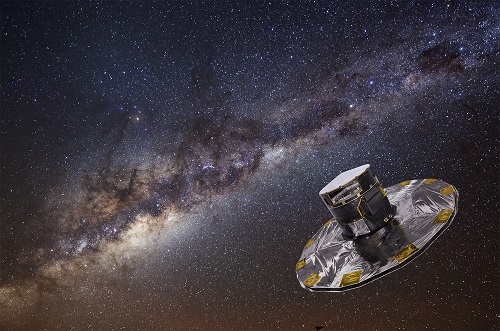

Consider astrometry. Like asteroseismology it is a little known science but rapidly expanding, and like asteroseismology is the shape of things to come. Astrometry measures the sideways movement of a star due to the gravitational effects of orbiting planets. It is a bit similar to the RV method, but better in that it accurately determines the mass of planets and also their location with pinpoint accuracy. Meanwhile, the ESA telescope Gaia is currently in the process of staring for extended periods at over a billion Milky Way stars in order to determine their position to within 1% error. In the process, as with Kepler and PLATO, it will carry out detailed asteroseismology, which will advance this critical field even further. Know the star and you know the planet.

Astrometry will allow Gaia the added benefit of single-handedly accurately positioning several thousand gas giant planets. However, combining its results with WFIRST should allow accurate positioning and mass/radius of nearby planets down to Earth size, including planets in the habitable zone, helping develop an effective search strategy for the WFIRST direct imaging technology whether by internal or external occulter or both. Critically, astrometry helps discover and characterise planets around M-dwarfs which form the large part of the stellar neighbourhood. As the habitable zone for even the largest of these stars (and many of the next class up, K-class stars) is inside 0.4AU, it is unlikely that even an advanced internal or external occulting device would allow direct imaging so close to the star, so any orbiting planets could only be classified by astrometry and transit spectroscopy if they transit the star.
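To see why habitable zones inside 0.4 AU are so hard to image directly, the following sketch converts an orbital distance into an angular separation on the sky. It leans on two simplifying assumptions: the habitable zone is placed where the planet receives Earth-like flux (distance in AU equal to the square root of the stellar luminosity in solar units), and the small-angle rule that 1 AU seen from 1 parsec subtends 1 arcsecond. The stellar luminosities, the 5 parsec distance and the 0.1 arcsecond inner working angle are illustrative values, not figures for WFIRST or any specific occulter design.

```python
import math

def habitable_zone_au(luminosity_solar):
    """Distance (AU) at which a planet receives Earth-like stellar flux."""
    return math.sqrt(luminosity_solar)

def angular_separation_arcsec(orbit_au, distance_pc):
    """Small-angle separation: 1 AU at 1 parsec subtends 1 arcsecond."""
    return orbit_au / distance_pc

ASSUMED_INNER_WORKING_ANGLE = 0.1  # arcsec; hypothetical occulter limit
ASSUMED_DISTANCE_PC = 5.0          # illustrative distance to the target star

# Illustrative luminosities in solar units (assumptions, not measured values)
stars = [("Sun-like G star", 1.0), ("K dwarf", 0.15), ("M dwarf", 0.01)]

for name, luminosity in stars:
    hz = habitable_zone_au(luminosity)
    sep = angular_separation_arcsec(hz, ASSUMED_DISTANCE_PC)
    verdict = "outside" if sep >= ASSUMED_INNER_WORKING_ANGLE else "inside"
    print(f"{name}: HZ at {hz:.2f} AU, {sep:.3f} arcsec "
          f"({verdict} the assumed inner working angle)")
```

With these numbers only the Sun-like star’s habitable zone clears the assumed limit; the K and M dwarf zones sit too close to the star’s glare, which is the situation the paragraph above describes.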

Image: Gaia is an ambitious mission to chart a three-dimensional map of our Galaxy, the Milky Way, in the process revealing the composition, formation and evolution of the Galaxy. Gaia will provide unprecedented positional and radial velocity measurements with the accuracies needed to produce a stereoscopic and kinematic census of about one billion stars in our Galaxy and throughout the Local Group. This amounts to about 1 per cent of the Galactic stellar population. Credit: ESA.

Generally, the closer a planet is to a star, the greater the likelihood of a transit, and the nature of planetary formation around M dwarfs also leads to protoplanetary disks that form in such a position as to create transiting planets. This, ironically, was the proposed for the now defunct TPF-I. If Gaia goes beyond its initial 5 year mission, WFIRST should be able to find and characterise up to 20,000 Jupiter or Neptune sized planets! All that for just $2.5 billion means that WFIRST will likely be one of the greatest observatories, anywhere, of all time.
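The claim that closer-in planets are more likely to transit can be made concrete with a short sketch. It assumes the usual geometric approximation for a circular orbit seen from a random direction, where the transit probability is roughly the stellar radius divided by the orbital distance. The 0.3 solar radius M dwarf and its 0.1 AU orbit are illustrative choices.

```python
R_SUN_KM = 695_700.0      # nominal solar radius
AU_KM = 149_597_870.7     # astronomical unit in kilometres

def transit_probability(star_radius_km, orbit_au):
    """Geometric transit probability for a circular orbit: about R_star / a."""
    return star_radius_km / (orbit_au * AU_KM)

# Earth-like orbit around a Sun-like star
earth_sun = transit_probability(R_SUN_KM, 1.0)
# Assumed habitable-zone orbit (0.1 AU) around an assumed 0.3 R_sun M dwarf
m_dwarf_hz = transit_probability(0.3 * R_SUN_KM, 0.1)

print(f"Sun-like star, 1 AU orbit: {earth_sun * 100:.2f}%")
print(f"M dwarf, 0.1 AU orbit:     {m_dwarf_hz * 100:.2f}%")
```

Even though the M dwarf is much smaller, the tight orbit more than compensates, so a habitable-zone planet there is about three times more likely to transit in this example.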

PLATO is a cross between Kepler and TESS. Like Kepler, it is designed to find Earth-sized planets in the habitable zone of Sun-like stars, and as with TESS, these stars will be close enough to characterise and confirm from ground-based telescopes and spectroscopes. PLATO, too, will carry out extensive asteroseismology, which along with Kepler and Gaia will give unprecedented knowledge of most star types by 2030.

Image: PLAnetary Transits and Oscillations of stars (PLATO) is the third medium-class mission in ESA’s Cosmic Vision programme. Its objective is to find and study a large number of extrasolar planetary systems, with emphasis on the properties of terrestrial planets in the habitable zone around solar-like stars. PLATO has also been designed to investigate seismic activity in stars, enabling the precise characterisation of the planet host star, including its age. Credit: ESA.

Meanwhile, Hubble has been given a clean bill of health until at least 2020. The aim is for as much overlap with JWST as possible, bridging the gap to WFIRST. Spitzer will likely be phased out once JWST is up. WFIRST, if serviced and upgraded regularly like Hubble, could also last twenty years plus, certainly until the ATLAST telescope is operational and well after the Extremely Large Telescopes are fully functional on the ground. Given the huge cost of building, launching and maintaining space telescopes (not least $8.5 billion for JWST), NASA have now made it clear that future designs will be multi-purpose and modular for ease of service/upgrade.

Imaging an Exoplanet

In terms of resolving and imaging an exoplanet, we move into the realm of science fiction for now. To produce even a ten-pixel spatial image of a nearby planet would require a space telescope with an aperture equivalent to 200 miles. Clearly impossible for one telescope, but a thirty minute exposure employing 150 mirrors of 3m diameter with varying separations of up to 150 km, linked together as a “hypertelescope”, would be sufficient to act as an ‘Exo-Earth imager’ able to detect “green spots” several pixels across, similar to the Amazon basin, on a planet within ten light years. The short exposure time is an added necessity for spatial imaging in order to avoid blurring caused by clouds or planetary rotation. This is why it may be important to have an external occulter with WFIRST, not just for potent imaging but to allow the “formation flying” necessary to link the two devices together. A small step but a necessary one to get to direct spatial imaging.
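A back-of-envelope check on the baseline figures is possible with the Rayleigh criterion, which puts the smallest resolvable angle at about 1.22 times the wavelength divided by the aperture (or maximum baseline). The sketch below assumes visible light at 550 nm and treats the 150 km array as if it delivered the full resolution of a filled aperture of that width, which is an idealisation; the function names are hypothetical.

```python
LIGHT_YEAR_M = 9.4607e15       # metres in one light year
EARTH_DIAMETER_KM = 12_742.0   # mean Earth diameter

def rayleigh_limit_rad(wavelength_m, baseline_m):
    """Diffraction-limited angular resolution in radians (Rayleigh criterion)."""
    return 1.22 * wavelength_m / baseline_m

def resolved_size_km(wavelength_m, baseline_m, distance_ly):
    """Smallest feature (km) resolvable on a target at the given distance."""
    angle = rayleigh_limit_rad(wavelength_m, baseline_m)
    return angle * distance_ly * LIGHT_YEAR_M / 1000.0

# Assumed: 550 nm light, 150 km maximum baseline, target ten light years away
cell_km = resolved_size_km(550e-9, 150e3, 10.0)
pixels_across = EARTH_DIAMETER_KM / cell_km

print(f"Resolution element: {cell_km:.0f} km")
print(f"Earth-sized disc:   {pixels_across:.0f} elements across")
```

Under these assumptions each resolution element is a few hundred kilometres wide, so a continent-scale feature would span several elements, in line with the “green spots” described above.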

Meanwhile, everything we learn from direct imaging will be via increasingly sensitive spectroscopy of O2, O3, CH4, H2O (liquid in the habitable zone as determined by astrometry) and photosynthetic pigments like the chlorophyll “red edge” biosignatures from “point sources”. The bigger the telescope, the better the signal to noise ratio (SNR) and the better the spectroscopic resolution. WFIRST has a three dimensional “integral field spectroscope” with a maximum resolution of 70. When you think that high resolution runs to the hundreds of thousands, it shows that we are only just scratching the surface. Apart from that and until spatial imaging (if ever), SETI, infrared heat emission or spacecraft exhausts might be the only way to separate intelligent life from life per se.

That said, things are going to happen that would have been inconceivable even in 1995. Twenty thousand plus exoplanets by 2030, hundreds characterised. Exciting if crude and controversial spectroscopic findings, and just five years perhaps from launching a 16m segmented telescope into an orbit 1.5 million km from Earth where it will be regularly serviced by astronauts and robots practising for Mars missions.

Missions and Their Development Paths

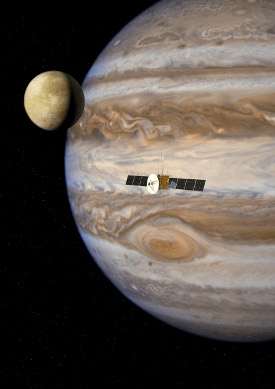

Closer to home, we have the ESA JUICE (Jupiter Icy Moons Explorer) mission to Jupiter with flybys of Europa and Callisto and a Ganymede orbiter. NASA will hopefully get its act together for a cost-effective Europa Clipper and we may yet find signs of life closer to home, though my money is on biosignatures first from an exoplanet, possibly as early as TESS but certainly from ATLAST. The key for me is that life (as we know it) is made from elements and molecules that are common.

This is why infrared astronomy is so important, for infrared light travels long distances and isn’t easily absorbed, and if it is, it soon gets re-emitted. The missions to icy bodies in our system, like Rosetta, Dawn, OSIRIS-REx and New Horizons, are as critical to life discovery as TESS, WFIRST or even ATLAST, as they illustrate the ubiquity of all the necessary ingredients of life (including water) as well as the violent formation of our solar system. In the absence of “flagship missions”, the highest-funded NASA missions that were suspended after the JWST overspend, most planetary-style missions are now funded by smaller amounts, like Explorer (different cash levels up to $220 million plus a launch), Discovery ($500 million plus a launch) and New Frontiers ($1 billion plus a launch). NASA even has a list of prescribed launch vehicles, and savings made by fitting any mission into a smaller launcher can feed into the mission itself: up to $16 million for a Discovery concept, for example. Applications are invited for missions according to how often they fly, with the cheapest, Explorer, launching every three years.

Image: JUICE (JUpiter ICy moons Explorer) is the first large-class mission in ESA’s Cosmic Vision 2015-2025 programme. Planned for launch in 2022 and arrival at Jupiter in 2030, it will spend at least three years making detailed observations of the giant gaseous planet Jupiter and three of its largest moons, Ganymede, Callisto and Europa. Credit: ESA.

The limited funding has had the advantage, however, of inspiring great innovation and hugely successful mission concepts like TESS, just $200 million, Kepler at about $700 million, and Juno, a New Frontiers $1 billion mission currently en route to Jupiter. Without going into detail, the costs of missions are made up of numerous elements with hardware like telescopes and spacecraft contributing the biggest element, but they require constant engineering and operating support throughout their lifetime, which builds up and has to be factored into the initial budget.

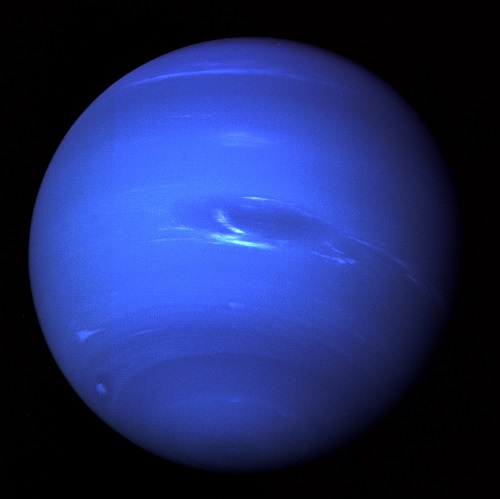

Juno hasn’t reached Jupiter yet, but its science and engineering teams are all at work making sure it operates. So although budgets of hundreds of millions sound like a lot, they are in fact fairly small, especially if compared to “flagship” missions like Cassini, Galileo and the Voyagers, which in current funding would run into many billions of dollars. This contributes to a big hole in exploration, preventing follow up intermediate telescopes and interplanetary missions. The lack of any mission to Uranus or Neptune is a classic example, with no plan for even getting close since Voyager 2. Even a heavily cut back Europa Clipper is still estimated at $2 billion for a 3.5 year multiple flyby mission (which is cheaper than an orbiter). The heavy contribution of running costs is bizarrely demonstrated by the fact that a Europa lander was considered over an orbiter because it would be cheaper, simply because it wouldn’t last long in the hostile environment! The next round of New Frontiers bids is just starting for a 2021 launch, with just one outer Solar System concept involving a Saturn atmospheric probe and relay spacecraft. It is expected to transmit for 50 minutes after an 8 year journey and costs $1 billion.

All of this illustrates the problem mission planners face and the huge cost of such missions. Europa Clipper is actually a very good value mission and might just fly. In conjunction with the ESA JUICE mission, Europa Clipper will drive forward our knowledge of Jupiter’s inner moons, certainly confirming or disproving the idea of sub-surface oceans beyond all doubt and maybe finding some interesting things leaking out from the depths! The ESA face the same situation with JUICE, funded through their large or “L” programme scheme with a budget of just over a billion Euros, or about $1.5 billion. Their lesser funds have forced even greater innovation than NASA and the low cost of JUICE is due to innovative lightweight and cheap materials like silicon carbide for a mission concept very similar to Europa Clipper.

Returning to Uranus and Neptune, these planets always appear in both NASA and ESA discovery “road maps” but always with other things further ahead which, with limited funds, ultimately take precedence. There is constant pressure to have visible results, the success of which was obvious with the ESA Rosetta mission and, we can assume, with Dawn at Ceres as well. Out of necessity, such mission concepts tend to be favoured as opposed to a mission to Uranus that with conventional rockets would take as long as 13 years.

Remember that throughout that time the spacecraft needs looking after remotely from both an engineering and operations perspective, requiring the maintenance of near full time staff, all of which eats into a limited budget of $1 billion, the New Frontiers maximum unless alternative funding sources or partnerships are used. This is one of the reasons I welcome the Falcon Heavy launcher so much. It is much cheaper at about $100 million than any other comparable launcher and can lift bigger loads off Earth into orbit. What isn’t as well known is that it has the ability to send missions direct to their target rather than needing gravitational assists from Earth, Venus and Jupiter, as with previous outer Solar System missions since Voyager.

Falcon Heavy could lift about a 5 tonne payload to Uranus in well under ten years, and in reducing the mission length would of course lower its cost, allowing more “mission proper”, perhaps even fitting within a New Frontiers cost envelope. The ESA were certainly able to produce a stripped down “straw man” dual Uranus/Neptune concept, ODINUS, within an L budget. New ion propulsion systems like NEXT (or its descendants) require far less propellant than conventional chemical rockets and could ultimately be used to slow a Uranus probe into orbit without taking up too much mass of the critical spacecraft and its instrument suite.

Image: Neptune, a compelling target but one without a current mission in the pipeline. Credit: NASA.

That just leaves one big obstacle: power. So far out from the Sun, even huge versions of today’s efficient solar cells would be inadequate to power even basic spacecraft functions, never mind complex scientific equipment. Traditionally power comes from converting the heat from radioactive decay of the isotope Plutonium 238 (not to be confused with its deadly bomb making cousin Plutonium 239) into electricity in a “Radioisotope Thermoelectric Generator” (RTG). Cassini uses such a device, as does Voyager 2, and with a half life of 80 years plus the isotope is ideally suited for such extended missions.
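The value of that long half life is easy to quantify with the standard exponential decay law. The sketch below assumes the commonly quoted Plutonium 238 half life of 87.7 years and considers only the decay of the heat source itself; real generators lose output somewhat faster because the thermocouples also degrade, which this simple model ignores. The function name is hypothetical.

```python
PU238_HALF_LIFE_YEARS = 87.7  # commonly quoted half life of Plutonium 238

def fraction_remaining(years, half_life_years=PU238_HALF_LIFE_YEARS):
    """Fraction of the original decay heat left after the given time."""
    return 0.5 ** (years / half_life_years)

# 13 years matches the conventional-rocket Uranus cruise mentioned earlier;
# the longer spans are illustrative extended-mission durations.
for years in (13, 20, 40):
    print(f"After {years:>2} years: {fraction_remaining(years) * 100:.0f}% "
          "of the initial heat output")
```

On this simple model a 13 year cruise to Uranus would still leave roughly nine tenths of the initial heat output on arrival, which is why the isotope suits long outer Solar System missions so well.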

This isotope is a byproduct of nuclear bomb making, so post Cold War it is in increasingly poor supply and what is available is earmarked for other projects well in advance. This situation faces all missions mooted to go beyond Jupiter, like an Enceladus or Titan orbiter/lander, and is a real deal breaker that needs addressing. Uranus and Neptune in particular need to be explored in detail not least because the exoplanet categorisation described above illustrates that they, in varying sizes, are the most ubiquitous planet type in the galaxy and are on our doorstep.

It’s impossible to talk observational astronomy and not mention Mars. It is undoubtedly the most popular mission target given its proximity, with a solid surface on which to land and the possibility of life, slim but possible, if not now then at some time in the distant past. The next tranche of missions starts in 2016 with an ESA orbiter and stationary lander and a NASA lander, InSight. Both landers are intended to last two Earth years and prepare the way for rovers.

The NASA mission was part of the Discovery programme and was chosen just ahead of the TiME concept, Titan Mare Explorer – a floating lander that would analyse the Titanian methane lakes whilst Earth was above the horizon so it could transmit direct to Earth without the need for an expensive orbiter. What a mission that would have been, and for just half a billion dollars. That chance is now gone for twenty years or so, and with it any hope of a near-term Saturn mission after Cassini, given the expense of a more complex mission profile to either Titan or Enceladus.

Meanwhile, Insight will dig some holes and do more analysis and help prepare the way for the Mars 2020 rover, a beefed up version of Curiosity which will have more sophisticated instruments, including drills, that will look specifically for life rather than just water, as with Curiosity. Crucially this will use one of the few remaining RTGs as opposed to solar panels like those on Opportunity (still going after running a marathon in ten years), thereby removing the possibility of using the device for outer Solar System exploration. Plutonium 238 production has begun again at Oak Ridge but yearly production is tiny, and it will take years to produce the kilogram masses necessary to power space missions. The ESA are also sending a rover to Mars, in 2018, funding and builders Roscosmos allowing. It will be solar powered and launched by a Russian Proton rocket, whose success rate isn’t the best.

For all the potential deficiencies in exploration, what has been achieved over the last twenty years is immense. What is planned over the next twenty years is not fully clear yet, but it is likely to culminate in a huge multi-purpose space telescope that will pull all previous work together, and in concert with other space and ground telescopes, and hopefully multiple interplanetary missions, will discover signs of life, if not at present, certainly in the past. I think ultimately we will find out that life is common, but much as I would like to disagree with Fermi and “Rare Earth”, I think finding intelligent life is going to be a whole lot harder. As the Hitchhiker’s Guide to the Galaxy says, “Space is a very big place”, both in size and time.

Considering we are living in times of austerity, though, I think what we have done so far isn’t at all bad!

Enter ‘Galactic Archaeology’

I’ve used the term ‘interstellar archaeology’ enough for readers to know that I’m talking about new forms of SETI that look for technological civilizations through their artifacts, as perhaps discoverable in astronomical data. But there is another kind of star-based archaeology that is specifically invoked by the scientists behind GALAH, as becomes visible when you unpack the acronym — Galactic Archaeology with HERMES. A new $13 million instrument on the Anglo-Australian Telescope at Siding Spring Observatory, HERMES is a high resolution spectrograph that is about to be put to work.

Image: I can’t resist running this beautiful 1899 photograph of M31, then known as the Great Andromeda Nebula, when talking about our evolving conception of how galaxies form. Credit: Isaac Roberts (d. 1904), A Selection of Photographs of Stars, Star-clusters and Nebulae, Volume II, The Universal Press, London, 1899. Via Wikimedia Commons.

And what an instrument HERMES is, capable of providing spectra in four passbands for 392 stars simultaneously over a two degree field of view. What the project’s leaders intend is to survey one million stars by way of exploring how the Milky Way formed and evolved. The idea is to uncover stellar histories through the study of their chemistry, as Joss Bland-Hawthorn (University of Sydney) explains:

“Stars formed very early in our galaxy only have a small amount of heavy elements such as iron, titanium and nickel. Stars formed more recently have a greater proportion because they have recycled elements from other stars. We reach back to capture this chemical state – by analysing the mixture of gases from which the star formed. You could think of it as its chemical fingerprint – or a type of stellar DNA from which we can unravel the construction of the Milky Way and other galaxies.”

Determining the histories of these stars with reference to 29 chemical signatures as well as stellar temperatures, mass and velocity should help the researchers create a map of their movements over time. This should be a fascinating process, for views of galaxy formation have changed fundamentally since the days when Allan Sandage and colleagues proposed (in 1962) that a protogalactic gas cloud that settled into a disk could explain galaxies like the Milky Way.

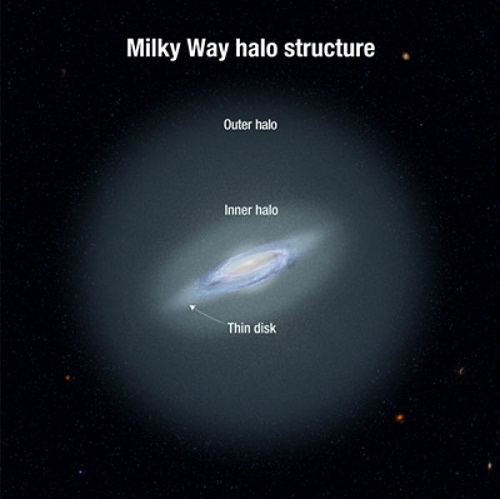

That concept suggested that the oldest stars in the galaxy were formed from gas that was being drawn toward the galactic center, collapsing from the halo to the plane, and in Sandage’s view, this collapse was relatively rapid (on the order of 100 million years), with the initial contraction beginning roughly ten billion years ago. Later we begin to see a different model developing, one in which the galaxy formed through the agglomeration of smaller elements like satellite galaxies. Both these processes are now believed to play a role, with infalling satellite systems affecting not just the galactic halo but also the disk and bulge.

Image: Structure of the Milky Way, showing the inner and outer halo. Credit: NASA, ESA, and A. Feild (STScI).

Galactic archaeology is all about detecting the debris of these components, making it possible to reconstruct a plausible view of the proto-galaxy. How the galactic disk and bulge were built up is the focus, determined by using what the researchers call ‘the stellar relics of ancient in situ star formation and accretion events…’ The authors explain the challenges they face:

… unraveling the history of disc formation is likely to be challenging as much of the dynamical information such as the integrals of motion is lost due to heating. We need to examine the detailed chemical abundance patterns in the disc components to reconstruct [the] substructure of the protogalactic disc. Pioneering studies on the chemodynamical evolution of the Galactic disc by Edvardsson et al. (1993) followed by many other such works (e.g. Reddy et al. 2003; Bensby, Feltzing & Oey 2014), show how trends in various chemical elements can be used to resolve disc structure and obtain information on the formation and evolution of the Galactic disc, e.g. the abundances of thick disc stars relative to the thin disc. The effort to detect relics of ancient star formation and the progenitors of accretion events will require gathering kinematic and chemical composition information for vast numbers of Galactic field stars.

Two days ago we looked briefly at globular clusters and speculated on what the view from a planetary surface deep inside one of these clusters might look like. The globular clusters, part of the galaxy’s halo, contain some of its oldest stars, and the entire halo is poor in metals. Going into the GALAH survey, the researchers believe that a large fraction of the halo stars are remnants of early satellite galaxies that evolved independently before being acquired by the Milky Way, a process that seems to be continuing as we discover more dwarf satellites and so-called ‘stellar streams,’ associations of stars that have been disrupted by tidal forces.

Seventy astronomers from seventeen institutions in eight countries are involved in GALAH, which is led by Bland-Hawthorn, Gayandhi De Silva (University of Sydney) and Ken Freeman (Australian National University). Their work should give us much new information not just about the halo and globular clusters but the interactions of stars throughout the disk and central bulge. The paper on the project is De Silva et al., “The GALAH survey: scientific motivation,” Monthly Notices of the Royal Astronomical Society Vol. 449, Issue 3 (2015), pp. 2604-2617 (abstract). A University of Sydney news release is also available.

And in case you’re interested, the classic paper by Sandage et al. is “Evidence from the motions of old stars that the Galaxy collapsed,” Astrophysical Journal, Vol. 136 (1962), p. 748 (abstract).

Ganymede Bulge: Evidence for Its Ocean?

What to make of the latest news about Ganymede, which seems to have a bulge of considerable size on its equator? William McKinnon (Washington University, St. Louis) and Paul Schenk (Lunar and Planetary Institute) have been examining old images of the Jovian moon taken by the Voyager spacecraft back in the 1970s, along with later imagery from the Galileo mission, in the process of global mapping. The duo discovered the striking feature that Schenk described on March 20 at the 46th Lunar and Planetary Science Conference in Texas. Says McKinnon:

“We were basically very surprised. It’s like looking at old art or an old sculpture. We looked at old images of Ganymede taken by the Voyager spacecraft in the 1970s that had been completely overlooked, an enormous ice plateau, hundreds of miles across and a couple miles high… It’s like somebody came to you and said, ‘I have found a thousand mile wide plateau in Australia that was six miles high.’ You’d probably think they were out of their minds or spent too much time in the Outback.”

The bulge is about 600 kilometers across and 3 kilometers tall, and the researchers believe that it may be an indication of the moon’s sub-surface ocean. The going theory is that the bulge emerged at one of Ganymede’s poles and slid along the top of the ocean in a motion called true polar wander (TPW). The find sets us up for future mapping of Ganymede, for the polar wander theory leads McKinnon and Schenk to believe that a similar bulge should exist opposite this one. If current mission planning holds, we may learn the answer early in the 2030s.

Image: Jupiter’s moon Ganymede probably has a sub-surface ocean, as recent work suggests. Credit: NASA/JPL.

If it takes a global sub-surface ocean to produce true polar wander, then we should expect the same thing on Europa, and indeed, the evidence points to the phenomenon, though here the signs of TPW are much clearer presumably because the ice crust is thinner. Strain produced by the shell’s rotation forces concentric grooves — the researchers call them ‘crop circles’ — to emerge. McKinnon and Schenk found that the ‘two incomplete sets of concentric arcuate trough-like depressions’ previously identified on Europa are offset in a pattern that fits the stresses of true polar wander. Moreover, additional features show evidence of TPW, including fissure-like fractures and smaller subsidiary fractures that seem to be associated with the concentric features and their patterning on the surface. “The TPW deformation pattern on Europa,” the authors add, “is thus more complex than the original features reported.” Here again it will take future mapping to provide the higher resolution needed to explore these issues.

The bulge now identified on Ganymede indicates that at some point in the past, the moon’s surface ice rotated, with what had been thicker polar shell material now being found at the equator. McKinnon’s surprise at the finding is understandable given that there is no other surface sign of true polar wander on Ganymede, as the LPSC proceedings paper makes clear:

Extensive search of the entire Voyager and Galileo image library, including all terminator image sequences and all high resolution images reveals no trace of any of the features currently associated with TPW on Europa. No arcuate troughs, no irregular depressions, no raised plateaus, no crosscutting en-echelon fractures. This may be consistent with a thicker ice shell on Ganymede.

That thicker ice shell would have made true polar wander less likely, but the phenomenon could have occurred in the past over a thinner surface crust. The paper goes on:

A thicker ice shell (and lithosphere) will not deform as easily, and will resist polar wander in the first place; a thinner icy shell, more plausible in Ganymede’s past, may have undergone polar wander, but the resultant stresses will be lower by a factor of 3 compared with those on Europa and may not have created such a distinctive tectonic signature. We are engaged in a global search for other manifestations of TPW on Ganymede and will report on our findings.

The question, then, is how a bulge the size of the one the researchers have identified on Ganymede can still be in place. In an article on this work in National Geographic (Bizarre Bulge Found on Ganymede, Solar System’s Largest Moon), McKinnon had this to say:

“Any ideas about how you support a three-kilometer-high [two-mile] ice bulge, hundreds of kilometers wide, over the long term on Ganymede are welcome… We’ve never seen anything like it before; we don’t know what it is.”

As we’ve seen recently, Ganymede’s sub-surface ocean has been confirmed by Joachim Saur and colleagues (University of Cologne) through the study of auroral activity (see Evidence Mounts for Ganymede’s Ocean). Now we have further indication that crustal slippage has occurred on the moon, all but requiring an ocean separating surface materials from the deeper core. We can expect to learn much more, including whether or not there is a corresponding bulge opposite to this one, when the Jupiter Icy Moons Explorer (JUICE) mission arrives in 2030. If the proposed timeline is met, JUICE will begin orbital operations around Ganymede in 2033.

A St. Louis Public Radio news release on McKinnon and Schenk’s work is also available.

In Search of Colliding Stars

How often do two stars collide? When you think about the odds here, the likelihood of stellar collisions seems remote. You can visualize the distance between the stars in our galaxy using a method that Rich Terrile came up with at the Jet Propulsion Laboratory. The average box of salt that you might buy at the grocery store holds on the order of five million grains of salt. Two hundred boxes of salt, then, make a billion grains, while 20,000 boxes give us 100 billion. That’s now considered a low estimate of the number of stars in our galaxy, which these days tends to be cited at about 200 billion, but let’s go with the low figure because it’s still mind-boggling.

So figure you have 20,000 boxes of salt and you spread the grains out to mimic the actual separation of stars in the part of the galaxy we live in. Each grain of salt would have to be eleven kilometers away from any of its neighbors. These are considerable distances, to say the least, but of course there are places in the galaxy where stars are far closer to each other than here.
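The box counts above are easy to check. This small sketch takes the figure of roughly five million grains per box at face value (an order of magnitude estimate, as the text says) and computes how many boxes are needed for one grain to stand for each star. The function name is made up for the illustration.

```python
GRAINS_PER_BOX = 5_000_000  # "on the order of five million grains" per box


def boxes_needed(star_count: int, grains_per_box: int = GRAINS_PER_BOX) -> int:
    """Boxes of salt needed so that one grain stands for one star (rounded up)."""
    return -(-star_count // grains_per_box)  # ceiling division on integers


if __name__ == "__main__":
    for stars in (1_000_000_000, 100_000_000_000, 200_000_000_000):
        print(f"{stars:>15,} stars -> {boxes_needed(stars):,} boxes")
```

Using the higher estimate of 200 billion stars simply doubles the pile to 40,000 boxes.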

I was reminded of this recently while reading Jack McDevitt’s novel Seeker (Ace, 2005), which Centauri Dreams reader Rob Flores had mentioned in our discussion of Scholz’s Star and its close pass by the Solar System some 70,000 years ago (see Scholz’s Star: A Close Flyby). Seeker is one of Jack’s tales about Alex Benedict, a far future dealer in antiquities, some of which are thousands of years in our own future. Here’s a bit of dialogue that brings up stellar collisions. On the distant world of the McDevitt universe, Benedict’s aide Chase Kolpath is discussing the matter with an astrophysicist and asks how frequent such collisions are:

“They happen all the time, Chase. We don’t see much of it around here because we’re pretty spread out. Thank God. Stars never get close to one another. But go out into some of the clusters—” She stopped and thought about it. “If you draw a sphere around the sun, with a radius of one parsec, you know how many other stars will fall within that space?”

“Zero,” I said. “Nothing’s close.” In fact the nearest star was Formega Ti, six light-years out.

“Right. But you go out to one of the clusters, like maybe the Colizoid, and you’d find a half million stars crowded into that same sphere.”

“You’re kidding.”

“I never kid, Chase. They bump into one another all the time.” I tried to imagine it. Wondered what the night sky would look like in such a place. Probably never got dark.

I’ve always had the same thought, and tried to imagine myself in a globular cluster like 47 Tucanae or the ancient Messier 5. Another place that (almost) never got dark was the planet in Isaac Asimov’s story “Nightfall” (Astounding Science Fiction, September 1941). Asimov took us to a world where six stars kept the sky continually illuminated except for a brief night every 2049 years. The story, so Asimov’s autobiography tells us, grew out of John Campbell’s asking Asimov to write something based on a famous quotation from Ralph Waldo Emerson:

If the stars should appear one night in a thousand years, how would men believe and adore, and preserve for many generations the remembrance of the city of God!

Of course, the Asimov tale involves a six-star system in which the inhabitants know nothing beyond the stars that keep them illuminated. In a globular cluster, things get incredibly tight, with typical star distances in the range of one light year, but distances in the core much closer to the size of the Solar System. Planetary orbits in such tight regions would surely be unstable, but we can still try to imagine a sky spangled with stars this close in all directions, if only as an exercise for the imagination. Keep in mind, too, that clusters like Omega Centauri can have several million solar masses worth of stars. An environment like this one is surely ripe for stellar collisions.

Image: This sparkling jumble is Messier 5 — a globular cluster consisting of hundreds of thousands of stars bound together by their collective gravity. But Messier 5 is no normal globular cluster. At 13 billion years old it is incredibly old, dating back to close to the beginning of the Universe, which is some 13.8 billion years of age. It is also one of the biggest clusters known, and at only 24 500 light-years away, it is no wonder that Messier 5 is a popular site for astronomers to train their telescopes on. Messier 5 also presents a puzzle. Stars in globular clusters grow old and wise together. So Messier 5 should, by now, consist of old, low-mass red giants and other ancient stars. But it is actually teeming with young blue stars known as blue stragglers. These incongruous stars spring to life when stars collide, or rip material from one another. Credit: Cosmic fairy lights by ESA/Hubble & NASA. Via Wikimedia Commons.

17th Century Detection of a Collision?

Now we have word that astronomers have found evidence that the nova known as Nova Vulpeculae 1670 was actually the result of a stellar collision. Appearing in 1670 and recorded by both Giovanni Domenico Cassini and Johannes Hevelius, great figures in the astronomy of their day, the ‘new star’ was a naked eye object that varied in brightness over the course of two years. It vanished, reappeared, vanished, reappeared and finally disappeared for good.

We’ve known since the 1980s that a faint nebula in the suspected location of the event was probably all that was left of the star, but new work using APEX (Atacama Pathfinder Experiment telescope), the Submillimeter Array (SMA) and the Effelsberg radio telescope has allowed us to study the chemical composition of the nebula and measure the ratios of different isotopes in the gas. We learn that these ratios do not correspond to what we would expect from a nova.

So what was Nova Vul 1670? The mass of material was too great to be the product of a nova explosion. The paper on this work argues that we are looking at what is left after a collision between two stars, which leaves us with what is known as a red transient, in which material from the stellar interiors is blown into space, leaving only a cool, dusty remnant.

Only a few such objects, also known as luminous red novae, have been detected, with the first confirmed instance being the object M85 OT2006-1 in Messier 85. We also have the case of V1309 Scorpii, which appears to be an interesting instance of the merger of a contact binary, detected in 2008. With so few examples to work with, we have much to learn about the frequency and nature of these phenomena. But globular clusters do appear to be the best place to look for collisions. Back in 2000, Michael Shara (American Museum of Natural History) told a symposium that several hundred collisions per hour could be expected throughout the visible universe, almost all of which we will never detect (see Two Stars Collide; A New Star Is Born).

Shara estimated as well that in the ten billion year lifetime of the Milky Way, about one million collisions have occurred within globular clusters, or about one every 10,000 years. So Jack McDevitt’s astrophysicist seems to have it about right. If the entire universe is your stage, then stars collide all the time. When Hevelius described Nova Vul 1670 as ‘nova sub capite Cygni’ — a new star below the head of the Swan — he could have no idea how rare his observation was in terms of a human lifetime, but how common on a cosmic time scale.
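The arithmetic behind Shara’s figure, and the contrast between cosmic and human time scales, can be sketched in a few lines. The first function simply divides the lifetime by the collision count. The second goes one step beyond the text and assumes collisions arrive as a Poisson process at a constant rate, which is an assumption made only for illustration, as is the 80 year window standing in for a human lifetime.

```python
import math


def mean_interval_years(lifetime_years: float, collisions: float) -> float:
    """Average time between collisions, assuming a constant rate."""
    return lifetime_years / collisions


def chance_of_at_least_one(window_years: float, interval_years: float) -> float:
    """Probability of one or more events in a window (Poisson assumption)."""
    return 1.0 - math.exp(-window_years / interval_years)


if __name__ == "__main__":
    interval = mean_interval_years(10e9, 1e6)   # figures quoted in the text
    chance = chance_of_at_least_one(80, interval)  # 80 years is illustrative
    print(f"mean interval: {interval:,.0f} years")
    print(f"chance of a collision within 80 years: {chance:.2%}")
```

On these assumptions the chance of a globular cluster collision in the Milky Way during any one lifetime comes out below one percent.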

The paper on Nova Vul 1670 is Kamiński et al., “Nuclear ashes and outflow in the oldest known eruptive star Nova Vul 1670,” published online in Nature 23 March 2015. The link to the abstract is broken as of this morning, but I’ll post it when it’s functional.

Puzzling Out the Perytons

Recently we looked at Fast Radio Bursts (FRBs) and the ongoing effort to identify their source (see Fast Radio Bursts: SETI Implications?). Publication of that piece brought a call from my friend James Benford, a plasma physicist who is CEO of Microwave Sciences. Jim noticed that the article also talked about a different kind of signal dubbed ‘perytons,’ analyzed in a 2011 paper by Burke-Spolaor and colleagues. Detected at the Parkes radio telescope, as were all but one of the FRBs, perytons remain a mystery. As described in the essay below, Jim’s recent trip to Australia gave him the opportunity to discuss the peryton question with key players in the radio astronomy community there. He has a theory about what causes these odd signals that is a bit closer to home than some of our speculations on the separate Fast Radio Burst question, and as he explains, we’ll soon know one way or another if he’s right.

by James Benford

A few weeks ago I visited Swinburne University in Melbourne, Australia. I was invited there to give a public address about the controversy surrounding METI (Messaging to Extraterrestrial Intelligence). I also visited the radio astronomy group and discussed how to search for an explanation for the Perytons, dispersed swept-frequency signals. My host was Ian Morrison. I also spoke for several hours with Emily Petroff, Willem van Straten and Matthew Bailes, the head of the group.

Ian had sent me the Burke-Spolaor paper before I arrived, so I knew what the basic observations were. In our discussions, I learned that there have been other observations of Perytons and that they have the same general features: They occur from one portion of the sky (which is a clue), happen around midday, and peak in the southern hemisphere’s winter around July (another clue). The shape of the frequency versus time curve is not quite the same as true dispersion measure (DM) signals. There are kinks and dropouts in the frequency-time graph. And the shape is a bit off of the standard DM scaling, 1/f². And they always occur at the same frequency, about 1.4 GHz.
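For readers who want to see what the standard 1/f² scaling implies, here is a minimal sketch of the cold-plasma dispersion delay between two observing frequencies. It uses the usual approximate dispersion constant of 4.149 ms for frequencies in GHz and DM in pc cm⁻³. The sample DM of 375 and the 1.2 to 1.5 GHz band edges are illustrative assumptions, not values taken from the Peryton data, and the function name is invented for the example.

```python
K_DM_MS = 4.149  # approximate dispersion constant, ms GHz^2 cm^3 / pc


def dispersion_delay_ms(dm: float, freq_lo_ghz: float, freq_hi_ghz: float) -> float:
    """Delay (ms) of the lower frequency relative to the higher one.

    Follows the ideal cold-plasma law, in which arrival time scales as 1/f^2.
    """
    return K_DM_MS * dm * (freq_lo_ghz ** -2 - freq_hi_ghz ** -2)


if __name__ == "__main__":
    # Illustrative numbers only: DM in pc cm^-3, band edges in GHz.
    delay = dispersion_delay_ms(dm=375.0, freq_lo_ghz=1.2, freq_hi_ghz=1.5)
    print(f"sweep across the band takes about {delay:.0f} ms")
```

A genuinely dispersed astrophysical pulse should track this curve smoothly across the band, so kinks, dropouts and a departure from the exponent of 2 are exactly the kind of deviation that hints at a different origin.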

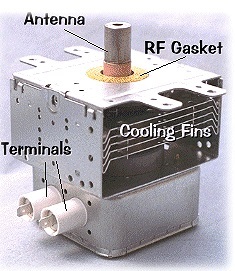

Although they had concluded in the 2011 Burke-Spolaor paper that the signals came from the horizon and were not local to the Parkes radio telescope site, they are now beginning to think that it might be emission from some local electronics. Since they knew of my knowledge of microwave sources, they asked me whether microwave ovens could be an explanation.

I had already concluded that it was a likely explanation because microwave ovens are highly nonlinear devices and can produce several frequencies. They are designed to stay on a single frequency, 2.45 GHz, but can oscillate at a variety of frequencies. If the magnetron voltage changes, other oscillation modes, with lower frequencies, will occur.

Although microwave ovens when they leave the factory have Faraday shields around them and do not radiate into the environment, over time these precautions can fail due to wear on the equipment. The primary means of preventing radiation from an oven into kitchens is redundant safety interlocks, which remove power from the magnetron if the door is opened. Microwaves generated in microwave ovens cease once the electrical power is turned off.

Image: The Parkes Visitor Centre with the 64-m dish in the background. Credit: Jim Benford.

After describing magnetrons in some detail I offered a hypothesis: that microwaves were leaking first from the magnetron, which generates them, and then from the enclosing metallic cabinet. Ovens can easily develop separations in the metal case that houses the magnetron and also in the outer case, of which the door is a part. The door is the weakest part of the shield.

Microwave ovens operate at a single frequency and are not dispersed, as the Peryton signals are. But magnetrons sometimes fail to produce a single frequency, due to mechanical disturbances or changing electrical characteristics.

Conditions change in the magnetron when it is turning on or turning off. The voltage on the cathode rises at the turn on and falls at the turn off. That changes the resonance condition and thus excites different oscillation modes, with lower frequencies. This ‘mode hopping’ may explain the observed Perytons’ fall in frequency.

People simply opening the door, interrupting operation, could well cause this odd radiation. Yanking the door open shuts down the voltage on the cathode of the magnetron, but the electron cloud in the resonator takes a short time to collapse because the cathode is still hot, and still can emit electrons as the voltage falls. Therefore the magnetron will continue to resonate until both the voltage goes to zero and the cathode cools down.

A microwave oven doesn’t cease to radiate instantly when the electricity drops off. The timescale for the cessation is not well documented. It’s a contest between the L/R timescale of the electrical circuit and the cooling of the cathode. The Peryton signals last a few tenths of a second, which could be consistent with the fall time of the voltage and the time for the electron cloud to collapse. The frequency shifts as the voltage falls because a resonant condition, which depends on the ratio of applied voltage to insulating magnetic field (V/B), is changing. (The magnetic field doesn’t change because it’s produced by a permanent magnet.) V/B is proportional to the circulation speed of electrons in the cavity of the magnetron, which relates to the resonant frequency of the device. (For more on magnetron operation, see High Power Microwaves, Second Edition, Benford, Swegle & Schamiloglu, Taylor & Francis, 2007).
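A rough way to see the V/B argument: in crossed electric and magnetic fields, electrons drift at a speed equal to E/B. The sketch below treats the cathode to anode gap as planar, so that E is simply V divided by the gap, holds B fixed as a permanent magnet would, and lets the voltage fall. The gap, field and voltage values are hypothetical round numbers, not measurements from any oven, and the step from drift speed to emitted frequency is left qualitative.

```python
def exb_drift_speed(voltage_v: float, gap_m: float, b_field_t: float) -> float:
    """E x B drift speed in m/s for a planar gap: v = E / B with E = V / gap."""
    return voltage_v / (gap_m * b_field_t)


if __name__ == "__main__":
    GAP_M = 0.002      # hypothetical cathode-anode gap
    B_FIELD_T = 0.15   # hypothetical permanent-magnet field, held constant
    for voltage in (4000.0, 3000.0, 2000.0, 1000.0):  # hypothetical falling voltage
        speed = exb_drift_speed(voltage, GAP_M, B_FIELD_T)
        print(f"V = {voltage:6.0f} V -> drift speed {speed:.2e} m/s")
```

Because B does not change, the drift speed falls in direct proportion to the voltage, which is the sense in which a collapsing voltage pulls the resonance toward lower frequencies.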

Image: Microwave magnetron from a microwave oven. Credit: Jim Benford.

I was thinking that the radio telescope at Parkes looks at a small part of the sky. But it also has side lobes through which the telescope is less sensitive to signals at other angles. The most important of these is the back lobe exactly opposite to the direction in which the telescope is pointing. This occurs because any source directly behind the dish radiating signals will diffract around the edge of the dish. This diffracted signal will arrive coherently at the receiver at the focal point of the dish.

So I inquired as to what was directly behind the dish when it was pointed at the Peryton location. They said it was the Visitor Center.

I realized at once that the Visitor Center was the microwave oven location that would have the most use and fitted all the clues. That use would occur primarily in the midday. And that in the southern hemisphere winter, more people would visit Parkes in the outback.

So I made a prediction: That they would find that the Perytons were coming from the microwave oven in the Visitor Center. I suggested the Swinburne researchers could check on that by several tests:

1) The simplest thing would be to simply remove the old oven and replace it with a new one. The Perytons would cease. But that would require taking a lot of data over time to see if they had really disappeared since Perytons are infrequent phenomena.

2) They could replace all ovens with a non-microwave cooker. That’s also a slow approach.

3) A more aggressive approach would be to rewire the oven, to defeat the safety interlocks and turn the oven on, allowing it to radiate directly into the Visitor Center. Then the signal should be quite evident and they would see a lot of Perytons. Turning the oven off and on would prove it to be the source. (Of course one would evacuate people and whoever turns the oven on would need to be behind a conducting radiation shield.)

I hear the Swinburne team is going to conduct such experiments. I hope they get a clear result. If my hypothesis is proved true, it may call into question whether the famous Lorimer Burst of 2001 was in fact a Peryton. If so, it was not extragalactic, as its large DM was taken to mean.

Perhaps we shall soon know the origin of these mysterious signals.

The Burke-Spolaor paper on perytons is “Radio Bursts with Extragalactic Spectral Characteristics Show Terrestrial Origins,” Astrophysical Journal Vol. 727, No. 1 (2011), 18 (abstract). The paper on the ‘Lorimer Burst’ is Lorimer et al., “A Bright Millisecond Radio Burst of Extragalactic Origin,” Science Vol. 318 no. 5851 (2 November 2007), pp. 777-780 (abstract).

Migratory Jupiter: A Theory of Gas Giant Formation

An interesting model of planetary formation suggests that the architecture of our Solar System owes much to the effects of the giant planets as they migrated through the protoplanetary disk. Frédéric Masset (Universidad Nacional Autónoma de México) and colleagues go so far as to speculate that planetary embryos in orbits near Mars and the asteroid belt may have migrated outwards, depleting the region of materials that would become the cores of Jupiter and Saturn. The key is the heat an embryonic planet generates in the protoplanetary disk.

Writing in Nature, the authors describe computations that model what happens to the rocky cores that will become gas giants. Tidal forces affecting planets in the protoplanetary disk have been thought to cause them to lose angular momentum, making their orbits gradually decay. The migration in this case should be inwards toward the star. But the researchers’ model takes heat generated by material impacting onto the planetary embryos into account, a factor that may slow and can perhaps reverse migration.

Image: An artist’s impression showing the formation of a gas giant planet in the ring of dust around a young star. The protoplanet is surrounded by a thick cloud of material so that, seen from this position, its star is almost invisible and red in colour because of the scattering of light from the dust. Credit: ESO/L. Calçada.

Remember that a gas giant is composed of a small rocky core surrounded by a huge envelope of gas. The ‘heating torque’ the authors describe works at high efficiency when the mass of the embryo — which will become the core — is between 0.5 and 3 Earth masses, a useful number because this is the mass range needed for such a core to develop into a Jupiter-class world once it has migrated outwards. Studying mass and density of the protoplanetary disk near the forming planet, the authors show that the embryo heats the disk near it, creating regions that are hotter and less dense than surrounding material.

From the paper:

This situation favours the lobe that appears behind the planet: its material approaches closer to the planet, receives more heat and is consequently less dense than the other lobe, leading to a positive torque on the planet… The heating torque therefore constitutes a robust trap against inward migration in any realistic disk, when accretion rates are large enough.

As for the depletion of material and its possible signs in the asteroid belt, the authors note that the heating torque they describe should be less efficient on the warm side of the ‘snow line’ (the distance from the star allowing water ice to form) because the opacity of the disk drops there. But planetary embryos that formed beyond where the snow line will eventually be should have experienced strong heating torque and thus outward migration, factors that should cause a depletion of solid material in the region. Jupiter’s rocky core may thus have formed within the region where the main asteroid belt is today before migration set in.

Estimates of the snow line in our Solar System range from 2.7 AU to 3.1 AU, while the main asteroid belt lies between 1.8 and 4.5 AU. By this theory, we can expect to find a similar depleted region inside the orbit of the first giant planet in many planetary systems.

The authors suggest that migration can take two routes, depending on the accretion rate of the embryo within the disk. Planetary embryos between 0.5 and 3 Earth masses avoid inward migration as long as accretion rates in the disk are high. When accretion rates are low, embryos undergo inward migration, though at a slower rate. The researchers find that accretion rates corresponding to a mass-doubling time of less than roughly 60,000 years produce outward migration in objects in the mass range specified.
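To make the two regimes concrete, here is a minimal Python sketch that sorts an embryo into one of them using only the two figures quoted above (the 0.5 to 3 Earth mass range and the roughly 60,000-year mass-doubling time). The function names, the hard cutoff at exactly 60,000 years, and the simple mass-over-time estimate of the accretion rate are illustrative assumptions of mine, not anything taken from the paper, where the transition is surely more gradual and depends on disk conditions.

```python
# Illustrative only: thresholds come from the figures quoted in the text;
# treating them as sharp cutoffs is an assumption made for this sketch.
MASS_RANGE_EARTH = (0.5, 3.0)        # embryo mass range, in Earth masses
MAX_DOUBLING_TIME_YR = 60_000.0      # "roughly 60,000 years"


def mean_accretion_rate(mass_earth: float, doubling_time_yr: float) -> float:
    """Average accretion rate in Earth masses per year.

    Assumes the embryo gains one times its current mass, at a steady
    rate, over the doubling time (a deliberately crude estimate).
    """
    return mass_earth / doubling_time_yr


def migration_regime(mass_earth: float, doubling_time_yr: float) -> str:
    """Classify an embryo using the two quoted thresholds (hypothetical helper)."""
    low, high = MASS_RANGE_EARTH
    if not (low <= mass_earth <= high):
        # The text only describes behavior inside this mass range.
        return "outside quoted mass range"
    if doubling_time_yr < MAX_DOUBLING_TIME_YR:
        return "outward"
    return "inward (slowed)"


if __name__ == "__main__":
    for mass, tau in [(1.0, 30_000.0), (1.0, 100_000.0), (10.0, 30_000.0)]:
        rate = mean_accretion_rate(mass, tau)
        print(f"{mass} Earth masses, doubling in {tau:.0f} yr "
              f"(~{rate:.1e} Earth masses/yr): {migration_regime(mass, tau)}")
```

On this crude estimate, a 3 Earth mass embryo sitting right at the 60,000-year threshold would be accreting about 5 × 10⁻⁵ Earth masses per year on average.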

These two behaviors may help to explain the correlation between giant planets and the heavy metal content (metallicity) of the host star. From the paper:

…since the heating torque scales with the accretion rate and the accretion rate, in turn, scales with the amount of solid content (a proxy of which is the metallicity), protoplanetary disks with larger metallicity will engender planets that can avoid inward migration and grow to become giant planets. In contrast, embryos born in lower-metallicity environments cannot avoid inward migration, leading to results as hitherto found in models of planetary population synthesis, with low yields of giant planets and ubiquitous super-Earths.

The authors note, though, that the relation of super-Earths to host star metallicity is still controversial, and add that they have not performed calculations on embryos forming at very small orbital distances, although heating torque in these regions would likely be high. To learn more about the consequences of heating torque will require more data on protoplanetary disks and the embryos moving within them. A mechanism that explains giant planet formation and associated migration would be a welcome addition to our toolkit for exoplanet research.

The paper is Benítez-Llambay et al., “Planet Heating Prevents Inward Migration of Planetary Cores,” Nature Vol. 520 (2 April 2015). Abstract available. For another take on our Solar System’s seemingly unusual architecture and what explains it, see Batygin and Laughlin, “Jupiter’s decisive role in the inner Solar System’s early evolution,” Proceedings of the National Academy of Sciences, published online February 11, 2015 (abstract). The latter is ably described by Lee Billings in Jupiter, Destroyer of Worlds, May Have Paved the Way for Earth.