Centauri Dreams

Imagining and Planning Interstellar Exploration

Measuring Non-Transiting Worlds

Although I want to move on this morning to some interesting exoplanet news, I’m not through with fusion propulsion, not by a long shot. I want to respond to some of the questions that came in about the British ZETA experiment, and also discuss some of Rod Hyde’s starship ideas as developed at Lawrence Livermore Laboratory in the 1970s. Also on the table is Al Jackson’s work with Daniel Whitmire on a modified Bussard ramjet design augmented by lasers. But I need to put all that off for about a week as I wait for some recently requested research materials to arrive, and also because next week I’m taking a short break, about which more on Monday.

For today, then, let’s talk about an advance in the way we study distant solar systems, for we’re finding ever more ingenious ways of teasing out information about exoplanets we can’t even see. The latest news comes from the study of Tau Boötis b, a ‘hot Jupiter’ circling its primary — a yellow-white dwarf about 20 percent more massive than the Sun — with an orbital period of 3.3 days. An international team has been able to measure the mass of this planet even though it is not a transiting world. The work, published in Nature and augmented with another paper in Astrophysical Journal Letters, opens up a new way to study not just the mass of exoplanets but also their atmospheres, whose signature is a key part of the work.

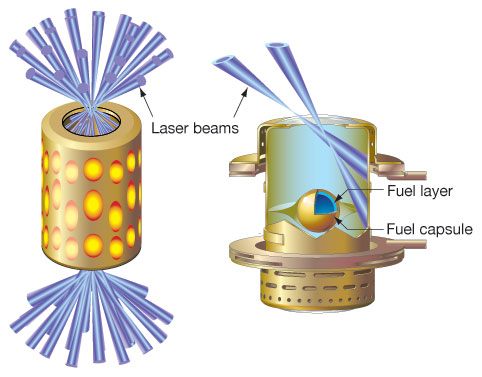

Image: This image of the sky around the star Tau Boötis was created from the Digitized Sky Survey 2 images. The star itself, which is bright enough to be seen with the unaided eye, is at the centre. The spikes and coloured circles around it are artifacts of the telescope and photographic plate used and are not real. The exoplanet Tau Boötis b orbits very close to the star and is completely invisible in this picture. The planet has only just been detected directly from its own light using ESO’s VLT. Credit: ESO/Digitized Sky Survey 2.

Tau Boötis b, which lies 51 light-years away in the constellation of Boötes (the Herdsman), was discovered by Geoff Marcy and Paul Butler in 1996 through radial velocity methods, which measure the gravitational tug of the planet on its host star. Picking up the stellar ‘wobble’ flags the presence of a planet but leaves us with a wide range of mass possibilities, because the inclination of the planet’s orbit to our line of sight is unknown. Thus a massive gas giant whose orbit we see nearly face-on could show the same signature as a smaller planet whose orbit we see edge-on. For that reason, radial velocity on its own has let us set only a lower limit to a planet’s mass.
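To make the angle problem concrete, here is a minimal Python sketch. Radial velocity constrains only the product m sin i, where i is the orbital inclination (90 degrees for an edge-on orbit, 0 for face-on). The 4.1 Jupiter-mass minimum mass below is an illustrative assumption, not a figure taken from the papers; the point is that one and the same wobble fits a whole family of planets.

```python
import math


def true_mass(m_sin_i: float, inclination_deg: float) -> float:
    """Return the true mass given the radial-velocity minimum mass m*sin(i).

    inclination_deg: 90 = orbit seen edge-on, 0 = orbit seen face-on.
    """
    return m_sin_i / math.sin(math.radians(inclination_deg))


# Hypothetical minimum mass in Jupiter masses (illustrative only).
M_SIN_I = 4.1

# One radial-velocity signal, many possible planets.
for inclination in (90, 60, 44, 20):
    mass = true_mass(M_SIN_I, inclination)
    print(f"i = {inclination:2d} deg -> true mass ~ {mass:.1f} Jupiter masses")
```

Only when the inclination is pinned down independently does the loop collapse to a single answer.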

Transits work better for determining mass. A transiting planet must orbit nearly edge-on, which removes most of the uncertainty about the orbital angle, and the lightcurve of the star as its light dips during the transit reveals the planet’s size; adding that to the radial velocity findings lets us work out both mass and radius. We’ve also been able to study exoplanet atmospheres in transiting worlds, looking at the star’s light and separating out the atmospheric signature. But transiting worlds are uncommon, dependent on the chance alignment of the system with our line of sight. What this new work provides is a way to study non-transiting worlds at a higher level of detail, down to investigating the composition of their atmosphere. Future telescopes should be able to apply these techniques far beyond the realm of hot Jupiters like Tau Boötis b.
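The radius half of that statement rests on a simple geometric relation: the fractional dip in starlight is roughly the ratio of the planet’s disk area to the star’s, (Rp/Rs)². Here is a sketch under simplifying assumptions (a uniformly bright stellar disk with no limb darkening, and an opaque planet); the 1 percent transit depth in the example is hypothetical.

```python
import math

R_SUN_KM = 695_700   # nominal solar radius, km
R_JUP_KM = 71_492    # Jupiter's equatorial radius, km


def planet_radius_km(transit_depth: float, star_radius_km: float) -> float:
    """Invert depth ~ (Rp / Rs)**2 to get the planet's radius."""
    return star_radius_km * math.sqrt(transit_depth)


# Hypothetical example: a 1% dip in front of a Sun-sized star.
rp = planet_radius_km(0.01, R_SUN_KM)
print(f"planet radius ~ {rp:,.0f} km ~ {rp / R_JUP_KM:.2f} Jupiter radii")
```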

What Matteo Brogi (Leiden University) and team did was to use the high-resolution CRIRES spectrograph on the Very Large Telescope at the European Southern Observatory’s Paranal Observatory in Chile to study the light from this system and measure the exquisitely fine changes in wavelength caused by the motion of the planet around the star. The observations at near-infrared wavelengths (around 2.3 microns) tracked the signature of carbon monoxide in the planet’s atmosphere, which allowed the researchers to work out the inclination of Tau Boötis b’s orbit (44 degrees). Brogi, lead author of the paper on this work, comments:

“Thanks to the high quality observations provided by the VLT and CRIRES we were able to study the spectrum of the system in much more detail than has been possible before. Only about 0.01% of the light we see comes from the planet, and the rest from the star, so this was not easy.”
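The ‘exquisitely fine changes in wavelength’ follow from the non-relativistic Doppler formula, Δλ = λv/c. A minimal sketch, assuming a carbon monoxide line at 2,300 nm and a planetary velocity amplitude of about 110 km/s along our line of sight (both illustrative values, not quoted from the paper), shows how far a line slides back and forth over part of one 3.3-day orbit. The star’s own lines shift far less, because it moves far more slowly around the common center of mass, which is part of what lets the two sets of lines be told apart.

```python
import math

C_KM_S = 299_792.458  # speed of light, km/s


def doppler_shift_nm(rest_nm: float, velocity_km_s: float) -> float:
    """Non-relativistic Doppler shift: delta_lambda = lambda * v / c (v > 0 = receding)."""
    return rest_nm * velocity_km_s / C_KM_S


REST_NM = 2300.0      # assumed CO line near 2.3 microns
K_PLANET = 110.0      # assumed line-of-sight velocity amplitude of the planet, km/s
PERIOD_DAYS = 3.3     # orbital period quoted in the article

for phase in (0.0, 0.125, 0.25, 0.375, 0.5):
    hours = phase * PERIOD_DAYS * 24
    v = K_PLANET * math.sin(2 * math.pi * phase)  # circular orbit assumed
    shift = doppler_shift_nm(REST_NM, v)
    print(f"t = {hours:5.1f} h  v = {v:7.1f} km/s  shift = {shift:+.3f} nm")
```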

The hope is that the technique can be used in future studies to look for molecules associated with life. We’re a long way from that result, but this first step tells us how exciting the era of the new giant observatories — think the European Extremely Large Telescope and its ilk — is going to be. Tau Boötis b turns out to be about six times as massive as Jupiter, as determined through the displacement of the spectral lines of the detected carbon monoxide, and the team intends to look for other molecules in its atmosphere as the studies continue. Larger instruments will allow us to move far beyond the limited range of transiting worlds to tighten our knowledge of distant exoplanet systems, a step forward in the refinement of radial velocity techniques.
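Here is how a measurement of the planet’s own velocity can turn into a mass, as a sketch under stated assumptions. Star and planet orbit their common center of mass, so the ratio of their velocity amplitudes gives the mass ratio, m_p/M_s = K_s/K_p. Kepler’s third law gives the full orbital speed for the 3.3-day period and a star of about 1.2 solar masses (both figures from this post), and comparing that speed with K_p yields sin i. The two velocity amplitudes (0.46 and 110 km/s) are illustrative assumptions; with rounded inputs like these the results land near, not exactly on, the published six Jupiter masses and 44 degrees.

```python
import math

G_M_SUN = 1.32712440018e20   # solar gravitational parameter, m^3 s^-2
M_SUN_PER_M_JUP = 1047.57    # approximate Sun/Jupiter mass ratio


def orbital_speed_km_s(period_days: float, star_mass_suns: float) -> float:
    """Circular orbital speed from Kepler's third law (planet mass neglected)."""
    period_s = period_days * 86_400
    return (2 * math.pi * G_M_SUN * star_mass_suns / period_s) ** (1 / 3) / 1000


def planet_from_velocities(k_star, k_planet, period_days, star_mass_suns):
    """Return (planet mass in Jupiter masses, inclination in degrees)."""
    mass_ratio = k_star / k_planet  # m_p / M_s from the center-of-mass condition
    mass_mjup = mass_ratio * star_mass_suns * M_SUN_PER_M_JUP
    sin_i = k_planet / orbital_speed_km_s(period_days, star_mass_suns)
    inclination_deg = math.degrees(math.asin(min(sin_i, 1.0)))
    return mass_mjup, inclination_deg


# Assumed amplitudes (km/s); period and stellar mass as given in the article.
mass, inc = planet_from_velocities(0.46, 110.0, 3.3, 1.2)
print(f"planet mass ~ {mass:.1f} Jupiter masses, inclination ~ {inc:.0f} deg")
```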

The paper is Brogi et al., “The signature of orbital motion from the dayside of the planet τ Boötis b,” Nature 486 (28 June 2012), pp. 502-504 (abstract). See also Rodler et al., “Weighing the Non-Transiting Hot Jupiter τ Boo b,” Astrophysical Journal Letters Vol. 753, No. 1 (2012), L25 (abstract). A news release from the European Southern Observatory is also available, as is this release from the Carnegie Institution for Science. Thanks to Antonio Tavani for the pointer to this work.

Fusion and the Starship: Early Concepts

Having looked at the Z-pinch work in Huntsville yesterday, we’ve been kicking around the question of fusion for propulsion and when it made its first appearance in science fiction. The question is still open in the comments section and I haven’t been able to pin down anything in the World War II era, though there is plenty of material to be sifted through. In any case, as I mentioned in the comments yesterday, Hans Bethe was deep into fusion studies in the late 1930s, and I would bet somewhere in the immediate postwar issues of John Campbell’s Astounding we’ll track down the first mention of fusion driving a spacecraft.

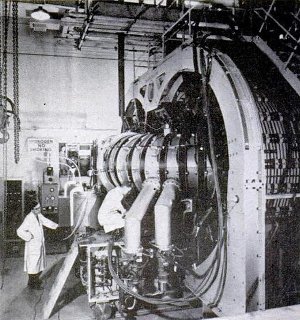

While that enjoyable research continues, the fusion question continues to entice and frustrate anyone interested in pushing a space vehicle. The first breakthrough is clearly going to be right here on Earth, because we’ve been working on making fusion into a power production tool for a long time, the leading candidates for ignition being magnetic confinement fusion (MCF) and inertial confinement fusion (ICF). The former uses magnetic fields to trap and control charged particles within a low-density plasma, while ICF uses laser beams to irradiate a fuel capsule, compressing it into a high-density plasma that holds together for only nanoseconds. To be commercially viable, you have to get a ratio of power out to power in somewhere around 10, much higher than breakeven (a ratio of 1).

Image: The National Ignition Facility at Lawrence Livermore National Laboratory focuses the energy of 192 laser beams on a target in an attempt to achieve inertial confinement fusion. The energy is directed inside a gold cylinder called a hohlraum, which is about the size of a dime. A tiny capsule inside the hohlraum contains atoms of deuterium (hydrogen with one neutron) and tritium (hydrogen with two neutrons) that fuel the ignition process. Credit: National Ignition Facility.
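Why does ‘commercially viable’ sit so far above breakeven? A toy power balance makes the point. The efficiencies below (40 percent for turning fusion heat into electricity, 25 percent for turning electricity into plasma heating) are hypothetical round numbers, not figures for any real plant; with them, the plant only stops consuming more electricity than it makes at a gain of 10.

```python
def net_electric_power(q_gain, heating_power_mw, thermal_eff, driver_eff):
    """Toy power balance for a fusion plant (illustrative only).

    q_gain      : fusion power out / heating power delivered to the plasma
    thermal_eff : fraction of fusion power converted to electricity (assumed)
    driver_eff  : fraction of wall-plug electricity that reaches the plasma (assumed)
    """
    fusion_power = q_gain * heating_power_mw
    electricity_out = thermal_eff * fusion_power
    electricity_in = heating_power_mw / driver_eff
    return electricity_out - electricity_in


# Hypothetical efficiencies chosen only to show the shape of the argument.
THERMAL_EFF, DRIVER_EFF = 0.4, 0.25

for q in (1, 5, 10, 20):
    net = net_electric_power(q, 100.0, THERMAL_EFF, DRIVER_EFF)
    print(f"Q = {q:2d}: net electric power = {net:+.0f} MW")
```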

Kelvin Long gets into all this in his book Deep Space Propulsion: A Roadmap to Interstellar Flight (Springer, 2012), and in fact among the books in my library on propulsion concepts, it’s Long’s that spends the most time with fusion in the near term. The far-term possibilities open up widely when we start talking about ideas like the Bussard ramjet, in which a vehicle moving at a substantial fraction of lightspeed can activate a fusion reaction in the interstellar hydrogen it has accumulated in a huge forward-facing scoop (this assumes we can overcome enormous problems of drag). But you can see why Long is interested — he’s the founding father of Project Icarus, which seeks to redesign the Project Daedalus starship concept created by the British Interplanetary Society in the 1970s.

Seen in the light of current fusion efforts, Daedalus is a reminder of how massive a fusion starship might have to be. This was a vehicle with an initial mass of 54,000 tonnes, which broke down to 50,000 tonnes of fuel and 500 tonnes of scientific payload, with the balance taken up by the vehicle’s structure and engines. The Daedalus concept was to use inertial confinement techniques with pellets of deuterium mixed with helium-3 that would be ignited in the reaction chamber by electron beams. With 250 pellet detonations per second, you get a plasma that can only be managed by a magnetic nozzle, and a staged rocket whose first stage burn lasts two years, while the second stage burns for another 1.8 years. Friedwardt Winterberg’s work was a major stimulus, for it was Winterberg who was able to couple inertial confinement fusion into a drive design that the Daedalus team found feasible.
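A rough feel for what 50,000 tonnes of fuel buys comes from the classical rocket equation, Δv = v_e ln(m0/m_f). This sketch treats Daedalus as a single stage and assumes an exhaust velocity of 10,000 km/s; both simplifications are mine, not the design study’s. Staging, by discarding the spent first stage’s structure, is how a design like Daedalus can beat this single-stage estimate.

```python
import math

C_KM_S = 299_792.458  # speed of light, km/s


def delta_v(exhaust_velocity_km_s: float, initial_mass: float, final_mass: float) -> float:
    """Classical (non-relativistic) Tsiolkovsky rocket equation."""
    return exhaust_velocity_km_s * math.log(initial_mass / final_mass)


# Masses from the article, in tonnes.
M0, FUEL = 54_000, 50_000
# Hypothetical exhaust velocity, for illustration only.
VE = 10_000.0  # km/s

dv = delta_v(VE, M0, M0 - FUEL)
print(f"single-stage delta-v ~ {dv:,.0f} km/s ~ {dv / C_KM_S:.3f} c")
```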

I should mention that the choice of deuterium and helium-3 was one of the constraints of trying to turn fusion concepts into something that would work in the space environment. Deuterium and tritium are commonly used in fusion work here on Earth, but the reaction produces abundant high-energy neutrons, a serious issue given that any manned spacecraft would have to carry adequate shielding for its crew. Shielding means a more massive ship and corresponding cuts to allowable payload. Deuterium and helium-3, on the other hand, produce about one-hundredth the amount of neutrons of deuterium/tritium, and even better, the output of this reaction, whose products are electrically charged, is far more manipulable with a magnetic nozzle. If, that is, we can get the reaction to light up.
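The difference between the two fuels shows up in simple bookkeeping. The sketch below computes the energy released by each reaction from standard tabulated atomic masses, then uses momentum conservation (reactants treated as at rest) to split that energy between the two products. In D-T, the uncharged neutron walks off with roughly 80 percent of the energy; in D/He-3 both products are charged and so can, in principle, be steered by magnetic fields. The sketch ignores the D-D side reactions, which are where a D/He-3 burn’s residual neutrons come from.

```python
U_TO_MEV = 931.494  # energy equivalent of one atomic mass unit, MeV

# Atomic masses in unified atomic mass units (standard tabulated values, rounded).
MASS = {
    "n": 1.008664916,
    "H1": 1.007825032,
    "D": 2.014101778,
    "T": 3.016049281,
    "He3": 3.016029322,
    "He4": 4.002603254,
}


def q_value(reactants, products):
    """Energy released (MeV) from the mass lost between reactants and products."""
    return (sum(MASS[r] for r in reactants) - sum(MASS[p] for p in products)) * U_TO_MEV


def light_product_energy(q, m_light, m_heavy):
    """Two-body split with equal and opposite momenta: the lighter product gets more."""
    return q * m_heavy / (m_light + m_heavy)


q_dt = q_value(["D", "T"], ["He4", "n"])
q_dhe3 = q_value(["D", "He3"], ["He4", "H1"])

neutron_e = light_product_energy(q_dt, MASS["n"], MASS["He4"])
proton_e = light_product_energy(q_dhe3, MASS["H1"], MASS["He4"])

print(f"D-T:   Q = {q_dt:.1f} MeV, neutron carries {neutron_e:.1f} MeV "
      f"({neutron_e / q_dt:.0%}, uncharged)")
print(f"D-He3: Q = {q_dhe3:.1f} MeV, proton carries {proton_e:.1f} MeV "
      f"(all products charged)")
```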

It’s important to note the antecedents to Daedalus, especially the work of Dwain Spencer at the Jet Propulsion Laboratory. As far back as 1966, Spencer had outlined his own thoughts on a fusion engine that would burn deuterium and helium-3 in a paper called “Fusion Propulsion for Interstellar Missions,” a copy of which seems to be lost in the wilds of my office — in any case, I can’t put my hands on it this morning. Suffice it to say that Spencer’s engine used a combustion chamber ringed with superconducting magnetic coils to confine the plasma in a design that he thought could be pushed to 60 percent of the speed of light at maximum velocity.

Spencer envisaged a 5-stage craft that would decelerate into the Alpha Centauri system for operations there. Rod Hyde (Lawrence Livermore Laboratory) also worked with deuterium and helium-3 and adapted the ICF idea using frozen pellets that would be exploded by lasers, hundreds per second, with the thrust being shaped by magnetic coils. You can see that the Daedalus team built on existing work and extended it in their massive starship design. It will be fascinating to see how Project Icarus modifies and extends fusion in its work.

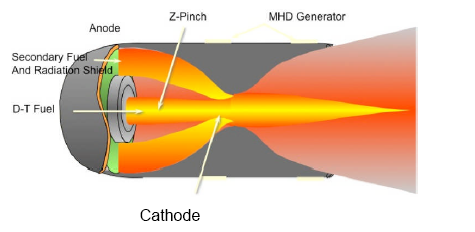

The Z-pinch methods we looked at yesterday are a different way to ignite fusion in which isotopes of hydrogen are compressed by pumping an electrical current into the plasma, thus generating a magnetic field that creates the so-called ‘pinch.’ Jason Cassibry, who is working on these matters at the University of Alabama at Huntsville, is one of those preparing to work with the Decade Module 2 pulsed-power unit that has been delivered to nearby Redstone Arsenal. Let me quote what the recent article in Popular Mechanics said about his thruster idea:

Cassibry says the acceleration of such a thruster wouldn’t pin an astronaut to the back of his seat. During shuttle liftoff, rocket boosters generate a thrust of about 32 million newtons. In contrast, the pulsed-fusion system would generate an estimated 10,000 newtons of thrust. But rocket fuel burns out quickly while pulsed-fusion systems could keep going at a “slow” but steady 24 miles per second. That’s about five times faster than a shuttle drifting around in Earth orbit.

A slow but steady burn like this would be a wonderful accomplishment if it can be delivered in a package light enough to prove adaptable for spaceflight. Given all the background we’ve just examined — and the continuing research into both MCF and ICF for uses here on Earth — I’m glad to see the Decade Module 2 available in Huntsville, even though it may be some time before it can be assembled and the needed work properly funded. Because while we’ve developed theoretical engine designs using fusion, we need to proceed with the kind of laboratory experiments that can move the ball forward. The Z-pinch machine in Huntsville will be a useful step.
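To put the Popular Mechanics figures in perspective, here is a back-of-envelope sketch. The 10,000-newton thrust and the 24 miles per second come from the quoted article; the 100-tonne vehicle mass is purely hypothetical, and the calculation ignores the mass the ship loses as it expels propellant.

```python
G0 = 9.80665                # standard gravity, m/s^2
METRES_PER_MILE = 1609.344

THRUST_N = 10_000                     # thrust figure quoted in the article
SHIP_MASS_KG = 100_000                # hypothetical 100-tonne vehicle
TARGET_SPEED = 24 * METRES_PER_MILE   # 24 miles per second, in m/s

accel = THRUST_N / SHIP_MASS_KG       # Newton's second law, a = F / m
days_to_target = TARGET_SPEED / accel / 86_400

print(f"acceleration ~ {accel:.2f} m/s^2 ~ {accel / G0:.3f} g")
print(f"time to reach 24 mi/s ~ {days_to_target:.1f} days (constant mass assumed)")
```

A hundredth of a gravity is indeed no seat-pinning experience, but sustained for days it adds up to speeds no chemical rocket can hold.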

Z-Pinch: Powering Up Fusion in Huntsville

The road to fusion is a long slog, a fact that began to emerge as early as the 1950s. It was then that the ZETA — Zero-Energy Toroidal (or Thermonuclear) Assembly — had pride of place as the fusion machine of the future, or so scientists working on the device in the UK thought. A design based on a confinement technique called Z-pinch (about which more in a moment), ZETA began operations in 1957 and soon produced bursts of neutrons, which were taken to flag fusion reactions in an apparent sign that the UK had taken the lead over fusion efforts in the US.

This was major news in its day and it invigorated a world looking for newer, cheaper sources of power, but sadly, the results proved bogus, the neutrons being byproducts of instabilities in the system and not the result of fusion at all. Fusion has had public relations problems ever since, always the power source of the future and always just a decade or two away from realization. But of course, we learn from such errors, and refined pinch concepts — in which electric current induced into a plasma causes (via the Lorentz force) the plasma to pinch in on itself, compressing it to fusion conditions — continue to be explored.

Image: The ZETA device at Harwell. The toroidal confinement tube is roughly centered, surrounded by a series of stabilizing magnets (silver rings). The much larger peanut-shaped device is the magnet used to induce the pinch current in the tube. Credit: Wikimedia Commons.

In today’s Z-pinch work, current to the plasma is provided by a large bank of capacitors, creating the magnetic field that causes the plasma to be pinched into a smaller cylinder to reach fusion conditions (the axis of current flow is called the z axis, hence the name). Here we can think of two major facilities used in nuclear weapons effects testing in the defense industry as well as in fusion energy research: The Z Machine located at Sandia National Laboratories in New Mexico and the MAGPIE pulsed power generator at Imperial College, London. And lately a Z-pinch machine called a Decade Module Two has made the news.
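Why does a Z-pinch need such a large capacitor bank? The classic Bennett relation for an idealized pinch in equilibrium, μ0 I² = 8π N k (T_e + T_i), ties the current I to the number of particles per unit length N and their temperatures. This minimal sketch assumes a hypothetical plasma column holding 10^19 ions per meter at 10 keV; real pinches are dynamic and prone to the instabilities that undid ZETA, so treat the result as an order of magnitude only.

```python
import math

MU0 = 4e-7 * math.pi        # vacuum permeability, H/m (classical value)
EV_TO_J = 1.602176634e-19   # joules per electronvolt


def bennett_current(line_density_per_m: float, t_e_ev: float, t_i_ev: float) -> float:
    """Current (A) that holds an idealized Z-pinch in equilibrium.

    Bennett relation: mu0 * I**2 = 8 * pi * N * k * (T_e + T_i),
    with N particles per meter and temperatures given in eV.
    """
    thermal_energy_per_m = line_density_per_m * (t_e_ev + t_i_ev) * EV_TO_J
    return math.sqrt(8 * math.pi * thermal_energy_per_m / MU0)


# Hypothetical plasma column: 1e19 ions per meter at 10 keV.
current = bennett_current(1e19, 10_000, 10_000)
print(f"required pinch current ~ {current / 1e6:.2f} MA")
```

Because the current scales with the square root of N times T, a hotter or denser column quickly pushes the requirement into the multi-megaampere range that only large pulsed-power banks can deliver.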

As explained by an article in The Huntsville Times back in May, researchers at Marshall Space Flight Center and their colleagues at the University of Alabama at Huntsville will have a Decade Module Two (DM2) at their disposal once they finish the process of unloading and assembling the house-sized installation at Redstone Arsenal. Originally designed as one of several modules to be used in radiation testing, the DM2 was the prototype for a larger machine, but even this smaller version required quite an effort to move, as can be seen in this Request for Proposal issued back in August of 2011, which laid out the dismantling and move from L-3 Communications Titan Corporation in San Leandro, CA.

The DM2 got a brief spike in the press as news of the acquisition became available, but note that the 60-foot machine is not expected to be powered up any earlier than 2013 and will not reach break-even fusion when it does. But this pulsed-power facility should be quite useful as engineers test Z-pinch fusion techniques and nozzle systems that would allow the resulting plasma explosions to be directed into a flow of thrust to propel a spacecraft. Z-pinch and related ignition methods have been under study for decades, but Huntsville’s DM2 may move us a step closer to learning whether pulsed fusion propulsion will be practical.

Image: Z-pinch engine concept. Credit: NASA.

The fusion power effort includes not just MSFC and UAH but Boeing as well. Jason Cassibry (UAH) describes its ultimate goal as a lightweight pulsed fusion system that could cut the travel time to Mars down to six to eight weeks, and he’s quoted in this story in Popular Mechanics as saying that “The time is perfect to reevaluate fusion for space propulsion.” Working the kinks out of Z-pinch would be a major contribution to that effort, and we can hope the DM2 installation may lead to the design and testing of actual thrusters, though right now we’re still early in the process.

The Popular Mechanics article goes on to explain Z-pinch’s propulsion possibilities this way:

Cassibry imagines attaching a large reactor on the back of a human transport vessel. Similar to how the piston of a car compresses fuel and air in the engine, the reactor would use electrical and magnetic currents to compress hydrogen gas. That compression raises temperatures within the reactor up to 100 million degrees C—hot enough to strip the electrons off of hydrogen atoms, create a plasma, and fuse two hydrogen nuclei together. In the process of fusing, the atoms release more energy, which keeps the reactor hot and causes more hydrogen to fuse and release more energy. (These reactions occur about 10 times per second, which is why it’s “pulsed.”) A nozzle in the reactor would allow some of the plasma to rush outward and propel the spacecraft forward.

There aren’t many machines that can produce the power demanded by pulsed fusion experiments, and Centauri Dreams wishes the DM2 team success not only at the tricky business of Z-pinch fusion experimentation but also at the even trickier challenge of funding deep space propulsion research. None of that will be easy, but if we can get to the stage of testing a magnetic nozzle for a pulsed fusion engine using the DM2 we’ll be making serious headway. Right now the work proceeds one step at a time, the first being to get the DM2 up and running.

Uses of a Forgotten Cluster

Astronomical surprises can emerge close to home, close in terms of light years and close in terms of time. Take NGC 6774, an open cluster of stars also known as Ruprecht 147 in the direction of Sagittarius. In astronomical terms, it’s close enough — at 800 to 1000 light years — to be a target for binoculars in the skies of late summer. In chronological terms, the cluster has had a kind of rebirth in our astronomy. John Herschel identified it in 1830, calling it ‘a very large straggling space full of loose stars’ and including it in the General Catalog of astronomical objects.

But NGC 6774 remained little studied, and it took a more intensive look by Jaroslav Ruprecht in the 1960s to give the cluster both a new name and a firmer identity. This loose group of stars had long been thought to be an asterism, a chance alignment of stars that when seen from the Earth gave the impression of being a cluster. Ruprecht realized this was no asterism, and now new work with the MMT telescope in Arizona and the Canada-France-Hawaii Telescope on Mauna Kea is telling us that this cluster is, at about 2.5 billion years old, about half the age of the Sun. In fact, it’s about the age the Sun was when multicellular life began to emerge on our planet’s surface.

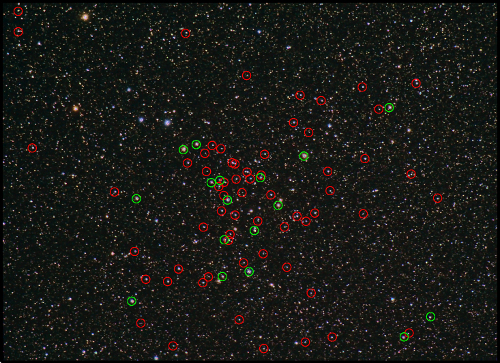

Image: Penn State University astronomers have determined that 80 of the stars in this photo are members of the long-known but underappreciated star cluster Ruprecht 147. In this image, the brightest of these stars are circled in green, and the less-bright ones are circled in red. These stars were born out of the same cloud of gas and dust approximately 2 billion years ago, and now are traveling together through space, bound by the force of gravity. The astronomers have identified this cluster as a potentially important new reference gauge for fundamental stellar astrophysics. Credit: Chris Beckett and Stefano Meneguolo, Royal Astronomical Society of Canada. Annotations by Jason Curtis, Penn State University.

Jason Wright (Penn State University) has been working on NGC 6774 with graduate student Jason Curtis, who will present the new findings at an upcoming conference in Barcelona, the 17th Cambridge Workshop on Cool Stars, Stellar Systems, and the Sun. Wright sees the significance of the cluster in terms of exoplanets and the stars that host them:

“The Ruprecht 147 cluster is very unusual and very important astrophysically because it is close to Earth and its stars are closer to the Sun’s age than those in all the other nearby clusters. For the first time, we now have a useful laboratory in which to search for and study bright stars that are of similar mass and also of similar age as the Sun. When we discover planets around Sun-like and lower-mass stars, we will be able to interpret how old those stars are by comparing them to the stars in this cluster.”

Most of the other nearby clusters are much younger than the Sun, making such comparisons less helpful, and it is the work of Wright’s team that has established the cluster’s age as well as its distance from Sol. These observations take in the directions and velocities of the stars in the cluster, showing that they are indeed moving together through space and are not a random pattern in the sky. Thus far 100 stars have been identified as part of the cluster but as the project continues, more are expected to be found. All told, NGC 6774 may become what Wright calls “a standard gauge in fundamental stellar astrophysics,” a helpful measuring stick in our neighborhood that can help us tighten up our age estimates of Sun-like, low-mass stars.
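The co-moving test can be sketched in a few lines. Stars born together share a common space velocity, so one simple approach is to take the median velocity of a candidate group and keep the stars that lie close to it. Everything below is hypothetical: the velocities are invented, and the 2 km/s tolerance is an arbitrary choice. Real membership work uses measured proper motions and radial velocities with their uncertainties; the median is used here only because it resists the pull of unrelated field stars.

```python
import math
from statistics import median

# Hypothetical space velocities (km/s) for stars in one patch of sky; values are invented.
STARS = {
    "star_1": (-12.1, -18.9, 40.9),
    "star_2": (-11.8, -19.3, 41.3),
    "star_3": (-12.4, -18.6, 40.6),
    "star_4": (-12.0, -19.1, 41.0),
    "star_5": (25.3, 4.2, -10.7),   # field star on its own path
    "star_6": (-3.5, -30.2, 12.4),  # another unrelated star
}


def comoving_members(stars, tolerance_km_s=2.0):
    """Return names of stars whose velocity lies within tolerance of the median velocity."""
    centre = tuple(median(v[axis] for v in stars.values()) for axis in range(3))
    return sorted(
        name for name, velocity in stars.items()
        if math.dist(velocity, centre) <= tolerance_km_s
    )


print(comoving_members(STARS))
```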

The NGC 6774 work has been submitted to the Astronomical Journal. This Penn State news release has more.

Celestial Spectacle: Planets in Tight Orbits

I’ve always had an interest in old travel books. A great part of the pleasure of these journals of exploration lies in their illustrations, sketches or photographs of landscapes well out of the reader’s experience, like Victoria Falls or Ayers Rock or the upper reaches of the Amazon. Maybe someday we’ll have a travel literature for exoplanets, but until that seemingly remote future, we’ll have to use our imagination to supply the visuals, because these are places that in most cases we cannot see and in the few cases when we can, we see them only as faint dots.

None of that slows me down because imagined landscapes can also be awe-inspiring. This morning I’m thinking about what it must be like on the molten surface of the newly discovered world Kepler-36b, a rocky planet 1.5 times the size of Earth and almost 5 times as massive. This is not a place to look for life — certainly not life as we know it — for it orbits its primary every 14 days at a scant 17.5 million kilometers. But if we could see the view from its surface, we would see another planet, a gaseous world, sometimes appearing three times larger than the Moon appears from Earth.

That may sound like the view from a moon of a larger planet, but in this case we have two planets orbiting closer to each other than any planets we’ve found elsewhere. The larger planet, Kepler-36c, is a Neptune-class world about 3.7 times the size of the Earth and 8 times as massive. While the inner world orbits 17.5 million kilometers from its star, Kepler-36c takes up a position a little over 19 million kilometers out, making for a close orbital pass indeed. Conjunctions occur every 97 days on average, at which point no more than about 5 Earth-Moon distances separate the two worlds. Now that would make for quite an image, but rather than just sketching a huge planet in the sky, the University of Washington’s Eric Agol, one of the researchers on this work, decided to show the unknown in terms of the familiar, as below:

Image: Sleepless in Seattle? This view might keep you up for a while. Adapted by Eric Agol of the UW, it depicts the view one might have of a rising Kepler-36c (represented by a NASA image of Neptune) if Seattle (shown in a skyline photograph by Frank Melchior, frankacaba.com) were placed on the surface of Kepler-36b. Credit: Eric Agol/UW.
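The ‘three times larger than the Moon’ figure is easy to check with the article’s own numbers. This sketch assumes Kepler-36c’s radius is 3.7 times Earth’s and that, at conjunction, it lies about five Earth-Moon distances away; it measures distances center to center and ignores the planet’s phase.

```python
import math

EARTH_RADIUS_KM = 6_371.0
MOON_RADIUS_KM = 1_737.4
EARTH_MOON_KM = 384_400.0


def angular_diameter_deg(radius_km: float, distance_km: float) -> float:
    """Angular diameter of a sphere seen from a given center-to-center distance."""
    return math.degrees(2 * math.asin(radius_km / distance_km))


moon = angular_diameter_deg(MOON_RADIUS_KM, EARTH_MOON_KM)
# Article figures: radius 3.7 Earth radii, ~5 Earth-Moon distances at conjunction.
kepler_36c = angular_diameter_deg(3.7 * EARTH_RADIUS_KM, 5 * EARTH_MOON_KM)

print(f"Moon seen from Earth: {moon:.2f} deg")
print(f"Kepler-36c seen from Kepler-36b: {kepler_36c:.2f} deg "
      f"(about {kepler_36c / moon:.1f} times the Moon)")
```

With these rounded inputs the ratio comes out a little under three.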

We can only imagine the kind of gravitational effects the two planets are having on each other. It’s interesting to see as well the powerful uses the researchers, who report their work in Science Express this week, have made of asteroseismology, as noted in this news release from the Harvard-Smithsonian Center for Astrophysics. The sound waves trapped inside Sun-like stars set up oscillations that an instrument like Kepler or CoRoT can measure. Bill Chaplin (University of Birmingham, UK), a co-author of the paper on this work, says this:

“Kepler-36 shows beautiful oscillations. By measuring the oscillations we were able to measure the size, mass and age of the star to exquisite precision. Without asteroseismology, it would not have been possible to place such tight constraints on the properties of the planets.”
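Asteroseismology earns its place because two readily measured quantities, the frequency of maximum oscillation power (ν_max) and the spacing between overtones (Δν), scale with a star’s surface gravity and mean density. The standard scaling relations below turn them into a radius and mass; published analyses usually go further and fit individual oscillation frequencies with stellar models, so take this as a first-pass sketch. The solar reference values are commonly used approximations, and the input frequencies in the example are hypothetical, chosen to resemble a slightly evolved star rather than taken from the Kepler-36 paper.

```python
# Solar reference values commonly used with the scaling relations (approximate).
NU_MAX_SUN = 3090.0   # microhertz, frequency of maximum oscillation power
DELTA_NU_SUN = 135.1  # microhertz, large frequency separation
TEFF_SUN = 5777.0     # kelvin


def scaling_radius_mass(nu_max: float, delta_nu: float, teff: float):
    """Standard asteroseismic scaling relations; returns (radius, mass) in solar units."""
    a = nu_max / NU_MAX_SUN
    b = delta_nu / DELTA_NU_SUN
    t = teff / TEFF_SUN
    radius = a * b ** -2 * t ** 0.5
    mass = a ** 3 * b ** -4 * t ** 1.5
    return radius, mass


def mean_density(delta_nu: float) -> float:
    """Delta-nu scales as the square root of mean density (solar units)."""
    return (delta_nu / DELTA_NU_SUN) ** 2


# Hypothetical inputs for a slightly evolved, roughly solar-mass star.
radius, mass = scaling_radius_mass(nu_max=1280.0, delta_nu=69.0, teff=5900.0)
print(f"R ~ {radius:.2f} R_sun, M ~ {mass:.2f} M_sun, "
      f"mean density ~ {mean_density(69.0):.2f} of solar")
```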

The more we know about the parent star, in other words, the better we can interpret the lightcurves we are gathering as we observe planetary transits. The pattern we’re familiar with in our own Solar System — rocky planets closer to the Sun, gas giants in the outer system — breaks dramatically here with two worlds of different compositions and densities in remarkably tight orbits. At Iowa State University, researcher Steve Kawaler was on the team that worked on these data. Kawaler describes the situation in a news release from the university:

“Small, rocky planets should form in the hot part of the solar system, close to their host star – like Mercury, Venus and Earth in our Solar System. Bigger, less dense planets – Jupiter, Uranus – can only form farther away from their host, where it is cool enough for volatile material like water ice, and methane ice to collect. In some cases, these large planets can migrate close in after they form, during the last stages of planet formation, but in so doing they should eject or destroy the low-mass inner planets.

“Here, we have a pair of planets in nearby orbits but with very different densities. How they both got there and survived is a mystery.”

Indeed, we have two planets whose densities differ by a factor of eight in orbits that differ by a mere 10 percent, offering a real challenge to current theories of planet formation and migration. The star, Kepler-36a, is about as massive as the Sun but only about 25 percent as dense, and it has somewhat lower metallicity. Researchers believe it is several billion years older than our star and has entered a sub-giant phase to attain a radius about 60 percent greater than the Sun’s.
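The density contrast follows from mean density scaling as mass divided by radius cubed. This sketch plugs in the rounded figures from this post (Earth units for the planets, solar units for the star), taking ‘almost 5’ Earth masses as 5. Because the radius enters cubed, rounding matters: these inputs give a ratio nearer nine than eight, and the factor of eight rests on the paper’s more precise values. The star comes out at roughly a quarter of the Sun’s density, consistent with the 25 percent quoted above.

```python
EARTH_DENSITY_G_CM3 = 5.51  # Earth's mean density


def relative_density(mass: float, radius: float) -> float:
    """Mean density relative to a reference body: (M / M_ref) / (R / R_ref)**3."""
    return mass / radius ** 3


inner = relative_density(5.0, 1.5)  # Kepler-36b: "almost 5" Earth masses, 1.5 Earth radii
outer = relative_density(8.0, 3.7)  # Kepler-36c: 8 Earth masses, 3.7 Earth radii
star = relative_density(1.0, 1.6)   # Kepler-36a: ~1 solar mass, radius ~60% larger

print(f"Kepler-36b ~ {inner * EARTH_DENSITY_G_CM3:.1f} g/cm^3")
print(f"Kepler-36c ~ {outer * EARTH_DENSITY_G_CM3:.1f} g/cm^3")
print(f"density ratio b/c ~ {inner / outer:.1f}")
print(f"Kepler-36a ~ {star:.0%} of the Sun's mean density")
```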

We can hope that extreme systems like this can help us refine our thinking on planetary migration and its effects. The planets in this system, some 1200 light years from Earth in the constellation Cygnus, are puzzling but doubtless not alone in representing unusual configurations of the kind we’ll see more of as we continue to sift the Kepler results. The paper is Carter et al., “Kepler-36: A Pair of Planets with Neighboring Orbits and Dissimilar Densities,” published online in Science Express June 21, 2012 (abstract). See also this news release from the University of Washington.

Robotics: Anticipating Asimov

My friend David Warlick and I were having a conversation yesterday about what educators should be doing to anticipate the technological changes ahead. Dave is a specialist in using technology in the classroom and lectures all over the world on the subject. I found myself saying that as we moved into a time of increasingly intelligent robotics, we should be emphasizing many of the same things we’d like our children to know as they raise their own families. Because a strong background in ethics, philosophy and moral responsibility is something they will have to bring to their children, and these are the same values we’ll want to instill into artificial intelligence.

The conversation inevitably summoned up Asimov’s Three Laws of Robotics, first set out in a 1942 science fiction story (‘Runaround,’ in the March issue of Astounding Science Fiction) and later the basic principles of all his stories about robots. In case you’re having trouble remembering them, here are the Three Laws:

- A robot may not injure a human being or, through inaction, allow a human being to come to harm.

- A robot must obey the orders given to it by human beings, except where such orders would conflict with the First Law.

- A robot must protect its own existence as long as such protection does not conflict with the First or Second Laws.

Asimov is given credit for these laws but was quick to acknowledge that it was through a conversation with science fiction editor John Campbell in 1940 that the ideas within them fully crystallized, so we can in some ways say that they were a joint creation. As Dave and I talked, I was also musing about the artificial intelligence aboard the Alpha Centauri probe in Greg Bear’s Queen of Angels (1990), which runs into existential issues that force it into an ingenious solution, one it could hardly have been programmed to anticipate.

We are a long way from the kind of robotic intelligence that Asimov depicts in his stories, but interesting work out of Cornell University (thanks to Larry Klaes for the tip) points to the continued growth in that direction. At Cornell’s Personal Robotics Lab, researchers have been working out how to model the relationship between people and the objects they use. Can a robot arrange a room in a way that would be optimal for humans? To make it possible, the robot would need to have a basic sense of how people relate to things like furniture and gadgets.

It should be easy enough for a robot to measure the distances between objects in a room and to arrange furniture, but people are clearly the wild card. What the Cornell researchers are doing is teaching the robots to imagine where people might stand or sit in a room so that they can arrange objects in ways that support human activity. Earlier work in this field was based on developing a model that showed the relationship between objects, but that didn’t factor in patterns of human use. A TV remote might always be near a TV, for example, but if a robot located it directly behind the set, the people in the room might have trouble finding it.

Here’s the gist of the idea as expressed in a Cornell news release:

Relating objects to humans not only avoids such mistakes but also makes computation easier, the researchers said, because each object is described in terms of its relationship to a small set of human poses, rather than to the long list of other objects in a scene. A computer learns these relationships by observing 3-D images of rooms with objects in them, in which it imagines human figures, placing them in practical relationships with objects and furniture. You don’t put a sitting person where there is no chair. You can put a sitting person on top of a bookcase, but there are no objects there for the person to use, so that’s ignored. The computer calculates the distance of objects from various parts of the imagined human figures, and notes the orientation of the objects.
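The feature idea in that description can be sketched in Python. This is not the Cornell lab’s model; the `Pose` class, the choice of body parts and the orientation score are all hypothetical simplifications in a flat, two-dimensional room. The sketch computes the distance from an object to parts of an imagined person and a simple measure of whether the object faces that person.

```python
import math
from dataclasses import dataclass


@dataclass
class Pose:
    """Hypothetical imagined human: 2-D positions (meters) of a few body parts
    and the direction the person faces, in radians."""
    head: tuple
    hand: tuple
    facing: float


def human_context_features(obj_xy, obj_facing, pose):
    """Distances from an object to imagined body parts, plus an alignment score:
    1 = the object faces straight back at the person, -1 = it faces away."""
    d_head = math.dist(obj_xy, pose.head)
    d_hand = math.dist(obj_xy, pose.hand)
    # An object faces the person when it points opposite to the person's facing direction.
    alignment = math.cos(obj_facing - (pose.facing + math.pi))
    return d_head, d_hand, alignment


seated = Pose(head=(0.0, 0.0), hand=(0.3, -0.2), facing=0.0)   # person looks along +x
toward = human_context_features((0.6, 0.0), math.pi, seated)   # screen turned toward them
away = human_context_features((0.6, 0.0), 0.0, seated)         # screen turned away
print(toward, away)
```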

Image: Above left, random placing of objects in a scene puts food on the floor, shoes on the desk and a laptop teetering on the top of the fridge. Considering the relationships between objects (upper right) is better, but the laptop is facing away from a potential user and the food higher than most humans would like. Adding human context (lower left) makes things more accessible. Lower right: how an actual robot carried it out. Credit: Personal Robotics Lab.

The goal is for the robot to learn the constants of human behavior and thus figure out how humans use space. The work involves images of various household spaces like living rooms and kitchens, with the robots programmed to move things around within those spaces using a variety of algorithms. In general, factoring in human context made the placements more accurate than working just with the relationships between objects, but the best results came from combining human context with object-to-object programming, as shown in the above image.
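Combining the two kinds of evidence can be sketched as a weighted blend of scores. The candidate spots, their scores and the equal weighting are all invented for illustration, and the lab’s actual method is more sophisticated; the point is only that a spot which is fine by object-to-object rules (a laptop on a desk) can still lose if it ignores the person (a laptop facing the wall).

```python
def placement_score(human_term: float, object_term: float, weight: float = 0.5) -> float:
    """Blend a human-context score with an object-to-object score (both in [0, 1])."""
    return weight * human_term + (1 - weight) * object_term


# Hypothetical candidate spots for a laptop: (human-context score, object score).
CANDIDATES = {
    "top of fridge": (0.1, 0.2),
    "desk, facing the chair": (0.9, 0.7),
    "desk, facing the wall": (0.3, 0.7),
    "floor": (0.2, 0.1),
}

best = max(CANDIDATES, key=lambda spot: placement_score(*CANDIDATES[spot]))
print(best)
```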

We’re a long way from Asimov’s Three Laws, not to mention the brooding AI of the Greg Bear novel. But it’s fascinating to watch the techniques of robotic programming emerge because what Cornell is doing is probing how robots and humans will ultimately interact. These issues are no more than curiosities at the moment, but as we learn to work with smarter machines — including those that begin to develop a sense of personal awareness — we’re going to be asking the same kind of questions Asimov and Campbell did way back in the 1940s, when robots seemed like the wildest of science fiction but visionary writers were already imagining their consequences.