Centauri Dreams

Imagining and Planning Interstellar Exploration

A Dark Energy Option Challenged

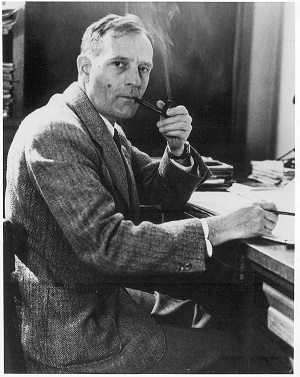

Having a constant named after you ensures a hallowed place in astronomical history, and we can assume that Edwin Hubble would have been delighted with our continuing studies of the constant that bears his name. It was Hubble who showed that the velocity of distant galaxies as measured by their Doppler shift is proportional to their distance from the Earth. But what would the man behind the Hubble Constant have made of the ‘Hubble Bubble’? It’s based on the idea that our region of the cosmos is surrounded by a bubble of relatively empty space, a bubble some eight billion light years across that helps account for our observations of the universe’s expansion.

The theory goes something like this: We assume that the Hubble Constant should be the same no matter where it is measured, because we make the larger assumption that our planet does not occupy a special position in the universe. But suppose that’s wrong, and that the Earth is near the center of a region of extremely low density. If that’s the case, then denser material outside that void would pull material away from the center. What we would see would be galaxies receding from us at a rate faster than the more general expansion of the universe.

The Hubble Bubble is an ingenious notion, one of the ideas advanced as an alternative to dark energy to explain why the expansion of the universe seems to be accelerating. If you had to choose between a Hubble Bubble and a mysterious dark energy that worked counter to gravity, the Bubble would seem the safer choice, given that it doesn’t conjure up a new form of energy. But the observational evidence for the Bubble is lacking, and now the idea has been hobbled by new work from Adam Riess (Space Telescope Science Institute) and colleagues.

Deflating the Bubble

Riess used data from the new Wide Field Camera 3 (WFC3) aboard the Hubble Space Telescope to measure the Hubble Constant to a greater precision than ever before. The value the team arrived at, 73.8 kilometers per second per megaparsec, means that for every additional megaparsec (3.26 million light years) a galaxy is from Earth, it appears to be moving 73.8 kilometers per second faster away from us. The uncertainty in this figure for the universe’s expansion rate is now just 3.3 percent, a 30 percent reduction in the error margin compared with the previous Hubble measurement in 2009.
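The relation in the paragraph above is Hubble’s law, v = H0 × d. The minimal Python sketch below plugs in the team’s 73.8 km/s/Mpc and the rounded 3.26-million-light-year megaparsec quoted above. The function name is our own, chosen for illustration.

```python
# Hubble's law: apparent recession velocity v = H0 * d
H0 = 73.8            # km/s per megaparsec (value reported by Riess et al. 2011)
LY_PER_MPC = 3.26e6  # light years per megaparsec (rounded, as in the text)


def recession_velocity(distance_mpc: float, h0: float = H0) -> float:
    """Return the apparent recession velocity in km/s for a distance in megaparsecs."""
    return h0 * distance_mpc


for d in (1, 10, 100):
    ly = d * LY_PER_MPC
    print(f"{d:>4} Mpc ({ly:.3g} ly): {recession_velocity(d):,.1f} km/s")
```

Each additional megaparsec adds 73.8 km/s. A galaxy 100 megaparsecs away therefore appears to recede at about 7,380 km/s.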

The new precision is thanks to Wide Field Camera 3, which lets the scientists study a wider range of stars with a single instrument, eliminating the systematic errors introduced by comparing measurements from different telescopes. By comparing the apparent brightness of Cepheid variable stars and Type Ia supernovae, the team calibrated the supernovae’s intrinsic brightness and used it to calculate the distances to Type Ia supernovae in more distant galaxies. Riess calls WFC3 the best camera ever flown on Hubble for such measurements, adding that it improved “…the precision of prior measurements in a small fraction of the time it previously took.” Further WFC3 work should tighten the Constant even more, and even better numbers should be within range of the James Webb Space Telescope.
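Once the Cepheids have calibrated a supernova’s intrinsic brightness (its absolute magnitude M), the distance follows from the standard distance modulus, m − M = 5 log10(d / 10 pc). The minimal sketch below inverts that relation. The absolute magnitude of −19.3 is an approximate, commonly quoted value for Type Ia supernovae and is used here only as an assumption. The apparent magnitude is a made-up example, not data from the paper.

```python
import math


def distance_parsecs(apparent_mag: float, absolute_mag: float) -> float:
    """Invert the distance modulus m - M = 5*log10(d / 10 pc) to get d in parsecs."""
    return 10 ** ((apparent_mag - absolute_mag + 5) / 5)


M_SN_IA = -19.3  # assumed calibrated peak absolute magnitude (illustrative)
m_obs = 16.0     # hypothetical observed peak apparent magnitude

d_pc = distance_parsecs(m_obs, M_SN_IA)
print(f"distance ≈ {d_pc / 1e6:.0f} Mpc")

# Sanity check: the distance modulus recovers the observed magnitude
assert math.isclose(M_SN_IA + 5 * math.log10(d_pc / 10), m_obs)
```

Any error in the calibrated M shifts every supernova distance by the same factor. That is why tightening the Cepheid step matters so much for the final value of the Constant.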

This work is significant because as we tighten our knowledge of the universe’s expansion rate, we restrict the range of dark energy’s strength. The Bubble theory arose because scientists were looking for ways around a dark energy that opposed gravity. But the consequences of a Hubble Bubble are clear — if it’s there, the universe’s expansion rate must be slower than astronomers have calculated, with the lower-density bubble expanding faster than the more massive universe that surrounds it. Riess and team have tightened the Hubble Constant to the point where this lower value — 60 to 65 kilometers per second per megaparsec — is no longer tenable.
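How far is the bubble’s preferred range from the new value? The rough check below treats the 3.3 percent uncertainty as a single Gaussian standard deviation. That is a simplifying assumption on our part, since the paper’s error budget combines several terms.

```python
H0 = 73.8              # km/s/Mpc, new measurement
SIGMA = 0.033 * H0     # 3.3 percent uncertainty, treated here as one standard deviation


def sigmas_below(h0_alt: float) -> float:
    """How many standard deviations h0_alt lies below the measured H0."""
    return (H0 - h0_alt) / SIGMA


for h0_alt in (60.0, 65.0):
    print(f"H0 = {h0_alt:.0f}: {sigmas_below(h0_alt):.1f} sigma below 73.8")
```

Under that simplification, even the top of the 60 to 65 range lands roughly 3.6 standard deviations below the measured value.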

Lucas Macri (Texas A&M University), who collaborated with Riess, notes the importance of the study:

“The hardest part of the bubble theory to accept was that it required us to live very near the center of such an empty region of space. This has about a one in a million chance of occurring. But since we know that something weird is making the universe accelerate, it’s better to let the data be our guide.”

Riess, you may recall, is one of the co-discoverers of the universe’s accelerating expansion, having demonstrated that distant Type Ia supernovae were dimmer than they ought to be, an indication of additional distance that had to be the result of faster-than-expected expansion. Meanwhile, it’s extraordinary to realize how much the Hubble Space Telescope has helped us pin down the value of the Hubble Constant, which had seen estimates varying by a factor of two before the telescope’s 1990 launch. This NASA news release points out that by 1999, the Hubble telescope had refined the value of the Hubble Constant to an error of about ten percent. Riess’ new work continues the 80-year effort to measure this critical value and promises more to come.

The paper is Riess et al., “A 3% Solution: Determination of the Hubble Constant with the Hubble Space Telescope and Wide Field Camera 3,” Astrophysical Journal Vol. 730, Number 2 (April 1, 2011). Abstract available.

Fukushima: Reactors and the Public

All weekend long, as the dreadful news and heart-wrenching images from Japan kept coming in, I wondered how press coverage of the nuclear reactor situation would be handled. The temptation seemed irresistible to play the story for drama and maximum fear, citing catastrophic meltdowns, invoking Chernobyl and even Hiroshima, along with dire predictions about the future of nuclear power. My first thought was that the Japanese reactors were going to have the opposite effect from the one many in the media suppose. By showing that nuclear plants can survive so massive an event, they’ll demonstrate that nuclear power remains a viable option.

This is an important issue for the Centauri Dreams readership not just in terms of how we produce energy for use here on Earth, but because nuclear reactors are very much in play in our thinking about future deep space missions. Thus the public perception of nuclear reactors counts, and I probably don’t have to remind any of you that when the Cassini orbiter was launched toward Saturn, it was against a background of protest directed at its three radioisotope thermoelectric generators (RTGs), which use plutonium-238 to generate electricity. A similar RTG-carrying mission will doubtless meet the same kind of response.

What the public thinks about nuclear power, then, has a bearing on how we proceed both on Earth and in space. So how are the media doing so far with what is happening in Japan? Josef Oehmen, an MIT research scientist, has some thoughts on the matter. In an essay published yesterday by Jason Morgan, an English teacher and earthquake survivor who blogs from Japan, Oehmen gives us his initial reaction:

I have been reading every news release on the incident since the earthquake. There has not been one single (!) report that was accurate and free of errors (and part of that problem is also a weakness in the Japanese crisis communication). By “not free of errors” I do not refer to tendentious anti-nuclear journalism – that is quite normal these days. By “not free of errors” I mean blatant errors regarding physics and natural law, as well as gross misinterpretation of facts, due to an obvious lack of fundamental and basic understanding of the way nuclear reactors are built and operated. I have read a 3 page report on CNN where every single paragraph contained an error.

Reactor Basics and the Fukushima Story

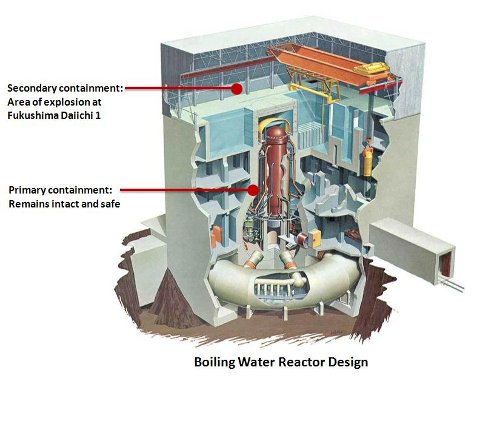

What to do? Oehmen proceeds to go through the basics about nuclear reactors and in particular those at Fukushima, which use uranium oxide as nuclear fuel in so-called Boiling Water Reactors. Here the process is straightforward: The nuclear fuel heats water to create steam, which in turn drives turbines that create electricity, after which the steam is cooled and condensed back into water that can now be returned for heating by the nuclear fuel. Oehmen’s article, which I recommend highly, walks us through the containment system at these plants.

Image: Reactor design at Fukushima. Credit: BraveNewClimate.

The scientist also makes an obvious point that some in the media should probably take note of. We are not talking about possible nuclear explosions here of the kind that happen when we detonate a nuclear device. For that matter, we’re not talking about a Chernobyl event — the latter was the result of pressure build-up, a hydrogen explosion and, as Oehmen shows, the rupture of the containments within the plant, which drove molten core material into the local area. That’s the equivalent of what’s known as a ‘dirty bomb,’ and the main point of Oehmen’s article is that it’s not happening now in the Japanese reactors and it is not going to happen later.

I won’t go through the entire Fukushima situation here, but instead will send you directly to Oehmen’s essay, which was also reproduced on the BraveNewClimate site with a series of illustrations. But a few salient points: When the earthquake hit, the nuclear reactors went into automatic shutdown, with control rods inserted into the core and the nuclear chain reaction of the uranium stopped. The tsunami then knocked out the cooling system that carries away residual heat by destroying the emergency diesel generators. Battery backups kicked in, but they eventually ran down when external power generators could not be connected.

This is the stage at which people began to talk about a core meltdown. Here is Oehmen on that scenario:

The plant came close to a core meltdown. Here is the worst-case scenario that was avoided: If the seawater could not have been used for treatment, the operators would have continued to vent the water steam to avoid pressure buildup. The third containment would then have been completely sealed to allow the core meltdown to happen without releasing radioactive material. After the meltdown, there would have been a waiting period for the intermediate radioactive materials to decay inside the reactor, and all radioactive particles to settle on a surface inside the containment. The cooling system would have been restored eventually, and the molten core cooled to a manageable temperature. The containment would have been cleaned up on the inside. Then a messy job of removing the molten core from the containment would have begun, packing the (now solid again) fuel bit by bit into transportation containers to be shipped to processing plants. Depending on the damage, the block of the plant would then either be repaired or dismantled.
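Why does losing the cooling system matter once the chain reaction has stopped? Fission products in the fuel keep decaying and releasing heat. The rough sketch below uses one commonly quoted empirical approximation of the Way–Wigner form, P/P0 ≈ 0.0622 (t^−0.2 − (t + T)^−0.2). Here t is the time since shutdown and T is the time the reactor ran at power, both in seconds. The coefficient, the exponent, and the one-year operating time are assumptions for illustration, not Fukushima data.

```python
YEAR = 365.25 * 24 * 3600  # seconds; hypothetical operating time at full power


def decay_heat_fraction(t_after_shutdown_s: float, operating_time_s: float) -> float:
    """Approximate decay heat as a fraction of prior thermal power (Way-Wigner form).

    The 0.0622 coefficient and -0.2 exponent are assumed values of a rough
    empirical fit, not a licensing-grade decay heat standard.
    """
    t, T = t_after_shutdown_s, operating_time_s
    return 0.0622 * (t ** -0.2 - (t + T) ** -0.2)


for label, t in [("1 minute", 60), ("1 hour", 3600), ("1 day", 86400), ("1 week", 7 * 86400)]:
    print(f"{label:>8}: {100 * decay_heat_fraction(t, YEAR):.2f}% of full power")
```

The fraction falls quickly but not to zero. An hour after shutdown it is still around one percent of full thermal power, which is why the residual-heat cooling described above matters.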

Nuclear After-Effects

So much for mushroom clouds over Fukushima. Oehmen’s article refers primarily to the Daiichi-1 reactor, but what is happening at Daiichi-3 seems to parallel the first reactor. He goes on to walk through the after-effects of all this, including the not inconsiderable issue that Japan will experience a prolonged power shortage, with as many as half of the country’s nuclear reactors needing inspection and the nation’s power generating capacity reduced by 15 percent. As to the effect of radiation in the environment:

Some radiation was released when the pressure vessel was vented. All radioactive isotopes from the activated steam have gone (decayed). A very small amount of Cesium was released, as well as Iodine. If you were sitting on top of the plants’ chimney when they were venting, you should probably give up smoking to return to your former life expectancy. The Cesium and Iodine isotopes were carried out to the sea and will never be seen again.

I don’t want to minimize the effect of any of this (and I certainly don’t want to play down the pain, both physical and psychological, of the brave people of Japan), but at a time when terror over nuclear operations seems to be running rampant, it’s important that a more balanced view come to the fore in the media. In a message a few minutes ago (about 1330 UTC), Jason Morgan told me that the Oehmen essay would be posted soon on the MIT website, so I’ll link to that as soon as it goes up. Do read what Oehmen has to say as a counterbalance to the sensationalism that seems to follow nuclear issues whenever they appear, and help us keep a sane outlook on a very sad situation.

Addendum: MIT has now published a modified version of the Oehmen essay.

Antimatter: The Conundrum of Storage

Are we ever going to use antimatter to drive a starship? The question is tantalizing because while chemical reactions liberate about one part in a billion of the energy trapped inside matter — and even nuclear reactions spring only about one percent of that energy free — antimatter promises to release what Frank Close calls ‘the full mc² latent within matter.’ But assuming you can make antimatter in large enough amounts (no mean task), the question of storage looms large. We know how to store antimatter in so-called Penning traps, using electric and magnetic fields to hold it, but thus far we’re talking about vanishingly small amounts of the stuff.
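To put those fractions side by side, the sketch below applies E = f m c² to the same reacting mass. It assumes one gram of antimatter annihilating with one gram of ordinary matter (2 g in total) and uses the rounded fractions quoted above. These are order-of-magnitude figures only.

```python
C = 299_792_458.0  # speed of light, m/s


def released_energy_joules(mass_kg: float, fraction: float) -> float:
    """Energy released when a given fraction of the reacting mass becomes energy (E = f m c^2)."""
    return fraction * mass_kg * C ** 2


# One gram of antimatter annihilating with one gram of ordinary matter: 2 g in total.
REACTING_MASS_KG = 2e-3
# Rounded fractions quoted in the post (order-of-magnitude only)
for label, fraction in [("chemical", 1e-9), ("nuclear", 1e-2), ("annihilation", 1.0)]:
    energy = released_energy_joules(REACTING_MASS_KG, fraction)
    print(f"{label:>12}: {energy:.2e} J")
```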

Moreover, such storage doesn’t scale well. An antimatter trap demands that you put charged particles into a small volume. The more antimatter you put in, the closer the particles are to each other, and we know that electrically charged particles with the same sign of charge repel each other. Keep pushing more and more antimatter particles into a container and it gets harder and harder to get them to co-exist. We know how to store about a million antiprotons at once, but Close points out in his book Antimatter (Oxford University Press, 2010) that a million antiprotons is a billion billion times smaller than what you would need to work with a single gram of antimatter.
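Close’s ‘billion billion’ is an order-of-magnitude figure, and a quick count bears it out. The sketch below divides one gram by the antiproton mass, which is the same as the proton mass. The stored figure is the “about a million” quoted above.

```python
ANTIPROTON_MASS_KG = 1.67262192e-27  # equal to the proton mass
STORED_TODAY = 1e6                   # "about a million antiprotons", per Close


def antiprotons_in(mass_kg: float) -> float:
    """Number of antiprotons making up a given mass."""
    return mass_kg / ANTIPROTON_MASS_KG


n_gram = antiprotons_in(1e-3)
print(f"{n_gram:.2e} antiprotons per gram, {n_gram / STORED_TODAY:.1e} times a million")
```

The count comes to roughly 6 × 10²³ antiprotons per gram, just under 10¹⁸ times what can be stored at once today.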

Antihydrogen seems to offer a way out, because if you can make such an anti-atom (and it was accomplished eight years ago at CERN), the electric charges of positrons and antiprotons cancel each other out. But now the electric fields restraining our antimatter are useless, for atoms of antihydrogen are neutral. Antimatter that comes into contact with normal matter annihilates, so whatever state our antimatter is in, we have to find ways to keep it isolated.

A Novel Antihydrogen Trap

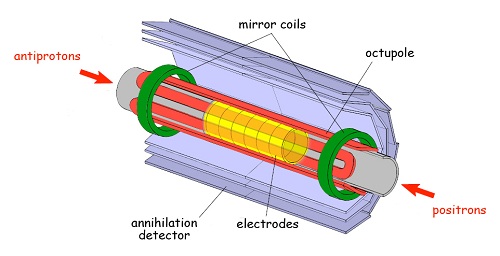

One solution for antihydrogen is being explored at CERN through the international effort known as the ALPHA collaboration, which reported its findings in a recent issue of Nature. Here positrons and antiprotons are cooled and held by electric and magnetic fields in separate sections of what researchers are calling a Minimum Magnetic Field Trap, then nudged together by an oscillating electric field to form low-energy antihydrogen. Although the resulting anti-atoms are neutral in charge, they still have a magnetic moment, and if they are kept at low enough energies that moment can be used to capture and hold them. Says ALPHA team member Joel Fajans (UC-Berkeley):

“Trapping antihydrogen proved to be much more difficult than creating antihydrogen. ALPHA routinely makes thousands of antihydrogen atoms in a single second, but most are too ‘hot’ [too energetic] to be held in the trap. We have to be lucky to catch one.”

Image: Antiprotons and positrons are brought into the ALPHA trap from opposite ends and held there by electric and magnetic fields. Brought together, they form anti-atoms neutral in charge but with a magnetic moment. If their energy is low enough they can be held by the octupole and mirror fields of the Minimum Magnetic Field Trap. Credit: Lawrence Berkeley National Laboratory.
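Why are so few atoms caught? A neutral anti-atom is held only through its magnetic moment, so the trap depth is roughly U = μ ΔB. Here ΔB is the rise in field strength from the trap center to its walls. The rough sketch below makes two assumptions. It takes a moment of about one Bohr magneton, appropriate for a ground-state atom, and a hypothetical 0.8 tesla field difference, which is an illustrative value rather than a published ALPHA specification.

```python
MU_B = 9.2740100783e-24  # Bohr magneton, J/T
K_B = 1.380649e-23       # Boltzmann constant, J/K


def trap_depth_kelvin(delta_b_tesla: float, moment: float = MU_B) -> float:
    """Depth of a magnetic-minimum trap expressed as a temperature: U / k_B = moment * dB / k_B."""
    return moment * delta_b_tesla / K_B


depth = trap_depth_kelvin(0.8)  # assumed field difference between trap center and wall
print(f"trap depth ≈ {depth:.2f} K")
```

Under these assumptions the well is only about half a kelvin deep. Any anti-atom formed with more kinetic energy than that simply escapes, which fits Fajans’ remark about ‘hot’ atoms.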

Clearly we’re in the earliest stages of this work. In the team’s 335 experimental trials, 38 antihydrogen atoms were detected after being held in the trap for about two-tenths of a second. Thousands of antihydrogen atoms are created in each trial, but most turn out to be too energetic and wind up annihilating against the walls of the trap. In this Lawrence Berkeley National Laboratory news release, Fajans adds a progress update:

“Our report in Nature describes ALPHA’s first successes at trapping antihydrogen atoms, but we’re constantly improving the number and length of time we can hold onto them. We’re getting close to the point where we can do some classes of experiments on antimatter atoms. The first attempts will be crude, but no one has ever done anything like them before.”

Taming the Positron

So we’re making progress, but it’s slow and infinitely painstaking. Further interesting news comes from the University of California at San Diego, where physicist Clifford Surko is constructing what may turn out to be the world’s largest antimatter container. Surko is working not with antihydrogen but with positrons, the anti-electrons first predicted by Paul Dirac some eighty years ago. Again the trick is to slow the positrons to low energies and let them accumulate in a ‘bottle’ that holds them with magnetic and electric fields. Cooled to temperatures as low as that of liquid helium, they can then be compressed to high densities.
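How dense can a magnetic bottle get before mutual repulsion wins? One standard result for single-species (non-neutral) plasmas in Penning-type traps is the Brillouin limit, n_max = ε0 B² / (2m). We include it as an illustration, not as a figure from Surko’s work, and the field strengths below are arbitrary examples.

```python
EPS0 = 8.8541878128e-12        # vacuum permittivity, F/m
M_POSITRON = 9.1093837015e-31  # positron mass, kg (same as the electron)


def brillouin_density(b_tesla: float, mass_kg: float = M_POSITRON) -> float:
    """Maximum density (particles per m^3) of a one-species plasma confined by field B."""
    return EPS0 * b_tesla ** 2 / (2 * mass_kg)


for b in (1.0, 5.0):
    print(f"B = {b} T: n_max ≈ {brillouin_density(b):.2e} positrons per m^3")
```

The limit grows with the square of the field but falls with particle mass. Antiprotons, roughly 1836 times heavier than positrons, are therefore capped at proportionally lower densities, one face of the scaling problem described earlier.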

One result is the possibility of creating beams of positrons that can be used to study how antiparticles react with normal matter. Surko is interested in using such beams to understand the properties of material surfaces, and his team is actively investigating what happens when positrons bind with normal matter. As you would guess, such ‘binding’ lasts no more than a billionth of a second, but as Surko says, “the ‘stickiness’ of the positron is an important facet of the chemistry of matter and antimatter.” The new trap in his San Diego laboratory should be capable of storing more than a trillion antimatter particles at a time. Let me quote him again (from a UC-SD news release):

“These developments are enabling many new studies of nature. Examples include the formation and study of antihydrogen, the antimatter counterpart of hydrogen; the investigation of electron-positron plasmas, similar to those believed to be present at the magnetic poles of neutron stars, using a device now being developed at Columbia University; and the creation of much larger bursts of positrons which could eventually enable the creation of an annihilation gamma ray laser.”

An interesting long-term goal is the creation of portable antimatter traps, which should allow us to find uses for antimatter in settings far removed from the huge scientific facilities in which it is now made. Robert Forward was fascinated with ‘mirror matter’ and its implications for propulsion, writing often on the topic and editing a newsletter on antimatter that he circulated among interested colleagues. But he was keenly aware of the problems of production and storage, issues we’ll have to solve before we can think about using antimatter stored in portable traps for actual space missions. Much painstaking work on the basics lies ahead.

The antihydrogen paper is Andresen et al., “Trapped antihydrogen,” Nature 468 (2 December 2010), pp. 673-676 (abstract). Clifford Surko described his work on positrons at the recent meeting of the American Association for the Advancement of Science in a talk called “Taming Dirac’s Particle.” The session he spoke in was aptly named “Through the Looking Glass: Recent Adventures in Antimatter.”

The Rhetoric of Interstellar Flight

Isn’t it fascinating how the Voyager spacecraft keep sparking the public imagination? When Voyager 2 flew past Neptune in 1989, the encounter was almost elegiac. It was as if we were saying goodbye to the doughty mission that had done so much to acquaint us with the outer Solar System, and although there was talk of continuing observations, the public perception was that Voyager was now a part of history. Which it is, of course, but the two spacecraft keep bobbing up in the news, reminding us incessantly about the dimensions of the Solar System, its composition, its relationship to the challenging depths of interstellar space the Voyagers now enter.

In the public eye, Voyager has acquired a certain patina of myth, a fact once noted by NASA historian Roger Launius and followed up by author Stephen Pyne in his book Voyager: Seeking Newer Worlds in the Third Great Age of Discovery:

…the Voyager mission tapped into a heritage of exploration — that was its cultural power. But there was always to the Grand Tour a quality that went beyond normal expeditioning, a sense of the providential or, for the more literary, perhaps of the mythical. The aura would have embarrassed hardheaded engineers intent on ensuring that solders would not shake loose during launch and electronics could survive immersion in Jovian radiation. Yet it was there, a tradition that predates exploration and in fact a heritage that Western exploration itself taps into.

Part of that mythos is the fact that the Voyagers persist, year after year, decade after decade, with perhaps another ten years ahead before they go silent. People who were born the year of the Voyager launches are now in their 34th year, and as of this morning (local time) at 1307 UTC, Voyager 1 has flown 19,228,196,899 kilometers and counting. And just when we’ve begun to absorb the distances and ponder the continuing data return, Voyager does something new to catch our eye, in this case a roll maneuver on March 7 needed to perform scientific observations.

A Star to Guide Us

Something new? Ponder this: Except for a test last month, the last time Voyager performed a roll like the 70-degree counterclockwise rotation (as seen from Earth) it performed this week, it was to snap a rather famous portrait, an image of the planets around the Sun including the ‘pale blue dot’ of Earth. That was back in February of 1990, fully 21 years ago, although to me it seems like yesterday. This time the roll was for a different purpose. Rather than looking back, the vehicle was looking forward, orienting its Low Energy Charged Particle instrument to study the stream of charged particles from the Sun as they near the edge of the Solar System.

The new roll, held in position by spinning gyroscopes for two hours and 33 minutes, tells us that the old spacecraft still has a few moves left. Suzanne Dodd is a Voyager project manager at JPL:

“Even though Voyager 1 has been traveling through the solar system for 33 years, it is still a limber enough gymnast to do acrobatics we haven’t asked it to do in 21 years. It executed the maneuver without a hitch, and we look forward to doing it a few more times to allow the scientists to gather the data they need.”

You may recall that back in June of last year, the same Low Energy Charged Particle instrument began to show that the net outward flow of the solar wind was zero, a reading that has continued ever since. The question now under scrutiny is just how the wind — an outflow of ions from the Sun — turns as it reaches the outer edge of the heliosphere in the area known as the heliosheath. Outside the ‘bubble’ of the heliosphere is the interstellar wind, and the complex interactions between our own star and the interstellar wind are now Voyager’s raison d’être.

It gives me a kick to realize that when the recent test roll was accomplished on February 2, the spacecraft quickly re-oriented itself by locking onto a guide star with some resonance here, Alpha Centauri. And now that the Low Energy Charged Particle instrument team has acquired what it needs, mission planners are looking to do another series of rolls in coming days as the study of the heliosheath continues. I admit to liking the idea of Alpha Centauri as a guide star for all it conjures up about bright stars in southern skies and ancient voyaging deep into wine-dark seas.

Making the Interstellar Case

Does it also point to future voyaging as we venture outside the range of our own Solar System? Let’s hope so. It’s interesting to see that Stephen Hawking and Buzz Aldrin have joined forces in what they’re calling a Unified Space Vision to “…continue the expansion of the human presence in space and ensure the perpetuation of the species.” Voyager, of course, is a robotic presence, but as we push the boundaries of artificial intelligence with ever more sophisticated probes, we’ll be finding out how the human angle meshes with robotic investigations. I suspect the distinction between living and robotic is going to be less significant as we extend our reach outward.

Human or robot, deep space demands what I might call a rhetoric of interstellar flight, and maybe that’s where Hawking and Aldrin come in, along with The Planetary Society, Project Icarus, the British Interplanetary Society and Tau Zero. Voyager continues to capture the imagination, and that tells me that the innate quality that makes people explore is not a trait we have lost in our time. One of the three ancient arts of discourse, rhetoric is all about how you make the case for something. It’s a way of framing a debate, using language persuasively, and when used right it’s the counterpoise to sophistry. A skilled rhetorician can convince you of the merits of a case, but he or she will do so using logic as a way of getting at underlying truth.

We need to make the interstellar case. It’s the one triggered by every new report from spacecraft that really are going where no one has gone before, and it relies on our understanding that even as we work to solve problems here at home, we must not stop dreaming and planning for a future that pushes our limits hard. Recently we’ve discussed the effects of tightened budgets on NASA missions to the outer system, but the larger picture — the one we need to bring to the public — is that the time-frame for our explorations is less essential than the persistent effort to make them happen. We’ll build a future in space one dogged step at a time, and when asked how long humanity will struggle before reaching the stars, we’ll respond, “As long as it takes.”

Enceladus: Heat Output a Surprise

What do we make of the ‘tiger stripes’? The intriguing terrain in the south polar region of Saturn’s moon Enceladus is geologically active and one of the most fascinating finds of the Cassini mission. The ‘stripes’ are actually four trenches, more or less in parallel, that stretch 130 kilometers, each about 2 kilometers wide. What Cassini showed us was that geysers of ice particles and water vapor are being ejected into space from the interior of the moon, setting off astrobiological speculations that elevated Enceladus to a new and deeply intriguing status.

Is there liquid water under the surface? You could make that case based on recent work showing that some ice particles ejected from the moon are rich in salt, a sign that they may be frozen droplets from a saltwater ocean in contact with Enceladus’ mineral-rich core. Interesting place indeed — we’ve got a serious possibility of liquid water and an energy source in the form of tidal effects from Enceladus’ orbit as it changes in relation to Dione and Saturn itself. We’ve also got observations of carbon-rich chemicals in the plumes streaming from the moon.

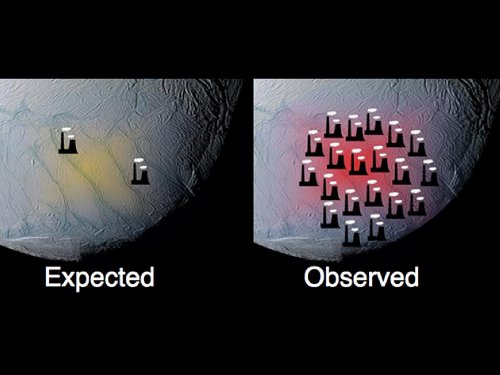

Given all that, new observations of the heat output from the south polar region of Enceladus are provocative. An analysis recently published in the Journal of Geophysical Research draws on data from Cassini’s composite infrared spectrometer to show that heat output from the ‘tiger stripes’ region is much greater than previously thought. In fact, the internal heat-generated power is thought to be about 15.8 gigawatts, more than an order of magnitude higher than predicted. Enceladus’ south polar region is giving us 2.6 times the power output of all the hot springs in the Yellowstone region, using mechanisms that are not yet understood.

Image: This graphic, using data from NASA’s Cassini spacecraft, shows how the south polar terrain of Saturn’s moon Enceladus emits much more power than scientists had originally predicted. Data from Cassini’s composite infrared spectrometer indicate that the south polar terrain of Enceladus has an internal heat-generated power of about 15.8 gigawatts. Credit: NASA/JPL/SWRI/SSI.

A 2007 study of the internal heat of Enceladus, which was based largely on tidal interactions between the moon and Dione, had found no more than 1.1 gigawatts averaged over the long term, with heating from natural radioactivity within Enceladus adding another 0.3 gigawatts. The new work covers the entire south polar region, raising speculation that the unusually high output is due to periodic surges in the tidal heating as resonance effects change over time. But the paper acknowledges “The mechanism capable of producing such a high endogenic power remains a mystery and challenges the current models of proposed heat production.” Whatever the case, the higher heat flow finding makes the presence of liquid water that much more likely.
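Setting the numbers in this post side by side shows what ‘more than an order of magnitude’ means here. The Yellowstone figure below is simply backed out of the 2.6× ratio quoted above; it is not an independent value.

```python
MEASURED_GW = 15.8       # Howett et al. (2011), south polar terrain
TIDAL_GW = 1.1           # 2007 long-term tidal heating estimate
RADIOGENIC_GW = 0.3      # heating from natural radioactivity
YELLOWSTONE_RATIO = 2.6  # Enceladus output as a multiple of Yellowstone's hot springs

predicted_gw = TIDAL_GW + RADIOGENIC_GW
excess = MEASURED_GW / predicted_gw
yellowstone_gw = MEASURED_GW / YELLOWSTONE_RATIO

print(f"predicted {predicted_gw:.1f} GW; measured is {excess:.1f} times higher")
print(f"implied Yellowstone hot-spring output ≈ {yellowstone_gw:.1f} GW")
```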

The paper is Howett et al., “High heat flow from Enceladus’ south polar region measured using 10–600 cm⁻¹ Cassini/CIRS data,” Journal of Geophysical Research 116, E03003 (2011). Abstract available. This JPL news feature summarizes the paper’s findings.

On Meteorites and Budgets

Two kinds of astrobiology stories are in the wind this morning. One of them has to do with the weekend eruption of stories concerning evidence of fossilized life inside a meteorite. The other deals with scientific investigation off-planet, and although sparsely covered, it’s the one with the greater significance for finding life elsewhere. But first, let’s get Richard Hoover’s paper about meteorite life out of the way, for the growing consensus this morning is that there are serious problems with his analysis, especially as regards contamination of the sample here on Earth.

I have no problems with the panspermia idea — the notion that life just may be ubiquitous, and that planetary systems may be seeded with life not just from other planets within the system but from other stellar systems entirely. It’s an appealing and elegant concept, but thus far we have no proof, and despite what Dr. Hoover is seeing in samples from three meteorites, we still can’t definitively say that we’ve found fossilized microbes from any biosphere but our own. The skeptics are weighing in loud and clear, and Alan Boyle has collected many of their thoughts.

I want to send you to Alan’s Cosmic Log for the bulk of these comments, but let me lift one from Dale Andersen (SETI Institute) to give you the gist. Andersen acknowledges the excitement of the story if it could be proven true, but he’s worried about the fact that Hoover’s work is not playing well within the scientific community, and he is waiting for serious peer review:

“Peer review will include the examination of his and other scientists’ data and logic, and not until that has occurred will we see how the story unfolds. Occam’s razor will eventually be used to slice and dice the carbonaceous chondrites used by Richard to present his evidence. Is it more likely that upon looking into the interior of a meteorite collected on Earth and finding photosynthetic cyanobacteria, which on Earth are usually found in water or wet sediments, their presence is due to contamination from terrestrial sources or that it formed inside the parent body of comet or asteroid in deep space? There will be many other possibilities to rule out before one arrives at the extraterrestrial answer.”

You can find Hoover’s “Fossils of Cyanobacteria in CI1 Carbonaceous Meteorites,” in the Journal of Cosmology, Vol. 13 (March, 2011), which is available online.

Addendum: Be sure to check out Philip Ball’s “The Aliens Haven’t Landed,” published online in Nature today.

Meanwhile, Around Europa

Hoover’s ideas will be considered in a much longer process of investigation as other scientists analyze his evidence, but as is always the case, let’s be cautious indeed about claiming life from elsewhere in the universe. None of which is to argue that we slow down our investigations into such life, but alas, in the other astrobiology story of the morning, our chances of getting to a prime site for a closer look are taking a hit. Tightened budgets are threatening to push the Jupiter-Europa Orbiter further into the future, joining other big missions on indefinite hold.

The Jupiter-Europa Orbiter was conceived as part of a collaborative campaign between NASA and ESA called the Europa Jupiter System Mission, a two-spacecraft investigation originally planned for a launch around 2022. The troublesome news about the NASA contribution comes from a new report from the National Research Council which, while recommending a suite of flagship missions over the decade 2013-2022, also takes pains to note that budgetary constraints could limit NASA to small-scale missions of the New Frontiers and Discovery class in that period. Steven Squyres (Cornell University) chaired the committee that wrote the report:

“Our recommendations are science-driven, and they offer a balanced mix of missions — large, medium, and small — that have the potential to greatly expand our knowledge of the solar system. However, in these tough economic times, some difficult choices may have to be made. With that in mind, our priority missions were carefully selected based on their potential to yield the most scientific benefit per dollar spent.”

The priorities are there, but throw in the fact that these recommendations were informed by NASA’s 2011 projected budget scenario (and that the 2012 projections are less favorable), and you can see that the Jupiter-Europa Orbiter (JEO) is in trouble. JEO comes in as the second priority for NASA’s large-scale planetary science missions, after the Mars Astrobiology Explorer-Cacher (MAX-C). While the report notes how promising Europa and its subsurface ocean may be for astrobiological studies, it says that JEO should fly only if NASA’s budget for planetary science is increased and the $4.7 billion mission is made more affordable.

Expect the launch of a Europa orbiter between 2013 and 2022, then, only if both the mission and the budget outlook change significantly. The same holds for the Uranus Orbiter and Probe, an exciting mission concept that would deploy an atmospheric probe to study this interesting world while the primary spacecraft orbits Uranus, making planetary measurements as well as flybys of the larger moons. Space News notes the threat to all three missions, citing remarks by Jim Green, director of NASA’s Planetary Science Division:

…because the decadal survey’s findings are based on NASA’s more generous 2011 out-year funding projections, rather than the declining profile laid out in the 2012 budget request, it is unlikely the agency can afford to embark on any new large projects in the coming decade. Green, speaking March 1 at a meeting of the NASA Advisory Council’s planetary science subcommittee, warned members not to expect the funding outlook to improve.

Looking to Smaller Missions

The upshot of all this is that the flagship missions with the most interesting astrobiological profile are on hold in an environment favoring only small- to medium-class probes. We still have one flagship mission, the $2.5 billion Mars Science Laboratory rover, set to launch later this year, but the grim truth is that the MSL will probably be the last planetary flagship mission for quite some time. What had appeared a promising funding prospect in the 2011 NASA budget request has reversed itself with the administration’s 2012 request (released in mid-February), which shows a decline in planetary spending after 2012 that will not support these larger mission plans.

So while the report supports both Mars sample collection and the Europa orbiter, the reality is that the money is just not there. For the time being, then, realistic outer planet prospects focus on Juno, a mission slated for launch this summer that will spend a year orbiting Jupiter. The solar-powered spacecraft will complete 33 polar orbits of Jupiter to study the planet’s atmosphere, structure and magnetosphere. Juno is currently undergoing environmental testing at Lockheed Martin Space Systems near Denver.

We’ll wait, too, to see what medium-sized missions NASA will select for the New Frontiers program, which would benefit from the trimming of the flagship missions. The agency is selecting one New Frontiers mission now, and the committee recommends that two more missions be chosen for the 2013-2022 period. Possibilities include a Jupiter mission to Io and a Trojan asteroid rendezvous. The Discovery program of low-cost, highly focused planetary science missions also receives the report’s blessing as hopes for the big missions are deferred.

Pre-publication copies of Vision and Voyages for Planetary Science in the Decade 2013-2022 are available from the National Academies Press.