Centauri Dreams

Imagining and Planning Interstellar Exploration

Ramping Up the Technosignature Search

In the ever-growing realm of acronyms, you can’t do much better than COSMIC – the Commensal Open-Source Multimode Interferometer Cluster Search for Extraterrestrial Intelligence. This is a collaboration between the SETI Institute and the National Radio Astronomy Observatory (NRAO), which operates the Very Large Array in New Mexico. The news out of COSMIC could not be better for technosignature hunters: fiber-optic amplifiers and splitters are now installed at each of the 27 VLA antennas.

What that means is that COSMIC will have access to the complete datastream from the entire VLA, in effect an independent copy of everything the VLA observes. Now able to acquire VLA data, the researchers are proceeding with the development of high-performance Graphics Processing Unit (GPU) code for data analysis. Thus the search for signs of technology among the stars gains momentum at the VLA.

Image: SETI Institute post-doctoral researchers, Dr Savin Varghese and Dr Chenoa Tremblay, in front of one of the 25-meter diameter dishes that make up the Very Large Array. Credit: SETI Institute.

Jack Hickish, digital instrumentation lead for COSMIC at the SETI Institute, takes note of the interplay between the technosignature search and ongoing work at the VLA:

“Having all the VLA digital signals available to the COSMIC system is a major milestone, involving close collaboration with the NRAO VLA engineering team to ensure that the addition of the COSMIC hardware doesn’t in any way adversely affect existing VLA infrastructure. It is fantastic to have overcome the challenges of prototyping, testing, procurement, and installation – all conducted during both a global pandemic and semiconductor shortage – and we are excited to be able to move on to the next task of processing the many Tb/s of data to which we now have access.”

Tapping the VLA for the technosignature search brings powerful tools to bear, considering that each of the installation’s 27 antennas is 25 meters in diameter, and that these movable dishes can be spread over as much as 22 miles. The Y-shaped configuration is found some 50 miles west of Socorro, New Mexico, in the area known as the Plains of San Agustin. By combining data from the antennas, scientists can achieve the resolution of an antenna 36 kilometers across, with the sensitivity of a dish 130 meters in diameter.
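As a rough check on those two figures, here is a short Python sketch. Matching the combined collecting area of 27 dishes, each 25 meters across, requires a single dish of diameter 25 × √27, about 130 meters. The finest angular resolution scales roughly as wavelength divided by the longest baseline (36 km). The 1.4 GHz and 50 GHz frequencies are illustrative choices, and λ/B is an order-of-magnitude estimate, not the VLA's formal beam size.

```python
import math

C = 299_792_458.0  # speed of light, m/s


def equivalent_dish_diameter(n_dishes: int, dish_diameter_m: float) -> float:
    """Diameter of one dish with the same total collecting area as n dishes."""
    # Area scales with diameter squared, so N dishes of diameter D
    # match a single dish of diameter D * sqrt(N).
    return dish_diameter_m * math.sqrt(n_dishes)


def resolution_arcsec(freq_hz: float, baseline_m: float) -> float:
    """Approximate angular resolution theta ~ lambda / B, in arcseconds."""
    wavelength_m = C / freq_hz
    return math.degrees(wavelength_m / baseline_m) * 3600.0


vla_equiv = equivalent_dish_diameter(27, 25.0)
theta_low = resolution_arcsec(1.4e9, 36_000.0)   # illustrative: 1.4 GHz
theta_high = resolution_arcsec(50e9, 36_000.0)   # top of the VLA band
print(f"equivalent single dish: {vla_equiv:.0f} m")
print(f"resolution: {theta_low:.2f} arcsec at 1.4 GHz, {theta_high:.3f} arcsec at 50 GHz")
```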

Each of the VLA antennas uses eight cryogenically cooled receivers, covering a continuous frequency range from 1 to 50 GHz, with some of the receivers able to operate below 1 GHz. This powerful instrumentation will be brought to bear, according to sources at COSMIC SETI, on 40 million star systems, making this the most comprehensive SETI observing program ever undertaken in the northern hemisphere. (Globally, Breakthrough Listen continues its well-funded SETI program, using the Green Bank Observatory in West Virginia and the Parkes Observatory in Australia).

Cherry Ng, a SETI Institute COSMIC project scientist, points to the range the project will cover:

“We will be able to monitor millions of stars with a sensitivity high enough to detect an Arecibo-like transmitter out to a distance of 25 parsecs (81 light-years), covering an observing frequency range from 230 MHz to 50 GHz, which includes many parts of the spectrum that have not yet been explored for ETI signals.”
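The quoted range invites two simple calculations, sketched below in Python. The first converts 25 parsecs to light-years. The second shows how reach scales with sensitivity: because a transmitter's flux falls as 1/d², a receiver that can detect signals k times fainter extends its range by √k, and the volume searched grows as the cube of that factor. Treating the number of stars covered as proportional to volume assumes a uniform local stellar density. The helper names are illustrative, and no specific Arecibo power figure is assumed.

```python
import math

LY_PER_PC = 3.26156  # light-years per parsec


def pc_to_ly(parsecs: float) -> float:
    """Convert a distance in parsecs to light-years."""
    return parsecs * LY_PER_PC


def range_gain(sensitivity_gain: float) -> float:
    """Growth in detection range when the faintest detectable flux drops by
    `sensitivity_gain`. Flux falls as 1/d^2, so range grows as the square root."""
    return math.sqrt(sensitivity_gain)


def volume_gain(range_factor: float) -> float:
    """Growth in searched volume (and, for a uniform stellar density, in the
    number of stars covered) when the range grows by `range_factor`."""
    return range_factor ** 3


print(f"25 pc = {pc_to_ly(25):.1f} ly")
# Hypothetical comparison: a receiver system 4x more sensitive than a reference.
r = range_gain(4.0)
print(f"4x sensitivity -> {r:.1f}x range -> {volume_gain(r):.0f}x volume")
```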

The VLA is currently conducting the VLA Sky Survey, a new, wide-area centimeter wavelength survey that will cover the entire visible sky. The SETI work is scheduled to begin when the new system becomes fully operational in early 2023, working in parallel with the VLASS effort.

Microlensing: K2’s Intriguing Find

Exoplanet science can look forward to a rash of discoveries involving gravitational microlensing. Consider: In 2023, the European Space Agency will launch Euclid, which, although not designed as an exoplanet mission per se, will carry a wide-field infrared array capable of high resolution. ESA is considering an exoplanet microlensing survey for Euclid, which would be able to study the galactic bulge for up to 30 days twice per year, perhaps timed for the end of the craft’s cosmology program.

Look toward the crowded galactic center long enough and you just may see a star in the galaxy’s disk move in front of a background star located much farther away in that dense bulge. The result: the lensing phenomenon predicted by Einstein, with the light of the background star magnified by the intervening star. If that star has a planet, it’s one we can detect even if it’s relatively small, and even if it’s widely spaced from its star.
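The brightening described above follows the standard point-lens (Paczyński) formula: with u the lens-source separation in units of the Einstein radius, the magnification is A(u) = (u² + 2) / (u√(u² + 4)), and for straight-line motion u(t) = √(u0² + ((t − t0)/tE)²). The Python sketch below traces a hypothetical event (closest approach u0 = 0.1, Einstein time 30 days). It models a single lens only; a planet would add a brief extra deviation on top of this smooth curve, which this sketch does not attempt.

```python
import math


def magnification(u: float) -> float:
    """Point-source, point-lens magnification at separation u (Einstein radii)."""
    return (u * u + 2.0) / (u * math.sqrt(u * u + 4.0))


def separation(t: float, t0: float, u0: float, t_e: float) -> float:
    """Lens-source separation for uniform straight-line relative motion."""
    return math.hypot(u0, (t - t0) / t_e)


# Hypothetical event parameters: peak at t0 = 0, u0 = 0.1, t_E = 30 days.
for t in (-30, -10, 0, 10, 30):
    u = separation(t, 0.0, 0.1, 30.0)
    print(f"t = {t:+4d} d   u = {u:.3f}   A = {magnification(u):.2f}")
```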

For its part, NASA plans to launch the Roman space telescope by 2027, with its own exoplanet microlensing survey slotted in as a core science activity. The space telescope will be able to conduct uninterrupted microlensing operations for two 72-day periods per year, and may coordinate these activities with the Euclid team. In both cases, we have space instruments that can detect cool, low-mass exoplanets for which, in many cases, we’ll be able to combine data from the spacecraft and ground observatories, helping to nail down orbit and distance measurements.

While we await these new additions to the microlensing family, we can also take surprised pleasure in the announcement of a microlensing discovery, the world known as K2-2016-BLG-0005Lb. Yes, this is a Kepler find, or more precisely, a planet uncovered through exhaustive analysis of K2 data, with the help of ground-based data from the OGLE microlensing survey, the Korean Microlensing Telescope Network (KMTNet), Microlensing Observations in Astrophysics (MOA), the Canada-France-Hawaii Telescope and the United Kingdom Infrared Telescope. I list all these projects and instruments by way of illustrating how what we learn from microlensing grows with wide collaboration, allowing us to combine datasets.

Kepler and microlensing? Surprise is understandable, and the new world, similar to Jupiter in its mass and distance from its host star, is about twice as distant as any exoplanet confirmed by Kepler, which used the transit method to make its discoveries. David Specht (University of Manchester) is lead author of the paper, which will appear in Monthly Notices of the Royal Astronomical Society. The effort involved sifting K2 data for signs of an exoplanet and its parent star passing in front of a background star, with accompanying gravitational lensing caused by both foreground objects.

Eamonn Kerins is principal investigator for the Science and Technology Facilities Council (STFC) grant that funded the work. Dr Kerins adds:

“To see the effect at all requires almost perfect alignment between the foreground planetary system and a background star. The chance that a background star is affected this way by a planet is tens to hundreds of millions to one against. But there are hundreds of millions of stars towards the center of our galaxy. So Kepler just sat and watched them for three months.”

Image: The view of the region close to the Galactic Center centered where the planet was found. The two images show the region as seen by Kepler (left) and by the Canada-France-Hawaii Telescope (CFHT) from the ground. The planet is not visible but its gravity affected the light observed from a faint star at the center of the image (circled). Kepler’s very pixelated view of the sky required specialized techniques to recover the planet signal. Credit: Specht et al.

This is a classic case of pushing into a dataset with specialized analytical methods to uncover something the original mission designers never planned to see. The ground-based surveys that examined the same area of sky offered a combined dataset to go along with what Kepler saw slightly earlier, given its position 135 million kilometers from Earth, allowing scientists to triangulate the system’s position along the line of sight, and to determine the mass of the exoplanet and its orbital distance.

What an intriguing, and decidedly unexpected, result from Kepler! K2-2016-BLG-0005Lb is also a reminder of the kind of discovery we’re going to be making with Euclid and the Roman instrument. Because it is capable of finding lower-mass worlds at a wide range of orbital distances, microlensing should help us understand how common it is to have a Jupiter-class planet in an orbit similar to Jupiter’s around other stars. Is the architecture of our Solar System, in other words, unique or fairly representative of what we will now begin to find?

Animation: The gravitational lensing signal from Jupiter twin K2-2016-BLG-0005Lb. The local star field around the system is shown using real color imaging obtained with the ground-based Canada-France-Hawaii Telescope by the K2C9-CFHT Multi-Color Microlensing Survey team. The star indicated by the pink lines is animated to show the magnification signal observed by Kepler from space. The trace of this signal with time is shown in the lower right panel. On the left is the derived model for the lensing signal, involving multiple images of the star caused by the gravitational field of the planetary system. The system itself is not directly visible. Credit: CFHT.

From the paper:

The combination of spatially well separated simultaneous photometry from the ground and space also enables a precise measurement of the lens–source relative parallax. These measurements allow us to determine a precise planet mass (1.1 ± 0.1 M_J), host mass (0.58 ± 0.03 M_⊙) and distance (5.2 ± 0.2 kpc).

The authors describe the world as “a close analogue of Jupiter orbiting a K-dwarf star,” noting:

The location of the lens system and its transverse proper motion relative to the background source star (2.7 ± 0.1 mas/yr) are consistent with a distant Galactic-disk planetary system microlensing a star in the Galactic bulge.
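The published numbers can be tied together with the standard microlensing relations, as in the Python sketch below. The angular Einstein radius is θE = √(κ M πrel), with κ ≈ 8.144 mas per solar mass and πrel = AU/DL − AU/DS. The Einstein crossing time is tE = θE / μrel, and the microlens parallax πE = πrel/θE sets the Einstein radius projected onto the observer plane, AU/πE. The host mass, 5.2 kpc lens distance and 2.7 mas/yr proper motion come from the paper. The 8 kpc source distance is an assumption (a typical bulge value), and the planet's roughly 0.001 solar-mass contribution to the lens mass is neglected. This is a consistency sketch, not the authors' analysis. Its point is that Kepler's 135-million-kilometer offset from Earth amounts to a sizable fraction of the projected Einstein radius, which is what makes the space-ground parallax measurable.

```python
import math

KAPPA_MAS_PER_MSUN = 8.144  # 4G / (c^2 AU), standard microlensing constant
AU_KM = 1.496e8


def relative_parallax_mas(d_lens_kpc: float, d_source_kpc: float) -> float:
    """pi_rel = AU/D_L - AU/D_S; with distances in kpc the result is in mas."""
    return 1.0 / d_lens_kpc - 1.0 / d_source_kpc


def einstein_radius_mas(lens_mass_msun: float, pi_rel_mas: float) -> float:
    """Angular Einstein radius theta_E = sqrt(kappa * M * pi_rel), in mas."""
    return math.sqrt(KAPPA_MAS_PER_MSUN * lens_mass_msun * pi_rel_mas)


# Host mass, lens distance, proper motion: Specht et al.
# Source distance of 8 kpc: assumed typical Galactic-bulge value.
pi_rel = relative_parallax_mas(5.2, 8.0)
theta_e = einstein_radius_mas(0.58, pi_rel)
t_e_days = theta_e / 2.7 * 365.25   # Einstein-radius crossing time
pi_e = pi_rel / theta_e             # microlens parallax (dimensionless)
r_e_tilde_au = 1.0 / pi_e           # Einstein radius projected onto observer plane
kepler_baseline_au = 135e6 / AU_KM  # Kepler-Earth separation quoted in the post

print(f"theta_E ~ {theta_e:.2f} mas, t_E ~ {t_e_days:.0f} days")
print(f"projected Einstein radius ~ {r_e_tilde_au:.1f} AU; "
      f"Kepler offset ~ {kepler_baseline_au / r_e_tilde_au:.2f} of it")
```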

Given that Kepler was not designed for microlensing operations, it’s not surprising to see the authors refer to it as “highly sub-optimal for such science.” But here we have a direct planet measurement including mass with high precision made possible by the craft’s uninterrupted view of its particular patch of sky. Euclid and the Roman telescope should have much to contribute given that they are optimized for microlensing work. We can look forward to a fascinating expansion of the planetary census.

The paper is Specht et al., “Kepler K2 Campaign 9: II. First space-based discovery of an exoplanet using microlensing,” in press at Monthly Notices of the Royal Astronomical Society (preprint).

Methane as Biosignature: A Conceptual Framework

A living world around another star will not be an easy catch, no matter how sophisticated the coming generation of space- and ground-based telescopes turns out to be. It’s one thing to develop the tools to begin probing an exoplanet atmosphere, but quite another to be able to say with any degree of confidence that the result we see is the result of biology. When we do begin picking up an interesting gas like methane, we’ll need to evaluate the finding against other atmospheric constituents, and the arguments will fly about non-biological sources for what might be a biosignature.

This is going to begin playing out as the James Webb Space Telescope turns its eye on exoplanets, and methane is the one potential sign of life that should be within its range. We know that oxygen, ozone, methane and carbon dioxide are produced through biological activity on Earth, and we also know that each can be produced in the absence of life. The simultaneous presence of such gases is what would intrigue us most, but the opening round of biosignature detection will be methane. Here I’ll quote Maggie Thompson, who is a graduate student in astronomy and astrophysics at UC Santa Cruz and lead author of a new study on methane in exoplanet atmospheres:

“Oxygen is often talked about as one of the best biosignatures, but it’s probably going to be hard to detect with JWST. We wanted to provide a framework for interpreting observations, so if we see a rocky planet with methane, we know what other observations are needed for it to be a persuasive biosignature.”

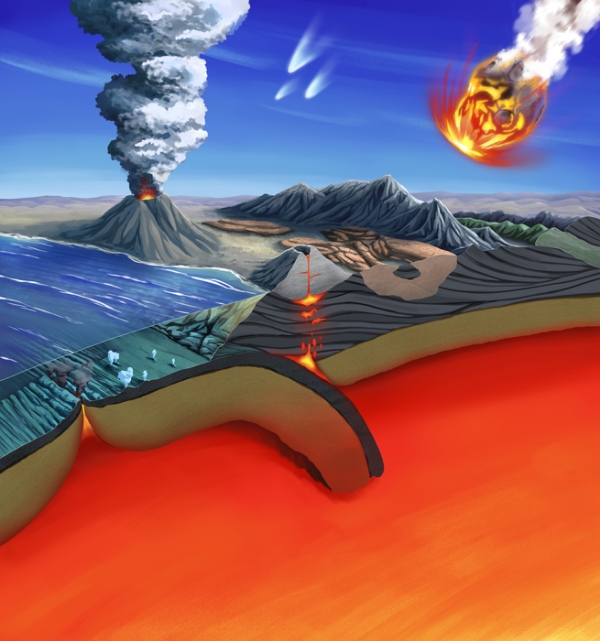

The problem of interpretation is huge, given how numerous the sources of methane are. The study Thompson is discussing has just appeared in Proceedings of the National Academy of Sciences, addressing a wide range of phenomena, from volcanic activity, hydrothermal vents and tectonic subduction zones to asteroid or comet impacts. There are many ways to produce methane, but because it is unstable in an atmosphere and easily destroyed by photochemical reactions, it needs to be replenished to remain at high levels. Thus the authors look for clues as to how that replenishment works and how to distinguish these processes from signs of life.
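The replenishment argument can be illustrated with a one-box model, sketched in Python below. Assume methane is added at a constant flux Φ and destroyed with a single characteristic lifetime τ, so dN/dt = Φ − N/τ. The inventory then relaxes toward a steady state N = Φτ. If the source shuts off, the methane decays away within a few lifetimes. Real atmospheric photochemistry is far more complex than a single first-order loss term, and the flux and lifetime values here are hypothetical, in arbitrary units.

```python
import math


def methane_inventory(t: float, flux: float, lifetime: float, n0: float = 0.0) -> float:
    """Inventory N(t) for dN/dt = flux - N / lifetime with N(0) = n0.

    Analytic solution: N relaxes exponentially toward flux * lifetime.
    """
    n_eq = flux * lifetime
    return n_eq + (n0 - n_eq) * math.exp(-t / lifetime)


# Hypothetical units: flux per year, lifetime in years.
FLUX, LIFETIME = 2.0, 10.0
steady = FLUX * LIFETIME
for t in (0, 10, 30, 100):
    print(f"source on,  t={t:>3}: N = {methane_inventory(t, FLUX, LIFETIME):.2f}")
# Switch the source off, starting from the steady state.
for t in (10, 30):
    print(f"source off, t={t:>3}: N = {methane_inventory(t, 0.0, LIFETIME, steady):.2f}")
```

A detectable, persistent methane level therefore implies an ongoing source whose strength is roughly the observed inventory divided by the lifetime. This is why the framework focuses on which sources could plausibly supply that flux.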

Image: Methane in a planet’s atmosphere may be a sign of life if non-biological sources can be ruled out. This illustration summarizes the known abiotic sources of methane on Earth, including outgassing from volcanoes, reactions in settings such as mid-ocean ridges, hydrothermal vents, and subduction zones, and impacts from asteroids and comets. Credit: © 2022 Elena Hartley.

Current methods for studying exoplanet atmospheres rely upon analyzing the light of the host star during a transit as it passes through the planet’s atmosphere, the latter absorbing some of the starlight to offer clues to its composition. To do this well, we need relatively quiet stars with little flare activity. M-dwarfs are great targets for this kind of work because their small size means that the transit depth of a rocky planet in the habitable zone will be relatively large and the signal stronger. It’s also useful that small red stars represent as much as 80% of all stars in the galaxy (for a deep dive into this question, see Alex Tolley’s Red Dwarfs: Their Impact on Biosignatures).
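The advantage of small stars comes straight from geometry: transit depth is (Rp/R*)², the fraction of the stellar disk the planet covers. The Python sketch below compares an Earth-sized planet crossing a Sun-sized star with the same planet crossing an M dwarf. The 0.2 solar-radius value is an illustrative assumption, as actual M dwarfs span a range of sizes. The atmospheric absorption signal, a thin annulus around the planet, scales with the same 1/R*² factor.

```python
R_EARTH_KM = 6371.0
R_SUN_KM = 695_700.0


def transit_depth(planet_radius_km: float, star_radius_km: float) -> float:
    """Fractional dimming when the planet's disk covers part of the star's."""
    return (planet_radius_km / star_radius_km) ** 2


sun_like = transit_depth(R_EARTH_KM, R_SUN_KM)
m_dwarf = transit_depth(R_EARTH_KM, 0.2 * R_SUN_KM)  # assumed 0.2 R_sun M dwarf
print(f"Sun-like star: {sun_like * 1e6:.0f} ppm")
print(f"M dwarf:       {m_dwarf * 1e6:.0f} ppm ({m_dwarf / sun_like:.0f}x deeper)")
```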

Context will be the key in the hunt for biosignatures, with false positives a persistent danger. Outgassing volcanoes should add not only methane but also carbon monoxide to the atmosphere, while biological activity should consume carbon monoxide. The authors argue that it would be difficult for non-biological processes to produce an atmosphere rich in both methane and carbon dioxide with little carbon monoxide.

Thus a small, rocky world in the habitable zone will need to be evaluated in terms of its geochemistry and its geological processes, not to mention its interactions with its host star. Find atmospheric methane here and it is more likely to be an indication of life if the atmosphere also shows carbon dioxide, the methane is more abundant than carbon monoxide, and the planet is not extremely rich in water. The paper is an attempt to build a framework not just for ruling out false positives, but for identifying real biosignatures that may be easy to overlook.
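As a rough illustration only, the contextual tests summarized above can be written as a checklist in Python. The `Observation` container and `methane_context_flags` function are hypothetical names, and the 1% water-mass-fraction cutoff is borrowed loosely from the quoted passage below. The paper's actual assessment is quantitative and considers much more, including stellar context and geochemistry, so an empty result here should be read as "worth a closer look," not as a detection of life.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Observation:
    """Hypothetical container for retrieved atmospheric constraints."""
    ch4_detected: bool
    co2_detected: bool
    co_to_ch4: float            # CO / CH4 abundance ratio
    water_mass_fraction: float  # fraction of planet mass in water


def methane_context_flags(obs: Observation, water_limit: float = 0.01) -> List[str]:
    """Return the contextual criteria (as summarized in the post) that are NOT met."""
    flags = []
    if not obs.ch4_detected:
        flags.append("no methane detected")
    if not obs.co2_detected:
        flags.append("no CO2 alongside CH4")
    if obs.co_to_ch4 >= 1.0:
        flags.append("CO not less abundant than CH4")
    if obs.water_mass_fraction >= water_limit:
        flags.append("water-rich: long-lived abiotic methane possible")
    return flags


# Hypothetical example: methane and CO2 seen, little CO, terrestrial density.
print(methane_context_flags(Observation(True, True, 0.01, 0.001)))
# Hypothetical example: methane without CO2, CO-rich, water world.
print(methane_context_flags(Observation(True, False, 2.0, 0.5)))
```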

Making the process even more complex is the fact that the scope of abiotic methane production on a planetary scale is not fully understood. Even so, the authors argue that while various abiotic mechanisms can replenish methane, it is hard to produce a methane flux comparable to Earth’s biogenic flux without creating clues that signal a false positive. Here we’re at the heart of things; let me quote the paper:

…we investigated whether planets with very reduced mantles and crusts can generate large methane fluxes via magmatic outgassing and assessed the existing literature on low-temperature water-rock and metamorphic reactions, and, where possible, determined their maximum global abiotic methane fluxes. In every case, abiotic processes cannot easily produce atmospheres rich in both CH4 and CO2 with negligible CO due to the strong redox disequilibrium between CO2 and CH4 and the fact that CO is expected to be readily consumed by life. We also explored whether habitable-zone exoplanets that have large volatile inventories like Titan could have long lifetimes of atmospheric methane. We found that, for Earth-mass planets with water mass fractions that are less than ~1% of the planet’s mass, the lifetime of atmospheric methane is less than ~10 Myrs, and observational tools can likely distinguish planets with larger water mass fractions from those with terrestrial densities.

Let’s also recall that when searching for biosignatures, terms like ‘Earth-like’ are easy to misuse. Today’s atmosphere is a mix of nitrogen, oxygen and carbon dioxide, but we know that over geological time, the atmosphere has changed profoundly. The early Earth would have been shrouded in hydrogen and helium, with volcanic eruptions producing carbon dioxide, water vapor and sulfur. The oxygenation event that occurred two and a half billion years ago brought oxygen levels up. We thus have to remember where a given exoplanet may be in its process of development as we evaluate it.

So by all means let’s hope we one day find something like a simultaneous detection of oxygen and methane, two gases that ought not to co-exist unless there were a sustaining process (life) to keep them present. An out-of-equilibrium chemistry is intriguing, because life wants to throw chemical stability out of whack. And by all means let’s accelerate our work in the direction of biosignature analysis to root out those false positives. We begin with methane because that is what JWST can most readily detect.

And as to the question of ambiguity in life detection, JWST is unlikely to detect atmospheric oxygen and ozone, nor will it be a reliable source on water vapor, so its ability to make the call on habitability is limited. Going forward, the authors think that if the instrument detects significant methane and carbon dioxide and can constrain the ratio of carbon monoxide to methane, this will serve as a motivator for future instruments like ground-based Extremely Large Telescopes to follow up these observations. It will take observational tools in combination to nail down methane as a biosignature, but the ELTs should be well placed to take the next step forward.

The paper is Thompson et al., “The case and context for atmospheric methane as an exoplanet biosignature,” Proceedings of the National Academy of Sciences 119 (14) (March 30, 2022). Abstract. See also Krissansen-Totton et al., “Understanding planetary context to enable life detection on exoplanets and test the Copernican principle,” Nature Astronomy 6 (2022), 189-198 (abstract).

SETI as Exploration

Early exoplanet detections always startled my friends outside the astronomical community. Anxious for a planet something like the Earth, they found themselves looking at a ‘hot Jupiter’ like 51 Pegasi b, which at the time seemed like little more than a weird curiosity. A Jupiter-like planet hugging a star? More hot Jupiters followed, which led to the need to explain how exoplanet detection worked with radial velocity methods, and why big planets close to their star should turn up early in the hunt.
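Why hot Jupiters came first is easy to see from the radial velocity semi-amplitude, K = (2πG/P)^(1/3) · m_p sin i / (M* + m_p)^(2/3) / √(1 − e²). A massive planet on a short period tugs its star hardest. The Python sketch below compares Jupiter's pull on the Sun with a 51 Pegasi b-like planet. The 51 Peg b inputs (about 0.46 Jupiter masses minimum, a 4.23-day period, a 1.1 solar-mass host) are approximate literature values supplied here as assumptions, and both orbits are treated as circular and edge-on.

```python
import math

G = 6.674e-11          # gravitational constant, SI
M_SUN = 1.989e30       # kg
M_JUP = 1.898e27       # kg
DAY = 86_400.0         # s
YEAR = 365.25 * DAY    # s


def rv_semi_amplitude(m_planet_kg: float, m_star_kg: float,
                      period_s: float, ecc: float = 0.0) -> float:
    """Stellar reflex velocity semi-amplitude K in m/s (edge-on orbit, sin i = 1)."""
    return ((2 * math.pi * G / period_s) ** (1 / 3)
            * m_planet_kg / (m_star_kg + m_planet_kg) ** (2 / 3)
            / math.sqrt(1 - ecc ** 2))


k_jupiter = rv_semi_amplitude(M_JUP, M_SUN, 11.86 * YEAR)
# Assumed approximate parameters for 51 Pegasi b.
k_51peg_b = rv_semi_amplitude(0.46 * M_JUP, 1.1 * M_SUN, 4.23 * DAY)
print(f"Jupiter around the Sun: K ~ {k_jupiter:.1f} m/s")
print(f"51 Peg b-like planet:   K ~ {k_51peg_b:.1f} m/s")
```

Even with less than half of Jupiter's mass, the close-in planet produces a signal roughly four times larger, and it repeats every few days rather than every dozen years.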

Earlier, there were the pulsar planets, as found by Aleksander Wolszczan and Dale Frail around the pulsar PSR B1257+12 in the constellation Virgo. These were interestingly small, but obviously bathed in a sleet of radiation from their primary. Detected a year later, PSR B1620-26 b was found to orbit a white dwarf/pulsar binary system. But these odd detections some 30 years ago actually made the case for the age of exoplanet discovery that was about to open, a truly golden era of deep space research.

Aleksander Wolszczan himself put it best: “If you can find planets around a neutron star, planets have to be basically everywhere. The planet production process has to be very robust.”

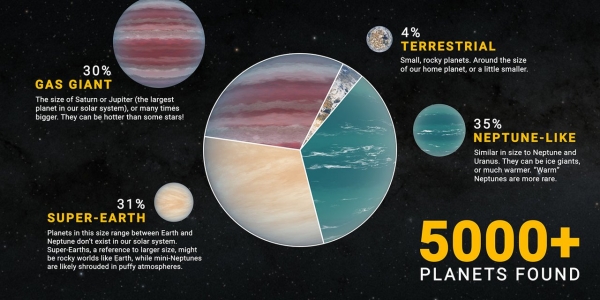

Indeed. With NASA announcing another 65 exoplanets added to its Exoplanet Archive, we now take the tally of confirmed planets up past 5000, their presence firmed up by multiple detection methods or by analytical techniques. These days, of course, the quickly growing catalog is made up of all kinds of worlds, from those gas giants near their stars to the super-Earths that seem to be rocky worlds larger than our Earth, and the intriguing ‘mini-Neptunes,’ which seem to slot into a category of their own. And let’s not forget those interesting planets on circumbinary orbits in multiple star systems.

Wolszczan is quoted in a NASA news release as saying that life is an all but certain find – “most likely of some primitive kind” – for future instrumentation like ESA’s ARIEL mission (launching in 2029), the James Webb Space Telescope, or the Nancy Grace Roman Space Telescope, which will launch at the end of the decade. These instruments should be able to take us into exoplanet atmospheres, where we can start taking apart their composition in search of biosignatures. This, in turn, will open up whole new areas of ambiguity, and I predict a great deal of controversy over early results.

Image: The more than 5,000 exoplanets confirmed in our galaxy so far include a variety of types – some that are similar to planets in our Solar System, others vastly different. Among these are a mysterious variety known as “super-Earths” because they are larger than our world and possibly rocky. Credit: NASA/JPL-Caltech.

But what about life beyond the primitive? I noticed a short essay by Seth Shostak recently published by the SETI Institute which delves into why we humans seem fixated on finding not just exo-biology but exo-intelligence. Shostak digs into the act of exploration itself as a justification for this quest, pointing out that experiments to find life around other stars are not science experiments as much as searches. After all, there is no way to demonstrate that life does not exist, so the idea of a profoundly biologically-infused universe is not something that any astronomer can falsify.

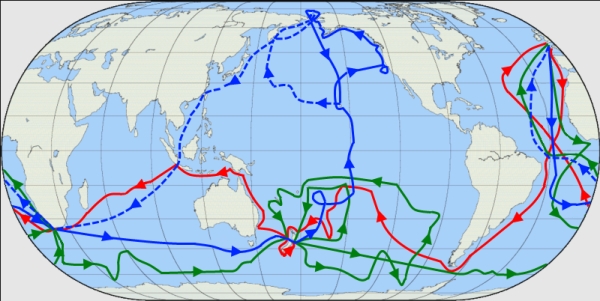

So is exploration, rather than science, a justification for SETI? Surely the answer is yes. Exploration usually mixes with commercial activity – Shostak’s example is the voyages of James Cook, who served the British admiralty by looking for trade routes and mapping hitherto uncharted areas of the southern ocean. Was there a new continent to be found somewhere in this immensity, a Terra Australis, as some cartographers had been placing on maps to balance the land-heavy northern hemisphere against the south? The idea was ancient but still had life in Cook’s time.

In our parlous modern world, we make much of the downside of enterprises once considered heroic, noting their depredations in the name of commerce and empire. But we shouldn’t overlook the scope of their accomplishment. Says Shostak:

Exploration has always been important, and its practical spin-offs are often the least of it. None of the objectives set by the English Admiralty for Cook’s voyages was met. And yes, the exploration of the Pacific often left behind death, disease and disruption. But two-and-a-half centuries later, Cook’s reconnaissance still has the power to stir our imagination. We thrill to the possibility of learning something marvelous, something that no previous generation knew.

Image: The routes of Captain James Cook’s voyages. The first voyage is shown in red, second voyage in green, and third voyage in blue. The route of Cook’s crew following his death is shown as a dashed blue line. Credit: Wikimedia Commons / Jon Platek. CC BY-SA 3.0.

Shostak’s mention of Cook reminds me of the Conference on Interstellar Migration, held way back in 1983 at Los Alamos, where anthropologist Ben Finney and astrophysicist Eric Jones, who had organized the interdisciplinary meeting, discussed humans as what they called “The Exploring Animal.” Like Konrad Lorenz, Finney and Jones saw the exploratory urge as an outcome of evolution that inevitably pushed people into new places out of innate curiosity. The classic example, discussed by the duo in a separate paper, was the peopling of the Pacific in waves of settlement, as these intrepid sailors set off, navigating by the stars, the wind, the ocean swells, and the flight of birds.

The outstanding achievement of the Stone Age? Finney and Jones thought so. In my 2004 book Centauri Dreams, I reflected on how often the exploratory imperative came up as I talked with interstellar-minded writers, physicists and engineers:

The maddening thing about the future is that while we can extrapolate based on present trends, we cannot imagine the changes that will make our every prediction obsolete. It is no surprise to me that in addition to their precision and, yes, caution, there is a sense of palpable excitement among many of the scientists and engineers with whom I talked. Their curiosity, their sense of quest, is the ultimate driver for interstellar flight. A voyage of a thousand years seems unthinkable, but it is also within the span of human history. A fifty-year mission is within the lifetime of a scientist. Somewhere between these poles our first interstellar probe will fly, probably not in our lifetimes, perhaps not in this century. But if there was a time before history when the Marquesas seemed as remote a target as Alpha Centauri does today, we have the example of a people who found a way to get there.

I’ve argued before that exploration is not an urge that can be tamped down, nor is it one that needs to be exercised by a large percentage of the population to shape outcomes that can be profound. To return to the Cook era, most people involved in the voyages that took Europeans to the Pacific islands, Australia and New Zealand in those days were exceptions, the few who left what they knew behind (some, of course, were forced to go due to the legal apparatus of the time). The point is: It doesn’t take mass human colonization to be the driver for our eventual spread off-planet. It does take inspired and determined individuals, and history yields up no shortage of these.

The 1983 conference in Los Alamos is captured in the book Interstellar Migration and the Human Experience, edited by Ben R. Finney and Eric M. Jones (Berkeley: University of California Press, 1985), an essential title in our field.

A Hybrid Interstellar Mission Using Antimatter

Epsilon Eridani has always intrigued me because in astronomical terms, it’s not all that far from the Sun. I can remember as a kid noting which stars were closest to us – the Centauri trio, Tau Ceti and Barnard’s Star – wondering which of these would be the first to be visited by a probe from Earth. Later, I thought we would have quick confirmation of planets around Epsilon Eridani, since it’s a scant (!) 10.5 light years out, but despite decades of radial velocity data, astronomers have only found one gas giant, and even that confirmation was slowed by noise-filled datasets.

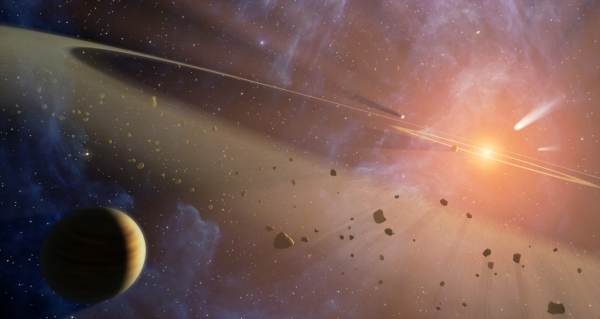

Even so, Epsilon Eridani b is confirmed. Also known as Ægir (named for a figure in Old Norse mythology), it’s in a 3.5 AU orbit, circling the star every 7.4 years, with a mass somewhere between 0.6 and 1.5 times that of Jupiter. But there is more: as Gerald Jackson points out in his new paper on using antimatter for deceleration into nearby star systems, the system also hosts two asteroid belts as well as another planet candidate.
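The orbit figures hang together under Kepler's third law, which in solar units reads P² = a³/M (period in years, semimajor axis in AU, stellar mass in solar masses, with the planet's mass neglected). The Python sketch below uses a stellar mass of about 0.82 solar masses for Epsilon Eridani. That is an approximate value assumed here, not taken from the post. The result lands close to the quoted 7.4 years, and the exact figure shifts with the adopted stellar mass and semimajor axis.

```python
import math


def orbital_period_years(a_au: float, star_mass_msun: float) -> float:
    """Kepler's third law in solar units: P^2 = a^3 / M (planet mass neglected)."""
    return math.sqrt(a_au ** 3 / star_mass_msun)


# 0.82 solar masses for Epsilon Eridani is an assumed, approximate value.
period = orbital_period_years(3.5, 0.82)
print(f"P ~ {period:.1f} years")
```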

Image: This artist’s conception shows what is known about the planetary system at Epsilon Eridani. Observations from NASA’s Spitzer Space Telescope show that the system hosts two asteroid belts, in addition to previously identified candidate planets and an outer comet ring. Epsilon Eridani is located about 10 light-years away in the constellation Eridanus. It is visible in the night skies with the naked eye. The system’s inner asteroid belt appears as the yellowish ring around the star, while the outer asteroid belt is in the foreground. The outermost comet ring is too far out to be seen in this view, but comets originating from it are shown in the upper right corner. Credit: NASA/JPL-Caltech/T. Pyle (SSC).

This is a young system, estimated at less than one billion years. For both Epsilon Eridani and Proxima Centauri, deceleration is crucial for entering the planetary system and establishing orbit around a planet. The amount of antimatter available will determine our deceleration options. Assuming a separate method of reaching Proxima Centauri in 97 years (perhaps beamed propulsion getting the payload up to 0.05c), we need 120 grams of antiproton mass to brake into the system. A 250-year mission to Epsilon Eridani at this velocity would require the same 120 grams.
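For comparison, pure coasting time at 0.05c is simply distance over speed, as the Python sketch below shows. The 4.24 light-year distance to Proxima Centauri is a standard value supplied here, and 10.5 light years for Epsilon Eridani comes from earlier in the post. The coast-only results (about 85 and 210 years) fall short of the quoted 97 and 250 years, presumably because the mission figures include acceleration, deceleration and other assumptions not spelled out here. Time dilation is negligible at this speed (the Lorentz factor is about 1.001).

```python
def cruise_years(distance_ly: float, speed_c: float) -> float:
    """Coast time in years: distance in light-years over speed as a fraction of c."""
    return distance_ly / speed_c


for name, d_ly in (("Proxima Centauri", 4.24), ("Epsilon Eridani", 10.5)):
    print(f"{name}: {cruise_years(d_ly, 0.05):.0f} years at a constant 0.05c")
```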

Thus we consider the twin poles of difficulty when it comes to antimatter, the first being how to produce enough of it (current production levels are measured in nanograms per year), the second how to store it. Jackson, who has long championed the feasibility of upping our antimatter production, thinks we need to reach 20 grams per year before we can start thinking seriously about flying one of these missions. But as both he and Bob Forward have pointed out, there are reasons why we produce so little now, and reasons for optimism about moving to a dedicated production scenario.

Past antiproton production was constrained by the need to produce antiproton beams for high energy physics experiments, requiring strict longitudinal and transverse beam characteristics. Their solution was to target a 120 GeV proton beam into a nickel target [41] followed by a complex lithium lens [42]. The world record for the production of antimatter is held by the Fermilab. Antiproton production started in 1986 and ended in 2011, achieving an average production rate of approximately 2 ng/year [43]. The record instantaneous production rate was 3.6 ng/year [44]. In all, Fermilab produced and stored 17 ng of antiprotons, over 90% of the total planetary production.
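To put the 120-gram requirement against these production rates, the Python sketch below divides the target mass by a steady production rate. It compares Jackson's proposed 20 g/yr facility with Fermilab's historical average of about 2 ng/yr, and also compares 120 grams with Fermilab's 17-nanogram lifetime total. All inputs come from the text above; the helper name is illustrative.

```python
NG_PER_G = 1e9  # nanograms per gram


def years_to_accumulate(target_g: float, rate_g_per_year: float) -> float:
    """Years of steady production needed to stockpile `target_g` grams."""
    return target_g / rate_g_per_year


TARGET_G = 120.0  # antiprotons needed to brake into the target system
print(f"at 20 g/yr: {years_to_accumulate(TARGET_G, 20.0):.0f} years")
print(f"at 2 ng/yr: {years_to_accumulate(TARGET_G, 2.0 / NG_PER_G):.1e} years")
print(f"120 g is {TARGET_G / (17.0 / NG_PER_G):.1e} times Fermilab's 17 ng total")
```

Six years at the proposed rate, against some sixty billion years at the historical one: the gap is roughly ten orders of magnitude.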

Those are sobering numbers. Can we cast antimatter production in a different light? Jackson suggests using our accelerators in a novel way, colliding two proton beams in an asymmetric collider scenario, in which one beam is given more energy than the other. The result will be a coherent antiproton beam that, moving downstream in the collider, is subject to further manipulation. This colliding beam architecture makes for a less expensive accelerator infrastructure and sharply reduces the costs of operation.

The theoretical costs for producing 20 grams of antimatter per year are calculated under the assumption that the antimatter production facility is powered by a square solar array 7 km x 7 km in size that would be sufficient to supply all of the needed 7.6 GW of facility power. Using present-day costs for solar panels, the capital cost for this power plant comes in at $8 billion (i.e., the cost of 2 SLS rocket launches). $80 million per year covers operation and maintenance. Here’s Jackson on the cost:

…3.3% of the proton-proton collisions yields a useable antiproton, a number based on detailed particle physics calculations [45]. This means that all of the kinetic energy invested in 66 protons goes into each antiproton. As a result, the 20 g/yr facility would theoretically consume 6.7 GW of electrical power (assuming 100% conversion efficiencies). Operating 24/7 this power level corresponds to an energy usage of 67 billion kW-hrs per year. At a cost of $0.01 per kW-hr the annual operating cost of the facility would be $670 million. Note that a single Gerald R. Ford-class aircraft carrier costs $13 billion! The cost of the Apollo program adjusted for 2020 dollars was $194 billion.
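The power-to-energy-to-cost chain in this passage can be checked with a few lines of Python, assuming continuous operation over an 8,760-hour year and the $0.01/kWh rate quoted. Running 6.7 GW for a full year gives roughly 59 billion kWh and about $590 million. The quoted 67 billion kWh and $670 million appear to match the 7.6 GW facility power cited earlier instead, though the paper's exact bookkeeping isn't spelled out here. The sketch also reports the implied average electrical energy per antiproton. The function names are illustrative.

```python
HOURS_PER_YEAR = 8_760
PROTON_MASS_G = 1.67262e-24  # an antiproton has the proton's mass
J_PER_GEV = 1.602177e-10


def annual_energy_kwh(power_gw: float) -> float:
    """Energy used in a year of continuous operation, in kWh."""
    return power_gw * 1e6 * HOURS_PER_YEAR  # GW -> kW, times hours per year


def annual_cost_usd(power_gw: float, usd_per_kwh: float = 0.01) -> float:
    """Electricity bill for a year of continuous operation."""
    return annual_energy_kwh(power_gw) * usd_per_kwh


def energy_per_antiproton_gev(power_gw: float, grams_per_year: float = 20.0) -> float:
    """Average electrical energy spent per antiproton produced, in GeV."""
    antiprotons = grams_per_year / PROTON_MASS_G
    joules = annual_energy_kwh(power_gw) * 3.6e6  # kWh -> J
    return joules / antiprotons / J_PER_GEV


for gw in (6.7, 7.6):
    print(f"{gw} GW: {annual_energy_kwh(gw) / 1e9:.1f} billion kWh/yr, "
          f"${annual_cost_usd(gw) / 1e6:.0f}M/yr, "
          f"{energy_per_antiproton_gev(gw):.0f} GeV per antiproton")
```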

Science Along the Way

Launching missions that take decades, and in some cases centuries, to reach their destination calls for good science return wherever possible, and Jackson argues that an interstellar mission will determine a great deal about its target star just by aiming for it. Past missions like New Horizons could rely on the positions of targets like Pluto and Arrokoth being programmed into the spacecraft’s computers, with that preliminary positioning information derived from Earth-based observation. Our interstellar craft will need more advanced tools. It will have to be capable of making its own astrometric observations, sending its calculations to the propulsion system for deceleration into the target system and orbital insertion, thus refining exoplanet parameters on the fly.

Remember that what we are considering is a hybrid mission, using one form of propulsion to attain interstellar cruise velocity, and antimatter as the method for deceleration. You might recall, for example, the starship ISV Venture Star in the film Avatar, which uses both antimatter engines and a photon sail. What Jackson has added to the mix is a deep dive into the possibilities of antimatter for turning what would have been a flyby mission into a long-lasting planet orbiter.

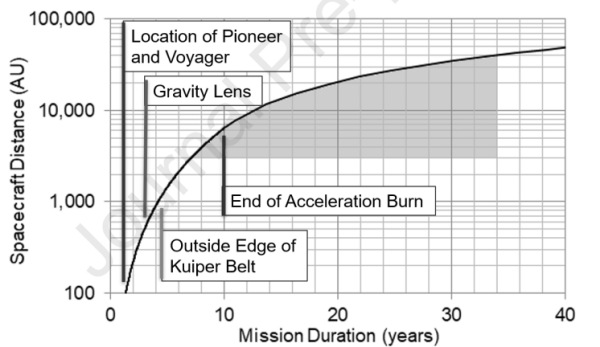

Let’s consider what happens along the line of flight as a spacecraft designed with these methods makes its way out of the Solar System. If we take a velocity of 0.02c, our spacecraft passes the outgoing Voyager and Pioneer spacecraft in two years, and within three more years it enters the Sun’s gravitational lensing region, which begins at about 550 AU. A mere five years has taken the vehicle through the Kuiper Belt and moved it out toward the inner Oort Cloud, where little is currently known about such things as the actual density distribution of Oort objects as a function of radius from the Sun. We can also expect to gain data on any comparable cometary clouds around Proxima Centauri or Epsilon Eridani as the spacecraft continues its journey.

By Jackson’s calculations, when we’re into the seventh year of such a mission, we are encountering Oort Cloud objects at a pretty good clip, with an estimated 450 Oort objects within 0.1 AU of the trajectory based on current assumptions. With the craft moving at 1 AU every 5.6 hours, we can extrapolate an encounter rate of one object per month over the three decades the craft takes to transit this region. Jackson also notes that the mission will return a wealth of data on the interstellar medium, including the Local Interstellar Cloud: particle spectra, galactic cosmic ray spectra, dust density distributions, and interstellar magnetic field strength and direction.

Image: This is Figure 7 from the paper. Caption: Potential early science return milestones for a spacecraft undergoing a 10-year acceleration burn with a cruise velocity of 0.02c. Credit: Gerald Jackson.
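The Oort Cloud encounter estimate is easy to check. The sketch below is ours, not Jackson’s. It assumes a constant speed along a straight line and takes the 450 objects and the three-decade transit from the text. Spreading 450 encounters over 360 months gives about 1.25 per month, in line with “one object per month.” At exactly 0.02c, the time to cover 1 AU comes out near 6.9 hours. The 5.6-hour figure corresponds to roughly 0.025c, so the two numbers imply slightly different speed assumptions.

```python
AU_LIGHT_SECONDS = 499.005   # light-travel time across 1 AU, s


def hours_per_au(beta: float) -> float:
    """Hours needed to cover 1 AU at a constant speed of beta * c."""
    return AU_LIGHT_SECONDS / beta / 3600.0


def monthly_encounter_rate(n_objects: int, years: float) -> float:
    """Average close encounters per month, spread evenly over the transit."""
    return n_objects / (years * 12.0)


print(f"{hours_per_au(0.02):.1f} h per AU at 0.02c")     # 6.9
print(f"{hours_per_au(0.025):.1f} h per AU at 0.025c")   # 5.5
print(f"{monthly_encounter_rate(450, 30):.2f} encounters per month")  # 1.25
```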

It’s interesting to compare science return over time with what we’ve achieved with the Voyager missions. Voyager 2 reached Jupiter about two years after its 1977 launch and passed Saturn after four. Reaching Uranus took twice that long (8.4 years into the mission), while Neptune came after 12. Voyager 2 crossed the heliopause after 41.2 years of flight, and as we all know, both Voyagers are still returning data. For purposes of comparison, the Voyager 2 mission cost $865 million in 1973 dollars.

Thus, while funding missions demands early return on investment, there should be abundant opportunity for science in the decades of interstellar flight between the Sun and Proxima Centauri, with surprises along the way, just as the Voyagers occasionally throw us a curveball. Consider the twists and wrinkles detected in the Sun’s magnetic field, where lines of magnetic force criss-cross and reconnect to produce a kind of ‘foam’ of magnetic bubbles, all detected over a decade ago in Voyager data. The long-term return on investment is considerable, as it includes years of up-close exoplanet data, with orbital operations around, for example, Proxima Centauri b.

It will be interesting to see Jackson’s final NIAC report, which he tells me will be complete within a week or so. As to the future, the current paper offers a glimpse of one aspect of it, revisiting what the original NIAC project description called “a powerful LIDAR system…to illuminate, identify and track flyby candidates” in the Oort Cloud. But as the paper notes, this now seems impractical:

One preliminary conclusion is that active interrogation methods for locating 10 km diameter objects, for example with the communication laser, are not feasible even with megawatts of available electrical power.

We’ll also find out in the NIAC report whether Jackson’s idea of using gram-scale chipcraft for closer examination of, say, Oort Cloud objects has stood up to scrutiny in the subsequent work. This hybrid mission concept using antimatter is evolving rapidly, and what lies ahead, he tells me in a recent email, is a series of papers expanding on antimatter production and storage and further examining both the electrostatic trap and the electrostatic nozzle. Because drastically increasing antimatter production and learning to make the most of small amounts are both critical to our hopes of someday achieving antimatter propulsion, I’ll be tracking this report closely.

Antimatter-driven Deceleration at Proxima Centauri

Although I’ve often seen Arthur Conan Doyle’s Sherlock Holmes cited in various ways, I hadn’t chased down the source of this famous quote: “When you have eliminated all which is impossible, then whatever remains, however improbable, must be the truth.” Gerald Jackson’s new paper identifies the story as Doyle’s “The Adventure of the Blanched Soldier,” which somehow escaped my attention when I read through the Sherlock Holmes corpus a couple of years back. I’m a great admirer of Doyle and love both Holmes and much of his other work, so it’s good to get this citation straight.

As I recall, Spock quotes Holmes to this effect in one of the Star Trek movies; this site’s resident movie buffs will know which one, but I’ve forgotten. In any case, a Star Trek reference comes into useful play here because what Jackson (Hbar Technologies, LLC) is writing about is antimatter, a futuristic thing indeed, but also in Jackson’s thinking a real candidate for a propulsion system that involves using small amounts of antimatter to initiate fission in depleted uranium. The latter is a by-product of the enrichment of natural uranium to make nuclear fuel.

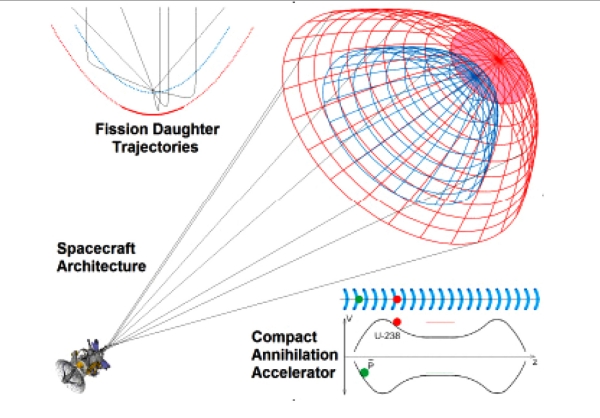

Both thrust and electrical power emerge from this, and in Jackson’s hands, we are looking at a mission architecture that can not only travel to another star – the paper focuses on Proxima Centauri as well as Epsilon Eridani – but also decelerate. Jackson has been studying the matter for decades now, and has presented antimatter-based propulsion concepts for interstellar flight at, among other venues, symposia of the Tennessee Valley Interstellar Workshop (now the Interstellar Research Group). In the new paper, he looks at a 10-kilogram scale spacecraft with the capability of deceleration as well as a continuing source of internal power for the science mission.

Image: Depiction of the deceleration of interstellar spacecraft utilizing antimatter concept. Credit: Gerald Jackson.

On the matter of the impossible, the quote proves useful. Jackson applies it to the propulsion concepts we usually consider for an interstellar crossing. This is worth quoting:

Applying this Holmes Method to space propulsion concepts for exoplanet exploration, in this paper the term “impossible” is re-interpreted arbitrarily to mean any technology that requires: 1) new physics that has not been experimentally validated; 2) mission durations in excess of one thousand years; and 3) material properties that are not currently demonstrated or likely to be achievable during this century. For example, “warp drives” can currently be classified as impossible by criterion #1, and chemical rockets are impossible due to criterion #2. Breakthrough Starshot may very well be impossible according to criterion #3 simply because of the needed material properties of the accelerating sail that must survive a gigawatt laser beam for 30 minutes. Though traditional nuclear thermal rockets fail due to criterion #2, specific fusion-based propulsion systems might be feasible if breakeven nuclear fusion is ever achieved.
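Criterion #2 is easy to make concrete. This rough sketch assumes a straight-line cruise at constant speed to Proxima Centauri, 4.24 light-years away. It uses a Voyager-like 17 km/s as a benchmark for what chemical rockets plus gravity assists have delivered. At that speed, the trip takes roughly 75,000 years. Getting there in under a thousand years requires an average of about 1,270 km/s, or roughly 0.42 percent of lightspeed.

```python
LY_KM = 9.4607e12             # one light-year, km
C_KM_S = 299_792.458          # speed of light, km/s
SECONDS_PER_YEAR = 3.15576e7  # Julian year, s
PROXIMA_LY = 4.24             # distance to Proxima Centauri, light-years


def cruise_years(speed_km_s: float, distance_ly: float = PROXIMA_LY) -> float:
    """Travel time at constant speed, ignoring acceleration and deceleration."""
    return distance_ly * LY_KM / speed_km_s / SECONDS_PER_YEAR


def min_speed_km_s(max_years: float, distance_ly: float = PROXIMA_LY) -> float:
    """Constant speed needed to cover distance_ly within max_years."""
    return distance_ly * LY_KM / (max_years * SECONDS_PER_YEAR)


print(f"at 17 km/s: {cruise_years(17.0):,.0f} years")
v = min_speed_km_s(1000.0)
print(f"under 1,000 years needs {v:,.0f} km/s ({v / C_KM_S:.2%} of c)")
```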

Can antimatter supply the lack? The kind of mission Jackson has been analyzing uses antimatter to initiate fission, so we could consider this a hybrid design, one with its roots in the ‘antimatter sail’ Jackson and Steve Howe have described in earlier technical papers. For the background on this earlier work, you can start by looking at Antimatter and the Sail, one of a number of articles here on Centauri Dreams that has explored the idea.

In this paper, Jackson recasts the antimatter sail concept as a deceleration method, handing off launch propulsion to other technologies. The sail’s antimatter-induced fission is not used only to decelerate, though. It also provides a crucial source of power for the decades-long science mission at the target.

If we leave the launch and long cruise of the mission to other technologies, the laser-beaming methods we’ve looked at in other contexts could become part of this mission. But even if Breakthrough Starshot can develop a model for a fast flyby of a nearby star (moving at a remarkable 20 percent of lightspeed) via a laser array, various problems remain, especially in data acquisition and return. On the former, the issue is that a flyby at these velocities allows precious little time at the target. Successful deceleration would allow in situ observations from a stable exoplanet orbit.
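How little is “precious little”? The sketch below assumes a straight, unbraked pass through the star’s position at 0.2c. It counts the minutes spent inside a sphere of a given radius around the star. The craft spends about 83 minutes within 1 AU. It spends only about four minutes within 0.05 AU, which is roughly the orbital distance of Proxima b.

```python
AU_KM = 149_597_870.7      # astronomical unit, km
C_KM_S = 299_792.458       # speed of light, km/s


def minutes_within(radius_au: float, beta: float) -> float:
    """Minutes a craft spends inside a sphere of radius_au around the star,
    assuming a straight, unbraked pass through the center at speed beta * c."""
    return 2.0 * radius_au * AU_KM / (beta * C_KM_S) / 60.0


print(f"within 1 AU:    {minutes_within(1.0, 0.2):.0f} min")   # 83
print(f"within 0.05 AU: {minutes_within(0.05, 0.2):.1f} min")  # 4.2
```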

That’s a breathtaking idea, given how much energy we’re thinking about using to propel a beamed-sail flyby, but Jackson believes it’s a feasible mission objective. He gives a nod to other proposed deceleration methods, which have included using a ‘magnetic sail’ (magsail) to brake against the interstellar medium and the target star’s stellar wind. The problem is that the interstellar medium is too tenuous to slow a craft moving at a substantial percentage of lightspeed enough for orbital insertion upon arrival. Jackson places the notion in the ‘impossible’ camp, whereas antimatter may come in under the wire as merely ‘improbable.’ That difference in degree, he believes, is well worth exploring.

The antimatter concept described generates a high specific impulse thrust, with the author noting that approximately 98 percent of antiprotons that stop within uranium induce fission. It turns out that antiproton annihilation on the nucleus of any uranium isotope – and that includes non-fissile U238 – induces fission. In Jackson’s design, about ten percent of the annihilation energy released is channeled into thrust.
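Why do fission daughters make a high-specific-impulse exhaust? This rough sketch uses assumed, textbook-style values for ordinary thermal-neutron fission, not figures from the paper. It takes about 170 MeV of kinetic energy shared between a light fragment of about 95 u and a heavy fragment of about 140 u. Antiproton-induced fission will differ somewhat. Each fragment moves at several percent of lightspeed, which corresponds to an ideal specific impulse on the order of 10⁶ seconds.

```python
import math

AMU_MEV = 931.494          # rest energy of one atomic mass unit, MeV
C_KM_S = 299_792.458       # speed of light, km/s
G0 = 9.80665               # standard gravity, m/s^2, used to express Isp in seconds

# Assumed, textbook-style thermal fission values (not from the paper):
TOTAL_KE_MEV = 170.0       # kinetic energy shared by the two fragments
LIGHT_U, HEAVY_U = 95.0, 140.0


def fragment_speed_km_s(ke_mev: float, mass_u: float) -> float:
    """Speed of a fragment with kinetic energy ke_mev, using the relativistic gamma."""
    gamma = 1.0 + ke_mev / (mass_u * AMU_MEV)
    beta = math.sqrt(1.0 - 1.0 / gamma**2)
    return beta * C_KM_S


# Equal and opposite momenta mean the kinetic energy splits inversely with mass
# (nonrelativistic approximation, adequate at these speeds).
ke_light = TOTAL_KE_MEV * HEAVY_U / (LIGHT_U + HEAVY_U)
ke_heavy = TOTAL_KE_MEV - ke_light

for name, ke, mass in (("light", ke_light, LIGHT_U), ("heavy", ke_heavy, HEAVY_U)):
    v = fragment_speed_km_s(ke, mass)
    isp_s = v * 1000.0 / G0
    print(f"{name} fragment: {ke:.0f} MeV, {v:,.0f} km/s, ideal Isp ~ {isp_s:.1e} s")
```

The fragments emerge in all directions, and only about ten percent of the energy ends up as thrust. For both reasons, the effective specific impulse of a real nozzle would fall well below these idealized values.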

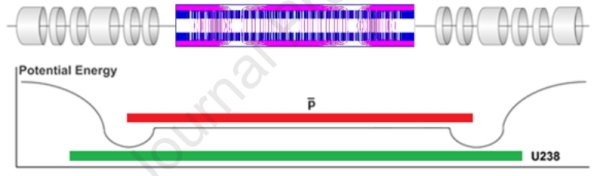

Jackson analyzes an architecture in which the uranium “propagates as a singly-charged atomic ion beam confined to an electrostatic trap.” The trap can be likened in its effects to what magnetic storage rings do when they confine particle beams, providing a stable confinement for charged particles. Antiprotons are sent in the same direction as the uranium ions, reaching the same velocity in the central region, where the matter/antimatter annihilation occurs. Because the uranium is in the form of a sparse cloud, the energetic fission ‘daughters’ escape with little energy loss.

Here is Jackson’s depiction of an electrostatic annihilation trap. In this design, both the positively charged uranium ions and the negatively charged antiprotons are confined.

Image: This is Figure 1 from the paper. Caption: Axial and radial confinement electrodes (top) and two-species electrostatic potential well (bottom) of a lightweight charged-particle trap that mixes U238 with antiprotons.

A workable design? The author argues that it is, saying:

Longitudinal confinement is created by forming an axial electrostatic potential well with a set of end electrodes indicated in figure 1. To accomplish the goal of having oppositely charged antiprotons and uranium ions traveling together for the majority of their motion back and forth (left/right in the figure) across the trap, this electrostatic potential has a double-well architecture. This type of two-species axial confinement has been experimentally demonstrated [53].

The movement of antiprotons and uranium ions within the trap is complex:

The antiprotons oscillate along the trap axis across a smaller distance, reflected by a negative potential “hill”. In this reflection region the positively charged uranium ions are accelerated to a higher kinetic energy. Beyond the antiproton reflection region a larger positive potential hill is established that subsequently reflects the uranium ions. Because the two particle species must have equal velocity in the central region of the trap, and the fact that the antiprotons have a charge density of -1/nucleon and the uranium ions have a charge density of +1/(238 nucleons), the voltage gradient required to reflect the uranium ions is roughly 238 times greater than that required to reflect the antiprotons.
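The factor of roughly 238 can be checked in a few lines. At a common speed v, a particle’s kinetic energy is m v²/2. A particle of charge q stops after climbing a potential of KE/|q|, so the required potential scales as m/|q|. This sketch assumes nonrelativistic speeds and a singly charged uranium ion. Using actual masses rather than nucleon counts gives about 236, consistent with “roughly 238.” The 1 kV antiproton hill is a hypothetical value chosen for illustration.

```python
# Masses in unified atomic mass units (u).
PBAR_MASS_U = 1.007276                     # antiproton, same as the proton
U238_ION_MASS_U = 238.050788 - 0.000549    # U-238 atom minus one electron (U+ ion)


def reflecting_potential_ratio(m1_u: float, q1: int, m2_u: float, q2: int) -> float:
    """Ratio of the potential hills needed to stop species 2 and species 1 when both
    move at the same (nonrelativistic) speed.

    Kinetic energy is m v^2 / 2 and a charge q stops after climbing KE / |q| volts,
    so at a common speed the required potential scales as m / |q|.
    """
    return (m2_u / abs(q2)) / (m1_u / abs(q1))


ratio = reflecting_potential_ratio(PBAR_MASS_U, -1, U238_ION_MASS_U, +1)
print(f"U+ / antiproton reflecting potential ratio: {ratio:.0f}")   # 236

# Hypothetical example: if a 1 kV hill reflects the antiprotons,
# the uranium ions need a hill of roughly this many kV.
print(f"{1.0 * ratio:.0f} kV")
```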

The design must reckon with the fact that the fission daughters escape the trap in all directions, which is compensated for through a focusing system in the form of an electrostatic nozzle that produces a collimated exhaust beam. The author is working with a prototype electrostatic trap coupled to an electrostatic nozzle to explore the effects of lower-energy electrons produced by the uranium-antiproton annihilation events as well as the electrostatic charge distribution within the fission daughters.

Decelerating at Proxima Centauri in this scheme involves a propulsive burn lasting ten years as the craft sheds kinetic energy on the long arc into the planetary system. By these calculations, a 200-year mission to Proxima requires 35 grams of total antiproton mass. Upping this to a 56-year mission moving at 0.1c demands 590 grams.
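To put the 35-gram and 590-gram figures in perspective, this sketch assumes that every antiproton annihilates with a single nucleon of essentially equal mass, so the energy released is 2mc². It leaves out the roughly 200 MeV from each induced fission, which understates the total by around ten percent. The ten-percent thrust fraction comes from the text. The TNT-equivalent conversion is our own.

```python
C_M_S = 299_792_458.0          # speed of light, m/s
J_PER_MEGATON_TNT = 4.184e15   # energy of one megaton of TNT, J
THRUST_FRACTION = 0.10         # "about ten percent" of annihilation energy goes to thrust


def annihilation_energy_j(antiproton_grams: float) -> float:
    """Energy released if each antiproton annihilates with one nucleon of (nearly)
    equal mass: 2 m c^2. The ~200 MeV from each induced fission is ignored."""
    return 2.0 * (antiproton_grams / 1000.0) * C_M_S**2


for label, grams in (("200-year mission", 35.0), ("56-year mission at 0.1c", 590.0)):
    total = annihilation_energy_j(grams)
    print(f"{label}: {total:.2e} J (~{total / J_PER_MEGATON_TNT:.1f} Mt TNT), "
          f"~{THRUST_FRACTION * total:.1e} J into thrust")
```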

Addendum: I wrote ’35 kilograms’ in the above paragraph before I caught the error. Thanks, Alex Tolley, for pointing this out!

Current antimatter production remains in the nanogram range. What to do? In work for NASA’s Innovative Advanced Concepts office, Jackson has argued that despite minuscule current production, antimatter can be vastly ramped up. He believes that production of 20 grams of antimatter per year is a feasible goal. More on this issue, to which Jackson has been devoting his life for many years now, in the next post.
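Against the 20 g/yr production goal, the mission requirements translate directly into production time. The 35 grams for a 200-year Proxima mission would take under two years to produce, and the 590 grams for the faster mission about three decades. The 1 ng/yr “current” rate below is a hypothetical round number used only for scale. The text says only that present production is in the nanogram range.

```python
TARGET_RATE_G_PER_YR = 20.0                  # production goal from the text
HYPOTHETICAL_CURRENT_RATE_G_PER_YR = 1e-9    # assumption: ~1 ng/yr, for scale only


def years_to_produce(grams: float, rate_g_per_yr: float) -> float:
    """Years of production needed to accumulate the given antiproton mass."""
    return grams / rate_g_per_yr


for grams in (35.0, 590.0):
    at_goal = years_to_produce(grams, TARGET_RATE_G_PER_YR)
    at_now = years_to_produce(grams, HYPOTHETICAL_CURRENT_RATE_G_PER_YR)
    print(f"{grams:.0f} g: {at_goal:.2f} yr at 20 g/yr, {at_now:.1e} yr at 1 ng/yr")

scale_up = TARGET_RATE_G_PER_YR / HYPOTHETICAL_CURRENT_RATE_G_PER_YR
print(f"implied scale-up factor: {scale_up:.0e}")
```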

The paper is Jackson, “Deceleration of Exoplanet Missions Utilizing Scarce Antimatter,” in press at Acta Astronautica (2022). Abstract.