Centauri Dreams

Imagining and Planning Interstellar Exploration

Proxima Centauri b: Artificial Illumination as a Technosignature

Our recent look at the possibility of technosignatures at Alpha Centauri is now supplemented with a new study on the detectability of artificial lights on Proxima Centauri b. The planet is in the habitable zone, roughly similar in mass to the Earth, and of course, it orbits the nearest star, making it a world we can hope to learn a great deal more about as new instruments come online. The James Webb Space Telescope is certainly one of these, but the new work also points to LUVOIR (Large UV/Optical/IR Surveyor), a multi-wavelength space-based observatory with possible launch in 2035.

Authors Elisa Tabor (Stanford University) and Avi Loeb (Harvard) point out that a (presumably) tidally locked planet with a permanent nightside would need artificial lighting to support a technological culture. As we saw in Brian Lacki’s presentation at Breakthrough Discuss (see Alpha Centauri and the Search for Technosignatures), coincident epochs for civilizations developing around neighboring stars are highly unlikely, making this the longest of longshots. On the other hand, a civilization arising elsewhere could be detectable through its artifacts on worlds it has chosen to study.

We learn by asking questions and looking at data. In this case, asking how we would detect artificial light on Proxima b involves factoring in the planet’s radius, which is on the order of 1.3 Earth radii (1.3 R⊕), as well as that of Proxima Centauri itself, which is 0.14 that of the Sun (0.14 R☉). We also know the planet is in an 11-day orbit at 0.05 AU. Other factors influencing its lightcurve would be its albedo and orbital inclination. Tabor and Loeb use recent work on Proxima Centauri c’s inclination (citation below) to ballpark an orbital inclination for the inner world.
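As a rough consistency check on the orbital figures quoted here, Kepler's third law can be run backwards to see what stellar mass an 11-day orbit at 0.05 AU implies. This is only a sketch: the function name is hypothetical, the planet's mass is neglected, and the inputs are the rounded values from the text, so the result is approximate.

```python
def implied_stellar_mass(period_days: float, semi_major_axis_au: float) -> float:
    """Kepler's third law in solar units: M [solar masses] = a^3 [AU] / P^2 [years].

    Assumes the planet's mass is negligible next to the star's.
    """
    period_years = period_days / 365.25
    return semi_major_axis_au ** 3 / period_years ** 2


# Rounded values quoted in the text: an 11-day orbit at 0.05 AU.
mass = implied_stellar_mass(11.0, 0.05)
print(f"Implied stellar mass: {mass:.2f} solar masses")
```

The answer comes out at a little over a tenth of a solar mass, which is what we would expect for a small red dwarf like Proxima Centauri.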

Image: Northern Italy at night. City lights are an obvious technosignature, but can we detect them at interstellar distances? Credit: NASA/ESA.

The question then becomes whether soon-to-be-flown technology like the James Webb Space Telescope could detect artificial lights if they were present at Proxima b. The authors detail in this paper their calculation of the lightcurves that would be involved, using two scenarios: artificial lighting with the same spectrum found in LEDs on Earth, and a narrower spectrum leading to the “same proportion of light as the total artificial illumination on Earth.” The calculations draw on an open source code called Exoplanet Analytic Reflected Lightcurve (EARL), and likewise deploy the JWST Exposure Time Calculator (ETC) to estimate the feasibility of detection.

What Loeb and Tabor find is that JWST could detect LED lighting “making up 5% of stellar power” with 85 percent confidence — in other words, 5% of the power the planet would receive at its orbital distance from Proxima Centauri. That would mean our space telescope could find a level of illumination from LEDs that is 500 times more powerful than found on Earth.

To detect the current level of artificial illumination (including but not limited to LEDs) on Earth, the spectral band would have to be 10³ times narrower. “In either case,” the authors add, “JWST will thus allow us to narrow down the type of artificial illumination being used.”

All of this demands maximum performance from JWST’s Near InfraRed Spectrograph (NIRSpec). Much depends upon what methods a civilization at Proxima b might use. From the paper:

Proxima b is tidally locked if its orbit has an eccentricity below 0.06, where for reference, the eccentricity of the Earth’s orbit is 0.017 (Ribas et al. 2016). If Proxima b has a permanent day and nightside, the civilization might illuminate the nightside using mirrors launched into orbit or placed at strategic points (Korpela et al. 2015). In that case, the lights shining onto the permanent nightside should be extremely powerful, and thus more likely to be detected with JWST.

That last comment calls to mind Karl Schroeder’s orbital mirrors lighting up brown dwarf planets in his novel Permanence (2002). A snip from the book, referring to a brown dwarf planet named Treya as seen by the protagonist, Rue:

A pinprick of light appeared on the limb of Treya and quickly grew into a brilliant white star. This seemed to move out and away from Treya, which was an illusion caused by Rue’s own motion. Treya’s artificial sun did not move, but stayed at the Lagrange point, bathing an area of the planet eighty kilometers in diameter with daylight. The sun was a sphere of tungsten a kilometer across. It glowed with incandescence from concentrated infrared light, harvested from Erythrion [the brown dwarf] by hundreds of orbiting mirrors. If it were turned into laser power, this energy could reshape Treya’s continents— or launch interstellar cargoes.

A flat line of light appeared on Treya’s horizon. It quickly grew into a disk almost too bright to look at. When Rue squinted at it she could make out white clouds, blue lakes, and the mottled ochre and green of grassland and forests. The light was bright enough to wash away the aurora and even make the stars vanish. Down there, she knew, the skies would be blue.

Back to Proxima b: The LUVOIR instrument should be able to confirm the presence or lack of artificial illumination with greater precision, serving as a follow-up to JWST observations with significantly higher performance. Loeb has previously worked with Manasvi Lingam to show the likelihood of detecting a spectral edge in the reflectance of photovoltaic cells on the planet’s dayside, so in terms of technosignatures, we’re learning what we will be able to identify based on a growing set of scenarios for any civilization there.

The paper is Tabor & Loeb, “Detectability of Artificial Lights from Proxima b,” (preprint). The paper on photovoltaic cells is Loeb & Lingam, “Natural and Artificial Spectral Edges in Exoplanets,” Monthly Notices of the Royal Astronomical Society Vol. 470, Issue 1 (September 2017), L82-L86 (abstract). The work on Proxima c’s orbital inclination is Kervella, Arenou & Schneider, “Orbital inclination and mass of the exoplanet candidate Proxima c,” Astronomy & Astrophysics Vol. 635, L14 (March 2020). Abstract / Full Text.

Exploring Ice Giant Oceans

Laboratory work on Earth is, as we saw yesterday, leading to hypotheses about how planets form and the effect of these processes on subsequent life. Whether in our own outer Solar System or orbiting other stars, planets in the ‘ice giant’ category, like Uranus and Neptune, remain mysterious, with Voyager 2’s flybys the only spacecraft encounters with either world. We also know that sub-Neptune planets are common, many of these doubtless sharing the characteristics of their larger namesake.

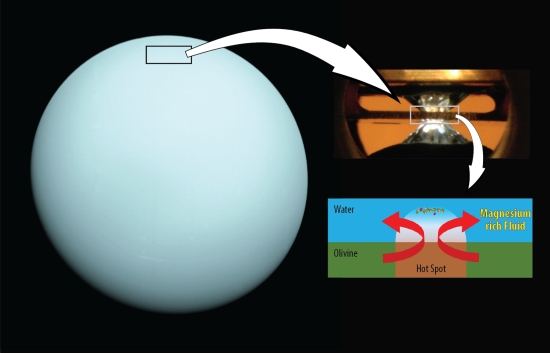

Thus recent experiments probing ice giant interiors catch my eye this morning. Involving an international team of collaborators, the work looks at the interactions between water and rock that we would expect to find in the extreme conditions inside an ice giant. Planets like Uranus and Neptune are thought to house most of their mass in a deep water layer, a dense fluid overlaying a rocky core, a sharp departure from terrestrial worlds. What happens at that interface is ripe for examination.

The experiments were performed at Arizona State University’s DanShimLab, which is dedicated to the study of planetary materials at a wide range of pressures and temperatures using diamond-anvil and shockwave techniques. To probe this environment, the scientists immersed the rock-forming minerals olivine and ferropericlase in water and then compressed them to high pressures using a diamond-anvil. Heating the sample with a laser, the team could then track the water/mineral reaction under these conditions by way of X-ray measurements.

The result: High concentrations of magnesium, with implications for the composition of oceans much different from Earth’s, as study co-author Sang-Heon Dan Shim (Arizona State University) explains:

“We found that magnesium becomes much more soluble in water at high pressures. In fact, magnesium may become as soluble in the water layers of Uranus and Neptune as salt is in Earth’s ocean. If an early dynamic process enabled a rock-water reaction in these exoplanets, the topmost water layer may be rich in magnesium, possibly affecting the thermal history of the planet.”

Image: A diamond-anvil (top right) and laser were used in the lab on a sample of olivine to reach the pressure-temperature conditions expected at the top of the water layer beneath the hydrogen atmosphere of Uranus (left). In this experiment, the magnesium in olivine dissolved in the water. Credit: Shim/ASU.

Laser-heated diamond anvil cells can create pressures in the range of 1-5,000,000 bars by compressing materials between diamond ‘anvils’ that are transparent to X-rays, infrared and visible light. The lab’s laser heating systems can take samples to 1,000-5,000 K, all by way of exploring how materials behave under conditions thought to exist in planetary interiors. Thus the range of such laboratory work extends through rocky worlds and into the realm of not just the ice giants but gas giants like Jupiter.

Given the lab’s finding of high concentrations of magnesium under conditions of high pressure and temperature, Shim argues that the mineral may become as soluble in ice giant interiors as salt is in Earth’s oceans. Conceivably, this finding could explain why the atmosphere of Uranus is considerably colder than Neptune’s, for magnesium in larger amounts could block heat from escaping the interior. “This magnesium-rich water may act like a thermal blanket for the interior of the planet,” says Shim.

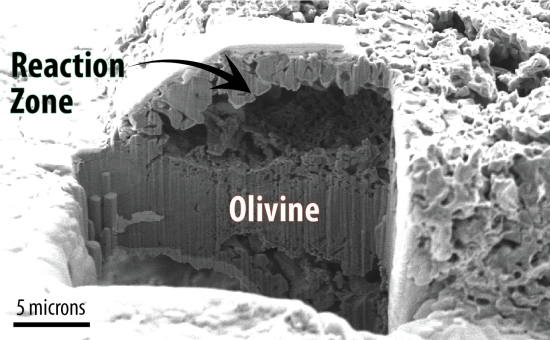

Image: An electron microscopy image of the olivine sample shows a large empty dome structure where magnesium under high-pressure water precipitated as magnesium oxide. Credit: Kim et al.

Oceans rich in magnesium may thus be common in ice giants, with a thick layer of water covering a rocky interior. Moreover, the idea that the interior of water worlds is sharply differentiated between rock and water has been challenged in recent work, with implications for the thermal evolution of these planets. To probe deeper into these matters, the study calls for an examination of other icy materials like CO2 and NH3, but note this cautionary remark in its conclusion:

Extrapolation of our results beyond the pressure range covered in our experiments should [be] treated with caution because of possible changes in the properties of H2O at very high pressures. Nevertheless, the models based on our experiments demonstrate that geochemical cycles and thermal history of water-rich planets could be sensitive to the size of the planet because of pressure-dependent chemical processes.

We have a useful methodology here to extend the study of the geochemical cycle on a range of planetary interiors. We have much to learn, for as the paper points out, the interactions of major rock-forming minerals at the interface between ocean and rock in ice giants have rarely been explored at the high pressures used in these experiments.

The paper is Kim et al., “Atomic-scale mixing between MgO and H2O in the deep interiors of water-rich planets,” Nature Astronomy 17 May 2021 (abstract).

Planet Formation Modes as a Key to Habitability

While a planet’s position in the habitable zone is thought critical for the development of life like ourselves, new work out of Rice University suggests an equally significant factor in planetary growth. Working at a high-pressure laboratory at the university, Damanveer Grewal and Rajdeep Dasgupta have explored how planets capture and retain key volatiles like nitrogen, carbon and water as they form. The team used nitrogen as a proxy for volatile distribution in a range of simulated protoplanets.

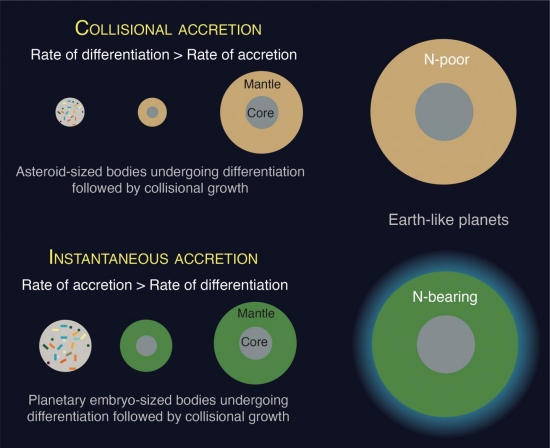

Two processes are under study here, the first being the accretion of material in the circumstellar disk into a protoplanet, and the rate at which it proceeds. The second is differentiation, as the protoplanet separates into layers ranging from a metallic core to a silicate shell and, finally, an atmospheric envelope. The interplay between these processes is found to determine which volatiles the subsequent planet retains.

Most of the nitrogen is found to escape into the atmosphere during differentiation and is then lost to space as the protoplanet cools or, perhaps, collides with other protoplanets during the turbulent era of planet formation. The data, however, demonstrate the likelihood of nitrogen remaining in the metallic core. Says Grewal:

“We simulated high pressure-temperature conditions by subjecting a mixture of nitrogen-bearing metal and silicate powders to nearly 30,000 times the atmospheric pressure and heating them beyond their melting points. Small metallic blobs embedded in the silicate glasses of the recovered samples were the respective analogs of protoplanetary cores and mantles.”

Nitrogen, the researchers learned, is distributed in different ways between the core, the molten silicate shell and the atmosphere, with the extent of this fractionation being governed by the size of the body. The takeaway: If the rate of differentiation is faster than the rate of accretion for planetary embryos of Moon or Mars-size, then the planets that form from them will not have accreted enough volatiles to support later life.

Earth’s path would have been different. The scientists believe that the building blocks of Earth grew quickly into planetary embryos before they finished differentiating, forming within one to two million years at the beginning of the Solar System. The slower rate of differentiation allowed nitrogen, and other volatiles, to be accreted. Adds Dasgupta:

“Our calculations show that forming an Earth-size planet via planetary embryos that grew extremely quickly before undergoing metal-silicate differentiation sets a unique pathway to satisfy Earth’s nitrogen budget. This work shows there’s much greater affinity of nitrogen toward core-forming metallic liquid than previously thought.”

Image: Nitrogen-bearing, Earth-like planets can be formed if their feedstock material grows quickly to around moon- and Mars-sized planetary embryos before separating into core-mantle-crust-atmosphere, according to Rice University scientists. If metal-silicate differentiation is faster than the growth of planetary embryo-sized bodies, then solid reservoirs fail to retain much nitrogen and planets growing from such feedstock become extremely nitrogen-poor. Credit: Illustration by Amrita P. Vyas/Rice University.

This work takes the emphasis off the stellar nebula and places volatile depletion in the context of processes within the rocky body in formation, especially the affinity of nitrogen toward metallic cores. Here’s how the paper sums it up:

…we show that protoplanetary differentiation can explain the widespread depletion of N in the bulk silicate reservoirs of rocky bodies ranging from asteroids to planetary embryos. Parent body processes rather than nebular processes were responsible for N (and possibly C) depleted character of the bulk silicate reservoirs of rocky bodies in the inner Solar System. A competition between rates of accretion versus rates of differentiation defines the N inventory of bulk planetary embryos, and consequently, larger planets. N budget of larger planets with protracted growth history can be satisfied if they accreted planetary embryos that grew via instantaneous accretion.

And the nebular conclusion:

Because most of the N in those planetary embryos resides in their metallic portions, the cores were the predominant delivery reservoirs for N and other siderophile volatiles like C. Establishing the N budget of the BSE [bulk silicate Earth] chiefly via the cores of differentiated planetary embryos from inner and outer Solar System reservoirs obviates the need of late accretion of chondritic materials as the mode of N delivery to Earth.

Rajdeep Dasgupta, by the way, is principal investigator for the NASA-funded CLEVER Planets project (one of the teams in the Nexus of Exoplanetary Systems Science — NExSS — research network). CLEVER Planets, according to its website, is “working to unravel the conditions of planetary habitability in the Solar System and other exoplanetary systems. The overarching theme of our research is to investigate the origin and cycles of life-essential elements (carbon, oxygen, hydrogen, nitrogen, sulfur, and phosphorus – COHNSP) in young rocky planets.”

All of which reminds us that the essential elements for life must be present no matter where a given planet exists in its star’s habitable zone.

The paper is Grewal et al., “Rates of protoplanetary accretion and differentiation set nitrogen budget of rocky planets,” Nature Geoscience 10 May 2021 (abstract / preprint).

Alpha Centauri and the Search for Technosignatures

Is there any chance we may one day find technosignatures around the nearest stars? If we were to detect such, on a planet, say, orbiting Alpha Centauri B, that would seem to indicate that civilizations are to be found around a high percentage of G- and K-class stars. Brian Lacki (UC-Berkeley) examined the question from all angles at the recent Breakthrough Discuss, raising some interesting issues about the implications of technosignatures, and the assumptions we bring to the search for them.

We’re starting to consider a wide range of technosignatures rather than just focusing on Dysonian shells around entire stars. Other kinds of megastructure are possible, some perhaps so exotic we wouldn’t be sure how they operated or what they were for. Atmospheres could throw technosignatures by revealing industrial activity along with their potential biosignatures. We could conceivably detect power beaming directed at interstellar spacecraft or even an infrastructure within a particular stellar system. One conceivable technosignature, rarely mentioned, is a world that has been terraformed.

All this takes us well beyond conventional radio and optical SETI. But let’s take the idea, as Lacki does, to Alpha Centauri. We can begin by noting that in the past several years, Proxima Centauri b, that promising world in the habitable zone of the nearest red dwarf, has found its share of critics as a possible home to advanced life, if not life itself.

Michael Hippke noted in a 2019 paper that rocket launch to orbit from a super-Earth would be difficult, possibly inhibiting a civilization there from building a local space infrastructure. Milan Ćirković and Branislav Vukotić asked in 2020 whether the frequent flare activity of Proxima Centauri would inhibit radio technologies altogether. Whether abiogenesis could occur under these kinds of flare conditions remains unknown.

Alpha Centauri A and B, the central binary, offer a much more benign environment, both stars being exceedingly quiet at radio wavelengths. Moreover, we have known since the 1990s that habitable orbits are possible around each of them. We have no knowledge about whether the evolution of life would necessarily lead to intelligence; as Lacki pointed out, this is an empirical question — we need data to answer it.

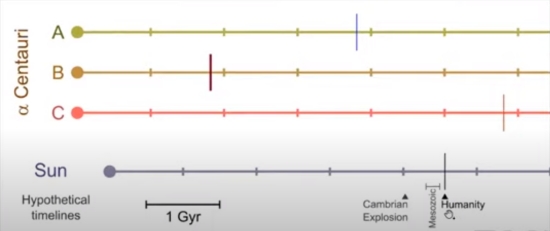

The Drake Equation points to further unresolved issues. In what portion of a star’s lifespan would we expect technological cultures to emerge? Our own civilization has used radio for about a century — one part in 100,000,000 of the lifespan of the Sun. Hence the slide below, which is telling in several ways. Lacki refers here to temporal coincidence, meaning that we might expect societies around other stars to be separated in time, not just in space. Hence this simple graph of a very deep subject.

Deep time always takes getting used to, no matter how many times we think we’ve gotten a handle on figures like 4.6 billion years or, indeed, 13.8 billion years, the lifetimes of the Sun and cosmos respectively. I stared at this figure for some time. Lacki has arbitrarily placed a civilization at Centauri B, as shown by the vertical line, and another at Centauri A and C. Our own is shown in its known place in the Sun’s lifetime, except for the striking fact that the thickness of humanity’s timeline on the chart corresponds to a lifetime of 10 million years. If we wanted a line showing our 100 years of technological use — i.e., radio — the line would have to be 100,000 times thinner.
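The proportions behind the chart are easy to verify with a few lines of arithmetic. In this minimal sketch, the 10-billion-year figure for the Sun's lifespan is an assumption inferred from the "one part in 100,000,000" ratio quoted earlier, and the variable names are purely illustrative.

```python
# Figures quoted in the text.
RADIO_ERA_YEARS = 100            # roughly a century of radio use
CHART_LINE_YEARS = 10_000_000    # lifetime implied by the line's thickness

# Assumption: a total solar lifespan of about 10 billion years.
SUN_LIFESPAN_YEARS = 10_000_000_000

# How many radio eras fit into the Sun's lifespan?
radio_eras_per_lifespan = SUN_LIFESPAN_YEARS // RADIO_ERA_YEARS

# How much thinner would a 100-year line be than the one drawn?
thinning_factor = CHART_LINE_YEARS // RADIO_ERA_YEARS

print(f"Radio era is one part in {radio_eras_per_lifespan:,} of the Sun's lifespan")
print(f"A 100-year line would be {thinning_factor:,} times thinner than the one drawn")
```

Both results match the figures given: one part in 100,000,000, and a line 100,000 times thinner.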

The odds that the lines of any two stars would coincide seem infinitely small, unless we are talking about societies that can persist over many millions of years. But here we can begin to turn the question around. We might want to rethink nearby technosignatures if we remind ourselves that what they represent is not the civilization itself, but the works it created, which might greatly outlive their builders. Objects like Dyson spheres would seem to fit this category and would exist at planetary scale.

Small artifacts can also last for vast periods (our own Voyagers will be intact for millions of years), though finding them would be an obvious challenge. Here it’s worth thinking about the controversy over ‘Oumuamua. If a piece of dead technology were to pass near the Sun, would we be able to recognize its artificial nature? I simply raise the question; I remain agnostic on the question of ‘Oumuamua itself.

But can we be sure we are the first intelligent technological society on Earth? It’s worth considering whether we would know it if an advanced culture had existed hundreds of millions of years ago, perhaps on Earth or a different planet in the Solar System. Looking forward, if we go extinct, will another intelligent species evolve? We don’t know the answer to these questions, and the depth of our ignorance is shown by the fact that we can’t say for sure that intelligence might not evolve over and over again.

If interstellar flight is possible, and it seems to be, we can consider the possibility of intelligence spreading throughout the galaxy, perhaps via self-reproducing von Neumann probes. Even with very slow interstellar velocities, the Milky Way could be settled in relatively short order, astronomically speaking. Michael Hart pointed this out in the 1970s, and Frank Tipler argued in 1980 that at current spacecraft speeds alone, self-replicating probes could colonize the galaxy in less than 300 million years.

Sending physical craft to other stars may be difficult, but there are advantages. So-called Bracewell probes could be deployed that would wait for evidence of intelligence and report back to the home system, as well as carrying inscribed messages intended for the target system. This is Jim Benford’s ‘lurker’ scenario, one which he proposes to explore by searching nearby objects in our own system including the Moon. After all, if they might exist elsewhere, there may be a lurker here.

We don’t know how long a probe like this might remain active, but as a physical artifact, it remains a conceivably detectable object for millions, perhaps billions of years. Finding such a probe in our own system would imply similar probes around other stars and, indeed, the likelihood of a civilization that has spread widely in the galaxy. We might well wonder whether a kind of galactic Internet might exist in which information relays around stars are common, perhaps using gravitational lensing.

In other words, the question of technosignatures at Alpha Centauri doesn’t necessarily imply anything found there would have come from a civilization that originated in that system. The same civilization might have seeded stars widely in the Orion Arm and beyond.

All of these ‘ifs’ define the limits of our knowledge. They also point to a case for looking for technosignatures no matter what our intuitions are about their existence at Alpha Centauri. A lack of obvious technosignatures in our system would imply a similar lack around the nearest stars, but we haven’t run the kind of fine-grained search for artifacts that would find them on our own Moon, much less on nearby smaller objects.

Image: How quickly would a single civilization using self-replicating probes spread through a galaxy like this one (M 74)? Moreover, what sort of factors might govern this ‘percolation’ of intelligence through the spiral? We’ll be looking further at these questions in coming days. Image credit: NASA, ESA, and the Hubble Heritage (STScI/AURA)-ESA/Hubble Collaboration.

Intuition says we’re unlikely to find a technosignature at Alpha Centauri. But I return to the chart on civilizational overlap reproduced above. To me, the greatest take-away here is the placement of our own civilization within the realm of deep time. Given how short the lifetime of technology has been on Earth, I’m reminded viscerally of how precious — and perhaps rare — intelligence is as an emerging facet of a universe becoming aware of itself. That’s true no matter what we find around the nearest stars.

Voyager: A Persistent Clue to the Density of the Interstellar Medium

What are the long-lasting waves detected by Voyager 1? Our first working interstellar probe (admittedly never designed for that task) is operating beyond the heliosphere, which it exited back in 2012. A paper just published in Nature Astronomy explores what’s going on in interstellar space just beyond, but still affected by, the heliosphere’s passage through the Local Interstellar Medium (LISM).

We have a lot to learn out here, for even as we exit the heliosphere, the picture is complex. The so-called Local Bubble is a low-density region of hot plasma in the interstellar medium, the environment of radiation and matter — gas and dust — that exists between the stars. Within this ‘bubble’ exists the Local Interstellar Cloud (LIC), about 30 light years across, with a slightly higher hydrogen density flowing from the direction of Scorpius and Centaurus. The Sun seems to be within the LIC near its boundary with the G-cloud complex, where the Alpha Centauri stars reside.

Image: Map of the local galactic neighborhood showing the Sun located near the edge of our local interstellar cloud (LIC). Alpha-Centauri is located just over 4 light-years away in the neighboring G-cloud complex. Outside these clouds, the density may be lower than 0.001 atoms/cc. Our Sun and the LIC have a relative velocity of 26 km/sec. Credit: JPL.

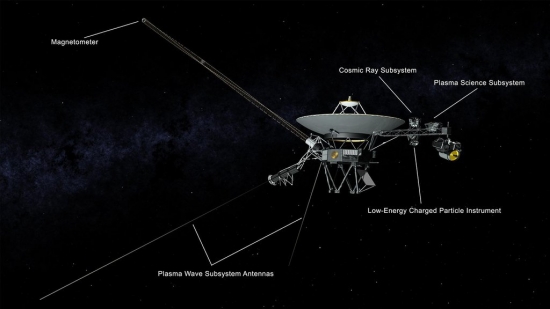

But if the interstellar medium is a sparse collection of widely spaced particles and radiation, it proves to be anything but quiet. We learn this from Voyager 1’s Plasma Wave Subsystem, which involves two antennae extending 30 meters from the spacecraft (see image below). What the PWS can pick up are clues to the density of the medium that show up in the form of waves. Some are produced by the rotation of the galaxy; others by supernova explosions, with smaller effects from the Sun’s own activity.

Vibrations of the ionized gas — plasma — in the interstellar medium have been detectable since late 2012 by Voyager 1 in the form of ‘whistles’ that show up only occasionally, but offer ways to study the density of the medium. The new work in Nature Astronomy, led by Stella Koch Ocker (Cornell University), sets about finding a more consistent measure of interstellar medium density in the Voyager data.

Image: An illustration of NASA’s Voyager spacecraft showing the antennas used by the Plasma Wave Subsystem and other instruments. Credit: NASA/JPL-Caltech.

A weak signal appearing at the same time as a ‘whistle’ in the 2017 Voyager data seems to have been the key finding. Ocker describes it as “very weak but persistent plasma waves in the very local interstellar medium.” When whistles appear in the data, the tone of this plasma wave emission rises and falls with them. Adds Ocker:

“It’s virtually a single tone. And over time, we do hear it change – but the way the frequency moves around tells us how the density is changing. This is really exciting, because we are able to regularly sample the density over a very long stretch of space, the longest stretch of space that we have so far. This provides us with the most complete map of the density and the interstellar medium as seen by Voyager.”

So we have an extremely useful instrument, Voyager 1’s Plasma Wave Subsystem, continuing to return data with increasing distance from the Sun. Analyzing the data over time, we learn that the electron density around the spacecraft began rising in 2013, just after its exit from the heliosphere, and reached current levels in 2015. These levels, which persist through the end of the dataset in 2020, show a 40-fold increase in electron density. Up next for Ocker and team is the development of a physical model of the plasma wave emission that will offer insights into its proper interpretation.
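Why does the tone of a plasma wave tell us about density? The standard textbook relation for the electron plasma frequency ties the two together: the frequency scales as the square root of the electron density. The sketch below applies that relation. It is not taken from the Ocker et al. paper, the function names are hypothetical, and the 3 kHz tone is an illustrative value rather than a measurement quoted here.

```python
import math

# Physical constants in SI units (CODATA values).
ELECTRON_CHARGE = 1.602176634e-19    # coulombs
ELECTRON_MASS = 9.1093837015e-31     # kilograms
VACUUM_PERMITTIVITY = 8.8541878128e-12  # farads per meter


def plasma_frequency_hz(density_per_cm3: float) -> float:
    """Electron plasma frequency (Hz) for a given electron density (cm^-3)."""
    density_per_m3 = density_per_cm3 * 1e6
    omega = math.sqrt(
        density_per_m3 * ELECTRON_CHARGE ** 2 / (VACUUM_PERMITTIVITY * ELECTRON_MASS)
    )
    return omega / (2 * math.pi)


def electron_density_per_cm3(frequency_hz: float) -> float:
    """Invert the relation: electron density (cm^-3) from plasma frequency (Hz)."""
    omega = 2 * math.pi * frequency_hz
    density_per_m3 = omega ** 2 * VACUUM_PERMITTIVITY * ELECTRON_MASS / ELECTRON_CHARGE ** 2
    return density_per_m3 / 1e6


# Illustrative tone only: a hypothetical 3 kHz plasma wave.
density = electron_density_per_cm3(3000.0)
print(f"3 kHz tone -> about {density:.3f} electrons per cubic centimeter")

# Because frequency goes as the square root of density, a 40-fold rise in
# density shifts the tone by a factor of sqrt(40).
frequency_ratio = plasma_frequency_hz(40.0) / plasma_frequency_hz(1.0)
print(f"40-fold density increase -> frequency higher by a factor of {frequency_ratio:.2f}")
```

The square-root dependence means even a large change in density shows up as a modest shift in pitch, which is one reason a faint but steady tone is so useful for continuous monitoring.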

As we begin to think seriously about interstellar probes in this century, it’s striking how much we have to learn about the medium through which they will pass. Voyager 1 is helping us learn about conditions immediately outside the heliosphere. A probe sent to Alpha Centauri will need to cross the boundary between the Local Interstellar Cloud and the G-cloud, a region we have yet to penetrate. The nature of and variation within the interstellar medium will require continuing work with our admittedly sparse data.

The paper is Ocker et al., “Persistent plasma waves in interstellar space detected by Voyager 1,” Nature Astronomy 10 May 2021. Abstract / Preprint.

Interstellar Research Group: 7th Interstellar Symposium Call for Papers

Regular Acceptance: Abstracts Due June 30, 2021

The Interstellar Research Group (IRG) hereby invites participation in its 7th Interstellar Symposium, hosted by the University of Arizona and to be held from Friday, September 24 through Monday, September 27, 2021, in Tucson, Arizona. The Interstellar Symposium has the following elements:

The Interstellar Symposium focuses on all aspects of interstellar travel (human and robotic), including power, communications, system reliability/maintainability, psychology, crew health, anthropology, legal regimes and treaties, ethics, and propulsion with an emphasis on possible destinations (including the status of exoplanet research), life support systems, and habitats.

Working Tracks are collaborative, small group discussions around a set of interdisciplinary questions on an interstellar subject with the objective of producing “roadmaps” and/or publications to encourage further developments in the respective topics. This year we will be organizing the Working Tracks to follow selected plenary talks with focused discussions on the same topic.

Sagan Meetings. Carl Sagan famously employed this format for his 1971 conference at the Byurakan Observatory in old Soviet Armenia, which dealt with the Drake Equation. Each Sagan Meeting will invite five speakers to give a short presentation staking out a position on a particular question. These speakers will then form a panel to engage in a lively discussion with the audience on that topic.

Seminars are 3-hour presentations on a single subject, providing an in-depth look at that subject. Seminars will be held on Friday, September 24, 2021, with morning and afternoon sessions. The content must be acceptable to be counted as continuing education credit for those holding a Professional Engineer (PE) Certificate.

Other Content includes, but is not limited to, posters, displays of art or models, demonstrations, panel discussions, interviews, or public outreach events.

Publications: Since the IRG serves as a critical incubator of ideas for the interstellar community, we intend to publish the work of the 7th Symposium in many outlets, including a complete workshop proceedings in book form. No Paper, No Podium: If a written paper is not submitted by the final manuscript deadline (To Be Announced), authors will not be permitted to present their work at the event. Papers should be original work that has not been previously published. Select papers may be submitted for journal publication, such as in the Journal of the British Interplanetary Society (JBIS).

Video and Archiving: All symposium events may be captured on video or in still images for use on the IRG and other sponsors’ websites, in newsletters and social media. All presenters, speakers and selected participants will be asked to complete a Release Form that grants permission for IRG to use this content as described.

ABSTRACT SUBMISSION

Abstracts for the Interstellar Symposium must relate to one or more of the many interstellar mission related topics, such as power, communications, system reliability/maintainability, psychology, crew health, anthropology, legal regimes and treaties, ethics, and propulsion with an emphasis on possible destinations (including the status of exoplanet research), life support systems, and habitats.

All abstracts must be submitted online here. Submitters must create accounts on the IRG website in order to submit abstracts.

PRESENTING AUTHOR(S) – Please list ONLY the author(s) who will actually be in attendance and presenting at the conference. (first name, last name, degree – for example, Susan Smith, MD)

ADDITIONAL AUTHORS – List all authors here, including Presenting Author(s) – (first name, last name, degree(s) – for example, Mary Rockford, RN; Susan Smith, MD; John Jones, PhD)

ABBREVIATIONS within the body should be kept to a minimum and must be defined upon first use in the abstract by placing the abbreviation in parentheses after the represented full word or phrase. Proprietary drug names and logos may NOT be used. Non-proprietary (generic) names should be used.

ABSTRACT LENGTH – The entire abstract, (EXCLUDING title, authors, presenting author’s institutional affiliation(s), city, state, and text), including any tables or figures should be a maximum of 350 words. It is your responsibility to verify compliance with the length requirement.

ABSTRACT STRUCTURE – abstracts must include the following headings:

- Title – the presentation title

- Background – describes the research or initiative context

- Objective – describes the research or initiative objective

- Methods – describes research methodology used. For initiatives, describes the target population, program or curricular content, and evaluation method

- Results – summarizes findings in sufficient detail to support the conclusions

- Conclusions – states the conclusions drawn from results, including their applicability.

Questions and responses to this Call for Papers, Workshops and Participation should be directed to: info@irg.space.

For updates on the meeting, speakers, and logistics, please refer to the website: https://irg.space/irg-2021/

The Tennessee Valley Interstellar Workshop (doing business as the Interstellar Research Group, IRG) is a non-profit scientific, educational corporation in the state of Tennessee. For U.S. tax purposes, IRG is a tax-exempt, 501(c)(3) educational, non-profit corporation.