Centauri Dreams

Imagining and Planning Interstellar Exploration

Solid Results from ‘Second Light’

If they did nothing else for us, space missions might be worth the cost purely for their role in tuning up human ingenuity. Think of rescues like Galileo, where the Jupiter-bound mission lost the use of its high-gain antenna and experienced numerous data recorder issues, yet still managed to return priceless data. Mariner 10 overcame gyroscope problems by using its solar panels for attitude control, as controllers tapped into the momentum imparted by sunlight.

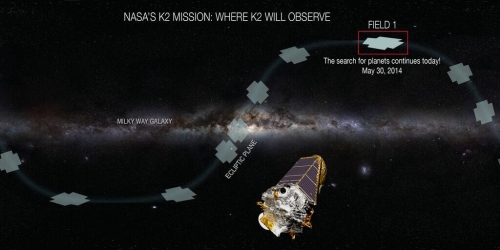

Overcoming obstacles is part of the game, and teasing out additional science through extended missions taps into the same creativity. Now we have news of how successful yet another mission re-purposing has been through results obtained from K2, the Kepler ‘Second Light’ mission that grew out of problems with the critical reaction wheels aboard the spacecraft. It was in November of 2013 that K2 was proposed, with NASA approval in May of the following year.

Kepler needed its reaction wheels to hold it steady, but like Mariner 10, the wounded craft had a useful resource: the light from the Sun. With careful positioning, the momentum of solar photons can be balanced against the spacecraft’s remaining reaction wheels to keep it pointed. As K2, the spacecraft has to switch its field of view about every 80 days, but these methods, along with refinements to the onboard software, have brought new life to the mission. K2, we quickly learned, was still in the exoplanet game, detecting a super-Earth candidate (HIP 116454b) in late 2014 in engineering data taken during the run-up to full observations.
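How gentle is that resource? Here is a minimal sketch. It assumes a flat surface facing the Sun at 1 AU (Kepler trails the Earth in a heliocentric orbit near that distance), the standard solar constant of about 1361 W/m2, and a hypothetical 10 m2 area rather than Kepler's actual geometry. The helper name is illustrative. A real pointing solution also depends on the offset between the spacecraft's center of pressure and center of mass, which this sketch ignores.

```python
# Hypothetical helper: solar radiation pressure force on a flat, Sun-facing surface.
SOLAR_CONSTANT_W_M2 = 1361.0        # mean solar irradiance at 1 AU
SPEED_OF_LIGHT_M_S = 299_792_458.0

def radiation_force_newtons(area_m2: float, reflectivity: float = 0.0,
                            distance_au: float = 1.0) -> float:
    """Force from sunlight hitting a surface face-on.

    reflectivity = 0 models a perfect absorber (pressure S/c);
    reflectivity = 1 models a perfect mirror (pressure 2S/c).
    """
    irradiance = SOLAR_CONSTANT_W_M2 / distance_au**2   # inverse-square falloff
    pressure = irradiance / SPEED_OF_LIGHT_M_S * (1.0 + reflectivity)
    return pressure * area_m2

# Hypothetical 10 m^2 absorbing surface at 1 AU
print(f"{radiation_force_newtons(10.0):.2e} N")
```

The result is a few tens of micronewtons, which is why the balance has to be struck so carefully.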

Image: NASA’s K2 mission uses the Kepler exoplanet-hunter telescope and reorients it so that it points along the Solar System’s plane. This mission has quickly proven itself with a series of exoplanet finds. Credit: NASA.

Now we learn that K2 has, in its first year of observing, identified more than 100 confirmed exoplanets, including 28 systems with at least two planets and 14 with at least three; the spacecraft has also identified more than 200 unconfirmed candidate planets. Some of the multi-planet systems are described in a new paper from Evan Sinukoff (University of Hawaii at Manoa). Drawn from Campaigns 1 and 2 of the K2 mission, the paper offers a catalog of ten multi-planet systems comprising 24 planets. Six of these systems have two known planets and four have three, and most of the planets are smaller than Neptune.

In general, the K2 planets orbit hotter stars than earlier Kepler discoveries. These are also stars that are closer to Earth than the original Kepler field, making K2 exoplanets useful for study in the near future through missions like the James Webb Space Telescope. From the Sinukoff paper:

Kepler planet catalogs (Borucki et al. 2011; Batalha et al. 2013; Burke et al. 2014; Rowe et al. 2015; Mullally et al. 2015) spawned numerous statistical studies on planet occurrence, the distribution of planet sizes, and the diversity of system architectures. These studies deepened our understanding of planet formation and evolution. Continuing in this pursuit, K2 planet catalogs will provide a wealth of planets around bright stars that are particularly favorable for studying planet compositions—perhaps the best link to their formation histories.

As this article in Nature points out, K2 has already had quite a run. Among its highlights: A system of three super-Earths orbiting a single star (EPIC 201367065, 150 light years out in the constellation Leo) and the discovery of the disintegrating remnants of a planetary system around a white dwarf (WD 1145+017, 570 light years away in Virgo).

Coming up in the spring, K2 begins a three-month period in which it segues from the transit method to gravitational microlensing. In microlensing, a foreground star and any planet it hosts act as a gravitational lens, briefly brightening a more distant background star as they move in front of it. We’ll keep a close eye on this effort, which will be coordinated with other telescopes on the ground. The K2 team believes the campaign will identify between 85 and 120 planets in its short run.
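The smooth part of a microlensing event follows the standard point-lens magnification formula. Here is a minimal sketch, assuming a single lens that moves in a straight line relative to the background star. The event parameters and function names are hypothetical. A planet would add a brief extra spike on top of this curve, and the sketch does not model that spike.

```python
import math

def point_lens_magnification(u: float) -> float:
    """Hypothetical helper: single point-mass lens; u = separation in Einstein radii."""
    return (u * u + 2.0) / (u * math.sqrt(u * u + 4.0))

def light_curve(times_days, t0_days, u0, t_e_days):
    """Magnification over time for a lens moving in a straight line past the source."""
    mags = []
    for t in times_days:
        tau = (t - t0_days) / t_e_days     # time from peak in Einstein-crossing units
        u = math.hypot(u0, tau)            # lens-source separation at time t
        mags.append(point_lens_magnification(u))
    return mags

# Hypothetical event: impact parameter 0.1, peak at day 0, Einstein time 20 days
days = [-40, -20, -10, 0, 10, 20, 40]
for t, a in zip(days, light_curve(days, 0.0, 0.1, 20.0)):
    print(f"day {t:+3d}: x{a:.2f}")
```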

The Sinukoff paper is “Ten Multi-planet Systems from K2 Campaigns 1 & 2 and the Masses of Two Hot Super-Earths,” submitted to The Astrophysical Journal (preprint). For more on EPIC 201367065 and habitability questions, see Andrew LePage’s Habitable Planet Reality Check: Kepler’s New K2 Finds.

Space Habitats Beyond LEO: A Short Step Towards the Stars

Building a space infrastructure is doubtless a prerequisite for interstellar flight. But the questions we need to answer in the near term are vital. Even to get to Mars, we subject our astronauts to radiation and prolonged weightlessness. For that matter, can humans live in Mars’ light gravity long enough to build sustainable colonies without suffering long-term physical problems? Gregory Matloff has some thoughts on how to get answers, involving the kind of space facility we can build with our current technologies. The author of The Starflight Handbook (Wiley, 1989) and numerous other books including Solar Sails (Copernicus, 2008) and Deep Space Probes (Springer, 2005), Greg has played a major role in the development of interstellar propulsion concepts. His latest title is Starlight, Starbright (Curtis, 2015).

by Gregory Matloff

The recent demonstrations of successful rocket recovery by Blue Origin and SpaceX herald a new era of space exploration and development. We can expect, as rocket stages routinely return for reuse from the fringes of space, that the cost of space travel will fall dramatically.

Some in the astronautics community would like to settle the Moon; others have their eyes set on Mars. Many would rather commit to the construction of solar power satellites, efforts to mine and/or divert Near Earth Asteroids (NEAs), or construct enormous cities in space such as the O’Neill Lagrange Point colonies.

But before we can begin any or all of these endeavors, we need to answer some fundamental questions regarding human life beyond the confines of our home planet. Will humans thrive under lunar or martian gravity? Can children be conceived in extraterrestrial environments? What is the safe threshold for human exposure to high-Z galactic cosmic rays (GCRs)?

To address these issues we might require a dedicated facility in Earth orbit. Such a facility should be in a higher orbit than the International Space Station (ISS) so that frequent reboosting to compensate for atmospheric drag is not required. It should be within the ionosphere so that electrodynamic tethers (ETs) can be used for occasional reboosting without the use of propellant. An orbit should be chosen to optimize partial GCR-shielding by Earth’s physical bulk. Ideally, the orbit selected should provide near-continuous sunlight so that the station’s solar panels are nearly always illuminated and experiments with closed-environment agriculture can be conducted without the inconvenience of the 90 minute day/night cycle of equatorial Low Earth Orbit (LEO). Initial crews of this venture should be trained astronauts. But before humans begin the colonization of the solar system, provision should be made for ordinary mortals to live aboard the station, at least for visits of a few months’ duration.

Another advantage of such a “proto-colony” is proximity to the Earth. Resupply is comparatively easy and not overly expensive in the developing era of booster reuse. In case of medical emergency, return to Earth is possible in a few hours. That’s a lot less than a 3-day return from the Moon or L5 or a ~1-year return from Mars.

A Possible Orbital Location

An interesting orbit for this application has been analyzed in a 2004 Carleton University study conducted in conjunction with planning for the Canadian Aegis satellite project [1]. This is a Sun-synchronous orbit mission with an inclination of 98.19 degrees and a (circular) optimum orbital height of 699 km. At this altitude, atmospheric drag would have a minimal effect during the planned 3-year satellite life. In fact, the orbital lifetime was calculated as 110 years. The mission could still be performed for an orbital height as low as 600 km. The satellite would follow the Earth’s terminator in a “dawn-to-dusk” orbit. In such an orbit, the solar panels of a spacecraft would almost always be illuminated.
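The 98-degree inclination is not arbitrary. Earth's equatorial bulge (the J2 term) makes an orbit's plane precess, and a Sun-synchronous orbit is one whose inclination makes that precession match the Earth's yearly motion around the Sun. Here is a minimal sketch, assuming a circular orbit, standard values for Earth's constants, and only the J2 term (no higher harmonics or drag). The helper name is illustrative.

```python
import math

# Constants (assumed standard values)
MU_EARTH_KM3_S2 = 398_600.4418   # Earth's gravitational parameter
R_EARTH_KM = 6_378.137           # equatorial radius
J2 = 1.08263e-3                  # oblateness coefficient
TROPICAL_YEAR_S = 365.2422 * 86_400

def sun_synchronous_inclination_deg(altitude_km: float) -> float:
    """Hypothetical helper: inclination for a circular Sun-synchronous orbit (J2 only)."""
    a = R_EARTH_KM + altitude_km
    n = math.sqrt(MU_EARTH_KM3_S2 / a**3)            # mean motion, rad/s
    required_rate = 2.0 * math.pi / TROPICAL_YEAR_S  # node must advance 360 deg per year
    # J2 nodal precession: dOmega/dt = -1.5 * J2 * (R/a)^2 * n * cos(i)
    cos_i = -required_rate / (1.5 * J2 * (R_EARTH_KM / a) ** 2 * n)
    return math.degrees(math.acos(cos_i))

for h in (600.0, 699.0):
    print(f"{h:.0f} km -> {sun_synchronous_inclination_deg(h):.2f} deg")
```

For 699 km this lands within a few hundredths of a degree of the 98.19 degrees in the Carleton study. Dropping to 600 km lowers the required inclination slightly, to a bit under 98 degrees.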

For a long-term human-occupied research facility in or near such an orbit, a number of factors must be considered. These include cosmic radiation and space debris. It is also useful to consider upper-atmosphere density variation during the solar cycle.

The Cosmic Ray Environment

From a comprehensive study by Susan McKenna-Lawlor and colleagues of the deep space radiation environment [2], the one-year radiation dose limits for 30, 40, 50, and 60 year old female astronauts are respectively 0.6, 0.7, 0.82, and 0.98 Sv. Dose limits for men are about 0.18 Sv higher than for women. At a 95% confidence level, such exposures are predicted not to increase the risk of exposure-related fatal cancers by more than 3%.

Al Globus and Joe Strout have considered the radiation environment experienced within Earth-orbiting space settlements below the Van Allen radiation belt [3]. This source recommends annual radiation dose limits for the general population and pregnant women respectively at 20 mSv and 6.6 mGy (where “m” stands for milli, “Sv” stands for Sieverts and “Gy” stands for Gray). Conversion of Grays to Sieverts depends upon the type of radiation and the organs exposed. As demonstrated in Table 1 of Ref. 3, serious or fatal health effects begin to affect a developing fetus at about 100 mGy. If pregnant Earth-bound women are exposed to more than the US average 3.1 mSv of background radiation, the rates of spontaneous abortion, major fetal malformations, retardation and genetic disease are estimated respectively at 15%, 2-4%, 4%, and 8-10%. Unfortunately, these figures are not based upon exposure to energetic GCRs [3].
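Converting Grays to Sieverts starts with a radiation weighting factor for each particle type. Here is a minimal sketch of the equivalent dose to a single organ, assuming the weighting factors of ICRP Publication 103. Neutrons are omitted because their factor varies with energy. The dose mix and helper names are hypothetical. A full effective-dose figure would also weight each organ by a tissue factor, which is the organ dependence the text mentions. This sketch leaves that step out.

```python
# ICRP Publication 103 radiation weighting factors (neutrons omitted:
# their factor is a continuous function of energy).
RADIATION_WEIGHTING = {
    "photon": 1.0,
    "electron": 1.0,
    "proton": 2.0,
    "alpha": 20.0,
    "heavy_ion": 20.0,
}

def equivalent_dose_sv(absorbed_gy_by_type: dict[str, float]) -> float:
    """Hypothetical helper: sum of w_R * D_R for one organ (Gy in, Sv out)."""
    return sum(RADIATION_WEIGHTING[kind] * dose
               for kind, dose in absorbed_gy_by_type.items())

# Hypothetical mix: 1 mGy from protons plus 0.1 mGy from heavy ions
h = equivalent_dose_sv({"proton": 1e-3, "heavy_ion": 1e-4})
print(f"{h * 1000:.1f} mSv")
```

The factor of 20 for heavy ions is why the high-Z component of the GCR flux matters far more than its share of absorbed dose would suggest.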

In their Table 5, Globus and Strout present projected habitat-crew radiation levels as functions of orbital inclination and shielding mass density [3]. Crews aboard habitats in high-inclination orbits will experience higher dosages than those aboard similar habitats in near-equatorial orbits. In a 90-degree inclination orbit, a crew member aboard a habitat shielded by 250 kg/m2 of water will be exposed to about 334 mSv/year. Bringing radiation levels in this case below the 20 mSv/year threshold for adults in the general population requires a ~12-fold increase in shielding mass density [3].

But Table 4 of the Globus and Strout preprint demonstrates that, for a 600-km circular equatorial orbit, elimination of all shielding increases radiation dose projections to about 2X that of the habitat equipped with a 250 kg/m2 water shield. If shielding is not included and this scaling can be applied to the high-inclination orbit, expected crew dose rates will be less than 0.8 Sv/year [3]. This is within the annual dose limits for all male astronauts and female astronauts older than about 45 [2].
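One way to check the age comparison is to interpolate between the tabulated limits. Here is a minimal sketch under three assumptions. First, it uses linear interpolation between the four tabulated ages, and the study's own curve may differ. Second, it treats male limits as the female values plus 0.18 Sv. Third, it takes the unshielded dose as twice the 334 mSv/year shielded figure, about 0.67 Sv/year. The helper name is illustrative.

```python
# Female one-year limits from the McKenna-Lawlor et al. study cited above (Sv);
# male limits are taken as ~0.18 Sv higher, as stated in the text.
AGES = [30, 40, 50, 60]
FEMALE_LIMITS_SV = [0.60, 0.70, 0.82, 0.98]
MALE_LIMITS_SV = [x + 0.18 for x in FEMALE_LIMITS_SV]

def youngest_age_within_limit(dose_sv, limits):
    """Hypothetical helper: youngest (interpolated) age whose limit is >= dose_sv.

    Returns AGES[0] if even the youngest tabulated limit suffices,
    and None if the dose exceeds every tabulated limit.
    """
    if dose_sv <= limits[0]:
        return AGES[0]
    for (a0, l0), (a1, l1) in zip(zip(AGES, limits), zip(AGES[1:], limits[1:])):
        if l0 < dose_sv <= l1:
            return a0 + (a1 - a0) * (dose_sv - l0) / (l1 - l0)
    return None

unshielded = 2 * 0.334  # ~2x the 334 mSv/yr shielded figure
print(youngest_age_within_limit(unshielded, FEMALE_LIMITS_SV))  # female
print(youngest_age_within_limit(unshielded, MALE_LIMITS_SV))    # male
```

At the 0.8 Sv/year upper bound, the same interpolation puts the female crossover near 48. The crossover age therefore depends on where the actual dose falls within that bound.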

Early in the operational phase of this high-inclination habitat, astronauts can safely spend about a year aboard. Adults in the general public can safely endure week-long visits. Pregnant women who visit will require garments that provide additional shielding for the fetus. Some of the short-term residents aboard the habitat may be paying “hotel” guests. As discussed below, additional shielding may become available if development of this habitat is a joint private/NASA project.

Is Space Debris an Issue?

According to a 2011 NASA presentation to the United Nations Subcommittee on the Peaceful Uses of Outer Space, space debris is an issue of concern in all orbits below ~2,000 km. About 36% of catalogued debris objects are due to two incidents: the intentional destruction of Fengyun-1C in 2007 and the 2009 accidental collision between Cosmos 2251 and Iridium 33 [4].

The peak orbital height range for space debris density is 700-1,000 km. At the 600-km orbital height of this proposed habitat, the spatial density of known debris objects is about 4X greater than at the ~400 km orbital height of the International Space Station (ISS) [4]. As is the case with the ISS, active collision avoidance will sometimes be necessary.

Atmospheric Drag at 600 km

An on-line version of the Standard Atmosphere has been consulted to evaluate exospheric molecular density at orbital heights [5]. A summary of this tabulation follows:

Atmospheric density (kg/m3) at various solar activity levels

| Height | Low | Mean | Extremely high |
|---|---|---|---|
| 400 km | 5.68E-13 | 3.89E-12 | 5.04E-11 |
| 500 km | 6.03E-14 | 7.30E-13 | 1.70E-11 |
| 600 km | 1.03E-14 | 1.56E-13 | 6.20E-12 |

Note that atmospheric density levels at 600 km are in all cases far below the corresponding levels at the ISS ~400 km orbital height. But orbit adjustment will almost certainly be required during periods of peak solar activity.
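The table's numbers translate directly into drag. The deceleration on a body in a circular orbit is 0.5 × ρ × v² × Cd × A/m. Here is a minimal sketch using the tabulated densities. It assumes a drag coefficient Cd of 2.2 (a common rule-of-thumb value for satellites) and a hypothetical area-to-mass ratio of 0.01 m2/kg. Neither value comes from the essay, and the helper name is illustrative.

```python
import math

MU_EARTH = 3.986004418e14  # m^3/s^2
R_EARTH = 6.378137e6       # m

# Densities (kg/m^3) from the table above: (low, mean, extremely high) solar activity
DENSITY = {
    400: (5.68e-13, 3.89e-12, 5.04e-11),
    500: (6.03e-14, 7.30e-13, 1.70e-11),
    600: (1.03e-14, 1.56e-13, 6.20e-12),
}

def drag_acceleration(rho, altitude_km, area_to_mass, cd=2.2):
    """Hypothetical helper: drag deceleration (m/s^2) = 0.5 * rho * v^2 * Cd * A/m."""
    v = math.sqrt(MU_EARTH / (R_EARTH + altitude_km * 1e3))  # circular orbital speed
    return 0.5 * rho * v * v * cd * area_to_mass

# Hypothetical habitat: area-to-mass ratio of 0.01 m^2/kg
for level, label in enumerate(("low", "mean", "extreme")):
    a400 = drag_acceleration(DENSITY[400][level], 400, 0.01)
    a600 = drag_acceleration(DENSITY[600][level], 600, 0.01)
    print(f"{label:>7}: drag at 400 km is {a400 / a600:.0f}x that at 600 km")
```

Orbital speed is slightly lower at 600 km, so the drag ratio comes out a little larger than the raw density ratio in the table.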

Since the proposed 600-km orbital height is within the Earth’s ionosphere, there are a number of orbit-adjustment systems that require little or no expenditure of propellant. One such technology is the Electrodynamic Tether [6].
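An electrodynamic tether works for two reasons. A conductor moving through Earth's magnetic field has an EMF induced along it, and a current in the tether feels a Lorentz force F = ILB. Here is a minimal, best-case sketch. It assumes the velocity, field and tether are mutually perpendicular, a field of about 30 microtesla (a rough order of magnitude at this altitude), a hypothetical 5 km tether carrying 1 A, and a circular-orbit speed of about 7.56 km/s. The helper names are illustrative. In a near-polar orbit like the one proposed here, the geometry between velocity, tether and field is much less favorable over most of the orbit, so the real average thrust would be lower.

```python
def motional_emf_volts(speed_m_s: float, field_t: float, length_m: float) -> float:
    """Hypothetical helper: EMF along a tether moving through a field (all perpendicular)."""
    return speed_m_s * field_t * length_m

def tether_force_newtons(current_a: float, length_m: float, field_t: float) -> float:
    """Hypothetical helper: Lorentz force F = I * L * B on a perpendicular tether."""
    return current_a * length_m * field_t

# Hypothetical values: 5 km tether, 30 microtesla field, 7.56 km/s speed, 1 A current
L_TETHER = 5_000.0
B_FIELD = 3.0e-5
V_ORBIT = 7_560.0
I_DRIVE = 1.0

emf = motional_emf_volts(V_ORBIT, B_FIELD, L_TETHER)
force = tether_force_newtons(I_DRIVE, L_TETHER, B_FIELD)
# For reboost, current is driven against the induced EMF, so the (solar) supply
# must provide at least I * EMF of electrical power in the ideal, lossless case.
print(f"induced EMF ~{emf:.0f} V, thrust ~{force:.2f} N, power >= {I_DRIVE * emf:.0f} W")
```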

Habitat Properties and Additional Shielding Possibilities

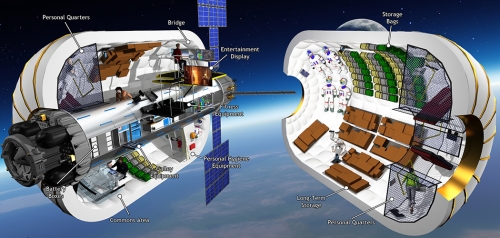

A number of inflatable space habitats have been studied extensively or are under consideration for future space missions. Two that could be applied to construction of a ~600-km proto-colony are NASA’s Transhab and Bigelow Aerospace’s BA330 (also called B330).

Transhab, which was considered by NASA for application with the ISS and might find use as a habitat module for Mars-bound astronauts, would have a launch mass of about 13,000 kg. Its in-space (post-inflation) diameter would be 8.2 m and its length would be 11 m [7]. Treating this module as a perfect cylinder, its curved side surface area (excluding the end caps) would be about 280 m2. Transhab could comfortably accommodate 6 astronauts.
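The ~280 m2 figure corresponds to the curved side of the cylinder alone. The two end caps add roughly another 100 m2. Multiplying the area by the 250 kg/m2 water-shield areal density discussed earlier gives a feel for shielding mass. Here is a minimal sketch, assuming a simple cylinder and a uniform shield. Real shielding would follow the module's actual shape, and the helper name is illustrative.

```python
import math

def cylinder_areas(diameter_m: float, length_m: float) -> tuple[float, float]:
    """Hypothetical helper: (lateral area, total area with both end caps) in m^2."""
    r = diameter_m / 2.0
    lateral = math.pi * diameter_m * length_m
    ends = 2.0 * math.pi * r * r
    return lateral, lateral + ends

lateral, total = cylinder_areas(8.2, 11.0)   # Transhab dimensions from the text
shield_kg_m2 = 250.0                         # water shielding areal density discussed earlier
print(f"lateral {lateral:.0f} m^2, total {total:.0f} m^2")
print(f"water shield: {lateral * shield_kg_m2 / 1000:.0f} t (sides only), "
      f"{total * shield_kg_m2 / 1000:.0f} t (with ends)")
```

The sides alone come to roughly 70 tonnes of water, more than five times Transhab's 13,000 kg launch mass.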

Image: Cutaway of Transhab Module with Crew members. Credit: NASA.

According to Wikipedia, the BA330 would have a mass of about 20,000 kg. Its length and diameter would be 13.7 m and 6.7 m, respectively. The Bigelow Aerospace website reports that the approximate length of this module would be 9.45 m. It could accommodate 6 astronauts comfortably during its projected 20-year operational life.

Both of these modules are designed for microgravity application. Since the study of the adjustment of humans and other terrestrial life forms to intermediate gravity levels might be one scientific goal of the proposed 600-km habitat, the habitat should consist of two modules arranged in a dumbbell configuration, connected by a variable-length spar with a hollow, pressurized interior. The rotation rate of the modules around the center could be adjusted to provide various levels of artificial gravity. Visiting spacecraft could dock at the center of the structure. It is possible that the entire disassembled and uninflated structure could be launched by a single Falcon Heavy.
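For a dumbbell spinning about its center, the apparent gravity at each module is the centripetal acceleration ω²r. The spin rate needed for a target gravity level therefore drops as the spar lengthens. Here is a minimal sketch, assuming each module can be treated as a point at a hypothetical distance r from the spin axis. The radii and helper name are illustrative, not a design.

```python
import math

G0 = 9.80665  # standard gravity, m/s^2

def spin_rate_rpm(gravity_fraction: float, radius_m: float) -> float:
    """Hypothetical helper: rpm giving gravity_fraction * g0 at radius_m (a = omega^2 * r)."""
    omega = math.sqrt(gravity_fraction * G0 / radius_m)  # rad/s
    return omega * 60.0 / (2.0 * math.pi)

# Hypothetical module distances from the spin axis
for r in (25.0, 50.0, 100.0):
    for name, frac in (("Moon", 0.165), ("Mars", 0.38), ("Earth", 1.0)):
        print(f"r = {r:5.1f} m, {name:>5}: {spin_rate_rpm(frac, r):.2f} rpm")
```

At a given spin rate, lengthening or shortening the spar changes the gravity level, and at a given spar length the spin rate does the same.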

Image: The pressurized volume of a 20 ton B330 is 330m3, compared to the 106m3 of the 15 ton ISS Destiny module; offering 210% more habitable space with an increase of only 33% in mass. Credit: Bigelow Aerospace.

One module could support the crew, which would be rotated every 3-6 months. The other module could accommodate visitors and scientific experiments. It is anticipated that visitors would pay for their week-long stays to help support the project. Experiments would include studies of the effects of GCRs and variable gravity on humans and experimental animals, as well as experiments with in-space agriculture. The fact that the selected orbit provides near-constant exposure to sunlight should add a realistic touch to the agriculture studies. These experiments will hopefully lead to the eventual construction of in-space habitats, hotels, deep-space habitats and other facilities.

The possibility exists for cooperation between the developers of this proposed 600-km habitat and the NASA asteroid retrieval mission. Under consideration for the mid-2020s, this mission would use the Space Launch System to robotically retrieve a ~7-meter diameter boulder and return it to high lunar orbit for further study [8]. The mass of this object in lunar orbit could exceed half a million kilograms. It is conceivable that much of this material could be used to provide GCR-shielding for Earth-orbiting habitats such as the one considered here. As well as reducing on-board radiation levels, such an application would provide valuable experience to designers of deep-space habitats such as the O’Neill space colonies.
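Whether the boulder clears half a million kilograms depends on its bulk density. Here is a minimal sketch, assuming a spherical 7 m boulder and a range of hypothetical bulk densities from 2,000 to 3,000 kg/m3, which are not taken from the mission plan. The sketch reuses the 250 kg/m2 areal density purely as an illustrative benchmark, since rock and water do not shield identically. The helper names are illustrative.

```python
import math

def sphere_mass_kg(diameter_m: float, density_kg_m3: float) -> float:
    """Hypothetical helper: mass of a uniform sphere."""
    r = diameter_m / 2.0
    return 4.0 / 3.0 * math.pi * r**3 * density_kg_m3

def shieldable_area_m2(mass_kg: float, areal_density_kg_m2: float) -> float:
    """Hypothetical helper: area a given mass could cover at a given areal density."""
    return mass_kg / areal_density_kg_m2

for rho in (2000.0, 2500.0, 3000.0):      # assumed bulk densities, kg/m^3
    m = sphere_mass_kg(7.0, rho)
    area = shieldable_area_m2(m, 250.0)    # illustrative 250 kg/m^2 benchmark
    print(f"density {rho:.0f}: {m / 1000:.0f} t, covers ~{area:.0f} m^2")
```

Only the denser end of the assumed range clears half a million kilograms for a 7 m sphere. The "could exceed" in the text therefore hinges on the boulder's actual size and composition.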

——-

References

1. S. Beaudette, “Carleton University Spacecraft Design Project; 2004 Final Design Report, ‘Satellite Mission Analysis’”, FDR-SAT-2004-3.2.A (April 8, 2004).

2. S. McKenna-Lawlor, A. Bhardwaj, F. Ferrari, N. Kuznetsov, A. K. Lal, Y. Li, A. Nagamatsu, R. Nymmik, M. Panasyuk, V. Petrov, G. Reitz, L. Pinsky, M. Shukor, A. K. Singhvi, U. Strube, L. Tomi, and L. Townsend, “Recommendations to Mitigate Against Human Health Risks Due to Energetic Particle Irradiation Beyond Low Earth Orbit/BLEO”, Acta Astronautica, 109, 182-193 (2015).

3. A. Globus and J. Strout, “Orbital Space Settlement Radiation Shielding”, preprint, issued July 2015, available on-line at space.alglobus.net.

4. NASA, “USA Space Debris Environment, Operations, and Policy Updates”, Presentation to the 48th Session of the Scientific and Technical Subcommittee, Committee on the Peaceful Uses of Outer Space (7-9 February 2011).

5. Physical Properties of U.S. Standard Atmosphere, MSISE-90 Model of Earth’s Upper Atmosphere, www.braeunig.us/space/atmos.htm

6. L. Johnson and M. Herrmann, “International Space Station: Electrodynamic Tether Reboost Study”, NASA/TM-1998-208538 (July, 1998).

7. “Transhab Concept” spaceflight.nasa.gov/history/station/transhab

8. M. Wall, “The Evolution of NASA’s Ambitious Asteroid Capture Mission”, www.space.com/28963-nasa-asteroid-capture-mission-history

HD 7449Ab: Choreography of a Planetary Dance

Given this site’s predilections, it’s natural to think of Centauri A and B whenever the topic of planets around close binary stars comes up. But systems with somewhat similar configurations can produce equally interesting results. Take what we’re finding around the G-class star HD 7449, some 127 light years from our Sun. In 2011, a planet of roughly eight times Jupiter’s mass was found orbiting the star in an orbit so eccentric that it demanded explanation. A highly eccentric orbit can indicate another object in the system that is affecting the planet.

That is exactly what has now been determined. “The question was: is it a planet or a dwarf star?” says Timothy Rodigas (Carnegie Institution for Science), who led the work on the discovery. Rodigas’ team went to work using the Magellan adaptive optics system (MagAO) on the Magellan II (Clay) telescope at Las Campanas in Chile. MagAO allows sharp visible-light images to be acquired, with the instrument capable of resolving objects down to the 0.02 arcsecond level.
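That 0.02 arcsecond figure is about what diffraction allows for a telescope of Magellan's 6.5-meter aperture in visible light. Here is a minimal sketch, assuming a 500 nm wavelength and the Rayleigh criterion; real adaptive-optics performance falls short of the ideal. The sketch also converts angles to projected separations at HD 7449's 127 light years. The helper names are illustrative.

```python
import math

ARCSEC_PER_RAD = 180.0 / math.pi * 3600.0
PC_PER_LY = 1.0 / 3.26156

def rayleigh_limit_arcsec(wavelength_m: float, aperture_m: float) -> float:
    """Hypothetical helper: diffraction limit theta = 1.22 * lambda / D, in arcsec."""
    return 1.22 * wavelength_m / aperture_m * ARCSEC_PER_RAD

def projected_separation_au(angle_arcsec: float, distance_ly: float) -> float:
    """Hypothetical helper: small-angle rule, AU = arcsec * parsecs."""
    return angle_arcsec * distance_ly * PC_PER_LY

theta = rayleigh_limit_arcsec(0.5e-6, 6.5)  # visible light, 6.5 m mirror
print(f"{theta:.3f} arcsec -> {projected_separation_au(theta, 127):.2f} AU at 127 ly")
# Angle subtended by an 18 AU separation at 127 ly
print(f"18 AU at 127 ly -> {18 / (127 * PC_PER_LY):.2f} arcsec")
```

The diffraction limit corresponds to well under 1 AU at that distance. An 18 AU separation subtends roughly half an arcsecond, comfortably within reach.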

The object near HD 7449 was quickly spotted and we learn that the system has a second star, an M-class dwarf. So now we have HD 7449A and the dwarf secondary, HD 7449B, along with the gas giant HD 7449Ab. HD 7449B is a tiny object with about one-twentieth the Sun’s mass, and it’s close enough to the primary (18 AU) to evoke that Alpha Centauri comparison I made above — Centauri A and B move between 11.4 and 36.0 AU as they orbit. I’ll grant that the comparison is strained by the fact that Centauri B is a K-class dwarf, while HD 7449B is a far smaller M-dwarf. Moreover, we have nothing like planet HD 7449Ab in the Alpha Centauri system.

What we’re seeing in HD 7449Ab is a planet that, in the words of Rodigas, is “‘dancing’ between the two stars.” The gravitational influences at work here evidently go back millions of years. The system, in fact, may be showing us the so-called Kozai mechanism at work. First described by the Russian astronomer Mikhail Lidov and later by Japanese researcher Yoshihide Kozai, the Kozai mechanism is one factor that can shape the orbits of multiple-star systems. We see an oscillation between the planet’s orbital eccentricity and its orbital inclination, the ‘dance’ that Rodigas refers to. Have a look at the team’s visualization of the effect.

Image: This animation shows the Kozai mechanism at work in the HD 7449 system. Credit: Carnegie Institution for Science.

From the paper:

If the planet and outer companion were initially on mutually-inclined orbits of at least 39.2°, then the planet’s eccentricity and inclination would oscillate with oppositely-occurring minima and maxima (Holman et al. 1997). Based on the nominal parameters for the planet and M dwarf companion, the length of a Kozai cycle would be ~ a few hundred years, which is certainly short enough to be plausible given the age of the system (~2 Gyr).
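The 39.2° threshold comes from the quadrupole-order theory of Kozai cycles, where cos² i = 3/5 at the critical angle. Here is a minimal sketch, assuming the simplified test-particle, quadrupole-order limit with the planet starting on a near-circular orbit. A massive planet with a real companion needs the fuller treatment the paper relies on. The helper names are illustrative.

```python
import math

def kozai_critical_inclination_deg() -> float:
    """Hypothetical helper: minimum mutual inclination for Kozai cycles, cos^2(i) = 3/5."""
    return math.degrees(math.acos(math.sqrt(3.0 / 5.0)))

def kozai_max_eccentricity(initial_inclination_deg: float) -> float:
    """Hypothetical helper: peak eccentricity from an initially circular inner orbit."""
    c2 = math.cos(math.radians(initial_inclination_deg)) ** 2
    return math.sqrt(max(0.0, 1.0 - 5.0 / 3.0 * c2))  # zero below the critical angle

print(f"critical inclination: {kozai_critical_inclination_deg():.1f} deg")
for i0 in (40, 50, 60, 70, 80):
    print(f"i0 = {i0} deg -> e_max = {kozai_max_eccentricity(i0):.2f}")
```

Above the critical angle, the closer the starting mutual inclination is to 90 degrees, the higher the peak eccentricity.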

The Kozai mechanism is not the only explanation for the planet’s eccentric orbit, but the paper argues that it is the most convincing:

Another explanation for the planet’s large eccentricity is planet-planet scattering in the inner parts of the system (e.g., Rasio & Ford 1996). In this case, one or more planets may have been ejected from the system, leaving behind the eccentric HD 7449Ab. This scattering scenario would require both the surviving planet and the scattered planet to be relatively massive (7–10 MJ) and the eccentricity damping of the original circumstellar disk to be small (Moorhead & Adams 2005). Given the “smoking gun” (the nearby M dwarf companion), it seems more likely that Kozai cycles are responsible.

The paper calls for continuing monitoring of this system by both radial velocity and direct imaging methods, the latter important because it can provide further constraints on the planet’s orbital eccentricity and inclination, allowing for a more accurate estimate of its mass. The dwarf star is itself interesting, the paper noting that it can become a benchmark object for studies of stellar structure. What this system gives us is a rare case of an M-dwarf with a measurable age (via the primary) and a mass that will be measured by astrometric means. Spectroscopic follow-up should constrain metallicity, improving structure models for similar cool stars.

The paper is Rodigas et al., “MagAO Imaging of Long-period Objects (MILO). I. A Benchmark M Dwarf Companion Exciting a Massive Planet around the Sun-like Star HD 7449,” accepted at The Astrophysical Journal (preprint). Thanks to Dave Moore for alerting me to this story.

Globular Clusters: Home to Intelligent Life?

I can think of few things as spectacular as a globular cluster. Messier 5 is a stunning example in Serpens. With a radius of some 200 light years, M5 shines by the light of half a million stars, and at 13 billion years old, it’s one of the older globular clusters associated with our galaxy. Clusters like these orbit the galactic core, stunning chandeliers of light packed tightly with stars. The Milky Way has 158 known globulars, while M31, the Andromeda galaxy, boasts as many as 500. Giant elliptical galaxies like M87 can have thousands.

Image: The globular cluster Messier 5, consisting of hundreds of thousands of stars. Credit: ESA/Hubble & NASA. Via Wikimedia Commons.

Given the age of globular clusters (an average of ten billion years), it’s a natural assumption that planets within them are going to be rare. We would expect their stars to contain few of the heavy elements demanded by planets, since elements like iron and silicon are created by earlier stellar generations. And indeed, only one globular cluster planet has been found, an apparent gas giant orbiting a pulsar and its white dwarf companion in the globular cluster M4.

A team led by David Weldrake (Max-Planck-Institut für Astronomie) reported on its large ground-based search for ‘hot Jupiters’ in the globular clusters 47 Tucanae and Omega Centauri in 2006, finding no candidates. So should we give up on the notion of planets in such environments? Rosanne Di Stefano (Harvard-Smithsonian Center for Astrophysics) thinks that would be premature. She presented the research at a press conference at the ongoing meeting of the American Astronomical Society, which this year occurs in Kissimmee, Florida.

Working with Alak Ray (Tata Institute of Fundamental Research, Mumbai), Di Stefano points out that we have found low-metallicity stars known to have planets elsewhere in the galaxy. And while we can couple the occurrence of Jupiter-class planets with stars that have higher metallicity, there seems to be no such correlation when we’re dealing with smaller, rocky worlds like the Earth. Perhaps, then, we shouldn’t dismiss planets in globular clusters.

Di Stefano also argues that while stellar distances are small within a globular cluster, this may not work against the possibility of habitable planets, and may actually be a benefit for any civilizations that do emerge there. Most of the stars in these ancient clusters are red dwarfs with lifetimes in the trillions of years. The habitable zone around such small stars is close in, making potentially habitable worlds relatively safe from disastrous stellar interactions.

So let’s imagine the possibility of life evolving in ten-billion-year-old globulars. In our comparatively sparse region of the Milky Way, the nearest star is presently some 4.2 light years away, a staggering 40 trillion kilometers. Within a large globular cluster, the nearest star could be twenty times closer. Imagine a star at roughly 15,000 AU or somewhat less, about the same distance from our Sun as Proxima Centauri is from Centauri A and B. Then open out the view: Imagine fully 10,000 stars closer to us than Alpha Centauri, the night sky ablaze.
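The numbers hang together. Here is a rough check, assuming the 10,000 stars are scattered at random with uniform density inside a 4.2-light-year sphere. Real clusters are centrally concentrated, so this is an idealization. The check uses the standard mean nearest-neighbor distance for a random (Poisson) distribution. The helper name is illustrative.

```python
import math

KM_PER_LY = 9.4607e12
AU_PER_LY = 63_241.08

def mean_nearest_neighbor_ly(stars: int, radius_ly: float) -> float:
    """Hypothetical helper: mean nearest-neighbor distance for uniformly random stars.

    Uses the Poisson result <r> = Gamma(4/3) * (3 / (4*pi*n))**(1/3).
    """
    n = stars / (4.0 / 3.0 * math.pi * radius_ly**3)  # stars per cubic light year
    return math.gamma(4.0 / 3.0) * (3.0 / (4.0 * math.pi * n)) ** (1.0 / 3.0)

print(f"4.2 ly = {4.2 * KM_PER_LY:.2e} km")
print(f"4.2 ly / 20 = {4.2 / 20 * AU_PER_LY:,.0f} AU")
d = mean_nearest_neighbor_ly(10_000, 4.2)
print(f"10,000 stars within 4.2 ly -> nearest neighbor ~{d * AU_PER_LY:,.0f} AU")
```

The random-placement estimate lands around 11,000 AU, in the same range as the "15,000 AU or somewhat less" figure.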

I would see this as a powerful inducement to developing interstellar technologies for any civilization that happened to emerge, and Di Stefano agrees, according to this CfA news release. The ‘globular cluster opportunity’ that she identifies would play off the fact that broadcasting messages to nearby stars would presume round-trip travel times not a lot longer than it took to get letters from the United States to Europe in the 18th Century by sail.

As to this site’s own idée fixe, Di Stefano sees further good news:

“Interstellar travel would take less time too. The Voyager probes are 100 billion miles from Earth, or one-tenth as far as it would take to reach the closest star if we lived in a globular cluster. That means sending an interstellar probe is something a civilization at our technological level could do in a globular cluster.”

That does give me pause — imagine one of our Voyagers already a tenth of the way to another star. If civilizations can develop inside globular clusters, they could be just what Di Stefano says, “the first place in which intelligent life is identified in our galaxy.” The problem for our current exoplanet methods is that even the closest globular cluster is a long way from us — both M4 and NGC 6397 are approximately 7200 light years out. Gravitational microlensing may produce some planetary finds, and perhaps new transit efforts could be effective, though we would be working on the outskirts of the cluster and small rocky worlds would be a tough catch.

Even so, it’s a breathtaking prospect, because my imagination is fired by the possibility of intelligent life looking out on a sky packed with stars from the center of a globular cluster. I also take heart from the fact that there is much we don’t know about such clusters. M5, for example, dates back close to the beginning of our universe, yet we know that along with its ancient stars, it also has a population of the young blue stars called ‘blue stragglers.’ Explaining their formation should help us better understand the dynamics of this dazzling environment.

Stellar Age: Recalibrating Our Tools

Making the call on the age of a star is tricky business. Yet we need to master the technique, for stellar age is a window into a star’s astrophysical properties, important in themselves and for understanding the star in the context of its interstellar environment. And for those of us who look at SETI and related issues, the age of a star can be a key factor — is the star old enough to have produced life on its planets, and perhaps a technological civilization?

Until recently, luminosity and surface temperature were the main properties used to make a rough estimate of a star’s age, which shows how challenging the problem is: these properties do change over time, but they give us only approximations of age. More recently, researchers have learned to study sound waves deep in the stellar interior. The method is confined to bright targets and cannot help us with vast numbers of dimmer stars. Called asteroseismology, it has improved our estimates of the ages of old field stars.

Enter gyrochronology. Within the last decade, we’ve learned that stellar rotation rates can help us with a star’s age, for a star’s spin is slowed by interactions between its magnetic field and its stellar wind. The latter, a flow of charged particles moving away from the star, helps to inflate the ‘bubble’ around our own Solar System that we know as the heliosphere. But these gases also become caught in the magnetic field as they stream outward, carrying away some of the star’s angular momentum.

Thus a star’s magnetic field can over time brake its spin rate. Assuming we know the rotation rate and mass of the star, we can use the method to calculate its age. Now we have new work out of the Carnegie Institution for Science that should help refine this technique. What Jennifer van Saders and team have discovered is that our models for predicting the slowdown have been off. The braking action of the magnetic field becomes weaker as stars get older, making the models much less accurate for stars more than halfway through their lifetimes.
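Here is a minimal sketch of a classic gyrochronology relation. It assumes the Barnes (2007) calibration, with coefficients as commonly quoted; treat them as illustrative rather than authoritative. The relation uses color (B-V) as the stand-in for mass. Relations of this kind assume spin-down continues steadily, which is what the van Saders result calls into question. If old stars spin faster than expected, a relation like this would tend to underestimate their ages. The helper names are illustrative.

```python
# Barnes (2007)-style calibration: P = a * (B_V - c)**b * t**n,
# with P in days and t in Myr. Coefficients as commonly quoted; illustrative only.
A, B, C, N = 0.7725, 0.601, 0.4, 0.5189

def rotation_period_days(b_minus_v: float, age_myr: float) -> float:
    """Hypothetical helper: predicted rotation period from color and age."""
    return A * (b_minus_v - C) ** B * age_myr ** N

def gyro_age_myr(b_minus_v: float, period_days: float) -> float:
    """Hypothetical helper: invert the spin-down law for age (needs B-V > 0.4)."""
    return (period_days / (A * (b_minus_v - C) ** B)) ** (1.0 / N)

# Sun-like check: B-V ~ 0.65, age ~ 4570 Myr
print(f"predicted solar period: {rotation_period_days(0.65, 4570):.1f} days")
```

For the Sun this comes out near 27 days, close to its observed rotation.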

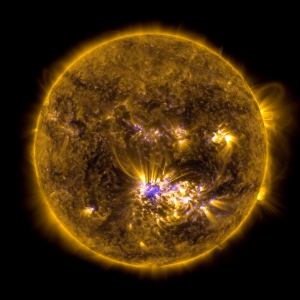

Image: Solar image courtesy of NASA’s Solar Dynamics Observatory.

Appearing in Nature, the paper is based on data from the Kepler spacecraft, which makes it possible to test gyrochronology for a large number of stars older than the Sun. Kepler gives us a significant sample of stars whose rotation rate is measurable and whose asteroseismic properties are now known.

If, as appears the case, there is a change in the way the magnetic field interacts with the stellar wind as a star ages, then we need to find out the nature of this change and when it occurs. In any case, we have hard data that stars more evolved than the Sun rotate more rapidly than our models predict, a fact that limits the usefulness of gyrochronology in these populations. Our own Sun could be approaching the age when its magnetic field loses some of its braking power.

Bear in mind that we are talking about astronomical timescales here, so whatever transition occurs may not happen for hundreds of millions of years. Moreover, the changes to the magnetic interactions are likely to take place over a long period. But the speed of the change and the process behind it point the way to future work as we try to unwrap the riddle of stellar ages. “Gyrochronology,” says van Saders, “has the potential to be a very precise method for determining the ages of the average Sun-like star, provided we can get the calibrations correct.”

The paper is van Saders et al., “Weakened magnetic braking as the origin of anomalously rapid rotation in old field stars,” published online in Nature 4 January 2016 (abstract). Be aware as well of van Saders et al., “Rotation periods and seismic ages of KOIs – comparison with stars without detected planets from Kepler observations,” published online by Monthly Notices of the Royal Astronomical Society 11 December 2015 (abstract). This one deals with using Kepler Objects of Interest to calibrate gyrochronology, concluding that planet-hosting stars show rotational patterns similar to those of stars without any detected planets.

‘A City Near Centaurus’

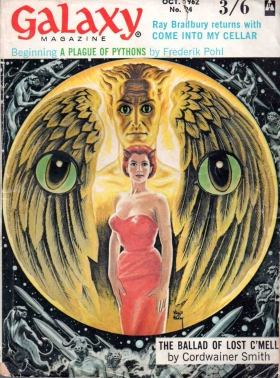

Let’s start the year off with a reflection on things past. Specifically, a story called “A City Near Centaurus,” set on a planet circling one of the Alpha Centauri stars. Just which star is problematic, because our author, Bill Doede, describes it as a planet circling ‘Alpha Centaurus II.’ I’m sure he means Centauri B, but the story, which appeared in Galaxy at the end of Frederik Pohl’s first year as editor, is less concerned with nomenclature and setting than with the conflict between a humanoid alien called Maota and the timeless city he lives in.

Michaelson, our human protagonist, looks out upon this long deserted metropolis:

He gazed out from his position at the complex variety of buildings before him. Some were small, obviously homes. Others were huge with tall, frail spires standing against the pale blue sky. Square buildings, ellipsoid, spheroid. Beautiful, dream-stuff bridges connected tall, conical towers, bridges that still swung in the wind after half a million years. Late afternoon sunlight shone against ebony surfaces. The sands of many centuries had blown down the wide streets and filled the doorways. Desert plants grew from roofs of smaller buildings.

Maota is a humanoid who, fortunately for the reader and Michaelson, speaks English, evidently one of the few who remain half a million years after whatever has caused the disappearance of a once great civilization. If anything, this should remind you of Bradbury’s The Martian Chronicles, whose ancient cities shaped many a young imagination, but Doede’s story likewise has a bit of J. G. Ballard in it, this at a time when Ballard was being introduced to America by Cele Goldsmith, the brilliant editor of Amazing Stories and Fantastic. The early 1960s were truly fecund years for science fiction.

Disturbing a Living Past

I introduce Doede’s story today as a bit of a jeu d’esprit, but also out of genuine curiosity. I had thought I had read every story or novel dealing with the Centauri stars at one point or another. And indeed, I know for a fact that I read the October 1962 issue of Galaxy, for reasons I’ll explain in a moment. But when I stumbled across “A City Near Centaurus” over the weekend, it was by way of a chance encounter on Project Gutenberg, where the tale, its copyright never renewed, is now available. I therefore read it with fresh eyes.

We’re asked to swallow a lot if we’re dealing with humanoids on an alien world, but bear with the tale. To my mind, it’s sprightly done and could easily be imagined as a TV script for Rod Serling’s Twilight Zone, a tale plucking at a kind of moral lesion in what it means to explore and encounter not just the alien but the past. Maota wants Michaelson to leave the city alone, fearing his amateur archeology will disrupt the spirits of the dead.

“What difference does it make?” Maota cried, suddenly angry. “You want to close up all these things in boxes for a posterity who may have no slightest feeling or appreciation. I want to leave the city as it is, for spirits whose existence I cannot prove.”

For his part, Michaelson is as clumsy as Schliemann at Troy, but he wants to know what made this culture tick. The tension is heightened by the fact that these two are alone in the deserted city.

Along the way we encounter an ancient book that seems to communicate telepathically. We also learn that Michaelson possesses a technology that allows teleportation, in the form of a small implant behind his ear that lets him be more or less wherever he chooses to be. Inevitably, although Maota is old, he and Michaelson fight both verbally and physically, and the book is destroyed. The old man then reveals what happened to the civilization once here:

Maota smiled a toothless, superior smile. “What do you suppose happened to this race?”

“You tell me.”

“They took the unknown direction. The books speak of it. I don’t know how the instrument works, but one thing is certain. The race did not die out, as a species becomes extinct.”

Michaelson was amused, but interested. “Something like a fourth dimension?”

“I don’t know. I only know that with this instrument there is no death. I have read the books that speak of this race, this wonderful people who conquered all disease, who explored all the mysteries of science, who devised this machine to cheat death. See this button here on the face of the instrument? Press the button, and….”

“And what?”

“I don’t know, exactly. But I have lived many years. I have walked the streets of this city and wondered, and wanted to press the button. Now I will do so.”

It would be churlish to give away what happens next, but it’s clear that the city lives, and that the tension between inevitable change and the urge to preserve what has been infuses even our encounter with beings from another star. Reading “A City Near Centaurus,” I became intrigued enough to look for information on Bill Doede. Biographical data didn’t emerge, though the Internet Speculative Fiction Database did reveal that he published four other tales between 1960 and 1964, all in Galaxy save one for Fred Pohl’s fledgling Worlds of Tomorrow in early 1964. Clearly, he was an H. L. Gold discovery who managed a working relationship with Pohl after the editorial handoff of Galaxy in 1961.

Riffing on the Future

Some of us keep old magazines and collect others because the pleasures of science fiction from this era were so intense, and in any case, we all gravitate to the better memories of our childhood. I mentioned that I was sure I had read the issue of Galaxy that “A City Near Centaurus” appeared in, and that’s because the lead story in the issue was Cordwainer Smith’s “The Ballad of Lost C’Mell.” I envy those who haven’t made an acquaintance with Smith’s work — he was actually a well-traveled diplomat (and sometime spy) named Paul Linebarger, who led a life fully as interesting as some of his astonishing fiction.

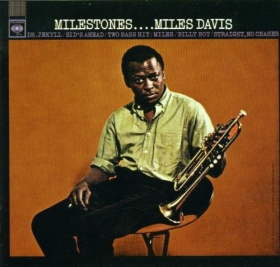

I remember where I was when I read most of Cordwainer Smith’s fiction for the first time, and though I don’t remember Bill Doede, I’m pleased to have recovered another story dealing in some way with our nearest stars. On a broader note, I was thinking this weekend, as I listened to a Bobby Hutcherson album while reading Doede, that jazz and science fiction have much in common. Whether keying off chord changes or moving in a modal direction (as in Miles Davis’ wonderful work from the same era as the Doede story), jazz gives musicians ways to explore sound, with no two performances representing the same idea. Listen to Davis, for example, doing the classic “Milestones” on the album of the same name and then compare the performance with his take on the same piece on Miles Davis In Europe.

It’s the same composition, but taken in entirely different directions — the accelerated tempo of the latter, recorded at the Antibes Jazz Festival, pushes the piece to its limits. Like jazz, science fiction shapes its materials — elements of emerging science — and plays speculative changes with and against them. We invent a future and explore it, tweak it and explore it again, a riffing of ideas that helps us see possible directions for our species. As we begin 2016, I ask myself what changes we’ll play off in the coming year, secure in the knowledge that there is no terminus. In some ways we are always, as David Deutsch would say, at the beginning of infinity.

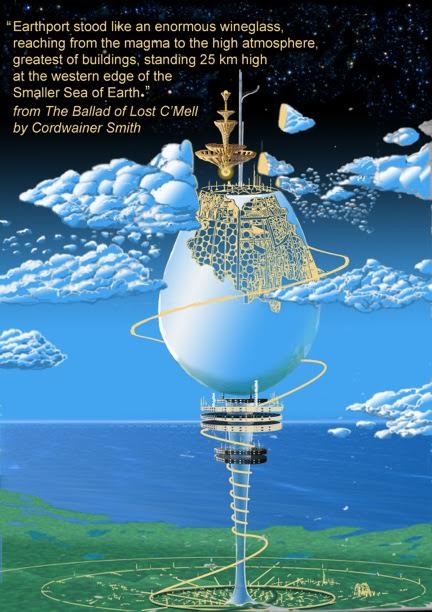

Addendum: Jon Lomberg passed along the beautiful artwork below, his visualization of Earthport, from Cordwainer Smith’s “Ballad of Lost C’Mell,” by way of letting me know he’s also a great fan of the author’s work. Jon also sent a scrupulously detailed chart of Smith’s fictional universe that I couldn’t get to display well due to the limitations of the format here, but have a look at Earthport. Jon’s art can be viewed in considerably more detail at http://www.jonlomberg.com/.