Centauri Dreams

Imagining and Planning Interstellar Exploration

Off on a Comet

Imagine what you could do with a comet at your disposal. In Seveneves, Neal Stephenson’s new novel (William Morrow, 2015), a Musk-like character named Sean Probst decides to go after Comet Grigg-Skjellerup. A lunar catastrophe has doomed planet Earth and humanity is in a frantic rush to figure out how to save at least a fraction of the population by living off-world. Probst understands that a comet would be a priceless acquisition:

“You can’t make rocket fuel out of nickel. But with water we can make hydrogen peroxide — a fine thruster propellant — or we can split it into hydrogen and oxygen to run big engines…. We have to act immediately on long-lead-time work that addresses what we do know. And what we know is that we need to bring water to the Cloud Ark. Physics and politics conspire to make it difficult to bring it up from the ground. Fortunately, I own an asteroid mining company…”

And so on. Lest you think that was a spoiler, be advised that it’s just the tip of a story of Stephensonian complexity. In the world of the novel, even with virtually all the world’s resources committed to putting people and things into space, time is short, and moving a cometary mass will take years. Given today’s technology, we couldn’t move Grigg-Skjellerup the way Probst intends, but I kept thinking about comets and their resources as I pondered the latest news from a comet we’re getting to know very well thanks to Rosetta: 67P/Churyumov-Gerasimenko.

Traveling with a Comet

Rosetta reached Comet 67P/Churyumov-Gerasimenko in August of last year, so we’ve had a year of up-close study, with perihelion of the object’s 6.5-year orbit occurring on August 13 of this year. Watching a comet in action as it reaches perihelion and then recedes from the Sun is what the mission was designed for, and we’re learning that it was money well spent. As the European Space Agency recently reported, we now see a water ice cycle at work on the comet.

The work, which appears in Nature, draws on Rosetta’s Visible, InfraRed and Thermal Imaging Spectrometer (VIRTIS). Lead author Maria Cristina De Sanctis (INAF-IAPS, Rome) explains:

“We found a mechanism that replenishes the surface of the comet with fresh ice at every rotation: this keeps the comet ‘alive’… We saw the tell-tale signature of water ice in the spectra of the study region but only when certain portions were cast in shadow. Conversely, when the Sun was shining on these regions, the ice was gone. This indicates a cyclical behaviour of water ice during each comet rotation.”

The data come from September 2014 and focus on a single one-square-kilometer region on the comet’s ‘neck,’ at the time one of the comet’s most active areas. As the comet rotated, roughly once every twelve hours, the studied block on 67P/Churyumov-Gerasimenko moved into and out of sunlight. The researchers believe that water ice on and just below the surface sublimates when illuminated by the Sun, the gas flowing away from the comet into space. As the region darkens again, the surface cools quickly, while subsurface ice, which stays warmer for a while, briefly continues to sublimate; that vapor freezes out when it reaches the cold surface. A new layer of ice is formed, only to sublimate when the Sun returns to that part of the surface and the cycle begins again.

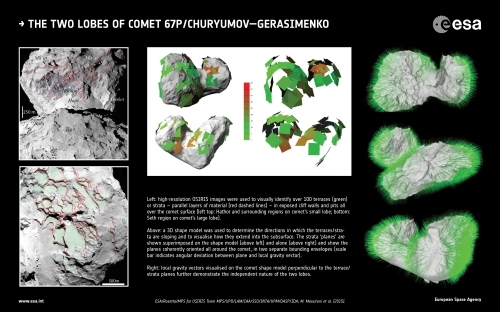

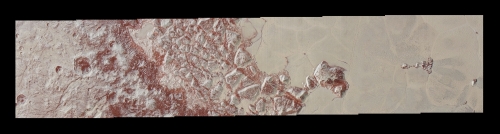

Image: Maps of water ice abundance (left) and surface temperature (right) focusing on the Hapi ‘neck’ region of Comet 67P/Churyumov-Gerasimenko. By comparing the two series of maps, the scientists have found that, especially on the left side of each frame, water ice is more abundant on colder patches (white areas in the water ice abundance maps, corresponding to darker areas in the surface temperature maps), while it is less abundant or absent on warmer patches (dark blue areas in the water ice abundance maps, corresponding to brighter areas in the surface temperature maps). In addition, water ice was only detected on patches of the surface when they were cast in shadow. This indicates a cyclical behaviour of water ice during each comet rotation. Credit/Copyright: ESA/Rosetta/VIRTIS/INAF-IAPS/OBS DE PARIS-LESIA/DLR; M.C. De Sanctis et al. (2015).

So we now have observational proof of the suspected water ice cycle on a cometary surface. The patch of the comet under study accounted for about three percent of the total amount of water vapor being emitted by the whole comet at the same time. Cometary activity, as you would expect, increased as 67P/Churyumov-Gerasimenko neared perihelion, producing abundant data from VIRTIS that will offer a further look at how the object’s surface changes.

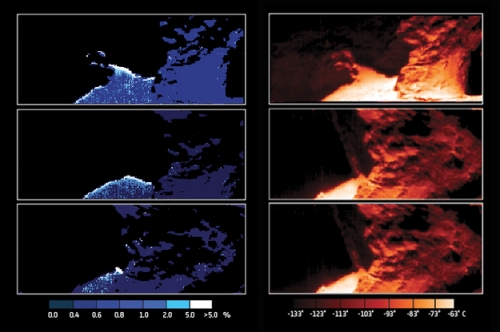

Further work appearing in Nature (and like the previous paper, discussed at the ongoing European Planetary Science Congress in Nantes, France) gives us a good reading on how the comet wound up with its unusual shape. The culprit: A low-speed collision between two separately formed comets. Lead author Matteo Massironi (University of Padova, Italy), an associate scientist of the OSIRIS team, used a 3D model to determine the slopes of over 100 terraced features seen on the comet’s surface, visualizing how they extended into the subsurface. The features were found to be coherently oriented around the comet’s lobes.

“It is clear from the images that both lobes have an outer envelope of material organised in distinct layers, and we think these extend for several hundred metres below the surface. You can imagine the layering a bit like an onion, except in this case we are considering two separate onions of differing size that have grown independently before fusing together.”

The ordered strata uncovered by Massironi and team indicate that only a low-speed collision could have merged the two objects while preserving the layering found deep inside each of them. So we can call 67P/Churyumov-Gerasimenko a ‘contact binary,’ one whose history explains its distinctive shape, which many people liken to a ‘rubber duck.’ Surface variations seen today are likely caused by different rates of sublimation, since the frozen gases embedded within individual cometary layers are not necessarily distributed evenly throughout the comet.

Image: Left: high-resolution OSIRIS images were used to visually identify over 100 terraces (green) or strata – parallel layers of material (red dashed lines) – in exposed cliff walls and pits all over the comet surface (top: Hathor and surrounding regions on comet’s small lobe; bottom: Seth region on comet’s large lobe). Middle: a 3D shape model was used to determine the directions in which the terraces/strata are sloping and to visualise how they extend into the subsurface. The strata ‘planes’ are shown superimposed on the shape model (left panel) and alone (right panel) and show the planes coherently oriented all around the comet, in two separate bounding envelopes (scale bar indicates angular deviation between plane and local gravity vector). Right: local gravity vectors visualised on the comet shape model perpendicular to the terrace/strata planes further illustrate the independent nature of the two lobes. Credit: ESA/Rosetta/MPS for OSIRIS Team MPS/UPD/LAM/IAA/SSO/INTA/UPM/DASP/IDA; M. Massironi et al. (2015)

Will we one day use comets the way Neal Stephenson’s character describes in Seveneves? Supporting a human presence in deep space will involve ‘living off the land’ and utilizing resources like these. We’ll doubtless one day look back on the pioneering work of the Rosetta team and remember how much Comet 67P/Churyumov-Gerasimenko had to teach us.

The paper on the water/ice cycle is De Sanctis et al., “The diurnal cycle of water ice on comet 67P/Churyumov-Gerasimenko,” Nature 525 (24 September 2015), 500-503 (abstract). The paper on the comet’s shape is Massironi et al., “Two independent and primitive envelopes of the bilobate nucleus of comet 67P,” Nature, published online 28 September 2015 (abstract).

On Habitability around Red Dwarf Stars

Learning that there is flowing water on Mars encourages the belief that human missions there will have useful resources, perhaps in the form of underground aquifers that can be drawn upon not just as a survival essential but also to produce interplanetary necessities like rocket fuel. What yesterday’s NASA announcement cannot tell us, of course, is whether there is life on Mars today, though if the detected water is indeed flowing up from beneath the surface, it seems a plausible conjecture that some form of bacterial life may exist below ground, a life perhaps dating back billions of years.

I’ve speculated in these pages that we may in fact identify life around other stars — through studies of exoplanet atmospheres — before we find it elsewhere in our Solar System, given the length of time we have to wait before return missions to places like Enceladus and Europa can be mounted. Perhaps the Mars news can help us accelerate that schedule, at least where the Red Planet is concerned.

Meanwhile, we continue to construct models of habitability not just for Martian organisms but for more advanced creatures on planets around other suns. Witness today’s topic: recent work out of the University of Washington showing that what seemed to be a major problem for life on planets around red dwarfs may in some cases actually be a blessing.

Of Tides and Magnetic Fields

Our understanding of planets around red dwarf stars is that potentially habitable worlds orbit close enough to their star to be tidally locked, with one side always facing the star. We’re seeing interesting depictions of such worlds in recent science fiction, such as Stephen Baxter’s Proxima (Roc, 2014), where a habitable planet around Proxima Centauri undergoes ‘winters’ caused not by axial tilt but by varying levels of activity on the star itself. But tidal locking is problematic, as is the process of getting there: circularizing an orbit generates tidal heat that can affect surface conditions as well as any magnetic field.

Will planets like these have magnetic fields in the first place? According to Rory Barnes (University of Washington), the general belief among astronomers is that they’re unlikely, a conclusion the new work rejects. It’s an important issue because magnetic fields are believed to protect planetary atmospheres from the charged particles of the stellar wind, preventing them from being stripped away into space. Such fields can also shield surface life from dangerous radiation, such as that from flare-spitting M-dwarfs.

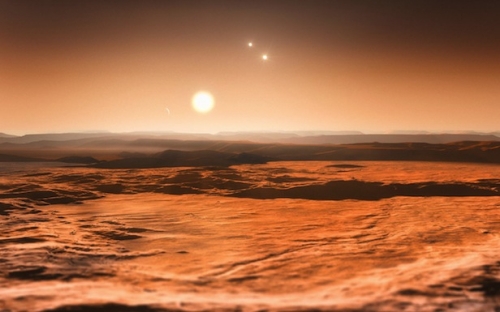

Image: An artist’s impression of the Gliese 667 system from one of the super-Earths that orbit Gliese 667C. Image credit: ESO/M. Kornmesser.

The new paper from Barnes and former UW postdoc Peter Driscoll (now at the Carnegie Institution for Science) takes a look at magnetic fields on planets around red dwarfs. Driscoll began with an examination of tidal effects. In our Solar System, think of Io, its surface punctuated by volcanic activity, to see tidal heating in action. Says Driscoll: “The question I wanted to ask is, around these small stars, where people are going to look for planets, are these planets going to be roasted by gravitational tides?”

And what would be the effect of tidal heating on magnetic fields over the aeons? To find out, Driscoll and Barnes used simulations of planets around stars ranging from 0.1 to 0.6 of a solar mass. Their finding is that tidal heating can help by making a planetary mantle more able to dissipate interior heat, a process that cools the core and thus helps in the creation of a magnetic field.

Thus we have a way to protect the surface of a red dwarf’s planet in an environment that can show a good deal of flare activity in the early part of the star’s lifespan. “I was excited to see that tidal heating can actually save a planet in the sense that it allows cooling of the core,” says Barnes. “That’s the dominant way to form magnetic fields.” A planet in the habitable zone of a red dwarf in its early flare phase may have just the protection it needs to allow life.

But note the mass threshold described in the paper:

…tides are more influential around low mass stars. For example, planets around 0.2 Msun stars with eccentricity of 0.4 experience a tidal runaway greenhouse for 1 Gyr and would be tidally dominated for 10 Gyr. These time scales would increase if the orbits were fixed, for example by perturbations by a secondary planetary companion. We find a threshold at a stellar mass of 0.45 Msun, above which the habitable zone is not tidally dominated. These stars would be favorable targets in the search for geologically habitable Earth-like planets as they are not overwhelmed by strong tides.

Exploring a range of stellar masses and orbital eccentricities, the paper finds a variety of outcomes, with stellar mass as the key variable. Planets with low initial eccentricity experience only weak tides, while planets on highly eccentric orbits experience much stronger effects: high initial eccentricity and tight orbits around low mass stars produce extreme tides that help to circularize planetary orbits. As the mass of the star increases, the habitable zone moves to larger orbital distances and tidal dissipation decreases.
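To see why tidal dissipation falls off so quickly as stellar mass rises, here is a minimal sketch using the classic constant-Q formula for eccentricity tides on a synchronously rotating planet. This is not the viscoelastic model Driscoll and Barnes actually use; the mass-luminosity power laws, the Earth-sized planet, and the k2 and Q values are all assumptions chosen for illustration, and the formula is only accurate for small eccentricities.

```python
import math

# Physical constants (SI units)
G = 6.674e-11        # gravitational constant, m^3 kg^-1 s^-2
M_SUN = 1.989e30     # solar mass, kg
AU = 1.496e11        # astronomical unit, m
R_EARTH = 6.371e6    # Earth radius, m


def luminosity_lsun(m_star):
    """Approximate main-sequence luminosity in solar units (assumed power laws)."""
    if m_star < 0.43:
        return 0.23 * m_star ** 2.3
    return m_star ** 4.0


def hz_distance_au(m_star):
    """Orbital distance receiving Earth's insolation: a = sqrt(L / L_sun) AU."""
    return math.sqrt(luminosity_lsun(m_star))


def tidal_surface_flux(m_star, ecc, k2=0.3, q=100.0, radius=R_EARTH):
    """Tidal heat flux (W/m^2) of a synchronously rotating planet in the HZ.

    Constant-Q eccentricity-tide formula (small-ecc approximation):
        E_dot = (21/2) (k2/Q) G M^2 R^5 n e^2 / a^6,  n = sqrt(G M / a^3)
    k2 and q are assumed placeholder values, not taken from the paper.
    """
    m = m_star * M_SUN
    a = hz_distance_au(m_star) * AU
    n = math.sqrt(G * m / a ** 3)  # orbital mean motion, rad/s
    e_dot = 10.5 * (k2 / q) * G * m ** 2 * radius ** 5 * n * ecc ** 2 / a ** 6
    return e_dot / (4.0 * math.pi * radius ** 2)


if __name__ == "__main__":
    for m_star in (0.1, 0.2, 0.3, 0.45, 0.6):
        print(f"{m_star:.2f} Msun: HZ at {hz_distance_au(m_star):.3f} AU, "
              f"tidal flux {tidal_surface_flux(m_star, 0.1):.2e} W/m^2")
```

Because the heating scales roughly as M^2.5 a^-7.5 and the habitable-zone distance grows with stellar luminosity, the flux in this toy model drops by several orders of magnitude between 0.1 and 0.6 solar masses, echoing the trend the paper reports; the absolute numbers depend heavily on the assumed k2/Q.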

Given all these scenarios, helpful magnetic fields are only one possible outcome, and even when they form, they may not be sufficient to protect life. The Driscoll/Barnes model includes cases in which planetary cores undergo super-cooling, solidifying and shutting down the magnetic dynamo. Hotter mantle temperatures and lower core cooling rates can also weaken the magnetic field below the point at which it can protect the planet’s surface.

Other possibilities: Planets orbiting close to their star in highly eccentric orbits will experience enough tidal heating to produce a molten surface. Tidal heating can also produce high rates of volcanic eruption, producing a toxic environment for life (the atmospheres of such planets may well be detectable with future generations of space- and ground-based telescopes). Tidal heating effects are most extreme for planets in the habitable zone around very small stars, those less than half the mass of the Sun.

So we don’t exactly have a panacea that makes all red dwarf planets in the habitable zone likely to support life. What we do have is a model showing that for worlds orbiting stars above 0.45 solar masses, tidal effects do not overwhelm the prospects for surface life, while still allowing the formation of a protective magnetic field. Some of the magnetic fields generated last for the lifetime of the planet.

Needed improvements in the model, the paper suggests, include factors like variable internal composition and dissipation in oceans or internal liquid layers. “With growing interest in the habitability of Earth-like exoplanets, the development of geophysical evolution models will be necessary to predict whether these planets have all the components that are conducive to life.”

The paper is Driscoll and Barnes, “Tidal Heating of Earth-like Exoplanets around M Stars: Thermal, Magnetic, and Orbital Evolutions,” Astrobiology Vol. 15, Issue 9 (22 September 2015). Abstract / preprint.

Pluto, Bonestell and Richard Powers

Like the Voyagers and Cassini before it, New Horizons is a gift that keeps on giving. As I looked at the latest Pluto images, I was drawn back to Chesley Bonestell’s depiction of Pluto, a jagged landscape under a dusting of frozen-out atmosphere. Bonestell’s images in The Conquest of Space (Viking, 1949) took the post-World War II generation to places that were only dimly seen in the telescopes of the day, Pluto being the tiniest and most featureless of all.

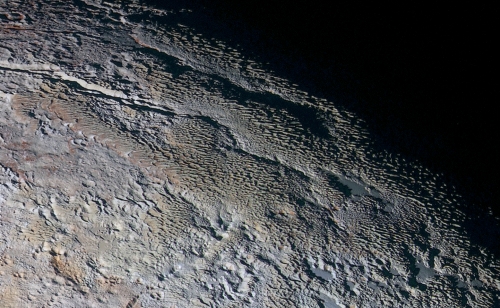

But paging through my copy of the book, I’m struck by how, in the case of Pluto, even Bonestell’s imagination failed to do it justice. The sense of surprise that accompanies many of the incoming New Horizons images reminds me of Voyager’s hurried flyby of Neptune and the ‘cantaloupe’ terrain it uncovered on Triton back in 1989. On Pluto, as it turns out, we have ‘snakeskin’ terrain, just as unexpected, and likewise in need of a sound explanation.

Image: In this extended color image of Pluto taken by NASA’s New Horizons spacecraft, rounded and bizarrely textured mountains, informally named the Tartarus Dorsa, rise up along Pluto’s day-night terminator and show intricate but puzzling patterns of blue-gray ridges and reddish material in between. This view, roughly 530 kilometers across, combines blue, red and infrared images taken by the Ralph/Multispectral Visual Imaging Camera (MVIC) on July 14, 2015, and resolves details and colors on scales as small as 1.3 kilometers. Credit: NASA/Johns Hopkins University Applied Physics Laboratory/Southwest Research Institute.

Taken near the terminator, the image teases out a pattern of linear ridges. What exactly causes a striated surface like this on a world so far from the Sun? I fully understand William McKinnon’s almost startled reaction to the image. McKinnon (Washington University, St. Louis) is a New Horizons Geology, Geophysics and Imaging (GGI) team deputy lead:

“It’s a unique and perplexing landscape stretching over hundreds of miles. It looks more like tree bark or dragon scales than geology. This’ll really take time to figure out; maybe it’s some combination of internal tectonic forces and ice sublimation driven by Pluto’s faint sunlight.”

The Pluto image below has an abstract quality that combines with our awareness of its location to create an almost surreal response. I’m reminded more than anything else of some of Richard Powers’ science fiction covers — Powers was influenced by the surrealists (especially Yves Tanguy) and developed an aesthetic that captured the essence of the hardcovers and paperbacks he illustrated. This starkly set view of mountains of ice amidst smooth plains could be a detail in a Powers cover for Ballantine, for whom he worked in the 1950s and 60s.

Image: High-resolution images of Pluto taken by NASA’s New Horizons spacecraft just before closest approach on July 14, 2015, are the sharpest images to date of Pluto’s varied terrain – revealing details down to scales of 270 meters. In this 120-kilometer section taken from the larger, high-resolution mosaic, the textured surface of the plain surrounds two isolated ice mountains. Credit: NASA/Johns Hopkins University Applied Physics Laboratory/Southwest Research Institute.

Just for fun, here’s a Powers piece to make the point. ‘The Shape Changer’ was painted in 1973 for a novel by Keith Laumer.

But back to Pluto itself. Below we have a high resolution image showing dune-like features and what this JHU/APL news release describes as “the older shoreline of a shrinking glacial ice lake, and fractured, angular, jammed-together water ice mountains with sheer cliffs.”

Image: High-resolution images of Pluto taken by NASA’s New Horizons spacecraft just before closest approach on July 14, 2015, reveal features as small as 250 meters across, from craters to faulted mountain blocks, to the textured surface of the vast basin informally called Sputnik Planum. Enhanced color has been added from the global color image. This image is about 530 kilometers across. Credit: NASA/Johns Hopkins University Applied Physics Laboratory/Southwest Research Institute.

The wide-angle Ralph Multispectral Visual Imaging Camera (MVIC) gives us a view of Pluto’s colors in the image below, as John Spencer (GGI deputy lead, SwRI) explains:

“We used MVIC’s infrared channel to extend our spectral view of Pluto. Pluto’s surface colors were enhanced in this view to reveal subtle details in a rainbow of pale blues, yellows, oranges, and deep reds. Many landforms have their own distinct colors, telling a wonderfully complex geological and climatological story that we have only just begun to decode.”

Image: NASA’s New Horizons spacecraft captured this high-resolution enhanced color view of Pluto on July 14, 2015. The image combines blue, red and infrared images taken by the Ralph/Multispectral Visual Imaging Camera (MVIC). The image resolves details and colors on scales as small as 1.3 kilometers. Credit: NASA/Johns Hopkins University Applied Physics Laboratory/Southwest Research Institute.

Finally, we trace the distribution of methane across Pluto’s surface, seeing higher concentrations on the bright plains and crater rims, much less in darker regions.

“It’s like the classic chicken-or-egg problem,” said Will Grundy, New Horizons surface composition team lead from Lowell Observatory in Flagstaff, Arizona. “We’re unsure why this is so, but the cool thing is that New Horizons has the ability to make exquisite compositional maps across the surface of Pluto, and that’ll be crucial to resolving how enigmatic Pluto works.”

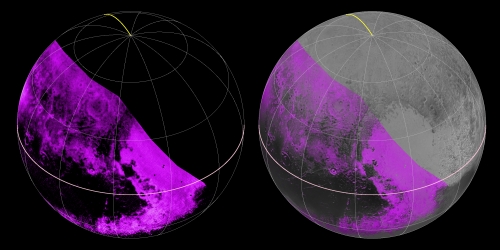

Image: The Ralph/LEISA infrared spectrometer on NASA’s New Horizons spacecraft mapped compositions across Pluto’s surface as it flew past the planet on July 14, 2015. On the left, a map of methane ice abundance shows striking regional differences, with stronger methane absorption indicated by the brighter purple colors, and lower abundances shown in black. Data have only been received so far for the left half of Pluto’s disk. At right, the methane map is merged with higher-resolution images from the spacecraft’s Long Range Reconnaissance Imager (LORRI). Credit: NASA/Johns Hopkins University Applied Physics Laboratory/Southwest Research Institute.

In a chapter of The Conquest of Space called “The Solar Family,” Willy Ley, who wrote the book’s text to accompany Bonestell’s paintings, described what was then known about the nine planets of the Solar System, taking readers through the search for the ‘Trans-Neptune,’ the world we would learn to call Pluto. Everything about the Trans-Neptune turned out to defy expectations, he explains, noting that most astronomers of the time had assumed it would be about Neptune’s size, of low density, and in an orbit far beyond Neptune’s. He writes:

Since everything turned out to be different from expectations, it is not surprising that a few of the old guard which did the theorizing tend to feel that Pluto is not the ninth planet they had been looking for, but an unexpected and unsuspected extra member of the solar family. The real ‘Trans-Neptune’ might still be undiscovered.

Even then, Pluto’s status as a planet seems to have been ambiguous, and today we hear speculations about another world in a far more distant orbit that could influence the trajectories of outer system objects like Sedna. In every way, it seems, Pluto has stirred the imagination while confounding our theories. The continuing dataflow from New Horizons deepens that tradition, and perhaps also contains the clues we’ll need to resolve Pluto’s mysteries.

Seeing Alien Power Beaming

We’ve long discussed intercepting not only beacons but stray radio traffic from other civilizations. The latter may be an all but impossible catch for our technology, but there is a third possibility: Perhaps we can intercept the ‘leakage’ from a beamed power infrastructure used to accelerate another civilization’s spacecraft. The idea has been recently quantified in the literature, and Jim Benford examines it here in light of a power-beaming infrastructure he has studied in detail on the interplanetary level. The CEO of Microwave Sciences, Benford is a frequent contributor to these pages and an always welcome voice on issues of SETI and its controversial cousin METI (Messaging to Extraterrestrial Intelligence).

by James Benford

Beaming of power to accelerate sails for a variety of missions has been a frequent topic on this site. It has long been pointed out that beaming of power for interplanetary commerce has many advantages. Beaming power for space transportation purposes can involve Earth-to-space, space-to-Earth, and space-to-space transfers using high-power microwave beams, millimeter-wave beams or lasers. The power levels are high and transient and could easily dwarf any of our previous leakage to space.

We should be mindful of the possibilities of increased leakage from Earth in the future, if we build large power beaming systems. It has been previously noted that such leakage from other civilizations might be an observable [1].

Now workers at Harvard have quantified the leakage from beaming for space propulsion, its observables and some implications for SETI. The paper is “SETI via Leakage From Light Sails in Exoplanetary Systems,” by James Guillochon and Abraham Loeb (http://arxiv.org/abs/1508.03043). Theirs is the first work to show quantitatively that beam-powered sailship leakage is an observable for SETI.

Studies have shown that leakage from TV and radio broadcasts is essentially undetectable from one star to another. But the driving of sails by powerful beams of radiation is far more focused than isotropic communication signals, and of course far more powerful, so such beams could be detected far more easily. These are not SETI signals so much as an easily detected aspect of advanced civilizations.

Fast track to Mars

The mission Guillochon and Loeb study is one that previous workers have quantified: interplanetary supply missions using unmanned spacecraft. The ship is a sail with payload, a sailship, propelled via microwave beam from Earth to Mars. Previous work was described here and in New Scientist (see “New Sail Design to Reach 60 Kilometers Per Second” in Centauri Dreams and “Solar super-sail could reach Mars in a month,” New Scientist, 29 January 2005).

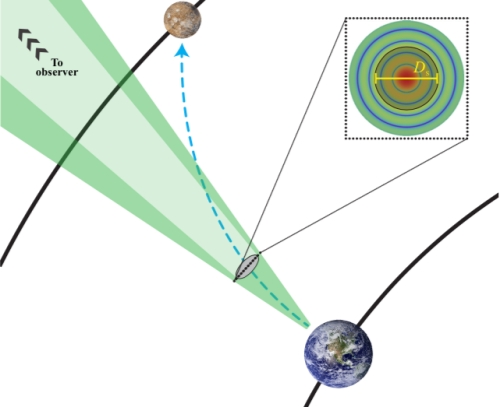

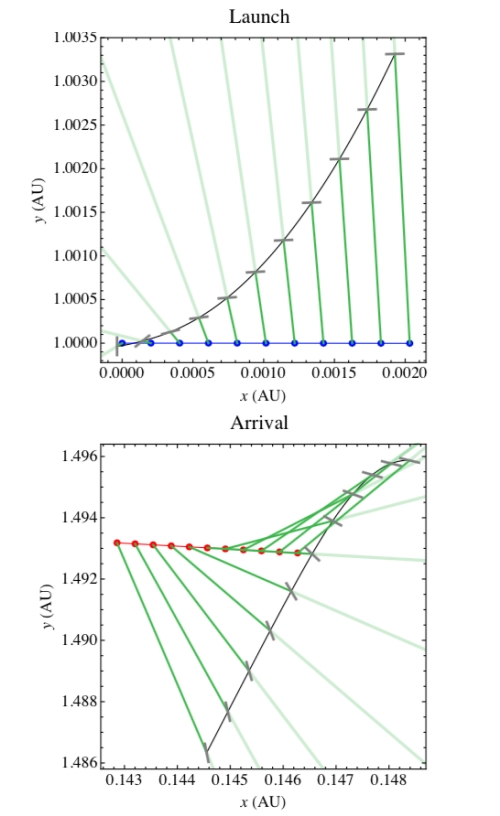

The Mars mission is shown in figure 1: a beam-driven sailship transits from Earth to Mars. An interstellar observer sees the beam accelerating the sail because the beam overlaps the sail to some extent at all distances.

Figure 1. Schematic of Mars cargo mission via microwave beaming, not to scale. The path of the sailship is the dashed arrow. The inset is the beam profile shown in green overlaying the sail of diameter Ds. The beam always overlaps the sail to some extent.

The sail is accelerated from near Earth and decelerated by a similar system near Mars. Parameters for the mission are based on my papers on Cost-Optimized SETI Beacons and Sailships [2,3]: 1-ton sailship accelerated to 100 km/sec, beam power 1.5 TW, power duration 3 hours, beam frequency 68 GHz, acceleration 1 gee, transmitter aperture 1.5 km, sail diameter 300 m, sail surface density 4 × 10⁻⁵ kg/m². (For background, see A Path Forward for Beamed Sails and Detecting a ‘Funeral Pyre’ Beacon.)
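A back-of-the-envelope check shows how these parameters hang together. This minimal sketch assumes the sail intercepts and perfectly reflects the entire beam, so the thrust is 2P/c, and treats the 1 ton as the total accelerated mass. It is an idealized check: once the sail moves beyond the beam’s focusing distance it intercepts only part of the beam, so the real thrust falls below this value. Variable names are illustrative.

```python
import math

C = 2.998e8   # speed of light, m/s
G0 = 9.81     # standard gravity, m/s^2

# Mission parameters quoted in the text
beam_power = 1.5e12          # W (1.5 TW)
ship_mass = 1000.0           # kg (1-ton sailship)
v_final = 100e3              # m/s (100 km/s)
sail_diameter = 300.0        # m
sail_areal_density = 4e-5    # kg/m^2

# Thrust on a perfect reflector that intercepts the whole beam: F = 2P/c
thrust = 2.0 * beam_power / C
accel = thrust / ship_mass

# Constant-acceleration kinematics to reach v_final
burn_time = v_final / accel
burn_distance = v_final ** 2 / (2.0 * accel)

# Mass of the sail membrane itself
sail_mass = sail_areal_density * math.pi * (sail_diameter / 2.0) ** 2

print(f"thrust        {thrust:,.0f} N")
print(f"acceleration  {accel / G0:.2f} gee")
print(f"burn time     {burn_time / 3600:.2f} h")
print(f"burn distance {burn_distance / 1e3:,.0f} km")
print(f"sail mass     {sail_mass:.2f} kg")
```

The reflected-beam thrust works out to almost exactly one gee, the burn to 100 km/s takes a little under three hours (in line with the stated three-hour power duration), and the acceleration phase covers roughly 500,000 km. The sail membrane itself weighs only about 3 kg, so nearly all of the ton is available for everything else.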

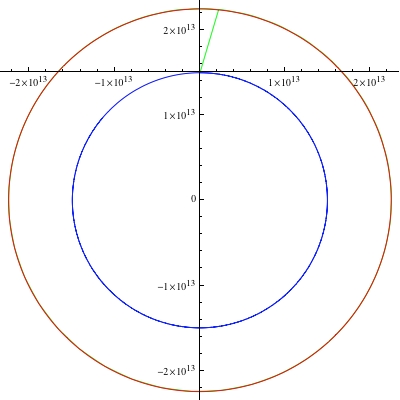

The calculation in the paper assumes that the two planets are in conjunction for the voyage, separated by 0.5 AU, and that the path, although shown bending in Fig. 1, is almost a straight line between them. Figure 2 shows the trajectory. This yields a 9-day transit time at 100 km/s. Figure 3 shows the launch and arrival trajectories of the sailship.
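The nine-day figure follows from simple arithmetic. A minimal sketch, assuming a straight-line 0.5 AU path with a one-gee acceleration phase at Earth and a matching deceleration phase at Mars, with coasting at 100 km/s in between (the acceleration value comes from the mission parameters, not from the paper’s trajectory model):

```python
AU = 1.496e11   # astronomical unit, m
G0 = 9.81       # standard gravity, m/s^2


def transit_days(distance_au=0.5, v_cruise=100e3, accel=G0):
    """Trip time in days for accelerate / coast / decelerate along a straight line."""
    d = distance_au * AU
    t_burn = v_cruise / accel                  # duration of each burn, s
    d_burn = v_cruise ** 2 / (2.0 * accel)     # distance covered during each burn, m
    t_coast = (d - 2.0 * d_burn) / v_cruise    # coasting time at cruise speed, s
    return (2.0 * t_burn + t_coast) / 86400.0


print(f"{transit_days():.2f} days")
```

The burns cover only about 500,000 km each, a tiny fraction of 0.5 AU, so the trip time is dominated by the coast and comes out just under nine days.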

Figure 2. Sail flight from Earth (blue orbit at 12 o’clock) to Mars (red orbit).

Figure 3. Top (bottom) panel shows sailship’s launch (arrival). Black curve shows sailship (gray segments, not to scale) leaving Earth (Mars), represented by the blue (red) curve. Blue (red) points represent Earth’s (Mars’) position at evenly spaced intervals. Green lines originating from the planets show beam direction.

The beam always overlaps the sail; that overlap will increase as the sail passes the limit for beam focusing with the given transmitter aperture. Beyond that distance the beam spot swells and the leakage rises as the sailship flies away, until the beam is turned off.
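The focusing limit can be estimated from diffraction. A minimal sketch, assuming the commonly used relation that the spot just matches the sail at distance L_f = D_t D_s / (2.44 λ), and crudely treating the spot beyond that point as a uniform disk that grows linearly with distance. This toy ignores the sidelobes that make some spillover unavoidable at every distance, so it reports zero leakage inside L_f.

```python
C = 2.998e8  # speed of light, m/s


def focus_distance(d_transmitter, d_sail, freq_hz):
    """Distance (m) at which the diffraction-limited spot matches the sail: D_t D_s / (2.44 lambda)."""
    wavelength = C / freq_hz
    return d_transmitter * d_sail / (2.44 * wavelength)


def spill_fraction(distance, d_transmitter, d_sail, freq_hz):
    """Crude fraction of beam power missing the sail, treating the spot as a uniform disk."""
    l_f = focus_distance(d_transmitter, d_sail, freq_hz)
    if distance <= l_f:
        return 0.0
    # Spot diameter grows in proportion to distance, so intercepted area ~ (L_f / L)^2
    return 1.0 - (l_f / distance) ** 2


lf = focus_distance(1500.0, 300.0, 68e9)
print(f"focusing distance: {lf / 1e3:,.0f} km")
for multiple in (1, 2, 5, 12):
    frac = spill_fraction(multiple * lf, 1500.0, 300.0, 68e9)
    print(f"{multiple:>2} x L_f: {frac:.3f} of the beam misses the sail")
```

With the quoted 1.5 km aperture, 300 m sail and 68 GHz beam, this gives a focusing distance of roughly 42,000 km. A one-gee burn to 100 km/s covers on the order of 500,000 km, so in this crude picture most of the beam power spills past the sail during the later part of the acceleration, which is precisely the leakage an outside observer could detect.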

How to See Power Beams

At 100 parsecs (326 light years), beam intensity is estimated to be of order 1 Jansky, which is about 100 times the typical detection threshold of SETI radio searches. The sweeping action of the beam in Figure 2 has implications for observers. The receiving radio telescope will typically see a rising signal because the beam is beginning to sweep past, then a drop in signal as the sail’s shadow falls on the receiver, then a rise as the beam reappears, followed by a decline. In other words, the time-varying intensity is a symmetric transient with two peaks and much less (or even nothing) in between. Guillochon and Loeb estimate the timescale for the transit to be of order 10 seconds.
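The double-peaked signature can be illustrated with a toy model. This sketch is not the Guillochon-Loeb calculation: it simply multiplies an assumed Gaussian beam profile by a smooth dip where the sail blocks the center of the beam, with widths of a few seconds chosen (arbitrarily) to give an event lasting of order ten seconds.

```python
import math


def leakage_intensity(t, t_center=0.0, beam_width=5.0, shadow_width=1.5):
    """Toy received intensity (arbitrary units) as a beam sweeps past an observer.

    A Gaussian beam profile is multiplied by a smooth 'hole' where the sail
    blocks the central part of the beam. Widths are in seconds and illustrative.
    """
    x = t - t_center
    beam = math.exp(-x ** 2 / (2.0 * beam_width ** 2))
    unshadowed = 1.0 - math.exp(-x ** 2 / (2.0 * shadow_width ** 2))
    return beam * unshadowed


def local_maxima(samples):
    """Indices of strict local maxima in a list of samples."""
    return [i for i in range(1, len(samples) - 1)
            if samples[i - 1] < samples[i] > samples[i + 1]]


times = [0.1 * k for k in range(-200, 201)]   # -20 s to +20 s in 0.1 s steps
curve = [leakage_intensity(t) for t in times]
peaks = local_maxima(curve)
print("peak times (s):", [round(times[i], 1) for i in peaks])
print("intensity at center:", curve[200])
```

The curve has two symmetric peaks a few seconds either side of the center and drops to zero when the sail’s shadow crosses the receiver, the qualitative shape described above.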

The transmitting and receiving systems will be used pretty constantly because they cost a lot to build, while launch expenses are low, basically the cost of the electrical energy. Because the beam is aimed from one planet to another, we have an opportunity to use our growing knowledge of exoplanets in a clever way.

Because the rapidly accumulating data on exoplanets frequently yield all six orbital elements needed to describe the orbits of transiting planets, we can predict times when we will lie along the line of sight between two planets that could be beaming power to each other to drive sailships. This ‘conjunction’ is a matter of our perspective; the two planets are not near each other, merely aligned along our line of sight.
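A minimal sketch of the prediction step, under strong simplifying assumptions: two circular, coplanar orbits seen exactly edge-on, so each planet’s sky position reduces to one coordinate, x = a sin(θ). The planets line up along our sightline whenever their projected positions coincide, and the code finds those moments by bracketing sign changes on a coarse grid and refining them by bisection. Real predictions would use all six orbital elements; the orbital radii, periods and phases below are hypothetical.

```python
import math


def projected_x(a, period, phase, t):
    """Sky-plane coordinate of a planet on a circular, edge-on orbit."""
    return a * math.sin(2.0 * math.pi * t / period + phase)


def alignment_times(p1, p2, t_end, step=0.5, tol=1e-6):
    """Times in [0, t_end] when two planets share a projected position.

    p1, p2 are (a, period, phase) tuples; step must be small compared
    with the orbital periods so that no pair of roots is skipped.
    """
    def sep(t):
        return projected_x(*p1, t) - projected_x(*p2, t)

    times = []
    t = 0.0
    while t < t_end:
        lo, hi = t, min(t + step, t_end)
        if sep(lo) == 0.0:
            times.append(lo)
        elif sep(lo) * sep(hi) < 0.0:
            while hi - lo > tol:  # bisection on the bracketed sign change
                mid = 0.5 * (lo + hi)
                if sep(lo) * sep(mid) <= 0.0:
                    hi = mid
                else:
                    lo = mid
            times.append(0.5 * (lo + hi))
        t = hi
    return times


# Hypothetical system: radii in AU, periods and times in days, phases in radians
inner = (0.05, 4.0, 0.0)
outer = (0.08, 8.1, 1.0)
events = alignment_times(inner, outer, t_end=30.0)
print(len(events), "alignments in 30 days")
```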

The Guillochon-Loeb paper quantifies this strategy for detecting such leakage transients. They estimate that if we monitor continuously, the probability of detection would be on the order of 1% per conjunction event. They state that “for a five-year survey with ~10 conjunctions per system, about 10 multiply-transiting, inhabited systems would need to be tracked to guarantee a detection” with our existing radio telescopes. Of course the key question is just which planets are inhabited, and that’s what SETI is trying to find out. The leakage would be evidence for that, so this statement is a bit circular.
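The quoted numbers amount to about one expected detection: 10 systems × 10 conjunctions × 1% per event. Treating each conjunction as an independent trial (an assumption made here, not taken from the paper) shows that “guarantee” should be read loosely.

```python
import math


def detection_odds(p_per_event=0.01, events_per_system=10, n_systems=10):
    """Expected detections and chance of at least one, assuming independent events."""
    n_events = events_per_system * n_systems
    expected = p_per_event * n_events
    p_at_least_one = 1.0 - (1.0 - p_per_event) ** n_events
    return expected, p_at_least_one


def systems_needed(confidence, p_per_event=0.01, events_per_system=10):
    """Smallest number of systems giving P(at least one detection) >= confidence."""
    events = math.log(1.0 - confidence) / math.log(1.0 - p_per_event)
    return math.ceil(events / events_per_system)


expected, p_any = detection_odds()
print(f"expected detections: {expected:.2f}, P(at least one): {p_any:.2f}")
# expected detections: 1.00, P(at least one): 0.63
```

In this idealization, ten systems give roughly a 63% chance of at least one detection; reaching 95% would take about 30 systems.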

The recently announced Breakthrough Listen Initiative will have 25% of the observing time on the Parkes and Green Bank telescopes and so could initiate such a search.

Consequences

This first-of-its-kind calculation shows that the forthcoming Breakthrough Listen Initiative has a new observable to look for. Given enough observing time, the prospects for SETI now seem improved. If alien civilizations use power beaming, as we ourselves likely will in future centuries, we may observe the leakage from these more advanced societies.

Although not mentioned in the paper, there are two implications:

ETI, having done the same thinking, would realize that they could be observed. They could put a message on the power beam and broadcast it for our receipt at no additional energy or cost. So by observing leakage from power beams we may well find a message embedded on the beam. That message may use optimized power-efficient designs such as spread spectrum and energy minimization [4-5]. That would be more sophisticated than the simple 1 Hz tones looked for back in the 20th Century (see Is Energy a Key to Interstellar Communication?).

When we build large power beaming systems in the future, we will likely put messages on them; that is, if we’ve addressed the METI issue, meaning that humanity has had enough discussion, and reached enough agreement, about what we wish to say [6].

References

1. Gregory Benford, James Benford and Dominic Benford, “Searching for Cost Optimized Interstellar Beacons,” Astrobiology 10, 491-498 (2010).

2. James Benford, Gregory Benford and Dominic Benford, “Messaging With Cost Optimized Interstellar Beacons,” Astrobiology 10, 475-490 (2010).

3. James Benford, “Starship Sails Propelled by Cost-Optimized Directed Energy,” JBIS 66, 85-95 (2013).

4. David Messerschmitt, “Interstellar communication: The case for spread spectrum,” Acta Astronautica 81, 227-238 (2012).

5. David Messerschmitt, “Design for minimum energy in interstellar communication,” Acta Astronautica 107, 20-39 (2015).

6. John Billingham and James Benford, “Costs and Difficulties of large-scale METI, and the Need for International Debate on Potential Risks,” JBIS 67, 17-23 (2014).

Another Search for Kardashev Type III

I have no idea whether we would be able to recognize a Kardashev Type III civilization if we saw one, but the search is necessary as we rule out some possibilities and examine others. As we saw yesterday, the Glimpsing Heat from Alien Technologies project at Penn State has examined data on 100,000 galaxies, finding 93 with mid-infrared readings that merit further study. One thing that we, operating with what we know about physics, would expect from a super-civilization is the production of waste heat, in the temperature range between 100 and 600 K, and that’s why previous searches for Dyson spheres have gone looking for such signatures.

But Kardashev Type III is an extreme reach. We’re talking about a civilization capable of using the energies not just of its own star but of its entire galaxy, and how this would be done is a question on which we can only speculate. Erik Zackrisson (Uppsala University) and colleagues do just that in a new paper that balances nicely against Michael Garrett’s recent study. The Zackrisson paper posits Dyson spheres as one way to harvest radiation energy, and takes as its inspiration a 1999 study by James Annis that considers this method in relation to detecting a galaxy-spanning civilization.

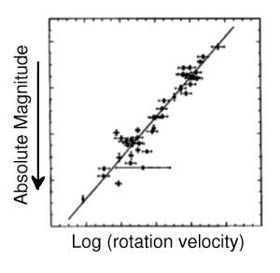

A Dyson sphere would partially shroud a star or, in its extreme form, completely surround it, making vast amounts of radiant energy available for the purposes of its builders. Annis wondered how a civilization building Dyson spheres throughout a galaxy would affect the light output of the galaxy. To study the matter, he suggested using the so-called Tully-Fisher relation, the correlation (in spiral galaxies) between galactic luminosity and rate of rotation.

Zackrisson follows Annis’ lead. If you can determine a galaxy’s rotation velocity, you can use the Tully-Fisher relation to infer its intrinsic brightness, since the optical brightness of a spiral galaxy shows a consistent relation to its maximum rotation velocity and radius. Annis, using a sample of 137 galaxies, looked for candidates that were darker than they should be, finding no outliers in his admittedly limited dataset. Michael Garrett likewise relied on an established relation, in his case between a galaxy’s mid-infrared output and its radio emission, one shown to hold over a wide range of luminosities and redshifts, and looked for cases where the relation failed.
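To make the logic concrete, here is a minimal sketch of how a Tully-Fisher comparison works, assuming the usual linear form in which absolute magnitude scales with the logarithm of the maximum rotation velocity. The zero point, slope and pivot velocity below are hypothetical placeholders, not a published fit and not the calibration Zackrisson et al. used; the example galaxy is likewise invented.

```python
import math

# Hypothetical Tully-Fisher coefficients, for illustration only (not a published fit).
ZERO_POINT = -21.0  # absolute magnitude at v_max = 10**2.2 km/s (~158 km/s)
SLOPE = -7.0        # magnitudes per dex of rotation velocity (negative: faster -> brighter)

def predicted_abs_mag(v_max_kms):
    """Absolute magnitude the relation predicts from the maximum rotation velocity."""
    return ZERO_POINT + SLOPE * (math.log10(v_max_kms) - 2.2)

def observed_abs_mag(apparent_mag, distance_mpc):
    """Absolute magnitude from apparent magnitude and distance via the distance modulus."""
    distance_pc = distance_mpc * 1e6
    return apparent_mag - 5.0 * math.log10(distance_pc / 10.0)

# Hypothetical galaxy: rotation speed, apparent magnitude and distance are made up.
v_max, m_app, d_mpc = 200.0, 11.5, 30.0
residual = observed_abs_mag(m_app, d_mpc) - predicted_abs_mag(v_max)
print(f"{residual:+.2f} mag (positive = fainter than Tully-Fisher predicts)")
```

Because the distance enters as 5 log10(d), a distance underestimated by a factor of two makes a galaxy appear 5 log10(2) ≈ 1.5 magnitudes fainter than it really is, the same size as the candidate threshold Annis proposed. That is one way mundane distance errors can mimic an under-luminous galaxy.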

Image: The Tully-Fisher relation shows that rotation curves can be correlated with luminosity. The higher the luminosity, the higher the maximum rotational velocity.

If there is a Kardashev Type III civilization building Dyson spheres on a galactic scale, its astroengineering projects should not affect the gravitational potential of the galaxy, but they should decrease its total optical luminosity, making the galaxy an outlier that appears less luminous than it should. Zackrisson applied Tully-Fisher to a sample of 1359 spiral galaxies drawn from a catalog of galaxies produced in 2007 by Christopher Springob and colleagues. The criterion for KIII candidate galaxies was the one used by Annis: candidates should be ≥ 1.5 magnitudes fainter than expected from Tully-Fisher (the reasons for the choice have to do with limiting spurious detections and are explained at some length in the paper).
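The 1.5-magnitude criterion translates directly into a flux ratio through the astronomical magnitude scale, where a difference of Δm magnitudes is a flux factor of 10^(0.4 Δm). The sketch below shows that conversion and a simple candidate flag; the function names and test values are hypothetical, and only the 1.5-magnitude threshold comes from the text.

```python
THRESHOLD_MAG = 1.5  # candidate criterion described in the text

def flux_ratio(delta_mag):
    """Factor by which flux drops for a given magnitude difference (Pogson scale)."""
    return 10 ** (0.4 * delta_mag)

def is_kiii_candidate(observed_abs_mag, predicted_abs_mag, threshold=THRESHOLD_MAG):
    """Flag galaxies at least `threshold` magnitudes fainter than Tully-Fisher predicts.

    Larger magnitudes are fainter, so the deficit is observed minus predicted.
    """
    return observed_abs_mag - predicted_abs_mag >= threshold

print(f"1.5 mag deficit = flux lower by a factor of {flux_ratio(1.5):.2f}")
# → 1.5 mag deficit = flux lower by a factor of 3.98
```

This is why the paper describes its candidates as under-luminous "by a factor of 4". If the entire deficit came from Dyson spheres, a 1.5-magnitude shortfall would mean roughly three quarters of the optical light (1 − 1/3.98 ≈ 0.75) was being intercepted.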

As with Michael Garrett’s study, we find little evidence of Kardashev Type III in the results. The conservative upper limit on the fraction of local disks that meet the criteria for a candidate KIII galaxy is ≲ 3%. But we need to drill down into this. Let me quote from the paper:

In this sample, a total of 11 objects are found to be significantly under-luminous (by a factor of 4 in the I-band) compared to the Tully-Fisher relation, and therefore qualify as Kardashev type III host galaxy candidates according to this test. However, by scrutinizing the optical morphologies and WISE 3.4–22 µm infrared fluxes of these objects, we find nothing that strongly supports the astroengineering interpretation of their unusually low optical luminosities.

So we do have a few anomalous galaxies, but they most likely owe their peculiarities to astrophysical causes unrelated to astroengineering on a K-III scale. The paper continues:

Hence, we conclude that their apparent positions in the Tully-Fisher diagram likely have mundane causes, with underestimated distances being the most probable explanation for most of the candidates. Under the assumption that none of them are bona fide KIII objects, we set a tentative upper limit of ≲ 0.3% on the fraction of disk galaxies harbouring KIII civilizations.

Image: This Hubble image shows the scatterings of bright stars and thick dust that make up spiral galaxy Messier 83, otherwise known as the Southern Pinwheel Galaxy. One of the largest and closest barred spirals to us, this galaxy has hosted a large number of supernova explosions, and appears to have a double nucleus lurking at its core. What we have yet to find in galaxies like these is any sign of KIII civilizations. Credit: NASA, ESA, and the Hubble Heritage Team (STScI/AURA) Acknowledgement: William Blair (Johns Hopkins University).

Does this mean that galaxy-spanning civilizations do not exist? Not necessarily. The paper examines a specific scenario, that such civilizations would construct myriad Dyson spheres to harvest radiation energy, and that the waste heat of these constructions would be observable. We are a species whose experience of technology is negligible compared to a KIII culture, so we cannot know what kind of options they would have available after millions of years of technological development. All we can say for sure based on a study like this is that there is no evidence of massive deployment of Dyson spheres in any of the galaxies studied.

I don’t say this to minimize the value of such work in any way. We can’t know if something is there unless we look for it. We keep looking, then, while trying to imagine what civilizations far in advance of our own might do to use the maximum energy available to them. Ruling out one scenario is cause for a re-calibration of our assumptions and a continuing search.

The paper is Zackrisson et al., “Extragalactic SETI: The Tully-Fisher Relation as a Probe of Dysonian Astroengineering in Disk Galaxies,” in press at The Astrophysical Journal (preprint). The Annis paper is “Placing a limit on star-fed Kardashev type III civilisations,” JBIS 52, pp.33-36 (1999).

No Sign of Galactic Super-Civilizations

‘Dysonian SETI’ is all about studying astronomical data in search of evidence of advanced civilizations. As such, it significantly extends the SETI paradigm both backwards and forwards in time. It moves forward because it offers entirely new search space in not just our own galaxy but galaxies throughout the visible universe. But it also moves backward in the sense that we can use vast amounts of stored observational data from telescopes both ground- and space-based to do the work. We don’t always need new instruments to do SETI, or even new observations. With Dysonian SETI, we can do a deep dive into our increasingly abundant digital holdings.

At Penn State, Jason Wright and colleagues Matthew Povich and Steinn Sigurðsson have been conducting the Glimpsing Heat from Alien Technologies (G-HAT) project, which scans data in the infrared from the Wide-field Infrared Survey Explorer (WISE) mission and the Spitzer Space Telescope. This is ground-breaking work that I’ve written about here on several occasions — see G-HAT: Searching For Kardashev Type III and SETI and Stellar Drift for recent articles. Jason Wright himself explained G-HAT in the Centauri Dreams article Glimpsing Heat from Alien Technologies.

Last April, G-HAT produced a paper that found only a small number of galaxies out of the 100,000 studied that showed higher levels of mid-infrared than would be expected, with the significant caveat that there are natural processes at work that could mimic what might conceivably be the waste heat of an advanced civilization, a Kardashev Type III culture deploying the energies of its entire galaxy. This is a finding that shows us, in Wright’s words, that “…out of the 100,000 galaxies that WISE could see in sufficient detail, none of them is widely populated by an alien civilization using most of the starlight in its galaxy for its own purposes.”

That is a fascinating finding in its own right, because we’re dealing with a large sample of galaxies that are billions of years old. The Kardashev model moves from Type II, a technology capable of using the entire energy output of its star, to Type III, a technology capable of using an entire galaxy’s luminous energies. If we assume a progression toward ever more capable energy harvesting like this, then abundant time has been available for Type III cultures to arise. Those interesting galaxies with a mid-infrared signature larger than expected will receive more study, to be sure, but the result at this point seems stark. None of the galaxies studied show signs of civilizations that are reprocessing 85 percent or more of their starlight into the mid-infrared.

Image: The Sombrero galaxy (M104), a bright nearby spiral galaxy. The prominent dust lane and halo of stars and globular clusters give this galaxy its name. If a Kardashev Type III civilization were engaged here, shouldn’t we be able to detect it? Credit: NASA/ESA and The Hubble Heritage Team (STScI/AURA).

But we keep looking, and those few anomalous galaxies from G-HAT still need explanation. Michael Garrett is general and scientific director of ASTRON (Netherlands Institute for Radio Astronomy). Garrett has been working with data on 93 candidate galaxies found in the most recent paper from G-HAT (citation below). These were the galaxies whose mid-infrared values were far enough out of the ordinary to draw attention. The G-HAT paper (lead author Roger Griffith) calls them “…the best candidates in the Local Universe for Type III Kardashev civilizations.”

The stellar energy supply of a galaxy as examined in the Kardashev taxonomy is roughly 10³⁸ watts, with waste heat expected to be radiated at mid-infrared (MIR) wavelengths, corresponding to temperatures between 100 and 600 K. Garrett’s new paper notes that the 93 sources G-HAT has found would, if the radiation measured here were interpreted as waste heat, include galaxies reprocessing more than 25 percent of their starlight. But can we make that interpretation? Garrett is quick to add that there are many ways that emissions in the mid-infrared can develop through entirely natural astrophysical processes.
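Why 100 to 600 K means "mid-infrared" follows from Wien's displacement law, λ_peak = b / T with b ≈ 2898 µm·K: a blackbody at 100 K peaks near 29 µm and one at 600 K near 4.8 µm, a range overlapping the WISE 3.4 to 22 µm bands mentioned earlier. The short calculation below shows those peaks and the order-of-magnitude waste-heat power implied by the reprocessing fractions in the text, using the rough 10³⁸ W figure; it is a back-of-envelope sketch, not part of either paper's analysis.

```python
WIEN_B_UM_K = 2897.77  # Wien's displacement constant in micrometre-kelvin

def peak_wavelength_um(temp_k):
    """Wavelength (micrometres) at which a blackbody of temperature temp_k peaks."""
    return WIEN_B_UM_K / temp_k

for temp in (100, 300, 600):
    print(f"{temp} K -> peak near {peak_wavelength_um(temp):.1f} um")

galaxy_power_w = 1e38  # rough stellar energy supply of a galaxy, as quoted in the text
for fraction in (0.25, 0.85):  # reprocessing fractions discussed in the text
    print(f"{fraction:.0%} reprocessed -> ~{fraction * galaxy_power_w:.1e} W of waste heat")
```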

To make a determination about what is actually happening, Garrett relies on a relation known as the infrared-radio correlation, which the paper calls a ‘fundamental relation’ for galaxies that holds over a wide range of redshifts and spans at least five orders of magnitude in luminosity. It extends, as the paper notes, well into the FIR/Mid-Infrared and sub-millimetre domains. The tight correlation between infrared and radio emission was originally uncovered with data from the IRAS (Infrared Astronomical Satellite) mission, launched in 1983. And it takes us into interesting territory as a diagnostic tool, as the paper explains:

The physical explanation for the tightness of the correlation is that both the non-thermal radio emission and the thermal IR emission are related to mechanisms driven by massive star formation. For galaxies in which the bulk of the Mid-IR emission is associated with waste heat processes, there is no obvious reason why artificial radio emission would be similarly enhanced. While the continuum radio emission level might increase through the use of advanced communication systems, the amount of waste energy deposited in the radio domain is likely to be many orders of magnitude less than that expected at Mid-IR wavelengths.

The Garrett paper, then, looks at the 93 G-HAT sources in terms of the mid-infrared radio correlation, with the assumption that galaxies associated with Type III civilizations should appear as outliers. The result: The correlation holds as expected. The likely interpretation is that the excesses of radiation in mid-infrared wavelengths are due to natural heat sources rather than the heat of a titanic civilization going about its business. Garrett puts the point bluntly: “The original research at Penn State has already told us that such systems are very rare but the new analysis suggests that this is probably an understatement, and that advanced Kardashev Type III civilisations basically don’t exist in the local Universe.”
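One common way to express an infrared-radio correlation is as a logarithmic flux ratio, q = log10(S_IR / S_radio), which stays roughly constant for star-forming galaxies. The sketch below assumes that form, with made-up fluxes and a hypothetical 0.5 dex tolerance, and flags galaxies whose q sits far above the sample's median: mid-infrared bright but radio faint, which is where waste heat without matching radio emission would show up. It is not Garrett's actual pipeline, band choice or data.

```python
import math
import statistics

def q_value(s_mir, s_radio):
    """Log ratio of mid-IR to radio flux density (same units, so they cancel)."""
    return math.log10(s_mir / s_radio)

def flag_mir_excess(galaxies, tolerance_dex=0.5):
    """Return names whose q exceeds the sample median by more than tolerance_dex.

    galaxies: dict mapping name -> (mid-IR flux, radio flux); values are hypothetical.
    """
    qs = {name: q_value(mir, radio) for name, (mir, radio) in galaxies.items()}
    median_q = statistics.median(qs.values())
    return sorted(name for name, q in qs.items() if q - median_q > tolerance_dex)

sample = {
    "gal_A": (200.0, 10.0),  # q ~ 1.30
    "gal_B": (150.0, 8.0),   # q ~ 1.27
    "gal_C": (300.0, 14.0),  # q ~ 1.33
    "gal_D": (400.0, 2.0),   # q ~ 2.30: mid-IR bright, radio faint
}
print(flag_mir_excess(sample))  # → ['gal_D']
```

A galaxy that follows the correlation, as all 93 G-HAT sources reportedly did, lands near the median and is not flagged, which is consistent with a star-formation origin for its mid-infrared emission.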

In an email this morning, I ran today’s post by Jason Wright, who said that Garrett’s study was the kind of thing the G-HAT team had hoped for, an investigation into what might have caused the galactic anomalies by other methods. He also noted that even with this information, we can’t absolutely rule out a K-III:

“Kardashev’s original line of research was to estimate the power available to a KIII to transmit radio waves that we would detect at Earth. Determining that these galaxies are radio bright in a way correlated with their MIR is a good bit of information to have, but it doesn’t rule anything out (they do seem to be consistent with starbursts, as expected, but they’re not inconsistent with KIII’s). On the other hand, there’s no reason I can think of that bright radio emissions from leaked communications would follow the MIR-radio correlation for starbursts (what a coincidence that would be!).”

Or might a Kardashev Type III civilization be advanced enough to keep its waste heat emissions low in ways that are beyond our understanding? Whatever the case, the paper adds that the correlation method can be extended to the search for possible Kardashev Type II civilizations within our own or nearby galaxies:

…it should be noted that the IR-radio correlation is also known to hold on sub-galactic scales (e.g. Murphy (2006). A comparison of resolved Mid-IR and radio images of nearby galaxies on kpc scales can also be useful in identifying artificial mid-IR emission from advanced civilisations that lie between the Type II and Type III types. While Wright et al. (2014a) venture that Type III civilisations should emerge rapidly from Type IIs, it might be that some specific galactic localities are preferred – see for example Cirkovic & Bradbury (2006) or are to be best avoided e.g. the galactic centre. A comparison of the resolved radio and mid-IR structures can therefore also be relevant to future searches of waste heat associated with advanced civilisations.

The paper is Garrett, “The application of the Mid-IR radio correlation to the Ĝ sample and the search for advanced extraterrestrial civilisations,” accepted at Astronomy & Astrophysics (abstract). The Griffith paper on recent G-HAT results is Griffith et al., “The Ĝ Infrared Search for Extraterrestrial Civilizations with Large Energy Supplies. III. The Reddest Extended Sources in WISE,” Astrophysical Journal Supplement Series Vol. 217, No. 2, published 15 April 2015 (abstract / preprint).