Centauri Dreams

Imagining and Planning Interstellar Exploration

Lost in Time and Lost in Space

by Dave Moore

Dave Moore, a frequent Centauri Dreams contributor, tells me he was born and raised in New Zealand, spent time in Australia, and now makes his home in California. “As a child I was fascinated by the exploration of space and science fiction. Arthur C. Clarke, who embodied both, was one of my childhood heroes. But growing up in New Zealand in the 60s, anything to do with such things was strictly a dream. The only thing it did lead to was to getting a degree in Biology and Chemistry.” But deep space was still on Dave’s mind and continues to be, as the article below, drawing on his recent paper in the Journal of the British Interplanetary Society, attests. “While I had aspirations at one stage of being a science fiction writer,” Dave adds, “I never expected that I would emulate the other side of Arthur C. Clarke and get something published in JBIS.” But he did, and now explains the thinking behind the paper.

The words from “Science Fiction/Double Feature” in the Rocky Horror Picture Show seem particularly apt after looking into the consequences of temporal dispersion in exosolar technological civilizations.

And crawling on the planet’s face

Some insects called the human race . . .

Lost in time

And lost in space

. . . and meaning.

All meaning.

Hence the title of my paper in a recent issue of the Journal of the British Interplanetary Society (Vol. 63 No. 8 pp 294-302). The paper, “Lost in Time and Lost in Space: The Consequences of Temporal Dispersion for Exosolar Technological Civilizations,” grew out of my annual attendance at Contact in San Jose, an interdisciplinary convention of scientists, artists and science fiction writers. From the papers presented there, I got a general feeling for the state of play in the search for extraterrestrial civilizations but never felt inclined to make a contribution until it occurred to me to look at the results of Exosolar Technological Civilizations (ETCs) emerging at different times. It would be an exercise similar to many that have been done using the Drake equation, only instead of looking at the consequences of spatial dispersion, I’d be looking at the consequences of different temporal spreads.

My presentation of the results and my conclusions went over sufficiently well that it was suggested that I turn it into a paper, but not having any experience in publishing papers, I let the project drop until Paul got to see my musings and suggested JBIS as a suitable forum.

The Separation Between Civilizations

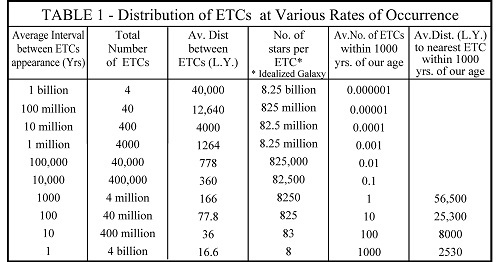

The core of the paper is a table showing the number of ETCs you would get and their average separations assuming they arose at various rates from a starting point four billion years ago.

I used an idealized galaxy which was a disk of uniform stellar density, that of our solar neighborhood, to keep things simple. (For the justification of why this is a reasonable assumption, and of why it seems quite likely that potential life-bearing planets have been around for eight billion years, I’ll refer you to my paper.)

One of the first things I realized is that the median age of all civilizations is entirely independent of the frequency at which they occur. It’s always approximately one-third the age of the oldest civilization. If ETCs start emerging slowly and their frequency picks up (a more likely scenario), this skews the median age lower, but you are still looking at a period of about a billion years.

And the median age of all civilizations is also the median age of our nearest neighbor. There’s a fifty/fifty chance it will be either younger or older than that, but there’s a 90% chance it will at least be 10% of the median, which means that in all likelihood our nearest neighbor will be hundreds of millions of years older than us. And, if you want to find an ETC of approximately our own age, say within a thousand years of ours, you will on average have to pass by a million older to vastly older civilizations. As you can see from columns 5 and 6 in the table, if ETCs haven’t emerged with sufficient frequency to produce a million civilizations, then you won’t find one.

Once you realize that ETCs are not only scattered through vast regions of space but also scattered across a vast amount of time, this casts a very different light on many common assumptions about the matter. Take the idea, very prevalent in a lot of literature, that the galaxy is full of approximately coequally-aged civilizations (emerging within a thousand years of each other), a scenario I will call the Star Trek universe. If you look at the bottom row of the table, you can see there simply aren’t enough stars in our galaxy for this to work.

After discovering that when dealing with extraterrestrial civilizations, you are dealing with great age, I then began to look at the sort of effects great age would have on civilizations.

Age and Power

The first thing I did was to extrapolate our energy consumption, and I discovered that at a 2% compound growth rate our civilization would require the entire current energy output of the galaxy (reach a Kardashev III level) in less than 3000 years, which doesn’t look likely unless a cheap, convenient FTL drive gets discovered. What this does point out, though, is that in extraordinarily short times, geologically speaking, civilizations can theoretically grow to enormous power outputs.

The next thing I did was to review the literature on interstellar travel. Many of the interstellar propulsion scenarios have power requirements that cluster around the 100 Terawatt level. This is a million times that of a proposed 100 MW nuclear powered Mars vessel, which is considered to be within our current or near future range of capabilities. Assuming a society with a million times our current power consumption would find a 100 TW vessel similarly within its capabilities, then our first interstellar vessel would be 700 years into our future at a 2% growth rate.
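The growth arithmetic in this section is easy to check. The following is a minimal Python sketch, not the calculation from the paper: the function name `years_to_grow` is made up for illustration, and the figures for present-day world power use and for the galaxy's total output are assumed round numbers that do not appear in the article.

```python
import math

def years_to_grow(factor: float, annual_rate: float = 0.02) -> float:
    """Years of compound growth at annual_rate needed to multiply power use by factor."""
    return math.log(factor) / math.log(1.0 + annual_rate)

# Million-fold step from the text: a 100 MW Mars vessel versus a 100 TW starship.
vessel_factor = 100e12 / 100e6
years_to_starship = years_to_grow(vessel_factor)

# Assumed round figures (not taken from the article):
CURRENT_POWER_W = 1.8e13   # rough present-day world power consumption
GALAXY_OUTPUT_W = 4e37     # rough luminosity of a Milky Way-like galaxy
years_to_kardashev_3 = years_to_grow(GALAXY_OUTPUT_W / CURRENT_POWER_W)

print(f"Million-fold growth at 2%: about {years_to_starship:.0f} years")
print(f"Kardashev III at 2%:       about {years_to_kardashev_3:.0f} years")
```

With these inputs the million-fold step comes out just under 700 years and the Kardashev III level a little over 2800 years. Because the result depends only on the logarithm of the ratio, even large errors in the assumed starting and ending figures shift the answer by no more than a few centuries.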

Are these energy levels feasible? If Earth continues its current growth in energy consumption, we will overheat our planet through our waste heat alone in the next century, never mind global warming through CO2 emissions. So it looks as if, should we remain confined to our planet, we will probably never have the ability to send out interstellar colony ships. There is, however, a way to have our civilization reach enormous energy levels while still within our solar system.

Our solar system may have as many as a trillion comets and KBOs orbiting it, ten times the mass of the Earth, all nicely broken up. (There may be more comets in our solar system than there are stars in our galaxy.) And as this is the bulk of the easily accessible material, it would be logical to assume that eventually this is where the bulk of our civilization will finish up.

A hydrogen-fusion powered civilization could spread throughout our cometary belt, and with no grand engineering schemes such as the construction of a Dyson sphere, it could, through the cumulative growth of small, individual colonies, eventually build up a civilization of immense power and size. For example, if each of 100 billion comets were colonized with a colony that used 1000 MW of power (a small city’s worth), then the total civilizational power consumption would be in the order of 10^20 watts. Pushing it a bit, if there were a 20,000 MW colony on each of the 5 trillion comets in the Oort cloud and the postulated Hills cloud, then the total civilizational power consumption would be 10^23 watts, that of a red dwarf star.
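The two totals follow directly from multiplying colony count by power per colony. Here is a short Python sketch of that multiplication, using only the figures quoted above; the helper name `total_power_w` is an illustrative choice.

```python
WATTS_PER_MW = 1e6

def total_power_w(colonies: float, power_per_colony_mw: float) -> float:
    """Total power in watts for a number of colonies, each drawing the given megawatts."""
    return colonies * power_per_colony_mw * WATTS_PER_MW

# 100 billion comets, each with a 1000 MW colony.
modest = total_power_w(100e9, 1_000)

# 5 trillion comets (Oort cloud plus postulated Hills cloud), 20,000 MW each.
ambitious = total_power_w(5e12, 20_000)

print(f"Modest scenario:    {modest:.1e} W")
print(f"Ambitious scenario: {ambitious:.1e} W")
```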

For this society, interstellar colonization would be but another step.

The End of a Civilization

Ian Crawford has done some analysis of galactic colonization using a scenario in which a tenth-lightspeed colony ship plants a colony on a nearby star system. The colony then grows until it is capable of launching its own ship, and so on. This produces a 1000-2000 year cycle, with the assumptions I’ve been using, but even if you work this scenario conservatively, the galaxy is colonized in 20 million years, which is an order of magnitude less than the expected age of our nearest neighbor.

Of course, all the previous points may be moot if a civilization’s lifetime is short, so I then looked into the reasoning advanced for civilizational termination.

Various external causes have been postulated to truncate the life span of a technological civilization–Gamma Ray Bursters are a favorite. When you look at them though, you realize that anything powerful enough to completely wipe out an advanced technological civilization would also wipe out or severely impact complex life; there’s at most a 10,000 year window of vulnerability before a growing civilization spreading throughout the galaxy becomes completely immune to all these events. This is one fifty-thousandth of the 500 million years it took complex life to produce sentience. So any natural disasters frequent enough to destroy a large portion of extraterrestrial civilizations would also render them terminally rare to begin with. If extraterrestrial civilizations do come to an end, it must be by their own doing.

There’ve been numerous suggestions as to why this may happen, but these arguments are usually anthropocentric and parochial and not universal. If they don’t apply to just one civilization, that civilization can go on to colonize the galaxy. So, at most, self-extinction would represent but another fractional culling akin to the other terms in the Drake equation. There’ve also been many explanations for the lack of evidence of extraterrestrial civilizations: extraterrestrials are hiding their existence from us for some reason, they never leave their home world, our particular solar system is special in some way, etc., but these are also parochial arguments; the same reasoning applies. They also fail the test of Occam’s razor. The simplest explanation supported by the evidence is that our civilization is the only one extant in our galaxy.

Into the Fermi Question

The only evidence we have about the frequency and distribution of ETCs is that we can find no signs of them so far. This has been called the Fermi paradox, but I don’t regard this current null result as a paradox. Rather I regard it as a bounding measurement. Since the formulation of the Drake equation, two major variables have governed our search for ETCs: their frequency and longevity. This leads to four possibilities for the occurrence of exosolar civilizations.

- i) High frequency and longevity

- ii) High frequency and short life spans

- iii) Low frequency and longevity

- iv) Low frequency and short life spans

These four categories are arbitrary, in effect being hacked out of a continuum. The Fermi paradox eliminates the first one.

We can get a good idea of the limits for the second by looking at an article that Robert Zubrin did for the April 2002 issue of Analog. In it, he postulated a colonization scenario similar to Ian Crawford’s but cut the expanding civilizations off at arbitrary time limits. He then found the likelihood for Earth having drifted through the ETCs’ expanding sphere of influence in the course of our galactic orbit. The results indicated that unless all civilizations have lifetimes of under 20,000 years, we are very likely to have been visited or colonized frequently in the past. But to have every civilization last less than a specified time requires some sort of universalist explanation, which is hard to justify given the naturally expected variation in ETCs’ motivation.

Nothing that we have seen so far eliminates the third possibility, however.

Implications for SETI Strategy

Finally, in the paper, I turned to reviewing our search strategies for ETCs in light of what has been learned.

Given that ETCs will most probably be very distant and have a high power throughput, looking for the infrared excess of their waste heat looks like a good bet. Low frequency but high power also implies searching extragalactically. Take the Oort cloud civilization I postulated earlier and assume it colonizes every tenth star in a galaxy like ours. Its total power consumption would be in the order of 10^30 watts. This would show up as an infrared excess of one part in 10^7 to 10^8 of a galaxy’s output.
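To see where a figure of this size comes from, here is a hedged Python sketch. It assumes the lower Oort cloud estimate of 10^20 watts per colonized system, a round 10^11 stars in the galaxy, and a total galactic output of about 4 x 10^37 watts. The star count and galactic output are assumptions of this sketch, not numbers stated in the article.

```python
POWER_PER_SYSTEM_W = 1e20    # lower Oort cloud civilization estimate from the text
COLONIZED_FRACTION = 0.1     # "every tenth star"

# Assumed round figures (not taken from the article):
STARS_IN_GALAXY = 1e11
GALAXY_OUTPUT_W = 4e37

total_power_w = POWER_PER_SYSTEM_W * STARS_IN_GALAXY * COLONIZED_FRACTION
infrared_excess = total_power_w / GALAXY_OUTPUT_W

print(f"Total civilizational power: {total_power_w:.1e} W")
print(f"Fraction of galactic output: one part in {1 / infrared_excess:.1e}")
```

Under these assumptions the waste heat is a few parts in a hundred million of the galaxy's output, inside the quoted range of one part in 10^7 to 10^8.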

I found that other ideas, like searching for ancient artifacts and using gravitational lensing for a direct visual search, seem to have some potential, but when I looked at radio searches, these turned out to be one of the least likely ways to find a civilization. The problem quickly becomes apparent after looking at Table I. Any ETCs close enough to us to make communication worthwhile will most likely be in the order of 10^8 to 10^9 years old, which gives them plenty of time to become very powerful, and therefore highly visible, and to have visited us. If civilizations occur infrequently, as in the top row of Table I, then the distances are such that the communication times are in the order of 10,000 years. If civilizational lifetimes are short but the frequency is high, then you still have enormous distances. (You can use Table I to get some idea of the figures involved. The last two columns show the distances at various frequencies for civilizations within 1000 years of our age. For ten thousand years move those figures up one row, for 100,000 years two rows, etc.) Under most cases, the signal reply time to the nearest civilization will exceed the civilization’s lifetime, or our patience. Looking for stray radio signals under the distant but short-lived scenario does not look very hopeful either. To send a signal tens of thousands of light years, an effective isotropic radiated power of 10^17 to 10^20 watts is required, and while this is within sight of our current technology, the infrastructure and power levels are far in excess of anything required for casual communication even to nearby stars.

The results of all my thinking are not so much answers but, hopefully, a framing for asking the right questions.

Considerations in SETI searches have tended to focus on the nearby and a close time period and were set when our knowledge in this field was in its infancy. There’ve been some refinements to our approach since then, but generally our thinking has been built on this base. It’s time to carefully go over all our assumptions and reexamine them in the light of our current knowledge. The Fermi paradox needs to be explained, not explained away.

The paper is Moore, “Lost in Time and Lost in Space: The Consequences of Temporal Dispersion for Exosolar Technological Civilisations,” JBIS Vol. 63, No. 8 (August 2010), pp. 294-301.

A New Confirmation of General Relativity

Einstein’s Special Theory of Relativity remains much in the news after a weekend of speculation about the curious neutrino results at CERN. Exactly what is going on with the CERN measurements remains to be seen, but the buzz in the press has been intense as the specifics of the experiment are dissected. It will be a while before we have follow-up experiments that could conceivably replicate these results, but it’s interesting to see that another aspect of Einstein’s work, the General Theory of Relativity, has received a new kind of confirmation, this time on a cosmological scale.

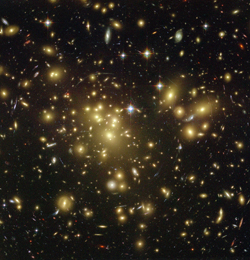

Here we’re talking not about the speed of light but the way light is affected by gravitational fields. The work is out of the Niels Bohr Institute at the University of Copenhagen, where researchers say they have tested the General Theory at a scale 10^22 times larger than any laboratory experiment. Radek Wojtak, an astrophysicist at the Institute, has worked with a team of colleagues to analyze measurements of light from galaxies in approximately 8,000 galaxy clusters. Each cluster is a collage of thousands of individual galaxies, all held together by gravity.

Image: Researchers have analysed measurements of the light from galaxies in approximately 8,000 galaxy clusters. Galaxy clusters are accumulations of thousands of galaxies (each light in the image is a galaxy), which are held together by their own gravity. This gravity affects the light that is sent out into space from the galaxies. Credit: Niels Bohr Institute/ Radek Wojtak.

The Copenhagen team was looking specifically at redshift, where the wavelength of light from distant galaxies shifts toward the red with increasing distance. Redshift has been used to tell us much about how the universe has expanded since the light being studied left its original source. Normally we think of the redshift being the result of the changing distance between the distant light source and ourselves as we both move through space. This is the familiar Doppler shift, and it could be either a redshift or, if the objects are approaching each other, a blueshift.

But another kind of redshift can come into play when space itself is expanding. Here the distance between the distant light source and ourselves is also increasing, but because of its nature, we call this a cosmological redshift rather than a Doppler shift. Gravity can also cause a redshift, as light emitted by a massive object is affected by the gravity of the object. The loss of energy from emitted photons shows up as a redshift commonly called a gravitational redshift.

The General Theory of Relativity can be used to predict how light, and thus redshift, is affected by large masses like galaxy clusters. In the new work, we’re focusing on both cosmological and gravitational redshift, effects apparent when the light from galaxies in the middle of a galaxy cluster is compared to the light from those on the outer edges of the cluster. Wojtak puts it this way:

“We could measure small differences in the redshift of the galaxies and see that the light from galaxies in the middle of a cluster had to ‘crawl’ out through the gravitational field, while it was easier for the light from the outlying galaxies to emerge.”

Going on to measure the total mass of each galaxy cluster, the team could then use the General Theory of Relativity to calculate the gravitational redshift for the differently placed individual galaxies. What emerged from the work was that the theoretical calculations of the gravitational redshift based on the General Theory of Relativity were in agreement with the astronomical observations. Says Wojtak:

“Our analysis of observations of galaxy clusters show[s] that the redshift of the light is proportionally offset in relation to the gravitational influence from the galaxy cluster’s gravity. In that way our observations confirm the theory of relativity.”
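For a feel for the size of the effect, the weak-field gravitational redshift of light escaping from radius r around a mass M is approximately GM/(rc^2). The Python sketch below applies that textbook approximation to a point mass. It is emphatically not the Copenhagen team's method, which works with measured cluster mass profiles, and the cluster mass and the two radii are hypothetical values chosen only for illustration.

```python
G = 6.674e-11            # gravitational constant, m^3 kg^-1 s^-2
C = 2.998e8              # speed of light, m/s
SOLAR_MASS_KG = 1.989e30
MPC_M = 3.086e22         # one megaparsec in metres

def gravitational_redshift(mass_kg: float, radius_m: float) -> float:
    """Weak-field redshift z ~ GM / (r c^2) for light escaping a point mass."""
    return G * mass_kg / (radius_m * C**2)

# Hypothetical cluster: 1e14 solar masses, treated crudely as a point mass.
cluster_mass_kg = 1e14 * SOLAR_MASS_KG
z_inner = gravitational_redshift(cluster_mass_kg, 0.1 * MPC_M)  # galaxy near the centre
z_outer = gravitational_redshift(cluster_mass_kg, 1.0 * MPC_M)  # galaxy near the edge

delta_z = z_inner - z_outer
velocity_equivalent_km_s = delta_z * C / 1000.0

print(f"Redshift difference: {delta_z:.2e}")
print(f"Velocity equivalent: {velocity_equivalent_km_s:.1f} km/s")
```

For these illustrative inputs the difference is a few parts in 10^5, equivalent to a velocity offset on the order of 10 km/s. That is tiny compared with the random motions of galaxies inside a cluster, which suggests why a sample of thousands of clusters is useful for pulling the signal out.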

Does the testing of the General Theory on a cosmological scale tell us anything about dark matter and its even more mysterious counterpart, dark energy? On that score, this Niels Bohr Institute news release implies more than it delivers. The new work confirms current modeling that incorporates both dark matter and dark energy into our model of the universe, but beyond that it can provide no new insights into what dark matter actually is or how dark energy, thought to comprise 72 percent of the structure of the universe, actually works. We can make inferences from our understanding of the behavior of the light, but we have a long way to go in piecing together just what these dark components are.

The paper is Wojtak et al., “Gravitational redshift of galaxies in clusters as predicted by general relativity,” Nature 477 (29 September 2011), pp. 567-569 (abstract).

A Machine-Driven Way to the Stars

Are humans ever likely to go to the stars? The answer may well be yes, but probably not if we’re referring to flesh-and-blood humans aboard a starship. That’s the intriguing conclusion of Keith Wiley (University of Washington), who brings his background in very large computing clusters and massively parallel image data processing to bear on the fundamental question of how technologies evolve. Wiley thinks artificial intelligence (he calls it ‘artificial general intelligence,’ or AGI) and mind-uploading (MU) will emerge before other interstellar technologies, thus disrupting the entire notion of sending humans and leading us to send machine surrogates instead.

It’s a notion we’ve kicked around in these pages before, but Wiley’s take on it in Implications of Computerized Intelligence on Interstellar Travel is fascinating because of the way he looks at the historical development of various technologies. To do this, he has to assume there is a correct ‘order of arrival’ for technologies, and goes to work investigating how that order develops. Some inventions are surely prerequisites for others (the wheel precedes the wagon), while others require an organized and complex society to conceive and build the needed tools.

Some technologies, moreover, are simply more complicated, and we would expect them to emerge only later in a given society’s development. Among the technologies needed to get us to the stars, Wiley flags propulsion and navigation as the most intractable. We might, for example, develop means of suspended animation, and conquer the challenges of producing materials that can withstand the rigors and timeframes of interstellar flight. But none of these are useful for an interstellar mission until we have the means of accelerating our payload to the needed speeds. AGI and MU, in his view, have a decided edge in development over these technologies.

Researchers report regularly on steady advancements in robotics and AI and many are even comfortable speculating on AGI and MU. It is true that there is wide disagreement on such matters, but the presence of ongoing research and regular discussion of such technologies demonstrates that their schedules are well under way. On the other hand, no expert in any field is offering the slightest prediction that construction of the first interstellar spaceships will commence in a comparable time frame. DARPA’s own call to action is a 100-year window, and rightfully so.

Wiley is assuming no disruptive breakthroughs in propulsion, of course, and relies on many of the methods we have long discussed on Centauri Dreams, such as solar sails, fusion, and antimatter. All of these are exciting ideas that are challenged by the current level of our engineering. In fact, Wiley believes that the development of artificial general intelligence, mind uploading and suspended animation will occur decades to over a century before the propulsion conundrum is resolved.

Consequently, even if suspended animation arrives before AGI and MU — admittedly, the most likely order of events — it is still mostly irrelevant to the discussion of interstellar travel since by the time we do finally mount the first interstellar mission we will already have AGI and MU, and their benefits will outweigh not just a waking trip, but probably also a suspended animation trip, thus undermining any potential advantage that suspended animation might otherwise offer. For example, the material needs of a computerized crew grow as a slower function of crew size than those of a human crew. Consider that we need not necessarily send a robotic body for every mind on the mission, thus vastly reducing the average mass per individual. The obvious intention would be to manufacture a host of robotic bodies at the destination solar system from raw materials. As wildly speculative as this idea is, it illustrates the considerable theoretical advantages of a computerized over a biological crew, whether suspended or not. The material needs of computerized missions are governed by a radically different set of formulas specifically because they permit us to separate the needs of the mind from the needs of the body.

We could argue about the development times of various technologies, but Wiley is actually talking relatively short-term, saying that none of the concepts currently being investigated for interstellar propulsion will be ready any earlier than the second half of this century, if then, and these would only be the options offering the longest travel times compared to their more futuristic counterparts. AGI and MU, he believes, will arrive much earlier, before we have in hand not only the propulsion and navigation techniques we need but also the resolution of issues like life-support and the sociological capability to govern a multi-generational starship.

The scenario assumes not that starflight is impossible, nor that generation ships cannot be built. It simply assumes that when we are ready to mount a genuine mission to a star, it will be obvious that artificial intelligence is the way to go, and while Wiley doesn’t develop the case for mind-uploading in any detail because of the limitations of space, he does argue that if it becomes possible, sending a machine with a mind upload on the mission is the same as sending ourselves. But put that aside: Even without MU, artificial intelligence would surmount so many problems that we are likely to deploy it long before we are ready to send biological beings to the stars.

Whether mediated by human or machine, Wiley thinks moving beyond the Solar System is crucial:

The importance of adopting a realistic perspective on this issue is self-evident: if we aim our sights where the target is expected to reside, we stand the greatest chance of success, and the eventual expansion of humanity beyond our own solar system is arguably the single most important long-term goal of our species in that the outcome of such efforts will ultimately determine our survival. We either spread and thrive or we go extinct.

If we want to reach the stars, then, Wiley’s take is that our focus should be on the thorny issues of propulsion and navigation rather than life support, psychological challenges or generation ships. These will be the toughest nuts to crack, allowing us ample time for the development of computerized intelligence capable of flying the mission. As for the rest of us, we’ll be vicarious spectators, which the great majority of the species would be anyway, whether the mission is manned by hyper-intelligent machines or actual people. Will artificial intelligence, and especially mind uploading, meet Wiley’s timetable? Or will they prove as intractable as propulsion?

The SN 1987A Experiment

If neutrinos really do travel at a velocity slightly higher than the speed of light, we have a measurement that challenges Einstein, a fact that accounts for the intense interest in the CERN results we discussed on Friday. I think CERN is taking exactly the right approach in dealing with the matter with caution, as in this statement from a Saturday news release:

…many searches have been made for deviations from Einstein’s theory of relativity, so far not finding any such evidence. The strong constraints arising from these observations make an interpretation of the OPERA measurement in terms of modification of Einstein’s theory unlikely, and give further strong reason to seek new independent measurements.

And this is followed up by a statement from CERN research director Sergio Bertolucci:

“When an experiment finds an apparently unbelievable result and can find no artifact of the measurement to account for it, it’s normal procedure to invite broader scrutiny, and this is exactly what the OPERA collaboration is doing, it’s good scientific practice. If this measurement is confirmed, it might change our view of physics, but we need to be sure that there are no other, more mundane, explanations. That will require independent measurements.”

All this is part of the scientific process, as data are sifted, results are published, and subsequent experiments either confirm or question the original results. I’m glad to see that the supernova SN 1987A has turned up here in comments to the original post. The supernova, which exploded in February of 1987 in the Large Magellanic Cloud, was detected by the “Kamiokande II” neutrino detector in the Kamioka mine in Japan. It was also noted by the IMB detector located in the Morton-Thiokol salt mine near Fairport, Ohio and the ‘Baksan’ telescope in the North Caucasus Mountains of Russia.

Neutrinos scarcely interact with matter, which means they escape an exploding star more quickly than photons, something the SN 1987A measurements confirmed. But SN 1987A is 170,000 light years away. If neutrinos moved slightly faster than the speed of light, they would have arrived at the Earth years — not hours — before the detected photons from the supernova. The 25 detected neutrinos were a tiny fraction of the total produced by the explosion, but their timing matched what physicists believed about their speed. The OPERA result, in other words, is contradicted by an experiment in the sky, and we have a puzzle on our hands, one made still more intriguing by Friday’s seminar at CERN, where scientists like Nobel laureate Samuel Ting (MIT) congratulated the team on what he called an ‘extremely beautiful experiment,’ one in which systematic error had been carefully checked.
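The "years, not hours" point can be checked with a few lines of Python. This sketch assumes the fractional speed excess reported by OPERA (about 2.5 parts in a hundred thousand) applies unchanged to the supernova neutrinos, regardless of their energy. That is an assumption made for illustration, and the function name is made up.

```python
DISTANCE_LY = 170_000        # distance to SN 1987A as quoted in the text
FRACTIONAL_EXCESS = 2.5e-5   # OPERA's reported (v - c) / c, assumed energy-independent

def lead_time_years(distance_ly: float, fractional_excess: float) -> float:
    """Years by which a particle at speed c * (1 + excess) beats light over the distance."""
    light_travel_years = distance_ly                        # light covers 1 ly per year
    particle_travel_years = distance_ly / (1.0 + fractional_excess)
    return light_travel_years - particle_travel_years

lead = lead_time_years(DISTANCE_LY, FRACTIONAL_EXCESS)
print(f"Neutrinos would arrive about {lead:.2f} years ahead of the light")
```

Under that assumption the neutrinos would have reached Earth a little over four years before the light, rather than the few hours actually observed.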

Image: In February 1987, light from the brightest stellar explosion seen in modern times reached Earth — supernova SN1987A. This Hubble Space Telescope image from the sharp Advanced Camera for Surveys taken in November 2003 shows the explosion site over 16 years later. Supernova SN1987A lies in the Large Magellanic Cloud, a neighboring galaxy some 170,000 light-years away. That means that the explosive event – the core collapse and detonation of a star about 20 times as massive as the Sun – actually occurred 170,000 years before February 1987. Credit: P. Challis, R. Kirshner (CfA), and B. Sugerman (STScI), NASA.

It’s true that OPERA was working with a large sample (some 16,000 neutrino interaction events), but skepticism remains the order of the day, because as this New Scientist story points out, there is potential uncertainty in the neutrinos’ departure time, there being no neutrino detector at the CERN end. As for the GPS measurements, New Scientist labels them so accurate that they could detect the drift of the Earth’s tectonic plates. Can we still tease out a systematic error from the highly detailed presentation and paper produced by the CERN researchers? They themselves are cautious, as the paper makes clear:

Despite the large significance of the measurement reported here and the stability of the analysis, the potentially great impact of the result motivates the continuation of our studies in order to investigate possible still unknown systematic effects that could explain the observed anomaly. We deliberately do not attempt any theoretical or phenomenological interpretation of the results.

A prudent policy. Let’s see what subsequent experiments can tell us about neutrinos and their speed. The paper is The OPERA Collaboration, “Measurement of the neutrino velocity with the OPERA detector in the CNGS beam,” available as a preprint.

On Neutrinos and the Speed of Light

If you’re tracking the interesting news from CERN on neutrinos moving slightly faster than the speed of light, be advised that there is an upcoming CERN webcast on the matter at 1400 UTC later today (the 23rd). Meanwhile, evidence that the story is making waves is not hard to find. I woke up to find that my local newspaper had a headline — “Scientists Find Signs of Particles Faster than Light” — on the front page. This was Dennis Overbye’s story, which originally ran in the New York Times, but everyone from the BBC to Science Now is hot on the trail of this one.

The basics are these: A team of European physicists has measured neutrinos moving from the particle accelerator at CERN to the facility beneath the Gran Sasso in Italy, a distance of about 725 kilometers, and found them arriving about 60 nanoseconds sooner than light would have taken to make the journey. The implied speed is about 0.0025 percent (2.5 parts in a hundred thousand) greater than the speed of light, a tiny deviation, but one of obvious significance if confirmed. The results are being reported by OPERA (Oscillation Project with Emulsion-Tracking Apparatus), a group led by physicist Antonio Ereditato (University of Bern).

Neutrinos are nearly massless subatomic particles that definitely should not, according to Einstein’s theory of special relativity, be able to travel faster than light, which accounts for the explosion of interest. According to this account in Science Now, the OPERA team measured roughly 16,000 neutrinos that made the trip from CERN to the detector, and Ereditato is quoted as saying that the measurement itself is straightforward: “We measure the distance and we measure the time, and we take the ratio to get the velocity, just as you learned to do in high school.” The measurement has an uncertainty of 10 nanoseconds.
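To see how a 60-nanosecond head start turns into a figure of 2.5 parts in a hundred thousand, the distance-over-time ratio Ereditato describes can be worked through in a few lines of Python. This is only a back-of-the-envelope sketch using the rounded numbers quoted above (725 kilometers, 60 nanoseconds, 10 nanoseconds of uncertainty), not the collaboration's actual analysis; the variable names are my own, and the "significance" line naively treats the 10 nanoseconds as a single combined uncertainty.

```python
# Rough check of the numbers quoted in this post; not the OPERA analysis.
C = 299_792_458.0        # speed of light in m/s (exact by definition)

baseline_m = 725e3       # CERN to Gran Sasso, rounded figure from the text
early_s = 60e-9          # neutrinos arrive about 60 ns ahead of light
uncertainty_s = 10e-9    # quoted uncertainty, assumed here to be combined

# Time light would need to cover the baseline
light_time_s = baseline_m / C

# Time the neutrinos apparently took
neutrino_time_s = light_time_s - early_s

# Fractional excess speed, (v - c) / c, for the same distance
fractional_excess = light_time_s / neutrino_time_s - 1.0

# Naive significance: early arrival divided by its uncertainty
significance = early_s / uncertainty_s

print(f"light travel time : {light_time_s * 1e3:.3f} ms")
print(f"(v - c) / c       : {fractional_excess:.2e}")
print(f"excess in percent : {fractional_excess * 100:.4f} %")
print(f"naive significance: {significance:.0f} sigma")
```

Light needs roughly 2.4 milliseconds for the trip, so the whole claim rests on timing that interval to a few billionths of a second, which is why the clock synchronization and the departure time are getting so much scrutiny.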

It’s hard to do any better than Ereditato himself when bringing caution to these findings. Let me quote the Science Now story again:

…even Ereditato says it’s way too early to declare relativity wrong. “I would never say that,” he says. Rather, OPERA researchers are simply presenting a curious result that they cannot explain and asking the community to scrutinize it. “We are forced to say something,” he says. “We could not sweep it under the carpet because that would be dishonest.”

And the BBC quotes Ereditato to this effect: “My dream would be that another, independent experiment finds the same thing. Then I would be relieved.” One reason for the relief would be that other attempts to measure neutrino speeds have come up with results consistent with the speed of light. Is it possible there was a systematic error in the OPERA analysis that gives the appearance of neutrinos moving faster than light? The timing is obviously exquisitely precise and critical for these results, and a host of possibilities will now be investigated.

This paragraph from a NatureNews story is to the point:

At least one other experiment has seen a similar effect before, albeit with a much lower confidence level. In 2007, the Main Injector Neutrino Oscillation Search (MINOS) experiment in Minnesota saw neutrinos from the particle-physics facility Fermilab in Illinois arriving slightly ahead of schedule. At the time, the MINOS team downplayed the result, in part because there was too much uncertainty in the detector’s exact position to be sure of its significance, says Jenny Thomas, a spokeswoman for the experiment. Thomas says that MINOS was already planning more accurate follow-up experiments before the latest OPERA result. “I’m hoping that we could get that going and make a measurement in a year or two,” she says.

Unusual results are wonderful things, particularly when handled responsibly. The OPERA team is making no extravagant claims. It is simply putting before the scientific community a finding that even Ereditato calls a ‘crazy result,’ the idea being that the community can bring further resources to bear to figure out whether this result can be confirmed. Both the currently inactive T2K experiment in Japan, which directs neutrinos from its facility to a detector 295 kilometers away, and a neutrino experiment at Fermilab may be able to run tests to confirm or reject OPERA’s result. A confirmation would be, as CERN physicist Alvaro de Rujula says, ‘flabbergasting,’ but one way or another, going to work on these findings is going to take time, and patience.

The paper “Measurement of the neutrino velocity with the OPERA detector in the CNGS beam” is now up on the arXiv server (preprint).

Addendum: For an excellent backgrounder on neutrino detection and the latest measurements, replete with useful visuals, see Starts With a Bang. Thanks to @caleb_scharf for the tip.

And this comment from a new Athena Andreadis post is quite interesting:

If it proves true, it won’t give us hyperdrives nor invalidate relativity. What it will do is place relativity in an even larger frame, as Einsteinian theory did to its Newtonian counterpart. It may also (finally!) give us a way to experimentally test string theory… and, just maybe, open the path to creating a fast information transmitter like the Hainish ansible, proving that “soft” SF writers like Le Guin may be better predictors of the future than the sciency practitioners of “hard” SF.

Exoplanet Discoveries via PC

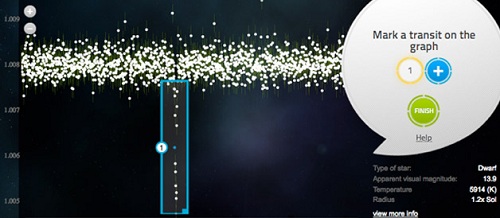

Because the financing for missions like Kepler is supported by tax dollars, it’s gratifying to see the public getting actively involved in working with actual data from the Kepler team. That’s what has been going on with the Planet Hunters site, where 40,000 users from a wide variety of countries and backgrounds have been analyzing what Kepler has found. Planet hunter Debra Fischer (Yale University), a key player in the launch of Planet Hunters, has this to say:

“It’s only right that this data has been pushed back into the public domain, not just as scientifically digested results but in a form where the public can actively participate in the hunt. The space program is a national treasure — a monument to America’s curiosity about the Universe. It is such an exciting time to be alive and to see these incredible discoveries being made.”

So far, so good on the citizen science front. Using publicly available Kepler data, Planet Hunters has found two new planets, both of them discarded initially by the Kepler team for a variety of technical reasons. Fischer believes the odds on the detections being actual planets are 95 percent or higher. The candidate planets have periods of 10 and 50 days, and radii from two and a half to eight times that of the Earth. One of them is conceivably a rocky world, though not in the habitable zone. Several dozen Planet Hunters users had spotted the planet candidates.

Image: One of the tutorial figures explaining how to use Planet Hunters at the site. The time it takes a planet to complete one orbit is called the orbital period. For transiting planets, this can be determined by counting the number of days from one transit to the next. Planets in longer period orbits will be more challenging to detect, both for humans and for computers because a transit will not appear in every 30-day set of light curve data. Large planets with short orbital periods are the easiest ones to detect. The most challenging detections will be small planets with long orbital periods. These will require patience and care, but are the real treasures in the Kepler data. Credit: Planet Hunters.
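The period-counting idea in the caption is easy to sketch in Python. What follows is an illustration only, not the Planet Hunters or Kepler pipeline: the function names and the sample transit times are hypothetical, and the period is taken as the median gap between successive transits. The second function shows why longer periods are harder, since a planet whose period exceeds the 30-day window is not guaranteed to transit in any given set of light curve data.

```python
from statistics import median


def estimate_period(transit_days):
    """Estimate the orbital period (days) as the median gap between transits."""
    times = sorted(transit_days)
    if len(times) < 2:
        raise ValueError("need at least two transits to estimate a period")
    gaps = [later - earlier for earlier, later in zip(times, times[1:])]
    return median(gaps)


def guaranteed_transits(period_days, window_days=30.0):
    """Minimum number of transits that must fall inside any window of this length."""
    return int(window_days // period_days)


# Hypothetical mid-transit times (in days) read off a light curve
observed = [3.1, 13.1, 23.2]
print(f"estimated period: {estimate_period(observed):.2f} days")

# The two candidate periods mentioned in this post
for period in (10.0, 50.0):
    n = guaranteed_transits(period)
    print(f"{period:.0f}-day period: at least {n} transit(s) per 30-day set")
```

With a 10-day period every 30-day set shows at least three dips, while a 50-day period guarantees none, so a volunteer may need several sets of data before a second transit turns up to confirm the first.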

Following up the detection, astronomers used the Keck Observatory to study the host stars. A new study is to be published on the discoveries in the Monthly Notices of the Royal Astronomical Society, marking the first time the public has used NASA space mission data to find planets around other stars. So while the heavy lifting continues to be done by the Kepler team itself, public science has proven to be a helpful supplement, bringing more eyes to the data at hand. And, of course, the next round of Kepler data provides just as intriguing a hunting ground.

When it began, Planet Hunters was described as a bet on the ability of humans to beat computers, at least occasionally, because of the way people can use pattern recognition. The Kepler team uses computer algorithms fine-tuned to analyze light curve data because of the sheer number of stars the mission is working with. But while computers excel at finding what they are trained to find, the potential for surprise is always there as tens of thousands of users put pattern recognition to work to examine light curves, track down anomalies, and pay close attention to transit signals. For this kind of analysis, powerful computers backed by a widely distributed pool of human eyes are proving an ideal combination.