Centauri Dreams

Imagining and Planning Interstellar Exploration

Dustfall: Earth’s Encounter with Micrometeorites

Interesting news out of CNRS (the French National Center for Scientific Research) renews our attention to the mechanisms for supplying the early Earth with water and carbonaceous molecules. We’ve looked at comets as possible water sources for a world forming well inside the snow line, and asteroids as well. What the CNRS work reminds us is that micrometeorites also play a role. In fact, according to the paper just out in Earth and Planetary Science Letters, 5,200 tons of extraterrestrial materials — dust particles from space — reach the ground yearly.

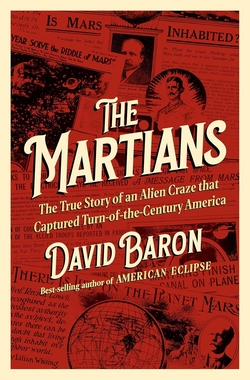

Image: From the paper’s Figure 1, although not the complete figure. The relevant part of the caption: Fig. 1. Left: Location of the CONCORDIA station (Dome C, Antarctica). Centre: View of a trench at Dome C. Credit: Rojas et al.

This conclusion comes from a study spanning almost twenty years, conducted by scientists in an international collaboration involving laboratories in France, the United States and the United Kingdom. CNRS researcher Jean Duprat has led six expeditions during this time span to the Franco-Italian Concordia station (Dome C), 1100 kilometers from the coast in the Antarctic territory Terre Adélie, which is claimed by France. France’s permanent outpost in Terre Adélie is Dumont d’Urville Station. The Dome C area is considered ideal for the study of micrometeorites because of the near absence of terrestrial dust and low rates of accumulation of snow.

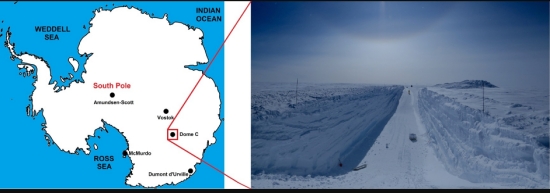

Image: Electron micrograph of a Concordia micrometeorite extracted from Antarctic snow at Dome C. Credit: © Cécile Engrand/Jean Duprat.

The dust particles collected by these expeditions have ranged from 30 to 200 micrometers (µm) in size, and enough have been gathered to calculate the mass accreted per square meter per year. Extrapolated to the whole planet, that figure yields the aforementioned 5,200 tons. This would make micrometeorites the primary source of extraterrestrial matter on our planet, since the flux from meteorites is measured at less than 10 tons per year.
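To get a feel for what 5,200 tons a year means locally, we can run the arithmetic backwards and spread the global figure evenly over Earth's surface. This is only a sketch: it assumes metric tonnes and a uniform global flux, and the helper name `per_area_flux_ug` is hypothetical, not something from the paper.

```python
import math

EARTH_RADIUS_M = 6.371e6                          # mean radius of Earth, m
EARTH_AREA_M2 = 4 * math.pi * EARTH_RADIUS_M**2   # ~5.1e14 m^2

def per_area_flux_ug(global_tonnes_per_year: float) -> float:
    """Average mass arriving per square meter per year, in micrograms.

    Assumes metric tonnes (1,000 kg) and a flux spread evenly over the globe.
    """
    kg_per_year = global_tonnes_per_year * 1000.0
    return kg_per_year / EARTH_AREA_M2 * 1e9  # kg -> micrograms

micrometeorite_flux = per_area_flux_ug(5200)  # roughly 10 micrograms per m^2 per year
ratio_to_meteorites = 5200 / 10               # vs the <10 t/yr meteorite flux

print(f"~{micrometeorite_flux:.1f} ug per m^2 per year")
print(f"at least ~{ratio_to_meteorites:.0f}x the meteorite mass flux")
```

The per-square-meter number is tiny, which is why a site with almost no terrestrial dust and little snowfall matters so much for the measurement.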

The paper explains what happens as micrometeorites encounter the Earth’s atmosphere:

The degree of heating experienced by the particles during their atmospheric entry depends on various factors including the initial mass of the particles, their entry angle and velocity. The ablated metallic vapours oxidize and the resulting metal oxides, hydroxides and carbonates condense into nm-sized particles termed meteoric smoke (Plane et al., 2015). These particles are transported by the general atmospheric circulation until eventually deposited at the surface, where their flux can be evaluated by elemental or isotopic measurements (Gabrielli et al., 2004).

The size distribution of extraterrestrial particles before they enter the atmosphere was studied by measuring the craters produced by high-velocity, sub-millimetre grains striking the panels of the Long Duration Exposure Facility (LDEF) satellite. This was an interesting mission in its own right. The LDEF spent over five years orbiting the Earth to measure the effects of micrometeoroids, space debris, radiation particles, atomic oxygen, and solar radiation on spacecraft materials, components, and systems before being retrieved in 1990 by the Space Shuttle on mission STS-32.

Image: Collecting micrometeorites in the central Antarctic regions, at Dome C in 2002. Snow sampling. Credit: © Jean Duprat/ Cécile Engrand/ CNRS Photothèque.

The paper is Rojas et al., “The micrometeorite flux at Dome C (Antarctica), monitoring the accretion of extraterrestrial dust on Earth,” Earth and Planetary Science Letters Volume 560 (15 April 2021) 116794 (full text).

How Planetesimals Are Born

What governs the size of a newly forming star as it emerges from the molecular cloud around it? The answer depends upon the ability of gravity to overcome internal pressure within the cloud, and that in turn depends upon exceeding what is known as the Jeans Mass, whose value will vary with the density of the gas and its temperature. Exceed the Jeans mass and runaway contraction begins, forming a star whose own processes of fusion will arrest the contraction.
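For readers who want to see how temperature and density enter, here is a sketch using the common textbook form of the Jeans mass, M_J = (5kT / (G μ m_H))^(3/2) (3 / (4πρ))^(1/2). The mean molecular weight μ = 2.33 and the cloud conditions (10 K, 10^4 particles per cm³) are illustrative assumptions, not values from the work discussed here.

```python
import math

K_B = 1.380649e-23   # Boltzmann constant, J/K
G = 6.674e-11        # gravitational constant, m^3 kg^-1 s^-2
M_H = 1.6735e-27     # mass of a hydrogen atom, kg
M_SUN = 1.989e30     # solar mass, kg

def jeans_mass(temperature_k: float, number_density_m3: float, mu: float = 2.33) -> float:
    """Jeans mass (kg) of an isothermal, uniform-density cloud.

    M_J = (5 k T / (G mu m_H))**1.5 * (3 / (4 pi rho))**0.5, with rho = mu * m_H * n.
    """
    rho = mu * M_H * number_density_m3
    thermal = (5 * K_B * temperature_k / (G * mu * M_H)) ** 1.5
    geometric = (3 / (4 * math.pi * rho)) ** 0.5
    return thermal * geometric

# Hypothetical cold cloud core: 10 K and 1e4 particles per cm^3 (= 1e10 per m^3)
m_j = jeans_mass(10.0, 1e10)
print(f"Jeans mass ~ {m_j / M_SUN:.1f} solar masses")
```

Warmer gas raises the threshold (as T^1.5), while denser gas lowers it (as ρ^-0.5), which is the temperature and density dependence described above.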

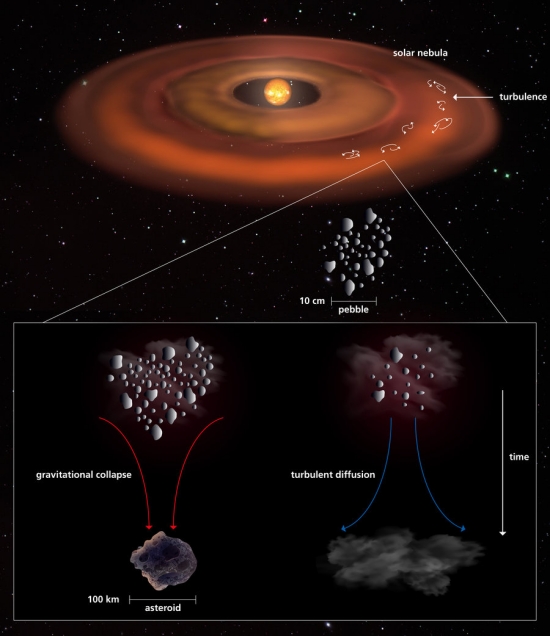

At the Max Planck Institute for Astronomy (Heidelberg), Hubert Klahr and colleagues have been working on a different kind of contraction, the processes within the protoplanetary disk around such young stars. Along with postdoc Andreas Schreiber, Klahr has come up with a type of Jeans Mass that can be applied to the formation of planetesimals. While stars in formation must overcome the pressure of their gas cloud, planetesimals work against turbulence within the gas and dust of a disk — a critical mass is needed for the clump to overcome turbulent diffusion. The new paper on this work extends the findings reported in a 2020 paper by the same authors.

The problem this work is trying to solve has to do with the size distribution of asteroids; specifically, why do primordial asteroids — planetesimals that have survived intact since the formation of the Solar System, without the collisions that have fragmented so many such objects — tend to be found with a diameter in the range of 100 kilometers? To answer the question, the researchers look at the behavior of pebbles in the disk, small clumps in the range of a few millimeters to a few centimeters in size. Here the plot thickens.

Pebbles tend to drift inward toward the star, meaning that if these pebbles accrete slowly, they would fall into the star before reaching the needed size. Klahr and Schreiber argue that this drift can be overcome thanks to turbulence within the gas of the protoplanetary disk, creating traps where the pebbles can accumulate enough mass for them to become bound together by gravity. Turbulence is a local effect, to be sure, but it can also vary as the disk changes with increased distance from the star. A fast enough drop in gas pressure with distance can create a streaming instability within the disk, so that pebbles move chaotically and churn the gas around them.
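How fast is "fall into the star"? A rough sketch uses the standard drag-limited drift speed v_r ≈ 2 St / (1 + St²) · η v_K, where St is the Stokes number (how tightly a pebble is coupled to the gas), v_K is the Keplerian speed and η is the fractional pressure support of the gas. The value η = 0.002, the neglect of gas radial motion, and the "distance divided by local speed" timescale are all simplifying assumptions for illustration.

```python
import math

G_M_SUN = 1.32712440018e20  # G times the solar mass, m^3/s^2
AU = 1.495978707e11         # astronomical unit, m
YEAR = 3.15576e7            # Julian year, s

def keplerian_speed(r_m: float) -> float:
    return math.sqrt(G_M_SUN / r_m)

def radial_drift_speed(stokes: float, r_m: float, eta: float = 0.002) -> float:
    """Inward drift speed (m/s): 2 St / (1 + St^2) * eta * v_K (gas radial flow ignored)."""
    return 2 * stokes / (1 + stokes**2) * eta * keplerian_speed(r_m)

def drift_time_years(stokes: float, r_m: float, eta: float = 0.002) -> float:
    """Crude timescale: distance r divided by the local drift speed."""
    return r_m / radial_drift_speed(stokes, r_m, eta) / YEAR

for st in (0.01, 0.1, 1.0):
    print(f"St = {st:5}: ~{drift_time_years(st, AU):,.0f} yr to drift about 1 AU")
```

Drift is fastest near St = 1, where this estimate gives timescales of only decades to centuries at 1 AU. That is why pebbles need traps to survive long enough to gather into bound clumps.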

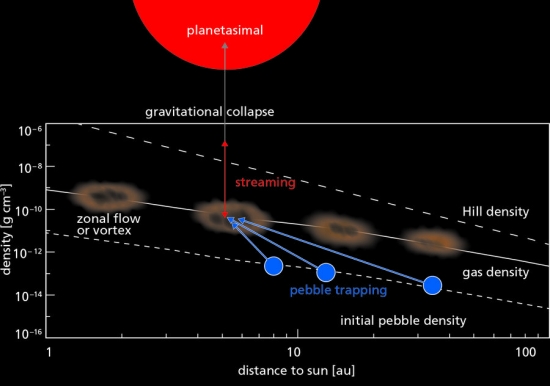

Image: The solar primordial nebula of gas and dust surrounds the sun in the form of a flat disk. In it are vortices in which the small pebbles accumulate. These pebbles can grow up to asteroid size by gravitational collapse, if this is not hindered by turbulent diffusion. Hubert Klahr, Andreas Schreiber, MPIA / MPIA Graphics Department, Judith Neidel.

So on the one hand, turbulence helps create the pebble clusters that can aggregate into larger objects, but to grow larger still, such clouds need to reach a certain mass or they will be dispersed by these instabilities. Klahr and Schreiber’s calculations of the necessary mass show that objects would need to reach 100 kilometers in size to overcome the turbulence pressure for most regions within our own Solar System, matching observation. Thus we have a limiting mass for planetesimal formation.
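The idea of a diffusion-regulated critical mass can be illustrated with a generic timescale comparison. This is not Klahr and Schreiber's derivation. A uniform pebble cloud collapses if its free-fall time is shorter than the time turbulent diffusion needs to spread it out (R²/D). Because the free-fall time depends only on density, while the diffusion time grows as R², a critical radius and mass emerge. The density and diffusivity values below are hypothetical.

```python
import math

G = 6.674e-11  # gravitational constant, m^3 kg^-1 s^-2

def free_fall_time(rho: float) -> float:
    """Free-fall time (s) of a uniform sphere of density rho (kg/m^3)."""
    return math.sqrt(3 * math.pi / (32 * G * rho))

def diffusion_time(radius: float, diffusivity: float) -> float:
    """Time (s) for turbulent diffusivity D (m^2/s) to spread a cloud of size radius (m)."""
    return radius**2 / diffusivity

def critical_radius(rho: float, diffusivity: float) -> float:
    """Radius where the two timescales match: R_c = sqrt(D * t_ff)."""
    return math.sqrt(diffusivity * free_fall_time(rho))

def critical_mass(rho: float, diffusivity: float) -> float:
    return 4.0 / 3.0 * math.pi * rho * critical_radius(rho, diffusivity) ** 3

def collapses(radius: float, rho: float, diffusivity: float) -> bool:
    """Toy criterion: gravity wins if collapse is faster than diffusive spreading."""
    return free_fall_time(rho) < diffusion_time(radius, diffusivity)

# Hypothetical pebble-cloud density and turbulent diffusivity
rho_cloud = 1e-3   # kg/m^3
d_turb = 1e9       # m^2/s
r_c = critical_radius(rho_cloud, d_turb)
print(f"R_c ~ {r_c / 1e3:,.0f} km, M_c ~ {critical_mass(rho_cloud, d_turb):.2e} kg")
print(collapses(0.5 * r_c, rho_cloud, d_turb), collapses(2 * r_c, rho_cloud, d_turb))
```

Clouds below the critical size are dispersed and clouds above it collapse, which mirrors the logic of a Jeans-type threshold with turbulent diffusion in place of gas pressure.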

All of this grows out of simulations and the comparison of their results with telescopic observations of protoplanetary disks around young stars. In the numerical models the authors used, planetesimals grow quickly from the projected pebble traps. Low-mass pebble clouds below this turbulence-based Jeans Mass are less likely to grow through accretion, while larger clouds collapse once they exceed the critical mass.

Image: In this schematic the sequence of planetesimal formation starts with particles growing to pebble size. These pebbles then accumulate at the midplane and drift toward the star to get temporarily trapped in a zonal flow or vortex. Here the density of pebbles in the midplane is eventually sufficient to trigger the streaming instability. This instability concentrates and diffuses pebbles likewise, leading to clumps that reach the Hill density at which tidal forces from the star can no longer shear the clumps away. If now also the turbulent diffusion is weak enough to let the pebble cloud collapse, then planetesimal formation will occur. Hubert Klahr, Andreas Schreiber, MPIA / MPIA Graphics Department, Judith Neidel.

Planetesimal size does vary at larger distances, such that objects forming in the outer regions of the disk cluster around 10 kilometers at 100 AU. The authors suggest that the characteristic size of Kuiper Belt Objects will be found to decrease with increasing distance from the Sun, something that future missions into that region can explore. The 100 kilometer diameter seems to hold out to about 60 AU, beyond which smaller objects form from a depleted disk.

New Horizons comes to mind as the only spacecraft to study such an object, the intriguing 486958 Arrokoth, which at 45 AU is the most distant primordial object visited by a space probe. Future missions will give us more information about the size distribution of Kuiper Belt Objects. We can also think about the Lucy mission, whose solar panels we looked at yesterday. The target asteroids orbit at Jupiter’s L4 and L5 Lagrange points, one group ahead of the planet in its orbit, one group behind. Lucy is to visit six Trojans, objects which evidently migrated from different regions in the Solar System and may include a population of primordial planetesimals.

Klahr and Schreiber have come up with a model that can make predictions about how planetesimals form within the protoplanetary disk and what sizes they are likely to reach. Whether these predictions are borne out by subsequent observation will help us choose between this new Jeans Mass model for planetesimals and other formation processes, including the possibility that ice is the mechanism allowing pebbles to stick together, or that aggregates of silicate flakes are the answer. Klahr is not convinced that either of these models can withstand scrutiny:

“Even if collisions were to lead to growth up to 100 km without eventually switching to a gravitational collapse, this method would predict too large a number of asteroids smaller than 100 km. It would also fail to describe the high frequency of binary objects in the Edgeworth-Kuiper belt. Both properties of our Solar System are easily reconcilable with the gravitational pebble cloud collapse.”

The effects of turbulence in other stellar systems will likewise depend upon the concentration of mass within the disk, with the ability of larger planetesimals to form decreasing as the gas within the disk is depleted, either by planet formation or by accretion onto the host star. If Klahr and Schreiber’s model of planetesimal formation as a function of gas pressure is applicable to what we are seeing in protoplanetary disks around other stars, then it should prove useful for scientists building population synthesis models that incorporate planetesimals into the mix.

The previously published paper is Klahr & Schreiber, “Turbulence Sets the Length Scale for Planetesimal Formation: Local 2D Simulations of Streaming Instability and Planetesimal Formation,” Astrophysical Journal Volume 901, Issue 1, id.54, (September, 2020). Abstract. The upcoming paper is “Testing the Jeans, Toomre and Bonnor-Ebert concepts for planetesimal formation: 3D streaming instability simulations of diffusion regulated formation of planetesimals,” in process at the Astrophysical Journal (preprint).

Lucy: Solar Panel Deployment Tests a Success

It’s easy to forget how large our space probes have been. A replica of the Galileo spacecraft at the Jet Propulsion Laboratory can startle at first glance. The spacecraft was 5.3 meters (17 feet) high, but an extended magnetometer boom telescoped out to 11 meters (36 feet). Not exactly the starship Enterprise, of course, but striking when you’re standing there looking up at the probe and pondering what it took to deliver this entire package to Jupiter orbit in the 1990s.

The same feeling settles in this morning with news out of Lockheed Martin Space, where in both December 2020 and February of 2021 final deployment tests were conducted on the solar arrays that will fly aboard the Lucy mission. Scheduled for launch this fall (the launch window opens on October 16), Lucy is to make a 12-year reconnaissance of the Trojan asteroids of Jupiter. Given that energy from the Sun is inversely proportional to the square of the distance, Lucy in Jupiter space will receive only 1/27th of the energy available at Earth orbit.
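The 1/27 figure follows directly from the inverse-square law with Jupiter at about 5.2 AU. The sketch below also applies it to the 853 million kilometers quoted later in this post for Lucy's farthest excursion. The solar constant of about 1361 W/m² at 1 AU is an approximate value added for illustration.

```python
AU_KM = 1.495978707e8     # astronomical unit, km
SOLAR_CONSTANT = 1361.0   # approximate sunlight intensity at 1 AU, W/m^2

def relative_flux(distance_au: float) -> float:
    """Sunlight per unit area relative to 1 AU, from the inverse-square law."""
    return 1.0 / distance_au**2

jupiter_au = 5.2               # Jupiter's mean distance from the Sun
lucy_max_au = 853e6 / AU_KM    # 853 million km, Lucy's quoted maximum distance

for label, d in (("Jupiter", jupiter_au), ("Lucy max", lucy_max_au)):
    frac = relative_flux(d)
    print(f"{label}: {d:.2f} AU -> 1/{1 / frac:.0f} of Earth's sunlight, "
          f"~{SOLAR_CONSTANT * frac:.0f} W/m^2")
```

At its farthest point, Lucy sees roughly a thirtieth of the sunlight available at Earth, which is why the arrays have to be so large to deliver their few hundred watts.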

Image: Seen here partially unfurled, the Lucy spacecraft’s massive solar arrays completed their first deployment tests in January 2021 inside the thermal vacuum chamber at Lockheed Martin Space in Denver, Colorado. To ensure no extra strain was placed on the solar arrays during testing in Earth’s gravity environment, the team designed a special mesh wire harness to support the arrays during deployment. Credit: Lockheed Martin

“The success of Lucy’s final solar array deployment test marked the end of a long road of development. With dedication and excellent attention to detail, the team overcame every obstacle to ready these solar panels.”

Those are the words of Matt Cox, Lockheed Martin’s Lucy program manager, in Littleton, Colorado, who added:

“Lucy will travel farther from the Sun than any previous solar-powered Discovery-class mission, and one reason we can do that is the technology in these solar arrays.”

Lucy will actually move beyond Jupiter’s orbit in its study of the Trojans, up to 853 million kilometers out, so we need big panels. With the panels attached and fully extended, they could cover a five-story building. They’ll need to supply about 500 watts to the spacecraft and its instruments. Manufactured by Northrop Grumman, each solar panel when folded is no more than 4 centimeters thick, but when expanded, will have a diameter of almost 7.3 meters.

[But see Alex Tolley’s comment below. I believe the solar panel thickness is actually 10 cm]

The good news is that the deployment tests, conducted in a thermal vacuum chamber at Lockheed Martin Space in Denver with weight-offloading devices supporting the arrays against Earth’s gravity, went flawlessly. Hal Levison (Southwest Research Institute) points to the critical nature of the process:

“At about one hour after the spacecraft launches, the solar panels will need to deploy flawlessly in order to assure that we have enough energy to power the spacecraft throughout the mission. These 20 minutes will determine if the rest of the 12 year mission will be a success. Mars landers have their seven minutes of terror, we have this.”

Which takes me back momentarily to Galileo, where the ‘seven minutes of terror’ involved about 165 seconds, the time it was believed it would take the spacecraft’s actuators to deploy its high-gain antenna. The antenna’s failure to deploy properly ultimately forced controllers to use a low-gain antenna to return data, a workaround that produced great science but at a much reduced rate. We now know that the failure traced back to events during the 4.5 years Galileo spent in storage following the loss of the Space Shuttle Challenger, and we can hope that the thorough deployment tests at Lockheed Martin Space will ensure a successful deployment for Lucy.

Image: At 24 feet (7.3 meters) across each, Lucy’s two solar panels underwent initial deployment tests in January 2021. In this photo, a technician at Lockheed Martin Space in Denver, Colorado, inspects one of Lucy’s arrays during its first deployment. These massive solar arrays will power the Lucy spacecraft throughout its entire 4-billion-mile, 12-year journey as it heads out to explore Jupiter’s elusive Trojan asteroids. Credit: Lockheed Martin.

Explaining Earth’s Carbon: Enter the ‘Soot Line’

Let’s take a look at how Earth’s carbon came to be here, through the medium of two new papers. This is a process most scientists have assumed involved molecules in the original solar nebula that wound up on our world through accretion as the gases cooled and the carbon molecules precipitated. But the first of the papers (both by the same team, though with different lead authors) points out that gas molecules carrying carbon won’t do the trick. When carbon vaporizes, it does not condense back into a solid, and that calls for some explanation.

University of Michigan scientist Jie Li is lead author of the first paper, which appears in Science Advances. The analysis here says that carbon in the form of organic molecules produces much more volatile species when it is vaporized, and those species demand low temperatures to form solids. Moreover, says Li, it does not condense back into organic form.

“The condensation model has been widely used for decades. It assumes that during the formation of the sun, all of the planet’s elements got vaporized, and as the disk cooled, some of these gases condensed and supplied chemical ingredients to solid bodies. But that doesn’t work for carbon.”

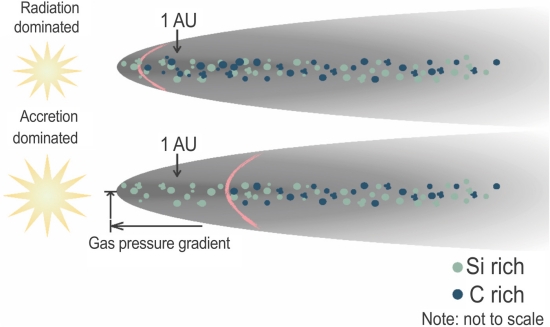

Most of Earth’s carbon, the researchers believe, accumulated directly from the interstellar medium well after the protoplanetary disk had formed and warmed; it was never vaporized in the way the condensation model suggests. Interesting concepts come into play here, among them the cleverly titled ‘soot line,’ in analogy to the ‘snow line’ in planetary systems. This marker has a lot to do with how carbon behaves. The authors use astronomical observations and modeling to explore the concept. From the paper — watch what happens as the disk warms:

Astronomical observations show that approximately half of the cosmically available carbon entered the protoplanetary disk as volatile ices and the other half as carbonaceous organic solids. As the disk warms up from 20 K, all the volatile carbon carriers sublimate by 120 K, followed by the conversion of major refractory carbon carriers into CO and other gases near a characteristic temperature of ~500 K… The sublimation sequence of carbon exhibits a “cliff” where dust grains in an accreting disk lose most of their carbon to gas within a narrow temperature range near 500 K.
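The “cliff” in this passage can be caricatured as a step function. This sketch reads the quoted numbers literally: about half the carbon arrives as ices that are gone by about 120 K, and the refractory half is largely converted to gas near 500 K. The real transitions are gradual. The function name is hypothetical, and treating “most” of the carbon as all of it above 500 K is a simplification.

```python
def solid_carbon_fraction(temperature_k: float) -> float:
    """Rough fraction of cosmically available carbon still locked in solids.

    Step-function reading of the quoted sublimation sequence; not a model from the paper.
    """
    if temperature_k < 120:
        return 1.0  # volatile ices and refractory organics both still solid
    if temperature_k < 500:
        return 0.5  # ices gone; refractory carbon carriers survive
    return 0.0      # past the ~500 K "cliff": most carbon has gone to gas

for t in (50, 150, 300, 450, 550, 800):
    print(f"{t:4d} K: ~{solid_carbon_fraction(t):.0%} of carbon in solids")
```

The large drop at 500 K, rather than a gentle slope, is what turns the soot line into a sharp boundary between carbon-rich and carbon-poor solids.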

The ‘cliff’ is another good analogy. It’s the edge of the soot line:

The division between the stability fields of solid and gas carbon carriers corresponds to the “soot line,” a term coined to describe the location where the irreversible destruction of presolar polycyclic hydrocarbons via thermally driven reactions in the planet-forming region of disks occurred.

Modeling the sublimation process and loss of carbon in the solar nebula, the authors chart the soot line as it migrates with time as the system matures and the pressure and temperature of the disk evolve. Shortly after the birth of the Sun, the soot line might have extended out tens of AU, but as the accretion rate diminished, it would have migrated inward. A carbon-poor early Earth, then, would be the result of formation during the period when the soot line was well beyond Earth’s orbit, during the first million years, when accretion rates were high.
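Locating a line like this in a disk comes down to finding where a temperature profile crosses a threshold. As a sketch, assume a power-law disk T(r) = T0 (r / 1 AU)^(-q) with the classic Hayashi values T0 = 280 K and q = 1/2, a simple passive-nebula profile and not the evolving model Li et al. use, and solve for the ~500 K soot line. The 150 K water snow line and the hotter T0 values are illustrative assumptions.

```python
def radius_at_temperature(t_target_k: float, t0_k: float = 280.0, q: float = 0.5) -> float:
    """Radius (AU) where T(r) = t0 * (r / 1 AU)**(-q) equals t_target.

    Defaults follow the Hayashi minimum-mass-nebula profile (an assumption here).
    """
    return (t0_k / t_target_k) ** (1.0 / q)

SOOT_LINE_K = 500.0  # ~500 K carbon "cliff" quoted from the paper
SNOW_LINE_K = 150.0  # approximate water-ice sublimation temperature (assumed)

print(f"soot line ~ {radius_at_temperature(SOOT_LINE_K):.2f} AU")
print(f"snow line ~ {radius_at_temperature(SNOW_LINE_K):.1f} AU")

# Hypothetically hotter inner disks (larger T0) push the soot line outward
for t0 in (280.0, 600.0, 1200.0):
    print(f"T0 = {t0:.0f} K -> soot line ~ {radius_at_temperature(SOOT_LINE_K, t0_k=t0):.2f} AU")
```

In a cool, passive disk, the soot line sits well inside Earth's orbit. Any heating that raises the temperature at 1 AU moves it outward, which is the qualitative behavior behind the early, accretion-heated phase described above.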

Image: This is Figure 2 from the paper. Caption: Fig. 2 Schematic illustration of the soot line in a protoplanetary disk: The soot line (red parabola) delineates the phase boundary between solid and gaseous carbon carriers. In the accretion-dominated disk phase, it is located far from the proto-Sun and divides carbon-poor dust and pebbles (green dots) from carbon-rich ones (dark blue dots). Within 1 Ma, as a result of the transition to a radiation-dominated, or passive, disk phase, the soot line migrates inside Earth’s current orbit. Note that the Si-rich and C-rich solids do not represent distinct reservoirs because carbonaceous material is likely associated with silicates. They are provided for ease in illustration. Credit: Li et al.

Drawing again from the paper:

If the bulk carbon content of Earth is low, then most of its source materials must have lost carbon through sublimation early in the nebula’s history or by additional processes such as planetesimal differentiation. Constraining the fraction of carbon-depleted source material accreted by Earth requires us to constrain the maximum amount of carbon in the bulk Earth.

The authors do this by determining the maximum amount of carbon that Earth’s core could contain (the passage above does invoke planetesimal differentiation, after all), a figure that turns out to be less than half a percent of Earth’s mass. Says Li’s colleague Edwin Bergin (University of Michigan):

“We asked how much carbon could you stuff in the Earth’s core and still be consistent with all the constraints. There’s uncertainty here. Let’s embrace the uncertainty to ask what are the true upper bounds for how much carbon is very deep in the Earth, and that will tell us the true landscape we’re within.”

The paper points to a severe carbon deficit in the newly formed Earth, and suggests still more about the environment producing it. Centimeter-to-meter sized pebbles delivering mass as they drift inward from the outer Solar System would carry both water and carbon. Simulations of their movement show that a giant planet core in the disk would create a pressure bump where drifting pebbles would pile up, diminishing the supply of carbon for the emerging inner system. The carbon-poor composition of iron meteorites is cited as evidence of this early carbon depletion.

In the second paper, the same group of researchers examined these iron meteorites, which represent the metallic cores of planetesimals, looking at how they retained carbon during their early formation. Here melting and loss of carbon are apparent. Marc Hirschmann (University of Minnesota) led the second study, which included the same co-authors along with Li:

“Most models have the carbon and other life-essential materials such as water and nitrogen going from the nebula into primitive rocky bodies, and these are then delivered to growing planets such as Earth or Mars. But this skips a key step, in which the planetesimals lose much of their carbon before they accrete to the planets.”

Thus we see two different aspects of carbon loss, highlighting the delicate nature of carbon, so necessary for climate regulation but capable of creating Venus-like conditions when found in excess. The loss of carbon in the early Earth may play an essential role in our planet’s habitability. How carbon loss occurs in other planetary systems is a topic that will require a multidisciplinary approach involving both astronomy and geochemistry. As the second paper suggests, it’s a topic that could be vital to life’s chances elsewhere:

…the volatile-depleted character of parent body cores reflects processes that affected whole planetesimals. As the parent bodies of iron meteorites formed early in solar system history and likely represent survivors of a planetesimal population that was mostly consumed during planet formation, they are potentially good analogs for the compositions of planetesimals and embryos accreted to terrestrial planets. Less depleted chondritic bodies, which formed later and did not experience such significant devolatilization, are possibly less apt models for the building blocks of terrestrial planets. More globally, the process of terrestrial planet formation appears to be dominated by volatile carbon loss at all stages, making the journey of carbon-dominated interstellar precursors (C/Si > 1) to carbon-poor worlds inevitable.

Thus sublimation, not condensation, tells the tale of carbon abundance on Earth, with presumably the same processes at work elsewhere in the galaxy.

The Li paper is “Earth’s carbon deficit caused by early loss through irreversible sublimation,” Science Advances Vol. 7, No. 14 (2 April 2021). Full text. The Hirschmann paper is “Early volatile depletion on planetesimals inferred from C-S systematics of iron meteorite parent bodies,” Proceedings of the National Academy of Sciences Vol. 118 (30 March 2021). Full text.

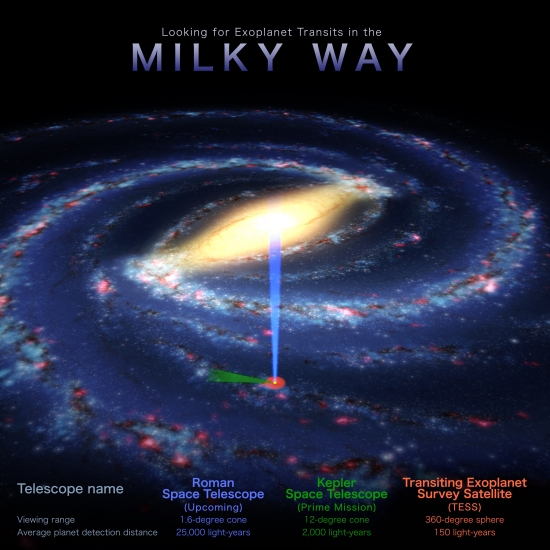

Roman Space Telescope: Planets in the Tens of Thousands

The Nancy Grace Roman Space Telescope is the instrument until recently known as WFIRST (Wide-Field Infrared Survey Telescope), a fact I’ll mention here for the last time just because there are so many articles about WFIRST in the archives. From now on, I’ll just refer to the Roman Space Telescope, or RST. Given our focus on exoplanet research, we should keep in mind that the project’s history has been heavily influenced by concepts for studying dark energy and the expansion history of the cosmos. The exoplanet component has grown, however, into a vital part of the mission, and now includes both gravitational microlensing and transit studies.

We’ve discussed both methods frequently in these pages, so I’ll just note that microlensing relies on a star and its accompanying planetary system passing in front of a more distant background star, with detection coming from the resulting brightening of the background star’s light. We’re seeing the effects of the warping of spacetime caused by the nearer objects, with the brightening carrying the data on one or more planets around the central star. You can see why a target-rich environment is needed here: such alignments are random and rare, so a great many stars must be monitored to catch a few. Thus RST looks toward the galactic center.
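The brightening follows a well-known formula for a single point-like lens: the magnification is A(u) = (u² + 2) / (u √(u² + 4)), where u is the lens-source separation in units of the Einstein radius. For a lens moving in a straight line, u(t) = √(u0² + ((t − t0)/tE)²). The event parameters below are hypothetical. A planet around the lens shows up as a short extra blip on top of this smooth curve, which this sketch does not model.

```python
import math

def magnification(u: float) -> float:
    """Point-source, point-lens magnification at separation u (Einstein radii), u > 0."""
    return (u * u + 2) / (u * math.sqrt(u * u + 4))

def light_curve(t: float, t0: float, u0: float, t_e: float) -> float:
    """Magnification at time t for closest approach u0 at time t0.

    t_e is the Einstein-radius crossing time (same units as t).
    """
    u = math.sqrt(u0**2 + ((t - t0) / t_e) ** 2)
    return magnification(u)

# Hypothetical event: closest approach 0.1 Einstein radii, t_E = 20 days
for day in (-40, -20, -5, 0, 5, 20, 40):
    print(f"day {day:+3d}: A = {light_curve(day, 0.0, 0.1, 20.0):.2f}")
```

Events like this last days to weeks and never repeat, which is why a survey has to watch many stars often and precisely.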

Assuming a successful launch and operations beginning in the mid-2020s, the RST should be a prolific planet-hunter indeed. NASA is now projecting, based on a 2017 paper from Benjamin Montet (now at the University of New South Wales) and colleagues, that the anticipated light curves for millions of stars should turn up as many as 100,000 planets. Montet et al. actually cite as many as 150,000, noting that the WFIRST field is more metal-rich than the main Kepler field. All of these systems should have measured parallaxes, though most will be too faint for follow-up observations.

Even so, the Montet paper finds ways to confirm some of these:

We find that secondary eclipse depth measurements can be used to confirm as many as 2900 giant planets, which can be detected at distances of > 8 kpc. From these confirmed WFIRST planets, we will be able to measure the variation in the occurrence rate of short-period giant planets. Furthermore, we show that WFIRST is capable of detecting TTVs which can be used to confirm the planetary nature of some systems, especially those with smaller planets.

This is particularly fruitful — consider that the transiting planets that RST finds will in most cases not be found around the same host stars as the planets found through microlensing. The two methods enable separate probes of the same population of planets but at different separations, with transits being most detectable for close-in planets and microlensing being the method to detect planets much further out in the system. We go from star-hugging hot Jupiters to planets beyond 10 AU. Microlensing should also turn up free-floating ‘rogue’ planets.
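The split between the two methods follows from simple geometry. For a circular orbit, the chance that a randomly oriented system transits is about R_star / a, and the dip in brightness is about (R_planet / R_star)². The sketch below assumes a Sun-like host. The sample separations and radius ratios are illustrative.

```python
R_SUN_M = 6.957e8          # nominal solar radius, m
AU_M = 1.495978707e11      # astronomical unit, m

def transit_probability(a_au: float, r_star_rsun: float = 1.0) -> float:
    """Chance a randomly oriented circular orbit transits: ~ R_star / a."""
    return r_star_rsun * R_SUN_M / (a_au * AU_M)

def transit_depth(radius_ratio: float) -> float:
    """Fractional dip in starlight during transit: ~ (R_planet / R_star)^2."""
    return radius_ratio ** 2

for a in (0.05, 1.0, 10.0):  # hot Jupiter, Earth-like orbit, beyond 10 AU
    print(f"a = {a:5} AU: transit probability ~ {transit_probability(a):.4%}")

print(f"Jupiter-size (R_p/R_* ~ 0.1): depth ~ {transit_depth(0.1):.1%}")
print(f"Earth-size (R_p/R_* ~ 0.0092): depth ~ {transit_depth(0.0092) * 1e6:.0f} ppm")
```

A planet at 10 AU is 200 times less likely to transit than one at 0.05 AU, so distant planets are left almost entirely to microlensing. The shallow dips of small planets also help explain why most transits found around faint, distant stars are expected to be giants.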

The Kepler mission studied stars in an area encompassing parts of Lyra, Cygnus and Draco, most of them ranging from 600 to 3,000 light years away. With RST, we will be going from Kepler’s 115 square degree field of view of relatively nearby stars to a 3 square degree field that, because it points toward the galactic center, will track up to 200 million stars. The average distance of stars in this field will be in the range of 10,000 light years. This will be the first space-based microlensing survey, looking at planets across a wide range of distances from their host stars.

Image: This graphic highlights the search areas of three planet-hunting missions: the upcoming Nancy Grace Roman Space Telescope, the Transiting Exoplanet Survey Satellite (TESS), and the retired Kepler Space Telescope. Astronomers expect Roman to discover roughly 100,000 transiting planets, worlds that periodically dim the light of their stars as they cross in front of them. While other missions, including Kepler’s extended K2 survey (not pictured in this graphic), have unveiled relatively nearby planets, Roman will reveal a wealth of worlds much farther from home. Credit: NASA’s Goddard Space Flight Center.

The synergy between microlensing and transit work is clear. Jennifer Yee, an astrophysicist at the Center for Astrophysics | Harvard & Smithsonian, notes that thousands of transiting planets are going to turn up within the microlensing data. “It’s free science,” says Yee. Benjamin Montet agrees:

“Microlensing events are rare and occur quickly, so you need to look at a lot of stars repeatedly and precisely measure brightness changes to detect them. Those are exactly the same things you need to do to find transiting planets, so by creating a robust microlensing survey, Roman will produce a nice transit survey as well.”

So we’ve gone from the relatively close — Kepler with stars at an average distance of 2,000 light years — to the much closer — TESS, with its scans of the entire sky focusing in particular on stars in the range of 150 light years — and now to RST, which backs out all the way to galactic center, a field encompassing stars as far as 26,000 light years away. NASA estimates that three-quarters of the transits RST detects will be gas or ice giants, with most of the rest being planets between four and eight times as massive as Earth, the intriguing ‘mini-Neptunes.’ For its part, microlensing takes us down to rocky planets smaller than Mars and up to gas giant size.

The Montet paper is “Measuring the Galactic Distribution of Transiting Planets with WFIRST,” Publications of the Astronomical Society of the Pacific Vol. 129, No. 974 (24 February 2017). Abstract.

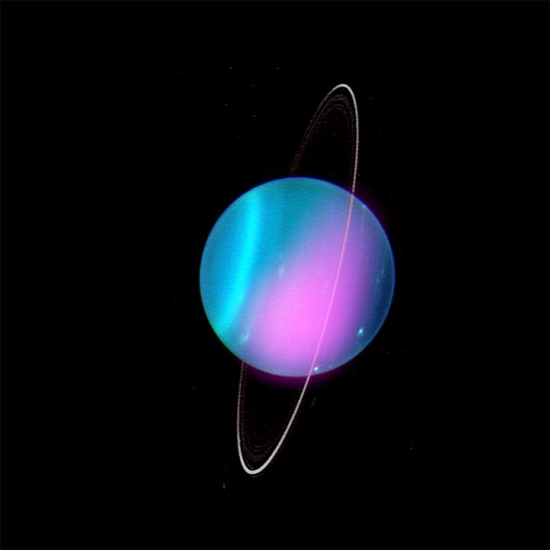

Uranus: Detection of X-rays and their Implications

Just as Earth’s atmosphere scatters light from the Sun, both Jupiter and Saturn scatter X-rays produced by our star. In a new study using data from the Chandra X-ray Observatory, we now learn that Uranus likewise scatters X-rays, but with an interesting twist. For there is a hint — and only a hint — that scattering is only one of the processes at work here, and that could produce insights into a system that thus far we have been able to study up close only once, through the flyby of Voyager 2. As the paper on this work notes: “These fluxes exceed expectations from scattered solar emission alone.” Just what is going on here will demand further work.

William Dunn (University College London) is lead author of the paper, which includes co-authors from an international team working with Chandra data from 2002 and 2017. In the image below, the X-ray data from Chandra is superimposed upon a 2004 observation of Uranus from the Keck telescope, which shows the planet at essentially the same orientation as the 2002 Chandra observations. Any image of Uranus reminds us that the ice giant, about four times Earth’s diameter, rotates on its side, with twin sets of rings around the equator.

Image: The first X-rays from Uranus have been captured by Chandra during observations obtained in 2002 and 2017, a discovery that may help scientists learn more about this ice giant planet. Researchers think most of the X-rays come from solar X-rays that scatter off Uranus’s atmosphere as well as its ring system. Some of the X-rays may also be from auroras on Uranus, a phenomenon that has previously been observed at other wavelengths. This Uranus image is a composite of optical light from the Keck telescope in Hawaii (blue and white) and X-ray data from Chandra (pink). Credit: X-ray: NASA/CXO/University College London/W. Dunn et al; Optical: W.M. Keck Observatory.

Uranus’s orientation is significant given that its rotation axis is almost parallel to the plane of its orbit. Moreover, the planet’s magnetic field axis is tilted well away from the rotation axis, and the field is also offset from the planet’s center. That should play into the auroral activity observed on Uranus, a useful trait because exactly how the auroral process works has not been determined. On Earth, auroras are the result of electrons traveling along the planet’s magnetic field lines to the poles, and we likewise find auroral activity on Jupiter, where a similar effect is in play. But the Jovian auroras are also driven by positively charged ions entering the polar regions.

Are the auroras of Uranus one source of the recently detected X-rays? The possibility remains in the mix pending further study. Given that X-rays are emitted on Earth and Jupiter as a result of auroral activity, the unusual axial and magnetic field tilt on Uranus may clarify our understanding of the process, while illuminating how other astrophysical objects emit X-rays.

Without further data, the authors can only speculate, but they see another possible cause in the rings of Uranus. The rings of Saturn are known to produce X-rays, and it is possible that the interactions of the Uranian rings with charged particles could cause an X-ray glow that is detectable.

As we learn more, it’s clear that Uranus is an interesting laboratory for X-ray work, with a highly variable magnetosphere interacting in complicated ways with the solar wind. That hint of processes beyond X-ray scattering, however, may point to an actual mechanism for their production or, as the authors note, may be no more than a statistical fluctuation in the Chandra data. How we develop this picture depends upon further Chandra work and possibly data from XMM-Newton, the European Space Agency’s X-ray observatory. The roadmap for that investigation is explained in the paper:
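Whether an excess is “no more than a statistical fluctuation” is, at low count rates, a Poisson question: how likely is it to see at least the observed number of X-ray photons if only the expected scattered-solar contribution were present? The sketch below computes that tail probability. The counts are hypothetical, chosen for illustration, and are not taken from Dunn et al.

```python
import math

def poisson_tail(n_observed: int, expected: float) -> float:
    """P(N >= n_observed) for a Poisson variable with mean `expected`."""
    below = sum(math.exp(-expected) * expected**k / math.factorial(k)
                for k in range(n_observed))
    return 1.0 - below

# Hypothetical numbers, NOT from the paper:
expected_scattered = 5.0  # counts predicted from scattered solar X-rays alone
observed = 12             # counts actually recorded

p = poisson_tail(observed, expected_scattered)
print(f"P(N >= {observed} | mean {expected_scattered}) = {p:.4f}")
```

With only a handful of photons, small changes in the expected background shift this probability substantially. That is one reason longer Chandra and XMM-Newton observations, which would collect more counts, are needed to settle the question.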

…an observation lasting a few XMM-Newton orbits would be needed to provide a Uranus X-ray spectrum that could be modeled. This would enable a deeper characterization of the spectrum from Uranus, to explore, for example, the presence of fluorescence line emissions from the rings. Further, and longer, observations with Chandra would help to produce a map of X-ray emission across Uranus and to identify, with better signal-to-noise, the source locations for the X-rays, constraining possible contributions from the rings and aurora. Such longer timescale observations would also permit exploration of whether the emissions vary in phase with rotation, potentially suggestive of auroral emissions rotating in and out of view.

The paper is Dunn et al., “A Low Signal Detection of X-Rays From Uranus,” JGR Space Physics 31 March 2021 (Abstract / Full Text).