Centauri Dreams

Imagining and Planning Interstellar Exploration

A Black Cloud of Computation

Moore’s Law, first stated all the way back in 1965, came out of Gordon Moore’s observation that the number of transistors per silicon chip was doubling every year (it would later be revised to doubling every 18-24 months). While it’s been cited countless times to explain our exponential growth in computation, Greg Laughlin, Fred Adams and team, whose work we discussed in the last post, focus not on Moore’s Law but on a less publicly visible statement known as Landauer’s Principle. Drawing from Rolf Landauer’s work at IBM, the 1961 equation defines the lower limits for energy consumption in computation.

You can find the equation here, or in the Laughlin/Adams paper cited below, where the authors note that for an operating temperature of 300 K (a fine summer day on Earth), the maximum efficiency of bit operations per erg is 3.5 x 10¹³. As we saw in the last post, a computational energy crisis emerges when exponentially increasing power requirements for computing exceed the total power input to our planet. Given current computational growth, the saturation point is on the order of a century away.
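For readers who want to check the number, here is a minimal sketch of the Landauer limit, which sets the minimum energy to erase one bit at k·T·ln 2. The function names are my own and are not from the paper; the only inputs are the Boltzmann constant in CGS units and the 300 K operating temperature quoted above.

```python
import math

K_BOLTZMANN_ERG_PER_K = 1.380649e-16  # Boltzmann constant in erg/K (CGS units)


def landauer_energy_per_bit(temperature_k: float) -> float:
    """Minimum energy in erg needed to erase one bit at the given temperature."""
    return K_BOLTZMANN_ERG_PER_K * temperature_k * math.log(2)


def max_bit_ops_per_erg(temperature_k: float) -> float:
    """Upper bound on irreversible bit operations per erg (inverse of the limit)."""
    return 1.0 / landauer_energy_per_bit(temperature_k)


if __name__ == "__main__":
    # At 300 K this comes out close to the 3.5 x 10^13 figure cited in the paper.
    print(f"{max_bit_ops_per_erg(300.0):.2e} bit operations per erg at 300 K")
```

Because the limit scales linearly with temperature, a colder computer gets more bit operations out of each erg, which is part of why the operating temperature matters so much in what follows.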

Thus Landauer’s limit becomes a tool for predicting a problem ahead, given the linkage between computation and economic and technological growth. The working paper that Laughlin and Adams produced looks at the numbers in terms of current computational throughput and sketches out a problem that a culture deeply reliant on computation must overcome. How might civilizations far more advanced than our own go about satisfying their own energy needs?

Into the Clouds

We’re familiar with Freeman Dyson’s interest in enclosing stars with technologies that can exploit the great bulk of their energy output, with the result that there is little to mark their location to distant astronomers other than an infrared signature. Searches for such megastructures have already been made, but thus far with no detections. Laughlin and Adams ponder exploiting the winds generated by Asymptotic Giant Branch stars, which might be tapped to produce what they call a ‘dynamical computer.’ Here again there is an infrared signature.

Let’s see what they have in mind:

In this scenario, the central AGB star provides the energy, the raw material (in the form of carbon-rich macromolecules and silicate-rich dust), and places the material in the proper location. The dust grains condense within the outflow from the AGB star and are composed of both graphite and silicates (Draine and Lee 1984), and are thus useful materials for the catalyzed assembly of computational components (in the form of nanomolecular devices communicating wirelessly at frequencies (e.g. sub-mm) where absorption is negligible in comparison to required path lengths).

What we get is a computational device surrounding the AGB star that is roughly the size of our Solar System. In terms of observational signatures, it would be detectable as a blackbody with temperature in the range of 100 K. It’s important to realize that in natural astrophysical systems, objects with these temperatures show a spectral energy distribution that, the authors note, is much wider than a blackbody. The paper cites molecular clouds and protostellar envelopes as examples; these should be readily distinguishable from what the authors call Black Clouds of computation.

It seems odd to call this structure a ‘device,’ but that is how Laughlin and Adams envision it. We’re dealing with computational layers in the form of radial shells within the cloud of dust being produced by the AGB star in its relatively short lifetime. It is a cloud in an environment that subjects it to the laws of hydrodynamics, which the paper tackles by way of characterizing its operations. The computer, in order to function, has to be able to communicate with itself via operations that the authors assume occur at the speed of light. Its calculated minimum temperature predicts an optimal radial size of 220 AU, an astronomical computing engine.
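To get a feel for what speed-of-light communication means at this scale, here is a rough sketch of the light-travel time across the structure. I am assuming the 220 AU figure is a radius; the function name is mine, and the paper's own treatment of communication limits is more involved than this.

```python
AU_M = 1.495978707e11        # astronomical unit in meters (IAU definition)
C_M_PER_S = 299_792_458.0    # speed of light in m/s


def light_travel_time_hours(distance_au: float) -> float:
    """Hours for a light-speed signal to cross the given distance in AU."""
    return distance_au * AU_M / C_M_PER_S / 3600.0


if __name__ == "__main__":
    radius_au = 220.0  # assumed here to be the radius of the cloud
    print(f"center to edge: {light_travel_time_hours(radius_au):.1f} hours")
    print(f"edge to edge:   {light_travel_time_hours(2 * radius_au):.1f} hours")
```

A signal takes more than a day to go from the center to the edge, so any computation that needs the whole cloud to agree on something runs on a clock measured in days, not nanoseconds.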

And what a device it is. The maximum computational rate works out to 3 x 10⁵⁰ bits s⁻¹ for a single AGB star. That rate is slowed by considerations of entropy and rate of communication, but we can optimize the structure at the above size constraint and a temperature between 150 and 200 K, with a mass roughly comparable to that of the Earth. This is a device that is in need of refurbishment on a regular timescale because it is dependent upon the outflow from the star. The authors calculate that the computational structure would need to be rebuilt on a timescale of 300 years, comparable to infrastructure timescales on Earth.

Thus we have what Laughlin, in a related blog post, describes as “a dynamically evolving wind-like structure that carries out computation.” And as he goes on to note, AGB stars in their pre-planetary nebula phase have lifetimes on the order of 10,000 years, during which time they produce vast amounts of graphene suitable for use in computation, with photospheres not far off room temperature on Earth. Finding such a renewable megastructure in astronomical data could be approached by consulting the WISE source catalog with its 563,921,584 objects. A number of candidates are identified in the paper, along with metrics for their analysis.

These types of structures would appear from the outside as luminous astrophysical sources, where the spectral energy distributions have a nearly blackbody form with effective temperature T ~ 150-200 K. Astronomical objects with these properties are readily observable within the Galaxy. Current infrared surveys (the WISE Mission) include about 200 candidate objects with these basic characteristics…
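Why WISE? A quick sketch using Wien's displacement law shows where a blackbody at these temperatures is brightest. The band centers below are the approximate WISE values (3.4, 4.6, 12 and 22 microns); the helper names are my own, and this is only a back-of-the-envelope check, not the selection method used in the paper.

```python
WIEN_B_UM_K = 2897.771955  # Wien displacement constant in micron * kelvin

# Approximate WISE band centers in microns (W1 through W4).
WISE_BANDS_UM = {"W1": 3.4, "W2": 4.6, "W3": 12.0, "W4": 22.0}


def wien_peak_um(temperature_k: float) -> float:
    """Wavelength in microns at which a blackbody's emission per unit wavelength peaks."""
    return WIEN_B_UM_K / temperature_k


def nearest_wise_band(wavelength_um: float) -> str:
    """Name of the WISE band whose center lies closest to the given wavelength."""
    return min(WISE_BANDS_UM, key=lambda band: abs(WISE_BANDS_UM[band] - wavelength_um))


for temperature in (100.0, 150.0, 200.0):
    peak = wien_peak_um(temperature)
    print(f"T = {temperature:.0f} K: peak near {peak:.1f} microns, "
          f"closest band {nearest_wise_band(peak)}")
```

The peaks for 150 to 200 K fall between roughly 14 and 19 microns, right in the territory of the two longest WISE bands.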

And a second method of detection, looking for nano-scale hardware in meteorites, is rather fascinating:

Carbonaceous chondrites (Mason 1963) preserve unaltered source material that predates the solar system, much of which was ejected by carbon stars (Ott 1993). Many unusual materials have been identified within carbonaceous chondrites, including, for example, nucleobases, the informational sub-units of RNA and DNA (see Nuevo et al. 2014). Most carbonaceous chondrites have been subject to processing, including thermal metamorphism and aqueous alteration (McSween 1979). Graphite and highly aromatic material survives to higher temperatures, however, maintaining structure when heated transiently to temperatures of order, T ~ 700 K (Pearson et al. 2006). It would thus potentially be of interest to analyze carbonaceous chondrites to check for the presence of (for example) devices resembling carbon nanotube field-effect transistors (Shulakar, et al. 2013).

Meanwhile, Back in 2021

But back to the opening issue, the crisis posited by the rate of increase in computation vs. the energy available to our society. Should we tie Earth’s future economic growth to computation? Will a culture invariably find ways to produce the needed computational energies, or are other growth paradigms possible? Or is growth itself a problem that has to be surmounted?

At the present, the growth of computation is fundamentally tied to the growth of the economy as a whole. Barring the near-term development of practical reversible computing (see, e.g., Frank 2018), forthcoming computational energy crisis can be avoided in two ways. One alternative involves transition to another economic model, in contrast to the current regime of information-driven growth, so that computational demand need not grow exponentially in order to support the economy. The other option is for the economy as a whole to cease its exponential growth. Both alternatives involve a profound departure from the current economic paradigm.

We can wonder as well whether what many are already seeing as the slowdown of Moore’s Law will lead to new forms of exponential growth via quantum computing, carbon nanotube transistors or other emerging technologies. One thing is for sure: Our planet is not at the technological level to exploit the kind of megastructures that Freeman Dyson and Greg Laughlin have been writing about, so whatever computational crisis we face is one we’ll have to surmount without astronomical clouds. Is this an aspect of the L term in Drake’s famous equation? It refers to the lifetime of technological civilizations, and on this matter we have no data at all.

The working paper is Laughlin et al., “On the Energetics of Large-Scale Computation using Astronomical Resources.” Full text. Laughlin also writes about the concept on his oklo.org site.

Cloud Computing at Astronomical Scales

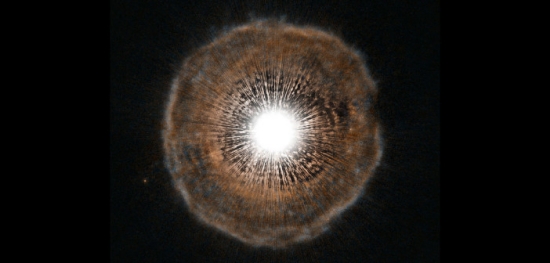

Interesting things happen to stars after they’ve left the main sequence. So-called Asymptotic Giant Branch (AGB) stars are those less than nine times the mass of the Sun that have already moved through their red giant phase. They’re burning an inner layer of helium and an outer layer of hydrogen, multiple zones surrounding an inert carbon-oxygen core. Some of these stars, cooling and expanding, begin to condense dust in their outer envelopes and to pulsate, producing a ‘wind’ off the surface of the star that effectively brings an end to hydrogen burning.

Image: Hubble image of the asymptotic giant branch star U Camelopardalis. This star, nearing the end of its life, is losing mass as it coughs out shells of gas. Credit: ESA/Hubble, NASA and H. Olofsson (Onsala Space Observatory).

We’re on the way to a planetary nebula centered on a white dwarf now, but along the way, in this short pre-planetary nebula phase, we have the potential for interesting things to happen. It’s a potential that depends upon the development of intelligence and technologies that can exploit the situation, just the sort of scenario that would attract Greg Laughlin (Yale University) and Fred Adams (University of Michigan). Working with Darryl Seligman and Khaya Klanot (Yale), the authors of the brilliant The Five Ages of the Universe (Free Press, 2000) are used to thinking long-term, and here they ponder technologies far beyond our own.

For a sufficiently advanced civilization might want to put that dusty wind from a late Asymptotic Giant Branch star to work on what the authors call “a dynamically evolving wind-like structure that carries out computation.” It’s a fascinating idea because it causes us to reflect on what might motivate such an action, and as we learn from the paper, the energetics of computation must inevitably become a problem for any technological civilization facing rapid growth. A civilization going the AGB route would also produce an observable signature, a kind of megastructure that is to my knowledge considered here for the first time.

Laughlin refers to the document that grows out of these calculations (link below) as a ‘working paper,’ a place for throwing off ideas and, I assume, a work in progress as those ideas are explored further. It operates on two levels. The first is to describe the computational crisis facing our own civilization, assuming that exponential growth in computing continues. Here the numbers are stark, as we’ll see below, and the options problematic. The second level is the speculation about cloud computing at the astronomical level, which takes us far beyond any solution that would resolve our near-term problem, but offers fodder for thought about the possible behaviors of civilizations other than our own.

Powering Up Advanced Computation

The kind of speculation that puts megastructures on the table is productive because we are the only example of technological civilization that we have to study. We have to ask ourselves what we might do to cope with problems as they scale up to planetary size and beyond. Solar energy is one thing when considered in terms of small-scale panels on buildings, but Freeman Dyson wanted to know how to exploit not just our allotted sliver of the energy being put out by the Sun but all of it. Thus the concept of enclosing a star with a shell of technologies, with the observable result of gradually dimming the star while producing a signature in the infrared.

Laughlin, Adams and colleagues have a new power source that homes in on the drive for computation. It exploits the carbon-rich materials that condense over periods of thousands of years and are pushed outward by AGB winds, offering the potential for computers at astronomical scales. It’s wonderful to see them described here as ‘black clouds,’ a nod the authors acknowledge to Fred Hoyle’s engaging 1957 novel The Black Cloud, in which a huge cloud of gas and dust approaches the Solar System. Like the authors’ cloud, Hoyle’s possesses intelligence, and learning how to deal with it powers the plot of the novel.

Hoyle wasn’t thinking in terms of computation in 1957, but the problem is increasingly apparent today. We can measure the capabilities of our computers and project the resource requirements they will demand if exponential rates of growth continue. The authors work through calculations to a straightforward conclusion: The power we need to support computation will exceed that of the biosphere in roughly a century. This resource bottleneck likewise applies to data storage capabilities.

Just how much computation does the biosphere support? The answer merges artificial computation with what Laughlin and team call “Earth’s 4 Gyr-old information economy.” According to the paper’s calculations, today’s artificial computing uses 2 x 10⁻⁷ of the Earth’s insolation energy budget. The biosphere in these terms can be considered an infrastructure for copying the digital information encoded in strands of DNA. Interestingly, the authors come up with a biological computational efficiency that is a factor of 10 more efficient than today’s artificial computation. They also provide the mathematical framework for the idea that artificial computing efficiencies can be improved by a factor of more than 10⁷.

Artificial computing is, of course, a rapidly moving target. From the paper:

At present, the “computation” performed by Earth’s biosphere exceeds the current burden of artificial computation by a factor of order 10⁶. The biosphere, however, has carried out its computation in a relatively stable manner over geologic time, whereas artificial computation by devices, as well as separately, the computation stemming from human neural processing, are both increasing exponentially – the former through Moore’s Law-driven improvement in devices and increases in the installed base of hardware, and the latter through world population growth. Environmental change due to human activity can, in a sense, be interpreted as a computational “crisis” a situation that will be increasingly augmented by the energy demands of computation.

We get to the computational energy crisis as we approach the point where the exponential growth in the need for power exceeds the total power input to the planet, which should occur in roughly a century. We’re getting 10¹⁷ watts from the Sun here on the surface and in something on the order of 100 years we will need to use every bit of that to support our computational infrastructure. And as mentioned above, the same applies to data storage, even conceding the vast improvements in storage efficiency that continue to occur.
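The century timescale is easy to sanity-check. The sketch below starts from the paper's figure that artificial computing now uses about 2 x 10⁻⁷ of Earth's insolation and asks how many doublings it takes to reach the whole budget. The fixed doubling interval is an assumption of this sketch, not a number taken from the paper, and efficiency gains are ignored.

```python
import math

CURRENT_FRACTION = 2e-7  # share of insolation used by artificial computing (from the paper)


def doublings_to_saturation(current_fraction: float = CURRENT_FRACTION) -> float:
    """Number of doublings needed for the share of insolation to reach 1."""
    return math.log2(1.0 / current_fraction)


def years_to_saturation(doubling_time_years: float,
                        current_fraction: float = CURRENT_FRACTION) -> float:
    """Years until saturation if power demand doubles every doubling_time_years."""
    return doublings_to_saturation(current_fraction) * doubling_time_years


def implied_doubling_time(years_until_saturation: float,
                          current_fraction: float = CURRENT_FRACTION) -> float:
    """Doubling time in years that would produce saturation after the given span."""
    return years_until_saturation / doublings_to_saturation(current_fraction)


if __name__ == "__main__":
    print(f"doublings needed: {doublings_to_saturation():.1f}")
    print(f"doubling time implied by a 100-year horizon: {implied_doubling_time(100.0):.1f} years")
```

Only about 22 doublings separate today's usage from the full solar input, so a century to saturation corresponds to power demand doubling every four and a half years or so.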

Civilizations don’t necessarily have to build megastructures of one form or another to meet such challenges, but we face the problem that the growth of the economy is tied to the growth of computation, meaning it would take a transition to a different economic model — abandoning information-driven energy growth — to reverse the trend of exponential growth in computing.

In any case, it’s exceedingly unlikely that we’ll be able to build megastructures within a century, but can we look toward the so-called ‘singularity’ to achieve a solution as artificial intelligence suddenly eclipses its biological precursor? This one is likewise intriguing:

The hypothesis of a future technological singularity is that continued growth in computing capabilities will lead to corresponding progress in the development of artificial intelligence. At some point, after the capabilities of AI far exceed those of humanity, the AI system could drive some type of runaway technological growth, which would in turn lead to drastic changes in civilization… This scenario is not without its critics, but the considerations of this paper highlight one additional difficulty. In order to develop any type of technological singularity, the AI must reach the level of superhuman intelligence, and implement its civilization-changing effects, before the onset of the computational energy crisis discussed herein. In other words, limits on the energy available for computation will place significant limits on the development of AI and its ability to instigate a singularity.

So the issues of computational growth and the energy to supply it are thorny. Let’s look in the next post at the ‘black cloud’ option that a civilization far more advanced than ours might deploy, assuming it figured out a way to get past the computing bottleneck our own civilization faces in the not all that distant future. There we’ll be in an entirely speculative realm that doesn’t offer solutions to the immediate crisis, but makes a leap past that point to consider computational solutions at the scale of the solar system that can sustain a powerful advanced culture.

The paper is Laughlin et al., “On the Energetics of Large-Scale Computation using Astronomical Resources.” Full text. Laughlin also writes about the concept on his oklo.org site.

Parsing Exoplanet Weather

Although it seems so long ago as to have been in another century (which it actually almost was), the first detection of an exoplanet atmosphere came in the discovery of sodium during a transit of the hot Jupiter HD 209458b in 2002. To achieve it, researchers led by David Charbonneau used the method called transmission spectroscopy, in which they analyzed light from the star as it passed through the atmosphere of the planet. Since then, numerous other compounds have been found in planetary atmospheres, including water, methane and carbon dioxide.

Scientists also expect to find the absorption signatures of metallic compounds in hot Jupiters, and these have been detected in brown dwarfs as well as ultra-hot Jupiters. Now we have new work out of SRON Netherlands Institute for Space Research and the University of Groningen. Led by Marrick Braam, a team of astronomers has found evidence for chromium hydride (CrH) in the atmosphere of the planet WASP-31b, a hot Jupiter with a temperature of about 1200° C in the twilight region where light from the star passes through the atmosphere during a transit.

This is an interesting find because it has implications for weather. The temperature in this region is just where chromium hydride transitions from a liquid to a gas at the corresponding pressure in the atmosphere, making it a potential weather-maker just as water is for Earth. Braam speaks of the possibility of clouds and rain coming out of this, but hastens to add that while his team found chromium hydride with Hubble and Spitzer data, it found none in data from the Very Large Telescope (VLT). That would place the find in the realm of ‘evidence’ rather than proof.

Even so, it gives us something interesting to work with, assuming we do get the James Webb Space Telescope into space later this year. Co-author and SRON Exoplanets program leader Michiel Min notes the significance of the orbital lock hot Jupiters fall into:

“Hot Jupiters, including WASP-31b, always have the same side facing their host star. We therefore expect a day side with chromium hydride in gaseous form and a night side with liquid chromium hydride. According to theoretical models, the large temperature difference creates strong winds. We want to confirm that with observations.”

Image: A hot Jupiter crossing the face of its star as seen from Earth. Credit: ESA/ATG medialab, CC BY-SA 3.0 IGO.

WASP-31b orbits an F star at a distance of 0.047 AU and is one of the lowest density exoplanets yet found, with a mass of 0.478 that of Jupiter and a radius 1.549 times Jupiter’s. Potassium has already been found in its atmosphere as well as evidence for aerosols in the form of clouds and hazes, with some evidence for water vapor and ammonia. Braam and team analyzed previously available transmission data from Hubble and Spitzer using a software retrieval code called TauRex (Tau Retrieval for Exoplanets), reporting “the first statistical evidence for the signatures of CrH in an exoplanet atmosphere.” The paper goes on to note:

The evidence for CrH naturally follows from its presence in brown dwarfs and is expected to be limited to planets with temperatures between 1300 and 2000 K. Cr-bearing species may play a role in the formation of clouds in exoplanet atmospheres, and their detection is also an indication of the accretion of solids during the formation of a planet.
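The low density of WASP-31b mentioned above follows directly from its mass and radius. Here is a minimal sketch that scales from Jupiter's mean density of about 1.326 g/cm³; the function name is my own.

```python
JUPITER_MEAN_DENSITY_G_CM3 = 1.326  # Jupiter's bulk density in g/cm^3


def bulk_density_g_cm3(mass_mjup: float, radius_rjup: float) -> float:
    """Mean density of a planet given mass and radius in Jupiter units."""
    # Density scales as mass / radius^3 relative to Jupiter.
    return JUPITER_MEAN_DENSITY_G_CM3 * mass_mjup / radius_rjup ** 3


if __name__ == "__main__":
    density = bulk_density_g_cm3(mass_mjup=0.478, radius_rjup=1.549)
    print(f"WASP-31b mean density: {density:.2f} g/cm^3")
```

The result is about 0.17 g/cm³, far below the density of water and roughly a quarter of Saturn's.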

The paper is Braam et al., “Evidence for chromium hydride in the atmosphere of hot Jupiter WASP-31b,” accepted at Astronomy & Astrophysics. Abstract / Full Text.

Magnetic Reconnection in New Thruster Concept

At the Princeton Plasma Physics Laboratory (PPPL) in Plainsboro, New Jersey, physicist Fatima Ebrahimi has been exploring a plasma thruster that, on paper at least, appears to offer significant advantages over the kind of ion thruster engines now widely used in space missions. As opposed to electric propulsion methods, which draw a current of ions from a plasma source and accelerate it using high voltage grids, a plasma thruster generates currents and potentials within the plasma itself, thus harnessing magnetic fields to accelerate the plasma ions.

What Ebrahimi has in mind is to use magnetic reconnection, a process observed on the surface of the Sun (and also occurring in fusion tokamaks), to accelerate the particles to high speeds. The physicist found inspiration for the idea in PPPL’s ongoing work in fusion. Says Ebrahimi:

“I’ve been cooking this concept for a while. I had the idea in 2017 while sitting on a deck and thinking about the similarities between a car’s exhaust and the high-velocity exhaust particles created by PPPL’s National Spherical Torus Experiment (NSTX). During its operation, this tokamak produces magnetic bubbles called plasmoids that move at around 20 kilometers per second, which seemed to me a lot like thrust.”

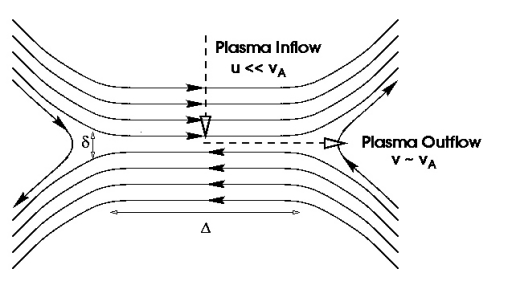

In magnetic reconnection, magnetic field lines converge, separate, and join together again, producing energy that Ebrahimi believes can be applied to a thruster. Whereas ion thrusters propelling plasma particles via electric fields can produce a useful but low specific impulse, the magnetic reconnection thruster concept can, in theory, generate exhausts with velocities of hundreds of kilometers per second, roughly ten times the capability of conventional thrusters.
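To see why exhaust velocity matters so much, here is a short sketch that converts exhaust velocity to specific impulse and uses the Tsiolkovsky rocket equation to get the propellant fraction for a given velocity change. The 20 km/s value is the plasmoid speed Ebrahimi quotes; the 200 km/s value and the 50 km/s mission delta-v are hypothetical numbers chosen only for illustration.

```python
import math

G0_M_PER_S2 = 9.80665  # standard gravity, used to define specific impulse


def specific_impulse_s(v_exhaust_km_s: float) -> float:
    """Specific impulse in seconds for an exhaust velocity in km/s."""
    return v_exhaust_km_s * 1000.0 / G0_M_PER_S2


def propellant_fraction(delta_v_km_s: float, v_exhaust_km_s: float) -> float:
    """Fraction of initial mass that must be propellant (Tsiolkovsky equation)."""
    return 1.0 - math.exp(-delta_v_km_s / v_exhaust_km_s)


if __name__ == "__main__":
    mission_delta_v = 50.0  # km/s, hypothetical mission requirement
    for v_exhaust in (20.0, 200.0):  # 200 km/s stands in for "hundreds of km/s"
        print(f"v_e = {v_exhaust:5.0f} km/s: Isp = {specific_impulse_s(v_exhaust):8.0f} s, "
              f"propellant fraction = {propellant_fraction(mission_delta_v, v_exhaust):.2f}")
```

Raising the exhaust velocity by a factor of ten cuts the propellant share of the spacecraft dramatically for the same mission, which is the practical payoff of the concept if it can be built.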

Image: Magnetic reconnection refers to the breaking and reconnecting of oppositely directed magnetic field lines in a plasma. In the process, magnetic field energy is converted to plasma kinetic and thermal energy. Credit: Magnetic Reconnection Experiment.

Writing up the concept in the Journal of Plasma Physics, Ebrahimi notes that this thruster design allows thrust to be regulated through changing the strength of the magnetic fields. The physicist describes it as turning a knob to fine-tune the velocity by the application of more electromagnets and magnetic fields. In addition, the new thruster ejects both plasma particles and plasmoids, the latter a component of power no other thruster can use.

If we could build a magnetic reconnection thruster like this, it would allow flexibility in the plasma chosen for a specific mission, with the more conventional xenon used in ion thrusters giving way to a range of lighter gases. Ebrahimi’s 2017 work showed how magnetic reconnection could be triggered by the motion of particles and magnetic fields within a plasma, with the accompanying production of plasmoids. Her ongoing fusion research investigates the use of reconnection to both create and confine the plasma that fuels the reaction without the need for a large central magnet.

Image: PPPL physicist Fatima Ebrahimi in front of an artist’s conception of a fusion rocket. Credit: Elle Starkman, PPPL Office of Communications, and ITER.

Thus research into plasmoids and magnetic reconnection filters down from the investigation of fusion into an as yet untested concept for propulsion. It’s important to emphasize that we are, to say the least, in the early stages of exploring the new propulsion concept. “This work was inspired by past fusion work and this is the first time that plasmoids and reconnection have been proposed for space propulsion,” adds Ebrahimi. “The next step is building a prototype.”

The paper is Ebrahimi, “An Alfvenic reconnecting plasmoid thruster,” Journal of Plasma Physics Vol. 86, Issue 6 (21 December 2020). Abstract.

The Xallarap Effect: Extending Gravitational Microlensing

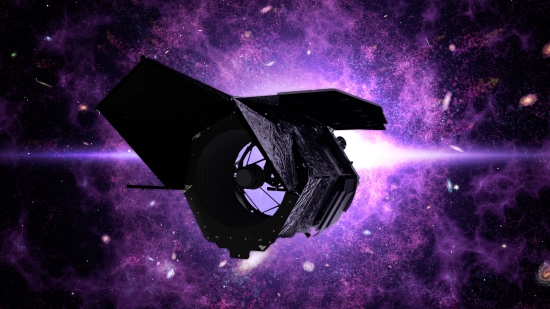

‘Xallarap’ is parallax spelled backward (at least it’s not another acronym). And while I doubt the word will catch on in common parlance, the effect it stands for is going to be useful indeed for astronomers using the Nancy Grace Roman Space Telescope. This is WFIRST — the Wide Field Infrared Survey Telescope — under its new name, a fact I mention because I think this is the first time we’ve talked about the mission since the name change in 2020.

Image: High-resolution illustration of the Roman spacecraft against a starry background. Credit: NASA’s Goddard Space Flight Center.

While a large part of its primary mission will be devoted to dark energy and the growth of structure in the cosmos, a significant part of the effort will be directed toward gravitational microlensing, which should uncover thousands of exoplanets. This is where the xallarap effect comes in. It’s a way of drawing new data out of a microlensed event, so that while we can continue to observe planets around a nearer star as it aligns with a background star, we will also be able to find large planets and brown dwarfs orbiting the more distant stars themselves.

Let’s back up slightly. The gravitational microlensing we’ve become familiar with relies on a star crossing in front of a more distant one, a chance alignment that causes light from the farther object to bend, a result of the curvature of spacetime deduced by Einstein. The closer star acts as a lens, making light from the background star appear magnified, and the analysis of that magnified light can also show the signature of a planet orbiting the lensing star. It’s a method sensitive to planets as small as Mars.

Moreover, gravitational microlensing makes it possible to see planets in a wide range of orbits. While the alignment events are one-off affairs — the stars from our vantage point are not going to be doing this again — we do have the benefit of being able to detect analogs of most of the planets in our own system. What Shota Miyazaki (Osaka University) and colleagues have demonstrated in a new paper is that ‘hot Jupiters’ and brown dwarfs will also be detectable around the more distant star. Xallarap is their coinage.

David Bennett leads the gravitational microlensing group at NASA GSFC:

“It’s called the xallarap effect, which is parallax spelled backward. Parallax relies on motion of the observer – Earth moving around the Sun – to produce a change in the alignment between the distant source star, the closer lens star and the observer. Xallarap works the opposite way, modifying the alignment due to the motion of the source.”

Image: This animation demonstrates the xallarap effect. As a planet moves around its host star, it exerts a tiny gravitational tug that shifts the star’s position a bit. This can pull the distant star closer and farther from a perfect alignment. Since the nearer star acts as a natural lens, it’s like the distant star’s light will be pulled slightly in and out of focus by the orbiting planet. By picking out little shudders in the starlight, astronomers will be able to infer the presence of planets. Credit: NASA’s Goddard Space Flight Center.
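A toy model makes the idea concrete. The sketch below uses the standard point-lens magnification formula, A(u) = (u² + 2) / (u √(u² + 4)), where u is the lens-source separation in Einstein radii, and adds a small sinusoidal wobble to the source position to mimic the tug of an orbiting companion. The wobble is a one-dimensional simplification of my own, all parameter values are hypothetical, and this is not the fitting method of the Miyazaki paper.

```python
import math


def magnification(u: float) -> float:
    """Point-source, point-lens magnification at separation u (Einstein radii)."""
    return (u * u + 2.0) / (u * math.sqrt(u * u + 4.0))


def separation(t: float, t0: float, t_e: float, u0: float,
               wobble_amp: float = 0.0, wobble_period: float = 1.0) -> float:
    """Lens-source separation at time t (days), with an optional source wobble."""
    tau = (t - t0) / t_e  # motion along the track, in Einstein-radius crossing times
    # Toy xallarap term: the source's reflex motion nudges the impact parameter.
    u_perp = u0 + wobble_amp * math.sin(2.0 * math.pi * (t - t0) / wobble_period)
    return math.hypot(tau, u_perp)


def light_curve(times, **params):
    """Magnification at each time for the given event parameters."""
    return [magnification(separation(t, **params)) for t in times]


if __name__ == "__main__":
    times = [day * 0.5 for day in range(-10, 11)]
    plain = light_curve(times, t0=0.0, t_e=20.0, u0=0.1)
    wobbly = light_curve(times, t0=0.0, t_e=20.0, u0=0.1,
                         wobble_amp=0.01, wobble_period=2.0)
    for t, a, b in zip(times, plain, wobbly):
        print(f"t = {t:+5.1f} d  A = {a:7.3f}  A with wobble = {b:7.3f}")
```

The wobble shows up as a small periodic ripple on top of the smooth microlensing peak, and it is largest when the magnification is high, which is why short-period, massive companions are the easiest to catch this way.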

The Roman telescope will be digging into the Milky Way’s central bulge in search of such objects as well as the thousands of exoplanets expected to be found through the older microlensing method, which xallarap complements. And while microlensing works best at locating planets farther from their star than the orbit of Venus, xallarap appears to be better suited for massive worlds in tight orbits, which produce the biggest tug on the host star.

This will include the kind of ‘hot Jupiters’ we’ve found before but still have problems explaining in terms of their formation and possible migration, so finding them through the new method should add usefully to the dataset. In a similar way, the Roman instrument’s data on brown dwarfs found through xallarap should extend our knowledge of multiple star systems that include these objects, which are more massive than Jupiter but roughly the same radius.

Given that the view toward galactic center takes in stars that formed as much as 10 billion years ago, the Roman telescope will be extending the exoplanet search significantly. Until now, we’ve homed in on stars no more than a few thousand light years out, the exception being those previously found through microlensing. The xallarap effect will complement the mission by helping us find older planets and brown dwarfs that fill in our knowledge of how such systems evolve. How long can a hot Jupiter maintain its tight orbit? How frequently will we find objects like these around ancient stars?

We should start getting useful data from both xallarap and more conventional microlensing some time in the mid-2020s, when the Roman instrument is due to launch. Nice work by Miyazaki and team.

The paper is Miyazaki et al., “Revealing Short-period Exoplanets and Brown Dwarfs in the Galactic Bulge Using the Microlensing Xallarap Effect with the Nancy Grace Roman Space Telescope,” Astronomical Journal Vol. 161, No 2 (25 January 2021). Abstract.

How Common Are Giant Planets around Red Dwarfs?

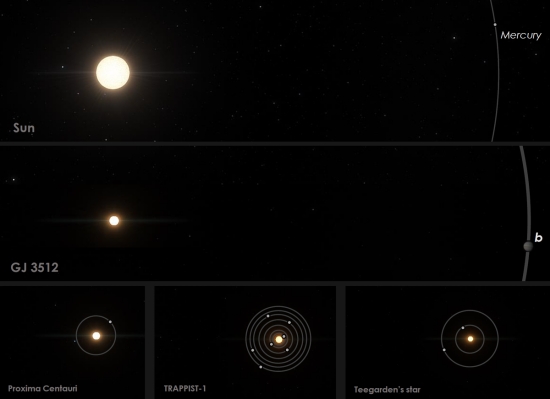

A planet like GJ 3512 b is hard to explain. Here we have a gas giant that seems to be the result of gravitational instabilities inside the ring of gas and dust that circles its star. This Jupiter-like world is unusual because of the ratio between planet and star. The Sun, for example, is about 1050 times more massive than Jupiter. But for GJ 3512 b, that ratio is 270, a reflection of the fact that this gas giant orbits a red dwarf with about 12 percent of the Sun’s mass. How does a red dwarf produce a debris disk that allows such a massive planet to grow?
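The two ratios above pin down the planet's mass. A minimal sketch, using the standard Sun-to-Jupiter mass ratio of about 1047 and the star's mass of 0.12 solar masses; the function name is mine.

```python
SUN_TO_JUPITER_MASS_RATIO = 1047.35  # solar mass divided by Jupiter mass


def planet_mass_mjup(star_mass_msun: float, star_to_planet_ratio: float) -> float:
    """Planet mass in Jupiter masses from the stellar mass and star/planet mass ratio."""
    star_mass_mjup = star_mass_msun * SUN_TO_JUPITER_MASS_RATIO
    return star_mass_mjup / star_to_planet_ratio


if __name__ == "__main__":
    mass = planet_mass_mjup(star_mass_msun=0.12, star_to_planet_ratio=270.0)
    print(f"GJ 3512 b: about {mass:.2f} Jupiter masses")
```

That works out to a little under half the mass of Jupiter, orbiting a star only about 126 times as massive as Jupiter itself.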

Image: Comparison of GJ 3512 to the Solar System and other nearby red-dwarf planetary systems. Planets around solar-mass stars can grow until they start accreting gas and become giant planets such as Jupiter, in a few millions of years. However, up to now astronomers suspected that, except for some rare exceptions, small stars such as Proxima, TRAPPIST-1, Teegarden’s star, and GJ 3512 were not able to form Jupiter mass planets. Credit: © Guillem Anglada-Escude – IEEC/Science Wave, using SpaceEngine.org (Creative Commons Attribution 4.0 International; CC BY 4.0).

I bring up GJ 3512 b (discovered in 2019) as an example of the kind of anomaly that highlights the gaps in our knowledge. While we often talk about red dwarf systems in these pages, the processes of planet formation around these stars remain murky. M-dwarfs may make up 80 percent of the stars in the Milky Way, but they host a scant 10 percent of the exoplanets we’ve found thus far. To tackle this gap, a team of scientists led by Nicolas Kurtovic (Max Planck Institute for Astronomy, Heidelberg) has released the results of its analysis of six very low mass stars in the Taurus star-forming region. These are stars with mass less than 20% that of the Sun.

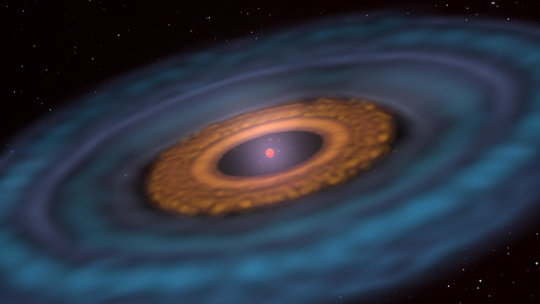

The new work relies on data from the Atacama Large Millimeter/submillimeter Array (ALMA), taken at a wavelength of 0.87 millimeters in order to trace dust and gas in the disk around these stars at an angular resolution of 0.1 arcseconds. What’s intriguing here are the signs of ring-like structures in the dust that extend between 50 and 90 AU from the stars, which reminds us of similar, much larger disks around more massive stars. Half of the disks the Kurtovic team studied showed structures at these distances. The consensus is that such rings are markers for planets in the process of formation as they accumulate gas and dust.

Image: Artistic representation of a planet-forming disk of dust and gas around a very low-mass star (VLMS). The inner dust disk contains a ring structure that indicates the formation of a new planet. The dust disk resides inside a larger gas disk whose thickness increases towards the edge. Credit: MPIA graphics department.

In the case of the M-dwarfs under study, the gaps in the rings being cleared by these planets would require worlds about as massive as Saturn, an indication that the material for gas giant formation is available. But time is a problem: Inward-moving dust evaporates close to the star, and in the case of red dwarfs, the migration is twice as fast as for more massive stars. There is little time in such a scenario for the planetary embryos needed for core accretion to form.

This is, I think, the key point in the paper, which notes the problem and explains current thinking:

The core accretion scenario for planet formation assumes collisional growth from sub-µm-sized dust particles from the interstellar medium (ISM) to kilometer-sized bodies or planetesimals… The collisions of particles and their dynamics within the disk are regulated by the interaction with the surrounding gas. Different physical processes lead to collisions of particles and their potential growth, such as Brownian motion, turbulence, dust settling, and radial drift…

Much depends upon the star in question. The paper continues (italics mine):

All of these processes have a direct or indirect dependency on the properties of the hosting star, such as the temperature and mass. For instance, from theoretical calculations, settling and radial drift are expected to be more efficient in disks around VLMS [Very Low Mass Stars] and BDs [Brown Dwarfs], with BD disks being 15-20% flatter and with radial drift velocities being twice as high or even more in these disks compared to T-Tauri disks.

The authors estimate that the ringed structures they’ve found around three of the red dwarfs under study formed approximately 200,000 years before the dust would have migrated to the central star. So planet formation must be swift: The planetary embryos need to accumulate enough mass to create gaps in the disk, which effectively block the dust from further inward migration. Without these gaps, the likelihood of planet formation drops.

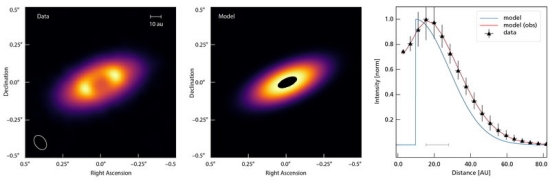

Image: Observational data and model of the dust disk around the VLMS MHO6. Left: Image of the dust disk. Middle: The disk model with a 20 au wide central hole, which is consistent with a Saturn-mass planet located at a distance of 7 au from the star, accreting disk material. Right: Radial profile of the model (blue) and after convolving it with the telescope’s angular resolution (red). The black symbols represent the data obtained from the measured brightness distribution. The grey bar corresponds to the angular resolution of the observations. Credit: Kurtovic et al./MPIA.

Remember, we only have six stars to work with here, and the other three stars under investigation are likewise problematic. They appear to show dust concentrations between 20 and 40 AU from their stars, but in all three cases lack structure that can be defined by ALMA. Better resolution would, the authors believe, tease out rings inside even these smaller disks.
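To see why the smaller disks resist analysis, it helps to convert ALMA’s 0.1 arcsecond resolution into a physical scale. The sketch below uses the small-angle relation (one arcsecond at one parsec subtends one AU) and assumes a distance of about 140 parsecs to the Taurus region, a commonly quoted round figure that does not come from the paper as discussed here. It also treats the quoted disk figures as radial distances, and the function and variable names are purely illustrative.

```python
# ASSUMPTION: ~140 pc is a commonly quoted round distance to Taurus;
# it is not a figure taken from the article or from Kurtovic et al.
ASSUMED_TAURUS_DISTANCE_PC = 140.0
ALMA_RESOLUTION_ARCSEC = 0.1    # angular resolution quoted in the text


def resolution_in_au(theta_arcsec, distance_pc):
    """Small-angle relation: 1 arcsec at 1 pc subtends 1 AU."""
    return theta_arcsec * distance_pc


beam_au = resolution_in_au(ALMA_RESOLUTION_ARCSEC, ASSUMED_TAURUS_DISTANCE_PC)
print(f"One resolution element spans about {beam_au:.0f} AU")

# Disk scales mentioned in the text, treated here as radial distances in AU.
for label, radius_au in [("compact disk, inner", 20), ("compact disk, outer", 40),
                         ("ringed disk, inner", 50), ("ringed disk, outer", 90)]:
    print(f"{label}: {radius_au} AU = {radius_au / beam_au:.1f} resolution elements")
```

Under that assumed distance a single resolution element covers roughly 14 AU, so a disk only 20 to 40 AU in extent is sampled by just a few elements, too few to separate a ring from a gap.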

But the paper acknowledges that disks around stars that are still lower in mass demand that inward migration be reduced through ‘gas pressure bumps’ that can trap dust efficiently. Here’s the process (once again, the italics are mine):

The presence of pressure bumps produces substructures, such as rings, gaps, spiral arms, and lopsided asymmetries, with a different amplitude, contrasts, and locations depending on the origin of the pressure variations… Currently, due to sensitivity limitations, most of our observational knowledge about substructures comes from bright (and probably massive) disks, such as the DSHARP sample… A less biased sample of ALMA observations of disks in the star-formation region of Taurus has demonstrated that at least 33% of disks host substructures at a resolution of 0.1”, and the disks that do not have any substructures are compact (dust disk radii lower than ≲50 au…). It remains an open question if compact disks are small because they lack pressure bumps or because current observations lack the resolution to detect rings and gaps in these disks.

Such pressure bumps are a workable explanation but one without the kind of observational evidence we’ll need as we continue to investigate planet formation around low-mass stars and brown dwarfs. We are a long way from having a fully developed model for planet formation in this environment, and the Kurtovic et al. paper drives the point home. On a matter as fundamental as how common planets around red dwarfs are, we are still in the early stages of data gathering.

The paper is Kurtovic, Pinilla, et al., “Structures of Disks around Very Low Mass Stars in the Taurus Star-Forming Region,” Astronomy & Astrophysics, 645, A139 (2021). Abstract / Preprint.