Centauri Dreams

Imagining and Planning Interstellar Exploration

A Planet Reborn?

Objects that seem younger than they ought to be attract attention. Take the so-called ‘blue stragglers.’ Found in open or globular clusters, they’re more luminous than the cluster stars around them, defying our expectation that stars that formed at about the same time should develop consistent with their neighbors. Allan Sandage discovered the first blue stragglers back in 1953 while working on the globular cluster M3. Because blue stragglers are more common in the dense core regions of globular clusters, they may be binary stars that have merged, but a number of theories exist, most of them focusing on interactions within a given cluster.

Image: The center of globular cluster NGC 6397, in an image taken by the Hubble Space Telescope. Credit: Francesco Ferraro (Bologna Observatory), ESA, NASA.

Now we may have found a planet that seems to be younger than it ought to be. Michael Jura (UCLA) and team report on the results in the Astrophysical Journal Letters, making the case that a planet orbiting an aging red giant may feed off mass flowing outward from its dying primary. A red giant can lose half its mass or more as it makes the transition to white dwarf, with sheets of material flowing into the outer system where aging gas giants may lurk.

Consider the white dwarf PG 0010+280, noted for its unexpectedly bright infrared signature in WISE (Wide-field Infrared Survey Explorer) mission data examined by UCLA student Blake Pantoja. Spitzer observations from 2006 confirmed the excess of infrared, which could come from a small companion star (conceivably a brown dwarf) or a planet that has been rejuvenated by the inflow. The paper is careful to examine a range of alternatives, but further work is definitely called for given that this might represent planetary survival after the red giant phase.

At this point, we don’t have a good understanding of how common planets are around white dwarfs. Direct imaging has turned up a large gas giant at about 2500 AU orbiting the white dwarf WD 0806-661, and we also have theoretical calculations showing that planets can survive a red giant phase and remain in orbit around a white dwarf if they’re more than several AU out. Systems like this look to be unstable, with surviving minor planets eventually accreting onto the white dwarf to produce an enriched stellar atmosphere or a disk around the star.

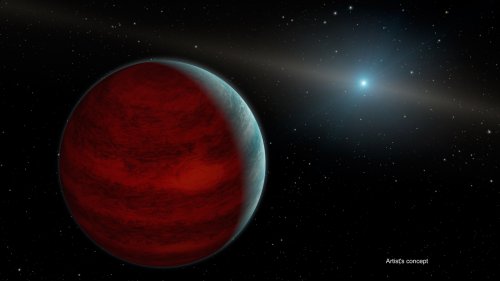

Image: This artist’s concept shows a hypothetical “rejuvenated” planet — a gas giant that has reclaimed its youthful infrared glow. NASA’s Spitzer Space Telescope found tentative evidence for one such planet around a dead star, or white dwarf, called PG 0010+280 (depicted as white dot in illustration). Credit: NASA/JPL-Caltech.

Digging around in the new paper, I learn that we find dust disks around about four percent of white dwarfs, with all of those so far studied also showing an atmosphere enriched with heavy elements like oxygen, magnesium, silicon and iron. These elements have been assumed to be leftover materials from asteroids pulled apart by gravitational forces. An excess at infrared wavelengths is one way researchers have looked for low-mass companions around white dwarfs, and the first assumption about PG 0010+280 surely went in the same direction.

But as we learn, this is one white dwarf that does not fit the model for asteroid disks well. PG 0010+280 shows an infrared excess but no sign of heavy elements. From the paper:

Its unique infrared color as well as the non-detection of heavy elements with high-resolution spectroscopic observations suggest a possible alternative origin than white dwarfs with infrared excess from a circumstellar disk. From fitting the SED [Spectral Energy Distribution], we can not exclude models of either an opaque dust disk within the tidal radius or a substellar object at 1300 K, from an irradiated object or a re-heated planet. Future observations, particularly with spectroscopic observations in the ultraviolet and near-infrared, could reveal the nature of the infrared excess.

To fit the data, a cool brown dwarf would need a radius too small to produce the observed infrared excess, while a hot brown dwarf (re-heated during the red giant phase) would be too hot. A re-heated giant planet remains a real possibility, and the paper adds that spectroscopy in the near-infrared might be able to detect the signature of such a world, whose rejuvenation would leave a huge amount of dust in its atmosphere. “Due to possible accretion of carbon-rich material, the composition of the object’s atmosphere could be substantially modified and display CH4 and CO.”

A gas giant like the one hypothesized here would be about ten times the size of its primary — white dwarfs are about the size of the Earth, and as this JPL news release notes, such a star would be small enough to easily fit inside the Great Red Spot on Jupiter.

The paper is Xu et al., “A Young White Dwarf with an Infrared Excess,” Astrophysical Journal Letters Vol. 806, No. 1 (abstract / preprint). Thanks to Ashley Baldwin for the pointer on this one.

The Zoo Hypothesis as Thought Experiment

Imagine a civilization one million years old. As Nick Nielsen points out in today’s essay, the 10,000 year span of our terrestrial civilization would only amount to one percent of the older culture’s lifetime. The ‘zoo hypothesis’ considers extraterrestrials studying us as we study animals in controlled settings. Can a super-civilization study a planetary culture for the whole course of its technological development? Nielsen, an author and strategic analyst, runs a thought experiment on two possible courses of observation, asking how we would be perceived by outsiders, and how they might relate us to the history of their own development.

by J. N. Nielsen

In 1973 John A. Ball wrote a paper published in Icarus called “The Zoo Hypothesis” in which he posited an answer to the Fermi paradox involving the deliberate non-communication of advanced ETI (extraterrestrial intelligence) elsewhere in our universe:

“…the only way that we can understand the apparent non-interaction between ‘them’ and us is to hypothesize that they are deliberately avoiding interaction and that they have set aside the area in which we live as a zoo. The zoo hypothesis predicts that we shall never find them because they do not want to be found and they have the technological ability to insure this.” [1]

Suppose we were being observed at a distance by alien beings. We already have a chilling description of this from the nineteenth century in the opening passage of H. G. Wells’ The War of the Worlds:

“No one would have believed in the last years of the nineteenth century that this world was being watched keenly and closely by intelligences greater than man’s and yet as mortal as his own; that as men busied themselves about their various concerns they were scrutinised and studied, perhaps almost as narrowly as a man with a microscope might scrutinise the transient creatures that swarm and multiply in a drop of water. With infinite complacency men went to and fro over this globe about their little affairs, serene in their assurance of their empire over matter. It is possible that the infusoria under the microscope do the same. No one gave a thought to the older worlds of space as sources of human danger, or thought of them only to dismiss the idea of life upon them as impossible or improbable. It is curious to recall some of the mental habits of those departed days. At most terrestrial men fancied there might be other men upon Mars, perhaps inferior to themselves and ready to welcome a missionary enterprise. Yet across the gulf of space, minds that are to our minds as ours are to those of the beasts that perish, intellects vast and cool and unsympathetic, regarded this earth with envious eyes, and slowly and surely drew their plans against us.”

Like many responses to the Fermi paradox, the zoo hypothesis and its variants make assumptions about the motivations of ETI, and among the first responses to these responses is to question any projection of human motivations onto ETIs. Prior to a survey of the universe entire, with all its life and intelligences (if any), which survey will come (if at all) at a much later stage in the development of our own civilization, the most we can do is to formulate a range of possible ETI motivations (including motivations that do not exist for us, and which must remain unknown unknowns for the time being) and attempt to weight them appropriately in any assessment of the possibility of human/ETI interaction.

The problem of alien minds raises the traditional philosophical difficulty known as the problem of other minds to an even more inaccessible reach of mystery—but this is a philosophical and scientific mystery, not an impenetrable religious mystery [2], and as such we can approach the problem rationally. While we have as yet no empirical basis on which to speculate about alien minds, we can make some deductions about the behaviors and practices of ETIs that have developed an advanced civilization.

Any ETI capable of SETI/METI or interstellar travel would have to have developed something like science (regardless of the motivation for having developed something like science), and science-like activity presupposes science-like observation. Any ETI technologically capable of observing our planet, whether from near or from far, would have developed protocols of observation under controlled conditions, so we could expect the observation of our planet by some ETI to have a science-like character.

In a zoo, we are able to observe the development of individuals of other species. A contemporary zoo does not exist on a scale of time that allows us to observe the evolution of species [3], and is built more for entertainment than for science, but if our terrestrial civilization endures for a sufficient period of time we may yet develop zoo-like institutions that endure over biological scales of time and that allow us to observe not only the development of the individuals of a species, but also the evolution of new species under controlled conditions. Given the zoo hypothesis, we might posit that an alien observer would be able to observe not only the development of individual terrestrial civilizations, but, given the idea that exocivilizations might be of far greater longevity than our civilization (a view held in common by Ball, Sagan [4], Kardashev [5], Norris [6], and many others), it might even be possible to observe the entire evolution of civilization on Earth.

The ten thousand years of terrestrial civilization would constitute only one percent of the lifespan of a million-year-old supercivilization. Ten thousand years for a million-year-old civilization would be proportional to one hundred years for a ten-thousand-year-old civilization (in each case, the observed period of time is two orders of magnitude less than the total lifespan of the civilization), and we already have scientific research programs and data collection efforts that have been in continual operation for more than a century.
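The proportion in this comparison can be checked with a few lines of arithmetic. The following is only an illustrative sketch: the function name `observed_fraction` is hypothetical, and the figures are the round numbers used in the paragraph above, not measured quantities.

```python
def observed_fraction(observed_years: float, lifespan_years: float) -> float:
    """Return the observed period as a fraction of the observer's total lifespan."""
    if lifespan_years <= 0:
        raise ValueError("lifespan_years must be positive")
    return observed_years / lifespan_years


# Round figures from the essay (assumed for illustration only).
supercivilization = observed_fraction(10_000, 1_000_000)  # watching all of terrestrial civilization
terrestrial = observed_fraction(100, 10_000)              # a century-long research program of our own

# Both ratios come out to one percent, i.e. two orders of magnitude
# below the observer's total lifespan.
print(f"{supercivilization:.0%} vs {terrestrial:.0%}")
```

On these assumed figures, a million-year-old civilization watching the whole of our history commits the same share of its lifespan as we commit to a research program that runs for a century.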

But allow me to back up for a moment and give an example of the sort of thought experiment we would like to conduct at present. A particular historical example draws on our knowledge of our own past, so that we can see ourselves as the more advanced civilization conducting a scientific survey of an earlier and less sophisticated civilization.

First Thought Experiment

Suppose, as a thought experiment in the counter-factual, that the Cuban Missile Crisis had escalated and resulted in a massive nuclear exchange between the US and the USSR. In this thought experiment, terrestrial civilization came to an end in 1962. [7] Imagine visiting the Earth, as an alien (an alien whose mind has been shaped by 21st century terrestrial science and technology), a sufficiently long period of time after this event that the radioactivity had subsided, seeing the ruins of a global civilization—empty cities, silent highways, decaying factories under a layer of dust, and some insects crawling through the rubble.

Today we have slightly more knowledge about the development of technological civilization than we possessed fifty years ago, and given that industrialized civilization is only about two hundred years old, fifty years constitutes a significant portion of that history (specifically, a quarter of it). [8] From our perspective today, we can see fifty years into the future from the Cuban Missile Crisis. We know what was to come, and so we can, in imagination, assume the perspective of a more technologically advanced civilization in assessing the ruins of the world of 1962, frozen in time by nuclear catastrophe, now a planet-wide archaeological site displaying the material culture of a planetary civilization.

Arriving in the solar system on a mission of scientific exploration, to discover why electromagnetic spectrum transmissions from Earth had suddenly ceased, an alien visitor would first find the Mariner 2 and Venera 1 probes in heliocentric orbit, along with a handful of other artificial objects, and the now-defunct terrestrial civilization would be immediately classified as one capable of interplanetary travel. The alien visitor would also find two small probes crashed on the lunar surface [9], and numerous Earth satellites, all now dead and silent.

Surveying that ruined world from orbit, the cause of the sudden end to terrestrial transmissions would be obvious: on every continent except Antarctica, the surface of Earth reveals enormous radioactive craters. A keen observer from orbit would find that all these craters are connected to each other by the remains of transportation networks, and such an observer would conclude that the greatest cities of this past civilization had once stood at these junctures. Clearly this was a civilization that had mastered both the technologies of nuclear weapons and ballistic missiles, and had developed these capacities to the point that they were able to extinguish themselves by their own efforts. At this point, the ETI can rule out a natural cause for the extinction of terrestrial civilization.

Large airfields at the edge of former urban sites, and the remains of turbojet aircraft, suggest a worldwide subsonic aviation network was functional prior to the end of the civilization. No supersonic aircraft appeared to have been in service, but the earlier discovery of space probes and artificial satellites confirmed the use of rocketry to achieve escape velocity from the planet. Subsequent investigation would reveal that the civilization of the planet had, not long before destroying itself, inserted a few individuals of their species into planetary orbit and safely returned these same individuals to the planet. Thus the dominant species had just passed several crucial thresholds toward becoming a spacefaring civilization and thereby ensuring itself against the existential risk inherent in being an exclusively planetary civilization. But it had not sufficiently mitigated the existential risk of nuclear war in order to allow it to survive.

The terrestrial civilization had possessed a rudimentary digital computing technology for less than twenty years [10], but this had not yet been fully exploited, as the breakthrough to miniaturization had not yet occurred. [11] Transistors had been invented, but not integrated circuitry. Computing was confined to large mainframes available to business and scientific research. The relative degree of the penetration of computer technology into the ordinary business of life appears to have been at a very low level. Telecommunications primarily consisted of the transmission of analog spoken language and visual signals.

The remnants of the power generation and distribution infrastructure reveal that significant effort had been made to exploit fossil fuels to power industry. A small number of nuclear reactors, all less than ten years old when they ceased operation, had been part of the global electrical grid, with more locations under construction, but this appears to have been a nascent industry not yet fully exploited. There is evidence of research into nuclear fusion, but no evidence of the use of fusion for electrical power generation.

The terrestrial civilization had mastered physical science up to the point of formulating a quantum theory to account for the smallest constituents of nature and a theory of gravity to account for the largest structures of observational cosmology, but it could not yet see its way clear to a comprehensive scientific solution to this impasse. Their physics was stalled at a level of development insufficient to comprehend (and to technologically harness) fundamental forces of cosmology. As further evidence of the civilization’s scientific progress in understanding itself and its place in the universe in scientific terms, the civilization had crossed the SETI threshold [12] and was capable of employing its technology to search for EM spectrum signals over interstellar distances, though it had not yet achieved the energy levels or industrial capacity to make itself known to the wider universe through active messaging (i.e., METI).

All in all, this was a civilization of great promise—global in extent, with the planet connected by transportation and communications infrastructure reaching all demographically significant inhabited areas, but still almost entirely reliant upon fossil fuels employed at a very low efficiency. New technologies were under development at the time of the civilization’s demise, but were far from maturity.

Image credit: Space Studies Institute.

Second Thought Experiment

A similar thought experiment can be performed in regard to our world today. With the experience of imagining our own past frozen in time, we can better appreciate the perspective of a more technologically advanced civilization objectively assessing the capacities and liabilities of the early stages of industrialization and planetary civilization. With this reflection in mind, we can dispense with the macabre conceit of a nuclear extirpation of civilization (which was only a device to dramatically facilitate the first thought experiment) and attempt to imagine an alien observer entering our solar system from outside and viewing our present level of development as a civilization. [13]

To an alien observer, terrestrial civilization would have announced its presence by its EM spectrum transmissions long before any visitor arrived in the solar system. Alerted by these transmissions to the presence of a technologically capable civilization, the alien visitor is surprised to discover that this civilization has already reached beyond its own solar system: two spacecraft, still viable and transmitting scientific data, have passed beyond the sun’s heliosheath into interstellar space. The alien visitor is impressed by the robust design, still functioning after decades, and still powered by a radioisotope thermoelectric generator. The scientific sophistication is impressive, as evidenced by the range of instrumentation, though still limited in its technological application.

As the alien visitor passes through the outer gas giant planets a few more robotic probes are encountered, more recent and more technically sophisticated than the probes first encountered, and approaching the smaller, rocky planets of the inner solar system there are dozens of robotic probes on Mars in communication with Earth. A cacophony of radio signals connects Earth to its satellites and space probes.

Passing the moon, the ETI detects signs of human visitation, but determines that this dates from several decades previously. An early promise of spacefaring has apparently been followed by a lull in development during which resources that might have gone into exploiting this early push toward space exploration were diverted to other purposes. Later, in a more careful observation of the civilization, it is found that, while military flights routinely employ supersonic aviation, an earlier attempt at commercial supersonic aviation had similarly been abandoned.

Approaching Earth, the night side of the planet is brightly lit by electrical lighting in patterns following transportation networks linking major urban centers, which latter are the brightest visible spectrum points on the surface of the planet. Airports and military installations are strong radar hot spots, while commercial communications networks are continually transmitting over many different bands of the EM spectrum, including a mixture of analog and digital signals encoding spoken language, moving visual images, and data.

The presence of visible light, infrared, and radio telescopes both in orbit and on the planet surface continues to testify to the scientific curiosity of terrestrial civilization. Despite this scientific curiosity, a comprehensive solution to physics at the largest and smallest scales still eludes the civilization, as it had in the earlier period of time considered. Anomalies have appeared within their scientific framework [14], but as yet no systematic solutions to these anomalies are available.

There is a global electricity grid supplied from a variety of energy sources, including a significant percentage of electricity from nuclear fission, but not nuclear fusion, which has not yet been mastered. The presence of a few recent large-scale solar and wind generation facilities suggests that the electrical grid is just at the beginning of a transition away from fossil fuels to renewable energy sources. The development of sustainable sources of electric generation may be compared to the early stages of development of nuclear power from the earlier time period. The increasing level of energy use is such that the supply of the planetary electrical grid by fossil fuels is having a significant impact both on the planetary climate and the social institutions by which the planet is organized.

Even as the large-scale structures of the civilization remain conflicted and inchoate, resulting in institutional friction on a civilizational scale and retarding civilizational development, enterprises likely to materially contribute to a better future for the dominant species on the whole, or which will remain relevant over historically significant scales of time, are economically marginal and involve only a vanishingly small proportion of the total population. Resources are disproportionately made available for retrograde and already antiquated industries at the cost of failing to allocate resources to industries that would facilitate the development of civilization. Much of the civilization’s ideological superstructure is dedicated to producing rationalizations for this suboptimal performance.

While the planet is superficially unified by transportation, telecommunications, and networks of production and distribution, including that for electricity, there is a profound gulf between the ways of life of peoples who have full access to the industrialized economy and those whose relationship to the industrialized economy is only tangential. While the dominant species on the planet has been engaged in a civilizational level of social organization for approximately ten thousand years, the lives of those participating in the industrial economy have been completely transformed even while a significant portion of the population remains in conditions of subsistence agriculture, essentially undifferentiated from the conditions of life untouched by science and technology and almost unchanged over time.

This, too, is a civilization of great promise and great accomplishment, but also a civilization that is troubled in proportion to its growth. Each phase of development introduces additional problems at a civilizational scale. The ETI classifies terrestrial civilization as a “late-adopter spacefaring civilization,” since, having the capacity for large-scale extraterrestrialization [15], and having earlier employed this capacity in a limited way, the dominant species prefers to invest its not inconsiderable economic and industrial capacity in conspicuous consumption and spectacular instances of self-aggrandizement confined within a very narrow horizon of prestige requirements.

Image: A different take on humans in zoos, from a 1960 Twilight Zone episode called ‘People Are Alike All Over.’ Astronaut Roddy McDowall thinks he has found a friendly civilization when he gets to Mars, but he’s in for a surprise. Rod Serling’s closing comment: “Species of animal brought back alive. Interesting similarity in physical characteristics to human beings in head, trunk, arms, legs, hands, feet. Very tiny undeveloped brain. Comes from primitive planet named Earth. Calls himself Samuel Conrad. And he will remain here in his cage with the running water and the electricity and the central heat as long as he lives. Samuel Conrad has found The Twilight Zone.”

Comparing the Two Thought Experiments

If some ETI had visited Earth in 1962, around the time of the Cuban Missile Crisis, perhaps our closest brush with an anthropogenic existential threat, and then again in our present, about fifty years later, making observations as in the two thought experiments above, such an alien would observe both similarities and differences across the span of a half century. The same planetary industrial civilization is in place, although having attained a significantly higher level of technological maturity at the later date. However, despite technological progress, the essential problems of that civilization have remained unresolved, as though there were a total lack of interest on the part of the dominant species in addressing the most glaring sources of global catastrophic risk and existential risk.

Although the terrestrial civilization has made some indirect progress in existential risk mitigation, this has primarily come about through limiting the scope and scale of wars, which remain as pervasive as at any time in terrestrial history. The changed conditions that have lessened the risk of nuclear war, for example, are not the result of successful planetary initiative to ensure the survival of the dominant species and the biosphere on which it depends.

In the fifty years between observations, terrestrial civilization has passed from exploration of the inner solar system to sending robotic probes into interstellar space, and computing technology has passed from mainframe computers in industry and research to pervasive computing integrated into every aspect of life. The expansion of terrestrial industrial infrastructure that can be observed in this half century has resulted in an increase of several orders of magnitude of energy production and consumption, which demonstrates the vitality of the civilization, but the civilization has been very slow to learn the lessons of mitigating the unintended consequences of industrialization, and as a consequence the level of energy usage has begun to affect the entire biosphere and planetary climate. Industrial pollutants and effluents are measurable throughout the atmosphere and hydrosphere.

Much like the reduction of war risk through the limitation of the scope and scale of wars, the reductions in industrial pollution over the past fifty years have primarily come about through increasing efficiency driven by economic motives, not a purposeful focus on a rational and scientific effort to systematically address civilizational level problems. Future existential risks may not be manageable by this chaotic method, or rather lack of a method.

This is a civilization that has unquestionably achieved a planetary scale, but is utterly and completely unable to initiate and coordinate action on a planetary scale. In other words, this is a civilization that still remains in a stage in which developments are driven by accidental events, while the ability to effectively plan remains confined to meeting the immediate needs of populations (food, shelter, electrical generation, etc.). Even this ability to plan for the provision of immediate needs is compromised by extreme inequality in the allocation of goods. It is an open question whether this civilization can survive the tensions it is creating by its own uneven successes.

Will this planetary civilization endure for a period of time sufficient to advance to a further stage of development? Will it succumb to any of a range of existential risks that threaten exclusively planetary civilization, or will it be the cause of its own demise as the result of existential risks unique to industrial-technological civilization? New existential risks appear to be emerging from the vitality of the civilization at a rate that outpaces efforts at existential risk mitigation on a planetary scale.

While an advanced ETI could choose to focus on the problems and depravities that it could readily identify in terrestrial civilization, it would not be horrified, because it would see itself in the struggles of a younger civilization that it once resembled. It would only be able to recognize terrestrial problems because the more advanced ETI had passed through these stages of development in its own history—not precisely, not in any degree of detail, but in the general course of the development of civilization from a geographically local phenomenon, entirely integrated with the biological processes of a local ecosystem (i.e., biocentric civilization), to a planetary civilization forced by planetary constraints into reluctant cooperation, and eventually to a spacefaring civilization that has transcended its homeworld (and which will perhaps someday converge upon eternal intelligence).

An advanced ETI would not have any reason to fear or to despise terrestrial civilization—any more than a modern army would fear the charge of knights on horseback, or any more than contemporary engineers would despise the efforts of pyramid or cathedral builders—nor would it regard humanity and its civilization as a failure. This civilization could only be, like theirs, a work in progress. For both civilizations, young and old, come from essentially the same materials, were shaped by the same forces, and embody the same laws of nature. No species projects itself across a planet or across the cosmos without having out-competed rivals and threats and asserted itself in the face of adversity. The only thing that separates the two is that the older civilization has a longer record of successes in mitigating existential risks.

Thanks are due to Andreas Folkener for suggesting this exercise to me.

– – – – – – – – – – – – – – – – – – – – – – – – – – –

Notes

[1] John A. Ball, “The Zoo Hypothesis,” Icarus, 19, 1973, pp. 347-349. For a variation on the theme of the zoo hypothesis cf. The Wilderness Hypothesis.

[2] On the difference between religious mystery and scientific mystery cf. my post Scientific Curiosity and Existential Need.

[3] There are instances of microevolutionary change that can and have been scientifically observed, but macroevolutionary change such as would result in cladogenesis takes place on a scale of time many orders of magnitude longer than any existing scientific research program, with a few interesting exceptions such as the cichlid fishes of Lake Malawi.

[4] “…the transmitting civilization is likely to have technological and scientific capabilities immensely in excess of our own… The mere fact that they have survived the invention of technology (as we have so far) suggests that such civilizations might be very long lived…” Carl Sagan, “The Recognition of Extraterrestrial Intelligence,” Proceedings of the Royal Society of London. Series B, Biological Sciences, Vol. 189, No. 1095, A Discussion on the Recognition of Alien Life (May 6, 1975), pp. 143-153.

[5] “It is important to note that our civilization, capable of establishing contact with other civilizations, is still very young, and that its age represents an order of magnitude which is very small, possibly zero. Taking into account the fact that the Solar System is a second generation object, that its age is about 5 billion years, and that the age of the oldest objects in the Universe can be about 20 billion years, it becomes clear that the age of other civilizations (in particular the time period throughout which they have been communicating) can be enormously greater than ours.” N. S. Kardashev, “Strategy for the search for extraterrestrial intelligence,” Acta Astronautica, Vol. 6, pp. 33-46, Pergamon Press, 1979.

[6] “…if we do detect ET, the median age is of order 1 billion years. Note that, in this case, the probability of ET being less than one million years older than us is less than 1 part in 1000. Therefore, any successful SETI detection will have detected a civilisation almost certainly at least a million years older than ours, and more probably of order a billion years older.” Ray P. Norris, “How old is ET?” Acta Astronautica, Vol. 47, Nos. 2-9, pp. 731-733, 2000.

[7] The idea of a civilization-ending nuclear war was a prominent Cold War cultural theme represented, inter alia, in On the Beach (novel 1957, film 1959), Alas, Babylon (1959), A Canticle for Leibowitz (1960), and Panic in the Year Zero! (film, 1962). On the Beach is an extinction scenario, while the other works examine the collapse of civilizational scale social order in the aftermath of nuclear war. Those contemplating the possibility of the Cuban Missile Crisis resulting in a MAD scenario had these imaginary models to draw upon in considering the consequences of such an event.

[8] If the past fifty years represents approximately a quarter of the total lifespan of industrial-technological civilization, and if we take the total lifespan of agrarian-ecclesiastical civilization as being about ten thousand years, from the origins of agriculture to the industrial revolution, then a proportional period of time for the observation of agrarian-ecclesiastical civilization would be a lapse of about 2,500 years. In other words, if we were to arrive on Earth about the time of the industrial revolution (during the late eighteenth century) and look back upon the whole of civilization to date, we would have to look back to what Karl Jaspers called the Axial Age in order to grasp a proportional period of time in agrarian-ecclesiastical civilization as we today have as a perspective on the industrial-technological civilization of 1962.

[9] Luna 2 made a planned hard (crash) landing on the moon on 14 September 1959; Ranger 4 crash landed on the far side of the moon 26 April 1962, failing to return data before impact. The Ranger 3 spacecraft missed a planned flyby of the moon and instead entered into a heliocentric orbit, and presumably could be found as well by an ETI arriving from deep space, along with Venera 1 and Mariner 2. Ranger 5 launched during the Cuban Missile Crisis on 18 October 1962 (the Cuban Missile Crisis occurred during 14-28 October 1962) and it, too, missed its planned impact on the moon and entered into heliocentric orbit.

[10] The first electronic digital computer was the Colossus, built at Bletchley Park in 1943, designed by Tommy Flowers for the top secret Ultra project of breaking Axis ciphers based on the Enigma machine and its variants. Due to the secrecy of the program all the Colossus computers and plans were destroyed. The decision taken by Churchill to destroy all the Colossus computers at the end of the war was, I believe, motivated at least in part by Churchill’s heroic conception of history (on which cf. The Heroic Conception of Civilization) in which computers could be treated as a modern parallel to Greek Fire. It would be another thought experiment to consider how subsequent history might have been different if this early example of digital computing technology had been made widely available rather than being hidden from public view.

[11] The miniaturization of electronics was largely a result of the effort by the space program to make electronic components lighter for purposes of liftoff—lighter electronics meant a proportionally larger science payload to takeoff weight.

[12] Project Ozma was conducted in 1960. We now know that there are planetary systems at Tau Ceti and Epsilon Eridani, possibly in these stars’ habitable zone. If an advanced technological civilization had been present on any of these planets and had been powerfully transmitting in our direction (because they would already have been able to determine that there was a planet in our solar system with a biosphere and an atmosphere with industrial pollutants), Frank Drake would have received these signals as soon as he had switched on his apparatus.

[13] In my post Astrobiological Thought Experiment I developed another thought experiment placing ourselves in an alien zoo in a more zoo-like context; in this present scenario, human beings and their civilization are observed under conditions more like that of a wildlife sanctuary—a scenario also covered in Ball’s paper cited above: “Occasionally we set aside wilderness areas, wildlife sanctuaries, or zoos in which other species (or other civilizations) are allowed to develop naturally, i.e., interacting very little with man.”

[14] Among these anomalies I would count the absence of a unified field theory, dark matter, dark energy, the absence of a scientific theory of consciousness (a working hypothesis that could be considered the basis of an ongoing scientific research program), and even the Fermi paradox for what it implies for astrobiology. In addition to anomalies there are predictions and projections made on the basis of current science, but which go beyond the available science sensu stricto. Due to the underdetermination of theory by evidence one must expect that there will always be non sequiturs, but logical errors sometimes are transmuted into an ideological program associated with, but not identical to, science (and sometimes called “scientism”). The ideology of science can, in turn, come to retard scientific progress when it is integrated into scientific institutions and is employed as a pretext to marginalize fields of research that call that ideology into question.

[15] The ETI performing this civilizational survey can only with hesitation identify terrestrial civilization as a “late-adopter spacefaring civilization,” as there are no signs at present of a breakthrough to demographically significant extraterrestrialization, although the ETI prefers to remain optimistic, as nothing in the survey reveals a disability to pursue this course of action, only a disinclination. “Extraterrestrialization” is the term I employ for the demographically significant presence of a dominant species beyond the biosphere of its homeworld, i.e., demographically significant spacefaring. For present purposes, “demographically significant” may be defined as a biologically viable and independent population. I suppose I should adopt some other term — extraplanetary? extraplanetarization? transplanetary?—so as to avoid the reference to terrestrial civilization, which is admittedly anthropocentric.

Beaming ‘Wafer’ Probes to the Stars

The last interstellar concept I can recall with a 20-year timeline to reach Alpha Centauri was Robert Forward’s ‘Starwisp,’ an elegant though ultimately flawed idea. Proposed in 1985, Starwisp would take advantage of a high-power microwave beam that would push its 1000-meter fine carbon mesh to high velocities. As evanescent as a spider web, the craft would use wires spaced the same distance apart as the wavelength of the microwaves that drove it, which is how it could be so lightweight and yet maintain rigidity under the microwave beam.

Throw in sensors and circuitry built into the sail itself and you had no need for a separate probe payload — Starwisp was its own payload. This was conceived as a flyby mission, in which the microwaves would again bathe the craft as it neared its target, providing just enough energy to drive its communications and sensor array to return data to Earth. What a mission: Starwisp would accelerate at 115 g’s, its beam pushing it up to 20 percent of lightspeed within days.
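
As a rough sanity check on those figures, here is a minimal sketch of how long a steady 115 g push would take to reach 20 percent of lightspeed. The constant-acceleration assumption and the function name are illustrative choices of mine, not anything from Forward's paper, and the Newtonian formula is used because the relativistic correction at this speed is only a couple of percent.

```python
C = 299_792_458.0   # speed of light, m/s
G0 = 9.80665        # standard gravity, m/s^2


def time_to_speed(fraction_of_c, accel_g):
    """Seconds needed to reach fraction_of_c at a constant acceleration
    of accel_g gravities (Newtonian approximation, hypothetical helper)."""
    return fraction_of_c * C / (accel_g * G0)


t = time_to_speed(0.20, 115.0)
print(f"{t / 3600:.1f} hours of constant 115 g thrust to reach 0.2c")
```

Under this idealization the answer comes out at well under a day, so it should be read as a lower bound on the time needed rather than a mission profile; any drop in beam thrust along the way would stretch the figure.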

Addendum: I had two typos above when this ran, both now corrected thanks to a note from James Jason Wentworth who caught my error re Starwisp’s acceleration (115 g’s, not 11) and diameter (1000 meters, not 100). I need to put the coffee on earlier in the morning…

Significant cost advantages emerge in comparison to beamed laser ideas. Microwaves, Jim Benford once reminded me, are typically two orders of magnitude cheaper than lasers in terms of the optics used to broadcast and the power efficiency of the laser. But Geoffrey Landis’ subsequent work on Forward’s original concept revealed that the craft’s wires would absorb rather than reflect the microwaves, destroying the craft in a fraction of a second. The beaming system in Forward’s paper, incidentally, called for a lens 50,000 kilometers in diameter, another strike against the notion, but the idea of lightweight probes remains alive.

A Laser Beaming Infrastructure

In fact, beamed propulsion continues to have many advocates at both laser and microwave wavelengths. Philip Lubin (UC-Santa Barbara) has been awarded one of fifteen proof-of-concept grants from the NASA Innovative Advanced Concepts office. Lubin’s project is called Directed Energy Propulsion for Interstellar Exploration (DEEP-IN), and like Forward he sees it as a way to achieve, through laser beaming, a very fast mission to the nearest stars.

“One of humanity’s grand challenges is to explore other solar systems by sending probes — and eventually life,” says Lubin. “We propose a system that will allow us to take the first step toward interstellar exploration using directed energy propulsion combined with miniature probes. Along with recent work on wafer-scale photonics, we can now envision combining these technologies to enable a realistic approach to sending probes far outside our solar system.”

Image: Beamed propulsion leaves propellant behind, a key advantage. Coupled with very small probes, it could provide a path for flyby missions to the nearest stars. Credit: Adrian Mann.

A major talking point for beamed strategies is that they allow designers to keep the propellant back here in the Solar System. Moreover, build Lubin’s photon driver and you can go to work on many problems, not all of them interstellar. The beaming technology he advocates is called DE-STAR, for Directed Energy Solar Targeting of Asteroids and Exploration. Any laser powerful enough to drive even a highly miniaturized craft to 20 percent of lightspeed is also powerful enough to serve as a planetary defense against asteroids on wayward trajectories, an idea Lubin’s group studied last spring at the Planetary Defense Conference in Italy.

Imagine an orbital planetary defense system in the form of a modular phased array of kilowatt class lasers, the modular design promoting incremental development and upward scaling of the system over time. The idea is to use laser energies to raise spot temperatures on a dangerous asteroid to roughly 3000 K, which would create enough ejection of evaporated material to alter the asteroid’s orbit. A basic DE-STAR 1 could handle space debris, but the system, in Lubin’s view, scales up in useful ways. A DE-STAR 2 could divert 100-meter asteroids at distances up to 0.5AU, and the phased array configuration could create multiple beams, so a single DE-STAR could engage several threats simultaneously. Lubin says that a DE-STAR 4 would produce energies sufficient to completely vaporize a 500-meter asteroid over a year of beaming.

The NIAC grant will presumably help Lubin flesh out the details. Given this kind of power, an interstellar application seems worth investigating. In a talk Lubin gave at 2013’s Starship Congress in Dallas, he explained that a system as powerful as his DE-STAR 4 would be able to propel a 10² kg spacecraft up to 2 percent of the speed of light, shutting down after 30 AU, after which the spacecraft would coast. In the abstract for this talk, Lubin said that a far more powerful DE-STAR 6 system could push a 10⁴ kg probe “to near the speed of light allowing for interstellar probes.” If you’d like to view the talk, it’s available here.

But the NIAC work operates at the other end of the size spectrum. The description on the NIAC site says this: “We propose a system that will allow us to take a significant step towards interstellar exploration using directed energy propulsion combined with wafer scale spacecraft.”

Couple nanotechnology with highly reflective, extremely thin sail films and an Alpha Centauri flyby with flight time of 20 years becomes feasible. How long will it be until we have the needed sail materials and laser capabilities? Lubin will speak on this in August at the SPIE conference on Nanophotonics and Macrophotonics for Space Environments IX in San Diego. The session is called “Directed energy propulsion of wafer scale spacecraft for interstellar missions.”
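
To put the 20-year figure in perspective, the sketch below works out the average speed such a flyby implies. The distance of about 4.37 light-years to Alpha Centauri is my assumed value, the helper name is hypothetical, and the calculation ignores the acceleration phase and any relativistic effects.

```python
ALPHA_CEN_LY = 4.37  # approximate distance to Alpha Centauri, light-years (assumed)


def mean_speed_fraction_c(distance_ly, flight_years):
    """Average speed, as a fraction of lightspeed, needed to cover
    distance_ly light-years in flight_years years (Earth-frame time)."""
    return distance_ly / flight_years


v = mean_speed_fraction_c(ALPHA_CEN_LY, 20.0)
print(f"required mean speed: {v:.3f} c")
```

The result lands a little above 20 percent of lightspeed, the same regime quoted for Starwisp and for the laser needed to drive a highly miniaturized craft.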

What NIAC funding gives Lubin’s group at UCSB is the ability to create a detailed roadmap for such a craft, one with fully contained communications, power systems and controllable photon thrusters. Both the spacecraft and the photon driver that would propel it will be studied in terms of necessary technology development. It will be interesting to see how the Lubin team will handle the huge problems of communications at this scale, and what sort of time frame they propose for deployment of a prototype DE-STAR system.

Centauri Dreams‘ take: Miniaturized probes that could accomplish interstellar flybys would not be one-off events. The potential for ‘swarm’ technologies that would take advantage of such a laser infrastructure is immense. Here we can imagine interstellar probes one day being sent by the thousands, an interactive mesh that could give us our first close-up images of extrasolar planets.

The original Starwisp paper is Forward, “Starwisp: An Ultra-Light Interstellar Probe,” Journal of Spacecraft 22 (1985b), pp. 345-50. And see Geoffrey Landis’ significant follow up, “Advanced Solar- and Laser-Pushed Lightsail Concepts,” Final Report for NASA Institute for Advanced Concepts, May 31, 1999 (downloadable from the older NIAC site). For more on DE-STAR and propulsion, see Bible et al., “Relativistic Propulsion Using Directed Energy,” in Taylor and Cardimona, ed, Nanophotonics and Macrophotonics for Space Environments VII, Proc. of SPIE Vol. 8876, 887605 (full text). A UCSB news release on Lubin’s grant from NIAC is also available.

Capturing Sedna: A Close Stellar Encounter?

With New Horizons scheduled for its flyby of Pluto/Charon in a matter of weeks and a Kuiper Belt extended mission to follow, it’s interesting to note a new paper on objects well beyond Pluto’s orbit. Lucie Jílková (Leiden Observatory) and colleagues address the problem of Sedna and recently discovered 2012VP113. The problem they present is that even at their closest approach to the Sun, these two objects are outside the Kuiper Belt, while their aphelion distances are too short for them to be considered members of the Oort Cloud.

So where do Sedna and 2012VP113 belong in our taxonomy of the Solar System? Thirteen such objects have now been discovered, a group collectively referred to as Sednitos. These objects have orbital elements in common: A large semi-major axis (with perihelion beyond 30 AU and aphelion beyond 150 AU), a common orbital inclination, and a similar argument of perihelion. A common origin seems likely. Jílková’s team is interested in the possibility that Sedna and its ilk were captured from the outer disk of a passing star, an event that would have happened in the Solar System’s infancy in its natal star cluster.

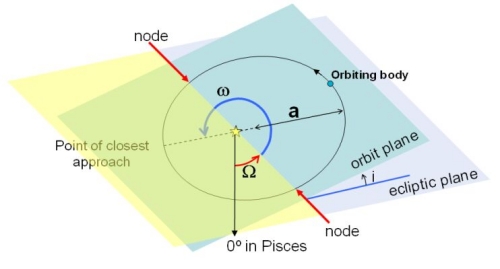

Digression: Have a look at the accompanying diagram to clarify the orbital elements that explain the orientation of an orbit around a star. Here the orbital inclination is shown by (i) — you can see that the orbital plane differs by an appreciable angle from the plane of the ecliptic. The so-called ascending node is (Ω), showing the orbiting object passing from one side of the reference plane to the other. The argument of perihelion — the angle between the ascending node and the perihelion — is shown at (ω). The image comes from Swinburne University of Technology, whose pages on orbital parameters offer an excellent overview. They form part of COSMOS, The SAO Encyclopedia of Astronomy, an online resource I frequently consult.

To make their case, the researchers simulated over 10,000 stellar encounters to constrain the parameters of the disrupting star. In each calculation, up to 20,000 ‘particles’ in the planetesimal disk were simulated, the calculations being run until at least 13 objects with perihelion of 30 AU and aphelion of 80 AU were captured by the Sun. The distributions thus generated were compared with the known population of Sednitos, a limited dataset but one that yields rough numbers for the mass of a flyby star, its distance at closest approach and its velocity.

We wind up with a star 1.8 times the Sun’s mass that would have passed by the Sun at roughly 340 AU at an inclination (relative to the ecliptic) of 17–34 degrees and a velocity of about 4.3 kilometers per second. During this encounter, the Kuiper Belt would have been disrupted, with many ice dwarfs being captured by the passing star, while others would have been pushed into the inner Oort Cloud. A large number would have been flung into the interstellar depths. The researchers estimate that the inner Oort would have acquired on the order of 440 planetesimals in the encounter, while over 900 should populate the Sednitos region.

Jílková and team believe that the orbital parameters for the Sednitos population fit the capture scenario (“Such clustering is a general characteristic of an exchanged population.”) The paper thus explains the orbital properties of Sedna and its ilk, while also contesting the theory that a distant, thus far unobserved planet is responsible for the Sednitos’ orbits. Such a planet would have been disrupted by the stellar encounter, although the researchers do not rule out the possibility that a planet that now affects their orbit could have been captured after the transfer of the Sednitos.

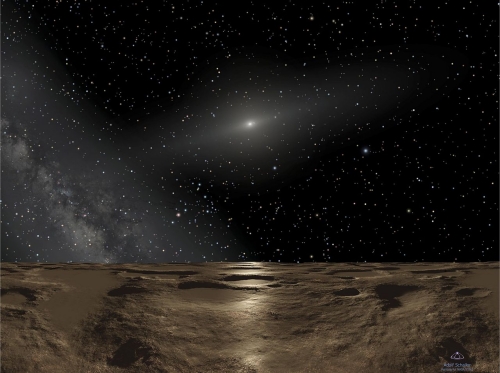

Image: This is an artist’s impression of noontime on Sedna, the farthest known planetoid from the Sun. Over 8 billion miles away, the Sun is reduced to a brilliant pinpoint of light that is 100 times brighter than the full Moon. (The Sun would actually be the angular size of Saturn as seen from Earth, way too small to be resolved with the human eye.) The dim spindle-shaped glow of dust around the Sun defines the ecliptic plane of the solar system where the major planets dwell. To the left, the hazy plane of our Galaxy, the Milky Way, stretches into the sky. The background constellations are Virgo and Libra. Credit: NASA, ESA and Adolf Schaller.

Do we have any prospect of finding the disruptive star? From the paper:

Understanding the origin of Sednitos and testing the theories for an outer planetary-mass object requires additional observations. The Gaia astrometric mission is expected to discover ~50 objects in the outer Solar System. Being a solar sibling…, the encountering star may be also discovered in the coming years in the Gaia catalogues. Having been formed in the same molecular cloud, one naively expects that the chemical composition of this star is similar to that of the Sun…

It’s an interesting thought, though the paper speculates that by now, the culprit star has probably turned into a white dwarf, an outcome that happens within two billion years for a star in the 1.8 Solar mass range. The fate of the planetesimals the passing star presumably stole from Sol’s system is probably to wander without a host, free-floating objects cast loose after the parent star blew through its red giant phase. If the paper is right, objects like Sedna could one day become the first targets from another star we ever reach with our spacecraft.

The paper is Jílková et al., “How Sedna and family were captured in a close encounter with a solar sibling,” submitted to Monthly Notices of the Royal Astronomical Society (preprint).

Charon’s ‘Dark Pole’

An abrupt change: I’m holding today’s post (about halfway done, on a stellar flyby that may have produced Sedna and other such objects long in our system’s past) to turn to New Horizons’ latest imagery, which is provocative indeed. We’ll cover the Sedna story tomorrow.

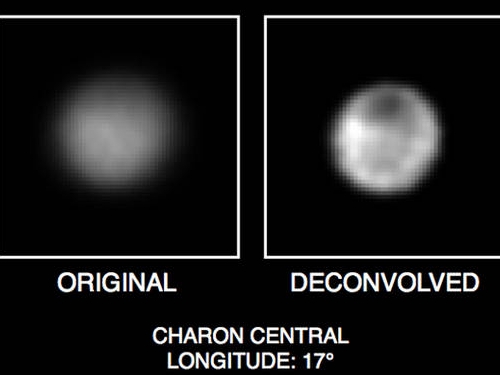

What we have from New Horizons is the work of the spacecraft’s Long Range Reconnaissance Imager (LORRI) in a series of images that show Pluto and its largest moon Charon as they more than double in size between May 29 and June 19. There’s plenty here to marvel at, but what stands out for me is the mysterious dark region that NASA’s latest release refers to as ‘a kind of anti-polar cap’ on Charon. Have a look:

Image: These recent images show the discovery of significant surface details on Pluto’s largest moon, Charon. They were taken by the New Horizons Long Range Reconnaissance Imager (LORRI) on June 18, 2015. The image on the left is the original image, displayed at four times the native LORRI image size. After applying a technique that sharpens an image called deconvolution, details become visible on Charon, including a distinct dark pole. Deconvolution can occasionally introduce “false” details, so the finest details in these pictures will need to be confirmed by images taken from closer range in the next few weeks. Credit: NASA/Johns Hopkins University Applied Physics Laboratory/Southwest Research Institute.

No wonder principal investigator Alan Stern is beside himself:

“This system is just amazing. The science team is just ecstatic with what we see on Pluto’s close approach hemisphere: Every terrain type we see on the planet—including both the brightest and darkest surface areas — is represented there, it’s a wonderland! And about Charon—wow—I don’t think anyone expected Charon to reveal a mystery like dark terrains at its pole. Who ordered that?”

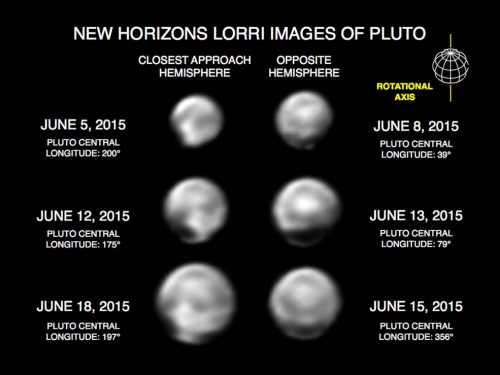

Moreover, we have these images of Pluto itself, with the encouraging news that the hemisphere over which New Horizons will make its closest approach is also the one with the greatest variety of terrain types we’ve yet seen on the dwarf planet. Here’s the Pluto imagery:

Image: These images, taken by New Horizons’ Long Range Reconnaissance Imager (LORRI), show numerous large-scale features on Pluto’s surface. When various large, dark and bright regions appear near limbs, they give Pluto a distinct, but false, non-spherical appearance. Pluto is known to be almost perfectly spherical from previous data. These images are displayed at four times the native LORRI image size, and have been processed using a method called deconvolution, which sharpens the original images to enhance features on Pluto. Credit: NASA/Johns Hopkins University Applied Physics Laboratory/Southwest Research Institute.

At left is Pluto/Charon as viewed in 1978 when USNO astronomer James Christy noticed the ‘bump’ that seemed to emerge from Pluto. This was how Charon was discovered, through imagery that, like Pluto itself in 1930, was studied on photographic plates taken in Flagstaff, AZ. Weighing only twelve percent as much as Pluto, Charon may be as much as half ice, while Pluto seems to be about 70 percent rock by mass. As this JHU/APL news release notes, an astronaut standing on Pluto’s surface would see Charon always in the same part of the sky, but it would appear seven times larger than the Earth’s moon, spanning 3.5 degrees. Now we have imagery like that above to bring both Pluto and Charon into ever tighter focus. Will we find ice volcanoes on Charon, and perhaps a trace of atmosphere?

Yarkovsky and YORP Effect Propulsion for Long-life Starprobes

Centauri Dreams regular James Jason Wentworth wrote recently with some musings about Bracewell probes, proposed by Ronald Bracewell in a 1960 paper. Bracewell conceived the idea of autonomous craft that could monitor developments in a distant solar system, perhaps communicating with any local species that developed technology. Pondering how such a craft might manage station-keeping over the aeons, Jason hit on the idea of using a natural effect that would draw little attention to itself, one he explains below. An amateur astronomer and interstellar travel enthusiast who worked at the Miami Space Transit Planetarium and volunteered at the Weintraub Observatory atop the adjacent Miami Museum of Science, Jason now makes his home in Fairbanks (AK). He was the historian for the Poker Flat Research Range sounding rocket launch facility near Fairbanks. His space history and advocacy articles have appeared in Quest: The History of Spaceflight magazine and Space News.

by James Jason Wentworth

Dreams, daydreams, and flights of fancy have far greater value than most modern people realize. (Centauri Dreams are *not* just two nice-sounding words, but instead constitute a vital and necessary prelude to, and continuing inspiration for, interstellar space flight. Without Centauri dreams, there will be no “Centauri do’s,” as in visiting that stellar system via robotic probes or crewed starships!) Besides being pleasant forms of mental play, dreams can also bring insights that are of great practical importance. Such activities are usually considered the province of poets, storytellers, and songwriters, but scientists have also been helped by them. The most well-known example of this involved Friedrich August Kekulé, a 19th century German chemist, who gained answers he was seeking about molecular configurations from two dreams that he had [1]. The more famous of these two dreams–which involved a snake-like string of atoms that formed a circle, which then transformed into a snake eating its tail–led him to the ring structure of the benzene molecule.

In January of last year, such an insight came to me regarding a new form of fuel-less spacecraft propulsion and attitude control – one that, to my knowledge, no one has suggested before. It would be something that used the forces of nature, and it would also be something subtle and non-polluting. A light sail would fit these preferences well, but it occurred to me that there was another alternative (particularly for use within star systems) which would also employ starlight but would be more subtle than a sail, not blazing forth in the skies of nearby planets. Moreover, it would have other advantages, which would be useful to long-life spacecraft of all kinds, from unmanned Earth satellites to mobile space colonies to Bracewell interstellar messenger probes. I shall explore these advantages below.

The Power of Emitted Photons

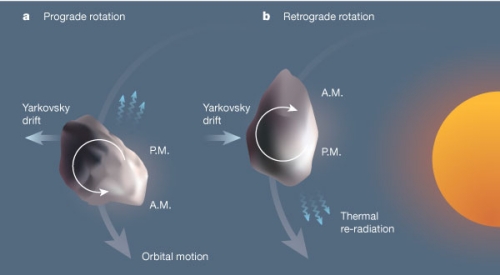

The Yarkovsky effect [2] was discovered by Ivan Yarkovsky (1844-1902), a Russian civil engineer who worked on scientific problems in his spare time. The effect imparts a very small but constant thrust to small, rotating bodies in orbit around the Sun, via the heating of the bodies’ surfaces by sunlight. As such an object rotates, its “afternoon” quadrant emits infrared photons as it cools, and this photon emission imparts an asymmetrical thrust force to the object. The Yarkovsky effect affects the orbits of meteoroids and asteroids between about 10 cm and 10 km across. (Smaller objects are heated more uniformly via internal heat transfer, which precludes the asymmetrical infrared photon emission, and larger asteroids are too massive to be affected appreciably by the infrared photon thrust.) A prograde-rotating meteoroid or asteroid (one that is rotating in the same direction that it is orbiting the Sun, counter-clockwise in the case of our solar system) gradually spirals outward away from the Sun due to the Yarkovsky effect, while a retrograde-rotating body spirals inward toward the Sun.

A related phenomenon, the Yarkovsky-O’Keefe-Radzievskii-Paddack effect (YORP effect) [3], affects the rotation rate, the rotational axis tilt, and the rotational axis precession rate in small asymmetric meteoroids and asteroids. These two effects could also be utilized by spacecraft, for fuel-less propulsion as well as attitude control.

Image: The Yarkovsky Effect: An asteroid is warmed by sunlight, its afternoon side becoming hottest. As a result, that face of the asteroid re-radiates most thermal radiation, creating a recoil force on the asteroid and causing it to drift a little. The direction of the radiation depends on whether the asteroid is rotating in a prograde (anticlockwise) manner (a) or in a retrograde (clockwise) manner (b). Credit: “Planetary science: Spin control for asteroids,” by Richard Binzel in Nature 425 (11 September 2003), 131-132.

Putting Yarkovsky to Work

The now-solved Pioneer anomaly was an unintentional demonstration of the Yarkovsky effect’s ability to impart measurable thrust to a spacecraft. The Radioisotope Thermoelectric Generators (RTGs) of the Pioneer 10 and 11 spacecraft, rather than the Sun, supplied the infrared photons, which produced a tiny thrust toward the Sun by bouncing off the back of the probes’ dish antennas. A spacecraft that was purposely designed to utilize the Yarkovsky effect (and also the YORP effect, if desired) could move (and maneuver) much more quickly than either massive, rock/metal asteroids or the “accidentally-propelled” Pioneer spacecraft. The rate of acceleration of such a spacecraft would likely be comparable to that of a solar sail, although a higher thrust/mass ratio would increase its possible acceleration rate. A spacecraft of this type might be designed as follows:

Picture a black, rotating, drum-shaped vehicle, whose spin axis is perpendicular to the plane of its orbit around the Sun. (The drum could be a “stand-off” cylinder, like Skylab’s lost meteoroid shield, which could be deployed from a central spacecraft via centrifugal force.) The vehicle would spiral away from the Sun if it rotated in a prograde direction, and it would spiral inward toward the Sun if it rotated in a retrograde direction, just as asteroids (those which are small enough to be affected by the Yarkovsky effect) do. It could also change the plane of its orbit, by tilting its spin axis to inclinations other than perpendicular to its orbit plane. Changing its spin rate and spin direction would alter the magnitude and direction of its infrared photon thrust. Reversing the vehicle’s spin direction could be accomplished either by stopping the spin and re-starting it in the opposite direction or by precessing the spin axis 180 degrees around (the latter method would be preferable for large spacecraft). Like a solar sail, a Yarkovsky/YORP effect propelled spacecraft would have a low rate of acceleration, but it could achieve very high velocities over time.
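
For a feel for the forces involved, here is a minimal sketch comparing the push on an ideal reflecting sail with the thermal recoil available to a black drum of the same sunlit cross-section. It rests on the standard result that a beam of power P carries momentum at the rate P/c. The solar flux value is approximate, and the `anisotropy` parameter (the fraction of re-emitted photon momentum that does not cancel out) and the 100 square meter area are hypothetical, illustrative values rather than properties of any real design.

```python
C = 299_792_458.0        # speed of light, m/s
SOLAR_CONSTANT = 1361.0  # sunlight flux at 1 AU, W/m^2 (approximate)


def sail_force(area_m2, flux=SOLAR_CONSTANT):
    """Force in newtons on an ideal, perfectly reflecting sail
    facing the Sun: twice the intercepted power divided by c."""
    return 2.0 * flux * area_m2 / C


def thermal_recoil_force(area_m2, anisotropy, flux=SOLAR_CONSTANT):
    """Net recoil in newtons from re-emitted heat for a black body
    that absorbs all sunlight falling on area_m2.

    anisotropy is a hypothetical fraction (0 to 1) of the emitted
    photon momentum that is not cancelled by emission in other
    directions; 0 means perfectly symmetric emission.
    """
    if not 0.0 <= anisotropy <= 1.0:
        raise ValueError("anisotropy must be between 0 and 1")
    return anisotropy * flux * area_m2 / C


area = 100.0  # illustrative sunlit cross-section, m^2
print(f"ideal sail:            {sail_force(area):.2e} N")
print(f"drum, 10% anisotropy:  {thermal_recoil_force(area, 0.10):.2e} N")
```

Under these assumptions the drum's recoil can never exceed half of what an ideal sail of the same area receives, so the design's appeal would rest on controllability, durability and low visibility rather than on raw thrust.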

The YORP effect could be utilized, if desired, to control the spacecraft’s spin rate, spin axis tilt, and spin axis precession rate (using no moving parts) by equipping the drum-shaped vehicle with short, wedge-shaped “blades” (which could, optionally, be made retractable) that would protrude from its sides. The blades could also have electronically-variable light reflectivity and absorption, like the variable-reflectivity liquid crystal steering panels on JAXA’s IKAROS solar sail. These blades would create an asymmetrical total vehicle solar illumination, which is the cause of the multiple YORP effects. As an alternative, the spacecraft’s spin rate control and spin axis pointing might be handled – again without any moving parts – by using selectively-charged wires (or other vehicle parts) to interact with the local planetary or solar magnetic fields. Or the vehicle might use magnetically-levitated, internal torque flywheels to control its spin rate and direction.

The black drum could be a soft (“quilted” quartz cloth, optionally rigidized by a vacuum-hardening pre-impregnated resin) or rigid (a folding metal or composite) outer cylinder standing off from the surface of the spacecraft, held there either by rigid struts or by tensioned cables or cords, in concert with centrifugal force. Either type could contain thermovoltaic cells to generate electricity for the spacecraft’s systems.

Photovoltaic solar cells could, however, be utilized by such a vehicle if desired. Its instruments, imaging system (if any – perhaps a spin-scan camera), and solar cells could be mounted on parts of the spacecraft “bus” that protrude above and below the ends of the black drum. Or, by using angled circumferential mirrors on the exposed ends of the bus (and metallized Kapton or other such material on the inside of the black drum), solar cells on the drum-obscured parts of the bus could be illuminated by sunlight. If a soft fabric drum were used, it would absorb some of the solar and cosmic radiation that degrades solar cells, and so would enable them to last longer.

Such a spacecraft could even use thermocouples to generate electricity for its onboard systems, exploiting the solar heat on the black drum and the cold in the shadowed areas at its ends (by placing circumferential “heat shades” between the inside wall of the black cylinder and the cold sides of the thermocouples). Thermocouples made of dissimilar refractory metals might be very long-lived electricity-generating devices for spacecraft of this type.
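How much of a temperature difference might be available? The short Python sketch below gives a rough idea under loudly stated assumptions: the drum spins fast enough to be at one uniform temperature, it absorbs sunlight over its projected area (diameter times height) and radiates only from its outer wall (pi times diameter times height), and the shaded cold side sits at a hypothetical temperature that you supply. The Carnot figure is the ideal ceiling for any heat engine between those two temperatures; real thermocouples would deliver far less. All names and example values here are illustrative, not taken from any spacecraft design.

```python
import math

SOLAR_CONSTANT = 1361.0             # W/m^2 at 1 AU
STEFAN_BOLTZMANN = 5.670374419e-8   # W m^-2 K^-4


def drum_equilibrium_temp(distance_au, absorptivity=1.0, emissivity=1.0):
    """Uniform wall temperature (K) of a fast-spinning drum (assumed model).

    Energy balance per unit of (diameter * height):
        absorptivity * flux = pi * emissivity * sigma * T**4
    """
    flux = SOLAR_CONSTANT / distance_au ** 2
    return (absorptivity * flux /
            (math.pi * emissivity * STEFAN_BOLTZMANN)) ** 0.25


def carnot_limit(t_hot_k, t_cold_k):
    """Ideal upper bound on heat-to-electricity conversion efficiency."""
    return 1.0 - t_cold_k / t_hot_k


if __name__ == "__main__":
    cold_side_k = 100.0  # hypothetical temperature behind the heat shades
    for au in (0.3, 1.0, 3.0):
        hot = drum_equilibrium_temp(au)
        print(f"{au:>4} AU: drum ~{hot:.0f} K, "
              f"Carnot ceiling {carnot_limit(hot, cold_side_k):.0%}")
```

In this model the drum temperature falls with the square root of distance from the Sun, so the available temperature difference shrinks steadily on the way out.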

Station-Keeping for the Long Haul

Such a capability, combined with the ability to change orbits, maintain orbits, and perform Lagrangian point station-keeping without using any propellant (and with no moving parts), would enable Yarkovsky/YORP effect-utilizing spacecraft to operate for very long periods, whether in orbit around the Earth, other planets, the Sun, or other stars. The black drums used by these spacecraft would likely also have at least three advantages over solar sails. Over long periods of time, it is more difficult for a reflective object to remain reflective than for a black object to remain black. Unlike a sail, the drum could be more compact as well as have greater thickness and strength, and its rotation would increase its stiffness. Spacecraft using this method of propulsion should also be able to maneuver more effectively closer to a planet (especially one possessing an atmosphere) than a sail could.

A further possible advantage – for human-dispatched Bracewell probes sent to “loiter” in their target star systems for decades, centuries, or even millennia – would be that such black spacecraft wouldn’t attract visual attention as a sail-equipped probe would. An infrared search could find such a probe, but with dark asteroids and black, extinct comet nuclei likely being as common in other stellar systems as in our own, it might escape positive identification as an alien visitor, at least for some time.

Image: One of many science fiction treatments of Bracewell probes occurs in Michael McCollum’s Life Probe (Del Rey, 1983).

As Robert Freitas [4] has written, any civilization – perhaps even our own – might consider an alien Bracewell probe in its star system to be a threat, at least initially. Providing such probes with a measure of protection would “buy them time to explain themselves” by making them less-than-easy to find. This, and their ability to move between broadcasts, would better enable them to establish contact and demonstrate their peaceful purposes before they might otherwise be attacked by a wary race.

While brute-force methods got humanity into space, it is increasingly obvious that for far journeys and long sojourns there, harnessing the subtle natural forces that are freely available just above our heads is the only way that humanity can truly thrive and prosper in that realm.

Notes

[1] “Kekulé’s Dream” (see: http://web.chemdoodle.com/kekules-dream)

[2] “Yarkovsky Effect” (see: http://en.wikipedia.org/wiki/Yarkovsky_Effect)

[3] “Yarkovsky–O’Keefe–Radzievskii–Paddack Effect” (YORP Effect) (see: http://bit.ly/1fsVRRl)

[4] Robert Freitas Official Website (see: http://www.rfreitas.com/)