Centauri Dreams

Imagining and Planning Interstellar Exploration

A Black Hole of Information?

A couple of interesting posts to talk about in relation to yesterday’s essay on the Encyclopedia Galactica. At UC-Santa Cruz, Greg Laughlin writes entertainingly about The Machine Epoch, an idea that suggested itself because of the spam his systemic site continually draws from “robots, harvesters, spamdexing scripts, and viral entities,” all of which continually fill up his site’s activity logs as they try to insert links.

Anyone who attempts any kind of online publishing knows exactly what Laughlin is talking about, and while I hate to see his attention drawn even momentarily from his ongoing work, I always appreciate his insights on systemic, a blog whose range includes his exoplanet analyses as well as his speculations on the far future (as I mentioned yesterday, Laughlin and Fred Adams are the authors behind the 1999 title The Five Ages of the Universe, as mind-bending an exercise in extrapolating the future as anything I have ever read). I’ve learned on systemic that he can take something seemingly mundane and open it into a rich venue for speculation.

So naturally when I see Laughlin dealing with blog spam, I realize he’s got much bigger game in mind. In his latest, spam triggers his recall of a conversation with John McCarthy, whose credentials in artificial intelligence are of the highest order. McCarthy is deeply optimistic about the human future, believing that there are sustainable paths forward. He goes so far as to say “There are no apparent obstacles even to billion year sustainability.” Laughlin comments:

Optimistic is definitely the operative word. It’s also possible that the computational innovations that McCarthy had a hand in ushering in will consign the Anthropocene epoch to be the shortest — rather than one of the longest — periods in Earth’s geological history. Hazarding a guess, the Anthropocene might end not with the bang with which it began, but rather with the seemingly far more mundane moment when it is no longer possible to draw a distinction between the real visitors and the machine visitors to a web site.

Long or short-term? A billion years or a human future that more or less peters out as we yield to increasingly powerful artificial intelligence? We can’t know, though I admit that by temperament, I’m in the McCarthy camp. Either way, an Encyclopedia Galactica could get compiled by whatever kind of intelligences arise and communicate with each other in the galaxy.

Into the Rift

Of course, one problem is that any encyclopedia needs material to work with, and we are seeing some signs that huge amounts of information may now be falling into what Google’s Vinton Cerf, co-inventor of the TCP/IP protocols that drive the Internet, calls “an information black hole.”

Speaking at the American Association for the Advancement of Science’s annual meeting in San Jose (CA), Cerf asked whether we are facing a century’s worth of ‘forgotten data,’ noting how much of our lives (our letters, music, family photos) is now locked into digital formats. Cerf’s warning, reported by The Guardian in an article called Google boss warns of ‘forgotten century’ with email and photos at risk, is stark:

“We are nonchalantly throwing all of our data into what could become an information black hole without realising it. We digitise things because we think we will preserve them, but what we don’t understand is that unless we take other steps, those digital versions may not be any better, and may even be worse, than the artefacts that we digitised… If there are photos you really care about, print them out.”

If that seems extreme, consider that no special technology was needed to read stored information from our past, from the clay tablets of ancient Mesopotamia to the scrolls and codices on which early historians and later medieval monks recorded their civilization. A great library is a grand thing that does not demand dedicated hardware and software to use. ‘Bit rot’ occurs when the equipment on which we record our data becomes obsolete — a box of floppy disks sitting on my file cabinet mutely reminds me that I currently have no way to read them.

Cerf’s point isn’t that information can’t be migrated from one format to another. We’ll surely preserve documents, photos, audio and video considered to be significant in many formats. But what’s surprising when you start prowling a big academic library is how often the most insignificant things can act as pointers to useful information. A bus schedule, a note dashed off by the acquaintance of an artist, a manuscript filed under the wrong heading — all these can unlock parts of our history, and we can assume the same about many a casual email.

Historians have learned how the greatest mathematician of antiquity considered the concept of infinity and anticipated calculus in the third century BC after the Archimedes palimpsest was found hidden under the words of a Byzantine prayer book from the 13th century. “We’ve been surprised by what we’ve learned from objects that have been preserved purely by happenstance that give us insights into an earlier civilisation,” [Cerf] said.

Will we preserve what we need to so that we have the kind of record of our time that is so tightly locked up in the books and artifacts of previous eras? The article mentions work at Carnegie Mellon University in Pittsburgh, where a project called Olive is archiving early versions of software. The project allows a computer to mimic the device the original software ran on, a technology that seems a natural solution as we try to record the early part of the desktop computer era. We’ll be refining such solutions as we continue to address this problem.

Cerf talks about preserving information for hundreds or thousands of years, but of course what we’d like to see is a seamless way to migrate our cultural output through advancing levels of technology so that if John McCarthy’s instincts are right, our remote descendants will still have access to the things we did and said, both meaningful and seemingly inconsequential. That’s a long way from an Encyclopedia Galactica, but learning how to do this could teach us principles of archiving and preservation that could eventually feed such a compilation.

Information and Cosmic Evolution

Keeping information viable is something that has to be on the mind of a culture that continually changes its data formats. After all, preserving information is a fundamental part of what we do as a species — it’s what gives us our history. We’ve managed to preserve the accounts of battles and migrations and changes in culture through a wide range of media, from clay tablets to compact disks, but the last century has seen swift changes in everyday products like the things we use to encode music and video. How can we keep all this readable by future generations?

The question is challenging enough when we consider the short term, needing to read, for example, data tapes for our Pioneer spacecraft when we’ve all but lost the equipment needed to manage the task. But think, as we like to do in these pages, of the long-term future. You’ll recall Nick Nielsen’s recent essay Who Will Read the Encyclopedia Galactica, which looks at a future so remote that we have left the ‘stelliferous’ era itself, going beyond the time of stars collected into galaxies, which is itself, Nick points out, only a small sliver of the universe’s history.

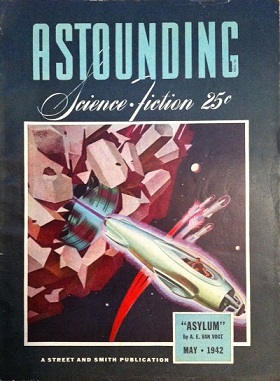

Can we create what Isaac Asimov called an Encyclopedia Galactica? If you go back and read Asimov’s Foundation books, you’ll find that the Encyclopedia Galactica appears frequently, often quoted as the author introduced new chapters. From its beginnings in a short story called “Foundation” (Astounding Science Fiction, May 1942), the Encyclopedia Galactica was seen as the entirety of knowledge throughout a widespread galactic empire. Carl Sagan introduced the idea to a new audience in his video series Cosmos as a cache of all information and knowledge.

Image: The May, 1942 issue of Astounding Science Fiction, containing the first of the short stories that would eventually be incorporated into the Foundation novels.

Now we have an interesting new paper from Stefano Mancini and Roberto Pierini (University of Camerino, Italy) and Mark Wilde (Louisiana State University) that looks at the question of information viability. An Encyclopedia Galactica is going to need to survive in whatever medium it is published in, which means preserving the information against noise or disruption. The paper, published in the New Journal of Physics, argues that even if we find a technology that allows for the perfect encoding of information, there are limitations that grow out of the evolution of the universe itself that cause information to become degraded.

Remember, we’re talking long-term here, and while an Encyclopedia Galactica might serve a galactic population robustly for millions, perhaps billions of years, what happens as we study the fate of information from the beginning to the end of time? Mancini and team looked at the question with relation to what is called a Robertson-Walker spacetime, which as Mark Wilde explains in this video abstract of the work, is a solution of Einstein’s field equations of general relativity that describes a homogeneous, isotropic, expanding or contracting universe.
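For readers who want to see the geometry written down, the Robertson-Walker line element is usually given in the standard textbook form below. This is a sketch of the general metric, not necessarily the exact parametrization the paper works with; here $a(t)$ is the scale factor that grows as the universe expands and $k$ is the spatial curvature constant.

```latex
ds^2 = -c^2\,dt^2 + a(t)^2\left[\frac{dr^2}{1 - k r^2} + r^2\left(d\theta^2 + \sin^2\theta\,d\phi^2\right)\right]
```

Homogeneity and isotropy are what allow the whole expansion history to be carried by the single function $a(t)$.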

In addition to Wilde’s video, let me point you to The Cosmological Limits of Information Storage, a recent entry on the Long Now Foundation’s blog. As for the team’s method using the Robertson-Walker spacetime as the background for its development of communication theory models, it is explained this way in the paper:

… we can consider any quantum state of the matter field before the expansion of the universe begins and define, without ambiguity, its particle content. We then let the universe expand and check how the state looks once the expansion is over. The overall picture can be thought of as a noisy channel into which some quantum state is fed. Once we have defined the quantum channel emerging from the physical model, we will be looking at the usual communication task as information transmission over the channel. Since we are interested in the preservation of any kind of information, we shall consider the trade-off between the different resources of classical and quantum information.

In other words, encoded information is modeled against an expanding universe to see what happens to it. The result challenges the idea that there is any such thing as perfectly stored information, for the paper finds that over time, as the universe expands and evolves, a transformation inevitably occurs in the quantum space in which it is encoded. Noise is the result, what the authors refer to as an ‘amplitude damping channel.’ Information that is encoded into a storage medium is inevitably corrupted by changes to the quantum state of the medium.
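To get a feel for what an amplitude damping channel does to stored quantum information, here is a minimal sketch of the textbook single-qubit version. It is not the paper's field-theoretic model: the damping parameter `gamma` is a free, hypothetical knob here, whereas in the paper the strength of the noise is tied to the expansion of the universe. The helper names are illustrative only.

```python
import math

def matmul(a, b):
    """Multiply two 2x2 matrices given as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(2)) for j in range(2)]
            for i in range(2)]

def dagger(a):
    """Conjugate transpose of a 2x2 matrix."""
    return [[complex(a[j][i]).conjugate() for j in range(2)] for i in range(2)]

def amplitude_damping(rho, gamma):
    """Apply the qubit amplitude damping channel with decay probability gamma."""
    k0 = [[1.0, 0.0], [0.0, math.sqrt(1.0 - gamma)]]  # Kraus operator: no decay
    k1 = [[0.0, math.sqrt(gamma)], [0.0, 0.0]]        # Kraus operator: |1> decays to |0>
    out = [[0.0, 0.0], [0.0, 0.0]]
    for k in (k0, k1):
        term = matmul(matmul(k, rho), dagger(k))
        out = [[out[i][j] + term[i][j] for j in range(2)] for i in range(2)]
    return out

# Equal superposition (|0> + |1>)/sqrt(2) written as a density matrix.
plus = [[0.5, 0.5], [0.5, 0.5]]

for gamma in (0.0, 0.1, 0.5, 0.9):
    rho = amplitude_damping(plus, gamma)
    coherence = abs(rho[0][1])
    print(f"gamma={gamma:.1f}  P(1)={rho[1][1].real:.3f}  coherence={coherence:.3f}")
```

As `gamma` grows, both the population of the excited state and the off-diagonal coherence shrink, which is the sense in which the stored state is corrupted.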

Cosmology comes into play here because the paper argues that faster expansion of the cosmos creates more noise. We can encode our information in the form of bits or we can use information stored and encoded by the quantum state of particular particles, but in both cases noise continues to mount as the universe continues to expand. Collecting all the material for our Encyclopedia Galactica may, then, be a smaller challenge than preserving it in the face of cosmic evolution. On the time scales envisioned in Nick Nielsen’s essay (and studied at length in Fred Adams’ and Greg Laughlin’s book The Five Ages of the Universe: Inside the Physics of Eternity), the keepers of the encyclopedia have their work cut out for them.

Preserving the Encyclopedia Galactica will demand, it seems, continual fixes and long-term vigilance. But consider the researchers’ thoughts on the direction for future work:

…one could also cope with the degradation of the stored information by intervening from time to time and actively correcting the contents of the memory during the evolution of the universe. In this direction, channel capacities taking into account this possibility have been introduced… In another direction, and much more speculatively, one might attempt to find a meaningful notion for entanglement assisted communication in our physical scenario by considering Einstein-Rosen bridges… or entanglement between different universe’s eras, related to dark energy.

Now we’re getting speculative indeed! I’ve left out the references to papers on each of the possibilities within that quotation — see the paper, which is available on arXiv, for more. Mark Wilde notes in the video referenced above that another step forward would be to look at more general models of the universe in which information could be encoded in such exotic scenarios as the spacetime near a black hole. The latter is an interesting thought, and my guess is that we’ll have new work from these researchers delving into such models in the near future, a time close enough to our own that the data should still be viable.

The paper is Mancini et al., “Preserving Information from the beginning to the end of time in a Robertson-Walker spacetime,” New Journal of Physics 16 (2014), 123049 (abstract / full text).

A Full Day at Pluto/Charon

Have a look at the latest imagery from the New Horizons spacecraft to get an idea of how center of mass — barycenter — works in astronomy. When two objects orbit each other, the barycenter is the point where they are in balance. A planet orbiting a star may look as if it orbits without influencing the much larger object, but in actuality both bodies orbit around a point that is offset from the center of the larger body. A good thing, too, because this is one of the ways we can spot exoplanets, by the observed ‘wobble’ in the stars they orbit.

The phenomenon is really evident in what the New Horizons team describes as the ‘Pluto-Charon dance.’ Here we have a case where the two objects are close enough in size (unlike planet and star, or the Moon and the Earth) that the barycenter actually falls outside both of them. The time-lapse frames in the movie below show Pluto and Charon orbiting a barycenter above Pluto’s surface, the center of mass of the two-body system. Each frame here has an exposure time of one-tenth of a second.

Charon is about one-eighth as massive as Pluto. The images in play here come from New Horizons’ Long-Range Reconnaissance Imager (LORRI) and were taken between January 25th and 31st of this year. The New Horizons team is in the midst of an optical navigation (Opnav) campaign to nail down the locations of Pluto and Charon as preparations continue for the July 14th flyby. None of the other four moons of Pluto are visible here because of the short exposure times, but focus in on Charon. We’re looking at an object about the size of Texas.
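The claim that the barycenter sits outside Pluto can be checked with a two-body center-of-mass calculation. The sketch below uses the one-eighth mass ratio quoted above; the separation and Pluto radius are approximate round numbers I am assuming for illustration, not figures from the New Horizons release.

```python
def barycenter_offset(separation_km, mass_ratio):
    """Distance from the primary's center to the barycenter.

    mass_ratio is secondary mass divided by primary mass.
    """
    return separation_km * mass_ratio / (1.0 + mass_ratio)

# Assumed round numbers, not taken from the article:
SEPARATION_KM = 19_600.0    # approximate Pluto-Charon center-to-center distance
PLUTO_RADIUS_KM = 1_190.0   # approximate radius of Pluto
MASS_RATIO = 1.0 / 8.0      # "about one-eighth as massive", from the text

offset = barycenter_offset(SEPARATION_KM, MASS_RATIO)
print(f"Barycenter lies {offset:.0f} km from Pluto's center")
print(f"Outside Pluto: {offset > PLUTO_RADIUS_KM}")
```

With these inputs the balance point comes out a bit under 2,200 km from Pluto's center, well above the assumed surface radius. Run the same function with the Earth-Moon mass ratio and separation and the point stays inside the Earth.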

Now take a look at Pluto/Charon back in 1978 when James Christy, an astronomer at the U.S. Naval Observatory, could see it using the 1.55-m (61-inch) Kaj Strand Astrometric Reflector at the USNO Flagstaff Station in Arizona. Christy was studying what was then considered to be a solitary ‘planet’ (since demoted) when he noticed that in a number of the images, Pluto seemed to be elongated, a distortion in shape that varied with respect to background stars over time. The discovery of a moon was formally announced in early July of that year by the International Astronomical Union. Charon received its official name in 1985.

Image: What Pluto/Charon looked like to James Christy in 1978. Credit: U.S. Naval Observatory.

The New Horizons time-lapse movie shows an entire rotation of each body, the first of the images being taken when the spacecraft was 203 million kilometers from Pluto. The last frame, six and a half days later, was taken when New Horizons was 8 million kilometers closer. Alan Stern (Southwest Research Institute), principal investigator for New Horizons, notes the significance of the latest imagery:

“These images allow the New Horizons navigators to refine the positions of Pluto and Charon, and they have the additional benefit of allowing the mission scientists to study the variations in brightness of Pluto and Charon as they rotate, providing a preview of what to expect during the close encounter in July.”
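The distances quoted for the first and last frames also give a rough sense of how fast the spacecraft is closing on its target. This is back-of-the-envelope arithmetic using only the two figures in the text, so treat the result as an average over the imaging run and not an official mission number.

```python
SECONDS_PER_DAY = 86_400

distance_closed_km = 8_000_000   # "8 million kilometers closer", from the text
elapsed_days = 6.5               # "six and a half days", from the text

# Average closing speed over the imaging sequence.
speed_km_s = distance_closed_km / (elapsed_days * SECONDS_PER_DAY)
print(f"Average closing speed: {speed_km_s:.1f} km/s")
```

That works out to a little over 14 kilometers per second.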

That’s an encounter that will close an early chapter in space exploration — all nine of the objects formerly designated planets will have had close-up examination — but of course it opens up yet another, as New Horizons looks toward an encounter with a Kuiper Belt object as it moves ever outward. Just as our Voyagers are still communicating long after Voyager 2 left Neptune, New Horizons gives us much to look forward to.

What Comets Are Made Of

When the Rosetta spacecraft’s Philae lander bounced while landing on comet 67P/Churyumov-Gerasimenko last November, it was a reminder that comets have a hard outer shell, a black coating of organic molecules and dust that previous missions, like Deep Impact, have also observed. What we’d like to learn is what that crust is made of, and just as interesting, what is inside it. A study out of JPL is now suggesting possible answers.

Antti Lignell is lead author on a recent paper reporting the team’s use of a cryostat device called Himalaya to flash freeze material much like that found in comets. The procedure was to flash freeze water vapor molecules at temperatures in the area of 30 Kelvin (minus 243 degrees Celsius). What results is something called ‘amorphous ice,’ as explained in this JPL news release. Proposed as a key ingredient not only of comets but of icy moons, amorphous ice preserves the mix of water with organics along with pockets of space.

JPL’s Murthy Gudipati, a co-author of the paper on this work, compares amorphous ice to cotton candy, while pointing out that in places with much more moderate temperatures, like the Earth, all ice is in crystalline form. But comets, as we know, can change drastically as they approach the Sun. To mimic this scenario, the researchers used their cryostat instrument to gradually warm the amorphous ice they had created to 150 Kelvin (minus 123 degrees Celsius).

What happened next involved the kind of organics called polycyclic aromatic hydrocarbons (PAHs) common in deep space. These had been infused in the ice mixture that Lignell and Gudipati created. Lignell describes the result:

“The PAHs stuck together and were expelled from the ice host as it crystallized. This may be the first observation of molecules clustering together due to a phase transition of ice, and this certainly has many important consequences for the chemistry and physics of ice.”

Expelling the PAHs meant that the water molecules could then form the tighter structures of crystalline ice. The lab had produced, in other words, a ‘comet’ nucleus of its own, similar to what we have observed so far in space. Gudipati likens the lab’s ‘comet’ to deep fried ice cream — an extremely cold interior marked by porous, amorphous ice with a crystalline crust on top that is laced with organics. What we could use next, he notes, is a mission to bring back cold samples from comets to compare to these results.

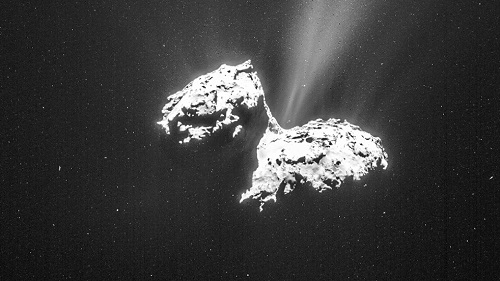

Image: Rosetta NAVCAM image of Comet 67P/C-G taken on 6 February from a distance of 124 km to the comet centre. In this orientation, the small comet lobe is to the left of the image and the large lobe is to the right. The image has been processed to bring out the details of the comet’s activity. The exposure time of the image is 6 seconds. Credits: ESA/Rosetta/NAVCAM – CC BY-SA IGO 3.0.

Meanwhile, we have the spectacular imagery above from Rosetta at comet 67P/Churyumov-Gerasimenko. What the European Space Agency refers to as ‘a nebulous glow of activity’ emanates from all over the sunlit surface, but note in particular the jets coming out of the neck region and extending toward the upper right. In this year of celestial marvels (Ceres, Pluto/Charon, and Rosetta at 67P/Churyumov-Gerasimenko), we’re seeing what happens to a comet as it warms and begins to vent gases from all over its surface. We’re now going to be able to follow Rosetta as it studies the comet from a range of distances, a view that until this year was solely in the domain of science fiction writers. What a spectacle lies ahead!

The paper is Lignell and Gudipati, “Mixing of the Immiscible: Hydrocarbons in Water-Ice near the Ice Crystallization Temperature,” published online by the Journal of Physical Chemistry on October 10, 2014 (abstract).

Overcoming Tidal Lock around Lower Mass Stars

One of the big arguments against habitable planets around low mass stars like red dwarfs is the likelihood of tidal effects. An Earth-sized planet close enough to a red dwarf to be in its habitable zone should, the thinking goes, become tidally locked, so that it keeps one face toward its star at all times. The question then becomes, what kind of mechanisms might keep such a planet habitable at least on its day side, and could these negate the effects of a thick dark-side ice pack? Various solutions have been proposed, but the question remains open.

A new paper from Jérémy Leconte (Canadian Institute for Theoretical Astrophysics, University of Toronto) and colleagues now suggests that tidal effects may not be the game-changer we assumed them to be. In fact, by developing a three-dimensional climate model that predicts the effects of a planet’s atmosphere on the speed of its rotation, the authors now argue that the very presence of an atmosphere can overcome tidal effects to create a cycle of day and night.

The paper, titled “Asynchronous rotation of Earth-mass planets in the habitable zone of lower-mass stars,” was published in early February in Science. The authors note that the thermal inertia of the ground and atmosphere causes the atmosphere as a whole to lag behind the motion of the star. This is seen easily on Earth, when the normal changes we expect from night changing to day do not track precisely with the position of the Sun in the sky. Thus the hottest time of the day is not when the Sun is directly overhead but a few hours after this.

From the paper:

Because of this asymmetry in the atmospheric mass redistribution with respect to the subsolar point, the gravitational pull exerted by the Sun on the atmosphere has a nonzero net torque that tends to accelerate or decelerate its rotation, depending on the direction of the solar motion. Because the atmosphere and the surface are usually well coupled by friction in the atmospheric boundary layer, the angular momentum transferred from the orbit to the atmosphere is then transferred to the bulk of the planet, modifying its spin.

This effect is relatively minor on Earth thanks to our distance from the Sun, but is more pronounced on Venus, where the tug of tidal friction that tries to spin the planet down into synchronous rotation is overcome by the ‘thermal tides’ caused by this atmospheric torque. But Venus’ retrograde rotation has been attributed to its particularly massive atmosphere. The question becomes whether these atmospheric effects can drive planets in the habitable zone of low mass stars out of synchronous rotation even if their atmosphere is relatively thin.

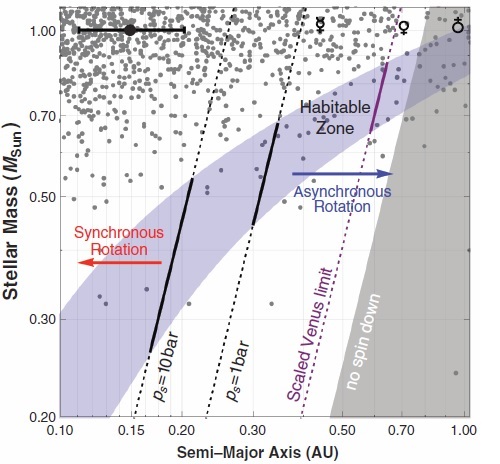

Pressure units in a planetary atmosphere are measured in bars — the average atmospheric pressure at Earth’s surface is approximately 1 bar (contrast this with the pressure on Venus of 93 bars). The paper offers a way to assess the efficiency of thermal tides for different atmospheric masses, with results that make us look anew at tidal lock. For the atmospheric tide model that emerges shows that habitable Earth-like planets with a 1-bar atmosphere around stars more massive than ~0.5 to 0.7 solar masses could overcome the effects of tidal synchronization. It’s a powerful finding, for the effects studied here should be widespread:

Atmospheres as massive as 1 bar are a reasonable expectation value given existing models and solar system examples. This is especially true in the outer habitable zone, where planets are expected to build massive atmospheres with several bars of CO2. So, our results demonstrate that asynchronism mediated by thermal tides should affect an important fraction of planets in the habitable zone of lower-mass stars.

Here is the graph from the paper that illustrates the results:

Image: Spin state of planets in the habitable zone. The blue region depicts the habitable zone, and gray dots are detected and candidate exoplanets. Each solid black line marks the critical orbital distance (a_c) separating synchronous (left, red arrow) from asynchronous planets (right, blue arrow) for p_s = 1 and 10 bar (the extrapolation outside the habitable zone is shown with dotted lines). Objects in the gray area are not spun down by tides. The error bar illustrates how limits would shift when varying the dissipation inside the planet (Q ~ 100) within an order of magnitude. Credit: Jérémy Leconte et al.

The result suggests that we may find planets in the habitable zone of lower-mass stars that are more Earth-like than expected. Do away with the permanent, frozen ice pack on what had been assumed to be the ‘dark side’ and water is no longer trapped, making it free to circulate. The implications for habitability seem positive, with a day-night cycle of weeks or months distributing temperatures, but Leconte remains cautious: “Whether this new understanding of exoplanets’ climate increases the ability of these planets to develop life remains an open question.”

The paper is Leconte et al., “Asynchronous rotation of Earth-mass planets in the habitable zone of lower-mass stars,” Science Vol. 347, Issue 6222 (6 February 2015). Abstract / preprint available. Thanks to Ashley Baldwin for a pointer to and discussion of this paper.

Twinkle: Studying Exoplanet Atmospheres

A small satellite designed to study and characterize exoplanet atmospheres is being developed by University College London (UCL) and Surrey Satellite Technology Ltd (SSTL) in the UK. Given the engaging name Twinkle, the satellite is to be launched within four years into a polar low-Earth orbit for three years of observations, with the potential for an extended mission of another five years. SSTL, based in Guildford, Surrey and an experienced hand in satellite development, is to build the spacecraft, with scientific instrumentation in the hands of UCL.

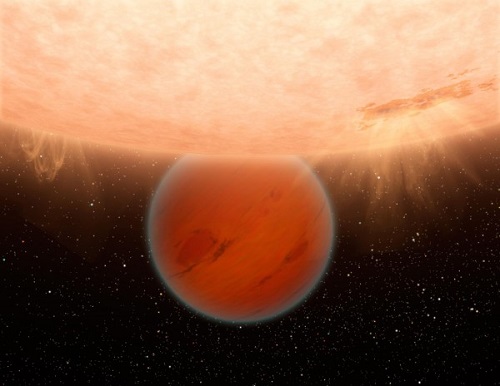

The method here is transmission spectroscopy, which can be employed when planets transit in front of their star as seen from Earth. Starlight passing through the atmosphere of the transiting world as it moves in front of and then behind the star offers a spectrum that can carry the signatures of the various molecules there, a method that has been used on a variety of worlds like the Neptune-class HAT-P-11b and the hot Jupiter HD 189733b. The goal of the Twinkle mission is to analyze at least 100 planets ranging from super-Earths to hot Jupiters, producing temperatures and even cloud maps.

Image: This artist’s impression depicts a Neptune-class world grazing the limb of its star as seen from our vantage point. Analyzing starlight as transiting worlds pass in front of and then behind their star can tell us much about the constituents of the planet’s atmosphere. Credit: NASA/JPL-Caltech.

Giovanna Tinetti (UCL), lead scientist for the mission, describes it as the first mission dedicated to analyzing exoplanet atmospheres, adding that understanding the chemical composition of an atmosphere serves as a key to the planet’s background. Distance from the parent star, notes this UCL news release, affects the chemistry and physical processes driving an atmosphere, and the atmospheres of small, terrestrial-class worlds, like that of our own Earth, can evolve substantially from their initial state through impacts with other bodies, loss of light molecules, volcanic activity or the effect of life. The atmosphere, then, can help us trace a planet’s history as we learn whether it was born in its current orbit or migrated from another part of the system.

I should mention that Tinetti was deeply involved in the discovery of water and methane in the atmosphere of HD 189733b. From Tinetti’s website at University College London:

A key observable for planets is the chemical composition and state of their atmosphere. Knowing what atmospheres are made of is essential to clarify, for instance, whether a planet was born in the orbit it is observed in or whether it has migrated a long way; it is also critical to understand the role of stellar radiation on escape processes, chemical evolution and climate. The atmospheric composition is the only indicator able to discriminate an habitable/inhabited planet from a sterile one.

Twinkle was presented several days ago at an open meeting of the Royal Astronomical Society. Interestingly, the mission is being developed with a mixture of private and public sources, at a total cost of about £50 million, including launch. That number seems strikingly low to me, with mission designers claiming that Twinkle is ten times cheaper to build and operate than other spacecraft developed through international space agency programs. The low cost is being attributed to the use of off-the-shelf components and growing expertise in small mission development. These numbers will be worth remembering if Twinkle performs as expected.