Centauri Dreams

Imagining and Planning Interstellar Exploration

‘Blue Stragglers’ in the Galactic Bulge

I’m fascinated by how much the exoplanet hunt is telling us about celestial objects other than planets. The other day we looked at some of the stellar spinoffs from the Kepler mission, including the unusual pulsations of the star HD 187091, now known to be not one star but two. But the examples run well beyond Kepler. Back in 2006, a survey called the Sagittarius Window Eclipsing Extrasolar Planet Search (SWEEPS) used Hubble data to study 180,000 stars in the galaxy’s central bulge, the object being to find ‘hot Jupiters’ orbiting close to their stars.

But the seven-day survey also turned up 42 so-called ‘blue straggler’ stars in the galactic bulge, their brightness and temperature far more typical of stars younger than those around them. It’s generally accepted that star formation in the central bulge has all but stopped, the giant blue stars of the region having exploded into supernovae billions of years ago. Blue stragglers are unusual because they are more luminous and bluer than would be expected. They’ve been identified in star clusters but never before seen inside the core of the galaxy.

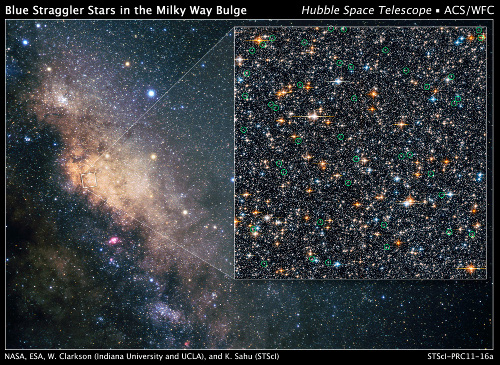

Image: Peering deep into the star-filled, ancient hub of our Milky Way (left), the Hubble Space Telescope has found a rare class of oddball stars called blue stragglers, the first time such objects have been detected within our galaxy’s bulge. Blue stragglers — so named because they seem to be lagging behind in their rate of aging compared with the population from which they formed — were first found inside ancient globular star clusters half a century ago. Credit: NASA, ESA, W. Clarkson (Indiana University and UCLA), and K. Sahu (STScI).

The galactic bulge is a tricky place to study because foreground stars in the disk compromise our view. But the SWEEPS data led to a re-examination of the target region, again with Hubble, two years after the original observations were made. The blue stragglers could clearly be identified as moving at the speed of the bulge stars rather than the foreground stars. Of the original 42 blue straggler candidates, anywhere from 18 to 37 are now thought to be genuine, the others being foreground objects or younger bulge stars that are not blue stragglers.

Allan Sandage discovered blue stragglers in 1953 while studying the globular cluster M3, leading scientists to ask why a star would appear so much younger than the stars around it. Stars in a cluster form at approximately the same time and should therefore show common characteristics determined by their age and initial mass. A Hertzsprung-Russell diagram of a cluster, for example, should show a readily defined curve on which the stars can be plotted.

Blue stragglers are the exception, giving the appearance of stars that have defied the aging process. One possibility is that they form in binaries, with the less massive of the two stars gathering in material from the larger companion, causing the accreting star to undergo fusion at a faster rate. More dramatic still would be the collision and merger of two stars — more likely in a region where stars are dense — which would cause the newly formed, more massive object to burn at a faster rate.

Scientists will use the blue straggler data to tune up their theories of star formation. Lead author Will Clarkson comments on the work, which will be published in the Astrophysical Journal:

“Although the Milky Way bulge is by far the closest galaxy bulge, several key aspects of its formation and subsequent evolution remain poorly understood. While the consensus is that the bulge largely stopped forming stars long ago, many details of its star-formation history remain controversial. The extent of the blue straggler population detected provides two new constraints for models of the star-formation history of the bulge.”

I’ll note in passing that Martin Beech (University of Regina) has suggested looking at blue stragglers in a SETI context, noting that some could be examples of astroengineering, the civilization in question using its technology to mix shell hydrogen into the inner stellar core to prolong its star’s lifetime on the main sequence. It’s an interesting suggestion though an unlikely one given that we can explain blue stragglers through conventional astrophysics. In fact, blue stragglers point to an important fact about the field some are calling ‘interstellar archaeology’ — gigantic astroengineering may be extremely difficult to tell apart from entirely natural phenomena, in which case Occam’s razor surely comes into play.

For the recent blue straggler discoveries, see Clarkson et al., “The First Detection of Blue Straggler Stars in the Milky Way Bulge,” in press at the Astrophysical Journal (preprint). On the possible application of blue stragglers to SETI, see Beech, “Blue Stragglers as Indicators of Extraterrestrial Civilizations?” Earth, Moon, and Planets 49 (1990), pp.177-186. And Greg Laughlin (UC-Santa Cruz) looks at blue stragglers as targets for photometric transit searches in this post on his systemic site.

On the Calendar: Exoplanets and Worldships

Be aware of two meetings of relevance for interstellar studies, the first of which takes place today at the Massachusetts Institute of Technology. There, a symposium called The Next 40 Years of Exoplanets runs all day, with presentations from major figures in the field — you can see the agenda here. I bring this up because MIT Libraries is planning to stream the presentations, starting with Dave Charbonneau (Harvard University) at 0900 EST. Those of you who’ve been asking about Alpha Centauri planet hunts will be glad to hear that Debra Fischer (Yale University), who is running one of the three ongoing Centauri searches, will be speaking between 1130 and 1300 EST.

The poster for this meeting reminds me of the incessant argument about what constitutes a habitable planet. It shows two kids in a twilight setting pointing up at the sky, their silhouettes framed by fading light reflected off a lake. One of them is saying “That star has a planet like Earth.” An asterisk reveals the definition of Earth-like for the purposes of this meeting: “…a rocky planet in an Earth-like orbit about a sun-like star that has strong evidence for surface liquid water.” So this time around we really do mean ‘like the Earth,’ orbiting a G-class star and harboring temperatures not so different from what we’re accustomed to here.

And no, that doesn’t rule out more exotic definitions of habitability, including potential habitats around M-dwarfs or deep below the ice on objects far from their star. But finding an ‘Earth’ fitting the symposium’s definition would seize the public imagination and doubtless inspire many a career in science. The forty years referred to in the title of the meeting is a prediction that within that time-frame, we’ll be able to point to a star visible with the naked eye and know that such a planet orbits it. While following the events online, you might also want to track writer Lee Billings (@LeeBillings on Twitter), who’s in Cambridge for the day. Lee’s insights are invariably valuable.

Addendum: Geoff Marcy, amidst many a pointed comment about NASA’s priorities, also discussed in his morning session a probe to Alpha Centauri, as reported by Nature’s newsblog:

On the back then of these serious policy criticisms came Marcy’s provocative idea for a mission to Alpha Centauri. He appealed to US President Barack Obama to announce the launch of a probe that would send back pictures of any planets, asteroids and comets in the system in the next few hundred years, with the US partnering with Japan, China, India and Europe to make it happen. “It would jolt NASA back to life,” he declared. Maverick it might sound, but many in the room seemed to take the idea in the spirit of focusing minds on the ultimate goal of planet-hunting; to take humanity’s first steps towards reaching out to life elsewhere in the universe.

Into the Generational Deep

The other conference, this one deep in the summer, takes us into the domain of far future technology. Some of the great science fiction about starships talks about voyages that last for centuries — I’m thinking not only of Heinlein’s Orphans of the Sky (1963, but drawing on two novellas in Astounding Science Fiction from 1941), but also Brian Aldiss’ Non-Stop (1958) and the recently published Hull Zero Three, by Greg Bear. In each case, we’re dealing with people aboard a vessel that is meant to survive for many human generations, a frequent issue being to identify just what is going on aboard the craft and what the actual destination is.

Call them ‘generation ships’ or ‘worldships,’ vast constructs that are built around the premise that flight to the stars will be long and slow, but that human technology will find a way to attain speeds of several percent of the speed of light to make manned journeys possible. A surprising amount of work has been done addressing the problems of building and maintaining a worldship, much of it appearing in the Journal of the British Interplanetary Society. Now the BIS is preparing a session dealing explicitly with worldships. Here’s their description of the event:

In 1984 JBIS published papers considering the design of a World ship. This is a very large vehicle many tens of kilometres in length and having a mass of millions of tons, moving at a fraction of a per cent of the speed of light and taking hundreds of years to millennia to complete its journey. It is a self-contained, self-sufficient ship carrying a crew that may number hundreds to thousands and may even contain an ocean, all directed towards an interstellar colonisation strategy. A symposium is being organised to discuss both old and new ideas in relation to the concept of a World Ship. This one day event is an attempt to reinvigorate thinking on this topic and to promote new ideas and will focus on the concepts, cause, cost, construction and engineering feasibility as well as sociological issues associated with the human crew. All presentations are to be written up for submission to a special issue of JBIS. Submissions relating to this topic or closely related themes are invited. Interested persons should submit a title and abstract to the Executive Secretary.
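To put the quoted speeds in perspective, here is a minimal sketch of the cruise time at a constant fraction of light speed. The target distance (Alpha Centauri at about 4.37 light years) and the function name are assumptions chosen for illustration; acceleration and deceleration phases are ignored.

```python
# Rough cruise-time estimate for a worldship moving at constant speed.
# Assumption: the target is Alpha Centauri, about 4.37 light years away.
ALPHA_CENTAURI_LY = 4.37


def cruise_time_years(distance_ly: float, speed_fraction_of_c: float) -> float:
    """Years needed to cover distance_ly at a constant fraction of light speed."""
    if speed_fraction_of_c <= 0:
        raise ValueError("speed must be a positive fraction of c")
    # Light covers one light year per year, so time = distance / fraction.
    return distance_ly / speed_fraction_of_c


if __name__ == "__main__":
    # Hypothetical sample speeds from 0.1% to 1% of light speed.
    for fraction in (0.001, 0.005, 0.01):
        years = cruise_time_years(ALPHA_CENTAURI_LY, fraction)
        print(f"{fraction:.1%} of c: about {years:,.0f} years")
```

Under these assumptions, speeds between 0.1 and 1 percent of light speed give trip times from a few centuries to a few millennia, consistent with the range in the BIS description.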

The session will run on August 17, 2011 from 0930 to 1630 UTC at the BIS headquarters on South Lambeth Road in London. The call for papers is available online. My own thinking is that a worldship is almost inevitable if we find no faster means of propulsion somewhere down the line. Imagine a future in which O’Neill-style space habitats begin to create a non-planetary choice for living and working. If we develop the infrastructure to make that happen, it’s not an unthinkable stretch to see generations that have adapted to this environment moving out between the stars.

Would their aim be colonisation of a remote stellar system? Perhaps, but my guess is that humans who have lived for a thousand years in a highly customized artificial environment may choose not to plant roots on the first habitable planet they find. They may explore it and study its system while deciding to stay aboard their familiar vessel, eventually casting off once again for the deep. In any case, worldships offer an interesting take on how we might make interstellar journeys relying not so much on startling breakthroughs in physics as steady progress in engineering and the production of energy. The London session should be provocative indeed.

OSIRIS-REx: Sampling an Asteroid

Asteroid 1999 RQ36 may or may not pose a future problem for our planet — the chances of an impact with the Earth in 2182 are now estimated at roughly one in 1800. But learning more about it will help us understand the population of near-Earth objects that much better, one of several reasons why the OSIRIS-REx mission is significant. The acronym stands for Origins, Spectral Interpretation, Resource Identification, Security-Regolith Explorer, a genuine mouthful, but a name we’ll be hearing more of as the launch of this sample-return mission approaches in 2016.

The target asteroid, 575 meters in diameter, has been the subject of extensive study not only by ground-based telescopes including the Arecibo planetary radar but also by the Spitzer Space Telescope. We know that 1999 RQ36 orbits the Sun every 1.2 years and crosses the Earth’s orbit every September, with a shape and rotation rate that are well understood. OSIRIS-REx will carry pristine samples of carbonaceous materials of a quality never before analyzed in our laboratories back to Earth, using a sample collecting device that will inject nitrogen to stir up surface materials for capture and storage on the journey back.
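The 1.2-year period fixes the asteroid's average distance from the Sun through Kepler's third law. The sketch below assumes a small body orbiting the Sun, so that the law reduces to a³ = T² with a in astronomical units and T in years; the function name is illustrative.

```python
def semi_major_axis_au(period_years: float) -> float:
    """Kepler's third law for a small body orbiting the Sun: a^3 = T^2 (AU, years)."""
    if period_years <= 0:
        raise ValueError("period must be positive")
    return period_years ** (2.0 / 3.0)


# Period quoted in the text for 1999 RQ36.
rq36_axis_au = semi_major_axis_au(1.2)

if __name__ == "__main__":
    print(f"Semi-major axis: about {rq36_axis_au:.2f} AU")
```

The result is a little over 1.1 AU. Since that mean distance lies outside the Earth's orbit, the asteroid can only cross our orbit because its path is somewhat eccentric.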

This University of Arizona video gives an overview of the mission:

Dante Lauretta (University of Arizona) is deputy principal investigator for OSIRIS-REx:

“OSIRIS-REx will usher in a new era of planetary exploration. For the first time in space-exploration history, a mission will travel to, and return pristine samples of a carbonaceous asteroid with known geologic context. Such samples are critical to understanding the origin of the solar system, Earth, and life.”

The 60 gram sample will be collected in a surface contact that lasts for no more than five seconds. In fact, it’s hard to describe this as a landing. A little over a year ago I quoted Joseph Nuth (NASA GSFC) on the problems of sampling a quickly rotating object of this size. Think in terms of two spacecraft trying to link rather than one trying to land on a surface:

“Gravity on this asteroid is so weak, if you were on the surface, held your arm out straight and dropped a rock, it would take about half an hour for it to hit the ground,” says Nuth. “Pressure from the sun’s radiation and the solar wind on the spacecraft and the solar panels is about 20 percent of the gravitational attraction from RQ36. It will be more like docking than landing.”

The spacecraft will spend more than a year orbiting the asteroid before collecting the samples for return to Earth in 2023. You’ll recall the Japanese Hayabusa spacecraft’s mission to Itokawa, with the first return of asteroid materials in June of 2010. 1999 RQ36 may be a more interesting target given its carbonaceous composition. The idea is to go after an asteroid rich in organics, the kind of object that might have once seeded the Earth with life’s precursors.

OSIRIS-REx should also provide useful data on the Yarkovsky effect, which induces uneven forces on a small orbiting object because of surface heating from sunlight. You can imagine how tricky the Yarkovsky effect is to model given the variables of surface composition, but learning more about it will be helpful as we learn to tighten the precision of projected asteroid orbits. That, in turn, can help us decide whether or not a particular object really does pose a threat to the Earth at some future date.

Meanwhile, we’re keeping a close eye on the Dawn mission, now closing on Vesta and eventually destined to orbit Ceres. Both missions will add significantly to our knowledge of asteroids and their role in Solar System development, but Dawn will not return samples of either of its destinations to Earth. OSIRIS-REx also recalls the Stardust spacecraft, which returned particles from comet Wild 2 in 2006. And like Stardust, OSIRIS-REx will be capable of an extended mission if called upon — Stardust (renamed NExT, for New Exploration of Tempel 1) performed a flyby of previously visited comet Tempel 1 earlier this year.

Beyond the Kepler Planets

Kepler is a telescope that does nothing more than stare at a single patch of sky, described by its principal investigator, with a touch of whimsy, as the most boring space mission in history. William Borucki is referring to the fact that about the only thing that changes on Kepler is the occasional alignment of its solar panels. But of course Borucki’s jest belies the fact that the mission in question is finding planets by the bushel, with more than 1200 candidates already reported, and who knows how many other interesting objects ripe for discovery. Not all of these are planets, to be sure, and as we’ll see in a moment, many are intriguing in their own right.

But the planets have center stage, and the talk at the American Astronomical Society’s 218th meeting has been of multiple planet systems found by Kepler, after a presentation by David Latham (Harvard-Smithsonian Center for Astrophysics). Of Kepler’s 1200 candidates, fully 408 are found in multiple planet systems. Latham told the conference that finding so many multiple systems was a surprise to a team that had expected to find no more than two or three.

To discover this many multiple systems requires planetary orbits to be relatively flat in relation to each other. In our Solar System, for example, some planetary orbits are tilted up to seven degrees, meaning that no one observing the system from outside would be able to detect all eight planets by the transit method. What Kepler has uncovered are numerous multiple systems whose planetary orbits are much flatter than our own, tilted less than a single degree.
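A rough geometric sketch shows why the alignment has to be so tight. It assumes circular orbits, a Sun-sized star and a negligibly small planet, so that a planet transits only if its orbit is tilted from our line of sight by less than arcsin(R_star / a). The constants and the function name are illustrative choices.

```python
import math

R_SUN_M = 6.957e8        # nominal solar radius in meters
AU_M = 1.495978707e11    # astronomical unit in meters


def max_tilt_deg(orbit_au: float, star_radius_m: float = R_SUN_M) -> float:
    """Largest tilt from edge-on (degrees) at which a circular orbit still transits."""
    ratio = star_radius_m / (orbit_au * AU_M)
    if not 0 < ratio <= 1:
        raise ValueError("orbit must lie outside the star")
    return math.degrees(math.asin(ratio))


if __name__ == "__main__":
    # Hypothetical orbital distances, from a close-in planet out to 1 AU.
    for distance_au in (0.05, 0.1, 0.5, 1.0):
        print(f"{distance_au:4.2f} AU: tilt must stay under {max_tilt_deg(distance_au):.2f} degrees")
```

For a planet at 1 AU the tolerance is roughly a quarter of a degree, which is why a system with tilts of several degrees cannot show all its planets in transit to a single observer.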

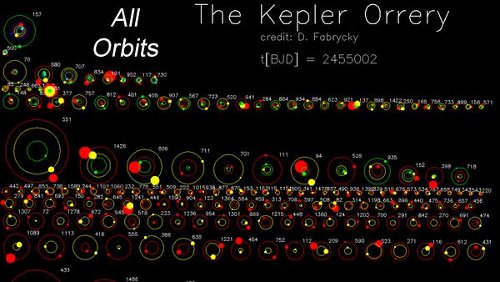

Image: Multiple-planet systems discovered by Kepler as of 2/2/2011; orbits go through the entire mission (3.5 years). Hot colors to cool colors (red to yellow to green to cyan to blue to gray) are big planets to smaller planets, relative to the other planets in the system. Credit: Daniel Fabrycky.

Interestingly, multiple planet systems may give us the help we need to detect small, rocky worlds. While the radial velocity method helps us find larger objects orbiting a star, terrestrial-class worlds are small enough that their radial velocity signal is hard to detect. With multiple planet systems, astronomers will be able to use transit timing variations, measuring how gravitational interactions between the planets cause tiny changes to the time between transits. Latham’s colleague Matthew Holman notes the power of such a signal:

“These planets are pulling and pushing on each other, and we can measure that. Dozens of the systems Kepler found show signs of transit timing variations.”
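One common way to look for transit timing variations is to fit a strictly periodic schedule to the observed mid-transit times and inspect what is left over. The sketch below is not the Kepler team's pipeline. It uses synthetic transit times (a 10-day period with an assumed sinusoidal perturbation of about 14 minutes), and every name and number in it is hypothetical.

```python
import math


def fit_linear_ephemeris(times):
    """Least-squares fit of t_n = t0 + P * n to transit times with n = 0, 1, 2, ..."""
    count = len(times)
    if count < 2:
        raise ValueError("need at least two transit times")
    mean_index = (count - 1) / 2
    mean_time = sum(times) / count
    sxx = sum((i - mean_index) ** 2 for i in range(count))
    sxy = sum((i - mean_index) * (t - mean_time) for i, t in enumerate(times))
    period = sxy / sxx
    t0 = mean_time - period * mean_index
    return t0, period


def timing_residuals(times):
    """Observed minus calculated transit times for the best-fit constant period."""
    t0, period = fit_linear_ephemeris(times)
    return [t - (t0 + period * i) for i, t in enumerate(times)]


# Synthetic data: a planet on a 10-day orbit nudged by an unseen companion.
TRUE_PERIOD_DAYS = 10.0
TTV_AMPLITUDE_DAYS = 0.01   # about 14 minutes, an assumed value
transit_times = [
    TRUE_PERIOD_DAYS * i + TTV_AMPLITUDE_DAYS * math.sin(2 * math.pi * i / 8)
    for i in range(24)
]
residuals = timing_residuals(transit_times)

if __name__ == "__main__":
    for epoch, residual in enumerate(residuals):
        print(f"transit {epoch:2d}: {residual * 24 * 60:+6.1f} minutes")
```

A lone planet would leave residuals consistent with measurement noise. A coherent pattern like the one in this synthetic series is the kind of signal that points to another body in the system.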

Using the transit method, Kepler should be able to identify small planets in wider orbits around their stars, including those that may be in the habitable zone, but transit timing variations may flag the presence of such planets and play a role in the intense follow-up that will produce a confirmation. We have exciting times ahead of us as Kepler continues its mission. Meanwhile, what accounts for the flatness of the planetary orbits in these multiple planet systems? Latham gives a nod to the fact that most of them are dominated by planets smaller than Neptune. Jupiter-class worlds cause system disruptions that can result in tilted orbits for smaller planets.

“Jupiters are the 800-pound gorillas stirring things up during the early history of these systems,” said Latham. “Other studies have found plenty of systems with big planets, but they’re not flat.”

A Catalog of Eccentric Objects

Kepler’s treasure trove includes far more than planets, as an interesting article in Science News points out (thanks to Antonio Tavani for the pointer to this one). After all, the observatory is looking at tens of thousands of stars to produce its planetary finds, and in most cases, planets aren’t lined up in such a way that they can be seen from Earth, if they exist. But Science News quotes Geoff Marcy (UC-Berkeley) on the variety of stars being seen: “There are so many stars that show bizarre, utterly unexplainable brightness variations that I don’t know where to begin.”

Consider the English amateur Kevin Apps, who became curious about a red dwarf in the Kepler field that was not among the 156,000 chosen for full investigation. Apps discovered that he could get light curves for the system from data produced by Kepler’s initial commissioning phase. The light curve showed dips spaced 12.71 days apart, an intriguing find that led him to contact professional astronomers who went to work on the system themselves. The result: The red dwarf turned out to be not a single star but a widely spaced binary of two M-dwarfs, with a massive object in orbit around the larger of the stars, evidently a brown dwarf.

Kepler keeps turning up oddities. A star called KIC 10195926, for example, twice the mass of the Sun, shows ‘torsional modes’ in its rotation — the star’s northern and southern halves spin at different rates, trading off which spins fastest. This is the first time such torsional modes have been seen. The star has now been classed as an Ap star — A-peculiar — with a strong magnetic field. It’s the subject of a paper in Monthly Notices of the Royal Astronomical Society.

The star HD 187091, about a thousand light years from the Earth, is twice as massive as the Sun. Kepler’s light curve showed a 42-day cycle, with the star’s brightness rising to a peak and then quickly subsiding, with numerous secondary brightness variations between the peaks. It turns out this is not a single A-class star, as previously believed, but two stars of nearly the same size in a highly elliptical orbit. Let me quote from Science News on this, drawing on the magazine’s interview with William Welsh (San Diego State University):

The brightening occurs as the stars, tidally warped by their gravity at closest approach into slight egg shapes, roast one another on their facing sides and heat up. And that explains the spike in brightness, the team reported online in February at arXiv.org. The more surprising revelation of Kepler’s data is that one, and perhaps both, pulsate furiously at rates that are precise multiples of their rate of close encounters, in some cases pulsing exactly 90 and 91 times for each orbit. “Nobody had ever seen, or even thought, something like this could happen,” Welsh says. Discovering that a star’s rapid pulsations are not always driven by internal processes, but can be paced by a tidal metronome from a partner star, offers a new window into stellar dynamics and structure.
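The arithmetic behind those multiples is simple to sketch. Taking the rounded 42-day cycle quoted above as the orbital period (an approximation), a pulsation that repeats exactly N times per orbit has a period of 42 days divided by N. The function name is illustrative.

```python
ORBITAL_PERIOD_DAYS = 42.0  # rounded cycle length quoted in the text


def pulsation_period_hours(orbital_period_days: float, pulses_per_orbit: int) -> float:
    """Period in hours of a pulsation locked to an exact multiple of the orbital frequency."""
    if pulses_per_orbit <= 0:
        raise ValueError("pulses_per_orbit must be positive")
    return orbital_period_days * 24.0 / pulses_per_orbit


if __name__ == "__main__":
    for harmonic in (90, 91):
        hours = pulsation_period_hours(ORBITAL_PERIOD_DAYS, harmonic)
        print(f"{harmonic} pulses per orbit: one pulse every {hours:.2f} hours")
```

With these numbers the two pulsations repeat roughly every 11 hours, differing from each other by only a few minutes.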

How many more such surprises will Kepler give us? An extended Kepler mission (and we might be able to get an additional four or five years beyond the 2013 original mission end date) should yield interesting objects galore. The follow-up to Kepler might be the European Space Agency’s Plato (Planetary Transits and Oscillations of Stars), which would, like Kepler, examine star fields for lengthy periods of time, but would also be able to swivel and look at different stellar fields. Perhaps the success of Kepler will give Plato the boost it needs for a liftoff in this decade.

Progress Toward the Dream of Space Drives and Stargates

by James F. Woodward

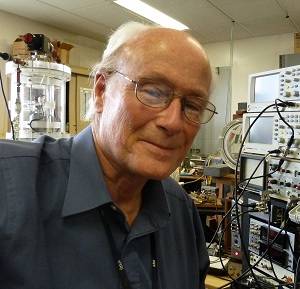

I first wrote about James Woodward’s work in my 2004 book Centauri Dreams: Imagining and Planning Interstellar Exploration, and have often been asked since to comment further on his research. But it’s best to leave that to the man himself, and I’m pleased to turn today’s post over to him. A bit of biography: Jim Woodward earned bachelor’s and master’s degrees in physics at Middlebury College and New York University (respectively) in the 1960s. From his undergraduate days, his chief interest was in gravitation, a field then not very popular. So, for his Ph.D., he changed to the history of science, writing a dissertation on the history of attempts to deal with the problem of “action-at-a-distance” in gravity theory from the 17th to the early 20th centuries (Ph.D., University of Denver, 1972).

On completion of his graduate studies, Jim took a teaching job in the history of science at California State University Fullerton (CSUF), where he has been ever since. Shortly after his arrival at CSUF, he established friendships with colleagues in the Physics Department who helped him set up a small-scale, table-top experimental research program doing offbeat experiments related to gravitation – experiments which continue to this day. In 1980, the faculty of the Physics Department elected Jim to an adjunct professorship in the department in recognition of his ongoing research.

In 1989, the detection of an algebraic error in a calculation done a decade earlier led Jim to realize that an effect he had been exploring proceeded from standard gravity theory (general relativity), as long as one were willing to admit the correctness of something called “Mach’s principle” – the proposition enunciated by Mach and Einstein that the inertial properties of matter should proceed from the gravitational interaction of local bodies with the (chiefly distant) bulk of the matter in the universe. Since that time, Jim’s research efforts have been devoted to exploring “Mach effects”, trying to manipulate them so that practical effects can be produced. He has secured several patents on the methods involved.

Jim retired from teaching in 2005. Shortly thereafter, he was diagnosed with some inconvenient medical problems, problems that have necessitated ongoing care. But, notwithstanding these medical issues, he passes along the good news that he remains “in pretty good health” and continues to be active in his chosen area of research. Herewith a look at the current thinking of this innovative researcher.

Travel to even the nearest stars has long been known to require a propulsion system capable of accelerating a starship to a significant fraction of the speed of light if the trip is to be done in less than a human lifetime. And if such travel is to be seriously practical – that is, you want to get back before all of your stay-behind friends and family have passed on – faster than light transit speeds will be needed. That means “warp drives” are required. Better yet would be technology that would permit the formation of “absurdly benign wormholes” or “stargates”: short-cuts through “hyperspace” with dimensions on the order of at most a few tens of meters that leave the spacetime surrounding them flat. Like the wormholes in the movie and TV series “Stargate” (but not nearly so long and without “event horizons” as traversable wormholes don’t have event horizons). With stargates you can dispense with all of the claptrap attendant to starships and get where you want to go (and back) in literally no time at all. Indeed, you can get back before you left (if Stephen Hawking’s “chronology protection conjecture” is wrong) – but you can’t kill yourself before you leave.

Starships and stargates were the merest science fiction until 1988. In 1988 the issue of rapid spacetime transport became part of serious science when Kip Thorne and some of his graduate students posed the question: What restriction does general relativity theory (GRT) place on the activities of arbitrarily advanced aliens who putatively travel immense distances in essentially no time at all? The question was famously instigated by Carl Sagan’s request that Thorne vet his novel Contact, where travel to and from the center of the galaxy (more than 20,000 light years distant) is accomplished in almost no time at all. Thorne’s answer was wormholes – spacetime distortions that connect arbitrarily distant events through a tunnel-like structure in hyperspace – held open by “exotic” matter. Exotic matter is self-repulsive, and for the aforementioned “absurdly benign” wormholes, this stuff must have negative rest mass. Not only does the rest mass have to be negative; to make a wormhole large enough to be traversable, you need a Jupiter mass (2 × 10²⁷ kg) of the stuff. This is almost exactly one one-thousandth of the mass of the Sun and hundreds of times the mass of the Earth. In your living room, or on your patio. Warp drives, in this connection at least, are no better than wormholes. Miguel Alcubierre, in 1994, wrote out the “metric” for a warp drive; and it too places the same exotic matter requirement on would-be builders.
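The mass comparison is easy to check. The sketch below takes the Jupiter-mass figure from the text and compares it with standard reference values for the Sun and the Earth, which are supplied here as assumptions.

```python
JUPITER_MASS_KG = 2e27       # figure quoted in the text
SUN_MASS_KG = 1.989e30       # standard reference value (assumed here)
EARTH_MASS_KG = 5.972e24     # standard reference value (assumed here)

# Fraction of a solar mass and multiple of an Earth mass.
sun_fraction = JUPITER_MASS_KG / SUN_MASS_KG
earth_multiple = JUPITER_MASS_KG / EARTH_MASS_KG

if __name__ == "__main__":
    print(f"Fraction of the Sun's mass: {sun_fraction:.5f}")
    print(f"Multiple of the Earth's mass: {earth_multiple:.0f}")
```

The ratios come out at about one one-thousandth of a solar mass and a little over 330 Earth masses.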

Long before Thorne and Alcubierre laid out the requirements of GRT for rapid spacetime transport, it was obvious that finding a way to manipulate gravity and inertia was prerequisite to any scheme that hoped to approach, much less vastly surpass, the speed of light. Indeed, in the late 1950s and early 1960s the US Air Force sponsored research in gravitational physics at Wright Field in Ohio. As a purely academic exercise, the Air Force could not have cared less about GRT. Evidently, they hoped that such research might lead to insights that would prove of practical value. It seems that such hopes were not realized.

If you read through the serious scientific literature of the 20th century, until Thorne’s work in the late ’80s at any rate, you will find almost nothing ostensibly relating to rapid spacetime transport. The crackpot literature of this era, however, is replete with all sorts of wild claims and deeply dubious schemes, none of which are accompanied by anything resembling serious science. But the serious (peer reviewed) scientific literature is not devoid of anything of interest.

If you hope to manipulate gravity and inertia to the end of rapid spacetime transport, the “obvious conjecture” is that you need a way to get some purchase on gravity and inertia. Standard physics, embodied in the field equations of Einstein (GRT) and Maxwell (electrodynamics), seems to preclude such a possibility. So that “obvious conjecture” suggests that some “coupling” beyond that contained in the Einstein-Maxwell equations needs to be found. And if we are lucky, such a coupling, when found, will lead to a way to do the desired manipulations. As it turns out, there are at least two instances of such proposed couplings advanced by physicists of impeccable credentials. The first was made by Michael Faraday – arguably the pre-eminent experimental physicist of all time – in the 1840s. He wanted to kill the action-at-a-distance character of Newtonian gravity (that is, its purported instantaneous propagation) by inductively coupling it to electromagnetism (which he had successfully shown to not be an action-at-a-distance interaction by demonstrating the inductive coupling of electricity and magnetism). He did experiments intended to reveal such coupling. He failed.

The second proposal was first made by Arthur Schuster (President of the Royal Society in the 1890s) and later Patrick M.S. Blackett (1948 Nobel laureate for physics). They speculated that planetary and stellar magnetic fields might be generated by the rotational motion of the matter that makes them up. That is, electrically neutral matter in rotation might generate a magnetic field. Maxwell’s electrodynamics, of course, makes no such prediction. There were other proposals. In the 1930s and ’40s Wolfgang Pauli and then Erwin Schrödinger constructed five-dimensional “unified” field theories of gravity and electromagnetism that predicted small coupling effects not present in the Einstein-Maxwell equations. But the Schuster-Blackett conjecture is more promising as the effects there are much larger – large enough for experimental investigation. And George Luchak, a Canadian graduate student (at the time), had written down a set of coupled field equations for Blackett’s proposal.

Some worthwhile experiments can be done with limited means in a short time, but only a fool tries to do serious experiments without having a plausible theory as a guide. Plausible theory does not mean Joe Doak’s unified field theory. It means theory that only deviates from standard physics in explicit, precise ways that are transparent to inspection and evaluation. (The contrapositive, by the way, is also true.) So, armed with Faraday’s conjecture and then the Schuster-Blackett conjecture and Luchak’s field equations, in the late 1960s I set out to investigate whether they might lead to some purchase on gravity and inertia. The better part of 25 years passed doing table-top experiments and poking around in pulsar astrophysics (with its rapidly rotating neutron stars with enormous magnetic fields, pulsars are the ultimate test bed for Blackett’s conjecture) to see whether anything was there. Suggestive, but not convincing, results kept turning up. In the end, nothing could be demonstrated beyond a reasonable doubt – the criterion of merit in this business. However, as this investigation was drawing to a close, about the time that Thorne and others got serious about traversable wormholes, detection of an algebraic error in a calculation led to serious re-examination of Luchak’s formalism for the Blackett effect.

Luchak, when he wrote down his coupled field equations, had been chiefly interested in getting the terms to be added to Maxwell’s electrodynamic equations that would account for Blackett’s conjecture. So, instead of invoking the full formal apparatus of GRT, he wrote down Maxwell’s equations using the usual four dimensions of spacetime, and included a Newtonian approximation for gravity using the variables made available by invoking a fifth dimension. He wanted a relativistically correct formalism, so his gravity field equations included some terms involving time. They were required because the gravity field was assumed to propagate at the speed of light, whereas Newton’s gravity theory has no time-dependent terms because gravity “propagates” instantaneously. You might think all of this not particularly interesting, because it is well-known that special relativity theory (SRT) hasn’t really got anything to do with gravity – notwithstanding that you can write down modified Newtonian gravity field equations that are relativistically correct (technospeak: “Lorentz invariant”).

But this isn’t quite right. Special relativity has inertia implicitly built right into the foundations of the theory. Indeed, SRT is only valid in “inertial” frames of reference.[ref]Inertial reference frames are those in which Newton’s first law is valid for objects that do not experience external forces. They are not an inherent part of spacetime per se unless you adhere to the view that spacetime itself has inertial properties that cause inertial reaction forces. This is not the common view of the content of SRT where inertial reaction forces are attributed to material objects themselves, not the spacetime in which they reside.[/ref] So, consider the most famous equation in all physics (that Einstein published as an afterthought to SRT): E=mc². But write it as Einstein first did: m=E/c². The mass of an object – that is, its inertia – is equal to its total energy divided by the square of the speed of light. [Frank Wilczek has written a very good book about this: The Lightness of Being.] If inertia and gravity are intimately connected, then since inertia is an integral part of SRT, gravity suffuses SRT, notwithstanding that it does not appear explicitly anywhere in the theory.[ref] As a technical note it is worth mentioning that in GRT inertial reaction forces arise from gravity if space is globally flat, as in fact it is measured to be, and global flatness is the distinctive feature of space in SRT. This, however, does not mean that spacetime has inherent inertial properties.[/ref] Are gravity and inertia intimately connected? Einstein thought they were. A well-known part of this connection is the “Equivalence Principle” (that inertial and gravitational forces are the same thing) but there is an even deeper notion needing attention. 
He gave this notion its name: Mach’s principle, for Einstein attributed the idea to Ernst Mach (of Mach number fame).[ref] Another technical note: spacetime figures into the connection between gravity and inertia as the structure of spacetime is determined by the distribution of all gravitating stuff in the universe in the field equations of GRT. So if gravity and inertia are the obverse and reverse of the same coin, the structure of spacetime is automatically encompassed. Spacetime per se only acquires inertial properties if it is ascribed material properties – that is, it gravitates. Interestingly, if “dark energy” is an inherent property of spacetime, it gravitates.[/ref]
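
To put a number on the m=E/c² relation mentioned above, here is a minimal sketch in Python. The function names and the one-kilowatt-hour example are illustrative choices, not anything taken from the literature cited here; the only physics in it is the formula itself.

```python
# Speed of light in vacuum, in metres per second (exact by SI definition).
C = 299_792_458.0


def mass_from_energy(energy_joules: float) -> float:
    """Inertial mass (kg) equivalent to a total energy (J): m = E / c^2."""
    return energy_joules / C**2


def energy_from_mass(mass_kg: float) -> float:
    """Total energy (J) equivalent to an inertial mass (kg): E = m c^2."""
    return mass_kg * C**2


if __name__ == "__main__":
    # Illustrative input (an assumption, chosen only for scale):
    # one kilowatt-hour of energy is 3.6 million joules.
    one_kwh = 3.6e6
    print(f"Mass equivalent of 1 kWh: {mass_from_energy(one_kwh):.3e} kg")
```

The result is roughly 4 × 10⁻¹¹ kg, which gives a sense of why changes in the internal energy of everyday objects produce mass changes far too small to notice without very sensitive experiments.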

What is Mach’s principle? Well, lots of people have given lots of versions of this principle, and protracted debates have taken place about it. Its simplest expression is: Inertial reaction forces are produced by the gravitational action of everything that gravitates in the universe. But back in 1997 Hermann Bondi and Joseph Samuel, answering an argument by Wolfgang Rindler, listed a dozen different formulations of the principle. Generally, they fall into one of two categories: “relationalist” or “physical”. In the relationalist view, the motion of things can only be related to other things, but not to spacetime itself. Nothing is said about the interaction (via fields that produce forces) of matter on other matter. The physical view is different and more robust as it asserts that the principle requires that inertial reaction forces be caused by the action of other matter, which depends on its quantity, distribution, and forces, in particular, gravity, as well as its relative motion. [Brian Greene not long ago wrote a very good book about Mach’s principle called The Fabric of the Cosmos. Alas, he settled for the “relationalist” version of the principle, which turns out to be useless as far as rapid spacetime transport is concerned.]

The simplest “physical” statement of the principle, endorsed by Einstein and some others, says that all inertial reaction forces are produced by the gravitational action of chiefly the distant matter in the universe. Note that this goes a good deal farther than Einstein’s Equivalence Principle which merely states that the inertial and gravitational masses of things are the same (and, as a result, that all objects “fall” with the same acceleration in a gravity field), but says nothing about why this might be the case. Mach’s principle provides the answer to: why?

Guided by Mach’s principle and Luchak’s Newtonian approximation for gravity – and a simple calculation done by Dennis Sciama in his doctoral work for Paul Dirac in the early 1950s – it is possible to show that when extended massive objects are accelerated, if their “internal” energies change during the accelerations, fluctuations in their masses should occur. That’s the purchase on gravity and inertia you need. (Ironically, though these effects are not obviously present in the field equations of GRT or electrodynamics, they do not depend on any novel coupling of those fields. So, no “new physics” is required.) But that alone is not enough. You need two more things. First, you need experimental results that show that this theorizing actually corresponds to reality. And second, you need to show how “Mach effects” can be used to make the Jupiter masses of exotic matter needed for stargates and warp drives. This can only be done with a theory of matter that includes gravity. The Standard Model of serious physics, alas, does not include gravity. A model for matter that includes gravity was constructed in 1960 by three physicists of impeccable credentials. They are Richard Arnowitt (Texas A&M), Stanley Deser (Brandeis), and Charles Misner (U. of Maryland). Their “ADM” model can be adapted to answer the question: Does some hideously large amount of exotic matter lie shrouded in the normal matter we deal with every day? Were the answer to this question “no”, you probably wouldn’t be reading this. Happily, the argument about the nature of matter and the ADM model that bears on the wormhole problem can be followed with little more than high school algebra. And it may be that shrouded in everyday stuff all around us, including us, is the Jupiter mass of exotic matter we want. 
Should it be possible to expose the exotic bare masses of the elementary particles that make up normal matter, then stargates may lie in our future – and if in our future, perhaps our present and past as well.

The physics that deals with the origin of inertia and its relation to gravitation is at least not widely appreciated, and may be incomplete. Therein lie opportunities to seek new propulsion physics. Mach’s principle and Mach effects are an active area of research into such possibilities. Whether these will lead to propulsion breakthroughs cannot be predicted, but we will certainly learn more about unfinished physics questions along the way.

REFERENCES

More technical and extensive discussions of some of the issues mentioned above are available in the peer-reviewed literature and other sources. A select bibliography of some of this material is provided below. Here at Centauri Dreams you will shortly find more recent and less technical treatments available. They will be broken down into three parts. One will deal with the issues surrounding the origin of inertia and the prediction of Mach effects [tentatively titled “Mach’s principle and Mach effects”]. The second will present recent experimental results [tentatively titled “Mach effects: Recent experimental results”]. And the third will be an elaboration of the modifications of the ADM model that suggest exotic matter may be hiding in plain sight all around us [tentatively titled “Stargates, Mach’s principle, and the structure of elementary particles”]. The first two pieces will not involve very much explicit mathematics. The third will have some math, but not much beyond quadratic equations and high school algebra.

- Books:

Greene, Brian, The Fabric of the Cosmos: Space, Time, and the Texture of Reality (Knopf, New York, 2004).

Sagan, Carl, Contact (Simon and Schuster, New York, 1985).

Wilczek, Frank, The Lightness of Being: Mass, Ether, and the Unification of Forces (Basic Books, New York, 2008).

- Articles:

Alcubierre, M., “The warp drive: hyper-fast travel within general relativity,” Class. Quant. Grav. 11 (1994) L73 – L77. The paper where Alcubierre writes out the metric for warp drives.

Arnowitt, R., Deser, S., and Misner, C.W., “Gravitational-Electromagnetic Coupling and the Classical Self-Energy Problem,” Phys. Rev. 120 (1960a) 313 – 320. The first of the ADM papers on general relativistic “electrons”.

Arnowitt, R., Deser, S., and Misner, C.W., “Interior Schwarzschild Solutions and Interpretation of Source Terms,” Phys. Rev. 120 (1960b) 321 – 324. The second of the ADM papers.

Bondi, H. and Samuel, J., “The Lense-Thirring effect and Mach’s principle,” Phys. Lett. A 228 (1997) 121 – 126. One of the best papers on Mach’s principle.

Luchak, George, “A Fundamental Theory of the Magnetism of Massive Rotating Bodies,” Canadian J. Phys. 29 (1953) 470 – 479. The paper with the formalism for the Schuster-Blackett effect.

Morris, M.S. and Thorne, K. S., “Wormholes in spacetime and their use for interstellar travel: A tool for teaching general relativity,” Am. J. Phys. 56 (1988) 395 – 412. The paper where Kip Thorne and his then grad student Michael Morris spelled out the restrictions set by general relativity for interstellar travel. Their “absurdly benign wormhole” solution is found in the appendix on page 410.

Sciama, D. “On the Origin of Inertia,” Monthly Notices of the Royal Astronomical Society 113 (1953) 34 – 42. The paper where Sciama shows that a vector theory of gravity (that turns out to be an approximation to general relativity) can account for inertial reaction forces when certain conditions are met.

Woodward, J.F., “Making the Universe Safe for Historians: Time Travel and the Laws of Physics,” Found. Phys. Lett. 8 (1995) 1 – 39. The first paper where essentially all of the physics of Mach effects and their application to wormhole physics is laid out.

- Other sources for Mach effects and related issues

“Flux Capacitors and the Origin of Inertia,” Foundations of Physics 34, 1475 – 1514 (2004). [Appendices give a line-by-line elaboration of the derivation of Mach effects, and a careful evaluation of how Newton’s second law applies to systems in which Mach effects are present.]

“The Technical End of Mach’s Principle,” in: eds. M. Sachs and A.R. Roy, Mach’s Principle and the Origin of Inertia (Apeiron, Montreal, 2003), pp. 19 – 36. [Contributed paper for a commemorative volume for the 50th anniversary of the founding of the Kharagpur campus of the Indian Institute of Technology. It is the only published paper where the wormhole term in Mach effects was sought.]

“Are the Past and Future Really Out There,” Annales de la Fondation Louis de Broglie 28, 549 – 568 (2003). [Contributed paper for a commemorative issue honoring the 60th anniversary of the completion of Olivier Costa de Beauregard’s doctoral work with Prince Louis de Broglie. The instantaneity of inertial reaction forces, combined with the lightspeed restriction on signal propagation of SRT, suggests that the Wheeler-Feynman “action-at-a-distance” picture of long range interactions is correct. This picture suggests that the past and future have some meaningful objective physical existence. This is explored in this paper, for Olivier Costa de Beauregard was one of the early proposers of the appropriateness of the action-at-a-distance picture in quantum phenomena.]

- Presentations

Presentations at STAIF and SPESIF (most with accompanying papers in the conference proceedings) yearly since 2000.

Presentation at the Society for Scientific Exploration meeting in June, 2010, now available in video format on the SSE website.

Presentation: Why Science Fiction has little to fear from Science, at the 75th Birthday Symposium for John Cramer, University of Washington, September 2009.

- Radio Interviews

The Space Show [3/20/2007]

The Space Show [3/3/2009]

Support for Dark Energy

The far future may be a lonely place, at least in extragalactic terms. Scientists studying gravity’s interactions with so-called dark energy — thought to be the cause of the universe’s accelerating expansion — can work out a scenario in which gravity dominated in the early universe. But somewhere around eight billion years after the Big Bang, the continuing expansion and consequent dilution of matter caused gravity to fall behind dark energy in its effects. We’re left with what we see today, a universe whose expansion will one day spread galaxies so far apart that any civilizations living in them won’t be able to see any other galaxies.
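
The handover from gravity to dark energy can be roughed out numerically. What follows is only a sketch, assuming a flat universe containing just matter and a cosmological constant. The density parameters (0.3 and 0.7) and the Hubble constant (70 km/s/Mpc) are round illustrative values I have picked, not numbers from the papers discussed here. Matter dilutes as the cube of the scale factor while a cosmological constant does not, so acceleration begins once the matter density drops below twice the dark energy density.

```python
import math

# Assumed round-number parameters for a flat matter + cosmological-constant
# universe. These are illustrative, not values from the survey papers.
OMEGA_M = 0.3   # matter density today, as a fraction of critical density
OMEGA_L = 0.7   # dark energy (cosmological constant) fraction today
H0 = 70.0       # Hubble constant in km/s/Mpc

KM_PER_MPC = 3.0857e19
SECONDS_PER_GYR = 3.156e16
HUBBLE_TIME_GYR = KM_PER_MPC / H0 / SECONDS_PER_GYR  # 1/H0 in billions of years


def age_at_scale_factor(a: float) -> float:
    """Cosmic age in Gyr when the scale factor was a (a = 1 today).

    Uses the closed-form solution for a flat universe with only matter
    and a cosmological constant.
    """
    x = math.sqrt(OMEGA_L / OMEGA_M) * a**1.5
    return 2.0 / (3.0 * math.sqrt(OMEGA_L)) * HUBBLE_TIME_GYR * math.asinh(x)


# Expansion starts accelerating when matter density = 2 x dark energy density.
a_accel = (OMEGA_M / (2.0 * OMEGA_L)) ** (1.0 / 3.0)
# The two densities are equal somewhat later.
a_equal = (OMEGA_M / OMEGA_L) ** (1.0 / 3.0)

if __name__ == "__main__":
    print(f"Acceleration begins at about {age_at_scale_factor(a_accel):.1f} Gyr")
    print(f"Densities are equal at about {age_at_scale_factor(a_equal):.1f} Gyr")
    print(f"Age today in this toy model: {age_at_scale_factor(1.0):.1f} Gyr")
```

With these assumed inputs the two transition times bracket the "around eight billion years" figure quoted above; different parameter choices will shift them somewhat.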

The initial dark energy findings, released in 1998, were based on Type Ia supernovae, using these as ‘standard candles’ which allowed us to calculate their distance from Earth. Now we have new data from both the Galaxy Evolution Explorer satellite (drawing on a three-dimensional map of galaxies in the distant universe containing hundreds of millions of galaxies) and the Anglo-Australian Telescope (Siding Spring Mountain, Australia). Using this information, scientists are studying the pattern of distance between individual galaxies. Here we have not a ‘standard candle’ but a ‘standard ruler,’ based on the tendency of pairs of galaxies to be separated by roughly 490 million light years.

A standard ruler is an astronomical object whose size is known to an approximate degree, one that can be used to determine its distance from the Earth by measuring its apparent size in the sky. The new dark energy investigations used a standard ruler based on galactic separation. Scientists believe that acoustic pressure waves ‘frozen’ in place approximately 370,000 years after the Big Bang (the result of electrons and protons combining to form neutral hydrogen) define the separation of galaxies we see. The pressure waves, known as baryon acoustic oscillations, left their imprint in the patterns of galaxies, accounting for the separation of galactic pairs. This provides a standard ruler that can be used to measure the distance of galaxy pairs from the Earth — closer galaxies appear farther apart from each other in the sky.
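
The geometry behind a standard ruler is simple enough to sketch in a few lines. This is a deliberately simplified illustration: it uses the plain small-angle relation (angle ≈ size / distance) in ordinary static space and ignores cosmic expansion, which real analyses have to account for. The function names and the sample distances are hypothetical; only the 490 million light year separation comes from the text.

```python
import math

# Preferred separation of galaxy pairs, in millions of light years (from the text).
RULER_MLY = 490.0


def apparent_angle_deg(distance_mly: float, ruler_mly: float = RULER_MLY) -> float:
    """Angle (degrees) the ruler spans on the sky at a given distance.

    Small-angle approximation in static, flat space (an assumption made
    for illustration; it ignores the expansion of the universe).
    """
    return math.degrees(ruler_mly / distance_mly)


def distance_from_angle_mly(angle_deg: float, ruler_mly: float = RULER_MLY) -> float:
    """Invert the relation: distance (millions of light years) from the measured angle."""
    return ruler_mly / math.radians(angle_deg)


if __name__ == "__main__":
    # Hypothetical sample distances, chosen only to show the trend.
    for d in (3000.0, 5000.0, 7000.0):
        print(f"{d:6.0f} Mly away -> pair separation spans {apparent_angle_deg(d):.2f} degrees")
```

The trend is the point: the same 490 million light year separation spans a larger angle for nearby pairs and a smaller one for distant pairs, so a measured angle can be turned back into a distance.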

We’re looking, then, at patterns of distance between galaxies, using bright young galaxies of the kind most useful in such work. Galaxy Evolution Explorer identified the galaxies to be studied, while the Anglo-Australian Telescope was used to study the pattern of distance between them. Folding distance data into information about the speeds at which galaxy pairs are receding confirms what the supernovae studies have been telling us, that the universe’s expansion is accelerating. GALEX’s ultraviolet map also shows how galactic clusters draw in new galaxies through gravity while experiencing the counterweight of dark energy, which acts to tug the clusters apart, slowing the process.

Chris Blake (Swinburne University of Technology, Melbourne), lead author of recent papers on this work, says that theories that gravity is repulsive when acting at great distances (an alternative to dark energy) fail in light of the new data:

“The action of dark energy is as if you threw a ball up in the air, and it kept speeding upward into the sky faster and faster. The results tell us that dark energy is a cosmological constant, as Einstein proposed. If gravity were the culprit, then we wouldn’t be seeing these constant effects of dark energy throughout time.”

Image: This diagram illustrates two ways to measure how fast the universe is expanding. In the past, distant supernovae, or exploded stars, have been used as “standard candles” to measure distances in the universe, and to determine that its expansion is actually speeding up. The supernovae glow with the same intrinsic brightness, so by measuring how bright they appear on the sky, astronomers can tell how far away they are. This is similar to a standard candle appearing fainter at greater distances (left-hand illustration). In the new survey, the distances to galaxies were measured using a “standard ruler” (right-hand illustration). This method is based on the preference for pairs of galaxies to be separated by a distance of 490 million light-years today. The separation appears to get smaller as the galaxies move farther away, just like a ruler of fixed length (right-hand illustration). Credit: NASA/JPL-Caltech.

Dark energy is still a huge unknown, but Jon Morse, astrophysics division director at NASA Headquarters in Washington, thinks the new work provides useful confirmation:

“Observations by astronomers over the last 15 years have produced one of the most startling discoveries in physical science; the expansion of the universe, triggered by the Big Bang, is speeding up. Using entirely independent methods, data from the Galaxy Evolution Explorer have helped increase our confidence in the existence of dark energy.”

For more, see Blake et al., “The WiggleZ Dark Energy Survey: testing the cosmological model with baryon acoustic oscillations at z=0.6,” accepted for publication in Monthly Notices of the Royal Astronomical Society (preprint) and Blake et al., “The WiggleZ Dark Energy Survey: the growth rate of cosmic structure since redshift z=0.9,” also accepted at MNRAS (preprint).