Shortly before publishing my article on David Kipping’s TARS concept (Torqued Accelerator using Radiation from the Sun), I received an email from Centauri Dreams associate editor Alex Tolley. Alex had come across TARS and offered his thoughts on how to improve the concept for greater efficiency. The publication of my original piece has launched a number of comments that have also probed some of these areas, so I want to go ahead and present Alex’s original post, which was written before my essay got into print. All told, I’m pleased to see the continuing contribution of the community at taking an idea apart and pondering alternative solutions. It’s the kind of thing that gives me confidence that the interstellar effort is robust and continuing. by Alex Tolley Dr. Kipping’s TARS proposed system for accelerating probes to high velocity is both simple and elegant. With no moving parts other than any tether deployment and probe release, if it works, there is little that can fail during...

3I/ATLAS: Observing and Modeling an Interstellar Newcomer

Let’s run through what we know about 3I/ATLAS, now accepted as the third interstellar object to be identified moving through the Solar System. It seems obvious not only that our increasingly powerful telescopes will continue to find these interlopers, but that they are out there in vast numbers. A calculation in 2018 by Aaron Do, Michael Tucker and John Tonry (citation below) offers a number high enough to make these the most common macroscopic objects in the galaxy. But that may well depend on how they originate, a question of lively interest and one that continues to produce papers. Let me draw on a just-released preprint from Matthew Hopkins (University of Oxford) and colleagues that runs through the formation options. Pointing out that interstellar object (ISO) studies represent an entirely new field, they note that theoretical thinking about such things trended toward comets as the main source, an idea immediately confronted by ‘Oumuamua, which appeared inert even as it drew...

New Explanations for the Enigmatic Wow! Signal

The Wow! signal, a one-off detection at the Ohio State ‘Big Ear’ observatory in 1977, continues to perplex those scientists who refuse to stop investigating it. If the signal were terrestrial in origin, we have to explain how it appeared at 1.42 GHz, while the band from 1.4 to 1.427 GHz is protected internationally – no emissions allowed. Aircraft can be ruled out because they would not remain static in the sky; moreover, the Ohio State observatory had excellent RFI rejection. Jim Benford today discusses an idea he put forward several years ago, that the Wow! signal could have originated in power beaming, which would necessarily sweep past us as it moved across the sky and never reappear. And a new candidate has emerged, as Jim explains, involving an entirely natural process. Are we ever going to be able to figure this signal out? Read on for the possibilities. A familiar figure in these pages, Jim is a highly regarded plasma physicist and CEO of Microwave Sciences, as well as being...

A Necessary Break

It's time to write a post I've been dreading to write for several years now. Some of my readers already know that my wife has been ill with Alzheimer's for eleven years, and I've kept her at home and have been her caregiver all the way. We are now in the final stages, it appears, and her story is about to end. I will need to give her all my caring and attention through this process, as I'm sure you'll understand. And while I have no intention of shutting down Centauri Dreams, I do have to pause now to devote everything I have to her. Please bear with me and with a bit of time and healing, I will be active once again.

Do You Really Want to Live Forever?

Supposing you wanted to live forever and found yourself in 2024, would you sign up for something like Alcor, a company that offers a cryogenic way to preserve your body until whatever ails it can be fixed, presumably in the far future? Something over 200 people have made this choice with Alcor, and another 200 at the Cryonics Institute, whose website says “life extension within reach.” A body frozen at −196 °C using ‘cryoprotectants’ can, so the thinking goes, survive lengthy periods without undergoing destructive ice damage, with life restored when science masters the revival process. It’s not a choice I would make, although the idea of waking up refreshed and once again healthy in a few thousand years is a great plot device for science fiction. It has led to one farcical public event, in the form of Nederland, Colorado’s annual Frozen Dead Guy Days festival. The town found itself with a resident frozen man named Bredo Morstøl, brought there by his grandson Trygve Bauge in 1993 and...

Go Clipper

Is this not a beautiful sight? Europa Clipper sits atop a Falcon Heavy awaiting liftoff at launch complex 39A at Kennedy Space Center. Launch is set for 1206 EDT (1606 UTC) October 14. Clipper is the largest spacecraft NASA has ever built for a planetary mission, 30.5 meters tip to tip when its solar arrays are extended. Orbital operations at Jupiter are to begin in April of 2030, with the first of 49 Europa flybys occurring the following year. The closest flyby will take the spacecraft to within 25 kilometers of the surface. Go Europa Clipper! Photo Credit: NASA. In less than 24 hours, NASA's @EuropaClipper spacecraft is slated to launch from @NASAKennedy in Florida aboard a @SpaceX Falcon Heavy rocket. Tune in at 2pm PT / 5pm ET as experts discuss the prelaunch status of the mission. https://t.co/Nq36BeKieX (NASA JPL, @NASAJPL, October 13, 2024)

The Physics of Starship Catastrophe

Now that gravitational wave astronomy is a viable means of investigating the cosmos, we’re capable of studying extreme events like the merger of black holes and even neutron stars. Anything that generates ripples in spacetime large enough to spot is fair game, and that would include supernova events and individual neutron stars with surface irregularities. If we really want to push the envelope, we could conceivably detect the proposed defects in spacetime called cosmic strings, which may or may not have been formed in the early universe. The latter is an intriguing thought, a conceivably observable one-dimensional relic of phase transitions from the beginning of the cosmos that would be on the order of the Planck length (about 10⁻³⁵ meters) in width but lengthy enough to encompass light years. Oscillations in these strings, if indeed they exist, would theoretically generate gravitational waves that could be involved in the large-scale structure of the universe. Because new physics...

ACS3: Refining Sail Deployment

Rocket Lab, a launch service provider based in Long Beach, CA, launched a rideshare payload on April 23 from its launch complex in New Zealand. I’ve been tracking that launch because aboard the Electron rocket was an experimental solar sail that NASA is developing to study boom deployment. This is important stuff, because the lightweight materials we need to maximize payload and performance are evolving, and so are boom deployment methods. Hence the Advanced Composite Solar Sail System (ACS3), created to test composites and demonstrate new deployment methods. The thing about sails is that they are extremely scalable. In fact, it’s remarkable how many different sizes and shapes of sails we’ve discussed in these pages, ranging from Jordin Kare’s ‘nanosails’ to the small sails envisioned by Breakthrough Starshot that are just a couple of meters to the side, and on up to the behemoth imaginings of Robert Forward, designed to take a massive starship with human crew to Barnard’s Star and...

Data Return from Proxima Centauri b

The challenges involved in sending gram-class probes to Proxima Centauri could not be more stark. They’re implicit in Kevin Parkin’s analysis of the Breakthrough Starshot system model, which ran in Acta Astronautica in 2018 (citation below). The project settled on twenty percent of the speed of light as a goal, one that would reach Proxima Centauri b well within the lifetime of researchers working on the project. The probe mass is 3.6 grams, with a 200 nanometer-thick sail some 4.1 meters in diameter. The paper we’ve been looking at from Marshall Eubanks (along with a number of familiar names from the Initiative for Interstellar Studies including Andreas Hein, his colleague Adam Hibberd, and Robert Kennedy) accepts the notion that these probes should be sent in great numbers, and not only to exploit the benefits of redundancy to manage losses along the way. A “swarm” approach in this case means a string of probes launched one after the other, using the proposed laser array in the...

Atmospheric Types and the Results from K2-18b

The exoplanet K2-18b has been all over the news lately, with provocative headlines suggesting a life detection because of the possible presence of dimethyl sulfide (DMS), a molecule produced by life on our own planet. Is this a 'Hycean' world, covered with oceans under a hydrogen-rich atmosphere? Almost nine times as massive as Earth, K2-18b is certainly noteworthy, but just how likely are these speculations? Centauri Dreams regular Dave Moore has some thoughts on the matter, and as he has done before in deeply researched articles here, he now zeroes in on the evidence and the limitations of the analysis. This is one exoplanet that turns out to be provocative in a number of ways, some of which will move the search for life forward. by Dave Moore 124 light years away in the constellation of Leo lies an undistinguished M3V red dwarf, K2-18. Two planets are known to orbit this star: K2-18c, a 5.6 Earth mass planet orbiting 6 million miles out, and K2-18b, an 8.6 Earth mass planet...

What We’re Learning about TRAPPIST-1

It’s no surprise that the James Webb Space Telescope’s General Observers program should target TRAPPIST-1 with eight different efforts slated for Webb’s first year of scientific observations. Where else do we find a planetary system that is not only laden with seven planets, but also with orbits so aligned with the system’s ecliptic? Indeed, TRAPPIST-1’s worlds comprise the flattest planetary arrangement we know about, with orbital inclinations throughout less than 0.1 degrees. This is a system made for transits. Four of these worlds may allow temperatures that could support liquid water, should it exist in so exotic a locale. Image: This diagram compares the orbits of the planets around the faint red star TRAPPIST-1 with the Galilean moons of Jupiter and the inner Solar System. All the planets found around TRAPPIST-1 orbit much closer to their star than Mercury is to the Sun, but as their star is far fainter, they are exposed to similar levels of irradiation as Venus, Earth and Mars...

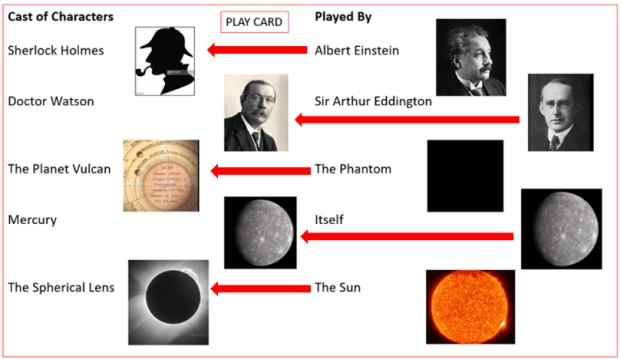

Part II: Sherlock Holmes and the Case of the Spherical Lens: Reflections on a Gravity Lens Telescope

Aerospace engineer Wes Kelly continues his investigations into gravitational lensing with a deep dive into what it will take to use the phenomenon to construct a close-up image of an exoplanet. For continuity, he leads off with the last few paragraphs of Part I, which then segue into the practicalities of flying a mission like JPL's Solar Gravitational Lens concept, and the difficulties of extracting a workable image from the maze of lensed photons. The bending of light in a gravitational field may offer our best chance to see surface features like continents and seasonal change on a world around another star. The question to be resolved: Just how does General Relativity make this possible? by Wes Kelly Conclusion of Part I At this point, having one’s hands on an all-around deflection angle for light at the edges of a “spherical lens” of about 700,000 kilometers radius (or b equal to the radius of the sun rS), if it were an objective lens of a corresponding telescope, what would be...

Ring of Life? Terminator Habitability around M-dwarfs

It would come as no surprise to readers of science fiction that the so-called ‘terminator’ region on certain kinds of planets might be a place where the conditions for life can emerge. I’m talking about planets that experience tidal lock to their star, as habitable zone worlds around some categories of M-dwarfs most likely do. But I can also go way back to science fiction read in my childhood to recall a story set, for example, on Mercury, then supposed to be locked to the Sun in its rotation, depicting humans setting up bases on the terminator zone between broiling dayside and frigid night. Addendum: Can you name the science fiction story I’m talking about here? Because I can’t recall it, though I suspect the setting on Mercury was in one of the Winston series of juvenile novels I was absorbing in that era as a wide-eyed kid. The subject of tidal lock is an especially interesting one because we have candidates for habitable planets around stars as close as Proxima Centauri, if...

Interstellar Research Group: 8th Interstellar Symposium Second Call for Papers

Abstract Submission Final Deadline: April 21, 2023. The Interstellar Research Group (IRG) in partnership with the International Academy of Astronautics (IAA) hereby invites participation in its 8th Interstellar Symposium, hosted by McGill University, to be held from Monday, July 10 through Thursday, July 13, 2023, in Montreal, Quebec, Canada. This is the first IRG meeting outside of the United States, and we are excited to partner with such a distinguished institution! Topics of Interest. Physics and Engineering: Propulsion, power, communications, navigation, materials, systems design, extraterrestrial resource utilization, breakthrough physics; Astronomy: Exoplanet discovery and characterization, habitability, solar gravitational focus as a means to image exoplanets; Human Factors: Life support, habitat architecture, worldships, population genetics, psychology, hibernation, finance; Ethics: Sociology, law, governance, astroarchaeology, trade, cultural evolution; Astrobiology: Technosignature...

Chasing nomadic worlds: Opening up the space between the stars

Ongoing projects like JHU/APL’s Interstellar Probe pose the question of just how we define an ‘interstellar’ journey. Does reaching the local interstellar medium outside the heliosphere qualify? JPL thinks so, which is why when you check on the latest news from the Voyagers, you see references to the Voyager Interstellar Mission. Andreas Hein and team, however, think there is a lot more to be said about targets between here and the nearest star. With the assistance of colleagues Manasvi Lingam and Marshall Eubanks, Andreas lays out targets as exotic as ‘rogue planets’ and brown dwarfs and ponders the implications for mission design. The author is Executive Director and Director Technical Programs of the UK-based not-for-profit Initiative for Interstellar Studies (i4is), where he is coordinating and contributing to research on diverse topics such as missions to interstellar objects, laser sail probes, self-replicating spacecraft, and world ships. He is also an associate professor of...

Super Earths/Hycean Worlds

Dave Moore is a Centauri Dreams regular who has long pursued an interest in the observation and exploration of deep space. He was born and raised in New Zealand, spent time in Australia, and now runs a small business in Klamath Falls, Oregon. He counts Arthur C. Clarke as a childhood hero, and science fiction as an impetus for his acquiring a degree in biology and chemistry. Dave has kept up an active interest in SETI (see If Loud Aliens Explain Human Earliness, Quiet Aliens Are Also Rare) as well as the exoplanet hunt, and today examines an unusual class of planets that is just now emerging as an active field of study. by Dave Moore Let me draw your attention to a paper with interesting implications for exoplanet habitability. The paper is “Potential long-term habitable conditions on planets with primordial H–He atmospheres,” by Marit Mol Lous, Ravit Helled and Christoph Mordasini. Published in Nature Astronomy, this paper is a follow-on to Madhusudhan et al’s paper on Hycean...

HD 137496 b: A Rare ‘Hot Mercury’

We haven't had many examples of so-called 'hot Mercury' planets to work with, or in this case, what might be termed a 'hot super-Mercury' because of its size. For HD 137496 b actually fits the 'super-Earth' category, at roughly 30 percent larger in radius than the Earth. What makes it stand out, of course, is the fact that as a 'Mercury,' it is primarily made up of iron, with its core carrying over 70 percent of the planet's mass. It's also a scorched world, with an orbital radius of 0.027 AU and a period of 1.6 days. Another planet, non-transiting, turns up at HD 137496 as well. It's a 'cold Jupiter' with a minimum mass calculated at 7.66 Jupiter masses, an eccentric orbit of 480 days, and an orbital distance of 1.21 AU from the host star. HD 137496 c is thus representative of the Jupiter-class worlds we'll be finding more of as our detection methods are fine-tuned for planets on longer, slower orbits than the 'hot Jupiters' that were so useful in the early days of radial velocity...

Exomoons: The Binary Star Factor

Centauri Dreams readers will remember Billy Quarles’ name in connection with a 2019 paper on Alpha Centauri A and B, which examined not just those stars but binary systems in general in terms of obliquity -- axial tilt -- on potential planets as affected by the gravitational effects of their systems. The news for habitability around Centauri B wasn’t good. Whereas the Moon helps to stabilize Earth’s axial tilt, the opposite occurs on a simulated Centauri B planet. And without a large moon, gravitational forcing from the secondary star still causes extreme obliquity variations. Orbital precession induced by the companion star is the problem, and it may be that Centauri A and B are simply too close together, whereas more widely separated binaries are less disruptive. I’ll send you to the paper for more (citation below), but you can get an overview with Axial Tilt, Habitability, and Centauri B. It’s exciting to think that our ongoing investigations of Centauri A and B will, one of these...

L 98-59 b: A Rocky World with Half the Mass of Venus

ESPRESSO comes through. The spectrograph, mounted on the European Southern Observatory's Very Large Telescope, has produced data allowing astronomers to calculate the mass of the lightest exoplanet ever measured using radial velocity techniques. The star is L 98-59, an M-dwarf about a third of the mass of the Sun some 35 light years away in the southern constellation Volans. It was already known to host three planets in tight orbits of 2.25 days, 3.7 days and 7.5 days. The innermost world, L 98-59 b, has now been determined to have roughly half the mass of Venus. What extraordinary precision from ESPRESSO (Echelle SPectrograph for Rocky Exoplanets and Stable Spectroscopic Observations). The three previously known L 98-59 planets were discovered in data from TESS, the Transiting Exoplanet Survey Satellite, which spots dips in the lightcurve from a star when a planet crosses its face. Adding ESPRESSO's data, and incorporating previous data from HARPS, has allowed Olivier Demangeon...

Notes on the Magnetic Ramjet II

Building a Bussard ramjet isn't easy, but the idea has a life of its own and continues to be discussed in the technical literature, in addition to its long history in science fiction. Peter Schattschneider, who explored the concept in Crafting the Bussard Ramjet last February, has just published an SF novel of his own called The EXODUS Incident (Springer, 2021), where the Bussard concept plays a key role. But given the huge technical problems of such a craft, can one ever be engineered? In this second part of his analysis, Dr. Schattschneider digs into the question of hydrogen harvesting and the magnetic fields the ramjet would demand. The little known work of John Ford Fishback offers a unique approach, one that the author has recently explored with Centauri Dreams regular A. A. Jackson in a paper for Acta Astronautica. The essay below explains Fishback's ideas and the options they offer in the analysis of this extraordinary propulsion concept. The author is professor emeritus in...